Epipolar Geometry Improves Video Generation Models (2510.21615v1)

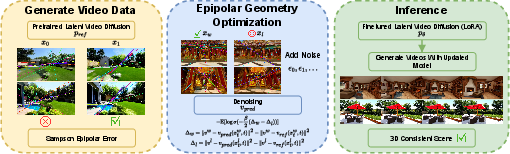

Abstract: Video generation models have progressed tremendously through large latent diffusion transformers trained with rectified flow techniques. Yet these models still struggle with geometric inconsistencies, unstable motion, and visual artifacts that break the illusion of realistic 3D scenes. 3D-consistent video generation could significantly impact numerous downstream applications in generation and reconstruction tasks. We explore how epipolar geometry constraints improve modern video diffusion models. Despite massive training data, these models fail to capture fundamental geometric principles underlying visual content. We align diffusion models using pairwise epipolar geometry constraints via preference-based optimization, directly addressing unstable camera trajectories and geometric artifacts through mathematically principled geometric enforcement. Our approach efficiently enforces geometric principles without requiring end-to-end differentiability. Evaluation demonstrates that classical geometric constraints provide more stable optimization signals than modern learned metrics, which produce noisy targets that compromise alignment quality. Training on static scenes with dynamic cameras ensures high-quality measurements while the model generalizes effectively to diverse dynamic content. By bridging data-driven deep learning with classical geometric computer vision, we present a practical method for generating spatially consistent videos without compromising visual quality.

Sponsor

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Explain it Like I'm 14

What is this paper about?

This paper is about making AI-made videos look more like real 3D scenes. Many modern video generators are great at colors and textures, but they often mess up geometry—things wobble, stretch, or move in ways that don’t make sense in 3D. The authors show that using a classic math rule from camera geometry, called epipolar geometry, can teach these models to keep the 3D structure stable, reduce visual glitches, and make motion feel more natural.

What questions were the researchers trying to answer?

They focused on three simple questions:

- Can we use basic geometry rules (epipolar geometry) to judge whether a video looks 3D-consistent?

- Can we then use those rules to teach a video generator to prefer better, more stable results?

- Will this training improve videos in general—without making them boring or hurting visual quality?

How did they do it?

The key idea: Use camera rules to check 3D consistency

Imagine taking two photos of the same scene from slightly different positions—like moving your phone a bit to the side and snapping another picture. Each point (say, the tip of a street lamp) should appear in both photos in a predictable way. Epipolar geometry is a set of rules that say where that point should show up in the second photo, given where it was in the first. If those rules are broken, the scene doesn’t feel like a solid 3D world.

- The model generates several videos from the same prompt.

- For pairs of frames (like frame 1 and frame 5), the system finds matching “landmarks” using feature matching (think: spot the same window corners in both frames).

- It estimates the relationship between the two camera views and measures how well the landmarks obey epipolar geometry with a score called the Sampson error. Lower scores mean better 3D consistency.

Teaching by preference instead of strict rules

Rather than using a complex, direct loss (which is hard because these geometry checks aren’t smooth or easily “differentiable”), they use Direct Preference Optimization (DPO). In everyday terms:

- Generate multiple videos for the same prompt.

- Rank them: “This one follows geometry better than that one.”

- Train the model to prefer the better one over the worse one.

This way, the model learns what “good geometry” looks like without needing a perfect numeric reward or fancy differentiable math.

Train on static scenes with moving cameras

If lots of things are moving (like cars or people), it’s much harder to measure geometry correctly. So they train using scenes where the camera moves but the world is static (buildings, landscapes). That makes the measurements clean and reliable. After training, the model still generalizes well to videos with moving objects.

Prevent “cheating” by freezing motion

A model could improve geometry by barely moving the camera or making everything too static. To avoid that, they add a small penalty that discourages the model from making videos with no motion. This keeps motion alive while improving stability.

Practical setup

They fine-tuned a strong open-source video generator (Wan-2.1) using a lightweight adapter (LoRA), so they didn’t have to retrain everything from scratch. They also built a large dataset of generated videos with geometry-based scores, so others can use it too.

What did they find, and why is it important?

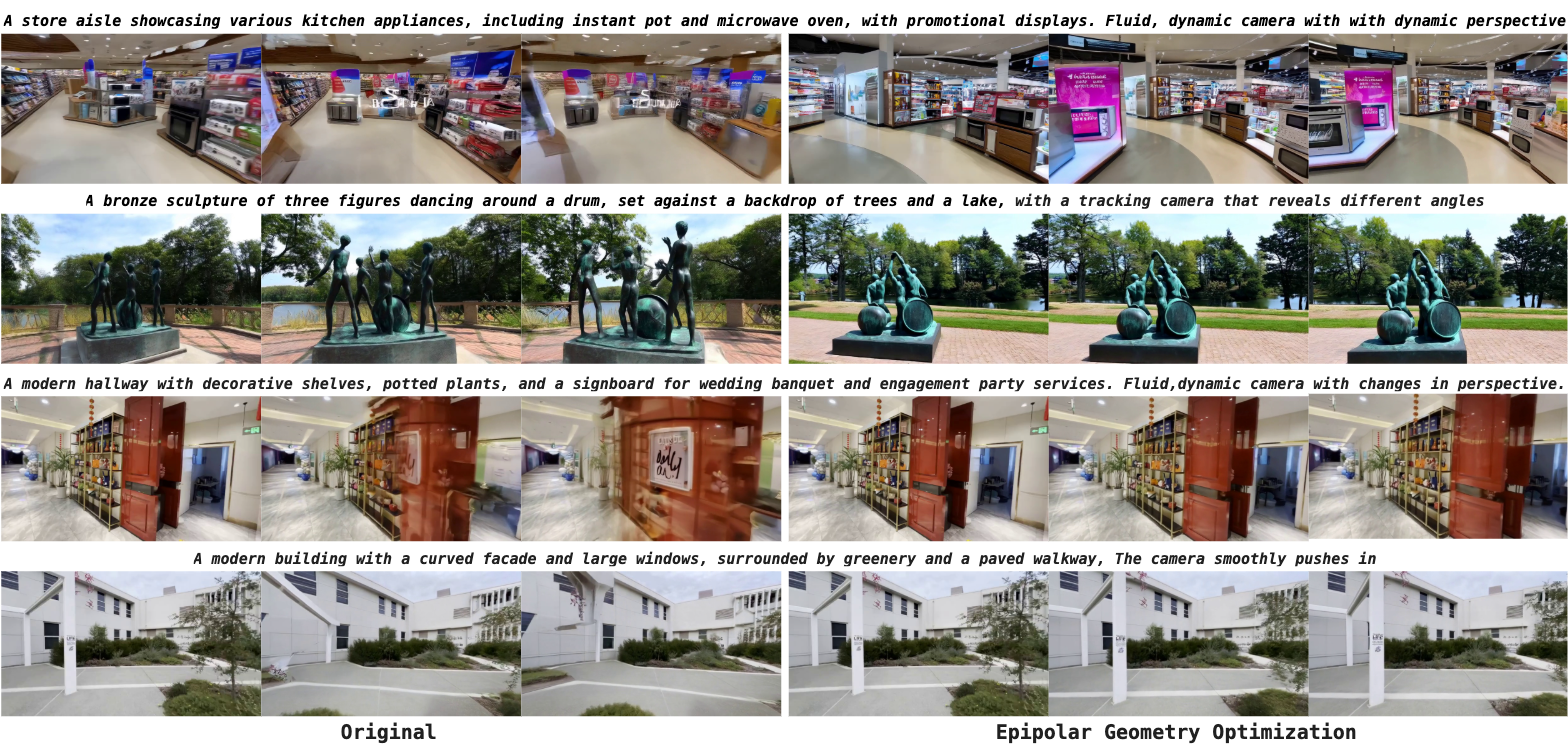

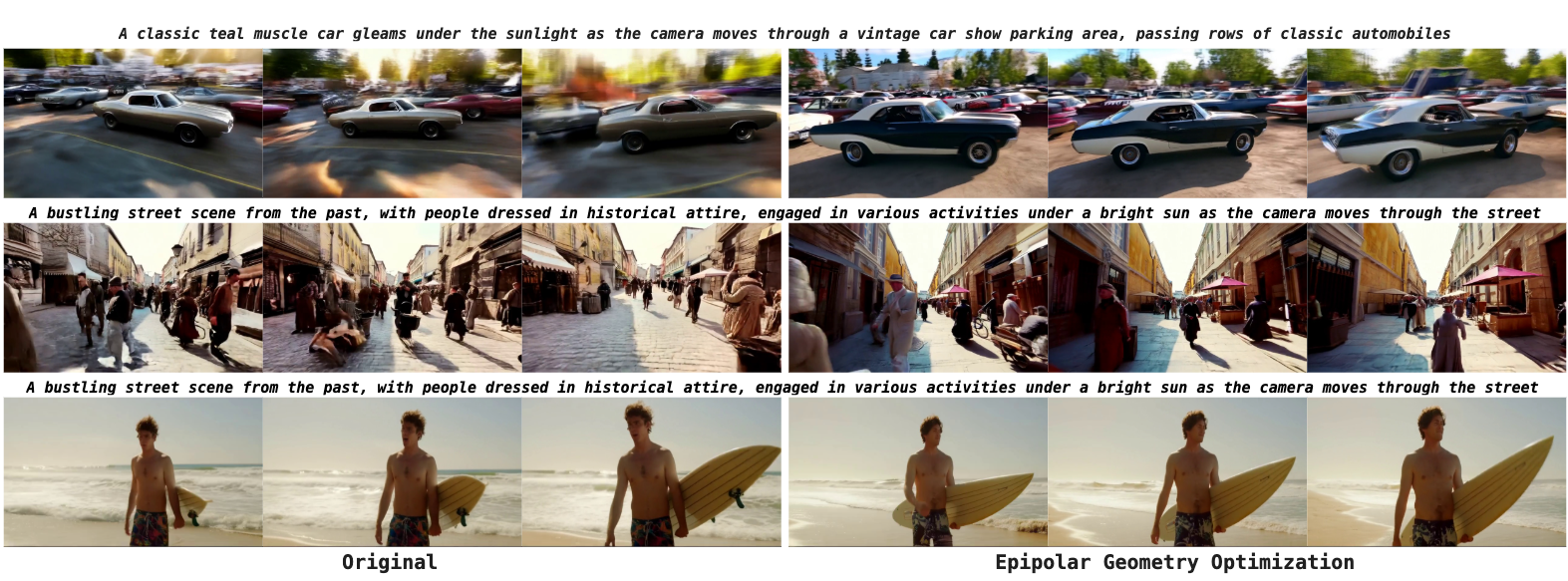

Here’s what improved after training with epipolar geometry:

- More 3D-consistent videos: Fewer perspective errors and less “wobble,” so scenes feel solid and real.

- Smoother motion: Less jitter and flicker, with camera movement that feels natural.

- Fewer visual artifacts: Cleaner frames with reduced glitches.

- Better human preference: When people compared videos, they often preferred the geometry-aligned ones.

- Better for 3D tasks: Videos worked better for building 3D reconstructions, showing that the improvements weren’t just cosmetic—they actually helped downstream applications.

These results matter because they show that simple, classic geometry rules can give cleaner, more reliable signals than modern “learned” quality metrics (which can be noisy or biased). In short: old-school math helps keep new-school AI grounded in the real world.

What’s the impact?

This approach can boost many areas that need stable 3D structure:

- Animation and filmmaking: More believable camera motion and scenes.

- Virtual worlds and VR: Better 3D consistency makes worlds feel real.

- 3D reconstruction and novel view synthesis: Higher-quality inputs mean better 3D models.

- General video generation: Cleaner motion and fewer artifacts, even with moving objects.

Big picture: The paper shows that blending classic computer vision (geometry rules) with modern deep learning (video diffusion models and preference training) can produce videos that look better, move better, and work better for 3D tasks—without sacrificing creativity.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a single, actionable list of what remains missing, uncertain, or unexplored in the paper.

- Applicability beyond static scenes: the reward is only reliable when the scene is static and the camera moves; devise strategies to handle dynamic objects during training (e.g., static-background segmentation, motion masking) and measure reward corruption in non-static content.

- Degenerate camera motions and scene structures: fundamental matrix estimation and Sampson error are ill-posed for pure rotations, near-planar scenes, textureless regions, repetitive patterns, low light, and heavy motion blur; quantify failure modes and incorporate alternative constraints (homographies for pure rotation/planar scenes, trifocal tensor for multi-frame constraints).

- Camera model assumptions: epipolar constraints assume pinhole cameras with fixed intrinsics; generated videos can exhibit zooms, lens distortions, or rolling-shutter artifacts; evaluate sensitivity to intrinsics changes and add per-frame intrinsics estimation or undistortion/calibration to the reward pipeline.

- Frame-pair selection and video-level scoring are underspecified: define and evaluate a protocol for selecting frame pairs (consecutive vs. spaced), number of pairs per video, and aggregation of Sampson errors (median/trimmed mean/weighted by correspondence confidence).

- Matching reliability and coverage: SIFT/RANSAC can fail or yield sparse correspondences; report the rate of matching/RANSAC failures, normalize rewards by correspondence count, enforce spatial coverage (penalize rewards that ignore artifact-prone regions), and test learned descriptors under controlled conditions.

- Reward gaming and metric robustness: the paper notes oversaturation can hack certain descriptors; analyze if Sampson-based alignment can be gamed via blur, saturation, or texture manipulation; add anti-gaming measures (color-invariant matching, coverage constraints, fidelity regularizers).

- Hyperparameter sensitivity: no systematic ablation for DPO β, temporal penalty λ, LoRA rank r/α, pair-filtering thresholds τ and ε; provide sensitivity analyses and tuning guidelines across datasets and model sizes.

- Trade-off with motion richness: dynamic degree drops after alignment; develop multi-objective training to jointly optimize geometry and motion amplitude (e.g., explicit motion magnitude constraints, Pareto-front tuning, conditional motion-level controls).

- Camera trajectory evaluation: beyond VGGT+Gaussian Splatting metrics, report pose accuracy, trajectory smoothness, and consistency (e.g., SfM/SLAM pose errors, jerk and acceleration statistics) and relate them to visual artifacts.

- Long-horizon stability: assess whether improvements persist for longer videos and variable frame rates; extend reward to multi-frame tensors (trifocal/quadric constraints) that enforce consistency across more than two frames.

- Sample efficiency and training cost: offline generation of tens of thousands of videos is expensive; investigate active preference sampling, online DPO with on-the-fly ranking, rejection sampling at inference, or low-cost proxy rewards.

- Correspondence-weighted scoring: Sampson error depends on the number and quality of matches; introduce confidence-weighted aggregation and explicit handling of low-match frames (e.g., fallback metrics, minimum-coverage requirements).

- Generalization to strongly dynamic content: win-rate improvements do not quantify object-motion fidelity; add benchmarks with annotated moving objects, occlusions, and non-rigid motion to measure object-level consistency and background–object disentanglement.

- Semantic alignment effects: text alignment is only weakly evaluated; measure semantic fidelity (e.g., CLIP-based retrieval, human semantic ratings), analyze regression cases, and design mechanisms to preserve content semantics under geometric alignment.

- Cross-model and scale generality: results are shown for Wan-2.1 (1.3B) with LoRA; evaluate scalability to larger models (Wan-14B) and other architectures (LTX-Video, Hunyuan, SVD), and assess whether training recipes or hyperparameters must change.

- Reward composition: only epipolar geometry is used; paper combinations with other classical constraints (e.g., essential matrix with calibrated intrinsics, epipolar flow consistency, perspective-field priors) and learned but geometry-aware metrics, balancing noise vs. signal.

- Downstream 3D tasks breadth: reconstruction is evaluated via Gaussian Splatting; add NeRF/SDF reconstructions, novel-view synthesis on held-out viewpoints, mesh extraction quality, and camera-control downstream tasks to demonstrate practical utility.

- Failure-case analysis: provide qualitative/quantitative analysis of scenarios where alignment harms quality (e.g., excessive stabilization, semantic drift), and propose mitigation strategies (adaptive penalties, prompt-dependent weighting).

- Reproducibility details: missing specifics for matching thresholds, RANSAC parameters, correspondence filtering, frame sampling strategy, prompt expansion settings, and dataset filtering τ/ε values; release full configs, scripts, and code for the reward pipeline.

- Domain bias and fairness: prompts derive from DL3DV/RealEstate10K and VLM expansions for camera motions; assess bias across scene types, cultures, and lighting/weather conditions, and test whether alignment benefits hold in underrepresented domains.

- Inference-time control: the method improves geometry without explicit camera controls; explore integrating camera-trajectory conditioning or post-hoc trajectory stabilization to give users controllable geometric outcomes.

- Online vs. offline alignment: DPO is used offline with precomputed preferences; compare to online preference generation (e.g., Flow-RWR/DDPO variants) to understand convergence speed, stability, and final quality.

Glossary

- AdamW: An optimization algorithm that decouples weight decay from gradient updates to improve training stability. "using the AdamW \cite{adamw} optimizer with a learning rate of and 500 warmup steps."

- Direct Preference Optimization (DPO): A post-training alignment method that optimizes models using pairwise preference rankings instead of explicit rewards. "Our method implements this through Direct Preference Optimization (DPO) \cite{dpo}, requiring only relative rankings rather than absolute reward values."

- Diffusion-DPO: An adaptation of DPO for diffusion model alignment using preference data. "Diffusion-DPO \cite{diffdpo} introduces Direct Preference Optimization into diffusion model alignment."

- Epipole: The point in an image where all epipolar lines intersect, corresponding to the projection of the other camera’s center. "It can be formulated as , where and are the camera projection matrices, is the pseudo-inverse of , and is the epipole in the second view."

- Epipolar constraint: The fundamental relationship that must hold for corresponding points in two views. "For any two corresponding points in one frame and in another, the epipolar constraint must be satisfied, where is the fundamental matrix."

- Epipolar geometry: The projective geometric relationship between two camera views that constrains the positions of corresponding points. "Epipolar geometry represents the intrinsic projective relationship between two views of the same scene, depending only on the camera's internal parameters and relative positions."

- Epipolar line: The line in one image on which the correspondence of a point in the other image must lie. "This constraint ensures that a point in one view must lie on its corresponding epipolar line in the other view."

- Epipolar-DPO: A DPO-based alignment method that uses epipolar geometry metrics to prefer geometrically consistent generations. "Epipolar-DPO (Ours)"

- Flow-DPO: The DPO loss formulated for rectified flow models, comparing velocity fields on preferred vs. less-preferred samples. "For rectified flow models \cite{flow_match, liu2022flow, albergo2022building}, the Flow-DPO loss \cite{videoreward} is:"

- Fundamental matrix: A matrix encoding the epipolar geometry between two uncalibrated views. "where is the fundamental matrix."

- Gaussian Splatting: A 3D scene representation method that models surfaces with collections of Gaussian primitives for fast rendering and reconstruction. "We initialize 3D Gaussian Splatting from extracted scene structure, run 7000 optimization iterations using Splatfacto~\cite{nerfstudio} on 80\% of frames, and evaluate reconstruction fidelity on the remaining 20\%."

- KL-Divergence: A measure of how one probability distribution diverges from a reference distribution, often used as a regularizer. "since it doesn't include KL-Divergence term the model produce clear significant visual artifacts which is not captured by only consistency metrics."

- Latent diffusion models: Generative diffusion models that operate in a learned latent space rather than pixel space for efficiency. "Latent image diffusion models \cite{sdxl, ldm} finetune models on data highly ranked by aesthetics classifiers \cite{Schuhmann2022LAION}."

- LightGlue: A fast learned local feature matcher that finds correspondences between images. "LightGlue finds good correspondences in clean areas when videos contain artifacts, resulting in misleadingly low epipolar error, whereas we want correspondences across the entire scene so artifacts anywhere produce high error."

- LoFTR: A detector-free transformer-based local feature matching method for establishing image correspondences. "the pipeline can also leverage more recent learned descriptors \cite{lightglue, loftr, xfeat}."

- LoRA (Low-Rank Adaptation): A parameter-efficient fine-tuning technique that injects low-rank adapters into layers to reduce training cost. "we implement our approach using Low-Rank Adaptation (LoRA) \cite{lora} with rank and ."

- LPIPS: A learned perceptual similarity metric that correlates with human judgments of visual similarity. "LPIPS decreases from 0.343 to 0.315 (-8.2\%)."

- MiraData: A large-scale video dataset with long durations and structured captions used for benchmarking. "For generalization, we test on VBench 2.0~\cite{vbench}, MiraData \cite{miradata} and VideoReward~\cite{videoreward} benchmarks extending beyond static scenes."

- Normalized 8-point algorithm: A classical method to estimate the fundamental matrix from eight or more point correspondences with normalization for numerical stability. "We then estimate the fundamental matrix using the normalized 8-point algorithm within a RANSAC \cite{fischler81ransac} framework to handle outliers."

- Perspective realism: A metric/model assessing whether image frames exhibit realistic perspective geometry. "Additionally, perspective realism, measured by a model trained to evaluate whether image frames contain realistic perspective \cite{sarkar2024shadows} improves from 0.426 to 0.428"

- Preference-based optimization: Training that uses relative rankings between outputs instead of absolute rewards to guide model updates. "We align diffusion models using pairwise epipolar geometry constraints via preference-based optimization"

- PSNR (Peak Signal-to-Noise Ratio): A reconstruction fidelity metric quantifying the ratio between maximum signal power and error noise. "PSNR increases from 22.32 to 23.13 (+3.6\%)"

- Pseudo-inverse: A generalized matrix inverse used for solving linear least-squares problems and projective relations. " is the pseudo-inverse of "

- RANSAC: A robust estimation algorithm that fits models by iteratively sampling subsets and rejecting outliers. "within a RANSAC \cite{fischler81ransac} framework to handle outliers."

- Rectified flow: A generative modeling technique that learns a velocity (flow) field to transport noise to data along rectified trajectories. "Video generation models have progressed tremendously through large latent diffusion transformers trained with rectified flow techniques."

- Sampson epipolar error: A first-order approximation of the geometric distance of a point to its epipolar line, used to assess consistency. "we can measure the geometric consistency using the Sampson epipolar error \cite{sampson1982fitting}:"

- SEA-Raft: A variant/extension around RAFT optical flow used for dense correspondence, referenced as a descriptor in ablations. "SEA-Raft achieves highest visual quality (80.3\%), we observe it hacks the reward by preferring oversaturated scenes."

- SIFT (Scale-Invariant Feature Transform): A classic feature descriptor and detector used for robust matching across images. "we first compute a set of point correspondences using SIFT \cite{sift} feature matching."

- Splatfacto: A Nerfstudio implementation for optimizing Gaussian Splatting reconstructions. "run 7000 optimization iterations using Splatfacto~\cite{nerfstudio}"

- SSIM (Structural Similarity Index): A perceptual metric measuring image similarity based on luminance, contrast, and structure. "SSIM improves from 0.706 to 0.729 (+3.2\%)."

- Temporal variation penalty: A regularizer that penalizes low temporal variance to prevent degenerate static outputs during alignment. "To prevent degenerate solutions where the model reduces motion to achieve 3D consistency, we add a temporal variation penalty:"

- VBench: A comprehensive benchmark suite for evaluating video generative models across motion and visual quality. "We measure performance using: (1) VideoReward VLM for motion quality assessment, (2) VBench protocol~\cite{vbench} for standardized motion and visual quality metrics"

- Variational Autoencoder (VAE): A generative model that learns latent distributions via variational inference; here, a 3D VAE variant is used in video systems. "Wan-2.1 \cite{wan} introduced an efficient 3D Variational Autoencoder with expanded training pipelines."

- VGGT: A geometry and camera trajectory estimator used to extract scene parameters from videos for reconstruction. "We test whether generated videos support accurate 3D scene reconstruction using VGGT~\cite{vggt} to extract scene parameters and camera trajectories."

- Vision-LLM (VLM): A multimodal model that jointly processes visual and textual inputs to produce assessments or predictions. "We measure performance using: (1) VideoReward VLM for motion quality assessment"

- VideoReward: A preference-based alignment framework and benchmark for video models using learned reward signals. "VideoReward motion quality evaluation shows substantial improvement with our method achieving 69.5\% win rate compared to baseline."

- Velocity field (in rectified flow): The vector field predicted by a rectified flow model that transports noisy samples toward clean outputs. "guiding the predicted velocity field to align with videos exhibiting better 3D consistency while preserving motion quality."

Practical Applications

Immediate Applications

Below are concrete, deployable uses that leverage the paper’s epipolar preference optimization, Sampson-error scoring, and Flow-DPO+LoRA alignment to improve 3D consistency and motion stability in video generation.

Software and Media Production

- Geometric reranking at inference time

- Sector: Software, Media/Entertainment, Advertising

- What: Generate k candidate videos per prompt and select the best using SIFT+RANSAC+Sampson error. Works with any T2V/I2V model without retraining.

- Tools/products/workflows: ComfyUI/Automatic1111 nodes or a Python SDK for “Epipolar Score” reranking; a server-side microservice to score and return top-N results.

- Assumptions/dependencies: Requires multi-sample generation per prompt (compute cost); metric is most robust on static scenes or shots with predominantly camera motion; depends on sufficient texture and matchable features.

- LoRA-based “Geo-aligned” finetuning of internal video generators

- Sector: Media/Entertainment, AdTech, Creative SaaS

- What: Apply Flow-DPO + LoRA to an existing in-house model to reduce jitter, wobble, and geometric artifacts while preserving visual quality.

- Tools/products/workflows: Hosted finetuning service; LoRA adapter packs integrated into existing model hubs; CI-style evaluation with the paper’s metrics.

- Assumptions/dependencies: Access to the base model and GPU for finetuning; static-scene preference data generation (or reuse the released dataset); careful tuning to avoid reduced motion amplitude (use the static penalty).

- Virtual production and previsualization with stable camera moves

- Sector: Film/TV, VFX, Virtual Production

- What: Generate establishing shots, dolly/craning moves, and location fly-throughs with more reliable perspective and fewer artifacts for previs and animatics.

- Tools/products/workflows: “Stable Virtual Camera” presets; shot-generator plugins in Unreal/Blender; AI b‑roll generators for storyboards.

- Assumptions/dependencies: Prompting favors camera motion in largely static scenes; for dynamic actors, pair with separate character animation passes.

- Postproduction-friendly AI shot extension and background generation

- Sector: VFX, Advertising

- What: Produce geometrically consistent plates and extensions that are easier to track, key, and composite.

- Tools/products/workflows: After Effects/Nuke scripts that score and flag “geo-consistent” takes; automatic re‑generation of low‑score segments.

- Assumptions/dependencies: The scoring relies on detectable features; low-light/low-texture scenes may need learned matchers or additional denoising.

3D Asset Creation and XR

- Better 3D reconstruction from generated videos

- Sector: Gaming, E‑commerce (3D product pages), AEC/Design

- What: Use geo-aligned video outputs as multi-view inputs to Gaussian Splatting/NeRF pipelines for cleaner 3D assets and scene captures.

- Tools/products/workflows: “Generate → Estimate cameras (VGGT) → Gaussian Splatting → Export mesh” pipeline; batch asset creation for catalogs or blockouts.

- Assumptions/dependencies: Works best for rigid/static scenes and well-lit content; reconstruction still needs outlier rejection and quality thresholds.

- XR environment and background generation

- Sector: AR/VR/XR, Live events, Virtual classrooms

- What: Generate stable panoramic/backplate content and looping backgrounds with consistent perspective for immersive experiences.

- Tools/products/workflows: XR scene kits; background packs produced via geo-aligned T2V; live previewing with auto-reranking of takes.

- Assumptions/dependencies: For true 6-DoF, additional multiview sampling or explicit camera control is needed; current method yields improved 3D plausibility but not full free-view navigation.

Robotics and Simulation Data

- Synthetic perception datasets with improved geometric fidelity

- Sector: Robotics, Autonomy, Embodied AI

- What: Generate training videos with stable camera trajectories and realistic perspective to reduce label noise for SfM/VO/SLAM pretraining and evaluation.

- Tools/products/workflows: Dataset factories that auto‑rerank/gate content by epipolar score; curriculum datasets for VO/SLAM.

- Assumptions/dependencies: Best for static environments or scenes with limited independent motion; dynamic objects require motion segmentation or masking to avoid penalizing valid motion.

Quality Assurance, Safety, and Forensics

- Production QA gate for 3D consistency

- Sector: Generative AI platforms, Creative SaaS

- What: Automatically flag or reject videos with high epipolar error (likely jitter/flicker) before publishing or client delivery.

- Tools/products/workflows: CI/CD “geo‑health” checks; dashboards tracking Sampson error distributions per model/version.

- Assumptions/dependencies: Score thresholds must be calibrated by content type; dynamic scenes may need adapted scoring.

- Geometry-based anomaly and manipulation screening

- Sector: Trust & Safety, Forensics, Policy

- What: Use epipolar violations to identify suspicious edits or physically implausible composites in videos.

- Tools/products/workflows: Analyst tool that overlays epipolar lines and returns confidence flags; integration into moderation queues.

- Assumptions/dependencies: Genuine handheld footage can also break assumptions (rolling shutter, fast dynamics); treat as a triage signal, not a definitive detector.

Academia and Education

- Benchmarking and research baselines for geometry-aware video generation

- Sector: Academia, Open-source

- What: Adopt the dataset, metrics, and DPO pipeline to evaluate/compare geometry-aware alignment strategies and new reward functions.

- Tools/products/workflows: Reproducible eval harness; ablation suite (descriptors, metrics, DPO variants).

- Assumptions/dependencies: Access to open-source video generators; consistent evaluation seeds and sampling settings.

- Teaching projective geometry with generative examples

- Sector: Education

- What: Classroom labs demonstrating fundamental matrix estimation, RANSAC, and Sampson error using AI-generated video pairs with controlled artifacts.

- Tools/products/workflows: Notebooks with SIFT/LoFTR + eight-point + RANSAC visualizations; side-by-side “consistent vs inconsistent” clips.

- Assumptions/dependencies: Requires visible features and modest compute for descriptor matching.

Creator and Daily-Life Tools

- “Stable AI Cam” for creators

- Sector: Consumer apps, Creator economy

- What: Mobile/desktop feature that generates multiple candidate clips for a prompt and auto-selects the most geometry-consistent take.

- Tools/products/workflows: Batch generation + on-device/server scoring; user knob for “motion amplitude vs stability” trade-off.

- Assumptions/dependencies: Extra inference time for multiple samples; requires sufficiently textured content for reliable matches.

Long-Term Applications

These use cases require further model development, broader training data (dynamic scenes), algorithmic extensions (e.g., dynamic scene geometry), or systems integration.

World Simulation and Digital Twins

- 4D-consistent world generation with controllable cameras and objects

- Sector: Simulation, Digital Twins, Gaming

- What: Extend epipolar-aligned generation to jointly model camera and object motion with multi-body geometric constraints, enabling persistent 3D structure over long sequences.

- Tools/products/workflows: Multi-reward alignment (epipolar + motion segmentation + physical constraints); plug into simulation engines for synthetic data at scale.

- Assumptions/dependencies: Robust handling of dynamic objects; scalable preference generation that remains noise-free; potentially combine with differentiable reconstruction or bundle adjustment.

Free-Viewpoint and 6‑DoF Video from Sparse Inputs

- Captureless free-view navigation from text/image prompts

- Sector: XR, Telepresence, Sports broadcasting

- What: Generate consistent multi-view videos or light fields for 6-DoF playback, reducing capture rig complexity.

- Tools/products/workflows: Multi-camera path control + geometric rewards; automatic camera-graph sampling + consistency filtering.

- Assumptions/dependencies: Need explicit multiview generation control and stronger multi-frame geometry rewards; memory/compute scaling.

Safety Standards and Policy

- Objective “physical consistency” standards for generative video

- Sector: Policy, Procurement, Standards bodies

- What: Establish epipolar/multiview-consistency thresholds as part of standardized AI video quality and safety audits.

- Tools/products/workflows: Certification suites; model cards including 3D-consistency metrics; procurement checklists for public-sector deployments.

- Assumptions/dependencies: Benchmarks must be robust to real-world capture quirks and dynamic scenes; require consensus on thresholds and test sets.

Deepfake and Tamper Detection

- Multi-view and projective-geometry-based detectors

- Sector: Security/Forensics, Platforms

- What: Combine epipolar errors, perspective realism, and multi-view reconstruction residuals to flag physically implausible video segments.

- Tools/products/workflows: Forensic pipelines that fuse geometric cues with GAN-detectors; courtroom-grade reporting.

- Assumptions/dependencies: Must account for rolling shutter, lens distortion, and legitimate dynamic content; requires large-scale validation to minimize false positives.

Autonomy, Robotics, and AV

- High-fidelity synthetic training corpora for VO/SLAM/Planning

- Sector: Robotics, Autonomous Vehicles

- What: Generate geometry‑consistent training videos with controllable camera paths and realistic parallax for perception pretraining; extend to dynamic, multi-agent scenes.

- Tools/products/workflows: Scenario generators parametrized by routes and IMU priors; joint reward signals for epipolar geometry, motion smoothness, and map consistency.

- Assumptions/dependencies: Generalize beyond static scenes; integrate motion-layer decomposition to avoid penalizing legitimate dynamic motion.

Healthcare and Scientific Visualization

- Physically plausible synthetic endoscopy/operative videos for training

- Sector: Healthcare, Medical Education

- What: Use extended geometric rewards (nonrigid/scene-flow aware) to create consistent synthetic training videos for skill practice and AI training.

- Tools/products/workflows: Domain-specific rewards (specularities, deformable geometry); integration with simulators and HMD-based curricula.

- Assumptions/dependencies: Nonrigid motion and low-texture surfaces challenge classic epipolar metrics; requires learned correspondence and deformable geometry constraints.

Interactive Camera Control and Co-pilots

- User-steerable paths with guaranteed geometric plausibility

- Sector: Creative tools, Game engines

- What: Real-time guidance that enforces epipolar/multiview constraints as users sketch camera paths, reducing “bending lines” and perspective drift.

- Tools/products/workflows: In-editor co-pilots for Unreal/Blender; “make this shot physically plausible” button with on-the-fly rerendering.

- Assumptions/dependencies: Tight latency budgets; fast approximate matching or learned proxies for online scoring; robust handling of reflective/texture-poor scenes.

Joint Training with 3D Reconstruction and Physics

- Differentiable geometry-aware training loops

- Sector: Core AI Research, 3D Vision

- What: Combine preference optimization with differentiable multiview reconstruction, bundle adjustment, or physics (PISA/DSO-style) to unify visual fidelity and physical soundness.

- Tools/products/workflows: End-to-end pipelines mixing non-differentiable rewards (epipolar) with differentiable surrogates; multi-component RLHF/RLAIF.

- Assumptions/dependencies: Compute-heavy; careful reward balancing to avoid degenerate minima; broader benchmark coverage.

Notes on feasibility across applications:

- Core assumption: Metric reliability is strongest when the scene is predominantly static and the camera moves. For dynamic scenes, pair with motion segmentation or dynamic-epipolar/scene-flow metrics.

- Dependencies: Access to base video models; compute for multi-sample reranking or LoRA finetuning; feature-rich frames for correspondence; calibrated trade-off between stability and motion amplitude (use temporal-variation penalty).

- Risks: Learnable matchers can be “gamed” by certain artifacts; low-texture/low-light content reduces scoring reliability; over-optimization toward the metric may slightly reduce motion amplitude without proper regularization.

- IP/compliance: Ensure dataset licensing for prompts/videos; disclose objective metrics used in model cards and user-facing claims.

Collections

Sign up for free to add this paper to one or more collections.