StreamVGGT-based Geometry Encoder

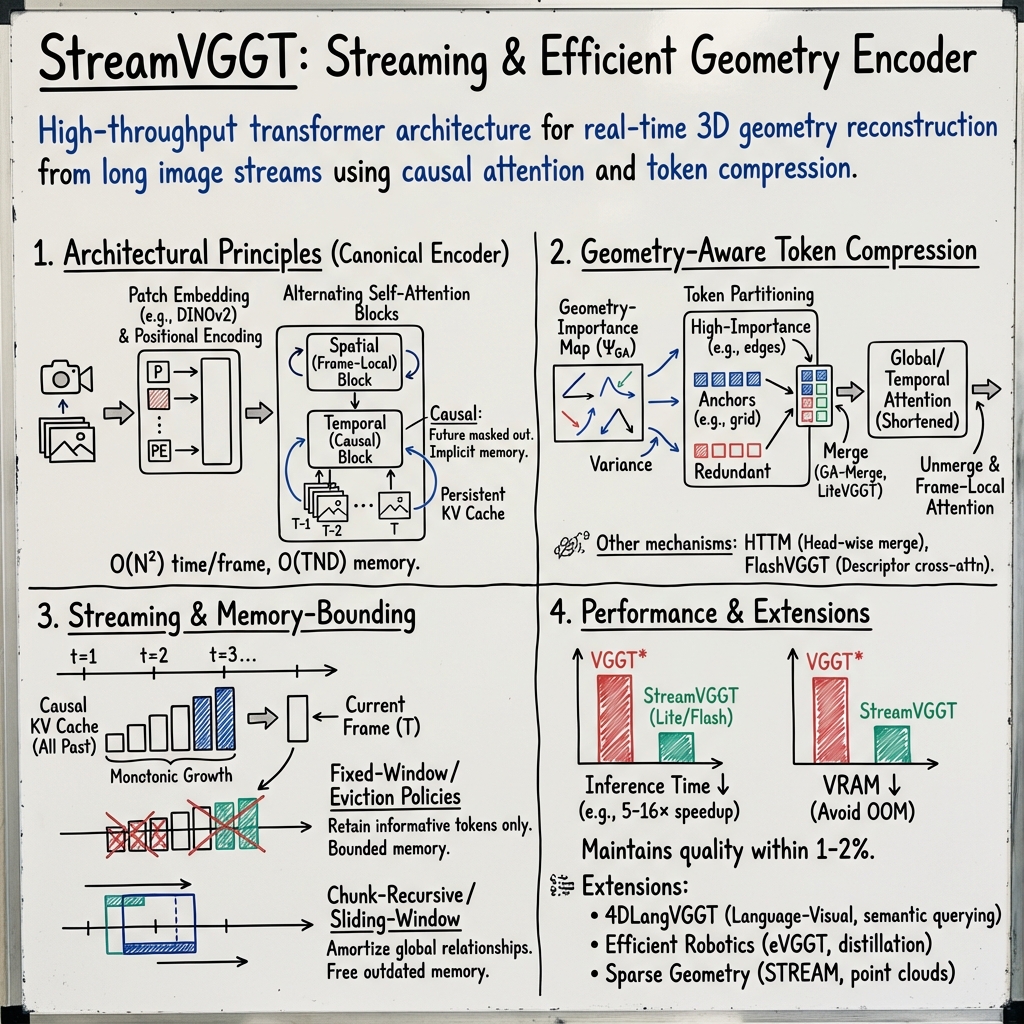

- The paper introduces StreamVGGT-based encoders that implement a two-stage pipeline with patch embedding and alternating self-attention for efficient 3D/4D geometry reconstruction.

- It employs geometry-aware token merging techniques—such as GA-Merge, HTTM, and descriptor-based cross-attention—to reduce computation and memory while preserving reconstruction quality.

- The architecture features streaming strategies with causal KV caching and fixed-window inference, enabling real-time processing and scalable long-sequence vision tasks.

A StreamVGGT-based geometry encoder is a high-throughput, streaming transformer architecture for 3D (and 4D) vision, designed to process long sequences of images or video frames for dense geometry reconstruction, camera-pose estimation, and related spatial tasks. These encoders use autoregressive or causal attention in the temporal domain, optionally combined with geometry-aware token compression, to provide real-time or memory-bounded inference over arbitrarily long streams. They extend the original Visual Geometry Grounded Transformer (VGGT) paradigm toward scalable perception and mapping.

1. Architectural Principles

The canonical StreamVGGT encoder consists of a two-stage processing pipeline:

- Patch Embedding and Positional Encoding: Each incoming frame is patchified (typically into a regular grid), and each patch is embedded using a frozen backbone such as DINOv2. Tokens are augmented with spatial and temporal positional encodings; in some advanced versions (e.g., in language-augmented 4D encoders), a 4D positional encoding that includes spatial coordinates (optionally lifted from previous geometry) and the time index is employed.

- Alternating Self-Attention Blocks: The main body is a stack of transformer layers alternating between frame-local (spatial) and temporal (causal) attention. Temporal attention is strictly causal: at time $t$, the query token block $Q_t$ attends only to key and value tokens from previous or current frames, $K_{\le t}$ and $V_{\le t}$. This implicit memory is realized by persistent KV caches. Layer normalization, residual connections, and feed-forward networks (FFNs) sit between attention operations.

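In notation, for each new frame at time $t$:

$$X_t \in \mathbb{R}^{N \times d},$$

with $N$ patches and embedding dimension $d$. Embeddings are augmented with spatial and temporal positional encodings, for example additively:

$$\tilde{X}_t = X_t + P^{\mathrm{spatial}} + P^{\mathrm{temporal}}_t.$$

Alternating attention is realized as: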

- Spatial block: within-frame multihead self-attention and FFN.

- Temporal block: cross-frame, causal-attention (masked so that each frame cannot see the future), updating current tokens with global context.

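This streaming (“autoregressive”) approach processes frame $t$ in $O(tN^2)$ attention time and $O(tN)$ cache memory (storing the keys and values of all past frames), where $N$ is the number of tokens per frame, compared to $O(t^2N^2)$ for re-running full bidirectional global attention over all $t$ frames each time a new frame arrives (Zhuo et al., 15 Jul 2025, Wu et al., 4 Dec 2025).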
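The causal temporal block with a persistent KV cache can be illustrated with a minimal single-head sketch in plain Python. It is not the StreamVGGT implementation: the `CausalKVCache` class, the identity projections (Q = K = V = tokens), and the toy frames are assumptions made for illustration only.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend(queries, keys, values):
    """Single-head scaled dot-product attention over lists of vectors."""
    scale = math.sqrt(len(queries[0]))
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out

class CausalKVCache:
    """Hypothetical per-layer cache of keys/values for all frames seen so far."""

    def __init__(self):
        self.keys, self.values = [], []

    def step(self, q_t, k_t, v_t):
        # Append the current frame first so it can attend to itself, then
        # attend over frames <= t. Future frames are never in the cache,
        # so no explicit causal mask is needed.
        self.keys.extend(k_t)
        self.values.extend(v_t)
        return attend(q_t, self.keys, self.values)

# Toy stream: 3 frames, N = 2 tokens per frame, d = 2.
# Identity projections (Q = K = V = tokens) are used only for brevity.
frames = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[0.5, 0.5], [1.0, 1.0]],
    [[0.0, 2.0], [2.0, 0.0]],
]
cache = CausalKVCache()
outputs, cache_sizes = [], []
for x_t in frames:
    outputs.append(cache.step(x_t, x_t, x_t))
    cache_sizes.append(len(cache.keys))  # grows by N per frame
```

Each call to `step` scores the N current queries against every cached key, so the work per frame grows with the number of frames already seen, while the cache itself grows by N entries per frame.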

2. Geometry-Aware Token Compression Mechanisms

Due to the quadratic scaling of standard self-attention, StreamVGGT variants integrate geometry-aware token compression to reduce computation and memory footprint without sacrificing reconstruction quality:

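- Geometry-Aware Token Merging (GA-Merge, LiteVGGT): Before temporal/global attention, a per-token geometry-importance map is computed, typically as a convex combination of normalized Sobel-edge gradients and local token variance:

$$I_i = \alpha\,\hat{g}_i + (1-\alpha)\,\hat{v}_i, \qquad \alpha \in [0, 1],$$

where $\hat{g}_i$ denotes the normalized Sobel-edge gradient magnitude and $\hat{v}_i$ the normalized local variance at token $i$.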

Tokens are partitioned into high-importance (sharp edges, texture boundaries), spatially uniform “anchors” (first-frame and grid tokens), and redundant sources. Redundant tokens are merged into their most similar anchors using cosine similarity, reducing sequence length prior to global attention (Shu et al., 4 Dec 2025).

- Head-wise Temporal Token Merging (HTTM): Each attention head independently performs block-wise token merging based on cosine similarity, clustering spatial-temporal neighborhoods and preserving head-specific representational diversity. After global attention, merged outputs are unmapped back to the original token positions, preventing representational collapse caused by forced uniform merges across heads (Wang et al., 26 Nov 2025).

- Descriptor-Based Cross-Attention (FlashVGGT): Patch tokens are downsampled to compact, spatial “descriptor” tokens (e.g., via interpolation). Global relationships are aggregated by cross-attention between full-resolution tokens (as queries) and these descriptors (as keys/values), reducing complexity from $O(L^2)$ to $O(LM)$, where $L$ is the total number of tokens and $M$ (the number of descriptors) is much smaller (Wang et al., 1 Dec 2025).
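The descriptor-based variant can be sketched in plain Python as follows. This is an illustrative sketch, not FlashVGGT's code: `pool_descriptors` uses average pooling over consecutive tokens as a stand-in for interpolation, and the helper names and the toy token values are assumptions.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def pool_descriptors(tokens, group):
    """Average every `group` consecutive tokens into one descriptor.

    Assumption: average pooling stands in for the interpolation-based
    downsampling mentioned in the text.
    """
    descriptors = []
    for i in range(0, len(tokens), group):
        chunk = tokens[i:i + group]
        descriptors.append([sum(col) / len(chunk) for col in zip(*chunk)])
    return descriptors

def cross_attend(queries, descriptors):
    """Full-resolution queries attend to descriptors used as keys and values."""
    scale = math.sqrt(len(queries[0]))
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in descriptors]
        weights = softmax(scores)
        out.append([sum(w * k[j] for w, k in zip(weights, descriptors))
                    for j in range(len(q))])
    return out

tokens = [[float(i), float(i % 3)] for i in range(12)]  # 12 full-resolution tokens
descs = pool_descriptors(tokens, group=4)                # 3 descriptors
out = cross_attend(tokens, descs)

full_scores = len(tokens) ** 2          # score entries for full self-attention
desc_scores = len(tokens) * len(descs)  # score entries for descriptor cross-attention
print(full_scores, desc_scores)         # 144 36
```

The output keeps one vector per full-resolution token, but the score matrix shrinks from 12 × 12 to 12 × 3 entries.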

These mechanisms can be combined with merge-assignment caching, where merge indices are recomputed only every $k$ layers and reused across the layers in between to further amortize computation (Shu et al., 4 Dec 2025).
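A minimal sketch of the merge and unmerge steps, assuming a one-dimensional token order, min-max normalization of the edge and variance scores, and a fixed stride for anchor selection. These choices, the helper names, and the toy values are illustrative assumptions and are not taken from LiteVGGT.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb + 1e-12)

def importance(edge, var, alpha=0.5):
    """Convex combination of min-max-normalized edge and variance scores."""
    def norm(xs):
        lo, hi = min(xs), max(xs)
        return [(x - lo) / (hi - lo) if hi > lo else 0.0 for x in xs]
    return [alpha * e + (1 - alpha) * v for e, v in zip(norm(edge), norm(var))]

def partition(scores, keep_ratio, anchor_stride):
    """Split indices into kept (high-importance), dst (anchors), src (redundant)."""
    n = len(scores)
    n_keep = max(1, int(round(keep_ratio * n)))
    keep = sorted(sorted(range(n), key=lambda i: -scores[i])[:n_keep])
    dst = [i for i in range(0, n, anchor_stride) if i not in keep]
    src = [i for i in range(n) if i not in keep and i not in dst]
    return keep, dst, src

def merge(tokens, keep, dst, src):
    """Average each src token into its most cosine-similar dst anchor."""
    assign = {s: max(dst, key=lambda d: cosine(tokens[s], tokens[d])) for s in src}
    groups = {d: [d] for d in dst}
    for s, d in assign.items():
        groups[d].append(s)
    merged = [tokens[i] for i in keep]
    for d in dst:
        members = [tokens[i] for i in groups[d]]
        merged.append([sum(col) / len(members) for col in zip(*members)])
    return merged, assign

def unmerge(merged, keep, dst, assign, n):
    """Replicate each cluster output back to all of its original positions."""
    slot = {i: j for j, i in enumerate(keep + dst)}
    out = [None] * n
    for i in keep + dst:
        out[i] = merged[slot[i]]
    for s, d in assign.items():
        out[s] = merged[slot[d]]
    return out

tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9],
          [1.0, 1.0], [5.0, -5.0], [-1.0, -1.0], [0.8, 0.2]]
edge = [0.1, 0.1, 0.2, 0.1, 0.3, 0.9, 0.1, 0.2]  # toy Sobel magnitudes
var = [0.0, 0.1, 0.1, 0.0, 0.2, 1.0, 0.1, 0.1]   # toy local variances

scores = importance(edge, var)
keep, dst, src = partition(scores, keep_ratio=0.125, anchor_stride=2)
merged, assign = merge(tokens, keep, dst, src)
# In a real encoder, global attention would run on `merged` before unmerging.
restored = unmerge(merged, keep, dst, assign, len(tokens))
```

In this toy example the sequence shrinks from 8 to 5 tokens before global attention, and unmerging restores one output per original position. Under merge-assignment caching, `assign` would be recomputed only at selected layers and reused elsewhere.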

3. Streaming and Memory-Bounding Strategies

In true streaming settings, where image or video frames arrive incrementally:

- Causal KV Caching: For each global/temporal block, keys and values from all previous frames are cached, providing context for temporal self-attention with memory that grows linearly in the number of frames seen. This resembles large language model inference: each new frame is processed incrementally against the cache, and memory grows monotonically (Zhuo et al., 15 Jul 2025).

- Fixed-Window or Eviction Policies: To prevent unbounded memory growth, recent advances propose attention-weighted token eviction. The cache is dynamically pruned, retaining only the most informative tokens as determined by metrics such as cumulative attention received, normalized for exposure and row-length, while always preserving certain anchor tokens (e.g., camera or first-frame). Layer-specific memory budgets can be set based on a softmax over per-layer sparsity priors (Mahdi et al., 22 Sep 2025).

- Chunk-Recursive/Sliding-window Inference: Some variants (FlashVGGT, chunked HTTM) process streaming data in recurrence over fixed-sized temporal windows/chunks, amortizing global relationships over compressed states and freeing memory associated with outdated segments. This allows scaling to thousands of frames while preserving online processing semantics (Wang et al., 1 Dec 2025, Wang et al., 26 Nov 2025).
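The eviction idea can be sketched as a small selection routine. This is a simplified illustration rather than the Evict3R algorithm: it normalizes cumulative attention by exposure only (row-length normalization and per-layer budgets are omitted), and the function name, arguments, and toy statistics are hypothetical.

```python
def evict(cum_attn, exposure, anchors, budget):
    """Return the sorted cache indices to keep under a fixed token budget.

    cum_attn[i]: total attention mass cache token i has received so far.
    exposure[i]: number of query rows that could have attended to token i.
    anchors:     indices that are always preserved (e.g. first-frame tokens).
    """
    # Normalize by exposure so older tokens are not favored merely because
    # they have been visible to more queries.
    scores = [a / max(e, 1) for a, e in zip(cum_attn, exposure)]
    keep = set(anchors)
    candidates = sorted((i for i in range(len(scores)) if i not in keep),
                        key=lambda i: -scores[i])
    for i in candidates:
        if len(keep) >= budget:
            break
        keep.add(i)
    return sorted(keep)

cum_attn = [3.0, 1.2, 1.5, 0.1, 0.9, 0.4]
exposure = [6, 6, 4, 4, 2, 2]
kept = evict(cum_attn, exposure, anchors=[0], budget=3)
print(kept)  # [0, 2, 4]
```

Ranking by raw cumulative attention would retain token 1 (1.2) over token 4 (0.9). After dividing by exposure, token 4 scores 0.45 against 0.2 for token 1, so the more recent token is retained instead.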

A typical streaming forward pass (GA-Merge) proceeds as:

For each frame:

1. Patch-embed the frame, form tokens, and add positional encodings.
2. Partition: compute the geometry-importance map and select the GA (high-importance), dst (anchor), and src (redundant) token sets.
3. Cache or reuse merge assignments.
4. Merge src tokens into dst anchors.
5. Run the (shortened) global/temporal attention.
6. Unmerge: replicate cluster outputs to their constituent token positions.
7. Run frame-local attention.
8. Decode geometry estimates.

4. Quantitative Performance and Practical Benchmarks

Extensive benchmarks demonstrate that StreamVGGT-based encoders with geometry-aware merging and efficient streaming protocols provide substantial improvements:

| Model (1000 frames, ScanNet50) | Chamfer Dist. ↓ | Inference Time (s) ↓ | VRAM (GiB) |
|---|---|---|---|
| VGGT* (full self-attn) | 0.471 | 724.6 | OOM beyond 500 images |
| LiteVGGT (GA-Merge + caching + FP8) | 0.428 | 127 | 45 |
| FastVGGT (merge, training-free) | 0.425 | 180.7 | – |
| FlashVGGT (compressed descriptors) | 1.13 | 35 | 61 |
| HTTM (Q 20%, K/V 30%) | matches Acc/Comp | 102.8 | – |

Careful selection of kept tokens (typically only 10% by geometric importance or salient sampling) and rolling window/cached merges retain reconstruction quality within 1–2% of the full baseline while achieving 5–16× speedups and similar (or better) memory reductions (Shu et al., 4 Dec 2025, Shen et al., 2 Sep 2025, Wang et al., 1 Dec 2025, Wang et al., 26 Nov 2025).

5. Extensions and Adaptations

StreamVGGT-style encoders serve as core modules in several broader systems:

- Language-Visual Transformers: In multi-modal systems such as 4DLangVGGT, StreamVGGT geometry tokens are retained and bridged into the language space via an explicit semantic decoder, enabling open-vocabulary, real-time 4D semantic querying while retaining geometric and structural fidelity (Wu et al., 4 Dec 2025).

- Efficient Robotics: Student models such as eVGGT distill the geometry-aware capabilities of full VGGT (and by extension StreamVGGT) into smaller, faster encoders, facilitating real-time inference for control and imitation learning while preserving 3D awareness. Streaming extensions can be formed by re-using register tokens as recurrent memory (Vuong et al., 19 Sep 2025).

- Sparse/Irregular Geometry: The “StreamVGG-T” concept extends naturally to sparse-data settings (point clouds, events) by leveraging state-space models (SSMs, as in STREAM), which integrate event-time or spatial deltas to compute all-pairs kernel interactions in linear rather than quadratic time in the number of points or events, producing competitive or state-of-the-art accuracy in event-based and point-cloud classification (Schöne et al., 2024).

6. Limitations and Future Directions

StreamVGGT-based encoders address the major bottlenecks of classic bidirectional VGGT models but introduce several trade-offs:

- Information Loss: Aggressive token merging or descriptor compression can omit fine details in challenging geometry, though adaptive merging, outlier filtering, and explicit anchor-token preservation mitigate such loss (Wang et al., 26 Nov 2025, Shu et al., 4 Dec 2025).

- Scalability: Memory-bounding via eviction is an effective but heuristic measure, possibly discarding relevant context under pathological frame orderings. Dynamic policies and per-layer prioritization ameliorate this but cannot guarantee optimality (Mahdi et al., 22 Sep 2025).

- Chunked Causality: Sliding-window or chunk-based approaches do not capture truly unbounded-temporal dependencies; their effectiveness depends on window size and scene statistics (Wang et al., 1 Dec 2025).

Further research explores dynamic merge budgeting, more principled memory compression, and unified abstractions combining state-space and transformer models to handle both dense and sparse geometric input efficiently and robustly.

References

- (Shu et al., 4 Dec 2025) LiteVGGT: Boosting Vanilla VGGT via Geometry-aware Cached Token Merging.

- (Shen et al., 2 Sep 2025) FastVGGT: Training-Free Acceleration of Visual Geometry Transformer.

- (Wang et al., 26 Nov 2025) HTTM: Head-wise Temporal Token Merging for Faster VGGT.

- (Wang et al., 1 Dec 2025) FlashVGGT: Efficient and Scalable Visual Geometry Transformers with Compressed Descriptor Attention.

- (Mahdi et al., 22 Sep 2025) Evict3R: Training-Free Token Eviction for Memory-Bounded Streaming Visual Geometry Transformers.

- (Zhuo et al., 15 Jul 2025) Streaming 4D Visual Geometry Transformer.

- (Wu et al., 4 Dec 2025) 4DLangVGGT: 4D Language-Visual Geometry Grounded Transformer.

- (Vuong et al., 19 Sep 2025) Improving Robotic Manipulation with Efficient Geometry-Aware Vision Encoder.

- (Schöne et al., 2024) STREAM: A Universal State-Space Model for Sparse Geometric Data.