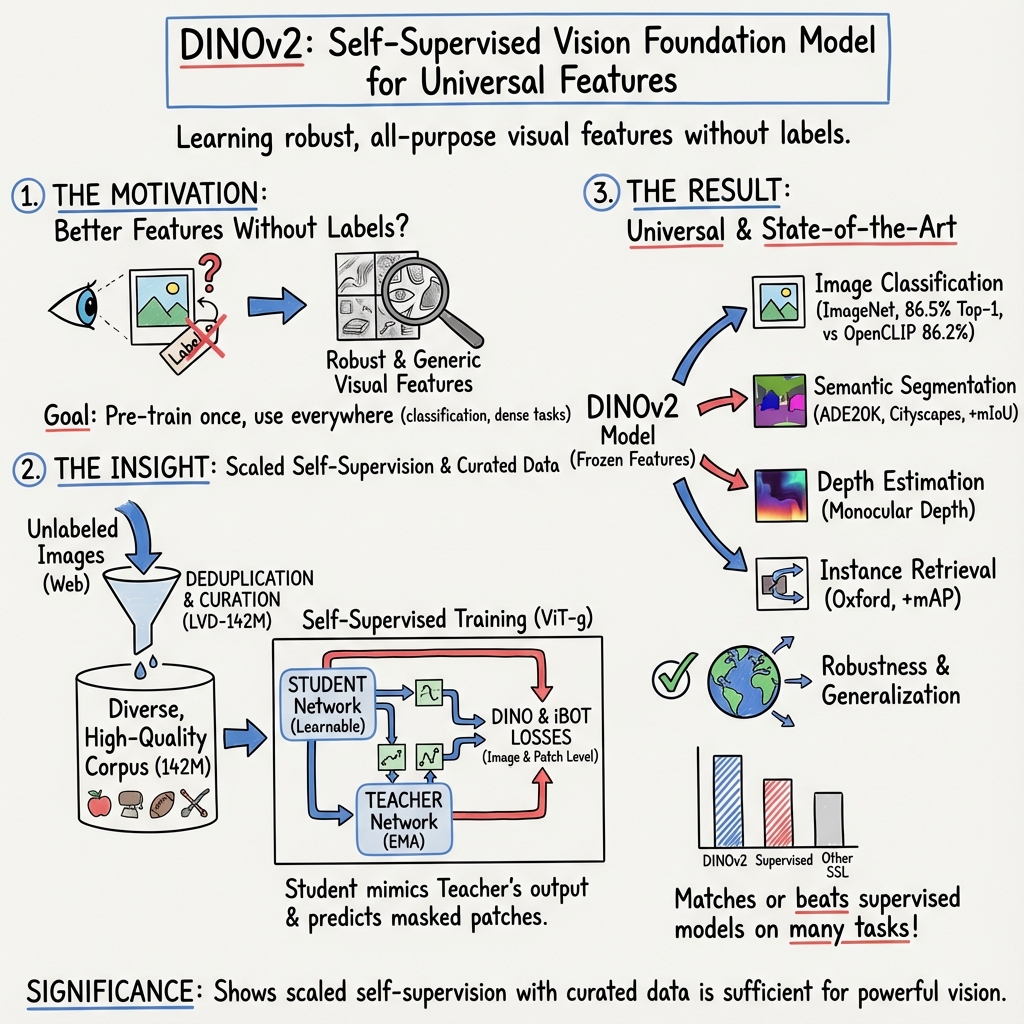

DINOv2: Scalable Self-Supervised ViT Model

- DINOv2 is a self-supervised vision model based on Vision Transformer architecture that generates universal visual features without requiring fine-tuning.

- It uses a student-teacher framework with untied projection heads and efficient attention mechanisms to enhance feature diversity and stability during training.

- The model achieves state-of-the-art performance in image classification, segmentation, and retrieval, demonstrating robust transferability across various vision tasks.

DINOv2 is a self-supervised vision foundation model based on the Vision Transformer (ViT) architecture, designed to generate robust and universal visual features applicable across a wide range of computer vision and multimodal tasks without supervision or fine-tuning. Developed by Oquab et al., DINOv2 marked a significant advance in the scaling and stabilization of self-supervised pretraining, dataset curation, and the utility of transformer-derived representations as generic visual backbones for both image-level and dense prediction problems.

1. Model Foundations and Architecture

DINOv2 leverages large-scale ViT models—such as ViT-S/14, ViT-B/14, ViT-L/14, and ViT-g/14 (up to 1.1B parameters)—that partition images into non-overlapping patches, embed their content via linear projections, and process the resulting token sequences through deep stacks of self-attention layers. Key architectural advancements include:

- Untied projection heads: Separate multi-layer perceptron (MLP) heads are used for image-level and patch-level losses, improving representation richness and stability during scaling compared to earlier approaches where both losses shared a single head.

- SwiGLU feed-forward layers in from-scratch models, and classic MLPs in distilled models.

- Efficient attention and stochastic depth: A custom FlashAttention implementation and aggressive stochastic depth (drop rates up to 40%) keep the training of deep transformer models stable and memory-efficient.

- Scaling modifications: Embedding and head dimensions are tuned for optimal throughput, especially in the largest "giant" ViT variants.
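The patch partitioning described at the start of this section can be illustrated with a minimal sketch. The function names `num_tokens` and `patchify` are hypothetical, the image is a plain list of rows rather than a tensor, and the learned linear projection and positional embeddings that follow patch extraction in a real ViT are omitted.

```python
def num_tokens(image_size: int, patch_size: int = 14) -> int:
    """Tokens a ViT sees for a square image: one per patch plus one [CLS]."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be a multiple of patch_size")
    patches_per_side = image_size // patch_size
    return patches_per_side * patches_per_side + 1


def patchify(image, patch_size):
    """Split an H x W image (list of rows) into flattened non-overlapping patches."""
    height, width = len(image), len(image[0])
    if height % patch_size != 0 or width % patch_size != 0:
        raise ValueError("image sides must be multiples of patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Row-major flattening of one patch_size x patch_size block.
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches


# A 224 x 224 input with 14 x 14 patches gives 16 * 16 patches plus [CLS].
print(num_tokens(224))  # 257

# Toy 4 x 4 "image" with 2 x 2 patches, standing in for real pixel data.
toy = [[row * 4 + col for col in range(4)] for row in range(4)]
print(patchify(toy, 2)[0])  # [0, 1, 4, 5]
```

Because the token count grows quadratically with the image side, input resolution directly drives sequence length and therefore attention cost.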

2. Training Methodology and Self-Supervision

Training follows a multi-part self-supervised learning design, combining innovations from previous approaches (DINO, iBOT) and introducing further mechanisms for diversity and stability:

- Student-teacher framework: Two augmented views of the same image are processed by a student network (learnable parameters) and a teacher network (an exponential moving average of the student). The [CLS] token of each network is passed through its own DINO head and a softmax, and the student is trained to match the teacher's prediction via cross-entropy:

$$\mathcal{L}_{\mathrm{DINO}} = -\sum p_t \log p_s$$

where $p_t$ and $p_s$ are the soft distributions from teacher and student.

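- Patch-level (iBOT-style) loss: Masked patches in the student branch are predicted from full (unmasked) teacher features, using independent MLP heads per objective:

$$\mathcal{L}_{\mathrm{iBOT}} = -\sum_{i} p_{t,i} \log p_{s,i}$$

where $i$ runs over the masked patch tokens and $p_{t,i}$, $p_{s,i}$ are the teacher and student distributions for patch $i$.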

- Batch regularization and centering: Teacher outputs are normalized with Sinkhorn-Knopp centering for better batch-wise diversity, and a KoLeo entropy regularizer encourages spread in the feature space.

- Resolution adaptation: A short final phase of pretraining runs at higher input resolution, which improves dense (pixel-level) transfer at a small fraction of the cost of training at high resolution throughout.
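The image-level cross-entropy, the exponential-moving-average teacher, and the KoLeo regularizer from the list above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the authors' implementation: the temperatures (0.04 and 0.1) and the momentum (0.996) are illustrative defaults that this text does not specify, parameters are flat lists of floats, and the Sinkhorn-Knopp centering of teacher outputs is left out.

```python
import math


def log_softmax(logits, temperature):
    """Temperature-scaled log-softmax, shifted by the max for stability."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    log_total = math.log(sum(math.exp(x - peak) for x in scaled))
    return [x - peak - log_total for x in scaled]


def cross_entropy(teacher_logits, student_logits,
                  teacher_temp=0.04, student_temp=0.1):
    """-sum_k p_t[k] * log p_s[k]; the teacher acts as a fixed target."""
    p_t = [math.exp(v) for v in log_softmax(teacher_logits, teacher_temp)]
    log_p_s = log_softmax(student_logits, student_temp)
    return -sum(t * ls for t, ls in zip(p_t, log_p_s))


def ema_update(teacher_params, student_params, momentum=0.996):
    """Teacher weights track the student as an exponential moving average."""
    return [momentum * t + (1.0 - momentum) * s
            for t, s in zip(teacher_params, student_params)]


def koleo(features, eps=1e-8):
    """-(1/n) * sum_i log(min_{j != i} ||x_i - x_j||) on L2-normalized rows."""
    normed = []
    for x in features:
        norm = math.sqrt(sum(v * v for v in x)) + eps
        normed.append([v / norm for v in x])
    n = len(normed)
    total = 0.0
    for i in range(n):
        nearest = min(math.dist(normed[i], normed[j])
                      for j in range(n) if j != i)
        total += math.log(nearest + eps)
    return -total / n


teacher = [2.0, 0.0, -1.0]
print(cross_entropy(teacher, [2.0, 0.0, -1.0]))   # small: student agrees
print(cross_entropy(teacher, [-1.0, 0.0, 2.0]))   # large: student disagrees
```

The KoLeo term is lowest when each feature's nearest neighbor in the batch is far away, which is the sense in which it encourages spread in feature space.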

Scaling to large models and datasets is enabled by sequence packing, efficient distributed training (PyTorch FSDP with mixed precision), and model distillation, in which smaller ViT variants learn from the largest frozen model by matching its predictions.

3. Data Pipeline and Curated Pretraining Corpus

A central innovation of DINOv2 is its automatically curated LVD-142M dataset (~142 million images), designed to maximize both visual and semantic diversity:

- Deduplication: Near-duplicates are removed using nearest-neighbor search on image embeddings followed by connected-component analysis of the resulting similarity graph. Images that near-duplicate the validation or test sets of commonly used benchmarks are also removed to prevent leakage.

- Curated and web-sourced pools: Datasets such as ImageNet-1k/22k and Google Landmarks are combined with deduplicated web-crawled images.

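- Self-supervised retrieval: Using an SSL ViT, curated images are embedded and used as queries to retrieve similar samples from uncurated pools via cosine similarity:

$$m(s, r) = \frac{f(s) \cdot f(r)}{\lVert f(s) \rVert_2 \, \lVert f(r) \rVert_2}$$

where $s$ and $r$ are two images and $f$ is the embedding produced by the SSL model.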

- Rebalancing and concept coverage: K-means and cluster-based sampling avoid overrepresentation of dominant object classes, ensuring broad concept diversity.

- Scalability: The curation pipeline, accelerated by GPU-based FAISS, processes >1B images in days; this scale and efficiency underpin the model's transferability and unusual robustness.
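The retrieval and deduplication steps above can be illustrated on toy embeddings. This is a brute-force sketch under stated assumptions: the function names, the 2-D vectors, and the 0.99 similarity threshold are hypothetical, and the all-pairs comparison stands in for the GPU-accelerated FAISS nearest-neighbor search that the pipeline relies on at scale.

```python
import math


def cosine_similarity(a, b):
    """Dot product of two vectors divided by the product of their L2 norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query, pool, k):
    """Indices of the k pool embeddings most similar to the query."""
    ranked = sorted(range(len(pool)),
                    key=lambda i: cosine_similarity(query, pool[i]),
                    reverse=True)
    return ranked[:k]


def deduplicate(embeddings, threshold):
    """Keep one index per connected component of the near-duplicate graph."""
    parent = list(range(len(embeddings)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(len(embeddings)):
        for j in range(i + 1, len(embeddings)):
            if cosine_similarity(embeddings[i], embeddings[j]) >= threshold:
                root_i, root_j = find(i), find(j)
                if root_i != root_j:
                    # Attach the larger root to the smaller one, so each
                    # component is represented by its lowest index.
                    parent[max(root_i, root_j)] = min(root_i, root_j)
    return sorted({find(i) for i in range(len(embeddings))})


# Hypothetical uncurated pool: two near-duplicate pairs and one outlier.
pool = [[1.0, 0.0], [0.999, 0.01], [0.0, 1.0], [0.01, 0.999], [-1.0, 0.0]]
print(deduplicate(pool, threshold=0.99))    # [0, 2, 4]
print(retrieve([1.0, 0.0], pool, k=2))      # [0, 1]
```

Connected components matter because near-duplication is not transitive under a fixed threshold: image A may match B and B may match C even when A and C fall just below it, and grouping by component still collapses all three.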

4. Benchmark Results and Comparative Performance

DINOv2 exhibits state-of-the-art or near-state-of-the-art performance—without text supervision—across a battery of benchmarks:

- Image-level (classification, recognition) results: On ImageNet-1k, the ViT-g model achieves 86.5% top-1 accuracy (linear probe), overtaking OpenCLIP ViT-G (86.2%) and matching weakly-supervised SOTA models.

- Superior generalization is observed in out-of-distribution settings (ImageNet-V2, -ReaL, -A/C/R/Sketch).

- On fine-grained datasets (Food-101, Cars, Aircraft, etc.), DINOv2 matches or surpasses OpenCLIP and prior SSL.

- For video understanding (UCF101, Kinetics-400, SSv2), DINOv2 features are competitive with or better than OpenCLIP, especially for action recognition from frozen features.

- Dense prediction tasks: In segmentation (ADE20K, Cityscapes, VOC), DINOv2 with frozen features and simple decoders achieves results close to fully supervised fine-tuned models and notably surpasses all previous SSL/WSL alternatives (e.g., +8–12 mIoU on ADE20K over OpenCLIP).

- In monocular depth estimation, DINOv2 features yield clear improvements over previous methods.

- Robustness and instance-level retrieval: Gains are largest in robustness and transfer benchmarks (ImageNet-A/R/Sketch) and in instance retrieval (Oxford Hard, up to +34% mAP), demonstrating the importance of curated, diverse pretraining data.

- Ablations show that curated data and model scale contribute more than sheer quantity of uncurated data, and larger models amplify the benefits of diversity.

5. Applications and Implications

- All-purpose frozen features: DINOv2 can be used as an out-of-the-box backbone for image and pixel-level tasks (classification, segmentation, retrieval, transfer learning) without additional fine-tuning.

- Dense and patch-level applicability: The representations encode not just global image semantics, but also spatial and geometric information, supporting tasks such as part recognition, matching, and dense correspondence.

- Domain generalization and fairness: DINOv2 is less biased toward Western/high-income visual concepts than past models, and shows strong generalization to out-of-distribution or cross-domain settings (Dollar Street, etc.).

- Scalable and efficient pretraining: Training is significantly faster and less resource-intensive than contemporary text-guided models (e.g., CLIP, OpenCLIP), with open-source code and checkpoints facilitating reproducibility.
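Using frozen features typically means training only a lightweight classifier on top of fixed embeddings, as in the linear-probe evaluations cited earlier. The sketch below is a minimal softmax linear probe trained by full-batch gradient descent. Everything in it is an assumption made for illustration: the 2-D vectors are toy stand-ins for backbone embeddings (no DINOv2 model is loaded), and the function names, learning rate, and step count are hypothetical.

```python
import math


def train_linear_probe(features, labels, num_classes, lr=0.5, steps=200):
    """Fit softmax regression on fixed features; the backbone is never updated."""
    dim = len(features[0])
    n = len(features)
    weights = [[0.0] * dim for _ in range(num_classes)]
    bias = [0.0] * num_classes
    for _ in range(steps):
        grad_w = [[0.0] * dim for _ in range(num_classes)]
        grad_b = [0.0] * num_classes
        for x, y in zip(features, labels):
            logits = [sum(w * v for w, v in zip(weights[c], x)) + bias[c]
                      for c in range(num_classes)]
            peak = max(logits)
            exps = [math.exp(z - peak) for z in logits]
            total = sum(exps)
            for c in range(num_classes):
                # Gradient of cross-entropy w.r.t. the logit: p_c - 1[c == y].
                err = exps[c] / total - (1.0 if c == y else 0.0)
                grad_b[c] += err / n
                for d in range(dim):
                    grad_w[c][d] += err * x[d] / n
        for c in range(num_classes):
            bias[c] -= lr * grad_b[c]
            for d in range(dim):
                weights[c][d] -= lr * grad_w[c][d]
    return weights, bias


def predict(weights, bias, x):
    """Class index with the highest logit."""
    logits = [sum(w * v for w, v in zip(w_c, x)) + b
              for w_c, b in zip(weights, bias)]
    return max(range(len(logits)), key=logits.__getitem__)


# Toy "frozen features" for two classes (hypothetical values).
feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
labels = [0, 0, 1, 1]
w, b = train_linear_probe(feats, labels, num_classes=2)
print([predict(w, b, x) for x in feats])  # [0, 0, 1, 1]
```

Only the probe's weights and biases are learned here, so the accuracy of such a classifier reflects how linearly separable the backbone's features already are.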

6. Significance for Computer Vision and Future Foundation Models

DINOv2 shows that self-supervised learning, when scaled with high-quality, diverse, curated data, can match or exceed state-of-the-art text-supervised models on a wide range of vision tasks. It dispels the notion that weak (text) supervision or costly manual annotation is required for robust, transferable features. The resulting all-purpose features serve as an off-the-shelf resource for practitioners and researchers across vision domains. This positions DINOv2 as a vision analogue of language models such as BERT and GPT: general, robust, and reusable across a broad range of image understanding contexts.

The internal structure of its learned representations, as evidenced by linear probe results and visualizations, further reveals flexibility for adaptation and explainability. DINOv2 thus provides both a technical blueprint and empirical demonstration of the viability of scalable self-supervised pretraining for future foundation models in computer vision.