Hybrid Gated Flow (HGF): Stabilizing 1.58-bit LLMs via Selective Low-Rank Correction

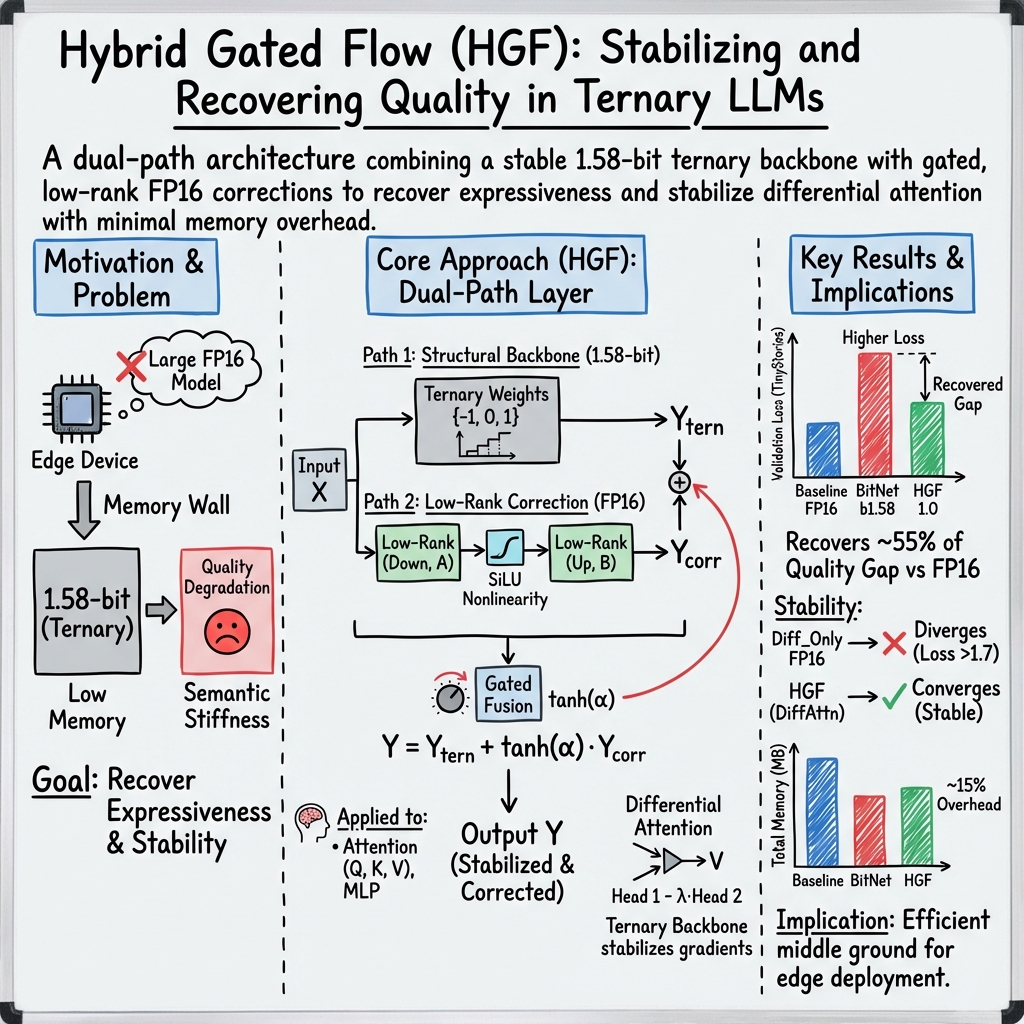

Abstract: The deployment of LLMs on edge devices is fundamentally constrained by the "Memory Wall" -- a hardware limitation where memory bandwidth, not compute, becomes the bottleneck. Recent 1.58-bit quantization techniques (e.g., BitNet b1.58) dramatically reduce memory footprint but typically incur a perplexity degradation of 20-25% compared to FP16 baselines. In this work, we introduce Hybrid Gated Flow (HGF), a dual-stream architecture that couples a 1.58-bit ternary backbone with a learnable, low-rank FP16 correction path controlled by adaptive gates. Through extensive experiments on the TinyStories dataset across two training regimes (2500 and 3500 steps), we demonstrate that HGF 5.4 achieves a validation loss of 0.9306 compared to BitNet's 1.0294, recovering approximately 55% of the quality gap between pure ternary quantization and the FP16 baseline (0.8490). This recovery is achieved with only ~12-15% memory overhead beyond the ternary backbone. Furthermore, we provide empirical evidence for an emergent phenomenon: quantization as structural regularization. While a full-precision differential attention baseline (Diff_Only) exhibited training instability with validation loss exceeding 1.68, the ternary-anchored HGF maintained robust convergence throughout training. Finally, we report preliminary results extending this architecture to 1.2B and 3B parameter models trained on SlimPajama and FineWeb-Edu. These larger-scale experiments confirm that the architectural stability and quality recovery observed in small-scale proxies scale linearly to production-grade language modeling regimes.

Explain it Like I'm 14

Overview

This paper is about making LLMs run well on small, low-power devices like phones or tiny computers. The big problem is that LLMs need lots of memory, which can become a bottleneck. One popular trick to save memory is “quantization,” which stores model weights using very few bits. This paper introduces a new model design called Hybrid Gated Flow (HGF). It keeps most of the model super compact with 1.58-bit weights, but adds a small, smart, high-precision “helper path” that fixes the biggest mistakes. The result is better quality than using 1.58-bit weights alone, with only a small increase in memory.

What questions did the researchers ask?

- Can we keep the huge memory savings of extreme quantization (using only three weight values: −1, 0, 1) while getting back much of the lost quality?

- Can adding a small, carefully controlled high-precision path make these tiny-weight models more stable and accurate during training?

- Is quantization itself helpful for stability, acting like a built-in “regularizer” that prevents certain training failures?

How did they try to solve it?

The problem: “Memory Wall”

LLMs are often limited by how fast data can move between memory and the processor, not by how fast the processor can compute. Using fewer bits per weight (quantization) cuts memory and speeds up inference, but usually hurts quality.
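The memory arithmetic behind this is easy to check. A weight restricted to three values carries log2(3) ≈ 1.58 bits of information, which is where the "1.58-bit" name comes from. The sketch below compares weight storage only (no activations, KV cache, or optimizer state) for a hypothetical 1.2B-parameter model; the model size and the ideal log2(3)-bit packing are illustrative assumptions. Many practical kernels store each ternary value in 2 bits instead, which lowers the ratio to 8×.

```python
import math

FP16_BITS = 16.0
TERNARY_BITS = math.log2(3)  # information content of one {-1, 0, 1} value, ~1.585 bits


def weight_storage_gib(num_params: int, bits_per_weight: float) -> float:
    """Weight storage in GiB; ignores activations, KV cache, and optimizer state."""
    return num_params * bits_per_weight / 8 / 2**30


num_params = 1_200_000_000  # hypothetical model size, for illustration only
fp16_gib = weight_storage_gib(num_params, FP16_BITS)
ternary_gib = weight_storage_gib(num_params, TERNARY_BITS)
packed_gib = weight_storage_gib(num_params, 2.0)  # 2-bit packing of ternary values

print(f"FP16:            {fp16_gib:.2f} GiB")
print(f"ternary (ideal): {ternary_gib:.2f} GiB  ({fp16_gib / ternary_gib:.1f}x smaller)")
print(f"ternary (2-bit): {packed_gib:.2f} GiB  ({fp16_gib / packed_gib:.1f}x smaller)")
```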

The idea: A hybrid, two-path model

Think of the model like a road with two lanes:

- Lane 1: A fast, super-efficient backbone where weights are “ternary” (only −1, 0, or 1). This is the 1.58-bit part that saves lots of memory and speeds things up.

- Lane 2: A small, high-precision correction lane that “nudges” the backbone when it’s wrong. This lane uses standard 16-bit floating-point math (FP16), but only in a low-rank, lightweight form.

These two lanes are combined with a “gate,” which is like a volume knob deciding how much the correction lane should contribute. During training, the gate learns the best balance and is later frozen (fixed) so inference stays fast and predictable.
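In code, the two lanes and the gate amount to a gated sum. The sketch below is a minimal pure-Python illustration, not the paper's implementation: the function name `hgf_linear`, the per-layer `scale`, the placement of SiLU between the two low-rank factors, and the tanh-bounded scalar gate are assumptions based on the terms the paper uses (tanh gates, SiLU low-rank correction). A real model would run this with fused GPU kernels rather than Python lists.

```python
import math
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Dense matrix-vector product with W stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def silu(v: float) -> float:
    """SiLU (Swish): v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))


def hgf_linear(x: Vector, W_ternary: Matrix, scale: float,
               A: Matrix, B: Matrix, gate_param: float) -> Vector:
    """Hypothetical gated two-lane linear layer.

    Lane 1 (backbone): ternary weights in {-1, 0, 1}, rescaled by `scale`.
    Lane 2 (correction): rank-r path B @ SiLU(A @ x), kept in full precision.
    The gate tanh(gate_param) lies in (-1, 1) and sets how much lane 2 adds.
    """
    backbone = [scale * v for v in matvec(W_ternary, x)]
    hidden = [silu(h) for h in matvec(A, x)]  # r-dimensional bottleneck
    correction = matvec(B, hidden)
    g = math.tanh(gate_param)
    return [b + g * c for b, c in zip(backbone, correction)]


# Usage: 3 inputs, 2 outputs, rank-1 correction, gate set to roughly 0.1.
x = [1.0, -2.0, 0.5]
W_t = [[1, 0, -1], [0, 1, 1]]
A = [[0.1, 0.2, 0.0]]   # r x d_in
B = [[1.0], [-1.0]]     # d_out x r
print(hgf_linear(x, W_t, 0.5, A, B, gate_param=math.atanh(0.1)))
```

With the gate at 0 the layer reduces exactly to the ternary backbone, which is one reason a small, bounded gate is a convenient knob.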

Explaining the parts in everyday terms

- Quantization (ternary weights): Imagine replacing a smooth dimmer knob with a three-position switch: negative, off, and positive (−1, 0, +1). This makes the model smaller and faster but less precise.

- Low-Rank Adaptation (LoRA) correction: Picture a small helper that focuses only on the most important adjustments, like a teacher who marks only the few biggest mistakes on a paper. It uses far fewer numbers than a full correction would.

- Gates: A gate controls how much the helper’s fixes are added in. It starts small, learns during a “warmup,” gets gently regularized, and is then frozen around 10% contribution so it doesn’t wobble.
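The three-position switch can be written down directly. A minimal sketch, assuming the abs-mean scaling that BitNet b1.58 uses and that this paper's glossary describes (divide by the mean absolute weight, round, clip to {-1, 0, 1}); the function name is hypothetical, and the paper's exact quantizer, including any learned scale, may differ.

```python
from typing import List, Tuple


def ternary_quantize(weights: List[float], eps: float = 1e-8) -> Tuple[List[int], float]:
    """Abs-mean ternary quantization (sketch).

    Returns (q, scale) with every q[i] in {-1, 0, 1}, so that weights[i] ≈ scale * q[i].
    Note: Python's round() rounds half to even, so exact ties at ±0.5 map to 0.
    """
    scale = sum(abs(w) for w in weights) / len(weights) + eps
    q = [max(-1, min(1, round(w / scale))) for w in weights]
    return q, scale


w = [0.9, -0.05, 0.4, -1.2]
q, scale = ternary_quantize(w)
approx = [scale * qi for qi in q]
print(q, round(scale, 4))  # [1, 0, 1, -1] 0.6375
print(approx)
```

Small weights collapse to 0 and large ones saturate at ±1, so only the sign pattern and one shared scale survive; that lost detail is what the correction lane is meant to restore.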

Differential attention and stability

They also use “differential attention,” which is like comparing two attention heads and subtracting one from the other to cancel common noise—similar to noise-canceling headphones. This can be powerful but unstable in full precision. The ternary backbone acts like a safety rail, keeping the values bounded so training doesn’t blow up.
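The noise-canceling idea is a subtraction of two attention maps. A minimal sketch for a single query, assuming the Differential Transformer form softmax(s1) − λ·softmax(s2), with λ fixed here for illustration (in the Differential Transformer λ is learned); the scores are made-up numbers. Noise shared by both maps cancels, but the result is no longer a probability distribution: it can contain negative weights and sums to 1 − λ, one plausible reason this mechanism benefits from the bounds a ternary backbone imposes.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def differential_attention_weights(scores_1: List[float], scores_2: List[float],
                                   lam: float) -> List[float]:
    """Attention weights for one query: softmax(scores_1) - lam * softmax(scores_2)."""
    p1 = softmax(scores_1)
    p2 = softmax(scores_2)
    return [a - lam * b for a, b in zip(p1, p2)]


# Map 1 focuses on token 2 on top of a flat background; map 2 sees only the background.
s1 = [1.0, 1.0, 3.0, 1.0]
s2 = [1.0, 1.0, 1.0, 1.0]
w = differential_attention_weights(s1, s2, lam=0.5)
print([round(v, 3) for v in w])
print(round(sum(w), 3))  # equals 1 - lam
```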

Training approach

- Warmup: Let the gates learn freely and find useful correction levels.

- Regularization: Apply gentle pressure so gates don’t grow too strong.

- Freeze: Stop gate learning so the model trains around a stable correction amount.
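The three phases can be expressed as a small schedule object consulted at each training step. This is a hypothetical sketch: the class name, the step boundaries, the linear ramp, and the quadratic penalty on the tanh-bounded gate are illustrative assumptions, not values or formulas reported in the paper.

```python
from dataclasses import dataclass


@dataclass
class GateSchedule:
    """Hypothetical warmup -> regularize -> freeze schedule for HGF-style gates."""
    warmup_steps: int = 500   # illustrative values, not taken from the paper
    freeze_step: int = 1500
    max_penalty: float = 1e-2

    def phase(self, step: int) -> str:
        if step < self.warmup_steps:
            return "warmup"       # gates learn freely, no penalty
        if step < self.freeze_step:
            return "regularize"   # gates still learn, under a growing penalty
        return "frozen"           # gates no longer receive updates

    def gate_is_trainable(self, step: int) -> bool:
        return self.phase(step) != "frozen"

    def penalty_weight(self, step: int) -> float:
        """0 during warmup, linear ramp toward max_penalty while regularizing, 0 once frozen."""
        if self.phase(step) != "regularize":
            return 0.0
        progress = (step - self.warmup_steps) / (self.freeze_step - self.warmup_steps)
        return self.max_penalty * progress


def gate_penalty(gate_value: float, weight: float) -> float:
    """Quadratic pull of the (tanh-bounded) gate value toward zero, added to the loss."""
    return weight * gate_value ** 2


sched = GateSchedule()
for step in (0, 499, 500, 1000, 1499, 1500, 3000):
    print(step, sched.phase(step), round(sched.penalty_weight(step), 5),
          sched.gate_is_trainable(step))
```

In a PyTorch training loop, a frozen gate would typically be taken out of optimization (for example by setting `requires_grad = False` on the gate parameter), and the gates could sit in their own optimizer parameter group to get the separate learning rate described under Dual Learning Rate.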

What did they find?

At a mid-training checkpoint (2,500 steps) on the TinyStories dataset:

- A standard FP16 model achieved a validation loss of 0.8490 (lower is better).

- A pure 1.58-bit (ternary) model got 1.0294, which is worse but very efficient.

- HGF got 0.9306, closing about 55% of the gap between the ternary model and the FP16 baseline, with only about 12–15% extra memory beyond the ternary baseline.
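The 55% figure follows from the three reported losses: the FP16-to-ternary gap is 1.0294 − 0.8490 = 0.1804, and HGF closes 1.0294 − 0.9306 = 0.0988 of it. As a quick check (the function name is illustrative):

```python
def quality_recovery(loss_fp16: float, loss_ternary: float, loss_hybrid: float) -> float:
    """Fraction of the ternary-vs-FP16 validation-loss gap closed by the hybrid model."""
    gap = loss_ternary - loss_fp16
    return (loss_ternary - loss_hybrid) / gap


r = quality_recovery(loss_fp16=0.8490, loss_ternary=1.0294, loss_hybrid=0.9306)
print(f"{r:.1%}")  # 54.8%
```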

They also saw:

- Stability: A full-precision “differential attention only” model became unstable and trained poorly (validation loss above 1.68). With the ternary backbone plus the gated correction, HGF stayed stable. This suggests quantization acts like “structural regularization”: it keeps values in safe ranges.

- The gate settled around 0.1: In simple terms, the final model is about 90% ternary backbone and 10% high-precision correction, which was enough to recover a lot of quality.

- Value path matters: Removing the correction from the Value part of attention hurt performance notably. This means both “where to look” (Query/Key) and “what content to carry” (Value) benefit from small, precise fixes.

- Faster convergence: HGF reached its best validation loss earlier than the full-precision baseline, suggesting it may need fewer training steps to reach a given quality target.

Why does this matter?

This hybrid approach lets you:

- Run useful LLMs on small devices: With big memory savings and mostly integer math, models become practical on phones, Raspberry Pi-like boards, or cars—without needing a powerful server.

- Serve more users per GPU in the cloud: Smaller model memory means more concurrent users, improving cost-effectiveness.

- Keep training stable: The ternary backbone can prevent certain types of training crashes, especially with advanced attention tricks like differential attention.

However, there are trade-offs:

- It still doesn’t match full-precision quality in every case.

- Fully realizing speed gains may require specialized low-level kernels that handle ternary math well.

- Results on very large models (billions of parameters) are still being tested, although early signals look promising.

Bottom line

Hybrid Gated Flow (HGF) cleverly combines extreme compression (1.58-bit ternary weights) with a small, gated high-precision correction. This design recovers more than half of the quality lost to heavy quantization while keeping memory and compute demands low. It stabilizes training, especially with differential attention, and seems practical for edge devices and efficient cloud serving. If you want a model that is “mostly tiny and fast” but still “a bit smart and precise,” HGF shows a promising way to get both.

Knowledge gaps, limitations, and open questions

The paper leaves the following points unresolved:

- Scaling and generalization: Provide rigorous, finalized results for 1.2B–7B models on SlimPajama/FineWeb-Edu and standard LM benchmarks (e.g., The Pile, WikiText), including full training curves, checkpoints, and reproducibility artifacts.

- Real-world efficiency: Report end-to-end latency, throughput, and energy per token on representative edge devices and GPUs using the Triton kernels; compare against FP16, 4-bit (AWQ/GPTQ), and BitNet under identical serving conditions.

- Hardware readiness: Assess availability, portability, and stability of ternary kernels across toolchains and devices; quantify memory bandwidth utilization and identify bottlenecks that prevent achieving the theoretical 2.7× speedup.

- Evaluation breadth: Move beyond validation loss on TinyStories to perplexity across diverse corpora, generation quality metrics (e.g., MAUVE), human evaluations, and downstream tasks (MMLU, GSM8K, ARC), including toxicity and safety metrics.

- Robustness and stability: Quantify gradient variance and training stability for differential attention with and without HGF across multiple seeds; perform sensitivity analyses for learning rates, gate regularization, and differential attention parameter λ.

- Gate mechanism design: Explore per-layer, per-head, and input-conditioned gates; compare gating functions (tanh vs. sigmoid/softplus), regularizers, and schedules (e.g., no freeze, cyclical annealing) to optimize the expressiveness–stability trade-off.

- LoRA rank and placement: Systematically sweep LoRA rank r and nonlinearity (SiLU vs. GELU/ReLU), and test correction placement beyond Q/K/V (e.g., MLP, output projection, embeddings); explicitly ablate the decision to halve V’s dimension.

- Quantizer choices and calibration: Clarify the mismatch between “Absmax Quantization” and the use of mean absolute value scaling; compare per-channel absmax, percentile, learned scales, and thresholding strategies to improve ternary calibration.

- STE bias and alternatives: Empirically measure the gradient bias introduced by the Straight-Through Estimator and evaluate alternative estimators (stochastic rounding, soft-to-hard relaxations, proxy losses) for improved convergence and accuracy.

- Low-rank error hypothesis: Validate that the quantization residual X(W − Ẇ)ᵀ is predominantly low-rank via spectrum analysis (SVD) across layers and training epochs; identify layers/conditions where the hypothesis fails and adjust correction rank accordingly.

- Theoretical foundations: Provide rigorous proofs or tighter, empirically verified bounds for the stated gradient variance and “quantization as regularization” claims, including explicit assumptions and counterexamples/stress tests.

- Training protocol generality: Test the dual learning-rate and warmup–regularize–freeze gate schedule across datasets and scales; compare to alternative schedules (cosine, layerwise freezing) and quantify their impact on convergence and final quality.

- Saturation behavior: Investigate the slight loss increase from 2.5k to 3.5k steps in HGF; determine whether early stopping is generally optimal, and how data size/curriculum/regularization affect saturation and overfitting.

- Memory accounting realism: Include optimizer states, activation memory, KV-cache, and context memory in both training and inference footprints; validate the claimed 12–15% overhead in realistic deployment scenarios.

- Stronger baselines: Benchmark against modern 4-bit PTQ/QAT (AWQ, GPTQ), 2-bit methods, and hybrid QLoRA on ternary backbones under matched training budgets to establish relative Pareto efficiency.

- Differential attention specifics: Ablate λ initialization and dynamics, normalization/bounding of logits, and compare to standard multi-head attention to precisely locate the source of Diff_Only instability and HGF’s stabilizing effect.

- Long-context behavior: Measure attention routing quality and retrieval performance at large context lengths; evaluate HGF’s impact on long-range dependencies and memory decay relative to FP16 and BitNet.

- Generalization, calibration, and safety: Assess calibration (ECE), uncertainty under distribution shift, robustness to noise/adversarial prompts, and safety/bias metrics to understand the regularization effects beyond loss.

- Reproducibility and release: Publish source code, Triton kernels, trained checkpoints, and logs sufficient to reproduce all tables/figures; provide seeds and scripts for multi-run statistics.

- Cross-modality and transfer: Test HGF on vision/audio Transformers and multimodal LLMs; study fine-tuning and transfer learning when retaining the low-rank correction path.

- Mixed-precision activations: Evaluate per-channel vs. per-token Int8 activation quantization and activation quantization during training; analyze their interactions with gate learning and gradient flow.

- Inference-time adaptivity: Explore runtime gate adaptation (per-input or per-token) to trade off quality and latency dynamically, including policies for resource-aware serving.

- Error localization: Identify layers/blocks contributing most to quantization error to enable targeted correction placement that minimizes memory overhead while maximizing quality gains.

- KV-cache quantization: Study quantization of KV caches and how it interacts with HGF gates and differential attention; quantify impacts on throughput and generation quality.
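As a starting point for the memory-accounting item above, the weight-only overhead of a rank-r FP16 correction on a single d_out × d_in projection is r·(d_in + d_out)·16 bits, against d_in·d_out·b bits for the ternary backbone, where b is log2(3) ≈ 1.58 for ideal packing or 2 for 2-bit packing. The sketch below uses hypothetical widths and ranks, not the paper's configuration, and deliberately leaves out activations, KV cache, optimizer state, and scale factors, which a realistic accounting would have to add.

```python
import math


def correction_overhead(d_in: int, d_out: int, rank: int,
                        ternary_bits: float = math.log2(3), fp_bits: int = 16) -> float:
    """Weight storage of an FP16 rank-r correction (A: r x d_in, B: d_out x r),
    as a fraction of a ternary d_out x d_in backbone matrix. Weights only."""
    backbone_bits = d_in * d_out * ternary_bits
    correction_bits = rank * (d_in + d_out) * fp_bits
    return correction_bits / backbone_bits


# Hypothetical square projections; overhead grows with rank and shrinks with width.
for d in (512, 1024, 2048):
    for r in (4, 8, 16):
        print(f"d={d:5d} r={r:3d}  ideal: {correction_overhead(d, d, r):6.1%}"
              f"  2-bit: {correction_overhead(d, d, r, ternary_bits=2):6.1%}")
```

Because the ratio scales roughly as 32r / (b·d) for square projections, a fixed rank costs proportionally less in wider models, so an overhead figure is only meaningful alongside the rank, width, packing, and set of corrected projections.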

Practical Applications

Immediate Applications

Below are actionable, real-world uses that can be deployed with modest integration effort, leveraging the paper’s findings (1.58-bit ternary backbones + gated low-rank FP16 correction, training schedule, and preliminary Triton kernels).

Edge and Embedded Inference (consumer, automotive, industrial IoT, healthcare)

- On-device private assistants (voice, chat, keyboards) on 2–4 GB RAM devices such as Raspberry Pi-class SBCs, Jetson Nano, smartphones, and smart speakers. Products: offline voice assistant boxes; privacy-first smartphone assistants; SmartTV/chat remotes.

- Tools/workflows: HGF layers as drop-in modules in PyTorch; activation int8 quantization; gate warmup→regularize→freeze training schedule; LoRA rank selection to fit memory.

- Assumptions/dependencies: Availability of fused ternary + LoRA kernels (Triton/CUDA) for meaningful speedups; acceptable ~10–20% quality delta vs FP16 for the target UX; small/medium models (≤3B) fit device memory; activation calibration stable across domains.

- In-vehicle assistants without always-on connectivity (infotainment, maintenance manuals Q&A).

- Tools/workflows: Preload compact HGF models with fixed gates; local retrieval from car manual documents.

- Assumptions/dependencies: Automotive-grade NPUs or efficient CUDA support; predictable latency under thermal limits.

- Industrial IoT natural-language interfaces at the edge (status queries, alert explanations, local log summarization).

- Tools/workflows: Event-triggered inference; quantized streaming interface; HGF model selected per device SKU.

- Assumptions/dependencies: Ternary kernels on embedded accelerators; operator acceptance of slightly lower generative quality.

Cloud Cost Optimization and Multi-tenant Serving (software, cloud platforms)

- Increase batch density per GPU for chat and inference endpoints by shrinking model memory footprints 6–10× versus FP16, while recovering ~55% of the quality gap between ternary-only baselines and FP16.

- Products: “HGF-serving” engine with fused kernels; autoscaling profiles optimized for memory bandwidth; SLAs with “quality knob” presets.

- Assumptions/dependencies: Context cache dominates memory after model load; fused kernels to avoid dual-branch overhead; monitoring for gate-calibrated quality.

- A/B testing of quality/latency tiers via fixed gate values (g ≈ 0.1 by default) or LoRA rank variants (cost-optimized SKUs).

- Tools/workflows: Routing middleware assigns sessions to HGF ranks/gates; usage-based pricing tiers.

- Assumptions/dependencies: Stability when swapping gates/ranks across sessions; calibration datasets for each tier.

Privacy, Compliance, and Data Localization (finance, healthcare, public sector)

- On-prem and on-device inference to minimize data transfer and meet data residency policies (GDPR/HIPAA-aligned deployments when paired with proper governance).

- Products: Compliance-ready HGF inference appliance; secure logging and audit trails.

- Assumptions/dependencies: Legal/compliance review still required; quality gap acceptable for task; model red-teaming independent of compression.

Rapid Prototyping and Teaching in Academia

- Stable differential-attention experiments using quantization as implicit regularizer; course labs on hybrid precision and training stability.

- Tools/workflows: Reference HGF layers and training recipe (live initialization, dual LR, gate freezing); TinyStories for fast iterations; SlimPajama/FineWeb-Edu for scale-up labs.

- Assumptions/dependencies: Access to PyTorch 2.x + Triton; reproducibility via provided seeds and schedules.

- Early stopping and budget-aware training: exploit observed saturation (~2.5k steps in small configs) to save compute on exploratory runs.

- Tools/workflows: Loss-slope monitors trigger stopping; hyperparameter sweeps over ranks/gates.

- Assumptions/dependencies: Saturation behavior generalizes to nearby model sizes; task-specific stopping criteria validated.
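A loss-slope monitor of the kind mentioned above can be very small. This hypothetical helper returns the first evaluation step at which validation loss improved by less than `min_drop` over the preceding `window` evaluations, one concrete reading of "Capacity Saturation Time". The loss curve in the usage example is synthetic, not data from the paper, and the window and threshold would need tuning per task.

```python
from typing import Optional, Sequence


def saturation_step(steps: Sequence[int], val_losses: Sequence[float],
                    window: int = 2, min_drop: float = 0.05) -> Optional[int]:
    """First step whose validation loss is less than `min_drop` below the loss
    `window` evaluations earlier; None if improvement never falls that low."""
    if len(steps) != len(val_losses):
        raise ValueError("steps and val_losses must have the same length")
    for i in range(window, len(val_losses)):
        if val_losses[i - window] - val_losses[i] < min_drop:
            return steps[i]
    return None


# Synthetic curve for illustration only.
steps = [500, 1000, 1500, 2000, 2500, 3000, 3500]
losses = [1.60, 1.25, 1.08, 0.98, 0.94, 0.935, 0.94]
print(saturation_step(steps, losses))  # 3000
```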

Developer Tooling and Framework Integration

- HGF as a drop-in module for Transformers libraries with configuration schemas for: ternary backbone, LoRA rank, gate schedule, activation int8 quantization.

- Products: Hugging Face integration (config + weights); ONNX Runtime/TensorRT plugins for ternary+LoRA fused ops.

- Assumptions/dependencies: Open-sourcing of kernels and checkpoints as stated; operator fusion passes implemented.

Low-resource Education and Accessibility

- Offline literacy and tutoring assistants on low-cost devices in bandwidth-constrained regions; on-device writing aids and screen readers.

- Tools/workflows: Domain-adapted HGF fine-tunes; locale-specific tokenization; speech-text pipelines.

- Assumptions/dependencies: Multilingual training/fine-tuning; evaluation for cultural/linguistic bias; modest quality tolerance.

Long-Term Applications

These opportunities likely require further research, scaling, hardware/software maturation, or broader validation (especially at 7B+ parameters and beyond).

Hardware/Kernel Co-design (semiconductors, systems)

- Specialized ternary/T-MAC units and instructions with fused dequantization and low-rank correction paths to realize the 2–3× throughput gains suggested by compute analysis.

- Products: NPU/ASIC/ISA extensions for 1.58-bit ops; compiler passes that auto-fuse HGF branches.

- Assumptions/dependencies: Vendor adoption; standardized ternary GEMM APIs; end-to-end toolchains.

Scaled LLMs and Multimodal Models (≥7B parameters; vision/audio)

- Training and serving 7B–70B HGF variants; extending gated low-rank correction to vision encoders and audio front-ends for on-device multimodal assistants.

- Tools/workflows: Layer-/head-wise gate schedules; modality-specific ranks; cross-modal calibration.

- Assumptions/dependencies: Confirmed scaling curves (preliminary signals are not conclusive); larger pretraining datasets; stronger kernel support.

Adaptive/Dynamic Gating at Inference (energy, mobile, cloud)

- Real-time “quality knob” that adjusts gate values per layer/token based on latency/energy budget, user tier, or context difficulty.

- Products: APIs exposing latency/energy-quality tradeoff; controllers that modulate g and rank r on the fly.

- Assumptions/dependencies: Stability under dynamic gates; safe ranges per layer; calibration to prevent drift or artifacts.

Federated and On-device Continual Learning (healthcare, finance, mobile)

- Train only the low-rank correction path on-device and share encrypted low-rank updates (not raw data), leveraging quantization as regularization to improve stability.

- Tools/workflows: Federated optimization for LoRA matrices; differential privacy wrappers; client selection policies.

- Assumptions/dependencies: Communication-efficient protocols; privacy guarantees; server-side aggregation of low-rank deltas.

Safety-Critical and Certifiable ML (aerospace, medical devices, industrial control)

- Use quantization-induced boundedness to support verification, robustness analysis, and certification of attention mechanisms.

- Products: Verification toolkits exploiting bounded logits; certifiable HGF components with fixed gates.

- Assumptions/dependencies: Formal methods adapted to hybrid precision; domain-specific certification processes.

Robotics and Real-time Autonomy

- Embedded LLM reasoning onboard drones/AMRs for instruction following, recovery behaviors, and natural-language tasking without cloud dependency.

- Tools/workflows: Real-time schedulers that allocate ternary vs correction compute; task-aware gating policies.

- Assumptions/dependencies: Deterministic latency with fused kernels; robustness to distribution shift; safe fallback behaviors.

Public Policy and Sustainability

- Energy-aware AI deployments: guidance that favors hybrid low-bit models for public services (kiosks, e-government) to reduce bandwidth and power usage.

- Tools/workflows: Procurement templates specifying hybrid-precision targets; reporting of energy-per-token and data-localization benefits.

- Assumptions/dependencies: Third-party LCA studies quantifying energy/carbon savings; standardized metrics for memory-bandwidth-limited regimes.

AutoML and Architecture Search

- Automated search over per-layer LoRA rank, gate values, and which projections (Q/K/V/MLP) receive correction, optimizing for Pareto fronts of quality vs memory/latency.

- Products: NAS/AutoML plugins specialized for hybrid-precision design spaces.

- Assumptions/dependencies: Reliable proxies for quality and efficiency; scalable evaluation harnesses.

Cross-cutting Assumptions/Dependencies (impacting many applications)

- Fused ternary + LoRA kernels: Needed to realize practical speedups; otherwise benefits are mainly memory-side.

- Acceptable quality gap: ~10% degradation vs FP16 may be unsuitable for high-stakes tasks; domain fine-tuning or higher ranks may be required.

- Scaling evidence: Preliminary results for 1.2B–7B are promising but not yet conclusive; final checkpoints and logs are pending.

- Toolchain maturity: PyTorch/Triton implementations, ONNX/TensorRT backends, and Hugging Face integrations must be robust for production.

- Data and domain shifts: Activation quantization and gate calibration may need per-domain tuning; multilingual and specialized domains require additional validation.

Glossary

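- Absmax quantization: Although the name usually refers to scaling by the maximum absolute value, the paper describes scaling weights by an abs-mean (mean absolute value) factor and then rounding and clipping them to the ternary set. "We employ absmax quantization with learned scale factors."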

- AdamW: An optimizer that decouples weight decay from the gradient-based update to improve generalization. "AdamW optimizer"

- Attention dilution: The tendency of softmax attention to become uniform as context length grows, reducing discriminative power. "Standard softmax attention suffers from "attention dilution" — as context length increases, attention weights become increasingly uniform, losing discriminative power."

- Batch density: The number of concurrent sequences/users a system can serve given memory constraints. "HGF's memory efficiency enables higher batch density."

- BF16: Brain floating point format with 16 bits that preserves FP32 range with reduced precision, used to speed up training and save memory. "Mixed precision training (BF16) on NVIDIA L4 GPU."

- BitNet b1.58: A 1.58-bit (ternary) quantized Transformer architecture emphasizing extreme compression. "BitNet b1.58"

- Capacity Ceiling: A limit in representational capacity observed in ultra-low-bit models that raises perplexity and degrades generation quality. "they suffer from a "Capacity Ceiling" that manifests as elevated perplexity and degraded generation quality."

- Capacity Saturation Time: The training step at which improvement slows below a threshold, indicating capacity is effectively utilized. "Capacity Saturation Time"

- Causal self-attention: An attention mechanism that prevents positions from attending to future tokens to preserve autoregressive causality. "causal self-attention"

- Dequantization: The process of rescaling integer-quantized values back to floating-point during or after computation. "broadcasted element-wise multiplication for dequantization."

- Differential attention: An attention variant that subtracts one head’s distribution from another to enhance discriminative focus. "Differential Attention"

- Differential operator: An operator that computes a difference between two signals or heads to suppress common-mode components. "via a differential operator ()"

- Differential signaling: An electronics-inspired technique where information is conveyed by the difference between two signals to cancel noise. "analogous to differential signaling in electronics."

- Dual Learning Rate: A training strategy using separate learning rates for different parameter subsets (e.g., gates vs. main weights). "Dual Learning Rate"

- Effective bit-width: A measure of the net precision contributed by combined quantized and auxiliary (e.g., low-rank) paths. "the effective bit-width is:"

- Gate Freezing: The practice of stopping updates to gate parameters after a schedule to stabilize training. "Gate Freezing"

- Gate Gradient Dynamics: The analysis of how gradients flow through gate parameters that modulate auxiliary paths. "Gate Gradient Dynamics"

- Gate Regularization Schedule: A time-dependent penalty that constrains gate magnitudes before freezing to prevent extremes. "Gate Regularization Schedule"

- Gate Saturation: A regime where gate values approach ±1, causing gradients through the gate to vanish. "Gate Saturation"

- Gated Fusion: A mechanism that blends outputs from multiple paths (e.g., ternary and FP16 correction) using a learnable gate. "Gated Fusion"

- GEMM (Int8): General matrix-matrix multiplication executed in 8-bit integer arithmetic for efficiency. "GEMM_{Int8}"

- Hybrid Gated Flow (HGF): A dual-path architecture combining a ternary backbone with a gated low-rank FP16 correction stream. "Hybrid Gated Flow (HGF)"

- Hyperbolic tangent: A bounded activation function tanh used to constrain gate values within (-1, 1). "is the derivative of the hyperbolic tangent."

- Integer matrix multiplication: Matrix multiply performed in integer precision (e.g., Int8) for speed and bandwidth savings. "⊗_{Int8} denotes integer matrix multiplication"

- Live Initialization: Initializing correction-path parameters with small non-zero noise so gates receive immediate learning signal. "Live Initialization"

- Lipschitz constant: A bound on how rapidly a function (e.g., loss surface) can change, used to analyze gradient bias from STE. "the Lipschitz constant of the loss surface"

- LoRA (Low-Rank Adaptation): A technique that inserts low-rank matrices into linear layers to efficiently adapt or correct models. "Low-Rank Adaptation (LoRA)"

- Low-Rank Correction: A residual path that models quantization error within a low-dimensional subspace. "Low-Rank Correction"

- Memory Wall: A hardware bottleneck where memory bandwidth, not compute, limits performance. "Memory Wall"

- Mixed precision training: Training with reduced-precision formats (e.g., BF16/FP16) to accelerate compute and reduce memory usage. "Mixed precision training (BF16) on NVIDIA L4 GPU."

- Pareto-optimal frontier: The set of solutions trading off quality and cost where improving one dimension worsens the other. "can yield a Pareto-optimal frontier between inference cost and generation quality."

- Perplexity: A standard metric for LLM uncertainty; higher perplexity indicates worse performance. "perplexity degradation"

- Post-training quantization (PTQ): Reducing model precision after full-precision training, without further training; some methods (e.g., GPTQ) adjust the remaining weights to compensate for rounding error. "Post-training quantization (PTQ) methods like GPTQ"

- Quality Recovery: The fraction of performance regained by hybrid corrections relative to the gap introduced by quantization. "Quality Recovery"

- Quantization Error: The discrepancy between full-precision outputs and their quantized equivalents. "Quantization Error"

- Quantization-aware training (QAT): Training models with quantization effects in the loop to reduce post-quantization accuracy loss. "Quantization-aware training (QAT) methods"

- SiLU (Swish): An activation function defined as x·sigmoid(x), used for nonlinear low-rank correction. "the SiLU (Swish) activation function"

- Straight-Through Estimator (STE): A gradient approximation that treats non-differentiable quantization as identity in backprop. "Straight-Through Estimator"

- Structural Anchor: A stabilizing, discretized backbone that bounds optimization and regularizes volatile mechanisms. "Structural Anchor"

- Structural regularization: Regularization emergent from discretization constraints that stabilize training dynamics. "quantization as structural regularization."

- T-MAC kernels: Lookup-table-based kernels for low-bit (including ternary) matrix multiplication, originally targeting CPUs, that replace multiply-accumulate operations with table lookups. "T-MAC kernels"

- Ternary quantization: Mapping weights to {-1, 0, 1} to dramatically reduce memory and replace multiplies with sign flips/additions. "ternary quantization"

- Ternary weight matrix: A matrix whose entries are constrained to the ternary set, often scaled by learned factors. "ternary weight matrix "

- Token throughput: The number of tokens processed per second, often bounded by memory bandwidth. "token throughput"

- Triton (OpenAI Triton): A DSL and compiler for writing custom GPU kernels to optimize model execution. "OpenAI Triton"

- V-Path correction: Applying FP16 correction to the Value projection path in attention to preserve content fidelity. "V-Path Correction"
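The Straight-Through Estimator entry can be made concrete with a single gradient step. This is a minimal, hypothetical sketch (plain SGD on a linear model y = Σ scale·q_i·x_i with squared-error loss, abs-mean ternary quantization, and the scale treated as a constant in the backward pass), not the paper's training code. The forward pass uses ternary weights, while the backward pass treats quantization as the identity, so even a latent weight that currently rounds to 0 receives a gradient and can later flip to ±1.

```python
from typing import List, Tuple


def abs_mean_ternary(weights: List[float], eps: float = 1e-8) -> Tuple[List[int], float]:
    """Round weights / mean(|weights|) and clip to {-1, 0, 1}."""
    scale = sum(abs(w) for w in weights) / len(weights) + eps
    return [max(-1, min(1, round(w / scale))) for w in weights], scale


def ste_sgd_step(latent: List[float], x: List[float], target: float,
                 lr: float) -> Tuple[List[float], float]:
    """One SGD step on 0.5 * (y - target)**2 using a Straight-Through Estimator."""
    q, scale = abs_mean_ternary(latent)
    y = sum(scale * qi * xi for qi, xi in zip(q, x))  # forward pass uses ternary weights
    err = y - target
    loss = 0.5 * err ** 2
    # round() has zero derivative almost everywhere; the STE substitutes 1, so each latent
    # weight receives d(loss)/d(effective weight) = err * x_i (scale held constant).
    grads = [err * xi for xi in x]
    return [w - lr * g for w, g in zip(latent, grads)], loss


latent = [0.5, -0.5, 0.1]  # the third weight quantizes to 0
x = [1.0, 2.0, 3.0]
new_latent, loss = ste_sgd_step(latent, x, target=0.0, lr=0.1)
print(abs_mean_ternary(latent)[0], [round(w, 4) for w in new_latent], round(loss, 4))
```

The bias the paper attributes to the STE comes from exactly this substitution: the update direction is computed as if the ternary weights were continuous.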
