When Small Models Are Right for Wrong Reasons: Process Verification for Trustworthy Agents

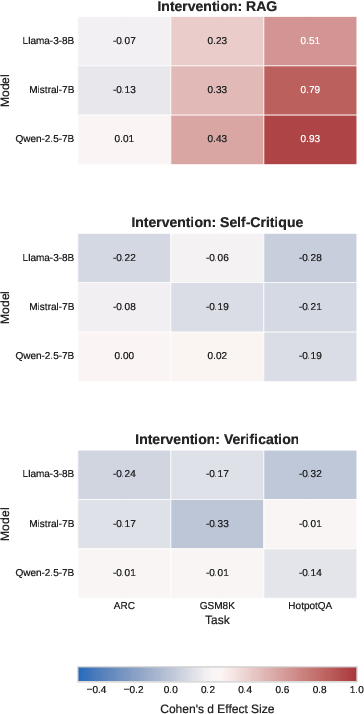

Abstract: Deploying small LLMs (7-9B parameters) as autonomous agents requires trust in their reasoning, not just their outputs. We reveal a critical reliability crisis: 50-69\% of correct answers from these models contain fundamentally flawed reasoning -- a ``Right-for-Wrong-Reasons'' phenomenon invisible to standard accuracy metrics. Through analysis of 10,734 reasoning traces across three models and diverse tasks, we introduce the Reasoning Integrity Score (RIS), a process-based metric validated with substantial inter-rater agreement ($κ=0.657$). Conventional practices are challenged by our findings: while retrieval-augmented generation (RAG) significantly improves reasoning integrity (Cohen's $d=0.23$--$0.93$), meta-cognitive interventions like self-critique often harm performance ($d=-0.14$ to $-0.33$) in small models on the evaluated tasks. Mechanistic analysis reveals RAG succeeds by grounding calculations in external evidence, reducing errors by 7.6\%, while meta-cognition amplifies confusion without sufficient model capacity. To enable deployment, verification capabilities are distilled into a neural classifier achieving 0.86 F1-score with 100$\times$ speedup. These results underscore the necessity of process-based verification for trustworthy agents: accuracy alone is dangerously insufficient when models can be right for entirely wrong reasons.

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Explain it Like I'm 14

Explaining “When Small Models Are Right for Wrong Reasons: Process Verification for Trustworthy Agents”

Overview

This paper studies how small AI LLMs (the kind that can run on a personal computer) make decisions when acting like “agents” (doing tasks on their own). The main message: many of their answers look correct, but the reasoning they used to get there is wrong. The authors call this “Right-for-Wrong-Reasons,” and they show why checking only the final answer isn’t enough—you have to check the steps that led to it.

A simple example

Imagine a math problem: “What is 15% of 80?” An AI replies: “Step 1: Multiply 80 by 0.2. Step 2: 80 × 0.2 = 12. Final answer: 12.”

The answer 12 is correct, but the reasoning is wrong because 15% should be 0.15, not 0.2. If an agent makes decisions based on flawed steps—even when it gets lucky with the right final number—it can make serious mistakes later (like approving the wrong payments or giving bad advice).

Key Questions the Paper Asks

- How common is the “Right-for-Wrong-Reasons” problem in small AI models?

- What simple tricks (called “interventions”) can improve their reasoning steps?

- Why do some tricks help and others hurt—and how can we quickly detect bad reasoning?

How the Researchers Did It (Methods)

The authors tested three popular small AI models on three types of tasks:

- GSM8K (math word problems)

- HotpotQA (questions that need multiple facts from sources like Wikipedia)

- ARC (science questions that require commonsense reasoning)

They created 10,734 “reasoning traces” (like showing your work step by step) under four setups:

- Baseline: just solve the problem step by step.

- RAG (Retrieval-Augmented Generation): give the model helpful context to look up (like showing a textbook page).

- Self-critique: ask the model to review and correct its own steps.

- Verification prompts: tell the model to “check each step before moving on.”

To judge reasoning quality, they introduced the Reasoning Integrity Score (RIS):

- Each step is scored: 1.0 (fully correct), 0.5 (partly flawed), or 0.0 (wrong).

- A trace’s RIS is the average of its step scores.

- If RIS is below 0.8, the reasoning is considered flawed.

They used three strong AI “judge” models to score each step and confirmed the judges mostly agreed. They also analyzed common error types:

- Calculation errors (bad math or misusing numbers/facts)

- Hallucinations (making up facts)

- Logical leaps (drawing conclusions without proper support)

Finally, they trained a small, fast classifier (think: a quick “reasoning spellchecker”) to predict if a trace’s reasoning is flawed, so it can alert users in real time.

Main Findings

Here are the most important results:

- Hidden reasoning problems are very common.

- Between 50% and 69% of correct answers still had flawed reasoning steps.

- This was worst on knowledge-heavy tasks (like HotpotQA), where the model needs to use multiple facts correctly.

- RAG (looking things up) helps a lot.

- Giving the model relevant information improved reasoning quality across many tasks.

- It reduced calculation errors by about 7.6%.

- However, if the model misused the context (used a fact the wrong way), RAG didn’t help.

- Self-critique and verification prompts often hurt small models.

- Asking small models to “think about their thinking” tended to confuse them.

- Instead of truly reflecting, they produced text that looked like reflection but added new mistakes (like weak justifications and logical jumps).

- Errors often pile up late in the reasoning.

- Problems are more likely to show up in later steps, when the model drifts away from the original facts.

- A fast verifier can catch bad reasoning quickly.

- Their small classifier detected flawed reasoning with good accuracy and was about 100× faster than using big AI judges.

- This makes real-time “trust alarms” feasible in deployed agents.

Why This Matters

If we only look at whether the final answer is correct, we can be misled. Agents that are “right for wrong reasons” can be dangerously unpredictable. This matters for real-world use—like finance, health, or control systems—where the path to the answer is as important as the answer itself.

This paper suggests:

- Don’t rely on accuracy alone. You need process-based checks that inspect the steps.

- Use RAG to ground reasoning in external facts, especially for math and fact-heavy questions.

- Avoid self-critique prompts for small models (under ~10 billion parameters); they often make things worse.

- Add a fast verifier to flag risky reasoning in real time.

Practical takeaway

- If you’re building small AI agents: give them trustworthy information to work with (RAG), and install a fast “reasoning checker.” Don’t expect them to reliably critique themselves.

Notes and Limits

- The RAG used here was “oracle” (very accurate context), so real-world benefits may be smaller with noisier sources.

- Findings are based on three small models and three tasks, all in English.

- Larger models might handle self-critique better; these results focus on small ones.

Conclusion

Small AI models can often land on the right answer for the wrong reasons. In the tests here, this happened about half to two-thirds of the time. The fix isn’t just making them more accurate—it’s checking how they reason. Grounding their thinking with reliable information (RAG) helps, while asking them to critique themselves usually backfires for small models. A fast, process-based verifier makes it possible to trust these agents more in real-world settings.

Knowledge Gaps

Below is a consolidated list of concrete knowledge gaps, limitations, and open questions left unresolved by the paper; each item is phrased to be directly actionable for follow-on research.

- Generalization across models: Assess whether findings hold for smaller (<7B) and larger (≥13B, 34B, 70B+) models, domain-specialized SLMs, and closed-source models.

- Cross-lingual validity: Evaluate RIS, RWR prevalence, and intervention effects in non-English languages and mixed-language inputs.

- Task coverage: Extend beyond GSM8K, ARC, and HotpotQA to planning, tool-use, program synthesis, long-horizon agency, safety-critical domains (clinical, finance), and multimodal tasks.

- Oracle RAG realism: Replace oracle context with real retrievers to quantify how retrieval noise, ranking errors, latency, and coverage affect RIS and RWR rates.

- Retrieval quality ablations: Systematically vary retriever recall/precision and context length to derive dose–response curves for RAG’s effect on reasoning integrity.

- Context misuse mitigation: Design and test mechanisms (citation grounding, entailment checks, fact attribution, retrieval filtering) that directly reduce the observed context misapplication driving RAG failures.

- Decoding settings ambiguity: Resolve and rigorously study the impact of temperature/top-p/beam search (the paper reports both greedy and default high-temperature settings) on RIS, RWR rates, and intervention efficacy.

- Chain-of-thought dependency: Evaluate robustness when step-by-step reasoning is not elicited or is hidden; determine if the verifier can operate on terse or no-CoT outputs.

- Step extraction reliability: Replace regex-based step parsing with structure-aware methods; quantify parsing errors’ impact on RIS and RWR estimates.

- RIS construct validity: Conduct human annotation studies (with trained raters) to validate LLM-judge scoring, report human–LLM agreement, and calibrate RIS against expert ground truth.

- RIS threshold selection: Publish full sensitivity curves and decision-analytic justifications for the 0.8 cutoff; study risk-weighted thresholds tailored to domain severity.

- Holistic vs step-level scoring: Develop dependency-aware or graph-based RIS that captures global logical consistency, critical-step weighting, and error propagation across steps.

- Verbosity confound: Control for step count/trace length (e.g., matched-length analyses) to test whether higher RWR in some models is driven by verbosity rather than reasoning quality.

- Error taxonomy depth: Expand beyond four error types; establish inter-annotator reliability; map error types to risk severity and actionable mitigations.

- Mechanism causality: Move beyond correlations (e.g., r = −0.951 for context misuse) using randomized ablations (shuffle, mask, or perturb context) to establish causal pathways for RAG success/failure.

- Meta-cognition capacity threshold: Empirically trace model-size scaling curves to locate when self-critique turns from harmful to helpful, and identify which meta-cognitive skills emerge first.

- Tool-augmented critique: Test whether meta-cognition paired with tools (calculator, verifier, symbolic checkers) remains harmful or becomes beneficial in SLMs.

- Alternative interventions: Compare self-consistency, majority vote, debate, and programmatic/verifier-in-the-loop methods against RAG and meta-cognition on RIS.

- Training-time effects: Examine whether process-supervised fine-tuning, verifier-guided RL, or reflection-tuned SFT alter RWR prevalence and meta-cognitive harms in small models.

- Distilled verifier generalization: Perform leave-one-model-out, leave-one-task-out, and cross-lingual evaluations to measure robustness under domain and model shifts.

- Adversarial robustness: Stress-test the verifier against adversarially crafted traces (e.g., superficially “clean” but flawed reasoning, style obfuscation) and report degradation.

- Verifier interpretability and calibration: Provide feature importances, calibration curves, decision thresholds tied to operational costs, and analyses of false positives/negatives in high-stakes settings.

- End-to-end overhead: Include embedding computation and streaming costs to validate the claimed 100× speedup in realistic agent loops; measure on-device constraints.

- Deployment policies: Specify and evaluate escalation strategies (when to halt, ask human, or re-plan) driven by RIS/verifier outputs; quantify utility–risk trade-offs.

- Multi-turn agency: Study how RWR compounds across multi-step tasks with memory and environment interaction; measure downstream harm from “right answer, wrong reasoning” in subsequent actions.

- Downstream outcomes: Link RIS improvements to real reliability metrics (task success under perturbations, OOD robustness, error cascades) rather than proxy scores alone.

- Comparisons to prior verifiers: Benchmark the MLP verifier against stronger baselines (LLM verifiers, structured logic checkers) on accuracy, latency, and robustness.

- Mitigating RAG trade-offs: Address observed increases in hallucinations/logical leaps under RAG via selective grounding, confidence gating, and contradiction detection modules.

- Reproducibility gaps: Resolve inconsistencies in judge models and decoding settings; release full code, prompts, seeds, and datasets for all stages (generation, judging, verification training).

- Statistical rigor: Replace post-hoc power ≥0.75 criteria with pre-registered analyses, standard significance levels, and corrected multiple comparisons across many conditions.

- Data contamination: Audit training data overlap with benchmarks to quantify contamination effects on RWR and intervention outcomes.

- Privacy and governance: Assess privacy/safety implications of exposing full reasoning traces (for RIS/verification) and explore privacy-preserving verification methods.

- Non-textual verification: Explore formal or symbolic checkers for math/logic (e.g., theorem provers, unit checkers) to complement textual RIS judgments.

- Confidence and uncertainty: Integrate uncertainty quantification (e.g., conformal risk control) for RIS/verifier scores to support risk-aware decision-making.

- Long-context effects: Analyze RWR as a function of context length and memory usage; test periodic re-grounding or summarization strategies to reduce late-trace drift.

- Tool-use vs RAG: Compare calculator/tool calls to RAG for reducing calculation errors; study hybrid pipelines and their interaction effects on RIS.

Glossary

- AdamW: An optimization algorithm that decouples weight decay from the gradient update in Adam. "AdamW ()"

- Agentic contexts: Settings where models act as autonomous agents making multi-step decisions. "extending prior diagnostics to agentic contexts"

- ARC: The AI2 Reasoning Challenge dataset for science question answering. "ARC \cite{clark2018think} (1,119 commonsense science questions)"

- Capacity threshold: A hypothesized minimum model capability at which certain techniques (e.g., self-reflection) become effective. "supporting the existence of a \"capacity threshold\" for effective self-reflection that 7-9B models fall below."

- Chain-of-Thought: A prompting technique that elicits explicit step-by-step reasoning traces from models. "adapted from standard Chain-of-Thought templates \cite{wei2022chain}."

- Cohen's d: A standardized effect size measuring the magnitude of an intervention’s impact. "Cohen's --$0.93$"

- Context misuse: Incorrect application or integration of retrieved information during reasoning. "context misuse (fraction of retrieved facts incorrectly applied)"

- Distilled verifier: A smaller classifier trained to replicate verification judgments for fast, automated trust assessment. "our distilled verifier (0.86 F1, 5-10ms inference)"

- Early stopping: A regularization technique that halts training when validation performance stops improving. "and early stopping."

- Edge deployment: Running models on local or resource-constrained devices rather than centralized servers. "enable edge deployment, privacy preservation, and cost-effective scaling."

- Fleiss' kappa: A measure of inter-rater agreement for more than two raters. "Fleiss' , substantial agreement"

- Focal Loss: A loss function that down-weights easy examples to focus learning on hard, misclassified cases. "Focal Loss (, )"

- Greedy decoding: A generation strategy selecting the highest-probability token at each step. "with greedy decoding (temperature=$0$)"

- GSM8K: A benchmark of grade-school-level math word problems for assessing mathematical reasoning. "GSM8K \cite{cobbe2021training} (1,319 mathematical word problems)"

- Hallucination: Model output that fabricates facts or content not supported by evidence. "Hallucination (fabricated information)"

- HotpotQA: A dataset requiring multi-hop reasoning over evidence to answer questions. "HotpotQA \cite{yang2018hotpotqa} (1,000 multi-hop QA samples)"

- Instruction-tuned: Models fine-tuned to follow natural language instructions and respond helpfully. "base instruction-tuned variants"

- Inter-rater reliability: The degree of agreement among independent evaluators. "Inter-rater reliability was validated on 500 steps (Fleiss' , substantial agreement)."

- LLM-as-a-judge: Using a LLM to evaluate the correctness or quality of another model’s output. "slow LLM-as-a-judge evaluations."

- Macro F1: The unweighted average F1-score across classes, treating each class equally. "yielded 0.86 macro F1"

- Mechanistic analysis: Examination of underlying causal processes explaining why interventions work or fail. "Mechanistic analysis reveals RAG succeeds by grounding calculations in external evidence, reducing errors by 7.6\%"

- Meta-cognitive prompts: Prompts that ask a model to reflect on, critique, or verify its own reasoning. "meta-cognitive interventions like self-critique often harm performance ( to )"

- MLP classifier: A multi-layer perceptron used here as a lightweight neural verifier. "we trained a lightweight MLP classifier"

- Multi-hop QA: Question answering that requires combining information across multiple evidence pieces. "(1,000 multi-hop QA samples)"

- Oracle RAG: Retrieval-augmented generation with perfect, ground-truth context provided to the model. "which provided oracle ground-truth context (e.g., Wikipedia snippets for HotpotQA)"

- Pearson's r: A correlation coefficient quantifying linear relationships between variables. "correlations using Pearson's ."

- Post-hoc statistical power: Power analysis conducted after data collection to assess the likelihood of detecting effects. "Statistical power was computed post-hoc,"

- Pseudo-reflection: Superficial self-critique text that appears reflective but lacks genuine error-checking. "We posit this is due to \"pseudo-reflection\": small models lack the genuine, high-level meta-cognitive capacity to introspect."

- Retrieval-Augmented Generation (RAG): Enhancing generation by conditioning on retrieved external documents. "retrieval-augmented generation (RAG) significantly improves reasoning integrity (Cohen's --$0.93$)"

- Right-for-Wrong-Reasons (RWR): Producing a correct final answer via flawed or invalid reasoning steps. "a phenomenon we term ``Right-for-Wrong-Reasons'' (RWR)."

- RIS (Reasoning Integrity Score): A process-based metric averaging step-level correctness to assess reasoning quality. "we introduce the Reasoning Integrity Score (RIS), a process-based metric"

- Self-critique: An intervention where the model reviews and revises its own reasoning. "Self-Critique, which prompted the model to review its reasoning"

- Sentence-BERT: A transformer model producing sentence embeddings useful for semantic similarity and classification. "Sentence-BERT embeddings (384D from all-MiniLM-L6-v2)"

- Stratified split: A data split preserving class proportions across training and test sets. "trained on 80\% of traces (stratified split)"

- Top-p sampling: A nucleus sampling method that selects tokens from the smallest set whose cumulative probability exceeds p. "with standard top-p sampling."

- Verification prompts: Prompts instructing models to check each reasoning step for correctness. "Verification Prompts, which added to the initial prompt: ``Verify each step for accuracy before proceeding to the next.''"

Practical Applications

Immediate Applications

The paper’s findings enable deployable practices and tools that improve reliability of small-model agents today. The items below describe concrete use cases, target sectors, and dependencies.

- Cross-cutting “trust layer” for small-model agents

- What: Integrate a lightweight verifier (the distilled MLP, ~0.86 F1, 5–10ms latency) that scores each reasoning trace for integrity and gates actions when RIS-like signals fall below a threshold (e.g., 0.8).

- Where: Any agent platform (e.g., LangChain/LlamaIndex wrappers, internal orchestration frameworks).

- Workflow: Score → threshold-based routing (allow, escalate to human, or fallback to larger model) → log for audit.

- Dependencies/assumptions: Access to step-by-step traces (even if not user-visible); model/dataset mismatch may require recalibration; English-focused training may reduce performance in other languages; verifier may need domain-specific fine-tuning.

- RAG-first agent templates for knowledge-intensive tasks

- What: Ship agent templates that enforce retrieval before reasoning for math and multi-hop QA-like tasks, using curated KBs, API docs, or internal wikis.

- Where: Customer support, enterprise knowledge assistants, developer assistants, internal analytics chatbots.

- Workflow: Retrieve → present context in prompt → reason → verify → respond.

- Dependencies/assumptions: Real-world retrieval is noisy (paper used oracle RAG); quality retrievers and up-to-date KBs are necessary; context-length limits and chunking strategies must be tuned; ensure PII/PHI-safe retrieval pipelines.

- Disable meta-cognitive/self-critique prompts for 7–9B models on evaluated task types

- What: Update prompt libraries to remove “critique your reasoning”/“verify each step” for small models; replace with RAG and external checks.

- Where: Prompt engineering repositories, agent configuration defaults.

- Workflow: Replace self-critique blocks with retrieval and tool-based verification; monitor RIS impact.

- Dependencies/assumptions: Effects measured on GSM8K/HotpotQA/ARC with 7–9B models; revisit for larger models or different tasks.

- Production monitoring: Reasoning Integrity dashboards and alerts

- What: Surface RIS-like integrity metrics in observability stacks to track RWR rates, detect regressions, and trigger incident response.

- Where: MLOps/observability (e.g., Grafana/Datadog dashboards), model governance platforms.

- Workflow: Aggregate verifier outputs → visualize defect rates by task/model → auto-alert when thresholds exceeded.

- Dependencies/assumptions: Logging of step traces; thresholds/alert policies require calibration to domain risk.

- Risk-aware routing and fallback policies

- What: Route low-RIS requests to human review or larger models; auto-block irreversible actions (e.g., financial transactions) when reasoning integrity is low.

- Where: Finance ops, healthcare triage, IT automation, DevOps agents.

- Workflow: RIS score → policy-based router → human/LLM fallback or defer.

- Dependencies/assumptions: Latency budgets; cost controls for fallback to larger models.

- Context misuse detector for RAG pipelines

- What: Deploy a lightweight “context misuse” check (as in the paper’s prompts) to detect whether retrieved facts were ignored or misapplied before finalizing answers.

- Where: Any RAG system handling policies, procedural content, or facts.

- Workflow: Evaluate each step against provided context → flag “Misapplication”/“Irrelevant” → gate or request re-retrieval.

- Dependencies/assumptions: Requires access to intermediate steps; performance depends on domain vocabulary and context formatting.

Sector-specific immediate uses

- Healthcare

- Clinical triage/chatbots and guideline Q&A: Use RAG from vetted clinical guidelines and formularies; gate outputs with RIS; auto-escalate low-RIS cases to clinicians.

- Dosage and calculation support: Verify arithmetic steps; disallow action if RIS below threshold.

- Dependencies/assumptions: HIPAA/PHI constraints for retrieval; require medical KB curation; clinical oversight remains mandatory.

- Finance

- Customer-facing calculators (interest, fees, taxes): Enforce RAG with current rates/regulations; verify computation steps; block transactions on low RIS.

- Back-office policy assistants: Retrieve internal policy documents and verify rationale steps before approvals.

- Dependencies/assumptions: Up-to-date regulatory knowledge base; strict audit trails; SOC/ISO-aligned governance.

- Education

- Math and science tutors: Show verified step-by-step solutions; flag and explain flawed reasoning even when the final answer is correct to teach process quality.

- Assessment tools: Grade both answer and process using RIS-like scoring for partial credit and feedback.

- Dependencies/assumptions: Age-appropriate explanations; domain calibration for curricula; privacy in student data.

- Software engineering

- Developer assistants: Retrieve API docs/specs to ground suggestions; verify reasoning behind code explanations and migration steps; route low-RIS cases to documentation links/human review.

- Dependencies/assumptions: High-quality internal docs; chain-of-thought may need to be internal-only for IP reasons.

- Customer support and enterprise knowledge management

- Knowledge-base chatbots: Mandate RAG to official KBs; verify reasoning consistency with retrieved facts; escalate low-RIS answers.

- Dependencies/assumptions: KB freshness and access control; context-window management for large corpora.

- Robotics and ops automation

- High-level task planning: Require plans to cite sensor/knowledge inputs; verify plan steps for integrity before execution.

- Dependencies/assumptions: Access to environment maps/sensors as “retrieval” context; real-time constraints.

- Energy and building automation

- Scheduling/optimization assistants: Ground decisions in sensor data and constraints; verify calculations and logic; fail-safe when RIS is low.

- Dependencies/assumptions: Reliable telemetry; safety interlocks; human overrides.

- Legal/compliance

- Policy lookup and reasoning: Enforce retrieval of authoritative sources; verify step-by-step legal reasoning aligns with citations; route low-RIS items to legal review.

- Dependencies/assumptions: Jurisdiction-specific corpora; strict provenance logging; human sign-off required.

Long-Term Applications

These opportunities require further research, scaling, domain adaptation, or standardization before dependable deployment.

- Regulatory standards and certifications for process-verified agents

- What: Define industry thresholds (e.g., RIS≥0.8) and auditing protocols for “Process-Verified” badges in regulated sectors (healthcare, finance, legal).

- Potential products: Third-party certification services, continuous auditing platforms.

- Dependencies/assumptions: Consensus on metrics; sector-specific risk frameworks; reproducibility of RIS across languages and domains.

- Noise-robust RAG and knowledge management stacks

- What: Build retrieval and context-quality monitors that optimize integrity under noisy, real-world retrieval (paper used oracle RAG).

- Potential tools: Context quality scorers, retriever retraining pipelines, freshness and provenance trackers.

- Dependencies/assumptions: Investment in KB engineering; evaluation data for noise conditions.

- Next-gen verifiers: graph-based, multimodal, and multilingual

- What: Move beyond MLP-on-embeddings to verifiers that model dependencies between steps, handle diagrams/tables, and support non-English domains.

- Potential products: General-purpose “reasoning graph verifiers” integrated with agent frameworks.

- Dependencies/assumptions: Availability of structured trace formats; labeled corpora for diverse tasks and languages.

- Training-for-process: optimizing models for reasoning integrity

- What: Incorporate RIS-like objectives into training/fine-tuning (e.g., process-aware RLHF) to reduce RWR behavior, especially in small models.

- Potential workflows: Co-training generators with verifiers; curriculum emphasizing reasoning steps.

- Dependencies/assumptions: Stable and unbiased process labels; risk of overfitting to verifier heuristics.

- Capacity-aware meta-cognition

- What: Identify model size/task regimes where self-critique becomes beneficial; create dynamic policies that enable/disable meta-cognition based on capacity and risk.

- Potential tools: “Meta-cognition controllers” that toggle strategies per task/model.

- Dependencies/assumptions: Empirical mapping of capacity thresholds; may require 40B–70B+ models or specialized architectures.

- Automated self-correction via tools, not text-only critique

- What: Replace pseudo-reflection with tool-assisted checks (symbolic math solvers, rule engines, retrieval re-ranking) and verify corrections with process metrics.

- Potential products: Toolchain orchestrators that couple RAG, calculators, and verifiers for closed-loop correction.

- Dependencies/assumptions: Tool availability and reliability; latency budgets for multi-tool orchestration.

- Process-aware UX for transparency and trust

- What: User interfaces that surface reasoning integrity indicators, highlight weak steps, and request user confirmation for critical actions.

- Potential products: “Trust meters” embedded in chat/agent UIs; explainability widgets for steps.

- Dependencies/assumptions: Balancing cognitive load and security; privacy concerns around displaying rationales.

- Edge/embedded verification for robotics and IoT

- What: On-device verifiers co-located with small models to enforce integrity in low-latency environments (e.g., drones, smart appliances).

- Potential products: Firmware libraries providing step verification and safe fallback behaviors.

- Dependencies/assumptions: Resource constraints; deterministic behavior under intermittent connectivity.

- Education-at-scale with process grading

- What: Large-scale systems that grade and tutor student reasoning processes across subjects, using RIS-like metrics to personalize feedback.

- Potential products: LMS plug-ins for process-based grading; formative assessment tools.

- Dependencies/assumptions: Alignment with pedagogical standards; bias audits; multilingual support.

- Cross-domain governance and procurement policies

- What: Procurement checklists and SLAs requiring process-based verification for AI agents; model selection policies prioritizing RAG-first architectures.

- Potential outputs: Public-sector frameworks and industry consortia guidelines.

- Dependencies/assumptions: Stakeholder buy-in; standardized reporting formats and audits.

- Safety cases for critical infrastructure

- What: Formal safety cases that incorporate reasoning integrity evidence, combining RIS distributions, RAG quality metrics, and fallback policies for safety-critical deployments (e.g., grid control, medical devices).

- Dependencies/assumptions: Regulatory acceptance; rigorous incident response and monitoring.

- Benchmarking and communal datasets for RWR

- What: Public corpora of step-labeled traces across domains and languages to benchmark RWR prevalence and mitigation strategies.

- Dependencies/assumptions: Data-sharing agreements; consistent annotation rubrics; privacy-preserving trace formats.

Collections

Sign up for free to add this paper to one or more collections.