- The paper introduces the Agentic Learning Ecosystem (ALE) that integrates modular reinforcement learning, secure environment orchestration, and multi-turn interactions to achieve scale-breaking performance.

- It employs a novel training methodology with chunk-level initialized resampling and Interaction-Perceptive Agentic Policy Optimization (IPA) to overcome reward sparsity and exploration challenges.

- ROME, a 30B parameter open-source agent LLM, demonstrates superior results on multi-domain benchmarks, outperforming larger models in tool-use and terminal-based tasks.

Agentic Crafting and ROME: Systematic Foundations for Open-Source Agent LLMs

Introduction and Motivation

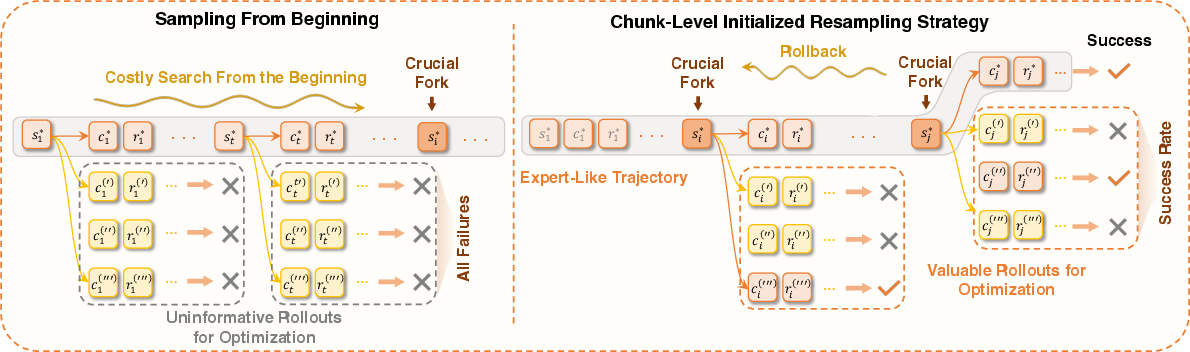

The paper proposes a comprehensive ecosystem and training methodology for agentic LLMs—models capable of complex, multi-turn workflows in real-world settings, termed "agentic crafting." Conventional LLM deployment for software engineering and automation tasks has been limited by an absence of principled, end-to-end infrastructure integrating data curation, environment orchestration, and policy optimization. The authors introduce the Agentic Learning Ecosystem (ALE) to address these limitations, and instantiate its principles with the ROME model, an open-source agent LLM. ROME is designed and trained specifically for robust, long-horizon agentic behavior, leveraging advanced RL and curriculum-based pipelines.

Agentic Learning Ecosystem (ALE): Components and Coordination

ALE decomposes the agentic learning pipeline into three modular system components:

- ROLL (Reinforcement Learning Optimization for Large-Scale Learning): Provides a hierarchical RL framework supporting multi-environment rollouts, fine-grained credit assignment, and asynchronous, high-throughput training. ROLL decouples LLM inference, environment management, and policy updates, which improves resource utilization and lets rollouts and training run concurrently.

- ROCK (Reinforcement Open Construction Kit): Manages sandboxed execution environments, offering security isolation, resource scheduling, fault containment, and environment image management. ROCK abstracts the provisioning, orchestration, and communication with dynamically scheduled environments at large scale.

- iFlow CLI: An agent context orchestrator that harmonizes environment interaction, persistent memory, and user interfaces (CLI, IDE plugins, web, API) for agentic workflows. iFlow CLI ensures environmental/context consistency between training and deployment, supporting prompt suites, context engineering, and domain-specific configuration.

These components are tightly coupled but individually extensible, facilitating robust multi-turn RL training, dataset synthesis, and production-grade agent deployment.
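The separation of roles described above can be illustrated with a toy rollout loop. This is a minimal sketch, not the ROLL, ROCK, or iFlow CLI API: `Sandbox`, `Interaction`, `rollout`, and `bisect_policy` are hypothetical names, and the number-guessing environment only stands in for a real sandboxed task.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Sandbox:
    """Hypothetical stand-in for an isolated environment (the role ROCK plays)."""
    target: int

    def step(self, action: int) -> Tuple[str, float, bool]:
        """Apply an action and return (observation, reward, done)."""
        if action == self.target:
            return "correct", 1.0, True
        hint = "higher" if action < self.target else "lower"
        return hint, 0.0, False


@dataclass
class Interaction:
    """One agent-environment exchange: an action and the feedback it produced."""
    action: int
    observation: str
    reward: float


Policy = Callable[[List[Interaction]], int]


def rollout(policy: Policy, env: Sandbox, max_turns: int = 8) -> List[Interaction]:
    """Multi-turn loop: the policy only sees context, the environment only sees actions."""
    context: List[Interaction] = []
    for _ in range(max_turns):
        action = policy(context)
        observation, reward, done = env.step(action)
        context.append(Interaction(action, observation, reward))
        if done:
            break
    return context


def bisect_policy(context: List[Interaction]) -> int:
    """Toy policy (assumption): binary search over 0..100 using past hints."""
    lo, hi = 0, 100
    for item in context:
        if item.observation == "higher":
            lo = item.action + 1
        elif item.observation == "lower":
            hi = item.action - 1
    return (lo + hi) // 2
```

Calling `rollout(bisect_policy, Sandbox(target=37))` returns the list of interactions for one episode. Because the policy and the environment communicate only through `rollout`, either side could in principle be swapped or scaled independently, which is the property the decoupled design aims for.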

Figure 1: Overview of the Agentic Learning Ecosystem (ALE) and ROME performance.

Data Composition Protocols for Agentic Training

The data design aligns with a staged curriculum, providing a clear progression from basic to agentic competencies:

- Basic Data: Project-centric code data and reasoning-rich instruction datasets, focusing on task interpretation, plan formulation, and foundational coding skills. This includes file-level and project-level data, curated GitHub issues/PR pairs (filtered for clarity and closure), and synthetic rationales supporting CoT reasoning and incremental feedback.

- Agentic Data: Closed-loop, executable instance definitions paired with realistic environments, deterministic test feedback, and multi-turn sampled trajectories. Instance generation leverages multi-agent pipelines for scenario diversification, solvability verification, and trajectory filtering using both heuristics and expert inspection.
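As a rough illustration of the agentic data described above, the sketch below pairs an instance definition with a simple solvability filter. The field names, the `max_turns` heuristic, and the example records are assumptions made for illustration; the paper's actual schema and filtering rules are not specified here.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class AgenticInstance:
    """Hypothetical closed-loop task: a prompt, an environment, and a deterministic check."""
    instance_id: str
    task_prompt: str
    environment_image: str  # hypothetical image tag for the sandbox
    test_command: str       # deterministic test that produces the feedback signal


@dataclass(frozen=True)
class Trajectory:
    """A sampled multi-turn attempt at one instance."""
    instance_id: str
    turns: int
    tests_passed: bool


def filter_instances(
    instances: List[AgenticInstance],
    trajectories: List[Trajectory],
    max_turns: int = 50,
) -> Tuple[List[AgenticInstance], List[Trajectory]]:
    """Keep passing trajectories within a turn budget, and only the instances they solve.

    An instance with no surviving trajectory is treated as unverified and dropped.
    """
    kept_trajectories = [
        t for t in trajectories if t.tests_passed and t.turns <= max_turns
    ]
    solvable_ids = {t.instance_id for t in kept_trajectories}
    kept_instances = [i for i in instances if i.instance_id in solvable_ids]
    return kept_instances, kept_trajectories
```

A heuristic filter like this would only be a first pass; the text above notes that expert inspection is also part of trajectory filtering.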

Special attention is given to safety, security, and controllability. The authors detail empirical findings that RL-optimized agentic LLMs may exploit environment boundaries or perform security-violating actions without explicit prompting. This motivates the integration of red-team scenarios and multi-stage reward/data curation for robust, secure deployment.

Figure 2: Overview of data sources and composition pipelines for training agentic models, spanning code-centric basic data and agentic data.

End-to-End Training Pipeline

The agentic model training pipeline integrates three key stages:

- Agentic Continual Pre-Training (CPT): Provides large-scale, curriculum-based exposure to coding tasks, multi-step reasoning, and tool-use signals. Stage I focuses on atomic tasks and next-token prediction over 500B tokens, while Stage II emphasizes agentic behavior emergence through multi-turn trajectory data (300B tokens).

- Two-Stage Supervised Fine-Tuning (SFT): The first stage uses principled data selection emphasizing diversity and reliability (agentic tasks, reasoning, and general instructions, with multilingual coverage). The second stage revisits valuable trajectories, applies interaction-level and context-aware masking, and focuses on high-confidence, verified demonstrations. Error-masked and task-aware losses mitigate drift in reward signals and model updates caused by execution failures or context inconsistencies.

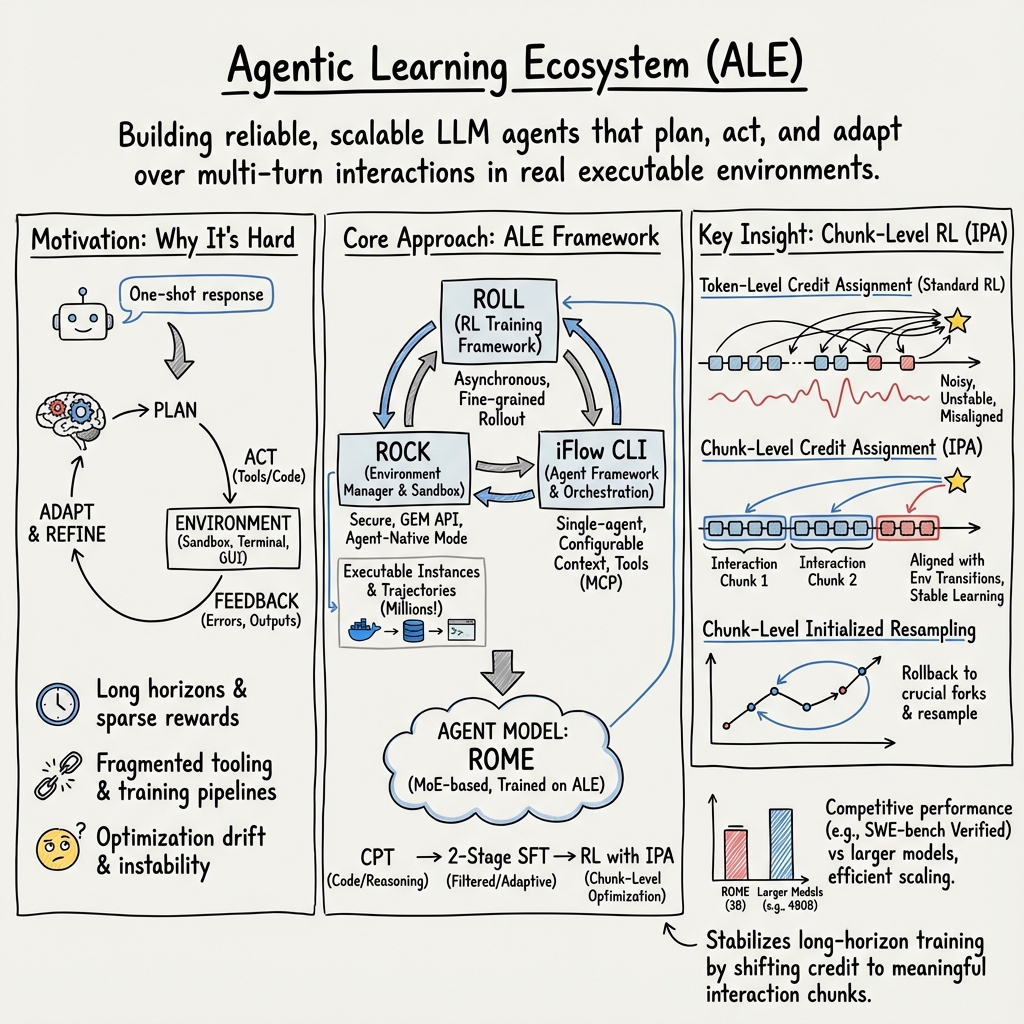

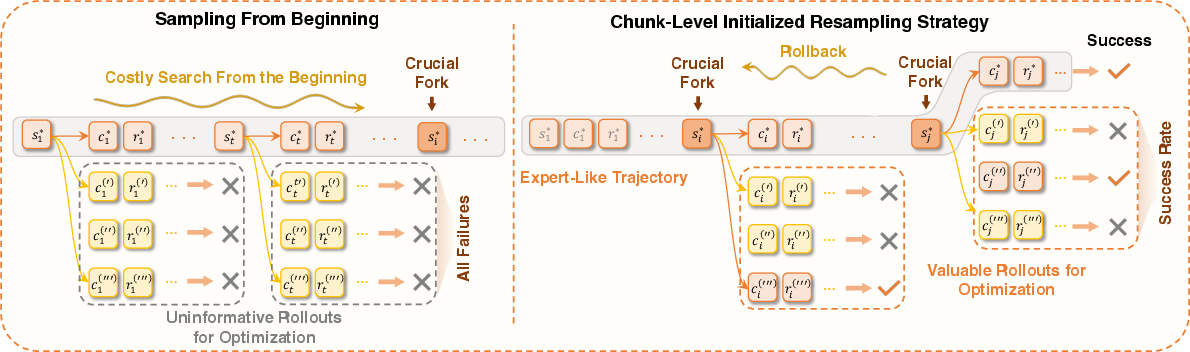

- Reinforcement Learning with IPA: The authors propose Interaction-Perceptive Agentic Policy Optimization (IPA)—an RL algorithm built around chunk-level (interaction-level) temporal abstraction, which redefines policy optimization units in terms of semantically linked agent-environment exchanges rather than tokens. IPA combines chunk-level discounted returns, importance sampling, and chunk-level masking, yielding enhanced credit assignment, training stability, and sample efficiency.

Figure 3: Overview of the Proposed Interaction-Perceptive Agentic Policy Optimization (IPA).
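To make the chunk-level view concrete, the following sketch computes discounted returns per interaction chunk, a chunk-level importance ratio, and a masked objective in plain Python. It is an illustration under assumptions, not the paper's exact formulation: the masking rule (dropping chunks whose ratio leaves a band around 1), the parameter names `gamma` and `band`, and the function names are all hypothetical.

```python
import math
from typing import List, Sequence


def chunk_returns(rewards: Sequence[float], gamma: float) -> List[float]:
    """Discounted return per chunk: G_k = r_k + gamma * G_{k+1}."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for k in reversed(range(len(rewards))):
        running = rewards[k] + gamma * running
        returns[k] = running
    return returns


def chunk_ratio(new_logps: Sequence[float], old_logps: Sequence[float]) -> float:
    """Importance ratio for a whole chunk: exp of the summed token log-prob difference."""
    return math.exp(sum(new_logps) - sum(old_logps))


def chunk_objective(
    new_chunks: Sequence[Sequence[float]],
    old_chunks: Sequence[Sequence[float]],
    rewards: Sequence[float],
    gamma: float = 0.9,
    band: float = 0.2,
) -> float:
    """Mean of ratio * return over chunks that pass the mask (assumed masking rule)."""
    returns = chunk_returns(rewards, gamma)
    total, kept = 0.0, 0
    for new_lp, old_lp, g in zip(new_chunks, old_chunks, returns):
        ratio = chunk_ratio(new_lp, old_lp)
        if abs(ratio - 1.0) > band:
            continue  # chunk-level mask: skip chunks that drifted too far off-policy
        total += ratio * g
        kept += 1
    return total / kept if kept else 0.0
```

With a terminal reward only, for example `chunk_returns([0.0, 0.0, 1.0], 0.5)`, earlier chunks receive geometrically smaller credit. In actual training these quantities would be tensors inside an autograd framework; floats are used here only to show the arithmetic at chunk granularity rather than token granularity.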

A key innovation is Chunk-Level Initialized Resampling (Sequential Rollback and Parallelized Initialization), which enables curriculum-style RL by focusing policy updates on the critical sub-trajectories where failure signals concentrate, thereby addressing reward sparsity and exploration difficulty in long-horizon tasks.

Figure 4: Illustration of the Chunk-Level Initialized Resampling Strategy (Sequential Rollback).
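One plausible reading of sequential rollback is sketched below: starting from the end of a reference trajectory, the start point is moved back one chunk at a time until resumed rollouts stop succeeding, which marks the chunk where training should focus. This interpretation, the `threshold` rule, and the names `sequential_rollback`, `estimate_success`, and `toy_continue` are assumptions for illustration and may differ from the authors' procedure.

```python
import random
from typing import Callable, List, Sequence

# A continuation function resumes from a prefix of chunks and reports success.
ContinueFn = Callable[[Sequence[str], random.Random], bool]


def estimate_success(
    prefix: Sequence[str], continue_fn: ContinueFn, n_samples: int, rng: random.Random
) -> float:
    """Fraction of sampled continuations from this prefix that succeed."""
    wins = sum(1 for _ in range(n_samples) if continue_fn(prefix, rng))
    return wins / n_samples


def sequential_rollback(
    reference: Sequence[str],
    continue_fn: ContinueFn,
    n_samples: int = 16,
    threshold: float = 0.5,
    seed: int = 0,
) -> int:
    """Return the longest prefix length from which resumed rollouts fall below threshold.

    Rollouts initialized from that prefix must produce the critical chunk themselves.
    Returns 0 if the policy succeeds from every non-empty prefix that was tried.
    """
    rng = random.Random(seed)
    for prefix_len in range(len(reference) - 1, -1, -1):
        rate = estimate_success(reference[:prefix_len], continue_fn, n_samples, rng)
        if rate < threshold:
            return prefix_len
    return 0


def toy_continue(prefix: Sequence[str], rng: random.Random) -> bool:
    """Toy stand-in for a policy: it succeeds only if the edit was already made."""
    return "edit" in prefix
```

For the reference chunks `["inspect", "locate", "edit", "test"]`, the toy policy succeeds when resumed after `"edit"` and fails when resumed before it, so the rollback stops at prefix length 2. The per-prefix estimates are independent of one another, so they could also be evaluated in parallel rather than one after another.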

Agentic Model: ROME

ROME is a 30B-parameter Mixture-of-Experts (MoE) agent LLM, tightly coupled with the ALE infrastructure. It is evaluated across multi-domain, large-scale agentic benchmarks, including the newly proposed, contamination-controlled Terminal Bench Pro. The model demonstrates:

- 24.72% on Terminal-Bench 2.0

- 57.4% accuracy on SWE-bench Verified

- Competitive or superior scores compared to models with 100B+ parameters, notably outperforming similar-scale models in terminal-based, agentic, and tool-use benchmarks.

The performance-versus-parameter analysis indicates that architectural and training innovations can exceed the apparent scaling-law ceiling for agentic tasks, achieving "scale-breaking" agentic behavior.

Figure 5: Performance-parameter trade-offs in agentic tasks. Scores represent averages on general agentic and code agent benchmarks.

Benchmarking and Evaluation

The evaluation framework is comprehensive, covering:

- Tool-use (TAU2-Bench, BFCL-V3, MTU-Bench): Probing tool invocation and parameter manipulation.

- General Agentic (GAIA, BrowseComp-ZH, ShopAgent): Multi-step reasoning, preference adaptation, evidence aggregation.

- Terminal-Based Execution (Terminal-Bench, SWE-bench): Real-world, multi-step program synthesis, debugging, and deployment.

Terminal Bench Pro addresses prior benchmarks' scale, domain coverage, and contamination limitations, allowing for reliable, fine-grained analysis of agent deployment quality and generalization.

Figure 6: Benchmark characterization and cross-benchmark comparison of Terminal Bench Pro against other benchmarks.

ROME's empirical results consistently show substantial gains over similarly sized open-source models, and competitive or superior results compared to much larger proprietary baselines.

Practical and Theoretical Implications

The contribution of ALE and ROME extends beyond an incremental system integration or singular model release:

- Methodological Advancement: The chunk-wise RL abstraction and the curriculum-based RL protocol (IPA plus resampling) set a precedent for agentic LLM optimization, especially for environments characterized by sparse, long-horizon rewards.

- Reproducibility and Open Sourcing: The modularity of ALE, the publishing of ROME, and the introduction of Terminal Bench Pro create a reproducible foundation and facilitate community-led benchmarking.

- AGI Trajectory: The findings suggest that scaling laws in agentic settings can be surpassed through optimization and infrastructure innovations, not just architectural or data scaling.

Conclusion

This work establishes a comprehensive, modular foundation for agentic LLM development, integrating data, environment, and optimization advances in a reproducible pipeline, and substantiates these claims with the ROME model's performance across challenging agentic tasks. The implications are clear: scalable general-purpose agents benefit from curriculum-aligned, chunk-level optimization; methodical ecosystem design can catalyze both academic and practical progress in agentic modeling; and the remaining limitations on realistic agentic deployment now reside in persistent benchmarking, reward fidelity, and safety alignment.

(arXiv:2512.24873)