- The paper introduces ReMe, a dynamic procedural memory framework that transforms static memory into an evolving system for improving agent decision-making.

- It employs multi-faceted distillation, context-adaptive reuse, and utility-based refinement to optimize experience extraction and memory management.

- Experimental results show that smaller models using ReMe outperform larger baselines, pointing to more efficient, resource-conscious lifelong learning.

Detailed Summary of "Remember Me, Refine Me: A Dynamic Procedural Memory Framework for Experience-Driven Agent Evolution"

The paper "Remember Me, Refine Me: A Dynamic Procedural Memory Framework for Experience-Driven Agent Evolution" (2512.10696) introduces ReMe, a memory management framework for LLM-based agents. ReMe turns static procedural memory into a dynamic, evolving system, with the aim of bridging the gap between storing past experience and reasoning with it.

Introduction and Background

The need for adaptive memory systems in AI agents is driven by the transition from static LLMs to interactive, autonomous systems that handle dynamic and complex tasks. Traditional approaches to procedural memory have been criticized for "passive accumulation": they treat memory as a static repository with limited utility for reasoning and decision-making. ReMe addresses these limitations with dynamic mechanisms for experience acquisition, reuse, and refinement. The framework is evaluated on the BFCL-V3 and AppWorld benchmarks.

Figure 1: Example of how agents complete one stock trading task with and without past experience.

Methodology

Overview and Framework Structure

ReMe's architecture is designed to maintain a compact, high-quality procedural memory pool through three key mechanisms: multi-faceted experience distillation, context-adaptive reuse, and utility-based refinement. Together, these components keep the memory pool efficient and relevant rather than merely large.

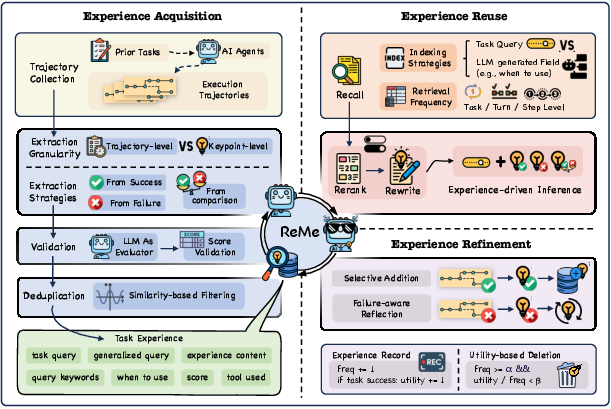

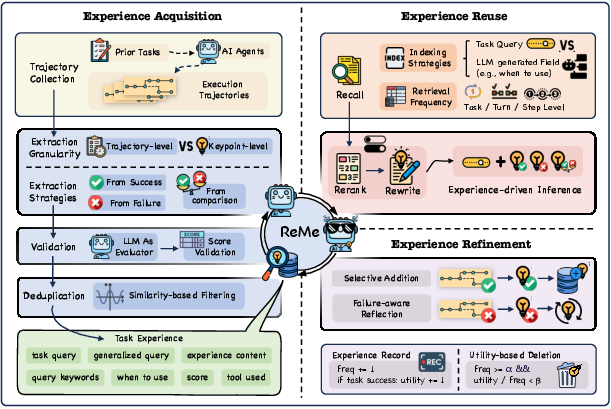

Figure 2: The ReMe framework comprises three alternating phases. The system first constructs the initial experience pool from the agent's past trajectories. For new tasks, relevant experiences are recalled and reorganized to guide agent inference. After task execution, ReMe updates the pool, selectively adding new insights and removing outdated ones.

Experience Acquisition

ReMe uses a multi-faceted distillation process to extract reusable experiences from execution trajectories, filtering out noise while identifying success patterns and failure triggers. The extracted experiences are summarized and then passed through a validation step for quality control.
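The acquisition step described above can be sketched roughly as follows. This is a minimal illustration, not the paper's implementation: the `Trajectory` and `Experience` types, their fields, and the `summarize` and `validate` callables are all hypothetical stand-ins (in practice the summarizer and validator would likely be LLM calls).

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Trajectory:
    """One past execution (hypothetical structure)."""
    task: str
    steps: List[str]
    success: bool


@dataclass
class Experience:
    """A distilled, reusable piece of guidance (hypothetical structure)."""
    scenario: str  # when the experience applies
    content: str   # the distilled guidance itself
    kind: str      # "success_pattern" or "failure_trigger"


def distill(
    trajectories: Iterable[Trajectory],
    summarize: Callable[[Trajectory], str],
    validate: Callable[[Experience], bool],
) -> List[Experience]:
    """Summarize each trajectory and keep only experiences that pass validation."""
    pool: List[Experience] = []
    for traj in trajectories:
        # Successful runs yield patterns to repeat; failed runs yield triggers to avoid.
        kind = "success_pattern" if traj.success else "failure_trigger"
        candidate = Experience(scenario=traj.task, content=summarize(traj), kind=kind)
        # Validation is the quality gate that filters out noisy or empty summaries.
        if validate(candidate):
            pool.append(candidate)
    return pool


# Toy stand-ins for the summarizer and validator (assumptions for illustration).
trajs = [
    Trajectory("buy stock", ["check balance", "place order"], True),
    Trajectory("sell stock", [], False),
]
pool = distill(
    trajs,
    summarize=lambda t: " -> ".join(t.steps),
    validate=lambda e: bool(e.content.strip()),
)
```

Here the second trajectory produces an empty summary, so the validator rejects it and only the successful trading experience enters the pool.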

Experience Reuse and Refinement

For novel tasks, ReMe uses scenario-aware indexing to retrieve relevant experiences and then adapts them to the new context, so that guidance is tailored to the task at hand. The utility-based refinement mechanism keeps the memory pool effective by periodically pruning low-utility entries and reinforcing successful ones.
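A rough sketch of how retrieval and utility-based pruning could fit together is shown below. Everything here is an assumption made for illustration: the `Entry` fields, the bag-of-words cosine similarity (a stand-in for whatever embedding-based index the paper uses), the utility definition (fraction of retrievals that preceded a successful task), and the `min_uses` and `min_utility` thresholds.

```python
import math
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass
class Entry:
    """A memory entry with usage statistics (hypothetical structure)."""
    scenario: str
    content: str
    retrieved: int = 0  # how often the entry was recalled
    helped: int = 0     # how often a task succeeded after recalling it

    @property
    def utility(self) -> float:
        return self.helped / self.retrieved if self.retrieved else 0.0


def _cosine(a: str, b: str) -> float:
    """Bag-of-words cosine similarity; a toy stand-in for embedding similarity."""
    va, vb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(va[w] * vb[w] for w in va)
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(
        sum(c * c for c in vb.values())
    )
    return dot / norm if norm else 0.0


def retrieve(pool: List[Entry], query: str, k: int = 2) -> List[Entry]:
    """Return the k entries whose scenario best matches the query."""
    top = sorted(pool, key=lambda e: _cosine(query, e.scenario), reverse=True)[:k]
    for entry in top:
        entry.retrieved += 1
    return top


def record_outcome(used: List[Entry], success: bool) -> None:
    """Reinforce entries that were in context when the task succeeded."""
    if success:
        for entry in used:
            entry.helped += 1


def prune(pool: List[Entry], min_uses: int = 3, min_utility: float = 0.5) -> List[Entry]:
    """Drop entries that were recalled often enough yet rarely helped."""
    return [e for e in pool if e.retrieved < min_uses or e.utility >= min_utility]


pool = [
    Entry("buy stock with limit order", "check balance first"),
    Entry("cancel flight booking", "confirm id"),
]
top = retrieve(pool, "buy stock", k=1)
for _ in range(3):
    used = retrieve(pool, "cancel flight", k=1)
    record_outcome(used, success=False)
kept = prune(pool)
```

In this toy run the flight-cancellation entry is recalled three times without a success, so it falls below the utility threshold and is pruned, while the rarely used trading entry is kept until enough evidence accumulates.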

Figure 3: Ablation on retrieval keys, evaluated with ReMe on BFCL-V3.

Experimental Results

The experiments show that ReMe achieves new state-of-the-art performance among memory-augmented agents. A notable finding is the memory-scaling effect: smaller models equipped with ReMe outperform larger baseline models without memory augmentation. This highlights the computational efficiency of self-evolving memory and its potential to support lifelong learning in agents.

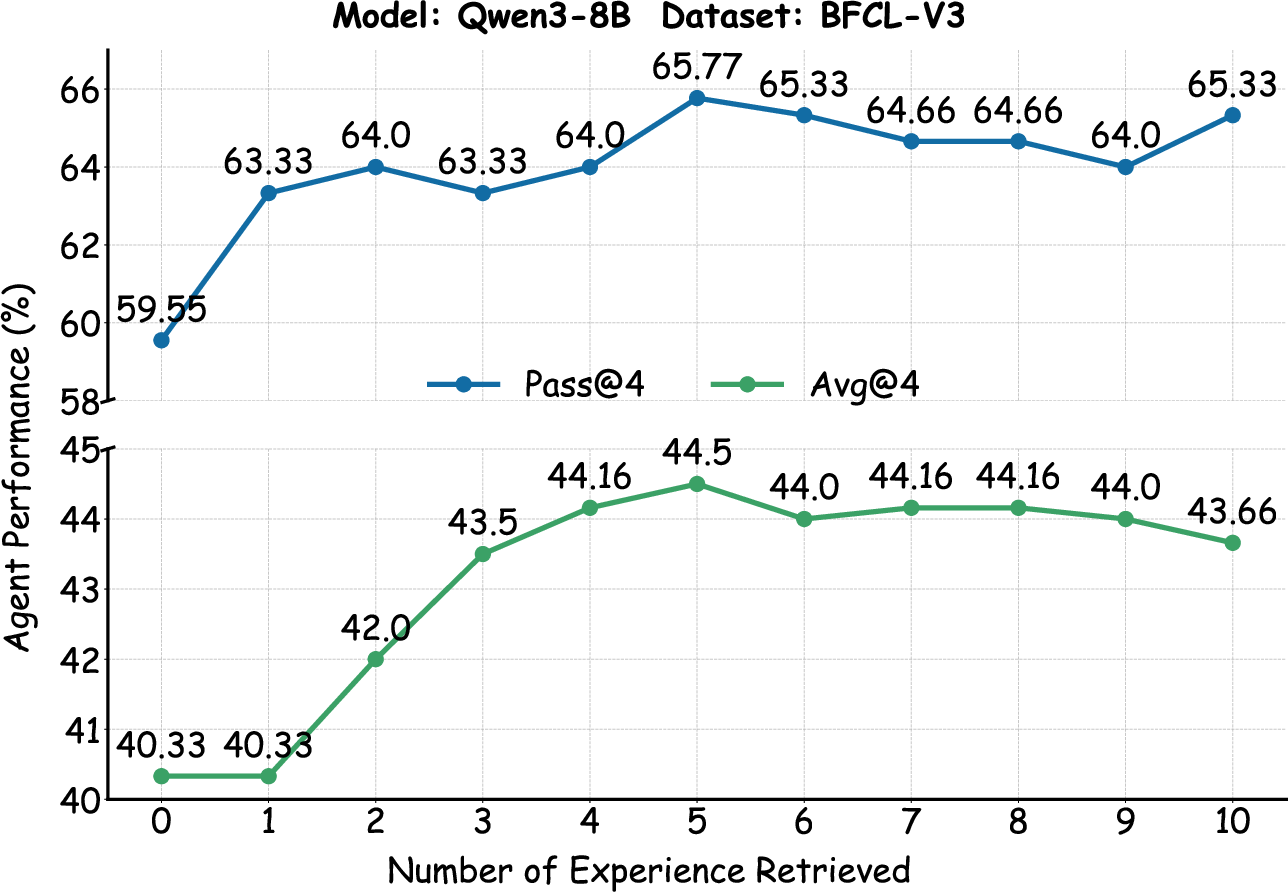

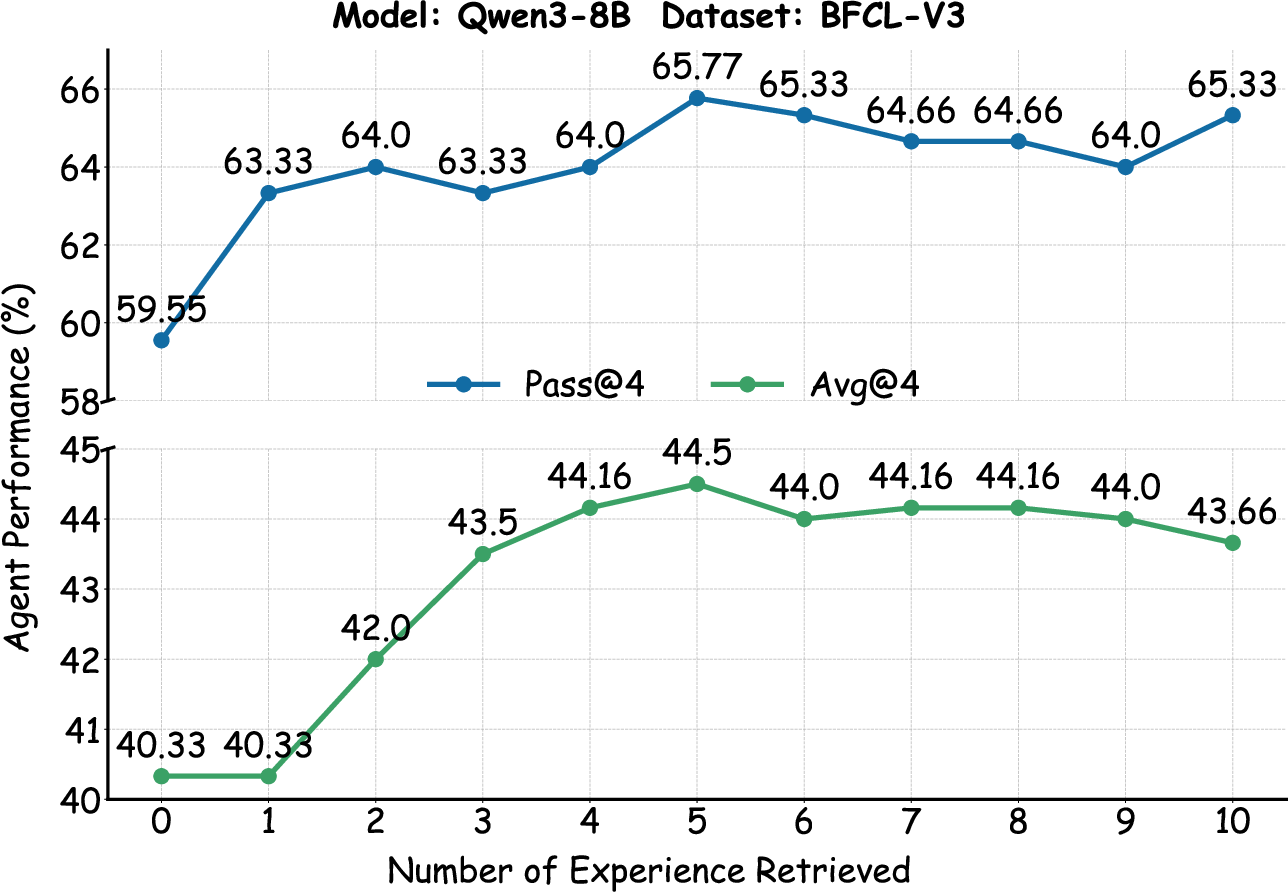

Figure 4: Effect of retrieved experience number on agent performance (%) in ReMe.

Discussion and Implications

The ReMe framework advances procedural memory systems and offers a pathway to resource-efficient lifelong learning. By dynamically adapting to new information and optimizing memory usage, ReMe improves both the robustness and versatility of AI agents. The results suggest that well-designed memory mechanisms can reduce the dependency on large-scale models, making capable agents practical in settings where large models are too costly.

Conclusion

ReMe replaces passive accumulation in procedural memory with active, adaptive learning strategies. This addresses the limitations of traditional memory systems and paves the way for more intelligent and resource-efficient agent designs. Future work could further explore context-aware retrieval mechanisms and advanced summarization strategies to extend the framework's impact.

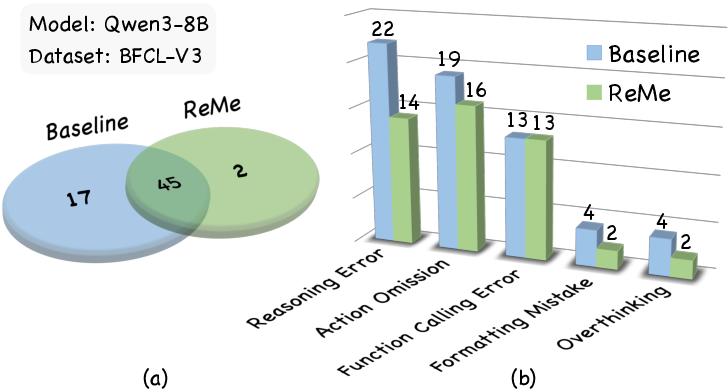

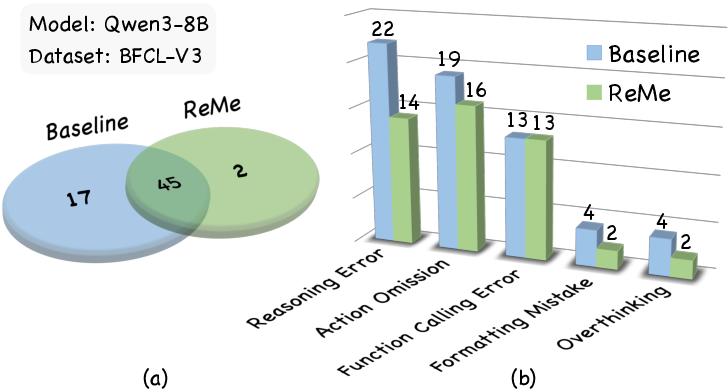

Figure 5: Statistics of failed tasks with and without ReMe.