- The paper introduces an adaptive memory framework that dynamically optimizes memory retrieval, utilization, and storage in LLM agents.

- It uses a mixture-of-experts (MoE) gate function for memory retrieval and a learnable aggregation process for memory utilization, optimized with SFT and DPO, to improve the agent's decision-making.

- Experiments on tasks such as HotpotQA show that on-policy optimization improves performance on complex, multi-step tasks and reduces the number of reasoning steps.

"Learn to Memorize: Optimizing LLM-based Agents with Adaptive Memory Framework"

Introduction

The paper "Learn to Memorize: Optimizing LLM-based Agents with Adaptive Memory Framework" addresses the limitations of current memory mechanisms in LLM-based agents. Traditional memory mechanisms rely largely on rules predefined by human experts, which raises labor costs and yields suboptimal performance, especially in interactive scenarios. The authors propose an adaptive memory framework that optimizes LLM-based agents by modeling the memory cycle.

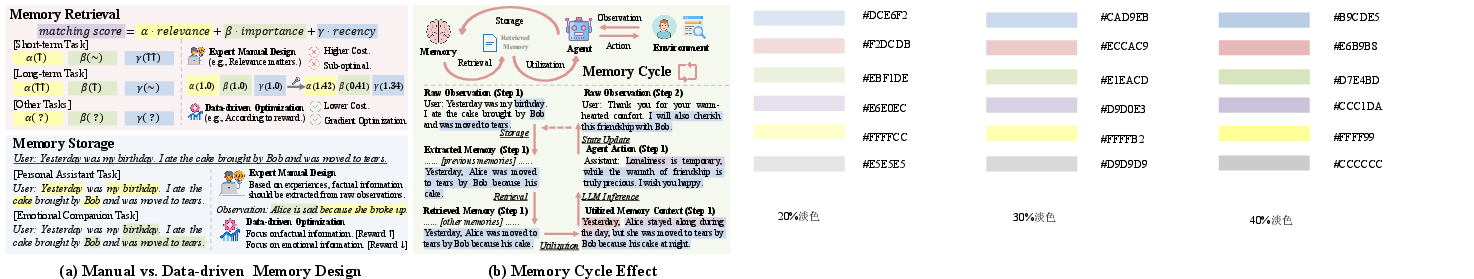

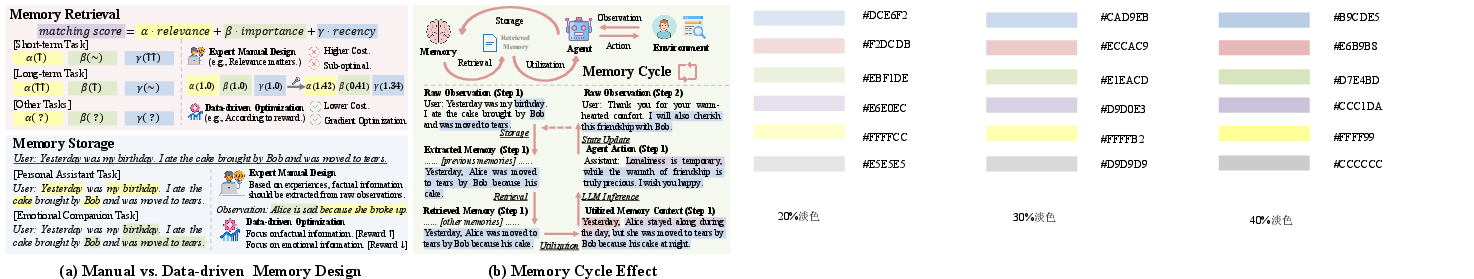

Figure 1: (a) In memory retrieval, the optimal weights for different aspects vary across tasks. Likewise, in memory storage, which information deserves attention is task-dependent. Manual adaptation by human experts, however, incurs higher labor costs and yields suboptimal performance. (b) The memory cycle during interactions between agents and environments.

Methodology

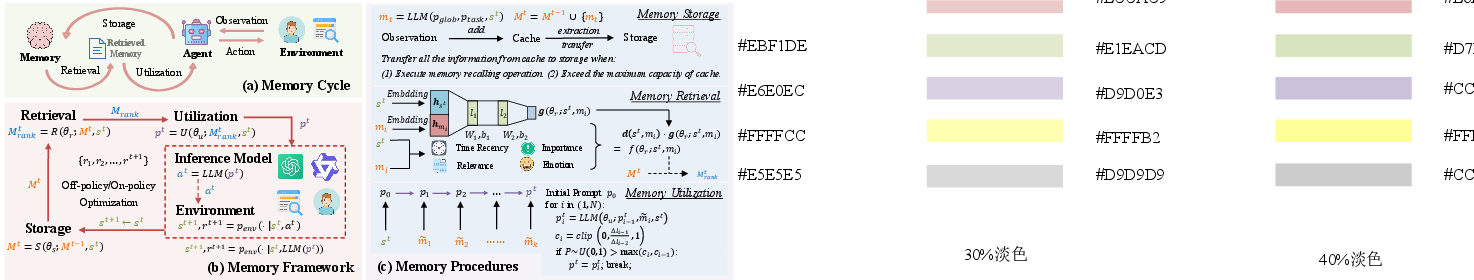

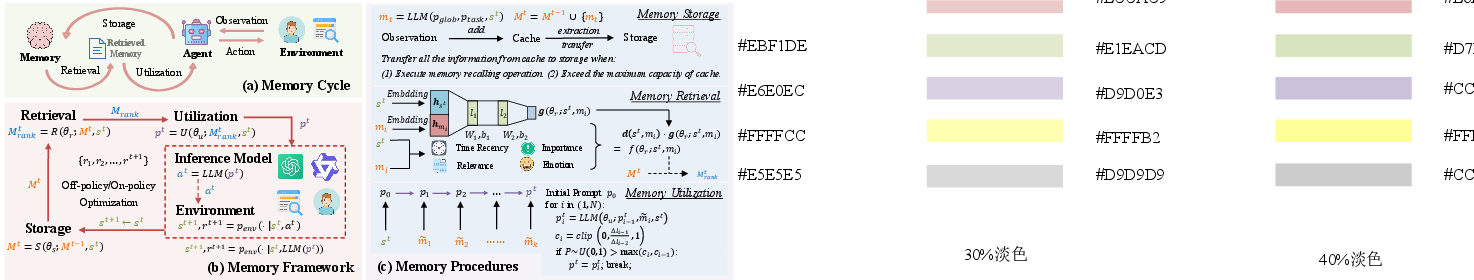

The proposed framework centers on three procedures: memory retrieval, utilization, and storage. For memory retrieval, a mixture-of-experts (MoE) gate function dynamically weights different retrieval metrics according to the task at hand. This contrasts with traditional methods, which use fixed weights that often fail to retrieve well across diverse environments.
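To make the gating idea concrete, here is a minimal Python sketch under stated assumptions: each memory already carries one score per retrieval metric, the task is summarized as a small feature vector, and the gate is a single linear layer followed by a softmax. The metric names (`relevance`, `recency`, `importance`), the `MoEGate` class, and the `retrieve` function are hypothetical illustrations, not the paper's implementation; in a trained system the gate parameters would be learned rather than hand-set as in the usage below.

```python
import math
from typing import Dict, List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


class MoEGate:
    """Hypothetical gate: one linear row per retrieval metric, then softmax.

    The output weights are non-negative and sum to 1, so they act as a
    task-dependent mixture over the per-metric scores.
    """

    def __init__(self, metric_names: List[str], gate_matrix: List[List[float]]):
        self.metric_names = metric_names
        self.gate_matrix = gate_matrix  # gate_matrix[i] produces the logit for metric i

    def weights(self, task_features: Sequence[float]) -> Dict[str, float]:
        logits = [sum(w * x for w, x in zip(row, task_features)) for row in self.gate_matrix]
        return dict(zip(self.metric_names, softmax(logits)))


def retrieve(memories: List[Dict], task_features: Sequence[float], gate: MoEGate, k: int = 1) -> List[str]:
    """Return the text of the top-k memories under the gate-weighted score."""
    w = gate.weights(task_features)

    def score(mem: Dict) -> float:
        return sum(w[name] * mem["scores"][name] for name in gate.metric_names)

    return [m["text"] for m in sorted(memories, key=score, reverse=True)[:k]]


# Hand-set example values (hypothetical); a trained gate would learn gate_matrix.
gate = MoEGate(
    ["relevance", "recency", "importance"],
    [[2.0, 0.0], [0.0, 2.0], [0.0, 0.0]],
)
memories = [
    {"text": "fact about Paris", "scores": {"relevance": 0.9, "recency": 0.1, "importance": 0.5}},
    {"text": "latest observation", "scores": {"relevance": 0.2, "recency": 0.95, "importance": 0.5}},
    {"text": "old note", "scores": {"relevance": 0.3, "recency": 0.2, "importance": 0.5}},
]
print(retrieve(memories, [1.0, 0.0], gate))  # task favoring relevance -> ['fact about Paris']
print(retrieve(memories, [0.0, 1.0], gate))  # task favoring recency -> ['latest observation']
```

The same memory pool yields different top results for different tasks, which is the behavior fixed, hand-tuned weights cannot provide.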

Memory utilization relies on a learnable aggregation process, optimized with both Supervised Fine-Tuning (SFT) and Direct Preference Optimization (DPO), that governs how retrieved memories are integrated into the prompts given to the LLM, thereby improving the agent's decisions.

Figure 2: Overview of the memory cycle effect and adaptive memory framework.
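Because the aggregation step described above is tuned with DPO, it may help to see the standard DPO objective written out. The sketch below assumes each preference pair holds a preferred and a dispreferred aggregation output for the same prompt, each scored as a summed token log-probability under the model being trained and under a frozen reference model. How the paper actually constructs these pairs is not described here; the function names and the `beta` default are illustrative assumptions.

```python
import math
from typing import Sequence, Tuple


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one pair: -log sigmoid(beta * (chosen log-ratio - rejected log-ratio)).

    Each argument is a summed token log-probability of a full response under
    either the policy being trained or the frozen reference model.
    """
    chosen_log_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_log_ratio = policy_rejected_logp - ref_rejected_logp
    return -log_sigmoid(beta * (chosen_log_ratio - rejected_log_ratio))


def mean_dpo_loss(pairs: Sequence[Tuple[float, float, float, float]], beta: float = 0.1) -> float:
    """Average the per-pair loss over a batch of (policy_chosen, policy_rejected, ref_chosen, ref_rejected)."""
    return sum(dpo_loss(*p, beta=beta) for p in pairs) / len(pairs)


# When policy and reference agree, the margin is 0 and the loss is log 2 (about 0.693).
print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
```

Minimizing this loss pushes the trained model to raise the likelihood of the preferred output relative to the reference model, and to lower that of the dispreferred one.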

For memory storage, the framework uses task-specific reflection to decide how observations are stored as memories. Rather than following rigid, manually designed instructions, it learns from interactions which information is worth storing.
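The reflection-based storage loop can be sketched as follows. This assumes a generic `llm` callable that maps a prompt string to a completion; the `ReflectiveMemoryStore` class, its prompt wording, and the shape of the reflection step are hypothetical. They only illustrate a storage instruction that is revised from interaction outcomes instead of being fixed by hand.

```python
from typing import Callable, List

LLM = Callable[[str], str]  # hypothetical: any function mapping a prompt to a completion


class ReflectiveMemoryStore:
    """Hypothetical sketch: a task-specific instruction decides what to extract
    from each observation, and reflection rewrites that instruction."""

    def __init__(self, llm: LLM, instruction: str):
        self.llm = llm
        self.instruction = instruction
        self.memories: List[str] = []

    def store(self, observation: str) -> str:
        # Ask the model to extract the task-relevant part of the observation.
        prompt = f"{self.instruction}\n\nObservation:\n{observation}\n\nMemory to store:"
        memory = self.llm(prompt).strip()
        if memory:  # skip empty extractions
            self.memories.append(memory)
        return memory

    def reflect(self, trajectory: str, outcome: str) -> str:
        # Ask the model to revise the storage instruction given how the episode went.
        prompt = (
            f"Current storage instruction:\n{self.instruction}\n\n"
            f"Trajectory:\n{trajectory}\n\nOutcome: {outcome}\n\n"
            "Rewrite the instruction so that future memories keep the information "
            "that would have helped most. Return only the new instruction."
        )
        revised = self.llm(prompt).strip()
        if revised:
            self.instruction = revised
        return self.instruction


# Usage with a stub in place of a real model call (hypothetical).
def stub_llm(prompt: str) -> str:
    if prompt.startswith("Current storage instruction"):
        return "Keep entity names, dates, and numeric facts."
    return "Paris is the capital of France."


store = ReflectiveMemoryStore(stub_llm, "Store anything that seems useful.")
store.store("Search result: Paris is the capital and largest city of France.")
store.reflect("Q: capital of France? -> answered Paris", "correct")
print(store.memories)
print(store.instruction)
```

In practice `llm` would wrap a real model call; the stub only makes the control flow easy to follow.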

Optimization Strategies

The authors propose both off-policy and on-policy strategies for training the memory framework. Off-policy optimization refines the memory mechanisms on pre-collected interaction data, while on-policy optimization keeps interacting with the environment and updates the parameters as new data arrives. The on-policy strategy is more computationally expensive, but it addresses the distribution shift that can arise in the off-policy mode, where the training data was collected by a different policy than the one being optimized.
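The structural difference between the two strategies is where the training data comes from. The minimal sketch below is generic and assumes hypothetical `update` and `rollout` callables supplied by the caller; it does not reproduce the paper's training procedure.

```python
from typing import Callable, List, Sequence, TypeVar

P = TypeVar("P")  # model parameters (any type)
T = TypeVar("T")  # one logged interaction / trajectory


def off_policy_optimize(
    params: P,
    logged: Sequence[T],
    update: Callable[[P, Sequence[T]], P],
    epochs: int,
) -> P:
    """Repeatedly update on a fixed, pre-collected dataset.

    The data were generated by whatever policy collected them, not by the
    parameters being trained, which is the source of distribution shift.
    """
    for _ in range(epochs):
        params = update(params, logged)
    return params


def on_policy_optimize(
    params: P,
    rollout: Callable[[P], List[T]],
    update: Callable[[P, Sequence[T]], P],
    rounds: int,
) -> P:
    """Alternate between collecting fresh data with the current parameters
    and updating on it, so the training data tracks the current policy."""
    for _ in range(rounds):
        batch = rollout(params)  # new environment interactions (the costly part)
        params = update(params, batch)
    return params


# Toy usage with scalar "parameters" (purely illustrative).
def step_toward_mean(p: float, data: Sequence[float]) -> float:
    return p + 0.5 * (sum(data) / len(data) - p)


print(off_policy_optimize(0.0, [1.0, 1.0], step_toward_mean, epochs=3))  # 0.875
print(on_policy_optimize(0.0, lambda p: [p + 1.0], step_toward_mean, rounds=3))  # 1.5
```

The on-policy loop calls `rollout` once per round, so its cost grows with the number of environment interactions; this is the trade-off against the distribution shift of the off-policy loop.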

Experimental Results

The experimental evaluation, conducted on datasets such as HotpotQA at different difficulty levels, demonstrates the effectiveness of the proposed framework. The on-policy optimized model significantly outperforms the baselines, especially on harder tasks that require complex reasoning across multiple steps.

Notably, models using this framework tend to need fewer reasoning steps to reach correct answers, which suggests more efficient decision-making. Performance also varies with the LLM used for inference, reflecting differences in the models' in-context learning capabilities.
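Claims such as "fewer reasoning steps to reach correct answers" depend on how steps and correctness are counted. As one hypothetical way to compute them, the sketch below scores answers with the SQuAD-style exact-match normalization commonly used for HotpotQA and averages the step count over correctly answered episodes only. The episode record format (`prediction`, `answer`, `steps`) is an assumption, and the paper's exact metrics may differ.

```python
import re
import string
from typing import Dict, List

PUNCTUATION = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """SQuAD-style normalization: lowercase, strip punctuation,
    drop articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def summarize(episodes: List[Dict]) -> Dict[str, float]:
    """Exact-match accuracy plus mean reasoning steps over correctly answered episodes."""
    correct = [
        e for e in episodes
        if normalize_answer(e["prediction"]) == normalize_answer(e["answer"])
    ]
    accuracy = len(correct) / len(episodes) if episodes else 0.0
    avg_steps = sum(e["steps"] for e in correct) / len(correct) if correct else float("nan")
    return {"exact_match": accuracy, "avg_steps_when_correct": avg_steps}


# Hypothetical episode records for illustration.
episodes = [
    {"prediction": "The Eiffel Tower", "answer": "eiffel tower", "steps": 3},
    {"prediction": "London", "answer": "Paris", "steps": 6},
    {"prediction": "Paris.", "answer": "paris", "steps": 5},
]
print(summarize(episodes))
```

Averaging steps over correct episodes only keeps a model from looking "efficient" by giving up early with a wrong answer.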

Implications and Future Work

An adaptive, data-driven memory framework for LLM-based agents offers a substantial improvement over traditional, rule-based memory methods. In practice, this research could lead to more efficient and versatile AI systems in areas where dynamic interaction and continuous learning are essential.

Future work should extend these techniques to domains that rely on implicit memory or require different reasoning structures, and explore optimization strategies that reduce the cost of on-policy updates.

Conclusion

This paper contributes a memory framework that lets LLM-based agents adaptively optimize memory retrieval, utilization, and storage in interactive environments. By supporting both off-policy and on-policy optimization, the framework improves the agents' learning efficiency and decision-making, making it a valuable advance in the development of intelligent systems.