Persistent Memory in LLM Agents

- Persistent memory for LLM agents is a structured framework that enables long-term retention, dynamic organization, and selective retrieval to enhance sequential decision-making.

- Advanced algorithms integrate reinforcement learning and hierarchical memory modules to update and manage stored interactions efficiently without retraining core model parameters.

- Human-like recall mechanisms and collaborative multi-agent memory with access control support personalized, adaptive, multi-session applications in complex, real-world environments.

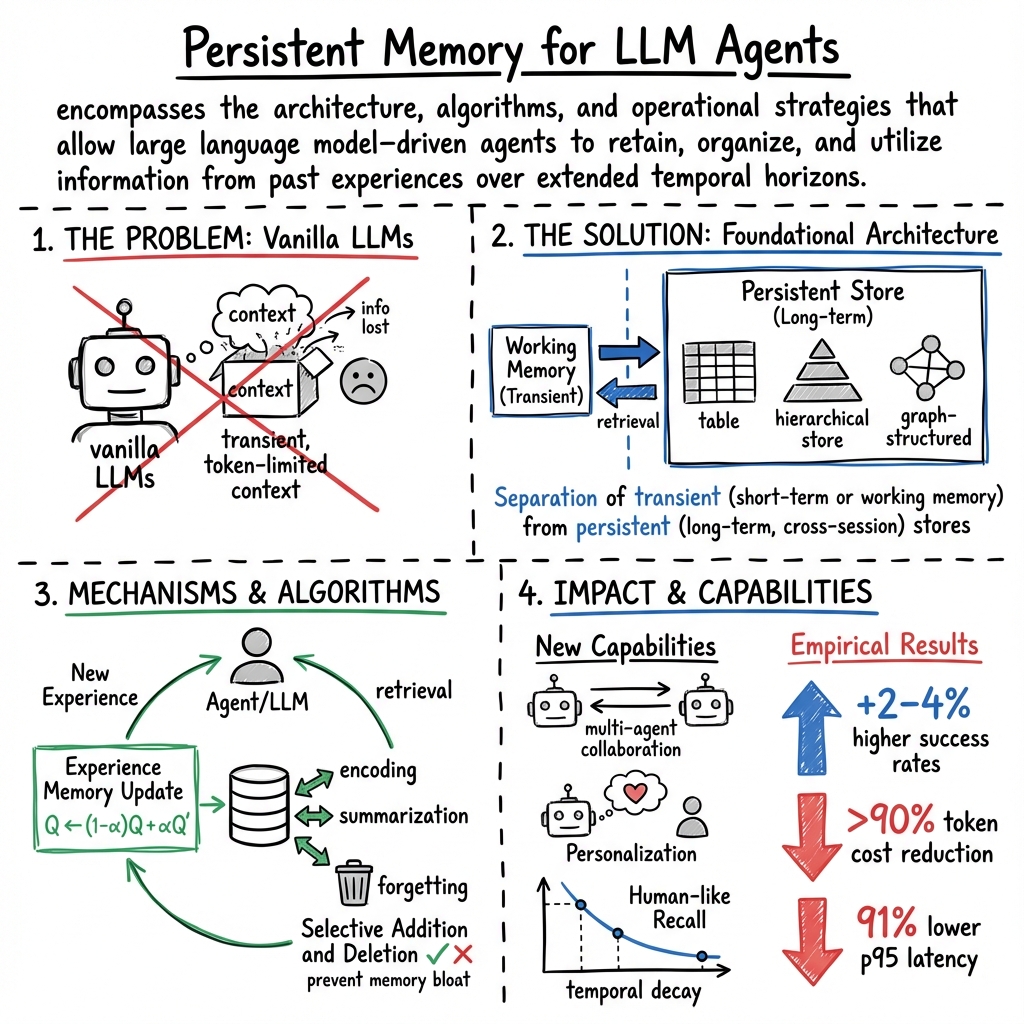

Persistent memory for LLM agents encompasses the architectures, algorithms, and operational strategies that let LLM-driven agents retain, organize, and use information from past experiences over long time horizons. This capability underpins robust sequential decision-making, accurate personalization, lifelong learning, multi-agent collaboration, and complex multi-step tasks. The field has evolved rapidly, drawing on both cognitive science and algorithmic innovation, and has become an active research frontier with substantial empirical progress.

1. Foundational Memory Architectures and Principles

Persistent memory in LLM agents is distinguished by long-term retention, structured organization, and dynamic updating, which go beyond the transient, token-limited context window of a vanilla LLM. Early conceptual work treats external memory as a structured, evolving table, buffer, or hierarchical store that is kept separate from the model's internal, static weights.

- REMEMBERER implements persistent episodic memory as a table of interaction records, each storing the task description, observation, action, and Q value. The table is updated by reinforcement learning with experience memory (RLEM), which lets the agent reason over both past successes and past failures without fine-tuning the LLM parameters (Zhang et al., 2023).

- Human cognitive models, such as Baddeley’s working memory and the Atkinson-Shiffrin model, have inspired the incorporation of centralized working memory hubs, episodic buffers, and mechanisms for encoding, prioritization, and layered retrieval (Guo et al., 2023).

- Architectural innovations include multi-level memory hierarchies, such as Core/Episodic/Semantic/Procedural memory in MIRIX (Wang et al., 10 Jul 2025), STM/MTM/LPM tiers in MemoryOS (Kang et al., 30 May 2025), and a versioned commit/branch/merge hierarchy in Git-Context-Controller (Wu, 30 Jul 2025). Other designs use graph-structured memory or event-based segmentation drawn from cognitive science, as in Nemori's Two-Step Alignment and Predict-Calibrate principles (Nan et al., 5 Aug 2025).
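The REMEMBERER-style record table can be sketched as a mapping from (task, observation, action) to a Q value. This is a minimal illustration under stated assumptions, not REMEMBERER's implementation: the names `ExperienceMemory`, `update`, and `exemplars` are hypothetical, and the constant step size `alpha` is an assumption. The update shown is standard n-step Q-learning: the target G is the discounted sum of the next n rewards plus γ^n times the best stored Q value at the observation reached after n steps, and Q ← Q + α(G − Q).

```python
from dataclasses import dataclass, field
from typing import Optional

# (task description, observation, action) -> Q value
Key = tuple[str, str, str]


@dataclass
class ExperienceMemory:
    alpha: float = 0.5   # assumed constant step size (hypothetical)
    gamma: float = 0.9   # discount factor
    q: dict[Key, float] = field(default_factory=dict)

    def best_value(self, task: str, obs: str) -> float:
        """max over stored actions of Q(task, obs, a); 0.0 if nothing is stored."""
        values = [v for (g, o, _), v in self.q.items() if g == task and o == obs]
        return max(values, default=0.0)

    def update(self, task: str, obs: str, action: str,
               rewards: list[float], next_obs: Optional[str] = None) -> float:
        """n-step update: rewards holds the next n rewards; next_obs is the
        observation after n steps (None if the episode ended)."""
        n = len(rewards)
        target = sum(self.gamma ** i * r for i, r in enumerate(rewards))
        if next_obs is not None:
            # Bootstrap from the best stored value at the later observation.
            target += self.gamma ** n * self.best_value(task, next_obs)
        key = (task, obs, action)
        old = self.q.get(key, 0.0)
        self.q[key] = old + self.alpha * (target - old)  # memory edit, no weight update
        return self.q[key]

    def exemplars(self, task: str, k: int = 1):
        """Top-k and bottom-k records for a task: positive and negative in-context examples."""
        ranked = sorted(((v, key) for key, v in self.q.items() if key[0] == task), reverse=True)
        return ranked[:k], ranked[-k:]
```

A one-step update uses a single reward; passing several rewards and a `next_obs` gives the n-step variant. The returned exemplar pairs would then be formatted into the prompt, so the policy changes only through edits to this table.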

Key Principle: Separation of transient (short-term or working memory) from persistent (long-term, cross-session) stores, with dynamic organizational structures and explicit mechanisms for creation, update, retention, and pruning, is the unifying concept in persistent memory architectures.
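The separation named in the key principle can be sketched as a bounded working buffer that spills into a long-term store with explicit creation, update, retention, and pruning steps. This is a minimal illustration, not any cited system: the class name `TwoTierMemory`, the FIFO promotion rule, substring matching for recall, and least-accessed pruning are all assumptions chosen for brevity.

```python
from collections import deque


class TwoTierMemory:
    """Bounded working memory that spills into a persistent, prunable long-term store."""

    def __init__(self, working_capacity: int = 3, persistent_capacity: int = 100):
        self.working = deque()                 # transient, within-session context
        self.working_capacity = working_capacity
        self.persistent = {}                   # long-term store: record -> access count
        self.persistent_capacity = persistent_capacity

    def observe(self, item: str) -> None:
        """Creation: new items enter working memory; the oldest overflows into long-term memory."""
        self.working.append(item)
        if len(self.working) > self.working_capacity:
            self._consolidate(self.working.popleft())

    def end_session(self) -> None:
        """Flush all working memory into the persistent store at the end of a session."""
        while self.working:
            self._consolidate(self.working.popleft())

    def _consolidate(self, item: str) -> None:
        self.persistent.setdefault(item, 0)    # update: re-stored items keep their access count
        if len(self.persistent) > self.persistent_capacity:
            # Pruning: evict the least-accessed record (ties go to the earliest-inserted one).
            victim = min(self.persistent, key=self.persistent.get)
            del self.persistent[victim]

    def recall(self, query: str) -> list[str]:
        """Working memory is always in context; long-term records are added only when they match."""
        hits = [m for m in self.persistent if query in m]
        for m in hits:
            self.persistent[m] += 1            # retention: accessed records resist pruning
        return list(self.working) + hits
```

Only the persistent dictionary would survive between sessions; the working deque plays the role of the token-limited context.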

2. Memory Update, Retrieval, and Management Algorithms

Algorithmic frameworks for persistent memory prioritize efficient, scalable learning without constant retraining.

- Experience Memory Update: RLEM updates the persistent table with Q-learning rules, using one-step or n-step bootstrapped targets, and dynamically selects positive and negative exemplars from it for in-context prompting. The policy therefore improves incrementally through memory edits rather than LLM parameter updates (Zhang et al., 2023).

- Hierarchical and Modular Operations: Frameworks such as MemEngine decompose persistent memory into pluggable modules for encoding (e.g., embedding with E5), retrieval (semantic search by cosine similarity), summarization, forgetting, and meta-learning. These modules can be mixed and matched to reproduce existing strategies such as MemoryBank, MBMemory, and FUMemory, as well as tree-structured aggregation (Zhang et al., 4 May 2025).

- Selective Addition and Deletion: Empirical findings show that both storage (addition) and removal (deletion) need rigorous selection, because indiscriminate strategies propagate errors and degrade long-term agent performance. Utility-based and retrieval-history-based deletion prevent memory bloat and error propagation, yielding up to 10% performance gains over naive strategies (Xiong et al., 21 May 2025).

- Chunking and Compression: For stateful LLM inference on constrained or mobile hardware, persistent conversational state (e.g., the KV cache) can be compressed and swapped using chunk-wise, tolerance-adaptive quantization, recomputation pipelined with loading, and an LCTRU-queue eviction policy (Yin et al., 2024). This reduces memory footprint and latency for on-device LLM agents.

- Graph-based and Event Segmentation: Mem0 builds memory graphs and Nemori segments conversations into semantically coherent episodes; both capture relational and temporal dependencies, enabling efficient multi-hop, temporal, and topic-based retrieval (Chhikara et al., 28 Apr 2025, Nan et al., 5 Aug 2025).
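The modular retrieval and selective addition and deletion described in this section can be sketched together. This is a hypothetical, stdlib-only illustration rather than MemEngine's API or the exact policies of Xiong et al.: the toy `bag_of_words` encoder stands in for an embedding model such as E5, and the utility threshold and "retrieved fewer than `min_hits` times" deletion rule are assumptions.

```python
import math
from collections import Counter
from typing import Callable

Vector = dict[str, float]


def bag_of_words(text: str) -> Vector:
    """Toy encoder standing in for a learned embedding model such as E5."""
    return {w: float(c) for w, c in Counter(text.lower().split()).items()}


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ModularMemory:
    def __init__(self, encode: Callable[[str], Vector] = bag_of_words,
                 min_utility: float = 0.5, min_similarity: float = 0.2):
        self.encode = encode                # pluggable encoding module
        self.min_utility = min_utility      # assumed admission threshold
        self.min_similarity = min_similarity
        self.records: list[dict] = []

    def add(self, text: str, utility: float) -> bool:
        """Selective addition: low-utility experiences are never stored."""
        if utility < self.min_utility:
            return False
        self.records.append({"text": text, "vec": self.encode(text), "hits": 0})
        return True

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        """Cosine-similarity search; each returned record has its hit count incremented."""
        q = self.encode(query)
        scored = sorted(((cosine(q, r["vec"]), i) for i, r in enumerate(self.records)), reverse=True)
        top = [self.records[i] for s, i in scored[:k] if s >= self.min_similarity]
        for r in top:
            r["hits"] += 1
        return [r["text"] for r in top]

    def prune(self, min_hits: int = 1) -> int:
        """Retrieval-history-based deletion: drop records retrieved fewer than min_hits times."""
        before = len(self.records)
        self.records = [r for r in self.records if r["hits"] >= min_hits]
        return before - len(self.records)
```

Swapping `encode` for a real embedding function changes only the encoding module; the addition and deletion policies stay untouched, which is the point of a modular decomposition.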
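Chunk-wise, tolerance-adaptive quantization, mentioned in the Chunking and Compression bullet, can be illustrated with uniform min-max quantization: each chunk gets the smallest bit width whose worst-case reconstruction error stays within a tolerance. This is a simplified sketch, not the scheme of Yin et al. (2024); the function names, the candidate bit widths (2, 4, 8), and the single global tolerance are assumptions, and a real system would assign tolerances per chunk and operate on KV-cache tensors rather than Python lists.

```python
def quantize(chunk: list[float], bits: int):
    """Uniform min-max quantization of one chunk to integer codes."""
    lo, hi = min(chunk), max(chunk)
    levels = (1 << bits) - 1
    scale = (hi - lo) / levels if hi > lo else 1.0   # constant chunks quantize exactly
    codes = [round((x - lo) / scale) for x in chunk]
    return codes, lo, scale


def dequantize(codes: list[int], lo: float, scale: float) -> list[float]:
    return [lo + c * scale for c in codes]


def compress_chunks(values: list[float], chunk_size: int, tolerance: float,
                    bit_options: tuple[int, ...] = (2, 4, 8)):
    """Per chunk, keep the smallest bit width whose max abs error is within tolerance;
    fall back to the widest option if none qualifies."""
    out = []
    for start in range(0, len(values), chunk_size):
        chunk = values[start:start + chunk_size]
        for bits in bit_options:
            codes, lo, scale = quantize(chunk, bits)
            err = max(abs(a - b) for a, b in zip(chunk, dequantize(codes, lo, scale)))
            if err <= tolerance or bits == bit_options[-1]:
                out.append((bits, codes, lo, scale))
                break
    return out
```

An evenly spaced chunk such as `[0.0, 1.0, 2.0, 3.0]` fits in 2 bits with zero error, while an irregular chunk needs more bits under the same tolerance, so compression adapts to the data instead of using one fixed precision.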
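Multi-hop retrieval over a memory graph, as in the graph-based bullet above, can be sketched as a bounded breadth-first traversal over (subject, relation, object) facts. The `MemoryGraph` class and its methods are hypothetical and do not reflect Mem0's schema or API; temporal attributes are omitted for brevity.

```python
from collections import defaultdict, deque


class MemoryGraph:
    """Hypothetical entity-relation memory: entity -> list of (relation, entity) edges."""

    def __init__(self):
        self.edges = defaultdict(list)

    def add_fact(self, subj: str, rel: str, obj: str) -> None:
        self.edges[subj].append((rel, obj))

    def multi_hop(self, start: str, max_hops: int = 2) -> list[tuple[str, str, str]]:
        """Breadth-first traversal returning facts reachable within max_hops of start."""
        facts, seen = [], {start}
        frontier = deque([(start, 0)])
        while frontier:
            node, depth = frontier.popleft()
            if depth == max_hops:
                continue                      # hop budget exhausted for this branch
            for rel, obj in self.edges[node]:
                facts.append((node, rel, obj))
                if obj not in seen:           # avoid revisiting entities in cycles
                    seen.add(obj)
                    frontier.append((obj, depth + 1))
        return facts
```

A question like "Which city does Alice work in?" needs two hops (Alice → employer → city), which a flat similarity search over isolated sentences may miss.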

3. Personalization, Human-Like Recall, and Adaptivity

Persistent memory directly enables advanced forms of agent personalization and lifelong learning.

- Dynamic Human-like Recall: Models quantify memory consolidation with a temporal decay that is modulated by recall relevance and frequency, emulating psychological memory-retention curves. Contextual relevance (cosine similarity), time since the event, and recall frequency jointly determine how accessible a memory is (Hou et al., 2024).

- Prospective and Retrospective Reflection: Reflective Memory Management (RMM) uses prospective reflection to build memory at adaptive granularities (utterance, turn, session, or topic) and retrospective reflection to refine retrieval, applying online reinforcement learning to rerank memories according to which ones the generated response cites (Tan et al., 11 Mar 2025).

- Self-Organizing, Cognitive-Inspired Architectures: Nemori autonomously segments conversational streams into semantically aligned episodes (Boundary Alignment) and continually updates semantic knowledge via active prediction-calibration loops based on the Free-Energy Principle. Prediction discrepancies (prediction gaps) drive the integration of new knowledge, moving beyond passive fact extraction (Nan et al., 5 Aug 2025).

- Hierarchical, Modular Structures: MIRIX employs six distinct memory types (Core, Episodic, Semantic, Procedural, Resource, Knowledge Vault), each managed by a dedicated agent and coordinated by a meta memory controller. This structure supports both multimodal inputs and task-specific persistence (Wang et al., 10 Jul 2025).
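The interplay of relevance, elapsed time, and recall frequency described under Dynamic Human-like Recall can be illustrated with an Ebbinghaus-style score. This is an assumed formulation for illustration, not the equation of Hou et al. (2024): accessibility = relevance × exp(−Δt / S), where the strength S = base_strength × (1 + recall count) grows with each recall. The 24-hour base strength and hour units are arbitrary assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    last_recalled: float      # time of last recall, in hours (assumed unit)
    recall_count: int = 0


def accessibility(mem: Memory, relevance: float, now: float, base_strength: float = 24.0) -> float:
    """relevance (e.g., cosine similarity) times exp(-dt / S); S grows with recall count,
    so frequently recalled memories fade more slowly."""
    strength = base_strength * (1 + mem.recall_count)
    return relevance * math.exp(-(now - mem.last_recalled) / strength)


def recall(mem: Memory, now: float) -> None:
    """Consolidation: recalling a memory resets its clock and increases its strength."""
    mem.recall_count += 1
    mem.last_recalled = now
```

Two equally relevant memories stored at the same time diverge after two days: one never recalled decays to exp(−2) of its relevance, while one recalled three times (S = 96 hours) retains exp(−0.5).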

4. Collaborative and Multi-Agent Memory

Persistent memory mechanisms have evolved to support multi-agent, multi-user systems, with explicit attention to access control, sharing, and provenance.

- Collaborative Memory Framework: Memory is partitioned into private (user-specific) and shared (cross-user) tiers. Bipartite graphs encode dynamic user-agent-resource permissions, enforcing both read and write access policies and storing immutable provenance for full auditability (Rezazadeh et al., 23 May 2025).

- Memory Sharing and Collective Intelligence: In the Memory Sharing framework, agents contribute real-time prompt-answer (PA) pairs, which are filtered and scored before being added to a growing shared pool. Drawing on one another's memories lets the agents improve collectively (Gao et al., 2024).

- Domain and Popularity Effects: In recommendation settings, AgentCF++ explicitly separates domain-specific memories from fused cross-domain memories and adds interest-group and group-shared memory to model popularity effects among users with similar traits (Liu et al., 19 Feb 2025).
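The private and shared tiers, permission checks, and provenance described in the Collaborative Memory bullet can be sketched as follows. This is a simplified, hypothetical illustration, not the framework of Rezazadeh et al.: permissions are reduced to (user, agent) read and write edges, omitting the agent-resource side of the bipartite graph, and provenance immutability is approximated with frozen dataclasses in an append-only list.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Provenance:
    """Immutable record of who wrote a fragment, through which agent, and when."""
    user: str
    agent: str
    timestamp: str


@dataclass(frozen=True)
class Fragment:
    text: str
    tier: str                 # "private" or "shared"
    owner: str
    provenance: Provenance


class CollaborativeMemory:
    def __init__(self):
        self.read_edges = set()    # (user, agent) pairs allowed to read
        self.write_edges = set()   # (user, agent) pairs allowed to write
        self.fragments = []        # append-only log of Fragment objects

    def grant(self, user: str, agent: str, read: bool = True, write: bool = False) -> None:
        if read:
            self.read_edges.add((user, agent))
        if write:
            self.write_edges.add((user, agent))

    def write(self, user: str, agent: str, text: str, tier: str = "private") -> Fragment:
        """Write policy: only permitted (user, agent) pairs may add fragments."""
        if (user, agent) not in self.write_edges:
            raise PermissionError(f"{agent} may not write on behalf of {user}")
        prov = Provenance(user, agent, datetime.now(timezone.utc).isoformat())
        frag = Fragment(text, tier, user, prov)
        self.fragments.append(frag)
        return frag

    def read(self, user: str, agent: str) -> list[str]:
        """Read policy: shared fragments plus the caller's own private fragments."""
        if (user, agent) not in self.read_edges:
            return []
        return [f.text for f in self.fragments if f.tier == "shared" or f.owner == user]
```

Because every fragment carries its provenance, an audit can later answer which agent wrote a given memory on whose behalf.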

5. Empirical Results, Benchmarks, and Evaluation

Significant empirical progress has validated the benefits of persistent memory mechanisms.

- Memory-Augmented RL Agents: In navigation and goal-directed RL tasks (e.g., WebShop, WikiHow), REMEMBERER yields +2–4% higher success rates and far greater robustness compared to ReAct and baseline LLMs, requiring orders-of-magnitude fewer training steps (Zhang et al., 2023).

- Long-Context and Conversational Tasks: Mem0 reports up to 26% relative improvement on LLM-as-a-judge metrics, 91% lower p95 latency, and more than 90% token cost reduction relative to full-context approaches (Chhikara et al., 28 Apr 2025), and MIRIX likewise reports state-of-the-art performance (Wang et al., 10 Jul 2025). Nemori maintained superior accuracy with about 90% token reduction on the LoCoMo and LongMemEval benchmarks at context lengths exceeding 100K tokens (Nan et al., 5 Aug 2025).

- Memory Management Impact: Systematic studies show that selective addition and deletion improve long-term agent performance by up to 10% and mitigate the risks of error propagation and misaligned experience reuse (Xiong et al., 21 May 2025).

- Comprehensive Evaluation: MemBench introduces a multi-aspect benchmark framework covering factual and reflective memory, multiple interaction scenarios, and diverse metrics (accuracy, efficiency, capacity), revealing trade-offs between memory size, retrieval efficiency, and contextual coherence (Tan et al., 20 Jun 2025).

6. Security, Privacy, and Operational Challenges

Persistent memory introduces new privacy and operational concerns.

- Privacy Leaks via Memory Retrieval: The MEXTRA attack demonstrates that stored memory records (demonstration queries) can be exfiltrated by black-box prompt attacks, exploiting the retrieval mechanisms of LLM agents (Wang et al., 17 Feb 2025). Vulnerability depends on similarity functions, embedding models, memory size, retrieval depth, and LLM backbone.

- Mitigations: Defensive strategies include hardcoded system prompt filtering, memory de-identification, user/session-level isolation, and robust access control for both storage and output (Wang et al., 17 Feb 2025, Rezazadeh et al., 23 May 2025).

- Risks of Poor Management: Ill-designed memory management (e.g., unbounded growth or indiscriminate retention) can cause error proliferation, exhaust memory capacity, and degrade robustness under distribution shift or resource scarcity (Xiong et al., 21 May 2025, Tan et al., 20 Jun 2025).
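Two of the mitigations listed above, de-identification before storage and per-user isolation at retrieval, can be sketched in a few lines. The regular expressions are illustrative and far from exhaustive, keyword overlap stands in for embedding similarity, and the names `deidentify` and `IsolatedMemory` are hypothetical; this does not reproduce any defense evaluated by Wang et al. or Rezazadeh et al.

```python
import re

# Illustrative patterns only; production PII detection needs far broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def deidentify(text: str) -> str:
    """Replace obvious identifiers with placeholders before a record is stored."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


class IsolatedMemory:
    """Each user's records live in a separate namespace; retrieval never crosses it."""

    def __init__(self):
        self.stores: dict[str, list[str]] = {}

    def add(self, user_id: str, text: str) -> None:
        self.stores.setdefault(user_id, []).append(deidentify(text))

    def retrieve(self, user_id: str, query: str) -> list[str]:
        """Keyword-overlap search restricted to the caller's own namespace."""
        words = set(query.lower().split())
        return [t for t in self.stores.get(user_id, []) if words & set(t.lower().split())]
```

Even if an extraction prompt succeeds, it can only surface the attacker's own namespace, and any stored text has already had obvious identifiers masked.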

7. Practical Applications and Future Directions

Persistent memory has immediate implications for a broad array of intelligent systems.

- Production-Ready Agents: Hierarchically managed memory—such as in Mem0 and MemoryOS—supports efficient, context-rich dialogue over extended sessions, with applications in customer service, personal assistants, and automated research workflows (Chhikara et al., 28 Apr 2025, Kang et al., 30 May 2025).

- Scientific and Tool-Oriented Agents: SciBORG uses finite-state automaton (FSA) memory, enabling reliable execution of complex scientific and automation workflows in which persistent state tracking is critical (Muhoberac et al., 30 Jun 2025).

- Multimodal and Real-Time Systems: MIRIX supports multimodal (e.g., screenshot-based) input and real-time monitoring, using a modular agent ensemble to maintain rich, persistent user memory across domains and formats (Wang et al., 10 Jul 2025).

- Versioned and Branchable Context: Git-Context-Controller introduces milestone-based memory checkpointing, branching, and merging, mirroring software version control for agent context. This supports reflection, error recovery, and collaborative, long-horizon workflows (Wu, 30 Jul 2025).

- Cognitively Inspired, Self-Evolving Agents: Nemori and other cognitively inspired systems are advancing the self-organization and continual adaptation of agent memory, a capability central to future lifelong-learning agents (Nan et al., 5 Aug 2025).
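Milestone-based checkpointing, branching, and merging of agent context, as described in the Versioned and Branchable Context bullet, can be sketched with a tiny commit graph. This mirrors only the version-control idea; the `ContextRepo` class, its method names, and the first-parent `log` used to reload context are assumptions, not Git-Context-Controller's actual interface.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Commit:
    summary: str                              # milestone summary of the context so far
    parent: Optional["Commit"] = None
    merged_from: Optional["Commit"] = None    # second parent when a branch is merged


class ContextRepo:
    def __init__(self) -> None:
        self.branches = {"main": None}        # branch name -> latest Commit (or None)
        self.head = "main"

    def commit(self, summary: str) -> Commit:
        """Checkpoint a milestone on the current branch."""
        c = Commit(summary, parent=self.branches[self.head])
        self.branches[self.head] = c
        return c

    def branch(self, name: str) -> None:
        """Start an exploratory line of work from the current head and switch to it."""
        self.branches[name] = self.branches[self.head]
        self.head = name

    def checkout(self, name: str) -> None:
        self.head = name

    def merge(self, source: str, summary: str) -> Commit:
        """Fold a finished branch back into the current branch with a merge commit."""
        c = Commit(summary, parent=self.branches[self.head], merged_from=self.branches[source])
        self.branches[self.head] = c
        return c

    def log(self, name: Optional[str] = None) -> list[str]:
        """First-parent history, newest first: the context an agent would reload."""
        node, out = self.branches[name or self.head], []
        while node is not None:
            out.append(node.summary)
            node = node.parent
        return out
```

A failed exploration can simply be abandoned on its branch, which gives error recovery without polluting the main line of context.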
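State tracking with a finite-state automaton, as in the SciBORG bullet, can be sketched as a transition table whose current state acts as the agent's persistent memory of workflow progress. The states, actions, and `WorkflowFSA` class are hypothetical and not taken from SciBORG; the point is that invalid actions are rejected instead of being executed out of order.

```python
class WorkflowFSA:
    """Hypothetical finite-state memory: the current state records workflow progress."""

    TRANSITIONS = {
        ("idle", "load_sample"): "sample_loaded",
        ("sample_loaded", "run_measurement"): "measured",
        ("measured", "save_results"): "done",
    }

    def __init__(self, state: str = "idle"):
        self.state = state
        self.history: list[str] = [state]

    def step(self, action: str) -> bool:
        """Apply an action if the table allows it from the current state."""
        nxt = self.TRANSITIONS.get((self.state, action))
        if nxt is None:
            return False          # invalid for the current state: refuse rather than guess
        self.state = nxt
        self.history.append(nxt)
        return True
```

Persisting only `state` (and optionally `history`) lets an agent resume a long workflow after an interruption by constructing `WorkflowFSA(state=saved_state)`.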

Persistent memory for LLM agents is thus characterized by structured, dynamic, and context-sensitive organization; principled update and retrieval mechanisms; explicit attention to privacy and access control; and scalability to individual and collaborative, multimodal, multi-session environments. Continued convergence of cognitive science, reinforcement learning, memory architecture design, and rigorous benchmarking is expected to further improve the robustness, generality, and real-world impact of LLM-driven agents.