- The paper introduces Nemori, a dual-memory system built on the Two-Step Alignment and Predict-Calibrate principles to improve long-term memory retention.

- The approach transforms linear conversation processing into structured narrative episodes and achieves superior results on temporal reasoning benchmarks.

- Experimental results demonstrate Nemori's efficiency and scalability: it handles dialogues of over 105K tokens effectively and outperforms existing systems.

Nemori: Self-Organizing Agent Memory Inspired by Cognitive Science

Introduction

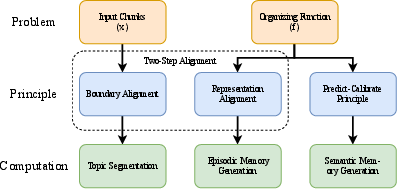

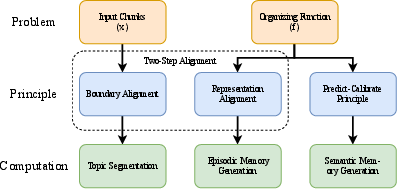

Large language models (LLMs) struggle to maintain continuity across long interactions, which is a significant obstacle to their use in autonomous agents. Nemori addresses this limitation with a novel memory architecture grounded in principles of human cognition, enabling effective long-term interactions. The architecture rests on two principles: the Two-Step Alignment Principle and the Predict-Calibrate Principle. Together, they turn the linear processing of conversations into a dynamic memory system capable of adaptive learning.

Two-Step Alignment Principle

The Two-Step Alignment Principle addresses the problem of memory granularity by segmenting conversations into coherent, meaningful episodes. It is operationalized through two steps: Boundary Alignment and Representation Alignment. For Boundary Alignment, Nemori employs a semantic boundary detector that splits the conversational flow at semantically significant junctures.
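The boundary-alignment loop can be sketched as follows. Nemori's detector is semantic; this sketch substitutes a much cruder signal, bag-of-words cosine similarity between each new message and the running episode, purely to show the control flow. The names `segment` and `cosine` and the `threshold` value are hypothetical, not taken from the paper.

```python
import math
import re
from collections import Counter


def _bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a crude stand-in for a semantic representation."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def segment(messages: list[str], threshold: float = 0.2) -> list[list[str]]:
    """Split a message stream into episodes wherever topical similarity drops.

    A boundary is placed before a message when its similarity to the words of
    the current episode falls below `threshold` (a hypothetical tuning knob).
    """
    episodes: list[list[str]] = []
    current: list[str] = []
    current_bow: Counter = Counter()
    for msg in messages:
        bow = _bag_of_words(msg)
        if current and cosine(current_bow, bow) < threshold:
            # Similarity dropped: close the current episode and start a new one.
            episodes.append(current)
            current, current_bow = [], Counter()
        current.append(msg)
        current_bow.update(bow)  # accumulate the episode's vocabulary
    if current:
        episodes.append(current)
    return episodes
```

For example, `segment(["planning the trip to Tokyo", "the Tokyo trip is in May", "my cat is sick", "the sick cat needs a vet"])` groups the two travel messages into one episode and the two cat messages into another. Replacing `cosine` with an embedding similarity or an LLM judgment would keep the same loop.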

Figure 1: The conceptual framework of Nemori, illustrating the mapping from problem to principle to computation.

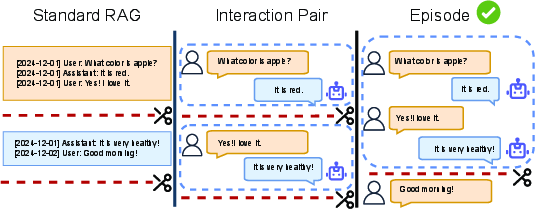

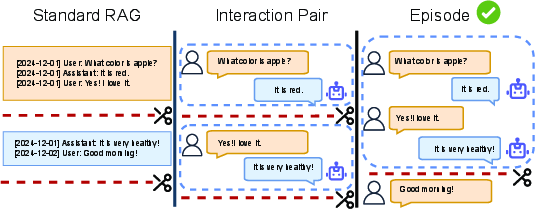

Representation Alignment follows, transforming each segment into a structured narrative episode stored in Episodic Memory. Much like human episodic memory, this narrative form preserves semantic integrity and contextual information. By combining boundary detection with narrative generation, Nemori avoids the pitfalls of the arbitrary segmentation used in existing systems (Figure 2).

Figure 2: An illustration of different conversation segmentation methods, highlighting the superiority of episodic segmentation.
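Representation Alignment can be pictured as turning each segment into a record with a title, a narrative, and a time span. The `Episode` fields, `to_episode`, and `naive_narrator` below are hypothetical; the source describes narrative generation but not its interface, so the placeholder narrator only stands in for that step (likely an LLM call in practice).

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Callable


@dataclass
class Message:
    speaker: str
    text: str
    timestamp: datetime


@dataclass
class Episode:
    """A hypothetical episodic-memory record; field names are illustrative."""
    title: str
    narrative: str
    start: datetime
    end: datetime
    messages: list[Message] = field(default_factory=list)


def naive_narrator(messages: list[Message]) -> tuple[str, str]:
    """Placeholder for narrative generation: title from the first message,
    narrative as a plain reported-speech rendering of every message."""
    title = messages[0].text[:40]
    narrative = " ".join(f"{m.speaker} said: {m.text}" for m in messages)
    return title, narrative


def to_episode(
    segment: list[Message],
    narrator: Callable[[list[Message]], tuple[str, str]] = naive_narrator,
) -> Episode:
    """Turn one segment produced by boundary alignment into a structured episode."""
    if not segment:
        raise ValueError("cannot build an episode from an empty segment")
    title, narrative = narrator(segment)
    return Episode(
        title=title,
        narrative=narrative,
        start=min(m.timestamp for m in segment),
        end=max(m.timestamp for m in segment),
        messages=list(segment),
    )
```

Passing the narrator as a parameter keeps the generation step swappable, so a real summarizer can replace `naive_narrator` without touching the episode structure.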

Predict-Calibrate Principle

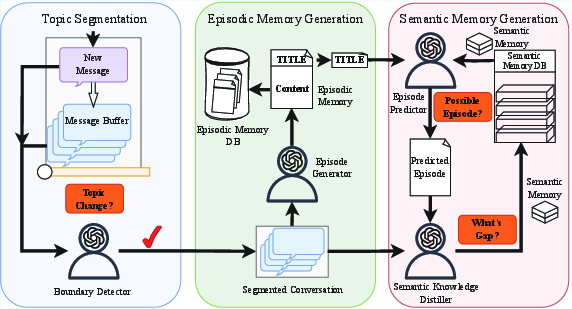

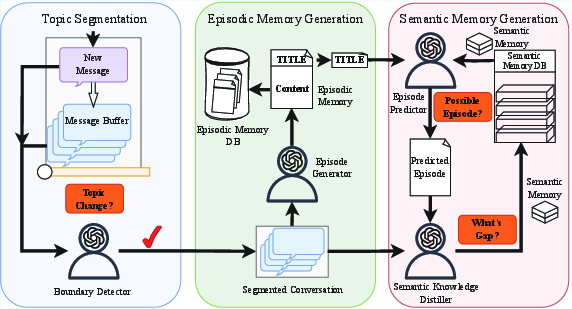

Nemori extends its memory organization through the Predict-Calibrate Principle, inspired by the Free-Energy Principle. This principle enables proactive learning from prediction gaps, much like error-driven learning in human cognition. It involves three stages: Prediction, Calibration, and Integration.

- Prediction: Relevant memories are retrieved to predict the content of a new episode.

- Calibration: Discrepancies between predicted and actual conversations are analyzed, and new semantic knowledge is distilled from these gaps.

- Integration: New insights are integrated into the semantic memory, refining the system's knowledge base iteratively.

Repeating this predict-calibrate cycle for every new episode lets Nemori continuously refine its semantic memory and learn from each interaction.
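The three stages can be sketched as a single update step. This is a toy model under strong assumptions: semantic memory is a set of fact strings, an episode is already reduced to a set of fact strings, retrieval is word overlap with a cue, and the prediction gap is plain set difference. Nemori's actual prediction and knowledge distillation are far richer, and all names here are hypothetical.

```python
import re


def _words(text: str) -> set[str]:
    """Lowercased set of word tokens."""
    return set(re.findall(r"[a-z']+", text.lower()))


def predict(semantic_memory: set[str], cue: str) -> set[str]:
    """Prediction: retrieve facts sharing a word with the episode cue and
    treat them as the expected content of the new episode."""
    cue_words = _words(cue)
    return {fact for fact in semantic_memory if _words(fact) & cue_words}


def calibrate(predicted: set[str], actual: set[str]) -> set[str]:
    """Calibration: the gap is what the episode contains but the prediction
    missed; exact string matching stands in for a semantic comparison."""
    return actual - predicted


def integrate(semantic_memory: set[str], new_knowledge: set[str]) -> set[str]:
    """Integration: return an updated semantic memory that includes the new knowledge."""
    return semantic_memory | new_knowledge


def predict_calibrate(
    semantic_memory: set[str], cue: str, episode_facts: set[str]
) -> tuple[set[str], set[str]]:
    """Run one full cycle; returns (updated memory, distilled gap)."""
    predicted = predict(semantic_memory, cue)
    gap = calibrate(predicted, episode_facts)
    return integrate(semantic_memory, gap), gap


if __name__ == "__main__":
    memory = {"alice lives in berlin", "alice works as a nurse"}
    facts = {"alice lives in berlin", "alice adopted a dog"}
    memory, gap = predict_calibrate(memory, "Alice talks about Berlin", facts)
    print(sorted(gap))  # ['alice adopted a dog']
```

Running the same episode through a second time yields an empty gap, which mirrors the idea that only unpredicted content drives new learning.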

Figure 3: The Nemori system features modules that collaboratively enhance episodic and semantic memory generation.

Experimental Results

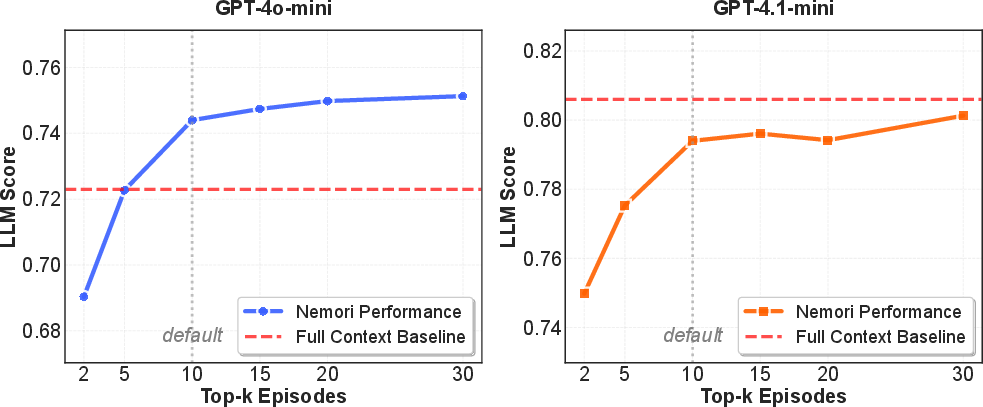

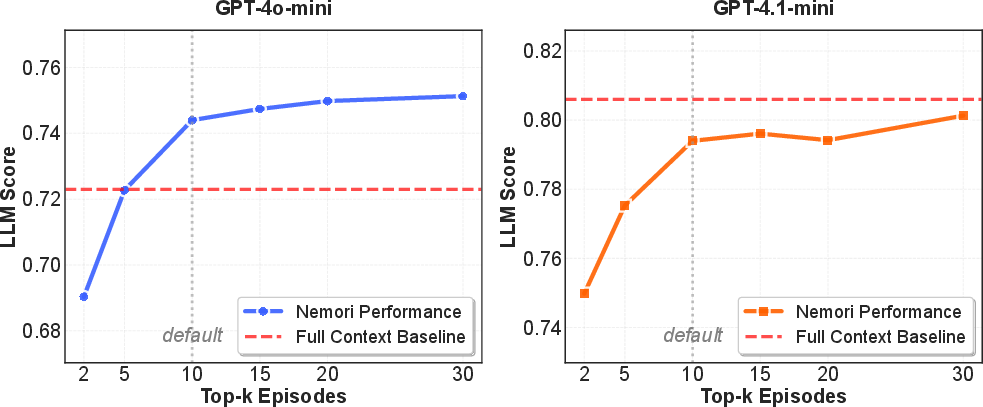

Nemori significantly outperformed existing systems on benchmarks such as LoCoMo and LongMemEval_S, particularly on tasks requiring temporal reasoning. Detailed comparisons show substantial advantages in long-term memory retention and query understanding:

- Efficiency: Nemori outperforms the Full Context baseline while using substantially less input, because it supplies the LLM with only the most relevant retrieved memories instead of the entire dialogue history.

- Robustness in Large Contexts: Nemori handles dialogues of 105K tokens effectively, demonstrating scalability to extensive interactions.

Nemori's dual-memory system coordinates the organization of episodic and semantic memory effectively, and this two-pillar framework is what lets it outperform the comparison systems. The evidence points to a transformative approach to LLM memory management.
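The efficiency result rests on giving the LLM only a few retrieved episodes rather than the full history, and Figure 4 varies exactly this top-k setting. A minimal sketch, assuming Jaccard word overlap as the similarity measure (a stand-in; the retrieval method is not specified here) and hypothetical names `top_k_episodes` and `build_context`:

```python
import heapq
import re


def _words(text: str) -> set[str]:
    """Lowercased set of word tokens."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity |a ∩ b| / |a ∪ b|; 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def top_k_episodes(query: str, narratives: list[str], k: int = 2) -> list[str]:
    """Return the k episode narratives most similar to the query, best first."""
    q = _words(query)
    # nlargest(k, ...) matches sorted(..., reverse=True)[:k], so ties keep input order.
    return heapq.nlargest(k, narratives, key=lambda n: jaccard(q, _words(n)))


def build_context(query: str, narratives: list[str], k: int = 2) -> str:
    """Join only the retrieved episodes into the prompt context, not the full history."""
    return "\n".join(top_k_episodes(query, narratives, k))


if __name__ == "__main__":
    episodes = [
        "Alice moved to Berlin in May",
        "Bob adopted a dog named Rex",
        "Alice started a nursing job in Berlin",
    ]
    print(build_context("Where does Alice live in Berlin?", episodes, k=2))
    # Alice moved to Berlin in May
    # Alice started a nursing job in Berlin
```

The context length now scales with k rather than with the length of the conversation, which is the trade-off that a top-k sweep like Figure 4 explores.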

Conclusion

Nemori offers a scalable solution to the long-term memory problems faced by LLM-based autonomous agents. By incorporating principles drawn from human cognition, it provides a versatile template for future AI memory systems: a dynamic, self-optimizing memory that supports complex, temporally extended interactions without excessive computational burden.

The principles and framework established by Nemori offer a methodology with broad implications for autonomous LLM applications, pointing toward more nuanced, context-sensitive memory architectures.

Figure 4: Impact of the number of retrieved episodes (top-k) on LLM score across different models, showing strong performance with minimal input.