- The paper shows that Tiny Recursive Models (TRM) depend strictly on task-specific puzzle-identity conditioning: replacing the correct puzzle ID with a blank or random token drops accuracy to zero.

- The study shows that test-time compute, namely augmentation and majority-vote ensembling, raises Pass@1 accuracy by 10.75 percentage points over single-pass inference.

- The analysis shows that TRM's recursion is effectively shallow at inference, and that its non-autoregressive design delivers higher throughput and lower memory usage than a QLoRA-fine-tuned Llama 3 8B.

Tiny Recursive Models on ARC-AGI-1: Empirical Analysis and Observations

Abstract

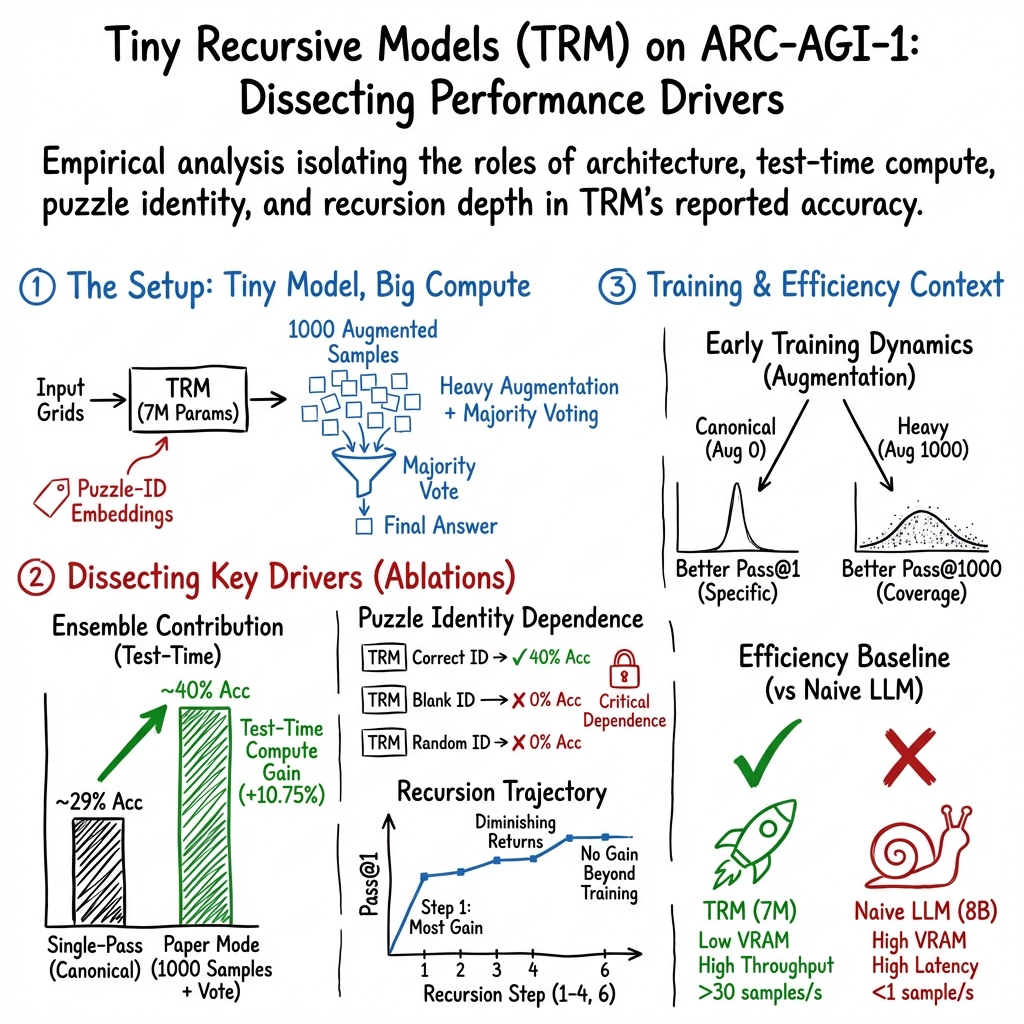

The paper "Tiny Recursive Models on ARC-AGI-1: Inductive Biases, Identity Conditioning, and Test-Time Compute" (2512.11847) compares Tiny Recursive Models (TRM) with LLMs on tasks in the style of the Abstraction and Reasoning Corpus (ARC). It investigates how much of TRM's performance stems from its architecture, from test-time compute strategies, and from task-specific priors.

Introduction

TRM offers a parameter-efficient alternative to large-scale LLMs for solving ARC-style tasks. The paper critically analyzes TRM's performance along four axes: test-time augmentation and ensembling, conditioning on task (puzzle) identifiers, the trajectory of predictions across recursion steps, and efficiency relative to an LLM baseline, Llama 3 8B. It also examines how augmentation during training shapes the distribution of predicted solutions.

Behavioral Findings and Efficiency Comparison

Role of Test-Time Compute

A core finding is that a substantial share of TRM's reported accuracy comes from test-time compute, specifically augmentation and ensembling. Majority-vote ensembling over a 1000-sample voting pipeline raises Pass@1 accuracy by 10.75 percentage points relative to single-pass inference, so TRM's headline results should be read as the output of the full voting pipeline rather than of a single forward pass.
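The voting pipeline can be sketched as follows. This is a minimal illustration, not the paper's implementation: it assumes the augmentations are the eight dihedral transforms of the grid combined with a random permutation of colors 1-9 (background color 0 held fixed), and the names `augment`, `invert`, and `vote` are hypothetical. Each augmented input goes through the model, the prediction is mapped back to the original frame, and identical grids are pooled into a vote count; Pass@1 takes the top-ranked grid.

```python
import random
from collections import Counter
from typing import Callable, List, Tuple

Grid = Tuple[Tuple[int, ...], ...]  # rows of ARC color indices 0-9


def rotate90(g: Grid) -> Grid:
    """Rotate a grid 90 degrees clockwise."""
    return tuple(zip(*g[::-1]))


def transpose(g: Grid) -> Grid:
    return tuple(zip(*g))


def recolor(g: Grid, perm: List[int]) -> Grid:
    return tuple(tuple(perm[c] for c in row) for row in g)


def augment(g: Grid, k: int, flip: bool, perm: List[int]) -> Grid:
    """Optional transpose, then k clockwise rotations, then a color permutation."""
    if flip:
        g = transpose(g)
    for _ in range(k):
        g = rotate90(g)
    return recolor(g, perm)


def invert(g: Grid, k: int, flip: bool, perm: List[int]) -> Grid:
    """Undo augment() by applying the inverse steps in reverse order."""
    inv = [0] * len(perm)
    for src, dst in enumerate(perm):
        inv[dst] = src
    g = recolor(g, inv)
    for _ in range((4 - k) % 4):
        g = rotate90(g)
    return transpose(g) if flip else g


def vote(model: Callable[[Grid], Grid], x: Grid,
         n_samples: int = 1000, seed: int = 0) -> List[Tuple[Grid, int]]:
    """Return candidate grids ranked by vote count (index 0 is the Pass@1 answer)."""
    rng = random.Random(seed)
    votes: Counter = Counter()
    for i in range(n_samples):
        k, flip = i % 4, (i // 4) % 2 == 1        # cycle through the 8 dihedral transforms
        perm = [0] + rng.sample(range(1, 10), 9)  # assumption: color 0 is never permuted
        pred = model(augment(x, k, flip, perm))
        votes[invert(pred, k, flip, perm)] += 1   # vote in the original frame
    return votes.most_common()


if __name__ == "__main__":
    x = ((1, 0, 2), (0, 3, 0))
    calls = {"n": 0}

    def flaky_model(g: Grid) -> Grid:
        # Toy "solver" for an identity task that returns an all-zero grid on every third call.
        calls["n"] += 1
        return g if calls["n"] % 3 else tuple(tuple(0 for _ in row) for row in g)

    print(vote(flaky_model, x, n_samples=30)[:2])
```

With the toy model, which errs on one call in three, the vote ranks the original grid first with 20 of 30 votes, ahead of the all-zero grid with 10. Mapping every prediction back to the original frame before counting is what lets predictions made on differently augmented inputs agree.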

Puzzle Identity Conditioning

The paper highlights TRM's strict dependence on task identifiers. In a puzzle-identity ablation, replacing the correct puzzle ID with a blank or random token drops accuracy to zero. This underscores the central role of task-specific embeddings in TRM's architecture and suggests that identity tokens act as keys that retrieve task-specific computational behavior.
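The ablation protocol can be sketched with a deliberately crude toy. In TRM the puzzle ID indexes a learned embedding; here a dictionary from ID to a grid transformation stands in for that embedding, which makes the "identity token as key" reading explicit. The names `BEHAVIORS`, `ablate`, and `accuracy` and the reserved `BLANK_ID` are hypothetical. Because the toy retrieves behavior purely by ID, blank and random IDs score zero by construction: the sketch shows the shape of the experiment, not evidence for its outcome.

```python
import random
from typing import Callable, Dict, List, Tuple

Grid = Tuple[Tuple[int, ...], ...]
Task = Tuple[int, Grid, Grid]  # (puzzle_id, input grid, expected output grid)
BLANK_ID = 0  # hypothetical reserved ID standing in for a blank puzzle token


def flip_rows(g: Grid) -> Grid:
    return g[::-1]


def flip_cols(g: Grid) -> Grid:
    return tuple(row[::-1] for row in g)


def transpose(g: Grid) -> Grid:
    return tuple(zip(*g))


# Toy stand-in for learned puzzle embeddings: each ID "retrieves" one behavior.
BEHAVIORS: Dict[int, Callable[[Grid], Grid]] = {
    BLANK_ID: lambda g: g,  # a blank token retrieves no task-specific behavior
    1: flip_rows,
    2: flip_cols,
    3: transpose,
}


def toy_model(g: Grid, puzzle_id: int) -> Grid:
    return BEHAVIORS[puzzle_id](g)


def ablate(puzzle_id: int, mode: str, rng: random.Random) -> int:
    """Return the ID actually fed to the model under each ablation condition."""
    if mode == "correct":
        return puzzle_id
    if mode == "blank":
        return BLANK_ID
    if mode == "random":  # some other real puzzle's ID
        return rng.choice([p for p in BEHAVIORS if p not in (puzzle_id, BLANK_ID)])
    raise ValueError(f"unknown mode: {mode}")


def accuracy(tasks: List[Task], mode: str, seed: int = 0) -> float:
    rng = random.Random(seed)
    hits = sum(toy_model(x, ablate(pid, mode, rng)) == y for pid, x, y in tasks)
    return hits / len(tasks)


if __name__ == "__main__":
    x = ((1, 2), (3, 4))
    tasks = [(pid, x, BEHAVIORS[pid](x)) for pid in (1, 2, 3)]
    for mode in ("correct", "blank", "random"):
        print(mode, accuracy(tasks, mode))
```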

Recursion Trajectory Analysis

The study finds that TRM achieves most of its accuracy at the first recursion step, and performance saturates after a few latent updates. Although TRM is trained with deep supervision, its recursion is therefore effectively shallow at inference. This suggests that the recursive depth mainly refines an initial mapping rather than carrying out deep iterative reasoning.
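A per-step accuracy curve of this kind can be measured by decoding the answer after every outer recursion step. The sketch below assumes, as a simplification, an update order in which a latent `z` is refined several times from the input `x` and the current answer `y`, after which `y` is updated from `z`. The scalar update functions `f` and `g`, the toy task, and the helper names are hypothetical; only the measurement loop is the point.

```python
from typing import Callable, List, Tuple

State = Tuple[float, float]  # (y, z): current answer and latent, scalars in this toy
Example = Tuple[float, int]  # (input x, integer target)


def recursion_step(x: float, state: State,
                   f: Callable[[float, float, float], float],
                   g: Callable[[float, float], float], n_latent: int) -> State:
    y, z = state
    for _ in range(n_latent):
        z = f(x, y, z)  # refine the latent from input, answer, and latent
    return g(y, z), z   # then update the answer from the latent


def accuracy_per_step(examples: List[Example], f, g, decode: Callable[[float], int],
                      n_steps: int, n_latent: int = 3) -> List[float]:
    """Accuracy after each outer recursion step (entry 0 is after step 1)."""
    states: List[State] = [(0.0, 0.0)] * len(examples)
    curve = []
    for _ in range(n_steps):
        states = [recursion_step(x, s, f, g, n_latent)
                  for (x, _), s in zip(examples, states)]
        hits = sum(decode(y) == t for (_, t), (y, _) in zip(examples, states))
        curve.append(hits / len(examples))
    return curve


def saturation_step(curve: List[float], tol: float = 1e-9) -> int:
    """First 1-based step whose accuracy is within tol of the final step's accuracy."""
    final = curve[-1]
    return next(i + 1 for i, acc in enumerate(curve) if acc >= final - tol)


if __name__ == "__main__":
    # Toy task: target is 2x; each latent update moves z halfway toward 2x, and y copies z.
    examples = [(x, 2 * x) for x in (1, 3, 5, 7)]
    f = lambda x, y, z: z + 0.5 * (2 * x - z)
    g = lambda y, z: z
    curve = accuracy_per_step(examples, f, g, decode=round, n_steps=4)
    print(curve, saturation_step(curve))
```

In this toy the curve is [0.25, 1.0, 1.0, 1.0] and saturates at step 2. Run against a real model's decoded outputs, the same loop would show whether accuracy is concentrated in the first step, as the paper reports for TRM.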

Training Dynamics Under Augmentation

Experiments comparing canonical and augmented training regimes show that heavy augmentation first broadens the distribution of predicted solutions and improves multi-sample metrics, and only later improves single-pass accuracy. This suggests that TRM uses the diversity of augmented training examples to generalize across augmented inputs, with the benefit appearing in the candidate pool before it appears in the single top prediction.
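To make the gap between single-pass and multi-sample metrics concrete, the sketch below computes two common variants; the paper's exact metric definitions may differ. `pass_at_k` is the standard unbiased estimator 1 - C(n-c, k) / C(n, k) for n samples of which c are correct, and `solved_in_top_k` is a vote-based variant that checks whether the target is among the k most-voted candidates. Both function names are hypothetical.

```python
from collections import Counter
from math import comb
from typing import Hashable


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased estimate of P(at least one of k draws is correct), given n samples with c correct."""
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def solved_in_top_k(votes: Counter, target: Hashable, k: int) -> bool:
    """Vote-based variant: is the target among the k most-voted candidates?"""
    return any(cand == target for cand, _ in votes.most_common(k))


if __name__ == "__main__":
    # A spread-out vote: the correct answer "B" is a strong runner-up but not the mode.
    votes = Counter({"A": 5, "B": 4, "C": 1})
    print(solved_in_top_k(votes, "B", 1), solved_in_top_k(votes, "B", 2))  # False True
    print(pass_at_k(10, 4, 1), pass_at_k(10, 4, 2))
```

The vote example shows how a broader solution distribution can register as a multi-sample hit while still being a single-pass miss.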

Efficiency and LLM Baseline Comparison

TRM's efficiency is compared with a naive QLoRA fine-tuning of Llama 3 8B. TRM's non-autoregressive design achieves significantly higher throughput and lower memory usage than this much larger transformer. This makes TRM well suited to pipelines that require extensive sampling, such as the 1000-sample voting pipeline, without a prohibitive computational cost.
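One structural reason for the throughput gap can be shown with simple step counting. An autoregressive LLM emits an output grid one token at a time, so its sequential decoding steps grow with grid area; a non-autoregressive recursive model updates every cell in parallel, so its sequential network calls depend only on its recursion hyperparameters. The serialization (one token per cell plus one separator per row) and the hyperparameter names `n_sup`, `t_outer`, and `n_latent` and their values are assumptions for illustration, and the counts ignore that each LLM step runs a far larger network.

```python
def autoregressive_steps(height: int, width: int, sep_per_row: int = 1) -> int:
    """Sequential decoding steps to emit one H x W grid
    (assumed serialization: one token per cell plus row separators)."""
    return height * (width + sep_per_row)


def recursive_steps(n_sup: int, t_outer: int, n_latent: int) -> int:
    """Sequential network calls for a model that refines all cells at once (hypothetical
    schedule): n_sup supervision steps, each with t_outer outer steps of n_latent latent
    updates plus one answer update."""
    return n_sup * t_outer * (n_latent + 1)


if __name__ == "__main__":
    for side in (3, 10, 30):  # ARC grids are at most 30 x 30
        print(f"{side}x{side}: autoregressive={autoregressive_steps(side, side)}, "
              f"recursive={recursive_steps(n_sup=2, t_outer=3, n_latent=6)}")
```

Under these assumptions a 30x30 output needs 930 sequential decoding steps from the LLM, versus a fixed 42 calls of the small recursive network regardless of grid size. Measured throughput also depends on batching, KV caching, and per-step cost, none of which this count models.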

Implications and Future Directions

TRM's performance on ARC-AGI-1 can be attributed to the interaction between an efficient architecture, task-specific conditioning, and aggressive test-time compute. These findings invite further research into TRM architectures and training protocols that support more generalizable reasoning. Future work may explore reducing the dependence on identity conditioning, clarifying the role of recursion depth, and extending efficient architectures to other reasoning benchmarks.

Conclusion

The examination of TRM on ARC-AGI-1 tasks shows that its success comes from a combination of efficient design, identity conditioning, and compute-intensive ensembling, rather than from internal recursive reasoning alone. With further analysis and optimization of these components, recursive models could become more effective reasoners across diverse domains.