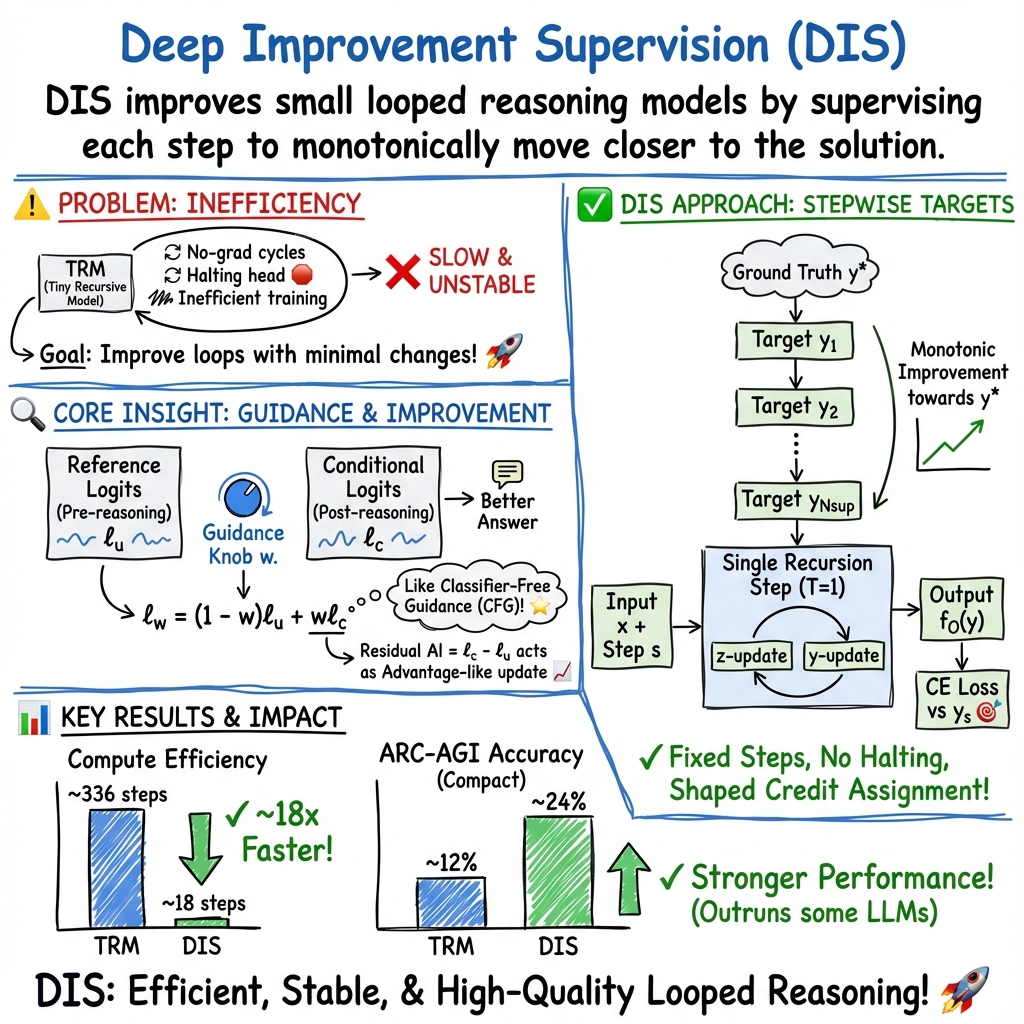

- The paper introduces Deep Improvement Supervision (DIS), a training scheme that guides recursive reasoning by giving each recursion step its own target.

- It interprets latent reasoning as implicit policy improvement, analogous to classifier-free guidance, and reports 24% accuracy on ARC-1 with only 0.8 million parameters.

- The method cuts the number of forward passes by 18x, which makes it a candidate for resource-constrained applications.

"Deep Improvement Supervision": A Technical Analysis

Introduction

The paper "Deep Improvement Supervision" (2511.16886) presents an approach to making recursive reasoning models more efficient. It focuses on Tiny Recursive Models (TRMs), which have been shown to perform well on complex reasoning tasks such as the Abstraction and Reasoning Corpus (ARC). The central question is how to improve these models with minimal changes, raising performance without increasing computational cost.

Theoretical Foundations

Model Architecture and Reasoning Process

TRMs are small looped architectures for iterative reasoning: the same network is applied repeatedly to refine its own output. This recursion plays a role similar to Chain-of-Thought (CoT) reasoning in LLMs, but with far fewer parameters. The paper examines this latent reasoning process and interprets it as a form of implicit policy improvement via classifier-free guidance (CFG).
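The refinement loop described above can be sketched as follows. This is a toy illustration, not the TRM architecture itself: the function names, the split into an answer state `y` and a latent state `z`, the single shared weight matrix `W`, and the step counts are all assumptions chosen to show the looped structure.

```python
import numpy as np

def refine(x, y, z, W, n_latent_steps=3):
    """One refinement cycle: update the latent state a few times, then the answer.

    The same weights W are reused at every step, which is what makes the
    model "looped". All shapes and update rules here are illustrative.
    """
    for _ in range(n_latent_steps):
        z = np.tanh((x + y + z) @ W)   # latent update sees input, answer, latent
    y = np.tanh((y + z) @ W)           # answer update sees answer and latent
    return y, z

def recursive_reasoning(x, W, n_cycles=4):
    """Run several refinement cycles and keep the answer after each one."""
    y = np.zeros_like(x)
    z = np.zeros_like(x)
    history = []
    for _ in range(n_cycles):
        y, z = refine(x, y, z, W)
        history.append(y.copy())
    return history

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 8))            # 5 tokens, 8 features (hypothetical sizes)
W = rng.normal(size=(8, 8)) * 0.1
answers = recursive_reasoning(x, W)
print(len(answers), answers[-1].shape)
```

Keeping the answer after every cycle matters for what follows: a training scheme that supervises each step needs access to each intermediate output, not only the last one.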

Implicit Policy Improvement

The paper frames the latent reasoning process as policy improvement, drawing a parallel between diffusion models and reinforcement learning (RL). In diffusion and flow models, Classifier-Free Guidance (CFG) can be read as a policy improvement operator: it parameterizes a target policy that provably achieves higher return than the reference policy (Frans et al., 2025). Under this view, each latent reasoning step in a TRM implicitly improves on a reference policy.
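For reference, the standard form of classifier-free guidance can be written in policy notation as below. The symbols are assumptions made for this summary and may not match the paper's notation: $\pi_{\text{ref}}$ is the reference (unconditioned) policy, $\pi_{\text{cond}}$ is the conditioned policy, $w$ is the guidance scale, and $Z_w(s)$ is a normalizing constant.

$$
\log \pi_w(a \mid s) = \log \pi_{\text{ref}}(a \mid s) + w \left[ \log \pi_{\text{cond}}(a \mid s) - \log \pi_{\text{ref}}(a \mid s) \right] - \log Z_w(s)
$$

Equivalently,

$$
\pi_w(a \mid s) \propto \pi_{\text{ref}}(a \mid s) \left( \frac{\pi_{\text{cond}}(a \mid s)}{\pi_{\text{ref}}(a \mid s)} \right)^{w}
$$

Setting $w = 0$ recovers the reference policy and $w = 1$ recovers the conditioned policy. Values $w > 1$ extrapolate past the conditioned policy by further upweighting actions that conditioning makes more likely.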

Methodology

Deep Improvement Supervision (DIS)

The paper introduces a training scheme called Deep Improvement Supervision (DIS), which constructs a specific target for each step of the recursion loop, so that the model receives structured guidance throughout training rather than only at the final output. The intermediate targets come from a discrete diffusion process. According to the paper, this reduces training complexity and improves generalization, because each reasoning step is optimized against a well-defined intermediate target.
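One way to picture step-specific targets is the sketch below. It is an assumption-heavy illustration, not the paper's procedure: it assumes the input grid `x` and solution grid `y` have the same shape, that the corruption starts from `x`, and that a linear schedule reveals a growing random subset of solution cells. The function name `make_step_targets` is hypothetical.

```python
import numpy as np

def make_step_targets(x, y, num_steps, rng):
    """Build num_steps targets that move from the input grid x to the solution y.

    Target k copies the solution into the first k/num_steps fraction of cells
    (in one fixed random order) and leaves the remaining cells as in x, so each
    target agrees with y at least as much as the previous one.
    """
    x = np.asarray(x)
    y = np.asarray(y)
    flat_x, flat_y = x.ravel(), y.ravel()
    order = rng.permutation(flat_y.size)       # fixed reveal order for all steps
    targets = []
    for k in range(1, num_steps + 1):
        n_revealed = round(flat_y.size * k / num_steps)
        target = flat_x.copy()
        target[order[:n_revealed]] = flat_y[order[:n_revealed]]
        targets.append(target.reshape(y.shape))
    return targets

rng = np.random.default_rng(0)
x = np.zeros((3, 3), dtype=int)                # toy input grid
y = np.arange(1, 10).reshape(3, 3)             # toy solution grid
for step, target in enumerate(make_step_targets(x, y, num_steps=3, rng=rng), 1):
    print(step, int((target == y).sum()), "cells match the solution")
```

During training, the output of recursion step k would be compared against target k instead of the final solution, so every step is asked for a fixed amount of improvement over the previous one.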

Mechanisms of Improvement

DIS relies on the Advantage Margin Condition to ensure that each reasoning step moves to an improved policy relative to the reference. Training is structured so that every latent update implicitly performs a policy improvement step. Stepwise guided logits add a controllable guidance scale, analogous to the one used in CFG.
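A guidance scale applied to logits can be sketched as below. This is the generic CFG-style combination, given as an assumption about the general shape of the mechanism; which two predictions serve as reference and conditioned logits in DIS is not specified in this summary, and the function names are hypothetical.

```python
import numpy as np

def guided_logits(cond_logits, ref_logits, scale):
    """CFG-style combination: start at the reference, move toward the conditioned logits.

    scale = 0 returns the reference, scale = 1 the conditioned logits,
    and scale > 1 extrapolates beyond them.
    """
    return ref_logits + scale * (cond_logits - ref_logits)

def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

ref = np.array([0.0, 0.0, 0.0])    # reference prediction: no preference
cond = np.array([1.0, 0.0, 0.0])   # conditioned prediction: prefers token 0
for scale in (0.0, 1.0, 2.0):
    probs = softmax(guided_logits(cond, ref, scale))
    print(scale, probs.round(3))
```

In this toy case the probability of token 0 grows with the scale, which is the sense in which the scale controls how strongly each step is pushed toward the improved prediction.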

Experimental Results

The paper reports that DIS substantially improves training efficiency while remaining competitive on challenging reasoning benchmarks such as ARC-AGI, where it outperforms many large-scale LLMs. Specifically, the proposed model reaches 24% accuracy on ARC-1 with only 0.8 million parameters.

Comparison and Analysis

The experiments indicate that explicit stepwise supervision lets TRMs reach higher accuracy without extensive recursive cycles or halting mechanisms. This reduces the computational burden, cutting the number of forward passes by 18x, while still delivering substantial improvements on benchmarks such as ARC-1.

Implications and Future Directions

Practical Applications

The paper argues that small recursive models trained with DIS can handle complex reasoning tasks effectively, offering a computationally efficient alternative to LLMs in applications where resources are tightly constrained.

Speculative Future Developments

Possible algorithmic extensions include adapting the number of supervision steps to task complexity and using discrete latent spaces for robustness and scalability. Other ways of generating intermediate steps, such as programmable code-based generators, could further improve reasoning models.

Conclusion

The paper shows that structured stepwise improvement in TRMs can yield competitive results on complex reasoning tasks, challenging approaches that rely on large-scale architectures. Deep Improvement Supervision is a promising framework for designing efficient reasoning models.