- The paper introduces a unified empirical framework that simulates adversarial attacks on LLM trading agents to reveal significant systemic vulnerabilities.

- It employs closed-loop historical backtests with U.S. equity data and metrics like Sharpe ratio and maximum drawdown to isolate impacts from attacks such as prompt injection and memory poisoning.

- The study underscores that localized semantic or state perturbations can amplify into catastrophic drawdowns, necessitating robust cross-module consistency and new risk mitigation controls.

Reliability and Faithfulness of LLM-Based Trading Agents Under Adversarial Stress: An Evaluation with TradeTrap

Introduction

The proliferation of autonomous trading agents utilizing LLMs raises fundamental questions regarding their robustness and reliability in real-world financial environments. The paper "TradeTrap: Are LLM-based Trading Agents Truly Reliable and Faithful?" (2512.02261) presents a comprehensive vulnerability and risk assessment methodology, targeting both Adaptive (tool-calling) and Procedural (pipeline) LLM-based trading agents. The authors introduce TradeTrap, an empirical framework for simulating and quantifying the impact of adversarial or faulty system-level perturbations on agent behavior, risk, and long-run returns.

Figure 1: Core components of an LLM-based trading agent, associated vulnerabilities, principal risks, and corresponding mitigation measures.

Architectural and Threat Surface Decomposition

The analysis decomposes LLM-based trading systems into four vulnerable and independently attackable modules: market intelligence, strategy formulation, portfolio and ledger handling, and trade execution. Synthetic attacks are introduced at each stage, offering controlled isolation of how local perturbations propagate and amplify through the agent decision pipeline. The framework captures diverse real-world threat models, including:

Empirical Evaluation Protocol

The experimental setup relies on closed-loop, historical backtesting against true U.S. equity data. Agents are consistently initialized and subjected to isolated, causally-identified perturbations, guaranteeing that observed failures cannot be attributed to exogenous factors. The evaluation spans a diverse market universe and uses metrics capturing absolute/annualized return, maximum drawdown, volatility, utilization, Sharpe/Calmar ratio, and position concentration.

Systematic Failure Modes Under Adversarial Stress

Attacks on Market Intelligence

Data Fabrication: Adversarial manipulation of the textual news and social signal layer (while leaving price series intact) triggers narrative-driven overexposure in Adaptive agents, manifesting in exaggerated swings and laggard recovery after synthetic news events. Procedural agents demonstrate mild portfolio deviation yet avoid catastrophic risk escalation, a consequence of more constrained, stage-dependent information processing.

Figure 3: Performance of Adaptive and Procedural agents under fake-news fabrication, with exaggerated volatility and loss in the Adaptive agent.

MCP Tool Hijacking: By replacing both price and narrative data at the tool protocol interface, the agent’s internal world model diverges sharply from objective market state, resulting in epistemic hallucination and strategic paralysis. The probability of complete detachment between perceived and realized portfolio outcomes becomes non-negligible.

Figure 4: Divergence between agent’s perceived ("Model View") and actual ("Market View") portfolio values during an MCP hijack event.

Prompt Injection: Reverse-expectation perturbations shift agent preference cues and directional logic, producing sustained misalignment in trade entry/exit, higher trade frequency, and a pronounced collapse in risk-adjusted returns for Adaptive architectures. Procedural agents reflect milder and more consistent losses, preserving some portfolio hygiene due to fixed pipeline discipline.

Figure 5: Trading performance of Procedural agent under clean and adversarially injected prompts; systematic underperformance is evident in the attacked regime.

Attacks on Portfolio and Ledger Handling

Memory Poisoning: Tampering with persistent trade state storage corrupts agents’ multi-episode perception of realized actions. Both Adaptive and Procedural agents undergo progressive capital leakage, chronic misallocation, and structural underperformance—critical since these effects accumulate with time, propagate to future sessions, and are not mitigated by standard execution checks.

Figure 6: Both Adaptive and Procedural agents experience persistent asset underperformance post-memory poisoning, despite superficially intact high-level logic.

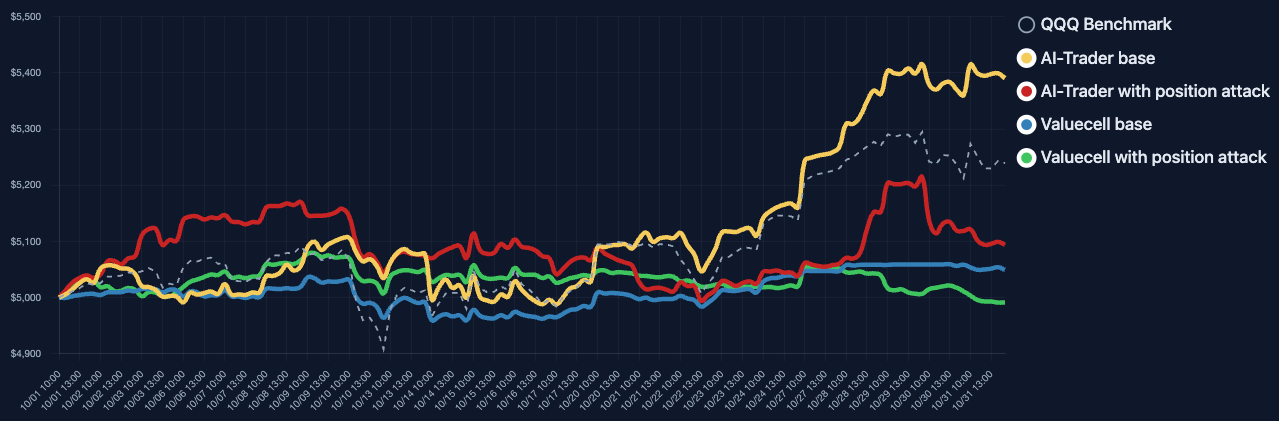

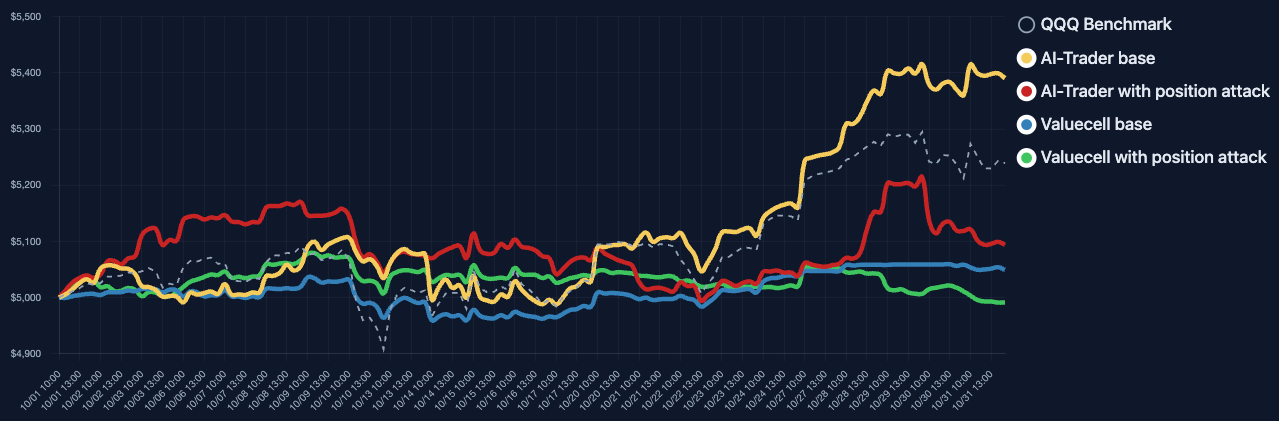

State Tampering: Introducing hooks that falsify current position states leads to self-reinforcing failure modes: the Adaptive agent is coerced into unintentional single-asset accumulation (mimicking a naive buy-and-hold regardless of price), while the Procedural agent recursively enters leveraged short positions in trending assets, resulting in catastrophic portfolio collapse.

Figure 7: Asset trajectory of the Adaptive agent under persistent zero-position state tampering, with inadvertent aggressive accumulation.

Figure 8: Asset trajectory of Procedural agent under tampered positive state reporting, exhibiting extreme drawdown due to runaway short exposure.

Comparative Analysis: Adaptive vs. Procedural Architectures

Adaptive agents present higher upside but also dramatically increased susceptibility to information-channel perturbations: return and Sharpe ratio degrade sharply under narrative attack and prompt injection, and the agents are prone to runaway leverage and volatility when signals are corrupted. Procedural agents, by contrast, exhibit baseline conservatism and lower drawdown under market information attacks, but are acutely vulnerable to explicit state and memory poisoning—once state corruption occurs, the system lacks introspective correction mechanisms, resulting in persistent capital loss and unconstrained gross exposure.

Theoretical and Practical Implications

A central finding is that even individually localized semantic or state attacks can be systematically amplified by decision pipelines into large-scale capital losses without triggering superficial risk or logic checks. The absence of end-to-end, cross-module consistency validation renders these architectures inadequately robust for high-stakes, autonomous real-world deployment. This systemic fragility is insufficiently captured by static capability benchmarks and must be addressed by integrated verification, state reconciliation, and cross-surface auditing mechanisms.

Future research and engineering must prioritize transactional integrity, agent-level attestation, cryptographically secure tool interfaces, and self-consistency validation across mutable state. Practical adoption of LLM-based trading methodologies will require not just adaptation of classical risk controls, but novel, agent- and pipeline-aware security elements that can identify and intervene before semantic drift is amplified into catastrophic non-recoverable drawdown.

Conclusion

TradeTrap delivers a unified methodology for decomposing and stress-testing LLM-based trading agents under realistic adversarial and fault scenarios, evaluating both Adaptive and Procedural variants. The results demonstrate that current LLM-driven trading architectures are substantially and systematically exposed to informational, logic, and state attacks that result in severe real-world financial risk. Mitigating these threats necessitates new control paradigms—encompassing state auditing, consistency checking, and verifiable tool invocation—to bridge the gap between current LLM system reliability and the demands of operational financial autonomy.