- The paper introduces AutoEnv, a framework to generate and validate heterogeneous environments for measuring cross-environment agent learning.

- The methodology uses a three-layer abstraction and a self-repair loop to ensure environment executability and reliable reward structures.

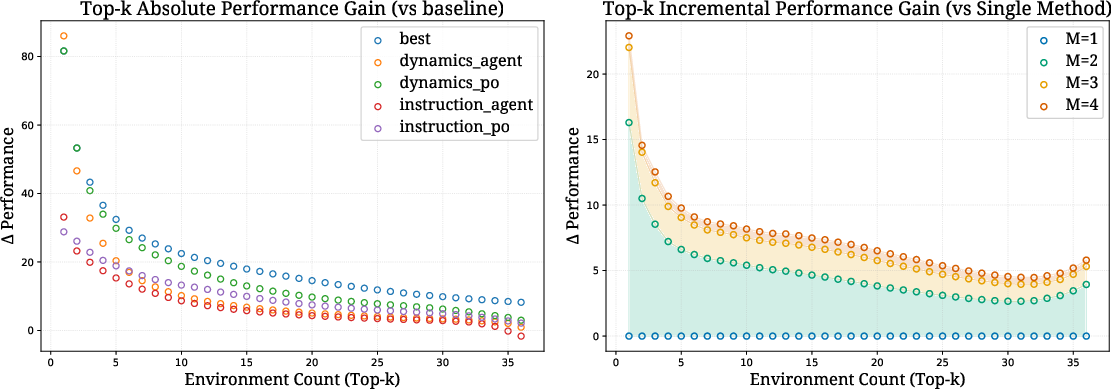

- Empirical findings indicate that adaptive selection outperforms fixed methods, underscoring the need for meta-learned controllers.

AutoEnv: A Framework for Systematic Cross-Environment Agent Learning

Motivation and Problem Statement

Progress in AI agent research has largely focused on single-domain environments with static rules, leaving a critical gap in understanding cross-environment generalization, the hallmark of human intelligence. While agents have performed strongly with agentic learning techniques such as prompt or code optimization, these successes are restricted to narrow, fixed environment classes. There is neither a unified testbed nor a standardized paradigm for measuring agent learning across diverse, heterogeneous worlds. The lack of controlled, extensible collections of heterogeneous environments, and of a unified procedural representation for agent learning methods, fundamentally impedes the study of scalable agent generalization.

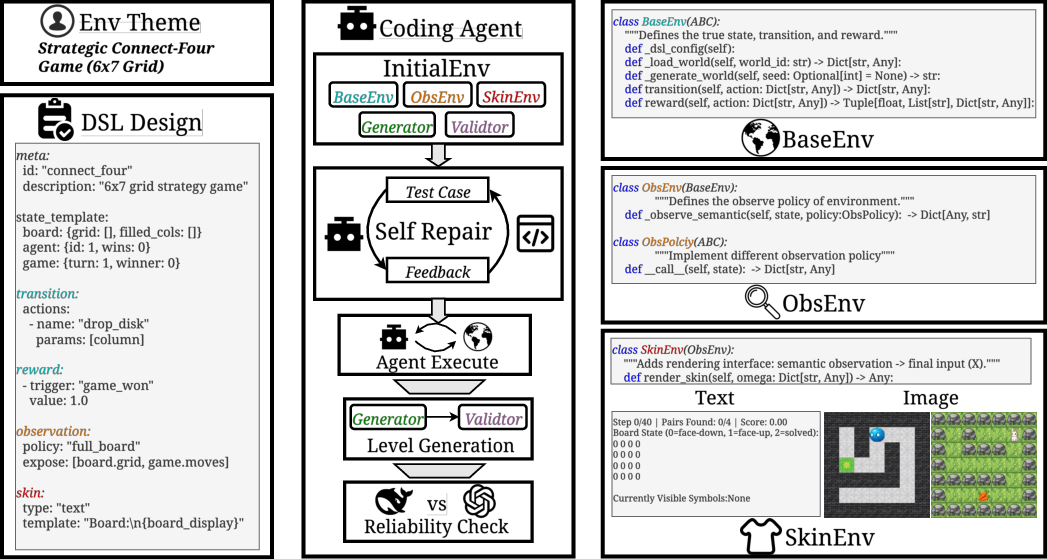

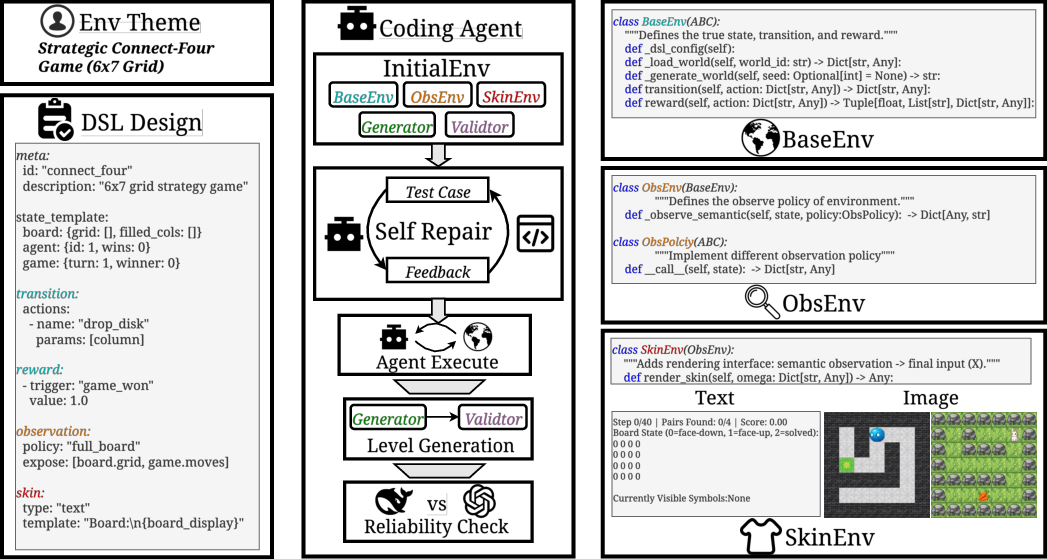

AutoEnv Framework and Automated Environment Generation

AutoEnv introduces a principled, extensible approach for generating, validating, and benchmarking agent learning across diverse environments. AutoEnv formalizes each environment as a distribution over states, actions, transitions, reward functions, and observation spaces, adopting a reinforcement learning-oriented tuple structure, E=(S,A,T,R,Ω,τ), rather than relying on brittle symbolic planning definitions.

AutoEnv operationalizes environment design via a three-layer abstraction:

- BaseEnv encapsulates the core state, dynamics, rewards, and termination conditions.

- ObsEnv implements parameterizable observation functions for full or partial observability.

- SkinEnv renders final observations in arbitrary modalities (text, image, etc.), supporting semantic or purely perceptual variations.

This decompositional approach enables modular manipulation of environmental rules, observation regimes, and agent experience, facilitating both structural and semantic diversity.
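The layering described above can be illustrated with a minimal sketch. It is not the paper's implementation: the corridor task, the method names (`step`, `observe`, `render`), the `radius` parameter, and the vocabulary-based skin are all assumptions chosen to show how rules, observability, and appearance can vary independently.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BaseEnv:
    """Core state, dynamics, reward, and termination for a toy 1-D corridor (hypothetical task)."""
    length: int = 5
    pos: int = 0
    steps: int = 0
    max_steps: int = 10

    def step(self, action: int) -> tuple:
        # action is -1 (left) or +1 (right); the position is clamped to the corridor
        self.pos = max(0, min(self.length - 1, self.pos + action))
        self.steps += 1
        reached_goal = self.pos == self.length - 1
        reward = 1.0 if reached_goal else 0.0  # binary reward
        done = reached_goal or self.steps >= self.max_steps
        return reward, done


class ObsEnv:
    """Observation function over BaseEnv state; radius=None means full observability."""

    def __init__(self, base: BaseEnv, radius: Optional[int] = None):
        self.base = base
        self.radius = radius

    def observe(self) -> dict:
        goal = self.base.length - 1
        visible = self.radius is None or abs(goal - self.base.pos) <= self.radius
        return {"pos": self.base.pos, "goal": goal if visible else None}


class SkinEnv:
    """Renders a structured observation as text; the vocabulary plays the role of the skin."""

    def __init__(self, obs_env: ObsEnv, vocab: dict):
        self.obs_env = obs_env
        self.vocab = vocab

    def render(self) -> str:
        obs = self.obs_env.observe()
        goal = "unknown" if obs["goal"] is None else str(obs["goal"])
        return f"{self.vocab['agent']} at {obs['pos']}, {self.vocab['goal']} at {goal}"


base = BaseEnv()
partial = ObsEnv(base, radius=1)  # partial observability: goal visible only when adjacent
plain = SkinEnv(partial, {"agent": "robot", "goal": "exit"})
inverse = SkinEnv(partial, {"agent": "robot", "goal": "trap"})  # same rules, inverted wording
print(plain.render())
print(inverse.render())
```

Both skins wrap the same `ObsEnv` and `BaseEnv`, so swapping the vocabulary changes only what the agent reads, not the reward or transition rules.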

Figure 1: Pipeline of AutoEnv environment generation, from YAML-based DSL input, through agentic code generation and iterative self-repair, to verification and final SkinEnv rendering.

Environment instantiation leverages LLM-based coding agents, guided by a DSL specification, which autogenerate code implementing BaseEnv/ObsEnv/SkinEnv abstractions, level generators, and validators. A robust self-repair loop, coupled with three-stage verification (execution, level validity, reward reliability), ensures executability, solvability, and non-degenerate reward structures. This pipeline achieves a 65% end-to-end success rate with an average environment generation cost of ~$4 per instance.
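One way to picture the self-repair loop with staged verification is the sketch below. It is an illustration under stated assumptions rather than the authors' pipeline: `generate` and `repair` stand in for LLM coding-agent calls, the three checks are toy string tests, and the function name, `max_rounds` budget, and `BUG_*` markers are all hypothetical.

```python
from typing import Callable, List, Optional, Tuple

# Each check takes candidate code and returns an error message, or None on success.
Check = Callable[[str], Optional[str]]


def first_failure(code: str, checks: List[Tuple[str, Check]]) -> Optional[str]:
    """Run the stages in order and report the first failing one, if any."""
    for stage, check in checks:
        error = check(code)
        if error is not None:
            return f"{stage}: {error}"
    return None


def generate_with_self_repair(
    spec: str,
    generate: Callable[[str], str],
    repair: Callable[[str, str], str],
    checks: List[Tuple[str, Check]],
    max_rounds: int = 3,
) -> Optional[str]:
    """Return code that passes every stage, or None once the repair budget is spent."""
    code = generate(spec)
    for round_idx in range(max_rounds + 1):
        failure = first_failure(code, checks)
        if failure is None:
            return code
        if round_idx < max_rounds:
            code = repair(code, failure)  # feed the failure message back to the coder
    return None


# Toy stand-ins for the coding agent and the verifiers (assumptions for illustration only).
def toy_generate(spec: str) -> str:
    return f"# {spec}\nBUG_CRASH\nBUG_CONSTANT_REWARD"


def toy_repair(code: str, failure: str) -> str:
    stage = failure.split(":")[0]
    marker = {"execution": "BUG_CRASH", "reward": "BUG_CONSTANT_REWARD"}.get(stage)
    return code.replace(marker, "") if marker else code


toy_checks = [
    ("execution", lambda c: "crashes on reset" if "BUG_CRASH" in c else None),
    ("level", lambda c: None),  # level validity always passes in this toy
    ("reward", lambda c: "reward is constant" if "BUG_CONSTANT_REWARD" in c else None),
]

result = generate_with_self_repair("maze", toy_generate, toy_repair, toy_checks)
print(result is not None)
```

Ordering the checks from cheapest (does it run?) to most expensive (is the reward informative?) means a repair round is triggered by the earliest failure, which keeps each round's feedback specific.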

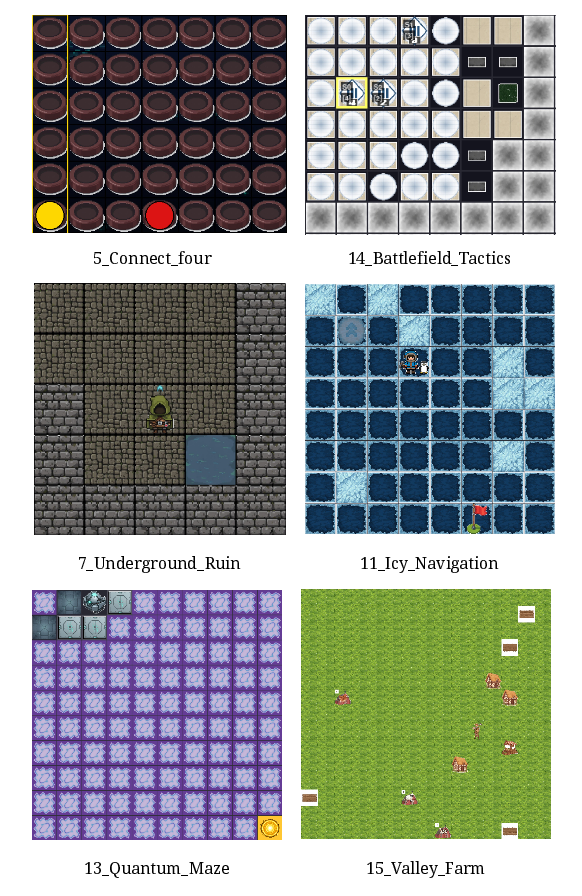

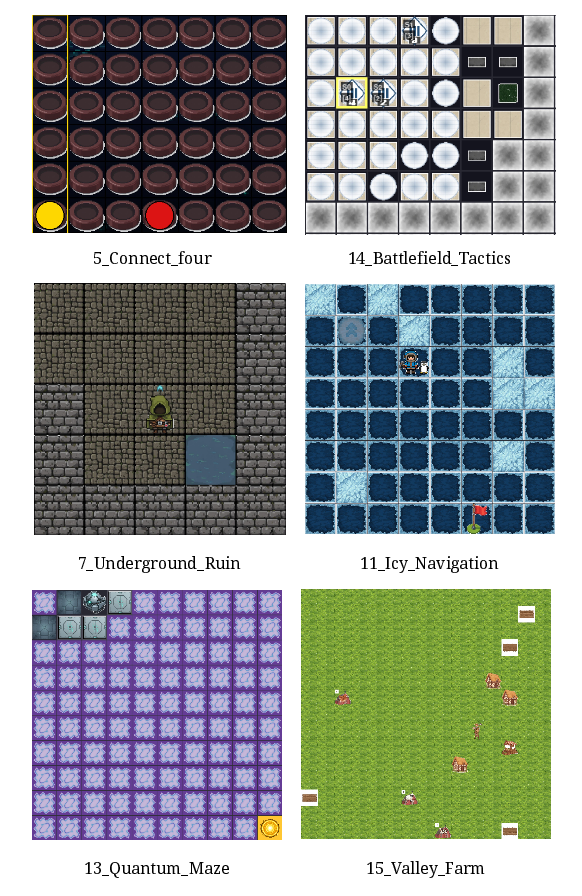

The AutoEnv-36 Benchmark: Diversity and Challenge

From 100 candidate themes, AutoEnv-36 was constructed as a curated set of 36 environments (358 levels), prioritizing diversity across reward (binary vs accumulative), observability (full vs partial), and semantic alignment (matched vs inverse). Tasks span navigation, manipulation, pattern reasoning, and simulation, with deliberate inclusion of both aligned and counterintuitive semantic mappings to stress-test agent robustness.

Performance of seven strong language agents across AutoEnv-36 reveals significant headroom: the best model (O3) achieves 48.7% normalized reward, while others span 12–47%. Notably, binary-reward and full observation environments are easier than their accumulative and partial-observation counterparts. Counterintuitively, environments with inverse semantics yielded higher mean scores, but controlled ablations confirm this is due to lower intrinsic difficulty rather than improved cross-semantic adaptation.

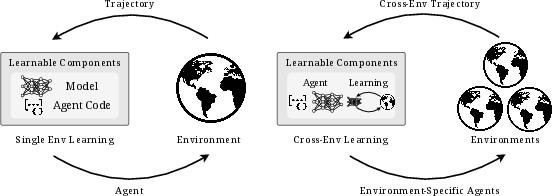

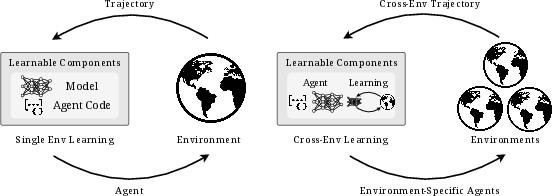

To systematize comparison of learning strategies, the paper introduces a Selection-Optimization-Evaluation (S/O/E) framework that treats agentic learning as an explicit, component-centric optimization loop:

- Selection: Pool sampling via Best or Pareto optimality on multi-metric feedback.

- Optimization: LLM-driven modification of explicit agent components (prompt, code, tools), using either environment-dynamics or instruction-based diagnostics.

- Evaluation: Execution in the environment on generated levels, with normalized reward as the core metric.
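The three stages above can be sketched as a single loop. This is a simplified illustration, not the paper's code: the `Candidate` type, the two-element metric tuple, and the toy `optimize` and `evaluate` functions are assumptions, and in practice the optimization step would be an LLM call and evaluation would run the agent on generated levels.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    prompt: str            # the agent component being optimized
    metrics: tuple = ()    # assumed layout: (normalized reward, negative cost)


def select_best(pool):
    # Best: the single candidate with the highest primary metric
    return max(pool, key=lambda c: c.metrics[0])


def dominates(a, b):
    # a dominates b if it is at least as good on every metric and differs on some metric
    return all(x >= y for x, y in zip(a.metrics, b.metrics)) and a.metrics != b.metrics


def pareto_front(pool):
    return [c for c in pool if not any(dominates(o, c) for o in pool)]


def select_pareto(pool, rng):
    # Pareto: sample uniformly from the non-dominated candidates
    return rng.choice(pareto_front(pool))


def soe_loop(seed, optimize, evaluate, select, rounds=3):
    pool = [Candidate(seed, evaluate(seed))]
    for _ in range(rounds):
        parent = select(pool)                                   # Selection
        child = optimize(parent)                                # Optimization
        pool.append(Candidate(child, evaluate(child)))          # Evaluation
    return select_best(pool)


# Toy stand-ins: longer prompts score higher but cost more.
def toy_optimize(parent):
    return parent.prompt + "!"


def toy_evaluate(prompt):
    return (min(len(prompt), 10) / 10, -len(prompt))


winner = soe_loop("ab", toy_optimize, toy_evaluate, select_best)
print(winner.prompt)

rng = random.Random(0)
pareto_winner = soe_loop("ab", toy_optimize, toy_evaluate, lambda p: select_pareto(p, rng))
print(pareto_winner.prompt)
```

Passing the selection rule as a function is what lets the same loop express both the Best and Pareto variants without changing the optimization or evaluation code.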

Figure 2: Contrasting single-environment learning (left) with cross-environment learning (right), showing that only the latter updates both agent parameters and the meta-learning strategy across multiple rule distributions.

Eight learning methods are instantiated by orthogonally combining selection rules, optimization styles, and target agent components, resulting in a discrete search space over strategies. This supports a rigorous definition of a Learning Upper Bound, the per-environment optimum achievable over the method space, which quantifies the gap between fixed and adaptive learning policies.
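The relation between the best fixed method and the Learning Upper Bound can be made concrete with a short sketch. The axis labels below are assumptions (in particular, two target components are assumed so that the product yields eight methods), and the scores are made-up illustrative numbers, not results from the paper.

```python
from itertools import product

SELECTION = ("best", "pareto")
OPTIMIZATION = ("dynamics", "instruction")
COMPONENT = ("prompt", "code")  # assumption: two targets, giving 2 x 2 x 2 = 8 methods

METHODS = list(product(SELECTION, OPTIMIZATION, COMPONENT))


def best_fixed_method(scores):
    """scores[env][method] -> normalized reward; returns (method, mean) for the best single method."""
    envs = list(scores)

    def mean_score(method):
        return sum(scores[env][method] for env in envs) / len(envs)

    best = max(METHODS, key=mean_score)
    return best, mean_score(best)


def learning_upper_bound(scores):
    """Mean over environments of the best score any method reaches in that environment."""
    return sum(max(per_env.values()) for per_env in scores.values()) / len(scores)


# Illustrative scores only: each environment favors a different method.
toy_scores = {env: {m: 0.2 for m in METHODS} for env in ("env_a", "env_b")}
toy_scores["env_a"][METHODS[0]] = 0.6
toy_scores["env_b"][METHODS[1]] = 0.8

fixed_method, fixed_mean = best_fixed_method(toy_scores)
upper = learning_upper_bound(toy_scores)
print(len(METHODS), fixed_method, fixed_mean, upper)
```

Because the upper bound picks the maximum per environment before averaging, it can never fall below the best fixed method; the size of the gap indicates how much an environment-adaptive controller could gain.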

Empirical Findings: Heterogeneity Collapse and Method-Environment Interaction

Extensive empirical analysis demonstrates two robust trends:

These results empirically confirm a strong environment-method interaction, with certain strategies being beneficial only in specific rule/observation regimes, and others even detrimental if mismatched. Notably, the environment-adaptive upper bound remains significantly higher than the best fixed method, indicating existing agentic learning controllers only partially exploit available method diversity.

Systematic Multimodality via SkinEnv

AutoEnv's SkinEnv abstraction enables rapid generation of multimodal observations and systematic decoupling of rules from appearance, supporting robust studies of perception–policy disentanglement.

Figure 4: Auto-generated multimodal SkinEnvs, illustrating text-plus-image observation streams for a subset of AutoEnv-36 environments.

Figure 5: Multiple distinct Skins for a single underlying environment, demonstrating decoupled semantic/policy mappings.

This exposes agents to environments with visual/semantic inversions, enhancing the diagnostics of agent-level transfer, invariance, and adaptation.

Theoretical and Practical Implications

The AutoEnv framework and dataset advance the study of agent generalization. By factoring the environment and the learning procedure into modular, composable objects, AutoEnv supports reproducible ablations on both environment diversity and learning policy diversity. This yields diagnostic clarity regarding the brittleness of current agentic learning pipelines and the necessity of adaptive, meta-learned controllers for robust cross-environment generalization.

Practically, AutoEnv unlocks low-cost, scalable benchmarking for heterogeneous world modeling, bridging gaps left by prior benchmarks restricted to single-application domains or narrow task families. The explicit S/O/E framework further provides a unifying procedural abstraction for integrating and fairly comparing both prompt-centric (e.g., SPO (Xiang et al., 7 Feb 2025), GEPA (Agrawal et al., 25 Jul 2025)) and code-centric (e.g., AFlow (Zhang et al., 14 Oct 2024), Darwin Gödel Machine (Zhang et al., 29 May 2025)) learning methodologies.

Future Directions

The present version of AutoEnv is constrained by imperfect reliability verification and a dataset size limited to 36 text-dominant environments, as well as a restricted operator space for agentic learning. The extension to larger, more structurally and semantically varied environments, comprehensive multimodal settings, and integration with embodied AI pipelines remains an immediate avenue for evolution. Additionally, the automated design of meta-learning controllers capable of discovering environment-specific learning strategies within expansive, compositional method spaces is an open challenge.

Conclusion

AutoEnv constitutes a substantive step towards systematic, controlled measurement and advancement of cross-environment agent learning. By formally decoupling environment and learning procedure and providing low-cost, validated, and highly diverse benchmarks, it both exposes the failure modes of fixed learning schemes and establishes actionable upper bounds for environment-adaptive meta-learning. This framework engenders a new class of experiments in scalable agent generalization, with deep implications for transfer, robustness, and the engineering of next-generation foundation agents.

Recommended Citation:

"AutoEnv: Automated Environments for Measuring Cross-Environment Agent Learning" (2511.19304)