GEPA: Reflective Prompt Evolution Can Outperform Reinforcement Learning (2507.19457v1)

Abstract: LLMs are increasingly adapted to downstream tasks via reinforcement learning (RL) methods like Group Relative Policy Optimization (GRPO), which often require thousands of rollouts to learn new tasks. We argue that the interpretable nature of language can often provide a much richer learning medium for LLMs, compared with policy gradients derived from sparse, scalar rewards. To test this, we introduce GEPA (Genetic-Pareto), a prompt optimizer that thoroughly incorporates natural language reflection to learn high-level rules from trial and error. Given any AI system containing one or more LLM prompts, GEPA samples system-level trajectories (e.g., reasoning, tool calls, and tool outputs) and reflects on them in natural language to diagnose problems, propose and test prompt updates, and combine complementary lessons from the Pareto frontier of its own attempts. As a result of GEPA's design, it can often turn even just a few rollouts into a large quality gain. Across four tasks, GEPA outperforms GRPO by 10% on average and by up to 20%, while using up to 35x fewer rollouts. GEPA also outperforms the leading prompt optimizer, MIPROv2, by over 10% across two LLMs, and demonstrates promising results as an inference-time search strategy for code optimization.

Summary

- The paper establishes GEPA as an effective alternative to reinforcement learning, demonstrating up to 19% higher test accuracy than GRPO while using up to 35× fewer rollouts.

- It employs iterative prompt mutation, Pareto-based candidate selection, and system-aware crossover to optimize modular LLM-based systems.

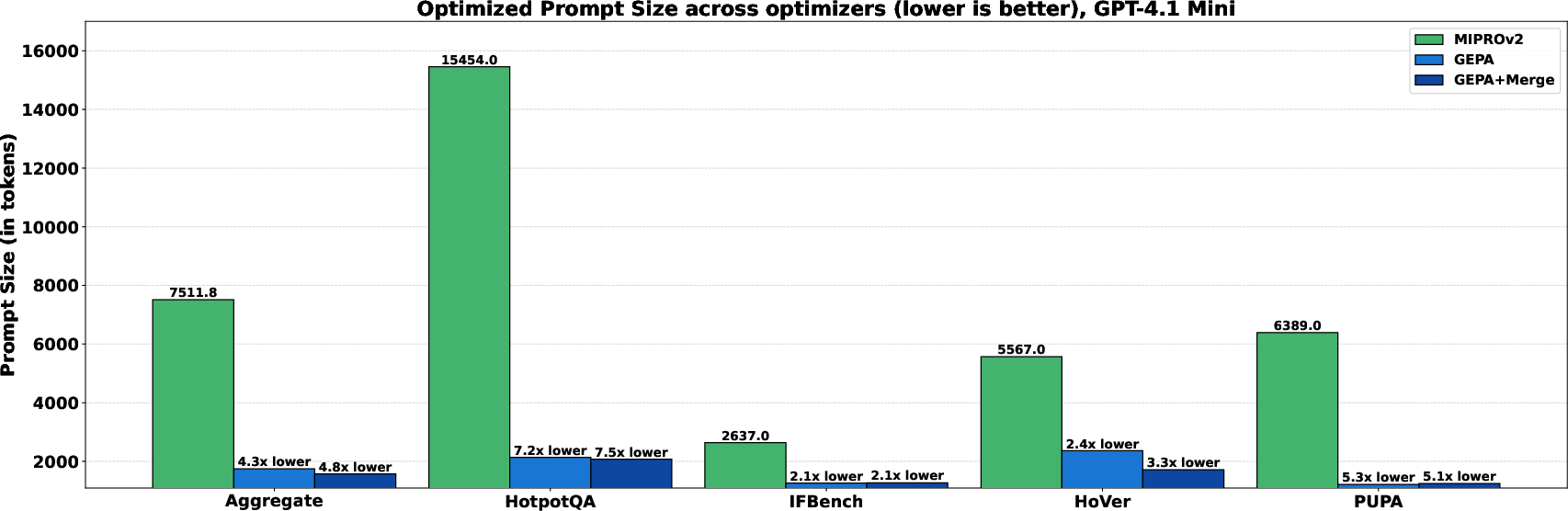

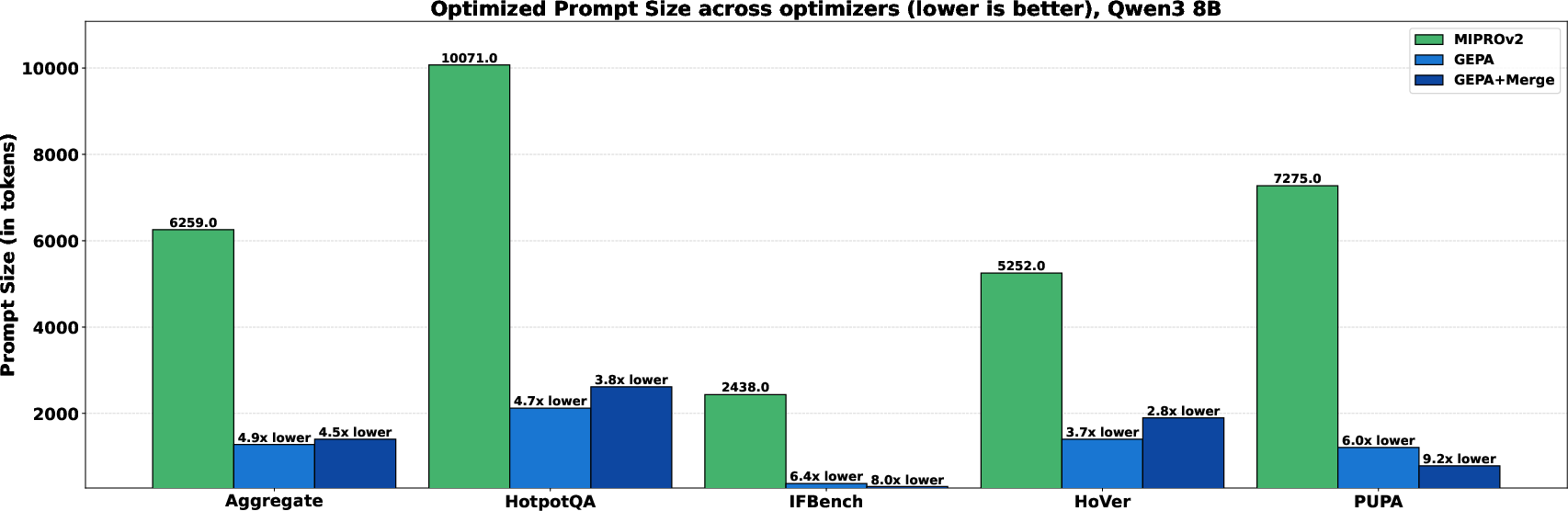

- GEPA produces prompts up to 9.2× shorter than MIPROv2's and improves generalization, making it viable for resource-constrained settings and inference-time code optimization tasks.

Reflective Prompt Evolution for Compound AI Systems: An Analysis of GEPA

Introduction

The paper introduces GEPA (Genetic-Pareto), a reflective prompt optimizer for compound AI systems, and demonstrates that prompt evolution via natural language reflection can outperform reinforcement learning (RL) approaches such as Group Relative Policy Optimization (GRPO) in both sample efficiency and final performance. GEPA leverages the interpretability of language to extract richer learning signals from system-level trajectories, using iterative prompt mutation and Pareto-based candidate selection to optimize modular LLM-based systems. The work provides a comprehensive empirical comparison across multiple benchmarks and models, and explores GEPA's applicability to inference-time code optimization.

GEPA Algorithmic Framework

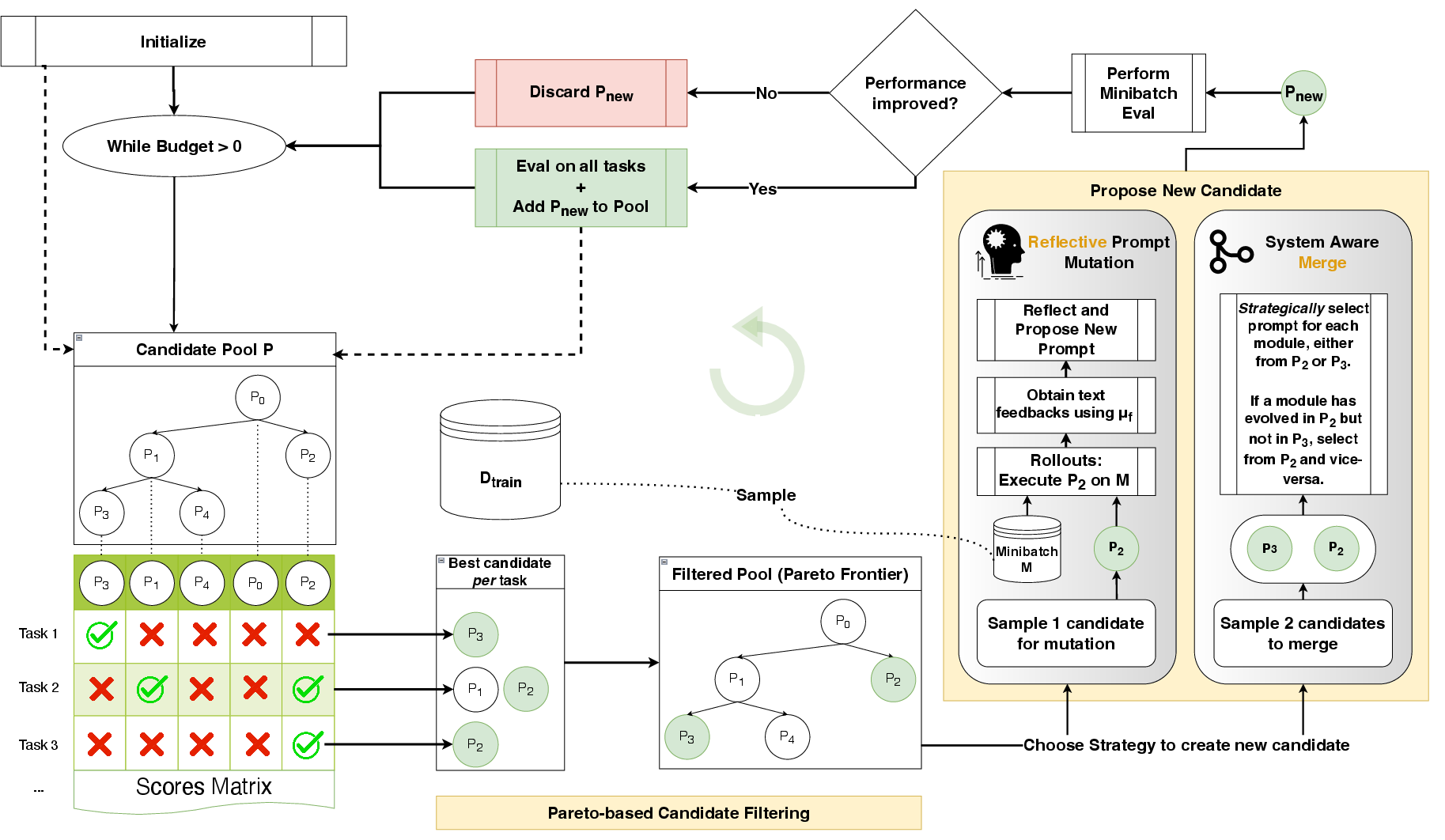

GEPA is designed for modular, compound AI systems composed of multiple LLM modules and tool calls, orchestrated via arbitrary control flow. The optimization objective is to maximize downstream task performance by tuning the system's prompts (and, optionally, model weights) under a strict rollout budget. GEPA's core algorithm consists of three interlocking components:

- Genetic Optimization Loop: Maintains a pool of candidate systems, each defined by a set of prompts. In each iteration, a candidate is selected, mutated (via prompt update or crossover), and evaluated. Improved candidates are added to the pool, and the process continues until the rollout budget is exhausted.

- Reflective Prompt Mutation: For a selected module, GEPA collects execution traces and feedback from a minibatch of rollouts. An LLM is then prompted to reflect on these traces and propose a new instruction, explicitly incorporating domain-specific lessons and error diagnoses.

- Pareto-based Candidate Selection: Rather than greedily optimizing the best candidate, GEPA maintains a Pareto frontier of candidates that achieve the best score on at least one training instance. Candidates are stochastically sampled from this frontier, promoting diversity and robust generalization.

Figure 2: GEPA's iterative optimization process, combining reflective prompt mutation and Pareto-based candidate selection to efficiently explore the prompt space.
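The select, mutate, evaluate cycle described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: the names `optimize`, `toy_mutate`, `toy_evaluate`, and `greedy_select` are hypothetical, each call to `evaluate` is assumed to cost one unit of the rollout budget, and a child is kept only if it beats its parent.

```python
from typing import Callable, Dict, List

Candidate = Dict[str, str]  # module name -> prompt text (assumed representation)


def optimize(
    seed: Candidate,
    mutate: Callable[[Candidate], Candidate],
    evaluate: Callable[[Candidate], float],
    select: Callable[[List[Candidate], List[float]], int],
    budget: int,
) -> Candidate:
    """Run a select-mutate-evaluate loop until `budget` evaluations are spent."""
    pool = [seed]
    scores = [evaluate(seed)]
    spent = 1
    while spent < budget:
        parent_idx = select(pool, scores)
        child = mutate(pool[parent_idx])
        child_score = evaluate(child)
        spent += 1
        # Simplification: keep the child only if it improves on its parent.
        if child_score > scores[parent_idx]:
            pool.append(child)
            scores.append(child_score)
    best = max(range(len(pool)), key=scores.__getitem__)
    return pool[best]


# Toy stand-ins so the loop can run without an LLM.
KEYWORDS = ["cite sources", "answer briefly", "check units"]


def toy_evaluate(candidate: Candidate) -> float:
    """Fraction of target phrases present in the 'qa' prompt."""
    return sum(k in candidate["qa"] for k in KEYWORDS) / len(KEYWORDS)


def toy_mutate(candidate: Candidate) -> Candidate:
    """Append the first missing target phrase (a real mutation would call an LLM)."""
    missing = [k for k in KEYWORDS if k not in candidate["qa"]]
    child = dict(candidate)
    if missing:
        child["qa"] = candidate["qa"] + " " + missing[0] + "."
    return child


def greedy_select(pool: List[Candidate], scores: List[float]) -> int:
    """Pick the highest-scoring candidate in the pool."""
    return max(range(len(pool)), key=scores.__getitem__)
```

Calling `optimize({"qa": "Answer the question."}, toy_mutate, toy_evaluate, greedy_select, budget=4)` runs three mutation steps. In a real system, `mutate` would be the reflective step and `select` the Pareto-based rule discussed in the following sections.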

Reflective Prompt Mutation and Feedback Integration

GEPA's reflective mutation leverages the full natural language trace of system execution, including intermediate reasoning, tool outputs, and evaluation feedback. This enables implicit credit assignment at the module level, allowing the optimizer to make targeted, high-impact prompt updates. The meta-prompt used for reflection is designed to extract both generalizable strategies and niche, domain-specific rules from the feedback.

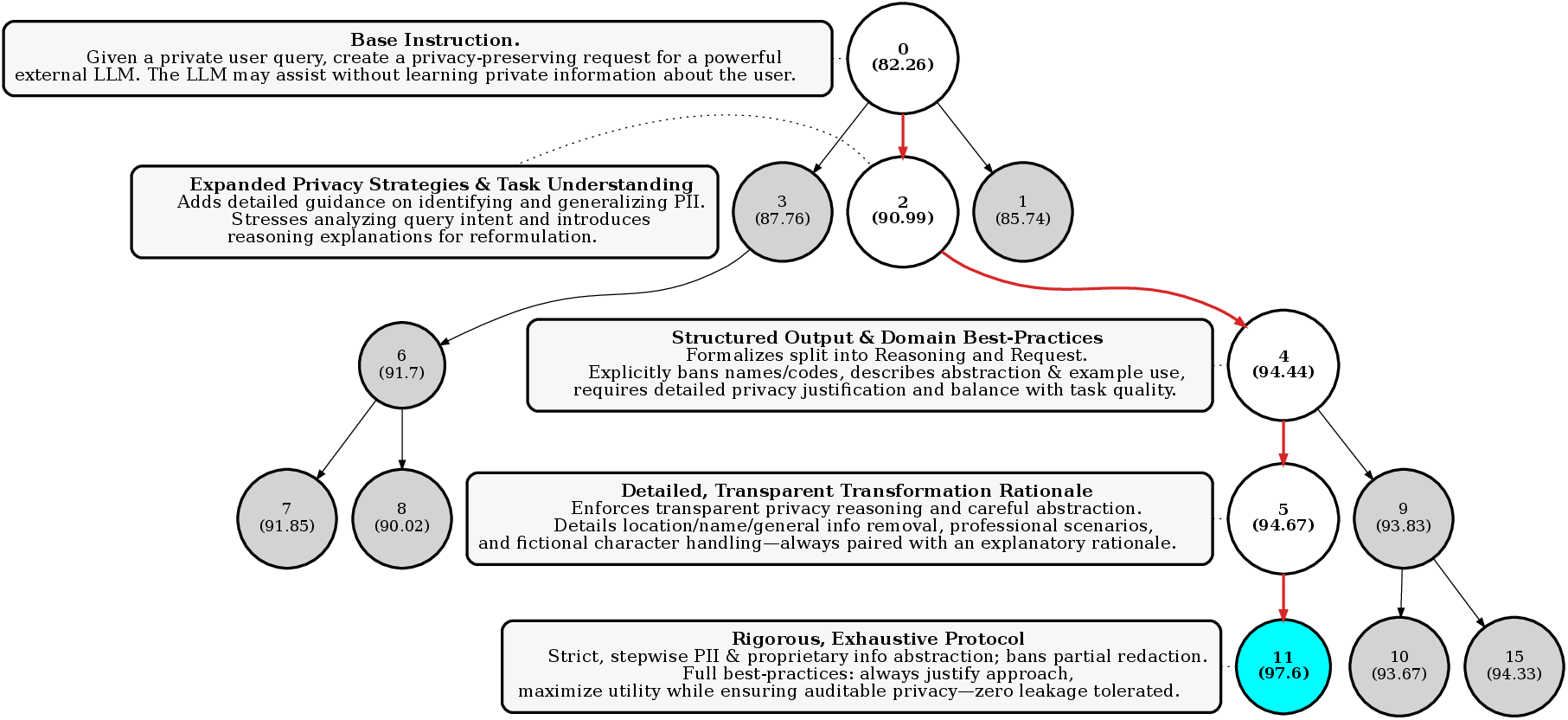

Figure 4: Visualization of GEPA's optimization trajectory, showing how iterative prompt refinements accumulate nuanced, task-specific instructions.

The integration of evaluation traces (e.g., code compilation errors, constraint satisfaction reports) as additional feedback further enhances the diagnostic signal available for prompt evolution.
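As a rough illustration of how traces and feedback might be packed into a reflection request, the sketch below assembles a meta-prompt from a minibatch of rollouts and hands it to an LLM. The `Rollout` fields, the prompt wording, and the `llm` callable are all assumptions made for this example; the paper's actual meta-prompt is not reproduced here.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Rollout:
    inputs: str     # what the module was asked
    trace: str      # reasoning, tool calls, and outputs, as text
    feedback: str   # evaluator output, e.g. a compiler error or constraint report
    score: float


def build_reflection_prompt(current_instruction: str, rollouts: List[Rollout]) -> str:
    """Assemble a hypothetical meta-prompt from the instruction and a minibatch."""
    parts = [
        "You are improving the instruction for one module of a larger system.",
        "Current instruction:",
        current_instruction,
        "Recent executions with traces and evaluation feedback:",
    ]
    for i, r in enumerate(rollouts, start=1):
        parts.append(
            f"--- Example {i} (score={r.score:.2f}) ---\n"
            f"Input: {r.inputs}\nTrace: {r.trace}\nFeedback: {r.feedback}"
        )
    parts.append(
        "Diagnose the failures, note any general strategies and domain-specific "
        "rules, and reply with an improved instruction only."
    )
    return "\n".join(parts)


def reflect_and_propose(
    current_instruction: str,
    rollouts: List[Rollout],
    llm: Callable[[str], str],
) -> str:
    """Ask the (caller-supplied) LLM for a new instruction for this module."""
    return llm(build_reflection_prompt(current_instruction, rollouts)).strip()
```

Passing `llm` as a plain callable keeps the sketch independent of any particular client library; any function that maps a prompt string to a completion string would fit.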

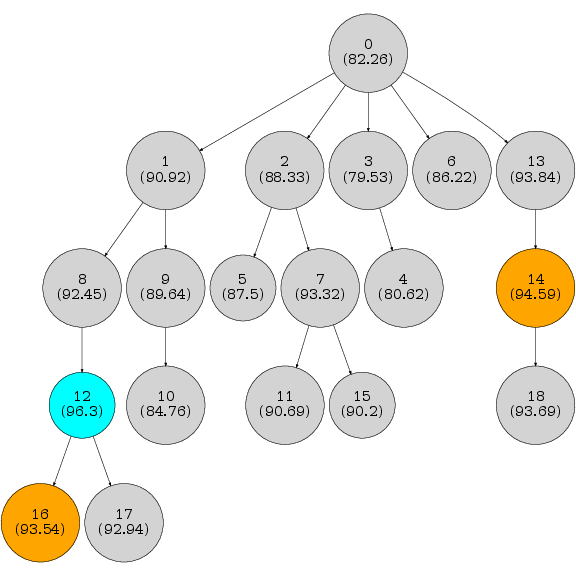

Pareto-based Candidate Selection and Search Dynamics

A key innovation in GEPA is the use of Pareto-based candidate selection. By tracking the best-performing candidates for each training instance, GEPA avoids premature convergence to local optima and ensures that diverse, complementary strategies are preserved and recombined. This approach is contrasted with naive greedy selection, which often leads to stagnation.

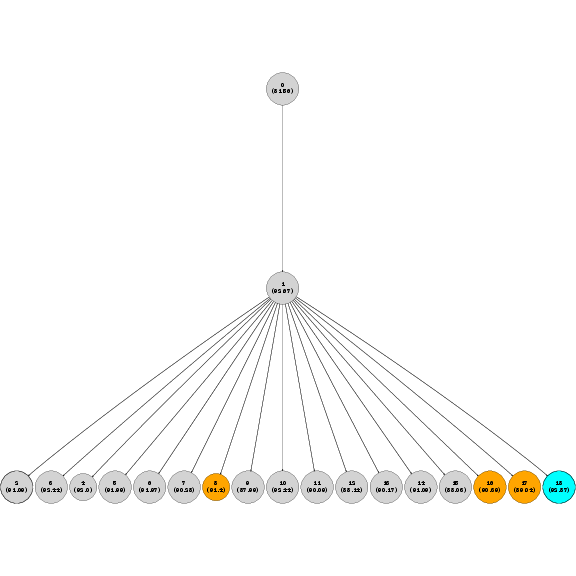

Figure 1: Illustration of the SelectBestCandidate strategy, which can lead to local optima and suboptimal exploration.
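To make the contrast between greedy and Pareto-based selection concrete, here is a small sketch. It assumes a score matrix `scores[c][i]` (candidate `c` on training instance `i`) and samples frontier candidates with probability proportional to the number of instances on which they tie for best. That weighting and the function names are assumptions for illustration, and the sketch omits any further pruning of dominated candidates.

```python
import random
from typing import Dict, List, Optional


def frontier_counts(scores: List[List[float]]) -> Dict[int, int]:
    """Map each frontier candidate to the number of instances where it is best."""
    counts: Dict[int, int] = {}
    for i in range(len(scores[0])):
        best = max(row[i] for row in scores)
        for c, row in enumerate(scores):
            if row[i] == best:
                counts[c] = counts.get(c, 0) + 1
    return counts


def select_pareto(scores: List[List[float]], rng: Optional[random.Random] = None) -> int:
    """Sample a candidate from the frontier, weighted by instances won (assumed rule)."""
    if rng is None:
        rng = random.Random()
    counts = frontier_counts(scores)
    candidates = list(counts)
    weights = [counts[c] for c in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


def select_greedy(scores: List[List[float]]) -> int:
    """Always pick the candidate with the highest total score."""
    return max(range(len(scores)), key=lambda c: sum(scores[c]))


example_scores = [
    [1.0, 1.0, 0.0],  # candidate 0: best on instances 0 and 1
    [0.0, 0.0, 1.0],  # candidate 1: lower average, but the only one solving instance 2
    [0.5, 0.5, 0.5],  # candidate 2: never best on any instance
]
```

With `example_scores`, greedy selection always returns candidate 0, so the strategy that solves instance 2 is never built upon. Pareto-based selection keeps candidates 0 and 1 on the frontier and never samples candidate 2.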

Empirical Results

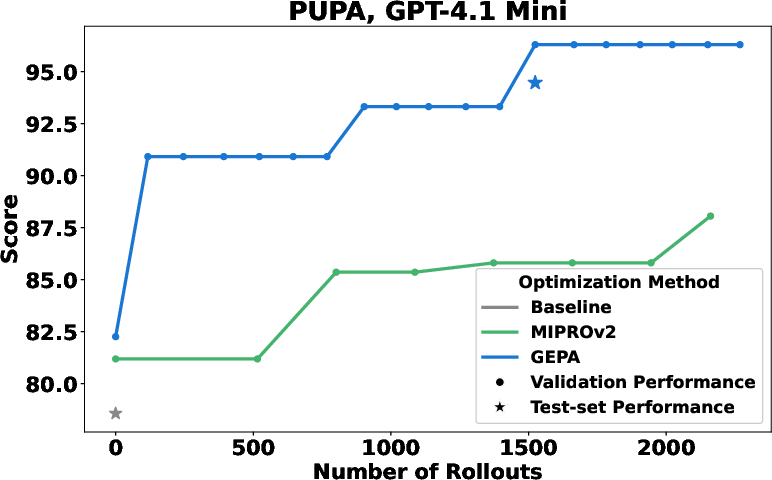

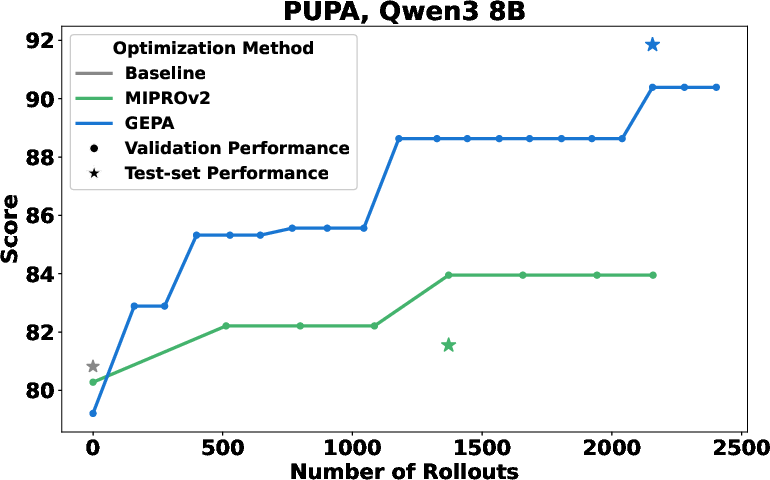

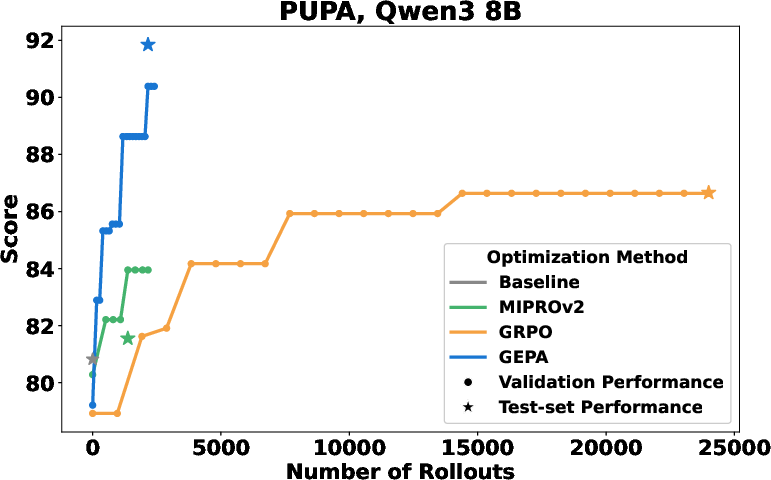

GEPA is evaluated on four benchmarks—HotpotQA, IFBench, HoVer, and PUPA—using both open-source (Qwen3 8B) and proprietary (GPT-4.1 Mini) models. The main findings are:

- Sample Efficiency: GEPA achieves up to 19% higher test accuracy than GRPO while using up to 35× fewer rollouts. In some cases, GEPA matches GRPO's best validation scores with as few as 32–179 training rollouts.

- Performance: GEPA outperforms MIPROv2, a state-of-the-art prompt optimizer, by 10–14% across all tasks and models. The aggregate gains over baseline are more than double those of MIPROv2.

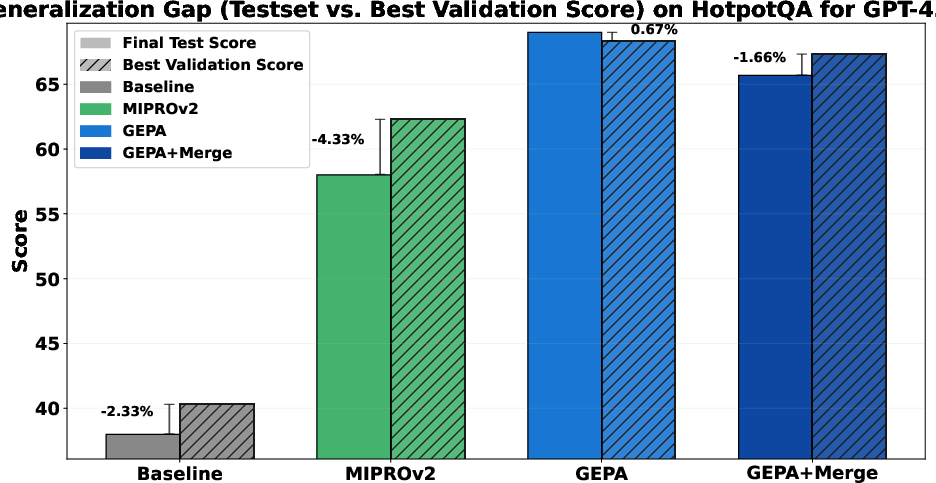

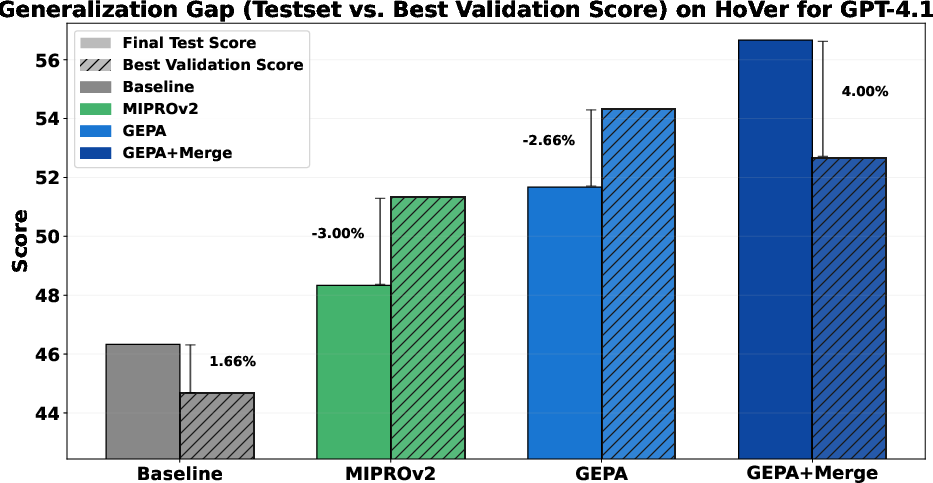

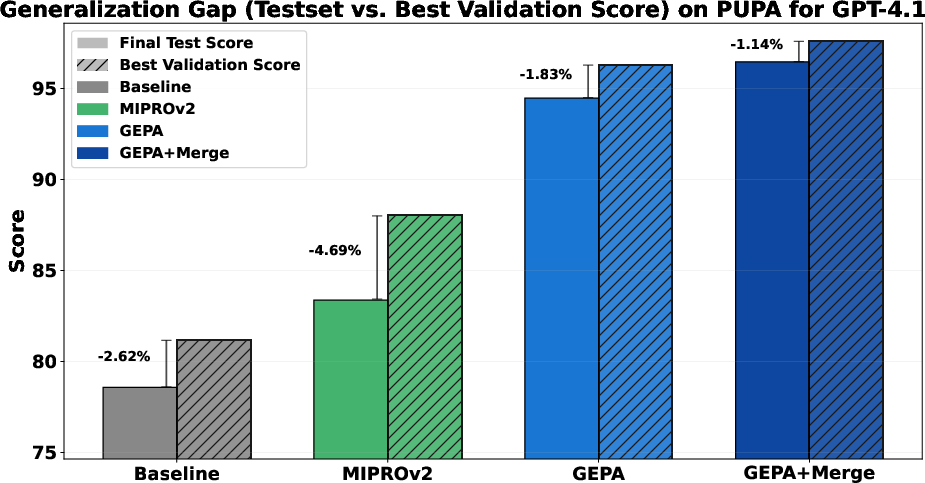

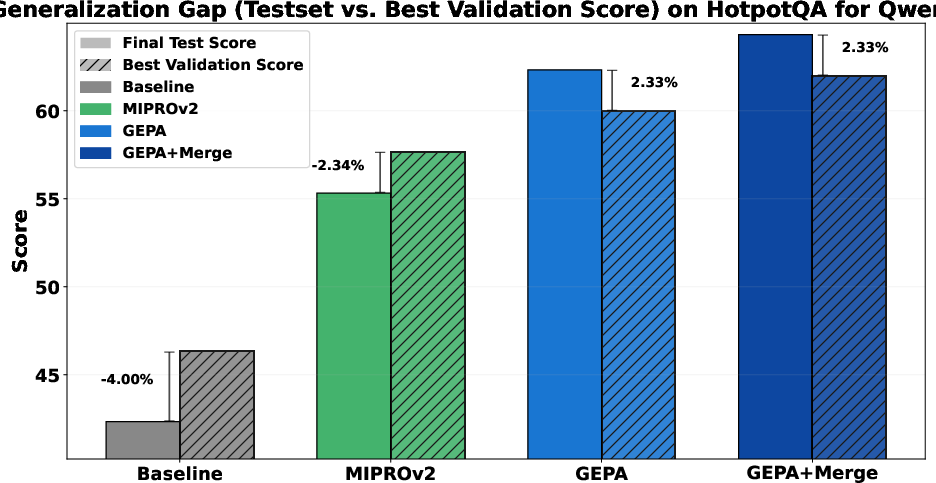

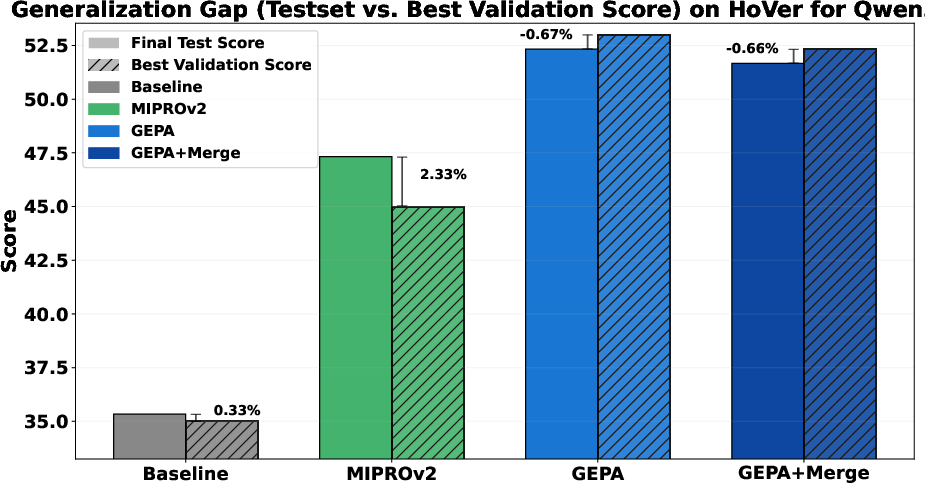

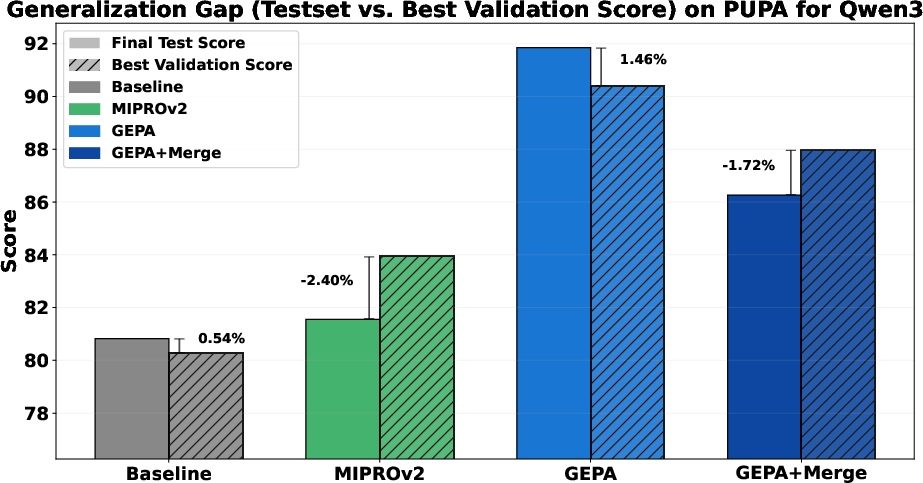

- Generalization: Reflectively evolved instructions now exhibit lower generalization gaps than few-shot demonstration-based prompts, contradicting prior findings and highlighting the impact of improved LLM instruction-following and reflection capabilities.

Figure 7: Performance-vs-rollouts for GEPA and MIPRO on GPT-4.1 Mini, demonstrating superior sample efficiency and final accuracy.

Figure 3: Generalization gaps for different optimization methods, showing that GEPA's instructions generalize as well as or better than demonstration-based approaches.

- Prompt Compactness: GEPA's optimized prompts are up to 9.2× shorter than those produced by MIPROv2, reducing inference cost and latency.

Figure 5: Comparison of token counts for optimized programs, highlighting the efficiency of GEPA's instruction-only prompts.

- Ablation: Pareto-based candidate selection provides a 6.4–8.2% aggregate improvement over greedy selection, confirming the importance of diversity in the search process.

System-aware Crossover and Merge Strategies

GEPA+Merge introduces a system-aware crossover operator that merges complementary modules from distinct lineages in the candidate pool. This can yield up to 5% additional improvement, particularly when the optimization tree has evolved sufficiently diverse strategies. However, the benefit is sensitive to the timing and frequency of merge operations, and further study is needed to optimize this trade-off.
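One way to picture a module-level crossover is sketched below. The rule used here is an assumption for illustration rather than the paper's exact operator: for each module, take the prompt from whichever parent changed it relative to a common ancestor, and fall back to the higher-scoring parent when both or neither changed it. The function name `merge` and its parameters are hypothetical.

```python
from typing import Dict

Candidate = Dict[str, str]  # module name -> prompt text (assumed representation)


def merge(
    ancestor: Candidate,
    parent_a: Candidate,
    parent_b: Candidate,
    score_a: float,
    score_b: float,
) -> Candidate:
    """Combine two descendants of `ancestor` module by module."""
    child: Candidate = {}
    for module, base_prompt in ancestor.items():
        a_changed = parent_a[module] != base_prompt
        b_changed = parent_b[module] != base_prompt
        if a_changed and not b_changed:
            child[module] = parent_a[module]
        elif b_changed and not a_changed:
            child[module] = parent_b[module]
        else:
            # Both or neither lineage edited this module: prefer the stronger parent.
            child[module] = parent_a[module] if score_a >= score_b else parent_b[module]
    return child
```

For a two-module system where one lineage improved the retrieval prompt and the other improved the answering prompt, this rule yields a child carrying both edits, which is the kind of complementary combination the merge step is meant to exploit.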

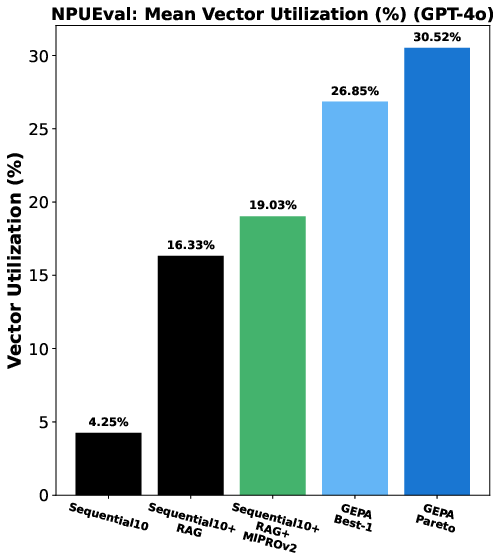

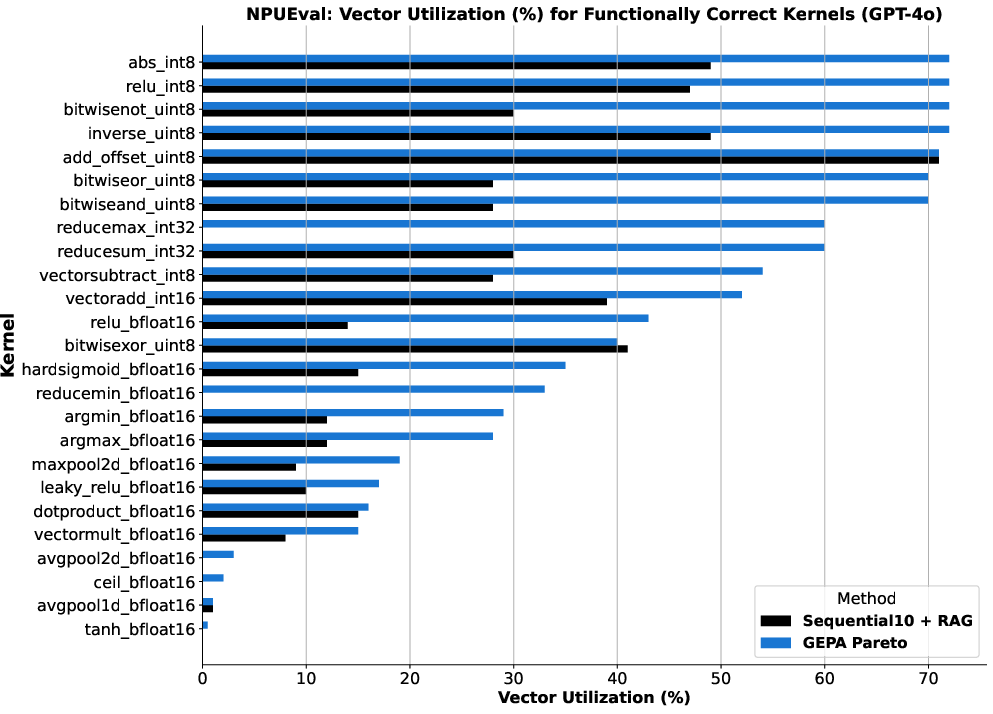

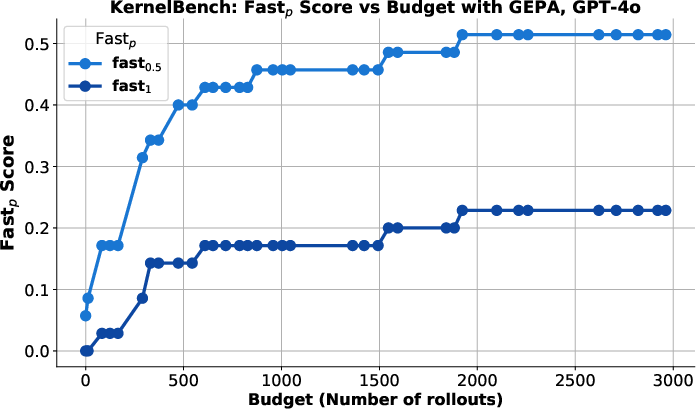

Inference-Time Search and Code Optimization

GEPA is also applied as an inference-time search strategy for code generation tasks, such as kernel synthesis for AMD NPUs and CUDA code for NVIDIA GPUs. By leveraging domain-specific feedback (e.g., compiler errors, profiling results), GEPA enables rapid prompt evolution that incorporates architectural best practices and achieves substantial performance gains over baseline and RAG-augmented agents.

Figure 10: GEPA enables high vector utilization in AMD NPU kernel generation, outperforming sequential refinement and RAG-augmented baselines.

Figure 11: GEPA's iterative refinement of CUDA kernel code leads to significant speedups over PyTorch-eager baselines.
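The role of textual feedback in this setting can be illustrated with a toy feedback function. The sketch uses Python's built-in `compile()` purely as a stand-in for a real kernel compiler or profiler; `compile_feedback` is a hypothetical name, and the scoring scheme (1.0 on success, 0.0 on failure) is an assumption.

```python
from typing import Tuple


def compile_feedback(source: str) -> Tuple[float, str]:
    """Return (score, textual feedback) for a candidate piece of Python source.

    Stand-in for a domain-specific evaluator: a real setup would invoke the
    target toolchain and report its diagnostics or profiling results instead.
    """
    try:
        compile(source, "<candidate>", "exec")
    except SyntaxError as err:
        return 0.0, f"Compilation failed at line {err.lineno}: {err.msg}"
    return 1.0, "Compilation succeeded."
```

The point of returning text alongside the scalar is that the reflection step can read the diagnostic itself, rather than learning only that the score was zero.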

Implementation Considerations

- Computational Requirements: GEPA is highly sample-efficient, making it suitable for settings with expensive rollouts or limited inference budgets. Most of the rollout budget is spent on candidate validation, suggesting further gains are possible via dynamic or subsampled validation.

- Scalability: The modular design supports arbitrary compound AI systems, and the Pareto-based search scales well with the number of modules and tasks.

- Deployment: GEPA can be integrated into existing LLM orchestration frameworks (e.g., DSPy, llama-prompt-ops) and is compatible with both open and closed-source models.

- Limitations: GEPA currently optimizes instructions only; extending to few-shot demonstration optimization or hybrid prompt-weight adaptation is a promising direction.

Theoretical and Practical Implications

The results challenge the prevailing assumption that RL-based adaptation is necessary for high-performance LLM systems in low-data regimes. GEPA demonstrates that language-based reflection, when combined with evolutionary search and Pareto-based selection, can extract richer learning signals from each rollout and achieve superior generalization. This has implications for the design of adaptive, interpretable, and efficient AI systems, particularly in domains where rollouts are expensive or feedback is naturally available in textual form.

Future Directions

- Hybrid Optimization: Combining reflective prompt evolution with weight-space adaptation (e.g., using GEPA's lessons to guide RL rollouts) may yield additive gains.

- Feedback Engineering: Systematic study of which execution or evaluation traces provide the most valuable learning signals for reflection.

- Adaptive Validation: Dynamic selection of validation sets to further improve sample efficiency.

- Demonstration Optimization: Extending GEPA to jointly optimize instructions and in-context examples.

Conclusion

GEPA establishes reflective prompt evolution as a powerful alternative to RL for optimizing compound AI systems. By leveraging natural language feedback, Pareto-based search, and modular prompt mutation, GEPA achieves strong sample efficiency, robust generalization, and practical gains in both standard benchmarks and code optimization tasks. The approach opens new avenues for language-driven, reflection-based learning in AI, with broad applicability to real-world, resource-constrained settings.


