- The paper introduces an interactive UI-to-code framework that iteratively refines generated code to closely match target UI designs.

- It employs a 9B-parameter transformer-based VLM with multi-stage training and reinforcement learning using human-aligned visual rewards.

- Results demonstrate state-of-the-art performance in UI generation, polishing, and targeted editing across both synthetic and real-world benchmarks.

UI2CodeN: A Visual LLM for Test-Time Scalable Interactive UI-to-Code Generation

Introduction and Motivation

Automating UI development remains a formidable challenge in multimodal learning because rich, visually diverse user interfaces must be mapped to long, executable code. Vision-language models (VLMs) perform well on vision-language understanding, but their application to UI-to-code generation is hindered by limited multimodal coding capabilities and by a failure to exploit the inherently interactive nature of UI development workflows. UI2CodeN addresses these deficiencies by proposing an interactive UI-to-code paradigm and introducing a 9B-parameter open-source VLM trained with a multi-stage regime. UI2CodeN demonstrates strong capabilities in UI-to-code generation, UI polishing through iterative feedback, and targeted UI editing, establishing new state-of-the-art (SOTA) performance on both established benchmarks and new real-world ones.

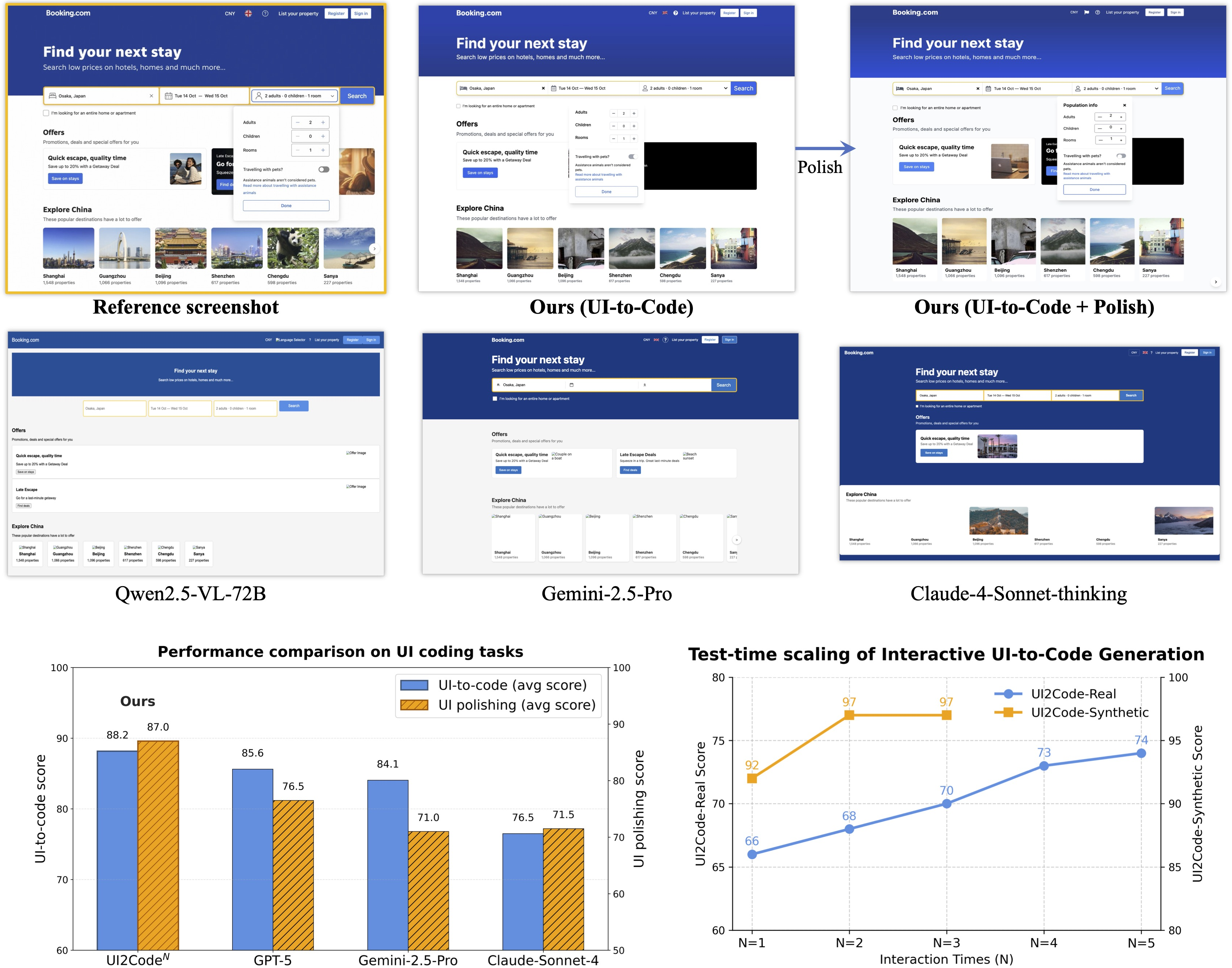

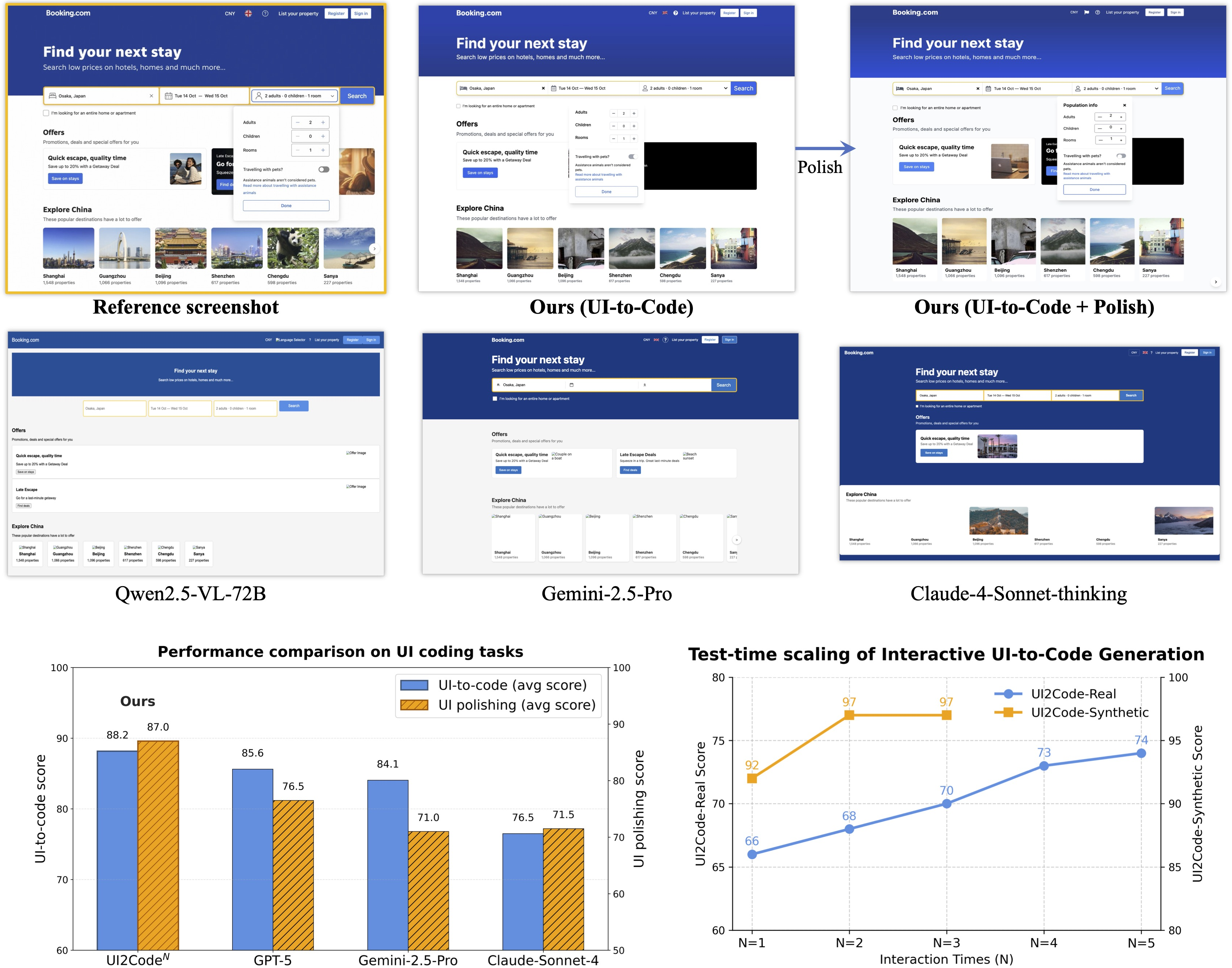

Figure 1: Model comparison on a UI-to-code input, showing higher visual fidelity for UI2CodeN and additional gains via iterative UI polishing; bottom-right: scaling curve with performance gains through test-time interaction.

Interactive UI-to-Code Paradigm

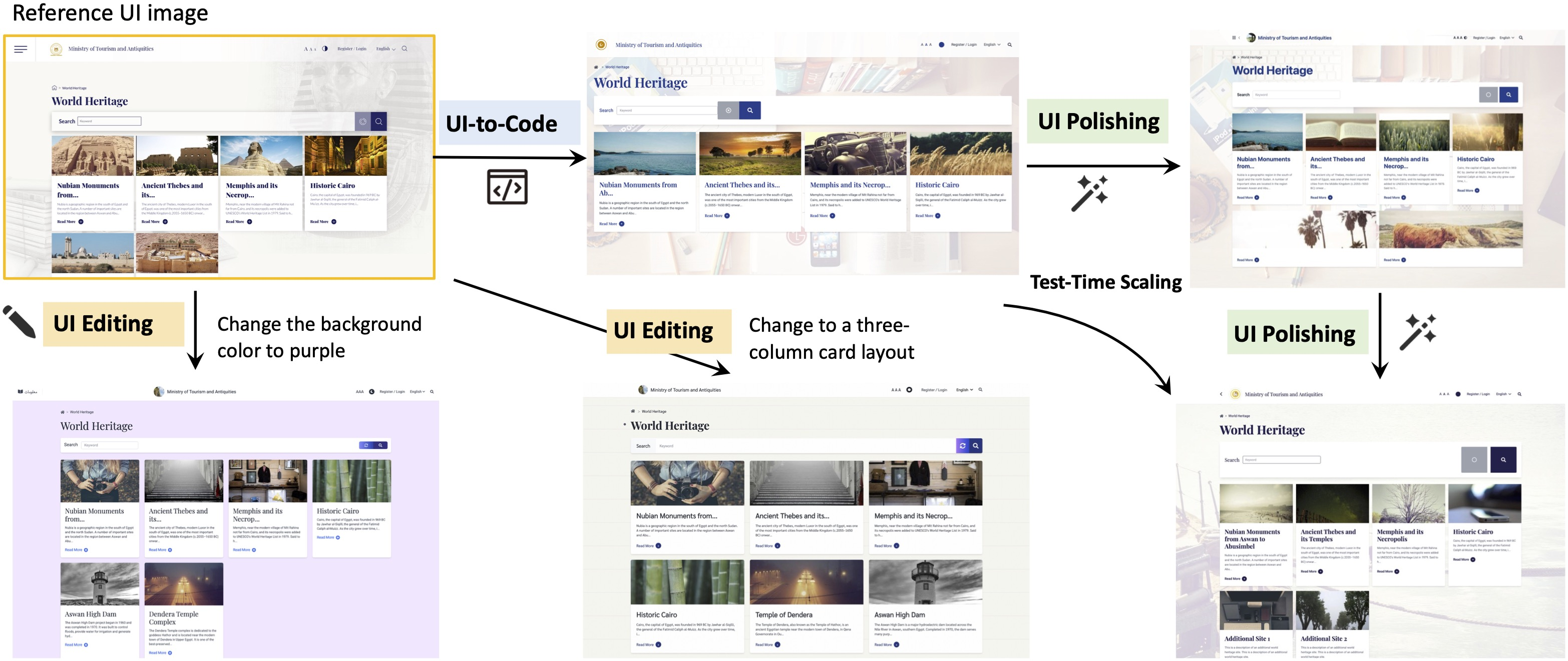

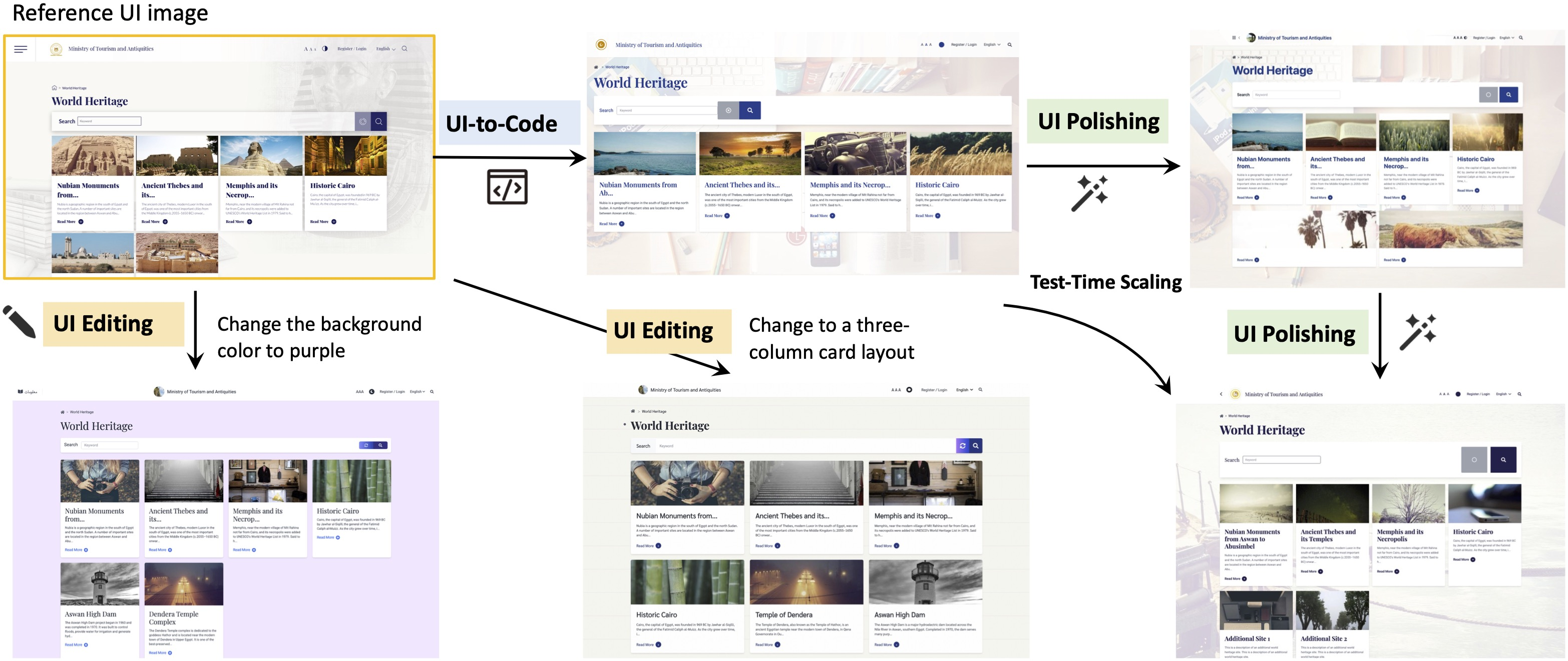

Most prior UI-to-code models treat the task as one-shot image-to-code generation, which disregards the cyclical draft-refine process prevalent in front-end engineering. UI2CodeN instead formalizes a three-stage interactive framework:

- UI-to-Code: The model is conditioned on a reference UI image and produces initial code approximating the target layout and styling.

- UI Polishing: Iterative refinement of the code is performed, with each round consuming the previous code, its rendering, and the target reference. Multiple rounds allow systematic reduction of residual visual discrepancies, operationalizing test-time scaling.

- UI Editing: The model enables user-driven editing via targeted modification instructions, fostering collaborative design and facilitating incremental updates rather than complete regeneration.

Figure 2: Overview of the interactive UI-to-code workflow—generation, polishing, and editing—yielding continuous improvement and granular control.

Because the model can recursively improve its own output at inference time, additional test-time compute can be spent to raise fidelity, a capability that conventional one-shot approaches lack.
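The generate-then-polish cycle described above can be sketched as a short loop. This is a minimal illustration, not the authors' implementation: the callables `generate`, `polish`, `render`, and `similarity` are hypothetical stand-ins for the model calls, a browser renderer, and a visual scorer, and keeping the best-scoring round is an assumption made here for the sketch.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class PolishOutcome:
    code: str          # best code seen across all rounds
    score: float       # similarity of its rendering to the target
    rounds_run: int    # number of polishing rounds executed


def generate_and_polish(
    target: Any,
    generate: Callable[[Any], str],
    polish: Callable[[str, Any, Any], str],
    render: Callable[[str], Any],
    similarity: Callable[[Any, Any], float],
    max_rounds: int = 5,
) -> PolishOutcome:
    """Draft code from the target, then refine it for max_rounds rounds."""
    code = generate(target)                      # UI-to-code: initial draft
    best_code = code
    best_score = similarity(render(code), target)
    for _ in range(max_rounds):
        rendering = render(code)                 # rendering of the previous code
        # Each round sees the previous code, its rendering, and the target.
        code = polish(code, rendering, target)
        current = similarity(render(code), target)
        if current > best_score:                 # assumption: keep the best round
            best_code, best_score = code, current
    return PolishOutcome(best_code, best_score, max_rounds)


# Toy stand-ins: "images" are plain strings and rendering is the identity.
def toy_generate(target: str) -> str:
    return target[:1]


def toy_polish(code: str, rendering: str, target: str) -> str:
    return target[: len(code) + 1]               # fixes one more character per round


def toy_render(code: str) -> str:
    return code


def toy_similarity(rendering: str, target: str) -> float:
    matches = sum(a == b for a, b in zip(rendering, target))
    return matches / len(target)


outcome = generate_and_polish(
    "abc", toy_generate, toy_polish, toy_render, toy_similarity, max_rounds=3
)
```

In a real setting the number of rounds is the test-time scaling knob: more rounds cost more inference compute and, per the results reported below, tend to raise fidelity.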

Model Architecture and Multi-Stage Training

UI2CodeN employs a 9B-parameter transformer-based VLM architecture, initialized from a strong vision-language checkpoint (GLM-4.1V-9B-Base). The data pipeline and training specifics are as critical as the model design:

Continual Pre-Training

- Corpus: ∼10M real-world webpage–HTML pairs, crawled from Common Crawl–derived sources, complemented by high-fidelity synthetic datasets like WebCode2M and WebSight.

- Objective: Image–code grounding using GUI referring expression tasks, interleaving coding data with general vision-language objectives.

- Engineering: 20M samples, sequence length up to 32k tokens to accommodate long-form HTML/CSS, batch size 1536, and code corpora >1B tokens for foundational coding competence.
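To give a feel for the scale of these settings, the figures above can be turned into rough step and token counts. This is back-of-the-envelope arithmetic only; it assumes a single pass over the 20M samples and that "32k" means 32,768 tokens, neither of which is stated in the source.

```python
import math

samples = 20_000_000          # from the text
batch_size = 1536             # from the text
max_seq_len = 32 * 1024       # assumption: "32k" = 32,768 tokens

# Optimizer steps needed to see every sample once (assumed single pass).
steps_one_pass = math.ceil(samples / batch_size)

# Upper bound on tokens per step if every sequence were at maximum length.
max_tokens_per_step = batch_size * max_seq_len

print(steps_one_pass)         # 13021
print(max_tokens_per_step)    # 50331648
```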

Supervised Fine-Tuning

- Data: 80k carefully constructed QA examples across UI-to-code, polishing, and editing, using a deep-reasoning output format with intermediate "think" steps for robust, transparent generation.

- Engineering: Code samples predominantly generated and validated using strong LLMs to maximize diversity and correctness; 5 epochs, batch size 256.

Reinforcement Learning (RL)

- Tasks: Joint RL for UI-to-code and UI polishing.

- Reward: Human-aligned, VLM-based visual similarity scores (GLM-4.5V as verifier), using a round-robin comparator design for consistent and calibrated multi-candidate ranking. CLIP-based rewards were empirically found to underperform in both sensitivity and human alignment.

- Corpus: 12K real webpages from Mind2Web, plus 30K synthetic; candidates diversified by code generation from multiple VLMs, including model checkpoints from UI2CodeN's own training.

- Ablations: The benefit of real-world data in RL and the necessity of robust visual reward signals were empirically verified.
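One plausible reading of the round-robin comparator is sketched below: every pair of candidates is compared once, and each candidate's reward is its average preference score across its matches. The source does not specify the aggregation rule, so the win-rate averaging, the `prefer` callable (a hypothetical wrapper around the VLM verifier), and the neutral 0.5 reward for a lone candidate are all assumptions.

```python
from itertools import combinations
from typing import Callable, List, Sequence


def round_robin_rewards(
    candidates: Sequence[str],
    prefer: Callable[[str, str], float],
) -> List[float]:
    """Return one reward in [0, 1] per candidate.

    prefer(a, b) is assumed to return a value in [0, 1]: the degree to
    which a's rendering is judged closer to the target than b's.
    """
    n = len(candidates)
    if n < 2:
        return [0.5] * n                  # assumption: neutral reward, nothing to compare
    totals = [0.0] * n
    for i, j in combinations(range(n), 2):
        p = prefer(candidates[i], candidates[j])
        totals[i] += p                    # credit for the first candidate
        totals[j] += 1.0 - p              # complementary credit for the second
    return [t / (n - 1) for t in totals]  # each candidate plays n - 1 matches


# Toy verifier: fidelity values are made up purely for the demonstration.
TOY_FIDELITY = {"draft_a": 0.2, "draft_b": 0.9, "draft_c": 0.5}


def toy_prefer(first: str, second: str) -> float:
    a, b = TOY_FIDELITY[first], TOY_FIDELITY[second]
    if a == b:
        return 0.5
    return 1.0 if a > b else 0.0


rewards = round_robin_rewards(list(TOY_FIDELITY), toy_prefer)
print(rewards)  # [0.0, 1.0, 0.5]
```

Scoring candidates relative to one another, rather than asking the verifier for an absolute score per candidate, is one way to obtain rewards that stay consistent within a group of rollouts.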

Experimental Results

UI2CodeN substantially outperforms all prior open-source VLMs and is competitive with closed-source models:

- UI-to-Code (Design2Code, Web2Code, Flame, UI2Code-Real): Scores range from 76 to 95, outperforming Qwen2.5-VL-72B and InternVL3-78B by large margins (e.g., more than 40 points on Design2Code). On real-world UIs, UI2CodeN surpasses open-source competitors by over 30 points.

- UI Polishing: All previous open-source baselines fail to exceed 50% accuracy, which makes them unreliable for iterative refinement in practice. UI2CodeN achieves 80.0% accuracy on real and 94.0% on synthetic polishing tasks, matching or surpassing Claude-4-Sonnet and Gemini-2.5-Pro.

Empirically, multiple test-time polishing rounds result in monotonic gains, with real-world UI fidelity improving up to 8 points over five rounds—a unique advantage enabled by the interactive paradigm.

Reward Design and Data Ablations

Experiments confirm that advanced reward schemes (VLM-based round-robin comparator) are necessary for effective RL in this setting; CLIP-based rewards induce suboptimal policies, and vanilla verifiers suffer from calibration drift.

The inclusion of real webpage data in RL further closes the sim-to-real gap: adding real-world samples increases accuracy on the real-world UI-to-code and UI polishing benchmarks by 5–15 points, especially for visually complex, uncurated web designs.

Practical and Theoretical Implications

UI2CodeN provides an actionable recipe for end-to-end visual-to-code systems:

- For Practitioners: The model’s interactive cycle—generation, polish, edit—aligns with existing Figma-to-code and real-world front-end workflows. It enables test-time quality-control loops, robust code adaptation, and integration with designer-specified edits, not achievable with one-shot LLM or VLM agents. Full code and models are open-sourced for reproducibility and customization.

- For Model Developers: The multi-stage learning paradigm demonstrates that (i) real-world data, even if noisy, is integral to pre-training; (ii) data curation and task diversity in SFT add critical robustness; (iii) RL with human-aligned visual verifiers unlocks performance unachievable by token-level objectives.

- Theoretically: The findings reinforce that high-fidelity visual code generation cannot be trivially reduced to standard vision–language problems—pixel-perfect code synthesis requires interactive learning and rewards that directly reflect perceived visual similarity.

Future Directions

The UI2CodeN paradigm invites several lines of further research:

- Scalability: Extension to even more complex, multi-view/multimodal UI scenarios (e.g., responsive, mobile-native layouts).

- Granular Editability: Incorporation of semantic edit instructions, style transfer, or higher-level program synthesis within the iterative loop.

- Verifier-Driven RL: The modular reward framework can be adapted for other domains where human-aligned, visually grounded RL is essential (e.g., graphic design, scientific visualization).

- Benchmarks and Evaluation: The design and release of real-world, unpruned, and visually diverse evaluation benchmarks will be crucial to tracking progress in this area.

Conclusion

UI2CodeN reframes visual-to-code generation as an interactive, iterative process and demonstrates that model design, training, and evaluation must all align with this workflow to approach human-level performance. Through a combination of staged data utilization, curriculum-inspired fine-tuning, and advanced RL reward mechanisms, the model achieves leading fidelity and editability on both synthetic and real-world UI tasks. The methods and released artifacts set a new foundation for research and application in automated UI coding systems.