ScreenCoder: Advancing Visual-to-Code Generation for Front-End Automation via Modular Multimodal Agents (2507.22827v1)

Abstract: Automating the transformation of user interface (UI) designs into front-end code holds significant promise for accelerating software development and democratizing design workflows. While recent large language models (LLMs) have demonstrated progress in text-to-code generation, many existing approaches rely solely on natural language prompts, limiting their effectiveness in capturing spatial layout and visual design intent. In contrast, UI development in practice is inherently multimodal, often starting from visual sketches or mockups. To address this gap, we introduce a modular multi-agent framework that performs UI-to-code generation in three interpretable stages: grounding, planning, and generation. The grounding agent uses a vision-language model (VLM) to detect and label UI components, the planning agent constructs a hierarchical layout using front-end engineering priors, and the generation agent produces HTML/CSS code via adaptive prompt-based synthesis. This design improves robustness, interpretability, and fidelity over end-to-end black-box methods. Furthermore, we extend the framework into a scalable data engine that automatically produces large-scale image-code pairs. Using these synthetic examples, we fine-tune and reinforce an open-source VLM, yielding notable gains in UI understanding and code quality. Extensive experiments demonstrate that our approach achieves state-of-the-art performance in layout accuracy, structural coherence, and code correctness. Our code is made publicly available at https://github.com/leigest519/ScreenCoder.

Summary

- The paper introduces a modular multi-agent framework that decomposes UI-to-code generation into grounding, planning, and generation stages.

- It demonstrates state-of-the-art performance with high block match, text similarity, and position alignment scores across diverse UI-image/code pairs.

- The system additionally functions as a scalable data engine for VLM training, employing dual-stage post-training to refine visual and semantic fidelity.

Modular Multimodal Agents for Visual-to-Code Generation: An Analysis of ScreenCoder

Introduction

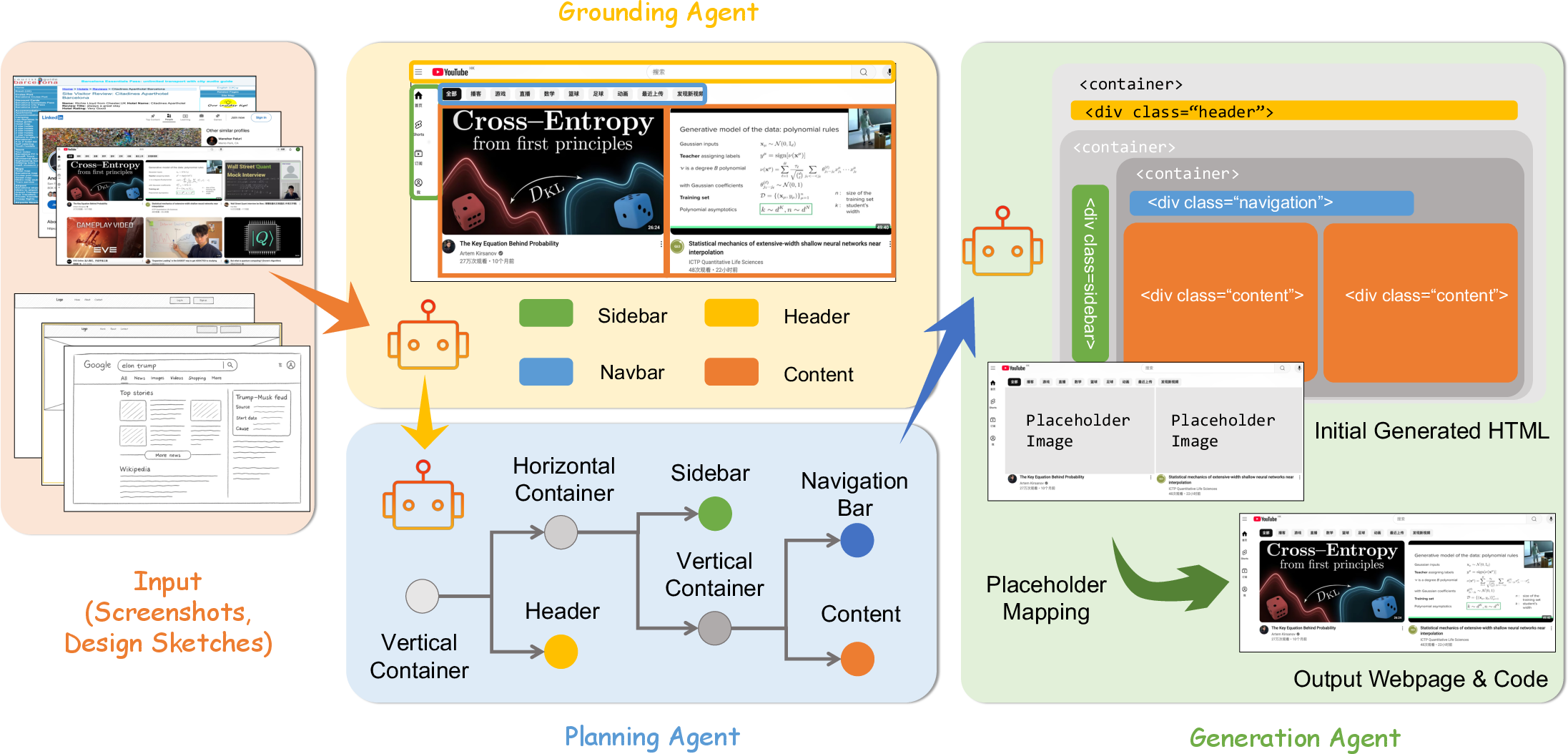

ScreenCoder introduces a modular, multi-agent framework for automating the transformation of user interface (UI) designs, specifically screenshots or design sketches, into executable front-end code. The approach is motivated by the limitations of existing vision-language models (VLMs) and large language models (LLMs) in UI-to-code tasks: when operated end to end as black boxes, they struggle to capture spatial layout, visual design intent, and domain-specific engineering priors. ScreenCoder addresses these challenges by decomposing the problem into three interpretable, sequential stages: grounding, planning, and generation. This essay provides a technical analysis of the ScreenCoder architecture, its empirical performance, and its implications for the future of multimodal program synthesis and front-end automation.

Modular Multi-Agent Architecture

ScreenCoder’s architecture is characterized by a strict modularization of the UI-to-code pipeline, with each agent specializing in a distinct sub-task:

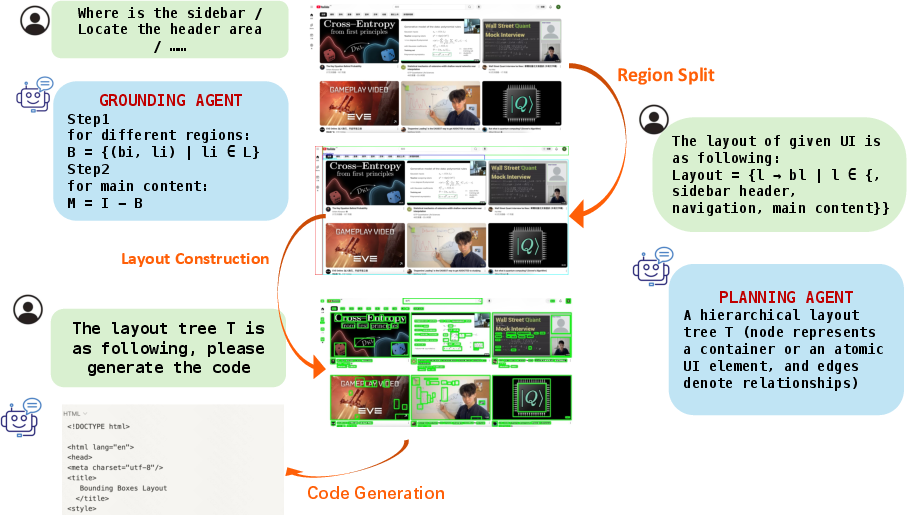

- Grounding Agent: Utilizes a VLM to detect and semantically label key UI components (e.g., header, navbar, sidebar, content) from input images. The agent is prompt-driven, allowing extensibility to new UI elements and supporting interactive, language-driven design modifications.

- Planning Agent: Constructs a hierarchical layout tree from the grounded components, leveraging domain knowledge of web layout systems (notably CSS Grid). The agent applies spatial heuristics and compositional rules to organize components, producing a normalized, interpretable layout specification.

- Generation Agent: Synthesizes HTML/CSS code by traversing the layout tree and generating code for each component via adaptive, context-aware prompts to an LLM. The agent supports user instructions for interactive design and ensures semantic and structural consistency in the output.

Figure 1: Overview of ScreenCoder’s modular pipeline, illustrating the sequential roles of grounding, planning, and generation agents in transforming UI screenshots into front-end code.

This modular decomposition enables explicit reasoning over UI structure, facilitates the injection of front-end engineering priors, and provides interpretable intermediate representations, in contrast to monolithic VLM-based approaches.
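The three stages and their intermediate representations can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the names `Component`, `LayoutNode`, `ground`, `plan`, and `generate` are hypothetical, the grounding step returns hard-coded boxes instead of querying a VLM, and the generator emits percentage-based absolute positioning as a simplification rather than the CSS Grid layout and per-component LLM prompts described above.

```python
from __future__ import annotations
from dataclasses import dataclass, field

BBox = tuple  # (x1, y1, x2, y2) in pixels


@dataclass
class Component:
    label: str   # semantic label, e.g. "header" or "sidebar"
    bbox: BBox


@dataclass
class LayoutNode:
    label: str
    bbox: BBox
    children: list = field(default_factory=list)


def ground(screenshot_path: str) -> list:
    """Hypothetical stand-in for the grounding agent (no VLM is called)."""
    return [
        Component("content", (240, 80, 1200, 800)),
        Component("header", (0, 0, 1200, 80)),
        Component("sidebar", (0, 80, 240, 800)),
    ]


def plan(components: list, width: int, height: int) -> LayoutNode:
    """Build a one-level layout tree, ordered top-to-bottom then left-to-right."""
    root = LayoutNode("root", (0, 0, width, height))
    for c in sorted(components, key=lambda c: (c.bbox[1], c.bbox[0])):
        root.children.append(LayoutNode(c.label, c.bbox))
    return root


def generate(root: LayoutNode) -> str:
    """Emit placeholder HTML; a real generation agent would prompt an LLM per node."""
    _, _, width, height = root.bbox
    rows = []
    for node in root.children:
        x1, y1, x2, y2 = node.bbox
        style = (
            f"position:absolute;left:{100 * x1 / width:.1f}%;"
            f"top:{100 * y1 / height:.1f}%;"
            f"width:{100 * (x2 - x1) / width:.1f}%;"
            f"height:{100 * (y2 - y1) / height:.1f}%"
        )
        rows.append(f'  <div class="{node.label}" style="{style}"></div>')
    return '<div class="root" style="position:relative">\n' + "\n".join(rows) + "\n</div>"


html = generate(plan(ground("screenshot.png"), 1200, 800))
```

Because each stage consumes and produces plain data, the list of components or the layout tree can be inspected or edited before the next stage runs.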

Component-Level Image Restoration

A notable extension in ScreenCoder is its component-level image restoration strategy. Real-world UIs often contain images (e.g., profile pictures, backgrounds) that are lost when the generated code substitutes generic placeholders, so ScreenCoder applies UI element detection (UIED) to the original screenshot. Detected image regions are aligned with placeholder regions in the generated code using affine transformations, then paired by bipartite matching (the Hungarian algorithm) with Complete IoU (CIoU) as the matching score. The final HTML code is post-processed to replace placeholders with high-fidelity image crops, improving both visual and semantic fidelity of the rendered UI.
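The matching step can be sketched as follows, assuming boxes are `(x1, y1, x2, y2)` tuples with positive area that already share one coordinate frame (the affine alignment is not shown). The `ciou` function follows the standard Complete IoU definition. The function names are hypothetical, and the assignment is found by exhaustive search, which yields the same optimal pairing as the Hungarian algorithm but is only practical for a handful of boxes; a dedicated solver such as `scipy.optimize.linear_sum_assignment` would be the usual choice at scale.

```python
import math
from itertools import permutations


def ciou(a, b):
    """Complete IoU: IoU minus a centre-distance term and an aspect-ratio term."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    aw, ah = ax2 - ax1, ay2 - ay1
    bw, bh = bx2 - bx1, by2 - by1

    # Plain IoU
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    union = aw * ah + bw * bh - inter
    iou = inter / union

    # Squared centre distance, normalised by the squared diagonal
    # of the smallest box enclosing both inputs
    rho2 = ((ax1 + ax2) / 2 - (bx1 + bx2) / 2) ** 2 + ((ay1 + ay2) / 2 - (by1 + by2) / 2) ** 2
    c2 = (max(ax2, bx2) - min(ax1, bx1)) ** 2 + (max(ay2, by2) - min(ay1, by1)) ** 2

    # Aspect-ratio consistency term
    v = (4 / math.pi ** 2) * (math.atan(bw / bh) - math.atan(aw / ah)) ** 2
    denom = (1 - iou) + v
    alpha = v / denom if denom > 0 else 0.0
    return iou - rho2 / c2 - alpha * v


def match_placeholders(placeholders, detected):
    """Return {placeholder index: detected index} maximising total CIoU.

    Exhaustive search; assumes len(placeholders) <= len(detected).
    """
    best_score, best_perm = -math.inf, ()
    for perm in permutations(range(len(detected)), len(placeholders)):
        score = sum(ciou(placeholders[i], detected[j]) for i, j in enumerate(perm))
        if score > best_score:
            best_score, best_perm = score, perm
    return dict(enumerate(best_perm))


placeholders = [(0, 0, 100, 100), (200, 0, 300, 100)]
detected = [(205, 2, 298, 99), (1, 1, 99, 98)]
matching = match_placeholders(placeholders, detected)
```

Each matched pair then identifies which crop of the original screenshot replaces which placeholder in the generated HTML.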

Scalable Data Engine and Dual-Stage Post-Training

ScreenCoder is not only an inference-time system but also a scalable data engine for VLM training. The framework is used to generate large-scale, high-quality image-code pairs, which are then employed in a two-stage post-training pipeline for open-source VLMs:

- Supervised Fine-Tuning (SFT): The model is fine-tuned on the synthetic dataset using an autoregressive language modeling objective, aligning visual layout structure with code syntax.

- Reinforcement Learning (RL): The model is further optimized using Group Relative Policy Optimization (GRPO) with a composite reward function that integrates block match, text similarity, and position alignment metrics. This stage directly optimizes for visual and semantic fidelity in the generated code.
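The reinforcement-learning signal can be sketched in two parts: a composite reward per sampled output, and GRPO's group-relative advantage, which standardises each reward against the other samples drawn for the same input. The equal weights, the function names, and the example scores below are assumptions for illustration; the source does not state how the three metrics are weighted.

```python
from statistics import mean, pstdev


def composite_reward(block_match, text_sim, position, weights=(1 / 3, 1 / 3, 1 / 3)):
    """Weighted sum of the three metrics; equal weights are an assumption."""
    w_block, w_text, w_pos = weights
    return w_block * block_match + w_text * text_sim + w_pos * position


def group_relative_advantages(rewards, eps=1e-8):
    """Standardise rewards within one group of samples for the same prompt."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Three hypothetical code samples generated for the same screenshot
rewards = [
    composite_reward(0.8, 0.9, 0.7),
    composite_reward(0.5, 0.6, 0.4),
    composite_reward(0.2, 0.3, 0.1),
]
advantages = group_relative_advantages(rewards)
```

Samples that score above the group mean receive positive advantages and are reinforced; those below the mean are penalised, so no separate value network is needed to provide a baseline.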

Empirical Evaluation

ScreenCoder is evaluated on a large-scale, diverse dataset of 50,000 UI-image/code pairs and a curated benchmark of 3,000 real-world UI-image/code pairs. The evaluation protocol includes both high-level (CLIP-based visual similarity) and low-level (block match, text similarity, position alignment, color consistency) metrics, following the Design2Code standard.
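To give a feel for the low-level metrics, the sketch below shows one plausible formulation of text similarity and position alignment for a pair of already-matched blocks. These definitions are illustrative assumptions (a character-level Dice coefficient, and one minus the largest normalised centre offset); the exact formulas should be taken from the Design2Code benchmark itself, and the function names are hypothetical.

```python
from collections import Counter


def text_dice(a: str, b: str) -> float:
    """Character-level Dice coefficient: 2 * overlap / (len(a) + len(b))."""
    if not a and not b:
        return 1.0
    overlap = sum((Counter(a) & Counter(b)).values())
    return 2 * overlap / (len(a) + len(b))


def position_alignment(box_a, box_b, page_size) -> float:
    """1 minus the larger of the normalised x and y centre offsets."""
    width, height = page_size
    ax = (box_a[0] + box_a[2]) / 2 / width
    ay = (box_a[1] + box_a[3]) / 2 / height
    bx = (box_b[0] + box_b[2]) / 2 / width
    by = (box_b[1] + box_b[3]) / 2 / height
    return 1 - max(abs(ax - bx), abs(ay - by))
```

For example, a block shifted horizontally by 10% of the page width scores 0.9 on position alignment under this definition, while identical text scores 1.0 on text similarity.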

ScreenCoder demonstrates state-of-the-art performance across all metrics, outperforming both open-source and proprietary VLMs (e.g., GPT-4o, Gemini-2.5-Pro, LLaVA 1.6-7B, DeepSeek-VL-7B, Qwen2.5-VL, Seed1.5-VL). Notably, the agentic (modular) version achieves the highest block match (0.755), text similarity (0.946), and position alignment (0.840) scores, with competitive CLIP similarity (0.877). The fine-tuned VLM variant also shows strong results, validating the effectiveness of the data engine and dual-stage training.

Figure 2: Qualitative example of the UI-to-code pipeline, illustrating VLM-generated functional partitions, hierarchical layout tree, and the resulting front-end code.

Qualitative analysis confirms that ScreenCoder produces structurally coherent, visually faithful, and semantically accurate code, with robust handling of complex layouts and diverse component types.

Discussion and Implications

Interpretability and Human-in-the-Loop Design

The modular pipeline enables interpretable intermediate outputs (e.g., layout trees), supporting interactive design workflows and human-in-the-loop feedback. Designers can intervene at any stage—adjusting component labels, modifying layout trees, or re-prompting code generation—without restarting the entire process. This flexibility is absent in end-to-end VLM approaches.

Generality and Extensibility

While the current implementation targets web UIs, the architecture is readily extensible to other domains (e.g., mobile, desktop, game UIs) by adapting the grounding vocabulary and planning heuristics. The prompt-driven nature of the grounding agent facilitates rapid expansion to new component types and design paradigms.
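A prompt-driven grounding step makes this kind of extension largely a matter of swapping the label vocabulary, as the sketch below suggests. The prompt wording, the function name, and the mobile vocabulary are hypothetical; only the web labels (header, navbar, sidebar, content) come from the description above.

```python
WEB_VOCAB = ["header", "navbar", "sidebar", "content"]           # labels named in the text
MOBILE_VOCAB = ["status bar", "app bar", "tab bar", "content"]   # hypothetical example


def build_grounding_prompt(labels):
    """Compose a grounding instruction for a VLM from a label vocabulary."""
    listed = ", ".join(labels)
    return (
        f"Locate each of the following UI regions in the screenshot: {listed}. "
        "Return one bounding box per region as 'label: x1, y1, x2, y2' in pixels."
    )


web_prompt = build_grounding_prompt(WEB_VOCAB)
mobile_prompt = build_grounding_prompt(MOBILE_VOCAB)
```

The planning heuristics would still need adapting per domain, since a vocabulary change alone does not encode how the new regions nest or tile.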

Data Generation and Model Alignment

ScreenCoder’s data engine addresses the scarcity of high-quality, large-scale image-code datasets, a major bottleneck in VLM alignment for structured generation tasks. The dual-stage post-training pipeline demonstrates that synthetic data, when generated with structural and semantic fidelity, can substantially improve VLM performance on UI-to-code tasks.

Limitations and Future Directions

Despite strong empirical results, several challenges remain. Robustness to noisy or low-resolution screenshots, optimization of inference latency, and adaptation to highly dynamic or interactive UIs are open problems. Future work may explore real-time preview, editable intermediate representations, and continuous learning from user corrections in deployment environments.

Conclusion

ScreenCoder represents a significant advance in visual-to-code generation by introducing a modular, interpretable, and extensible multi-agent framework. Its decomposition of the UI-to-code task into grounding, planning, and adaptive code generation stages yields robust, high-fidelity front-end code synthesis and supports interactive, human-in-the-loop design. The system’s role as a scalable data engine further enables effective post-training of VLMs, closing the gap between visual understanding and structured code generation. ScreenCoder lays a strong foundation for future research in multimodal program synthesis, dataset-driven model alignment, and practical front-end automation.


Related Papers

- pix2code: Generating Code from a Graphical User Interface Screenshot (2017)

- Design2Code: Benchmarking Multimodal Code Generation for Automated Front-End Engineering (2024)

- UICoder: Finetuning Large Language Models to Generate User Interface Code through Automated Feedback (2024)

- Automatically Generating UI Code from Screenshot: A Divide-and-Conquer-Based Approach (2024)

- Web2Code: A Large-scale Webpage-to-Code Dataset and Evaluation Framework for Multimodal LLMs (2024)

- Sketch2Code: Evaluating Vision-Language Models for Interactive Web Design Prototyping (2024)

- CodeVisionary: An Agent-based Framework for Evaluating Large Language Models in Code Generation (2025)

- MLLM-Based UI2Code Automation Guided by UI Layout Information (2025)

- DesignCoder: Hierarchy-Aware and Self-Correcting UI Code Generation with Large Language Models (2025)

- LOCOFY Large Design Models -- Design to code conversion solution (2025)
