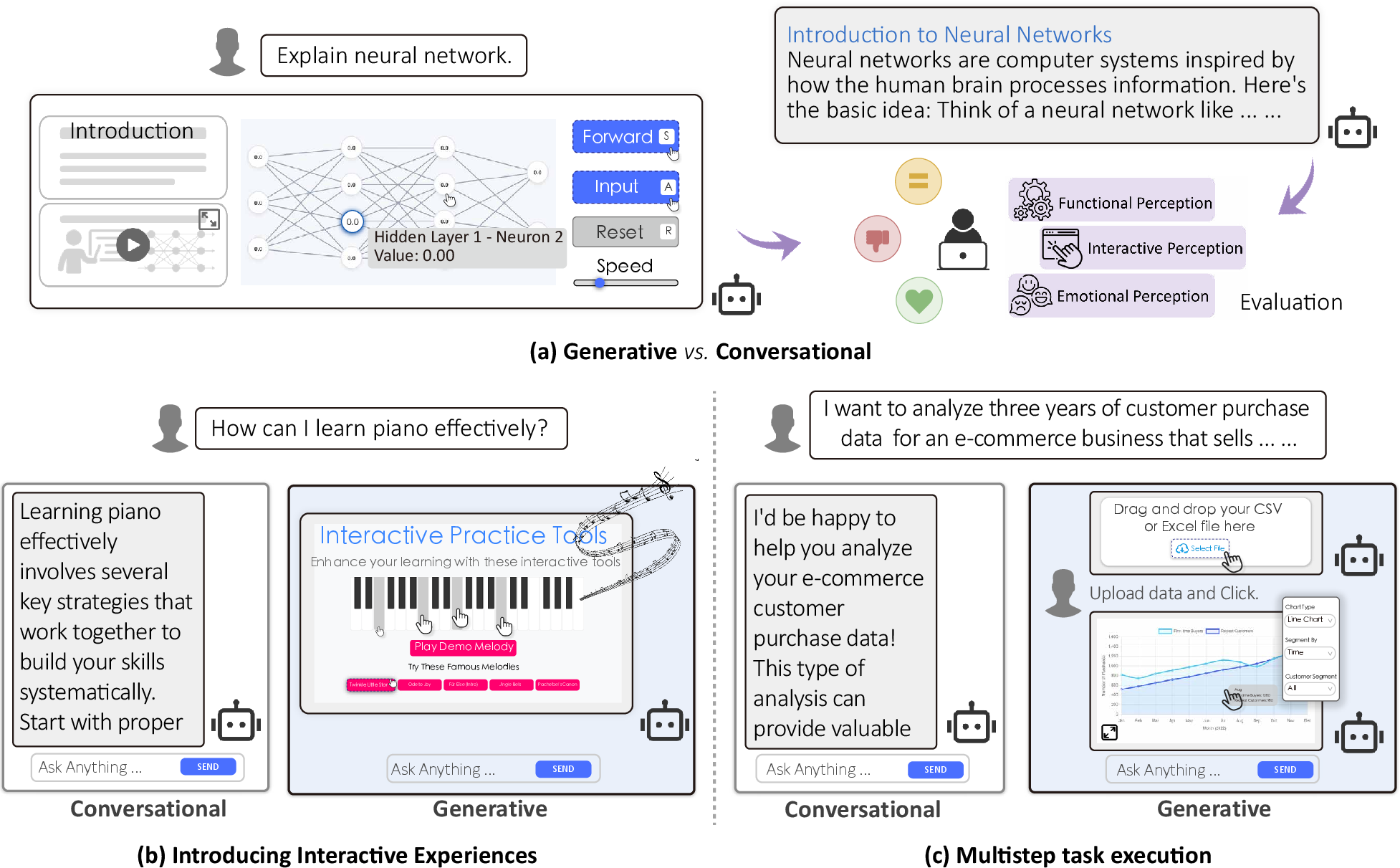

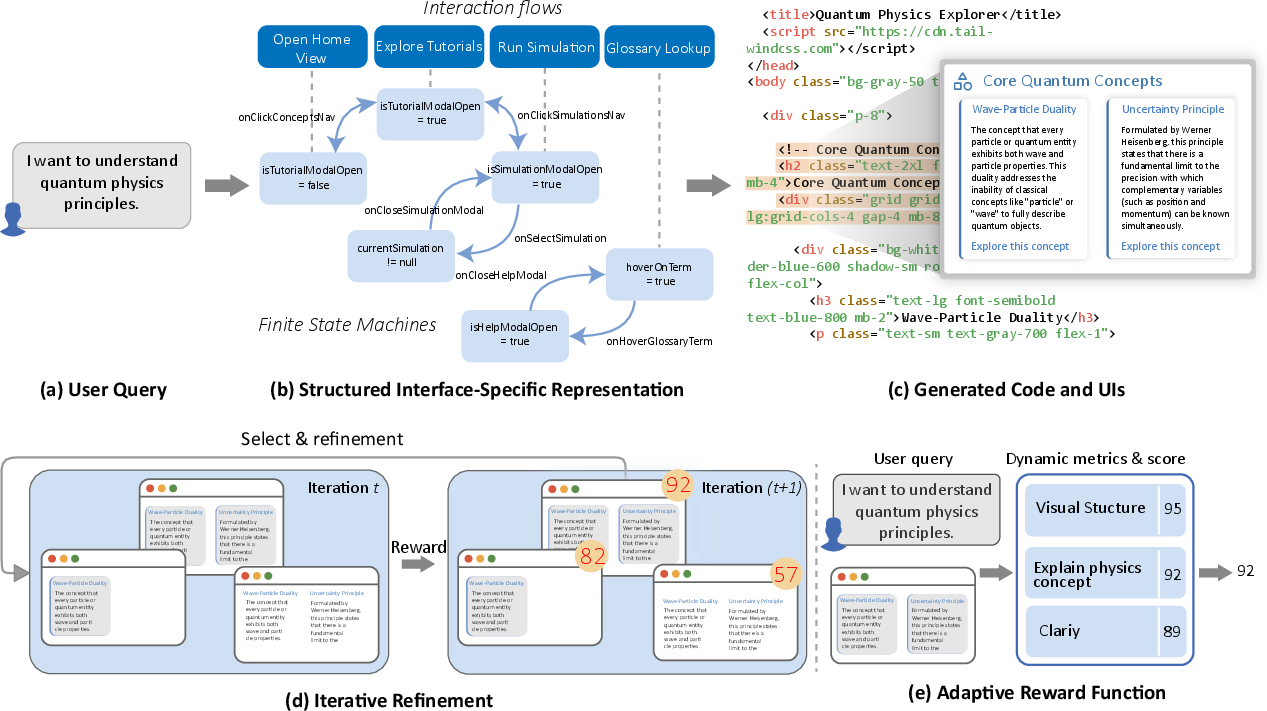

- The paper introduces a framework where LLMs translate user queries into structured representations to generate adaptive UIs.

- The methodology leverages iterative refinement, finite state machines, and adaptive rewards to improve performance and user interaction.

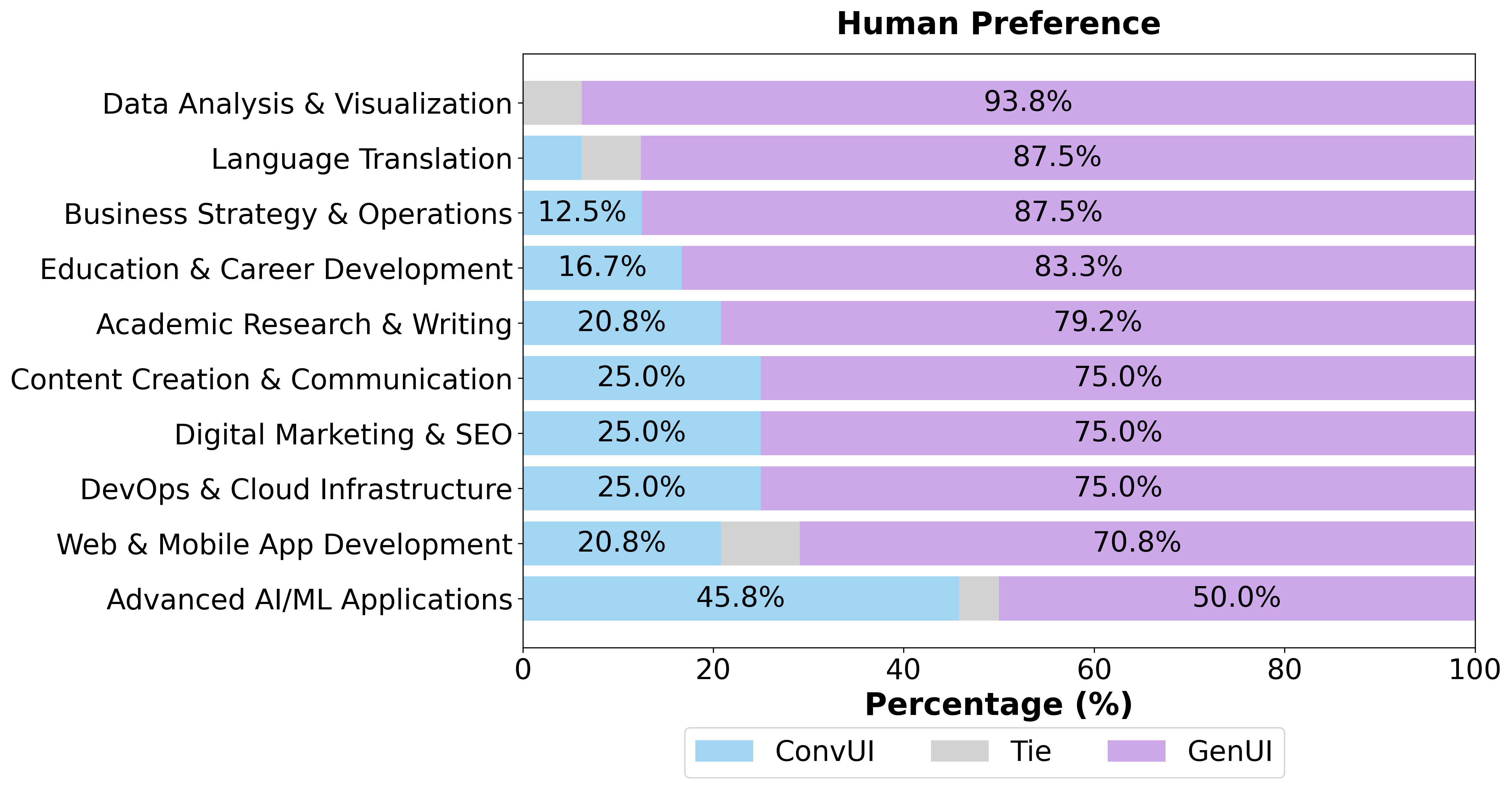

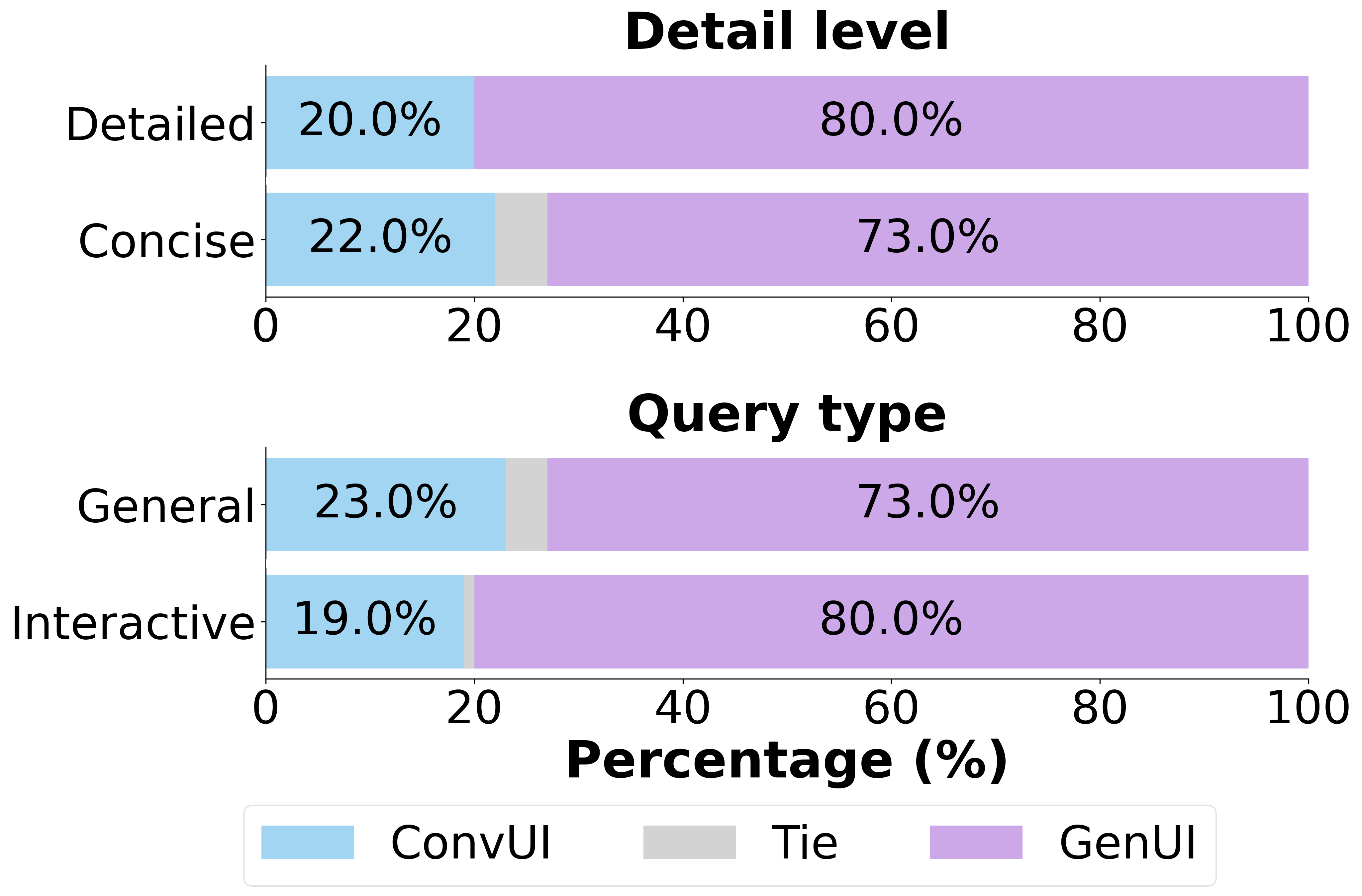

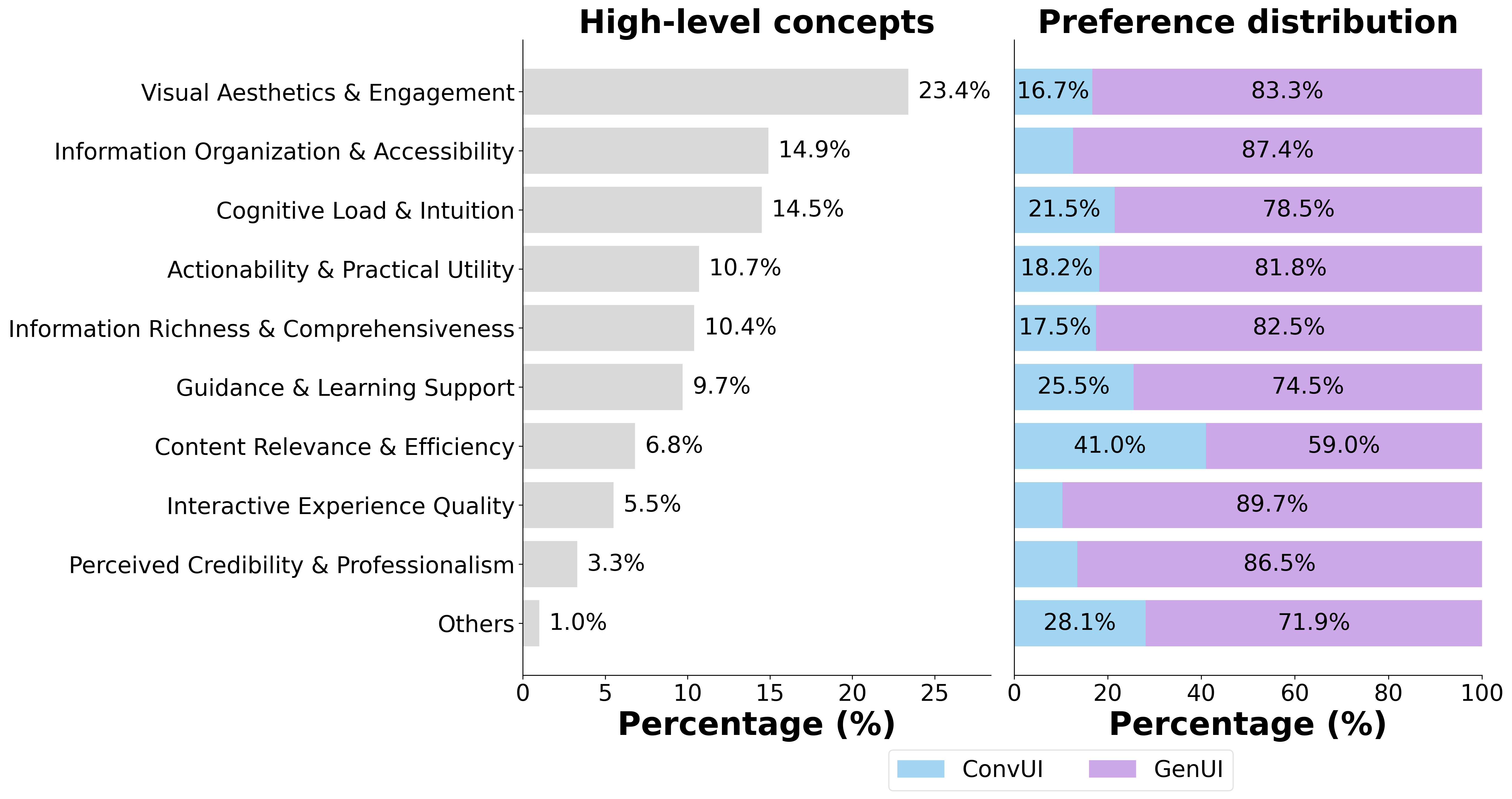

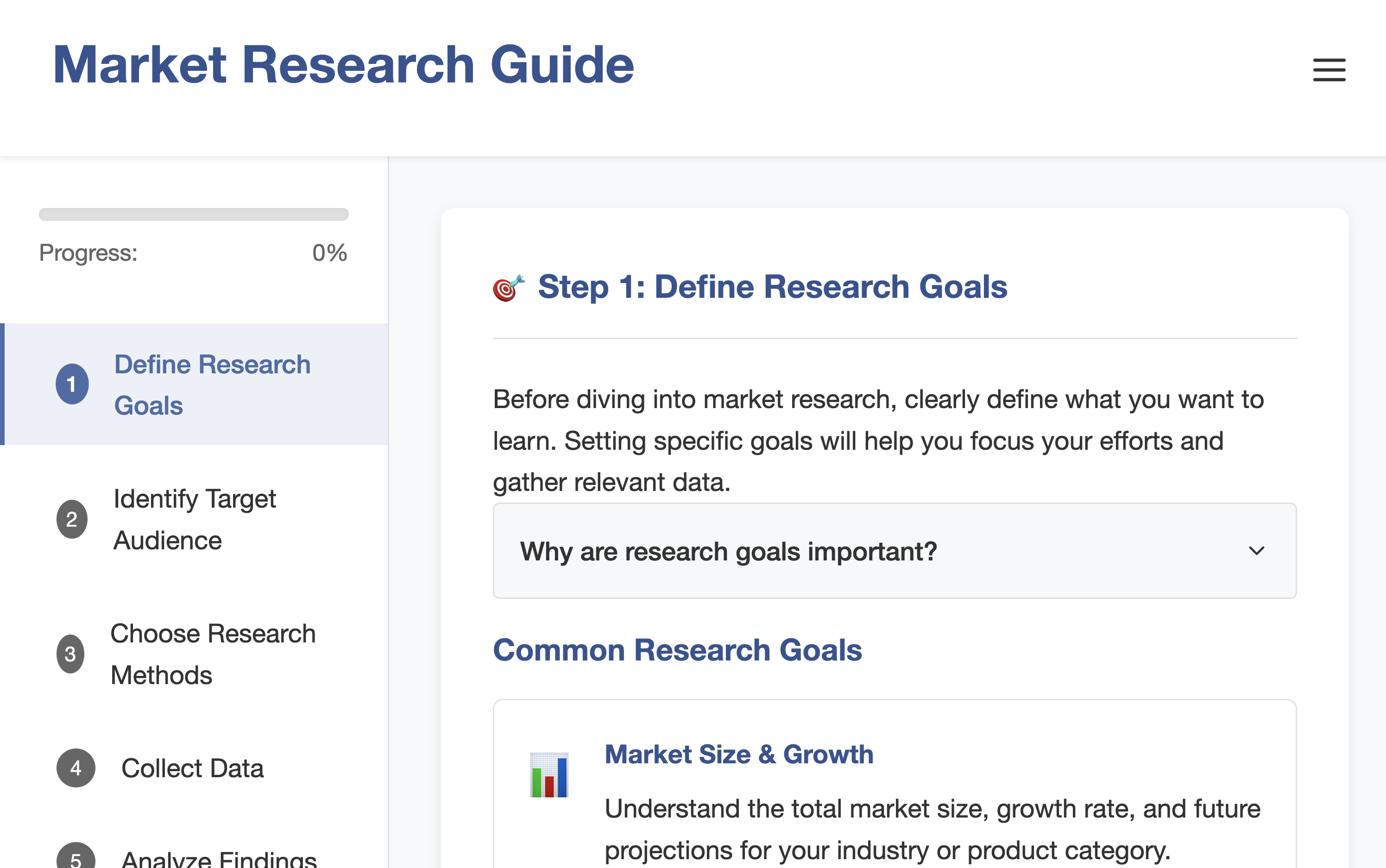

- Results show superior performance over traditional chat interfaces, with significant gains in usability, aesthetics, and cognitive offloading.

Generative Interfaces for LLMs: A Technical Analysis

Introduction and Motivation

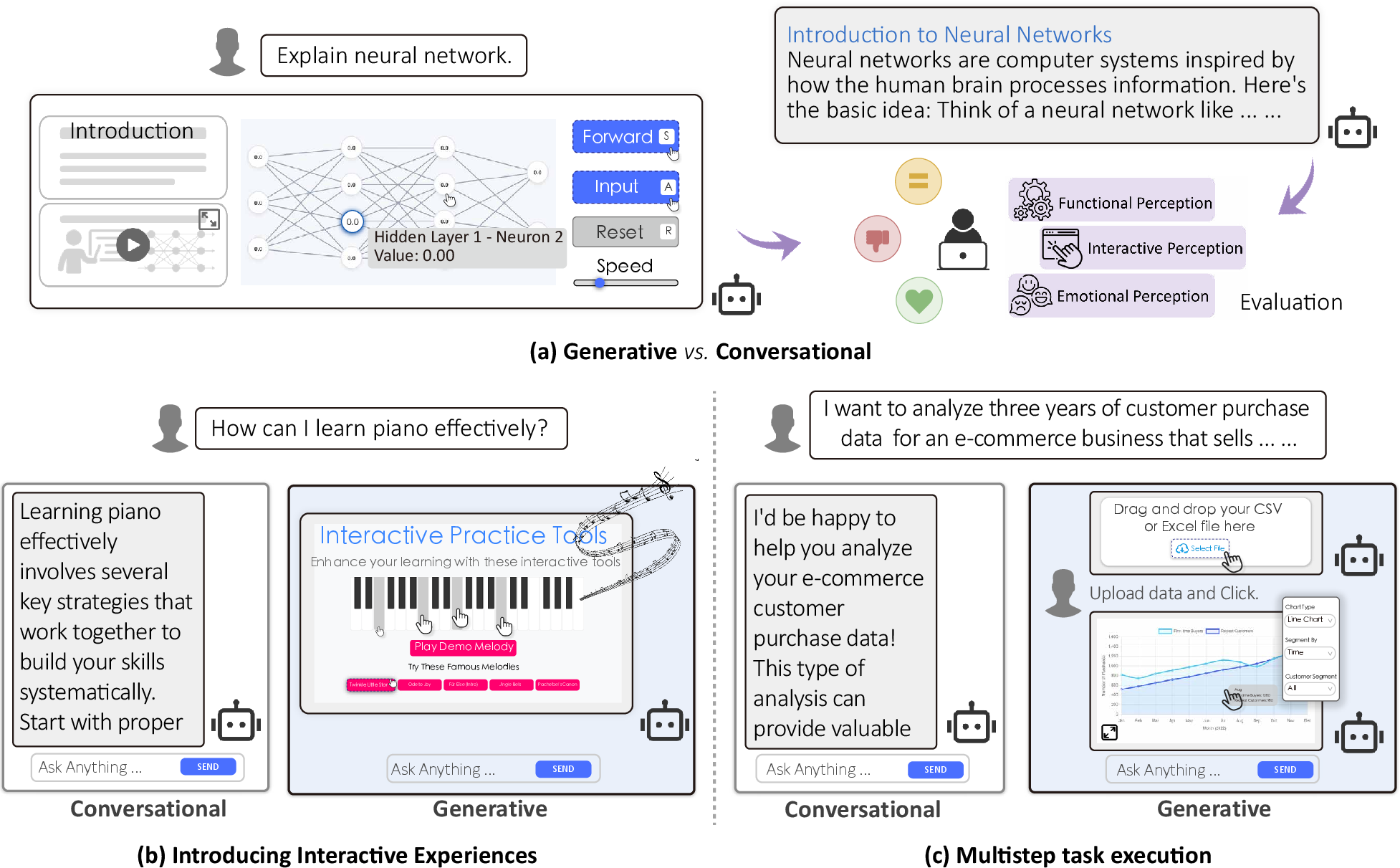

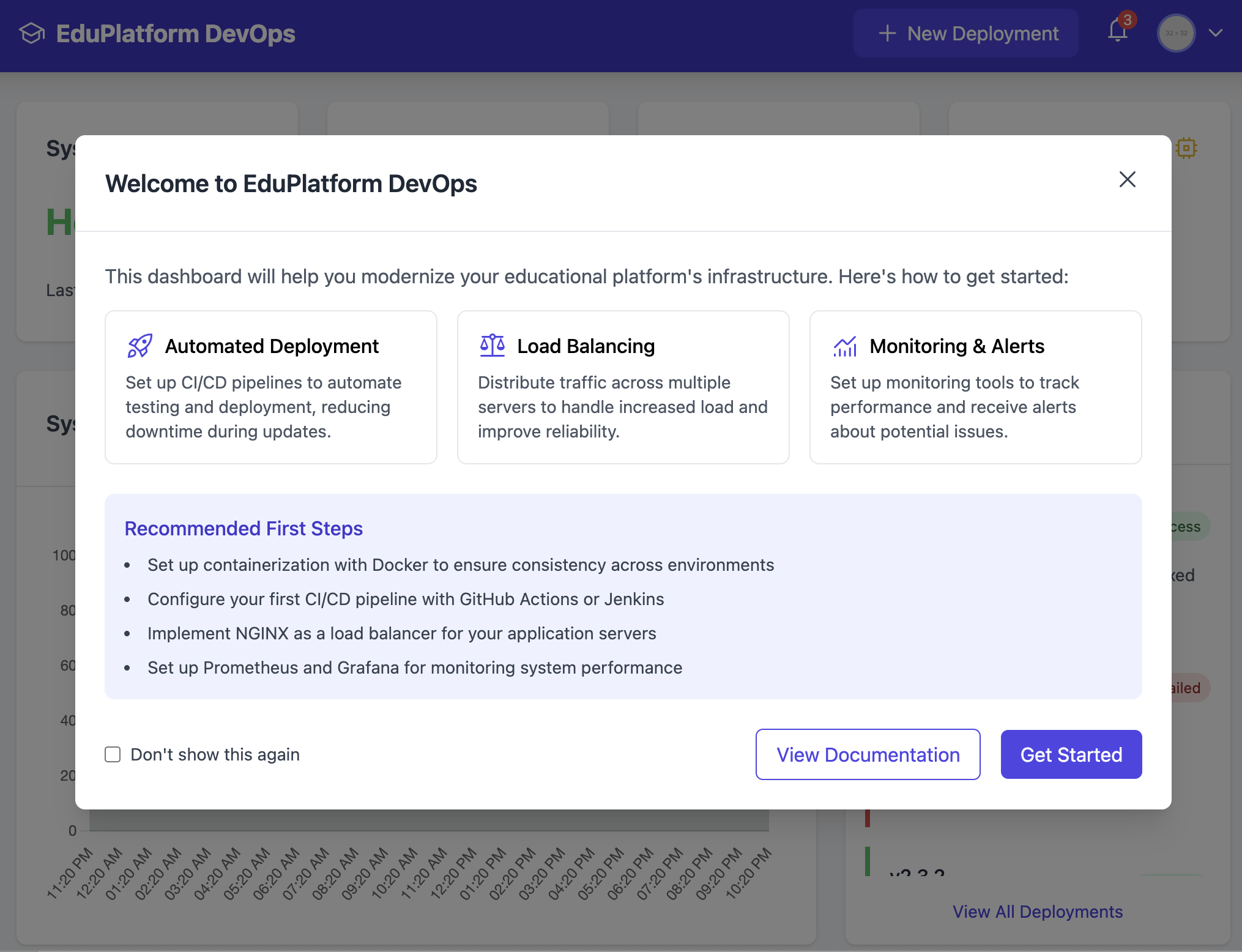

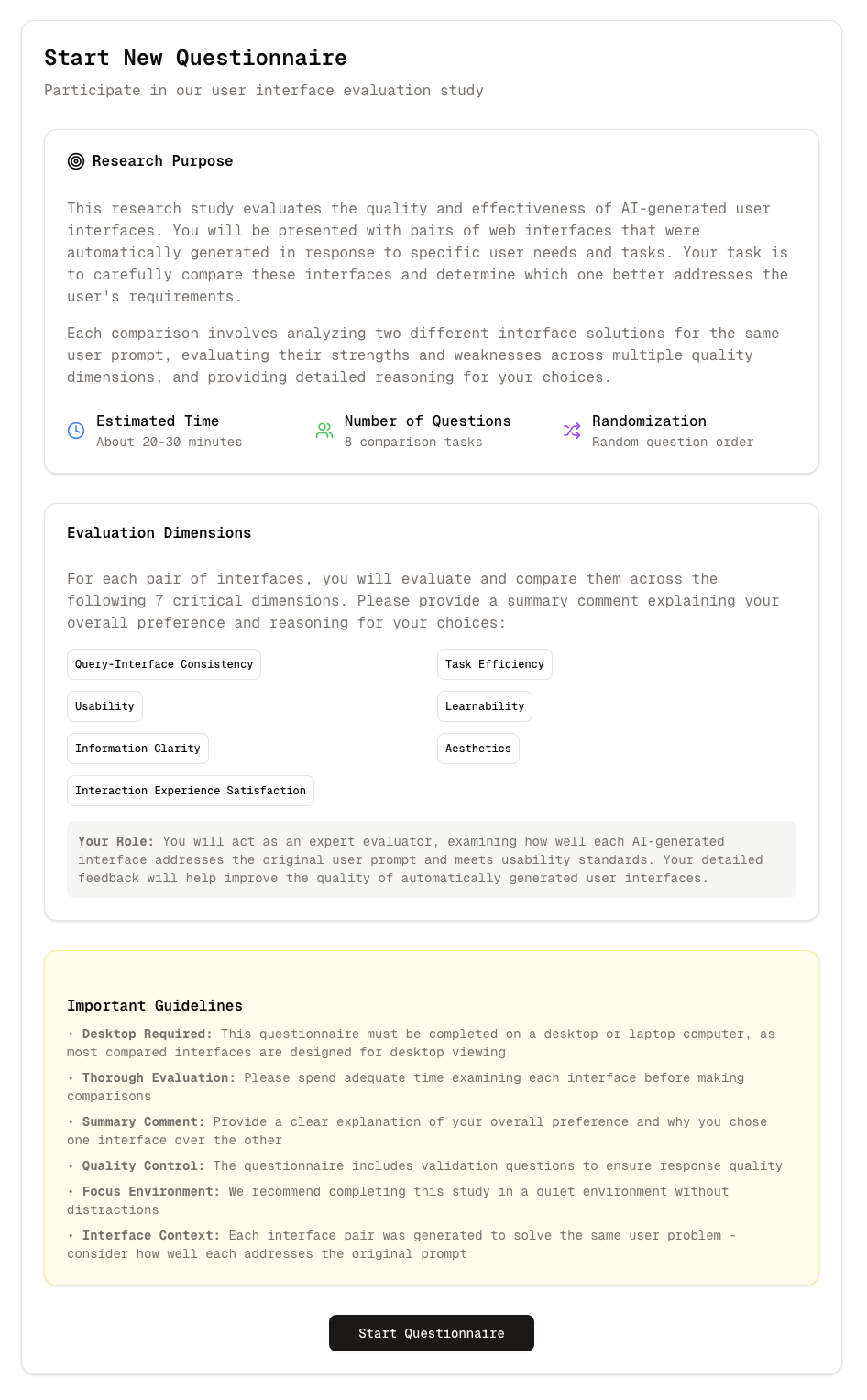

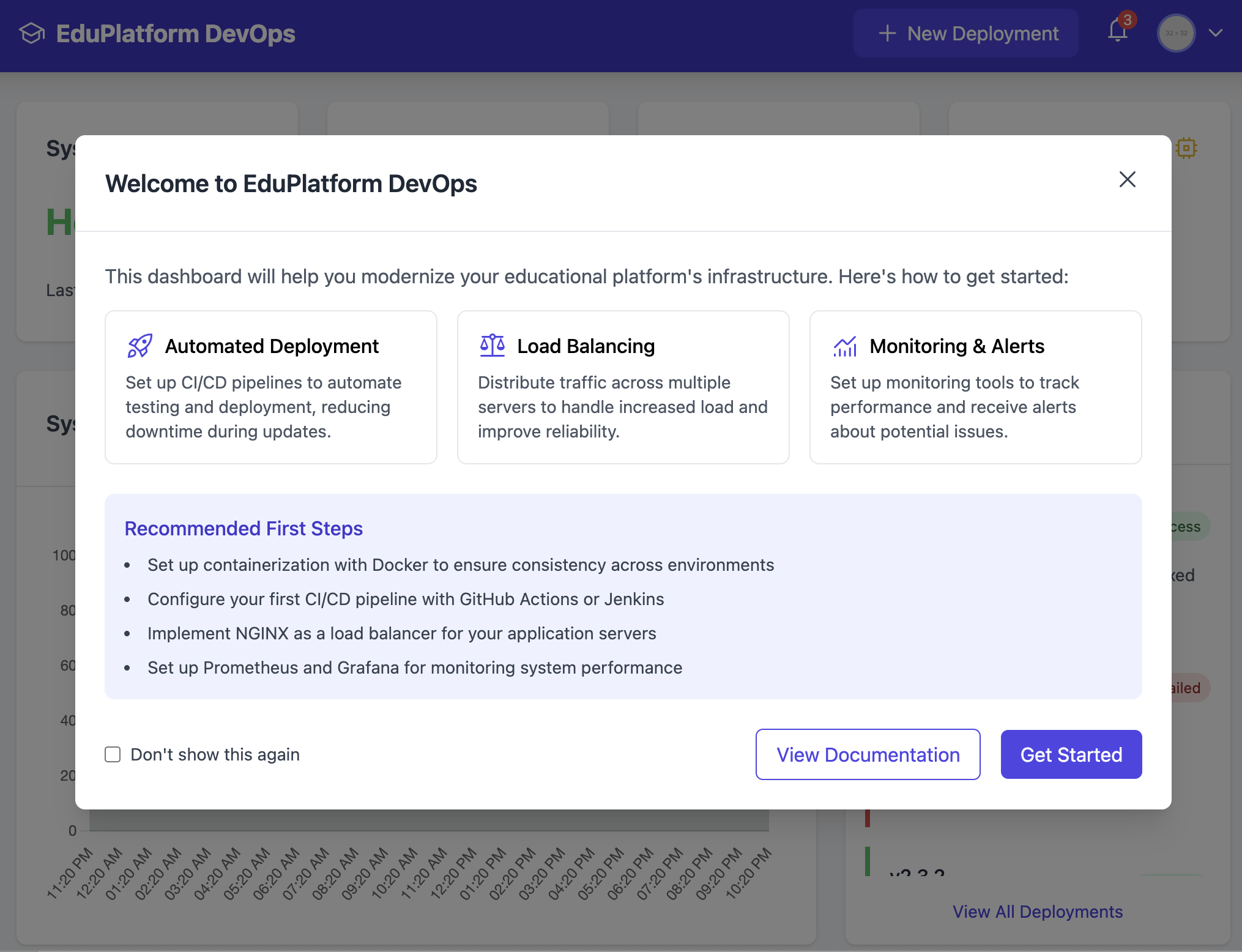

The paper "Generative Interfaces for LLMs" (arXiv:2508.19227) proposes replacing linear conversational UIs with systems in which LLMs proactively generate adaptive, task-specific user interfaces (UIs). The motivation is that static text responses are inefficient for multi-turn, information-dense, and exploratory tasks. The proposed framework uses structured representations and iterative refinement to synthesize UIs that the authors report outperform traditional chat-based approaches on functional, interactive, and emotional dimensions.

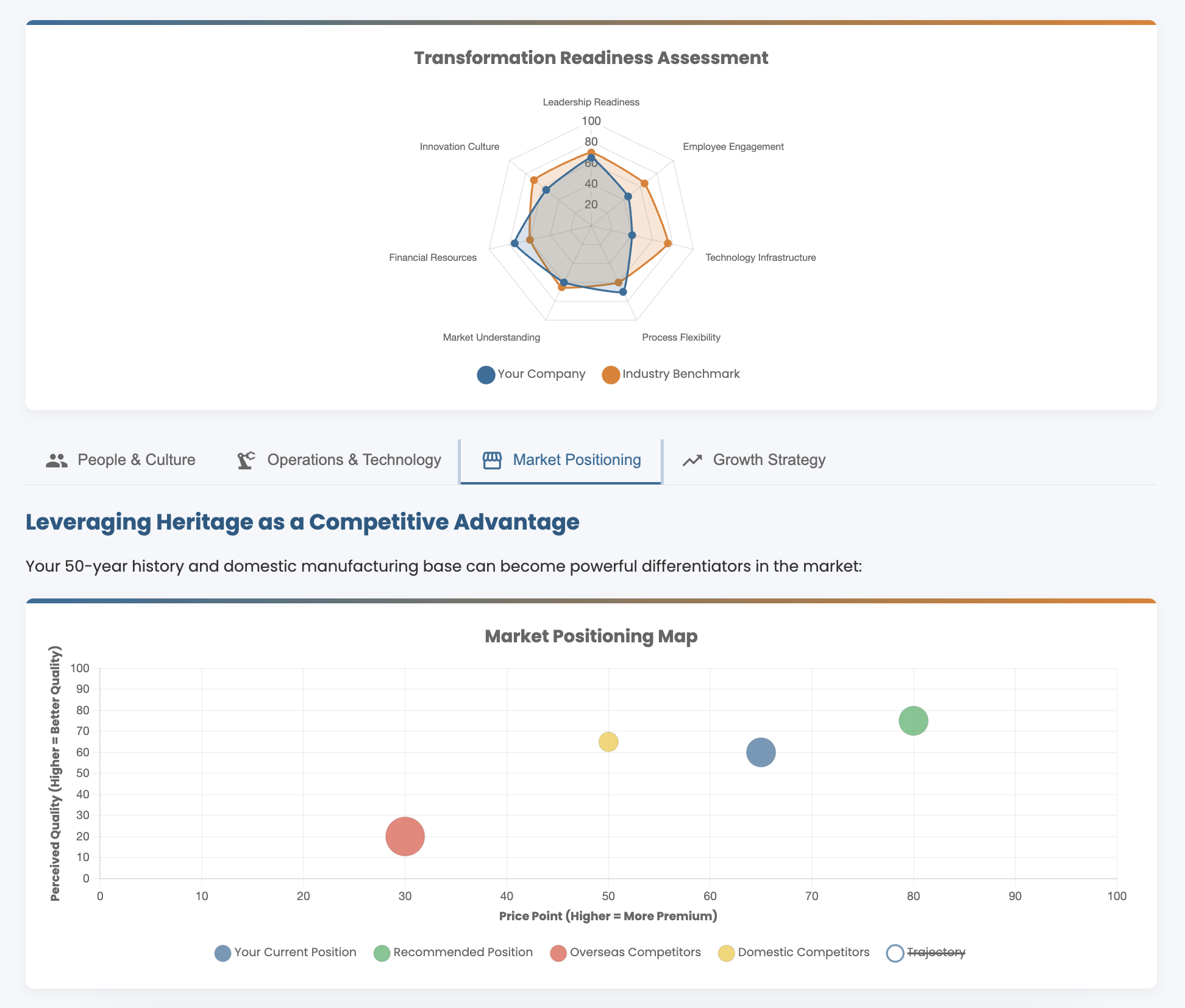

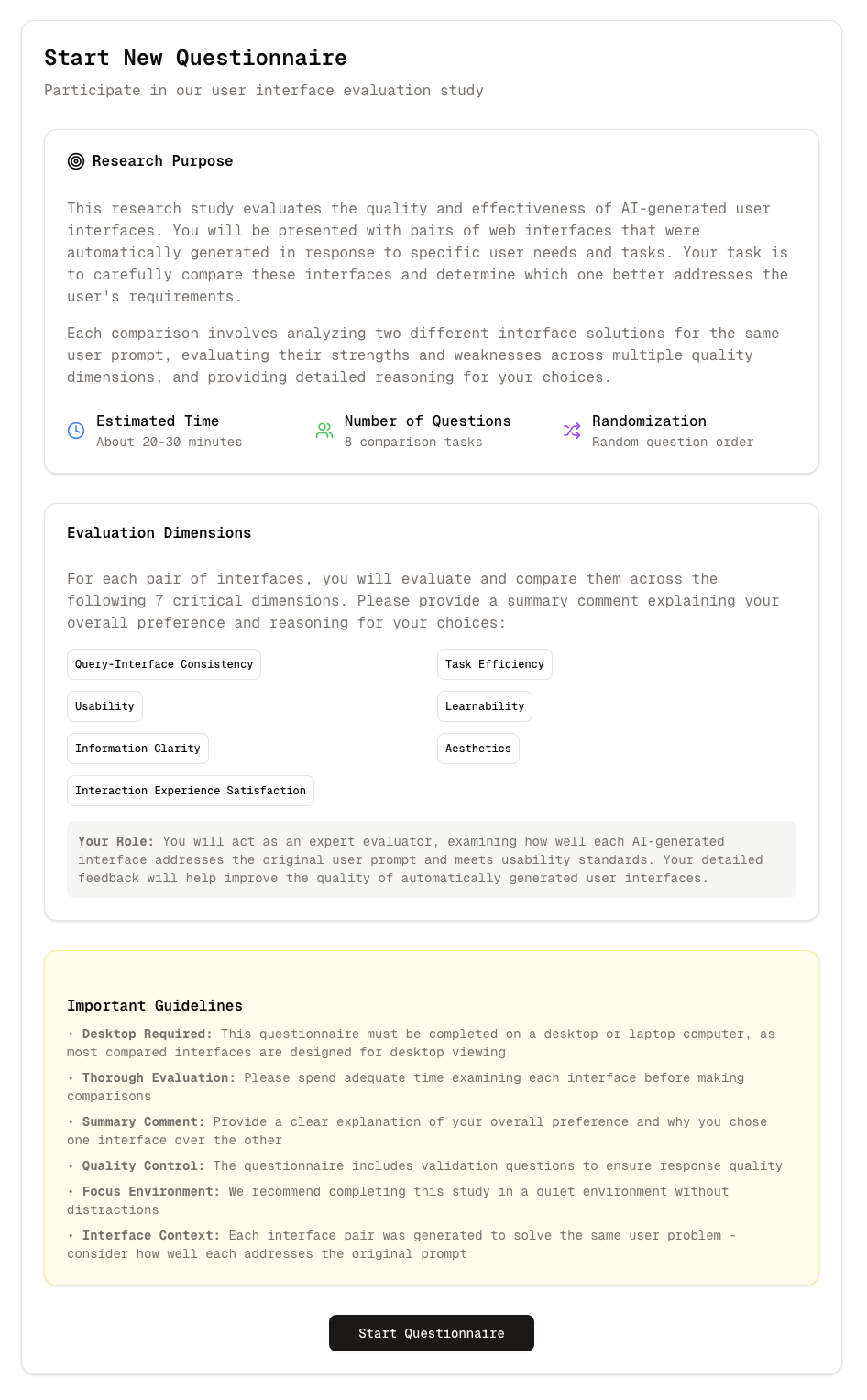

Figure 1: Generative Interfaces create structured, interactive experiences, transforming user queries into adaptive tools and workflows, outperforming conversational interfaces across functional, interactive, and emotional dimensions.

Technical Framework

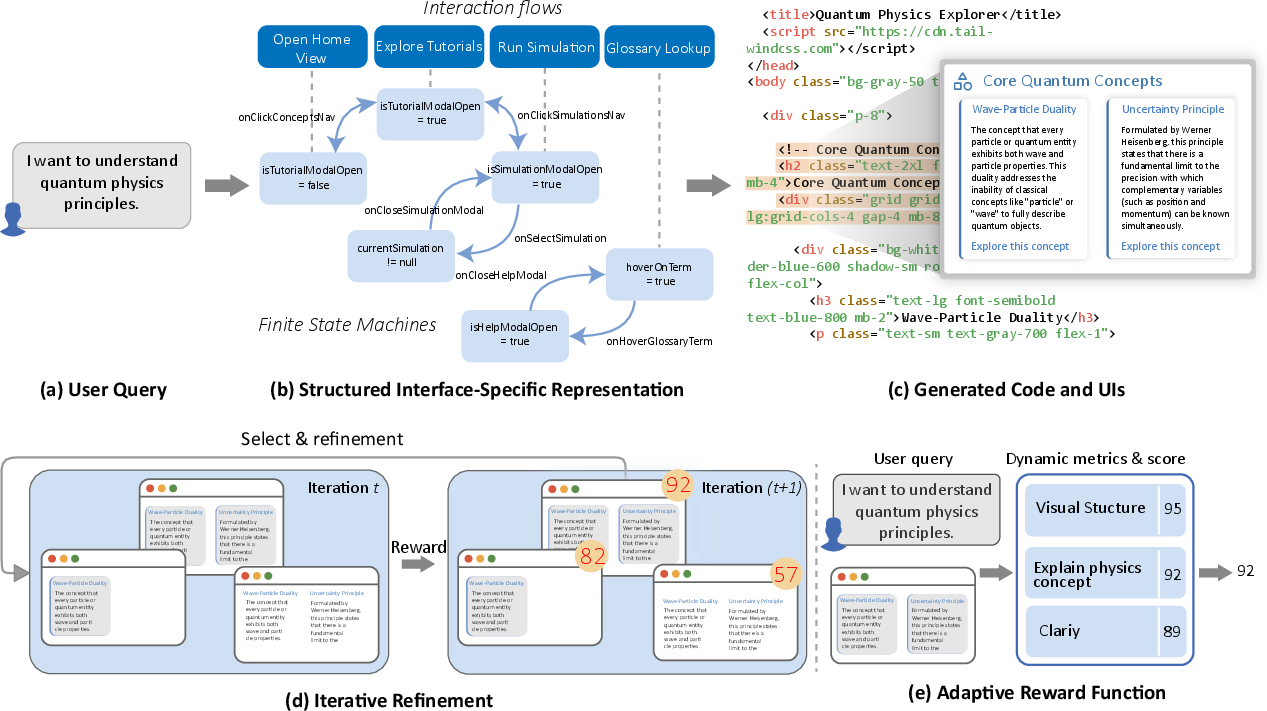

Structured Interface-Specific Representation

The core innovation is the translation of user queries into structured representations, which guide the UI generation process. This representation operates at two levels:

- Interaction Flows: Modeled as directed graphs G=(V,T), where nodes V represent interface views/subgoals and edges T denote transitions triggered by user actions.

- Finite State Machines (FSMs): Each UI component is described as M=(S,E,δ,s₀), where S is the set of states, E the set of user events, δ the transition function mapping a state and an event to the next state, and s₀ the initial state.

This dual abstraction enables modular, interpretable, and controllable UI synthesis, supporting complex interaction logic and stateful behaviors.
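As a rough illustration of the two levels, the following is a minimal Python sketch (3.9+) of a view-level graph and a component-level FSM. The class names, the accordion-panel example, and the choice to ignore undefined events are all assumptions made for illustration; the paper's actual representation format is not shown in this summary.

```python
from dataclasses import dataclass, field


@dataclass
class FSM:
    """Component-level machine M = (S, E, delta, s0). Hypothetical encoding."""
    states: set[str]
    events: set[str]
    delta: dict[tuple[str, str], str]  # (state, event) -> next state
    initial: str
    current: str = field(init=False)

    def __post_init__(self) -> None:
        self.current = self.initial

    def fire(self, event: str) -> str:
        """Apply one user event. Assumption: undefined pairs leave the state unchanged."""
        self.current = self.delta.get((self.current, event), self.current)
        return self.current


@dataclass
class InteractionFlow:
    """View-level graph G = (V, T). Edges are keyed by (view, user action)."""
    views: set[str]
    transitions: dict[tuple[str, str], str]  # (view, action) -> next view

    def next_view(self, view: str, action: str) -> str:
        """Follow an edge if one exists, otherwise stay on the current view."""
        return self.transitions.get((view, action), view)


# Hypothetical example: a collapsible panel inside a two-view flow.
panel = FSM(
    states={"collapsed", "expanded"},
    events={"click"},
    delta={
        ("collapsed", "click"): "expanded",
        ("expanded", "click"): "collapsed",
    },
    initial="collapsed",
)
flow = InteractionFlow(
    views={"overview", "detail"},
    transitions={
        ("overview", "select_item"): "detail",
        ("detail", "back"): "overview",
    },
)
```

Keeping the graph and the FSMs as separate objects mirrors the split described above: the flow decides which view is shown, while each component tracks its own local state.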

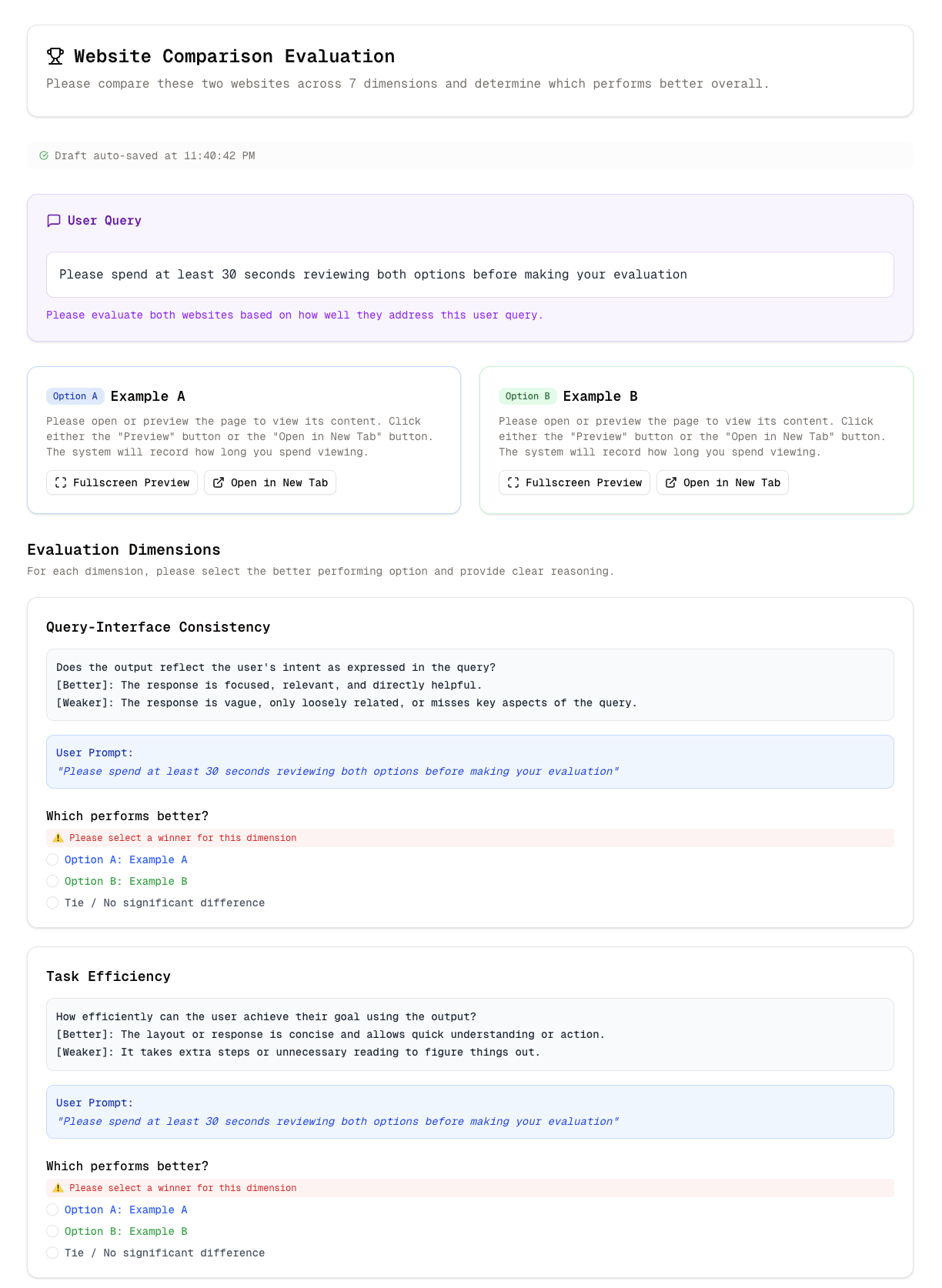

Generation Pipeline

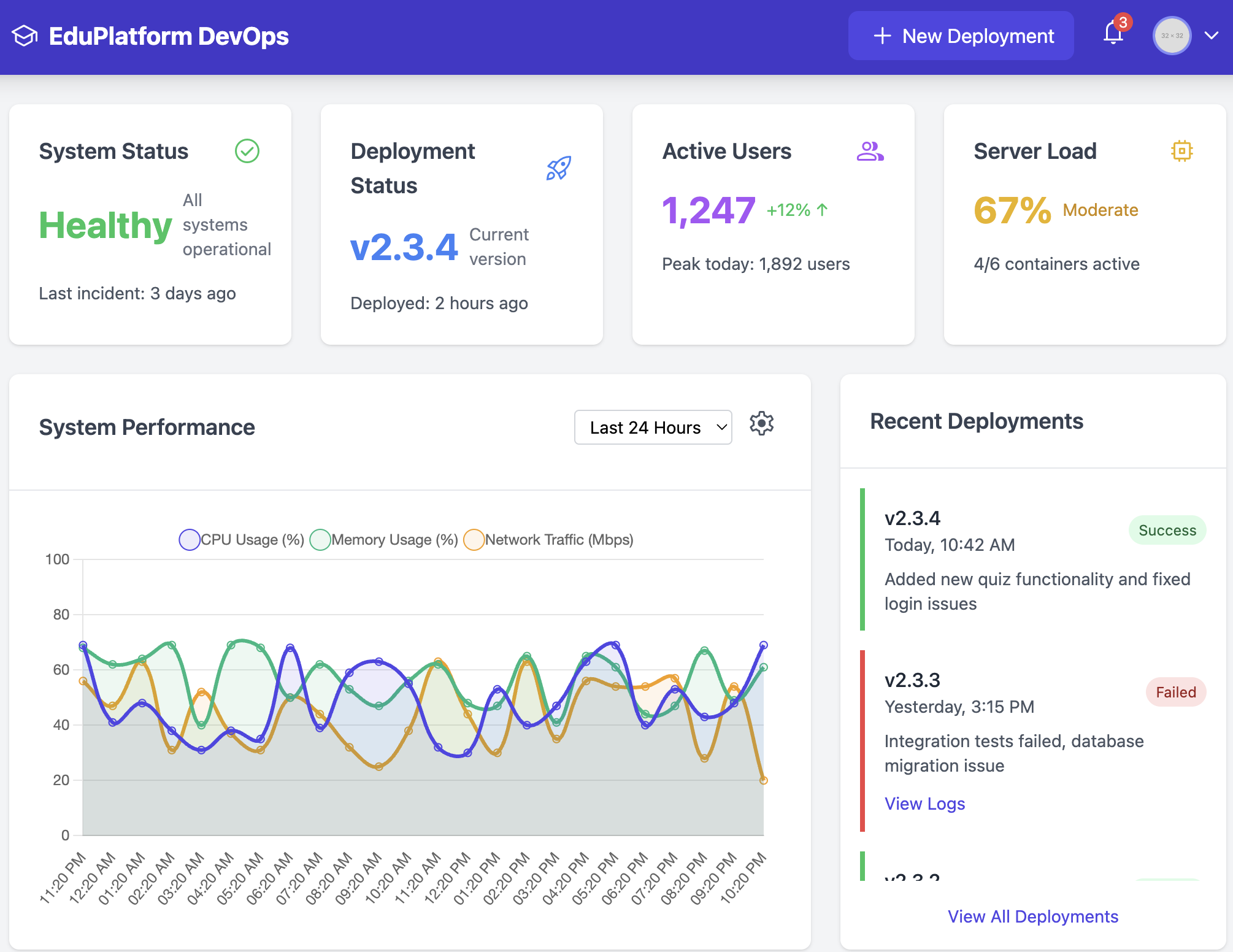

The pipeline consists of:

- Requirement Specification: Extraction of goals, features, components, and interaction styles from the user query.

- Structured Representation Generation: Construction of interaction flows and FSMs based on the specification.

- UI Code Synthesis: Leveraging a codebase of reusable components and web retrieval modules, the LLM generates executable HTML/CSS/JS code, rendering the interface.
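The three stages above can be sketched as a chain of model calls in which each stage's output feeds the next prompt. This is only a sketch under stated assumptions: the `LLM` callable type, the prompt wording, the `component_hints` parameter (standing in for the component codebase and retrieval modules), and the recording stub are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass
from typing import Callable

LLM = Callable[[str], str]  # hypothetical interface: prompt in, text out


@dataclass
class PipelineResult:
    specification: str
    representation: str
    code: str


def generate_interface(query: str, llm: LLM, component_hints: str = "") -> PipelineResult:
    """Run the three stages in order, passing each output into the next prompt."""
    # Stage 1: requirement specification.
    spec = llm(f"List goals, features, components, and interaction style for: {query}")
    # Stage 2: structured representation (interaction flows and FSMs).
    rep = llm(f"Write interaction flows and FSMs for this specification:\n{spec}")
    # Stage 3: UI code synthesis from the representation plus reusable components.
    code = llm(
        "Generate HTML/CSS/JS for this representation.\n"
        f"Representation:\n{rep}\nComponents:\n{component_hints}"
    )
    return PipelineResult(spec, rep, code)


# Stand-in model so the sketch runs offline: it records prompts and
# returns a numbered placeholder instead of real model output.
calls: list[str] = []


def stub_llm(prompt: str) -> str:
    calls.append(prompt)
    return f"output-{len(calls)}"


result = generate_interface("compare two laptops", stub_llm)
```

In a real system the stub would be replaced by an actual model client, and the final `code` string would be rendered in a browser sandbox.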

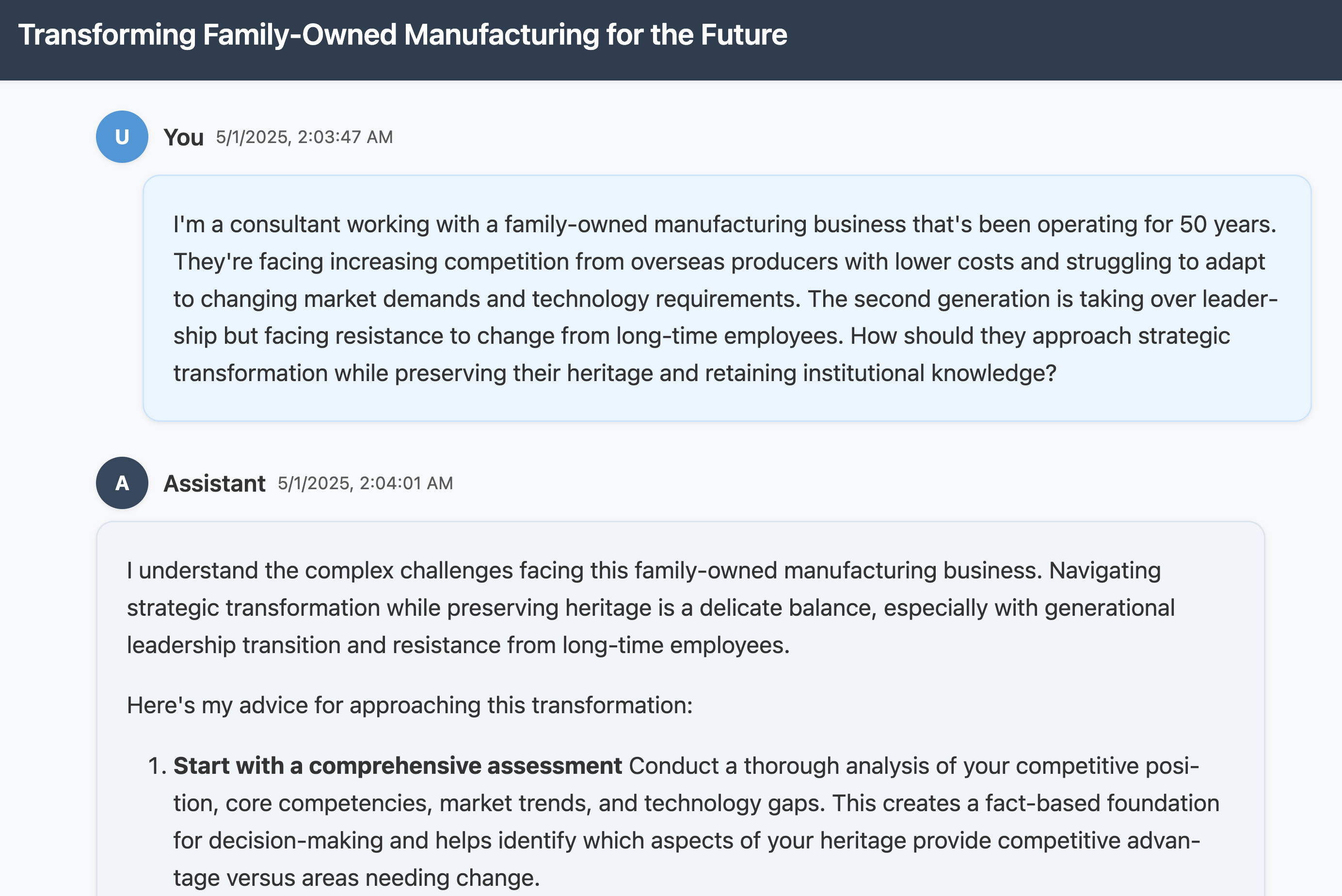

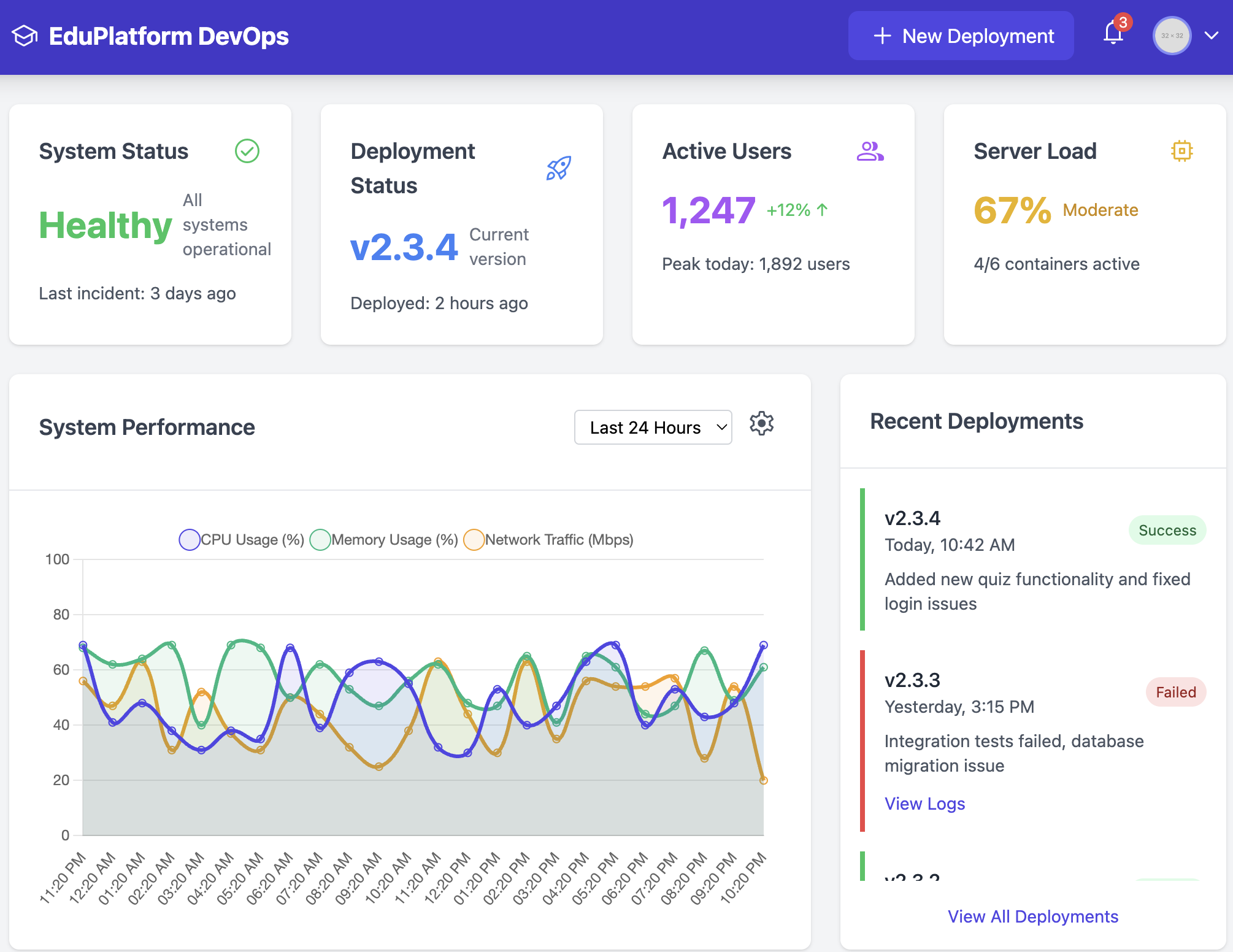

Figure 2: The infrastructure converts user queries into structured representations, which guide code generation and interface rendering, with iterative refinement and adaptive reward functions.

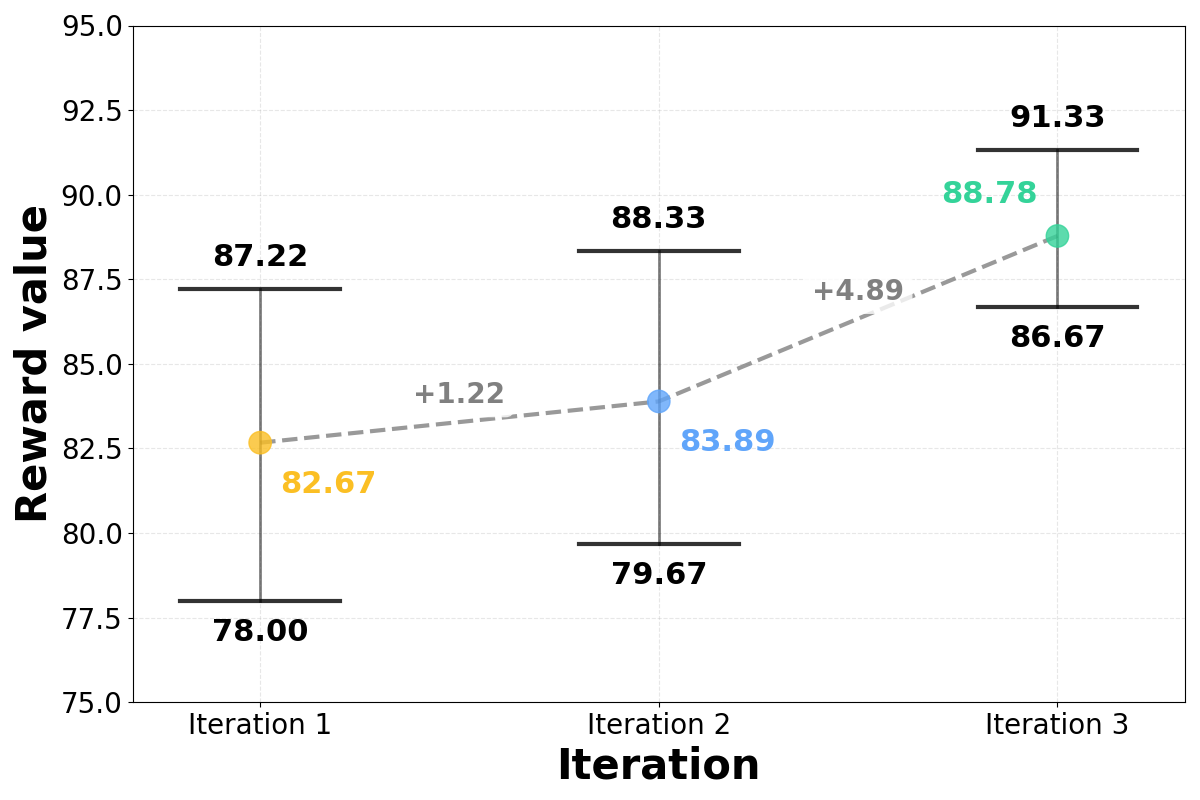

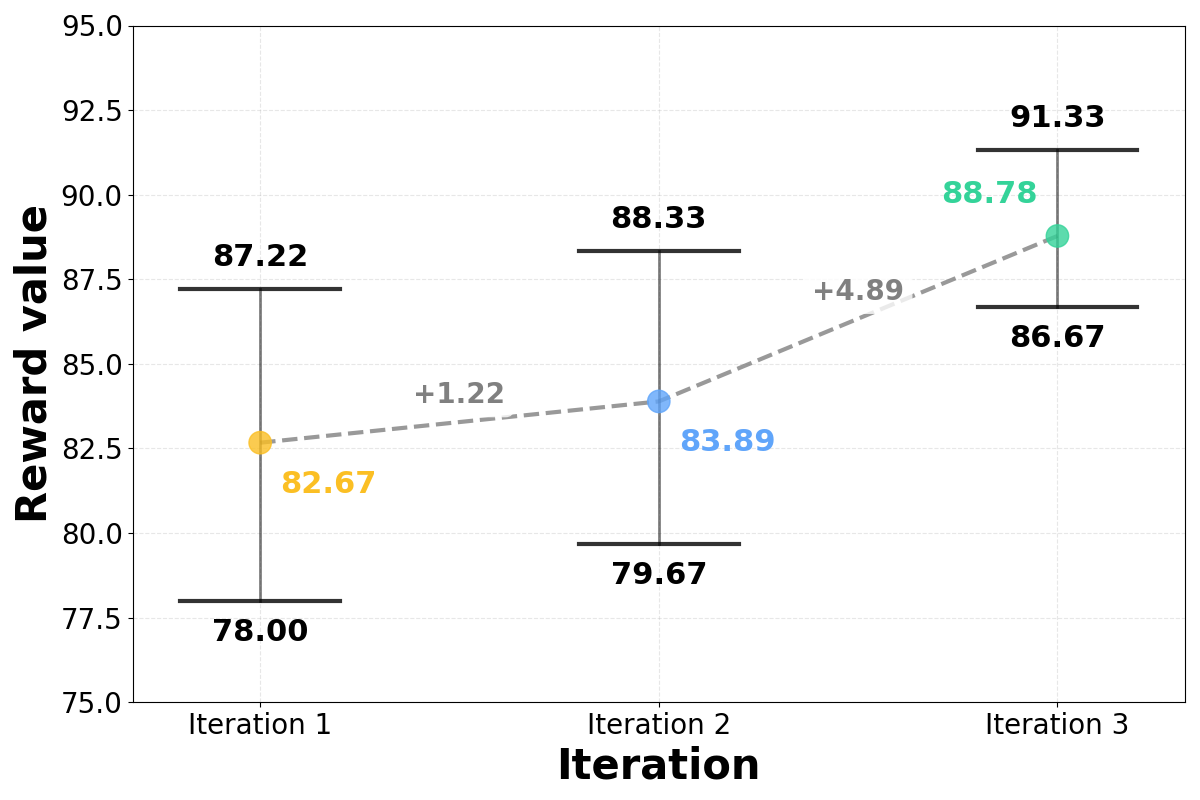

Iterative Refinement with Adaptive Reward Functions

UI generation is treated as an iterative optimization process. For each query, an LLM constructs a query-specific reward function comprising weighted evaluation metrics (e.g., visual structure, clarity, interactivity). Multiple UI candidates are generated and scored; the highest-scoring candidate is refined in subsequent iterations. The process continues until a threshold score (≥90) or a maximum number of iterations is reached.
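The loop described above can be sketched as follows. The threshold of 90 and the iteration cap come from the text; everything else is an assumption for illustration, including the weighted-average form of the reward, the metric weights, the toy string-based scorers, and the choice to keep the incumbent when no new candidate beats it. In the paper the reward function is constructed by an LLM per query, which this sketch replaces with a fixed dictionary.

```python
from typing import Callable

Scorer = Callable[[str], float]               # candidate -> score in [0, 100]
Generator = Callable[[str, int], list[str]]   # (seed candidate, n) -> candidates
Metrics = dict[str, tuple[float, Scorer]]     # metric name -> (weight, scorer)


def weighted_reward(candidate: str, metrics: Metrics) -> float:
    """Weighted average of per-metric scores (weights are normalised)."""
    total_weight = sum(weight for weight, _ in metrics.values())
    return sum(weight * score(candidate) for weight, score in metrics.values()) / total_weight


def refine(seed: str, generate: Generator, metrics: Metrics,
           n_candidates: int = 3, threshold: float = 90.0,
           max_iters: int = 5) -> tuple[str, float]:
    """Generate candidates, keep the best, and repeat until threshold or cap."""
    best, best_score = seed, weighted_reward(seed, metrics)
    for _ in range(max_iters):
        if best_score >= threshold:
            break
        scored = [(weighted_reward(c, metrics), c) for c in generate(best, n_candidates)]
        top_score, top = max(scored, key=lambda pair: pair[0])
        if top_score > best_score:  # assumption: never regress to a worse candidate
            best, best_score = top, top_score
    return best, best_score


# Toy stand-ins: candidates are strings, and scores count markup fragments.
toy_metrics: Metrics = {
    "visual structure": (0.5, lambda c: min(100.0, 25.0 * c.count("<section>"))),
    "interactivity": (0.5, lambda c: min(100.0, 50.0 * c.count("<button>"))),
}


def toy_generate(seed: str, n: int) -> list[str]:
    """Propose up to n variants by appending fragments to the seed."""
    variants = [seed + "<section>", seed + "<button>", seed + "<section><button>"]
    return variants[:n]


best, score = refine("", toy_generate, toy_metrics)
```

With real models, `generate` would prompt the LLM to revise the current best interface and each scorer would be an LLM- or rule-based judge for one metric.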

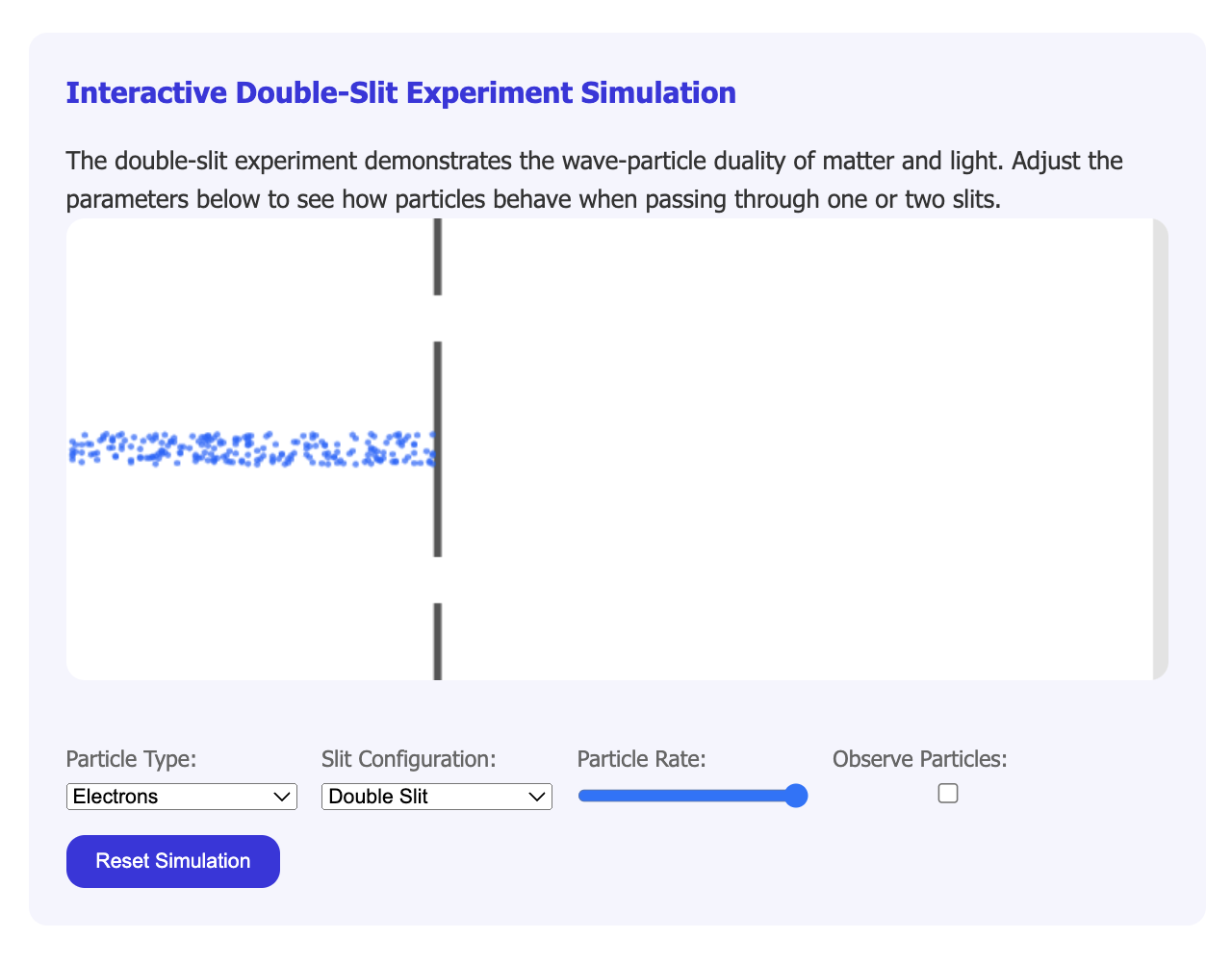

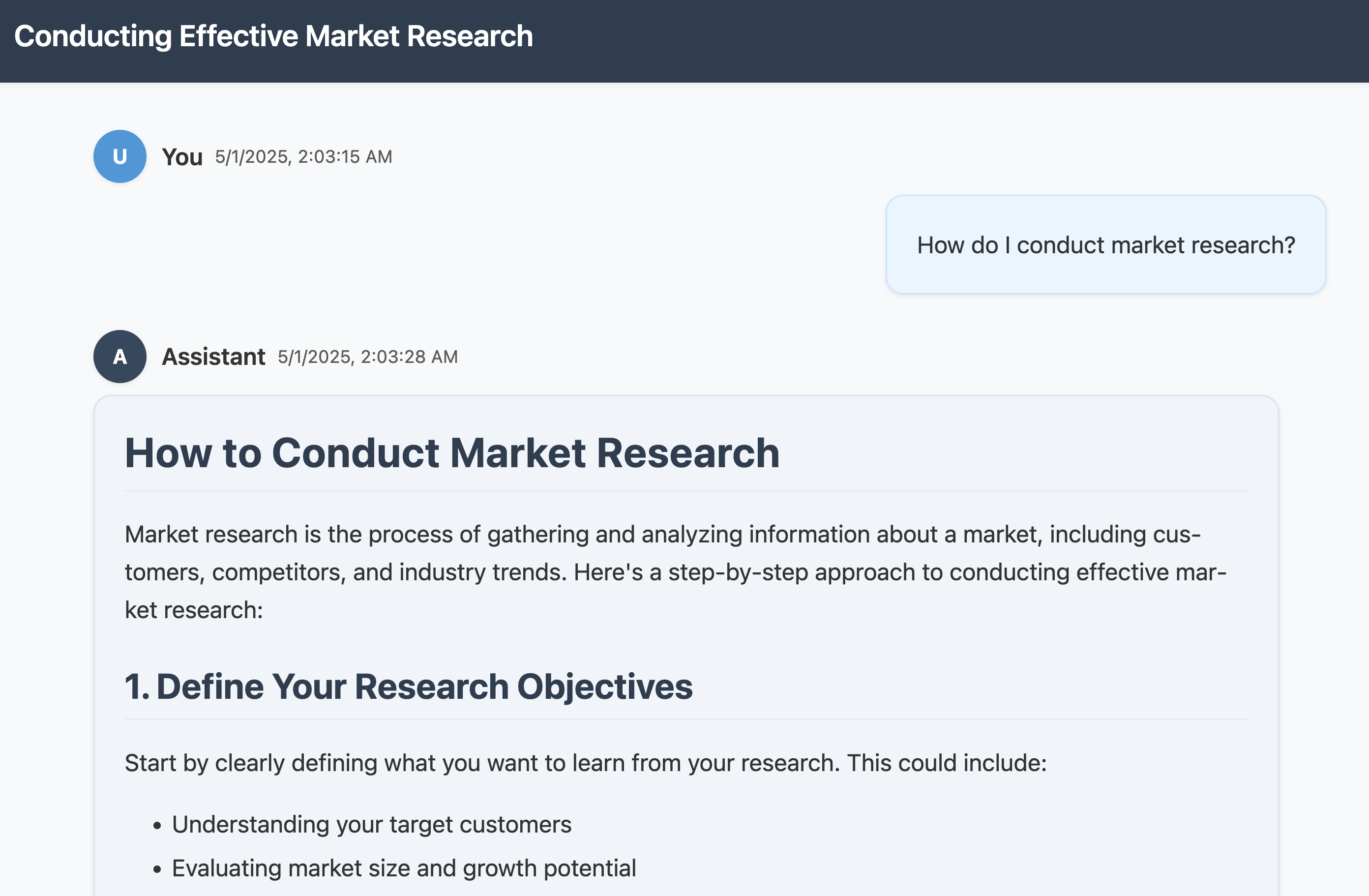

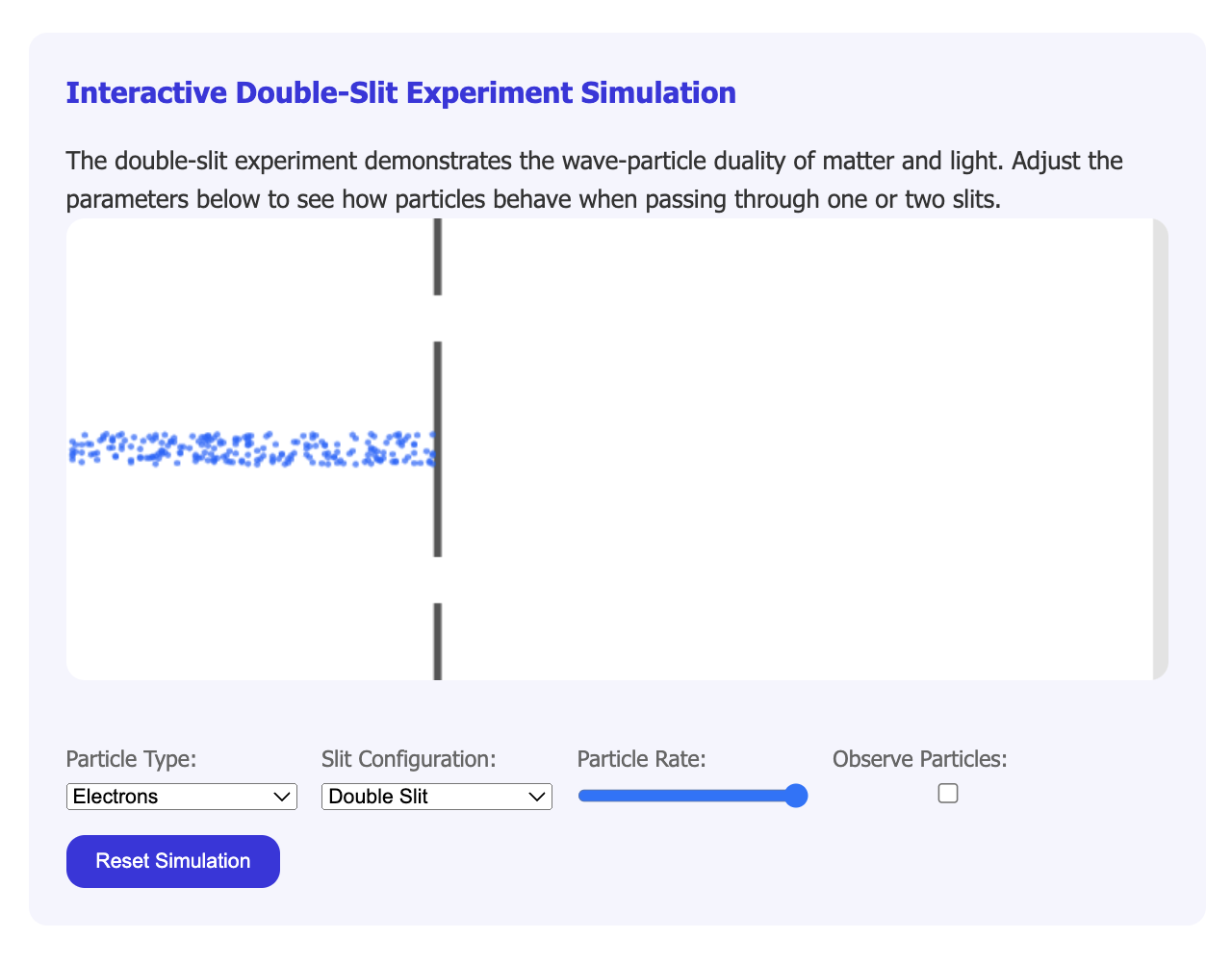

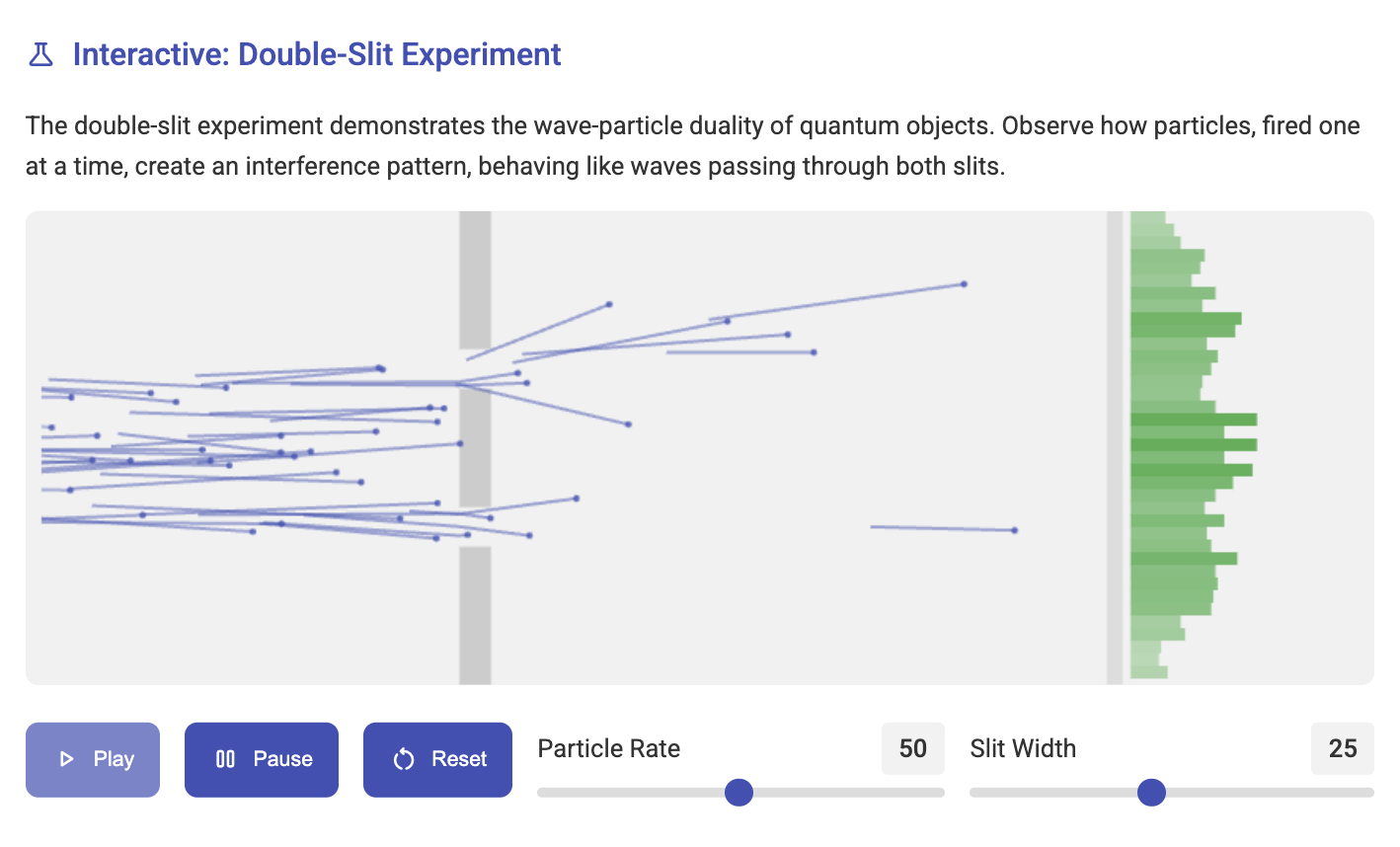

Figure 3: Static reward settings fail to visualize complex phenomena, highlighting the need for adaptive, intent-aware evaluation.

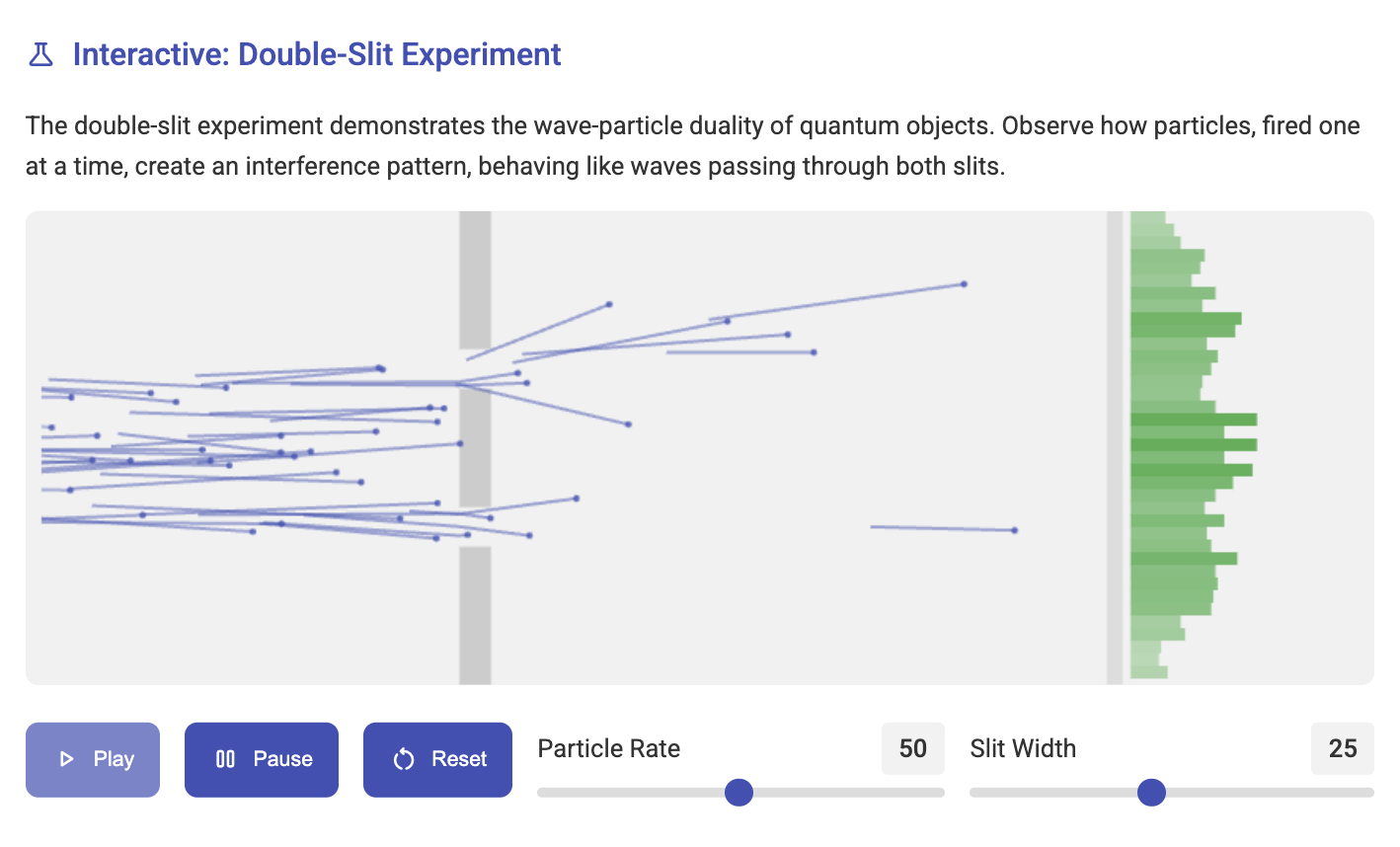

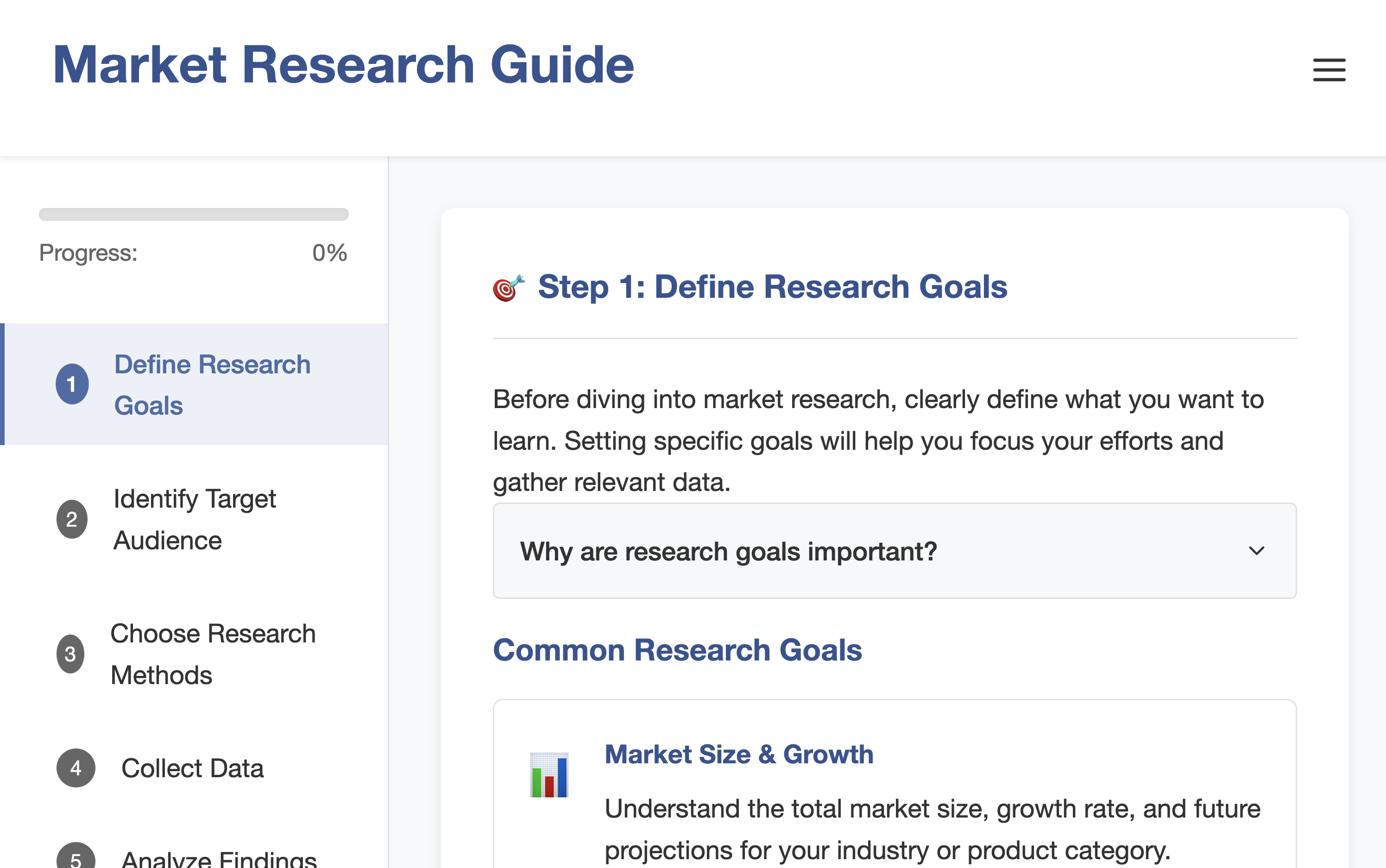

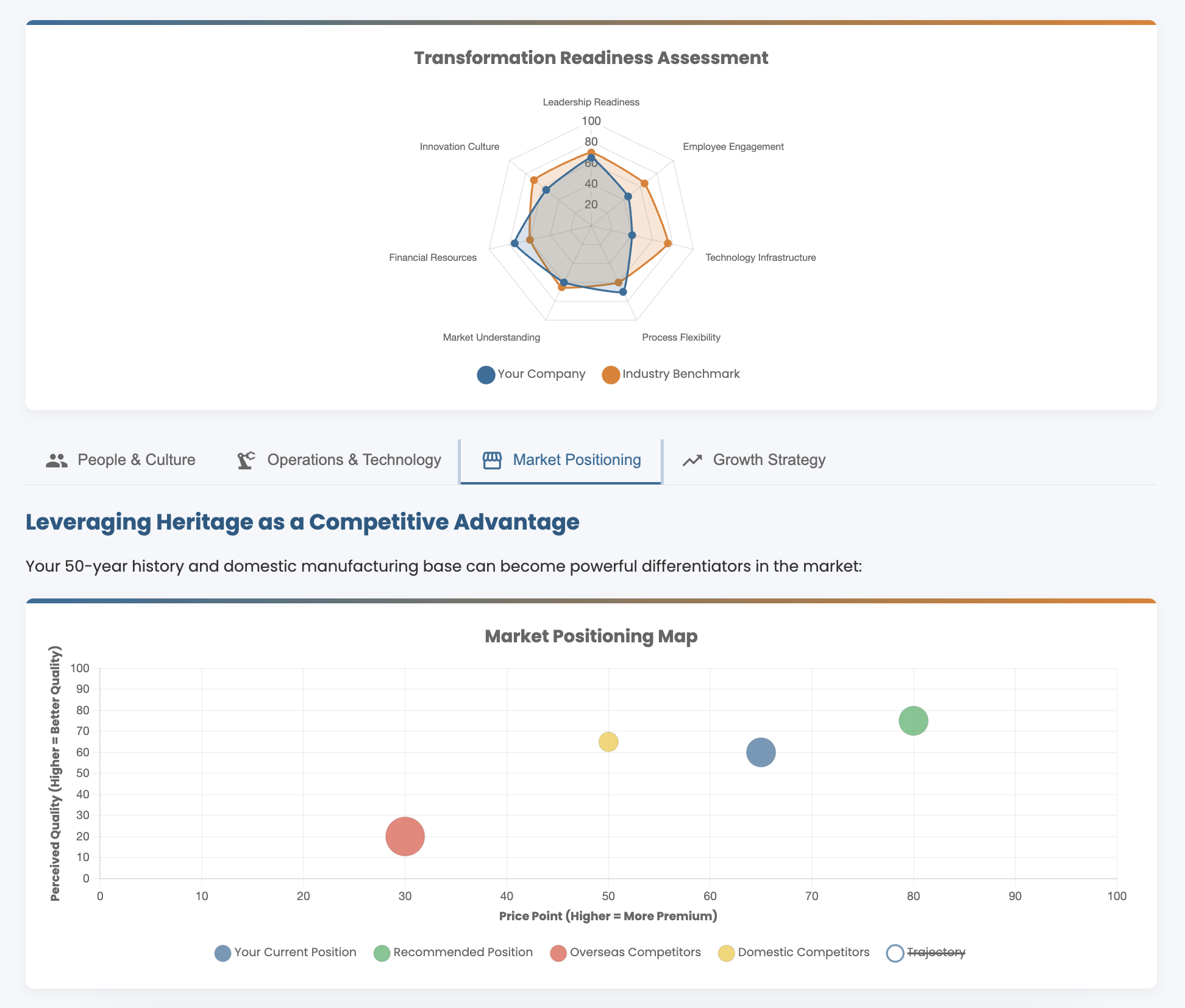

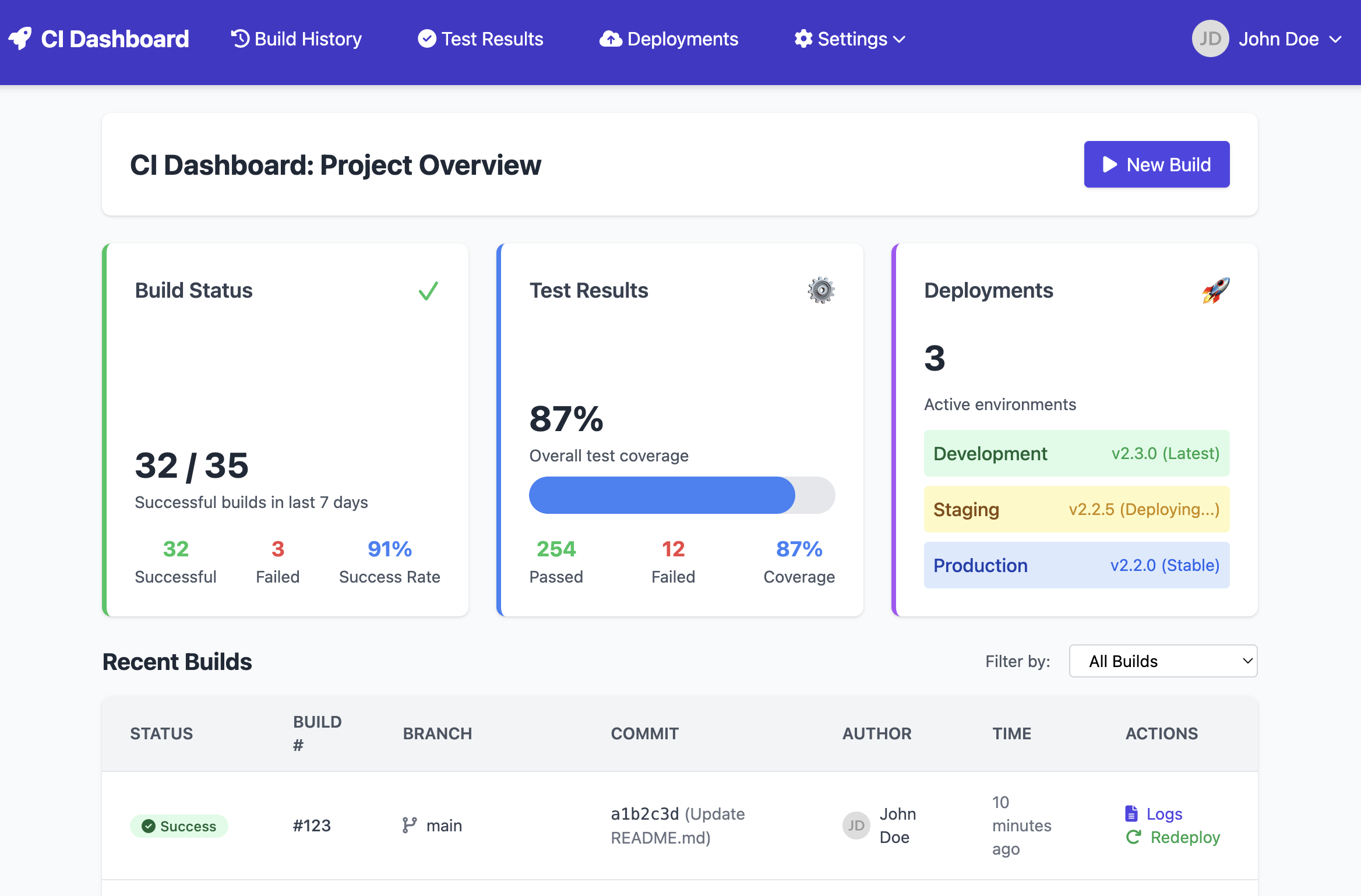

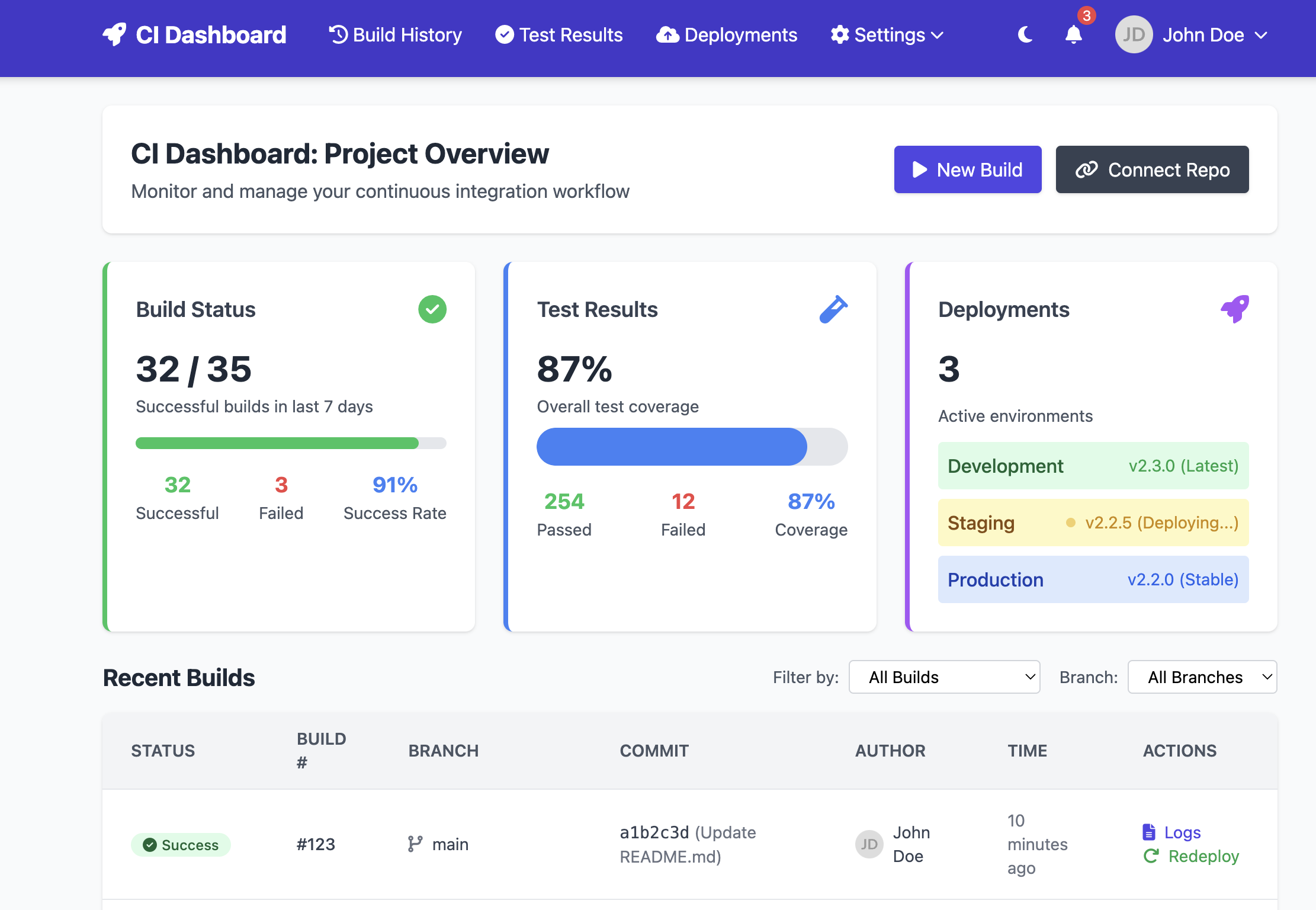

Figure 4: GenUI presents multiple charts and visual summaries, demonstrating the system's ability to synthesize rich, interactive interfaces.

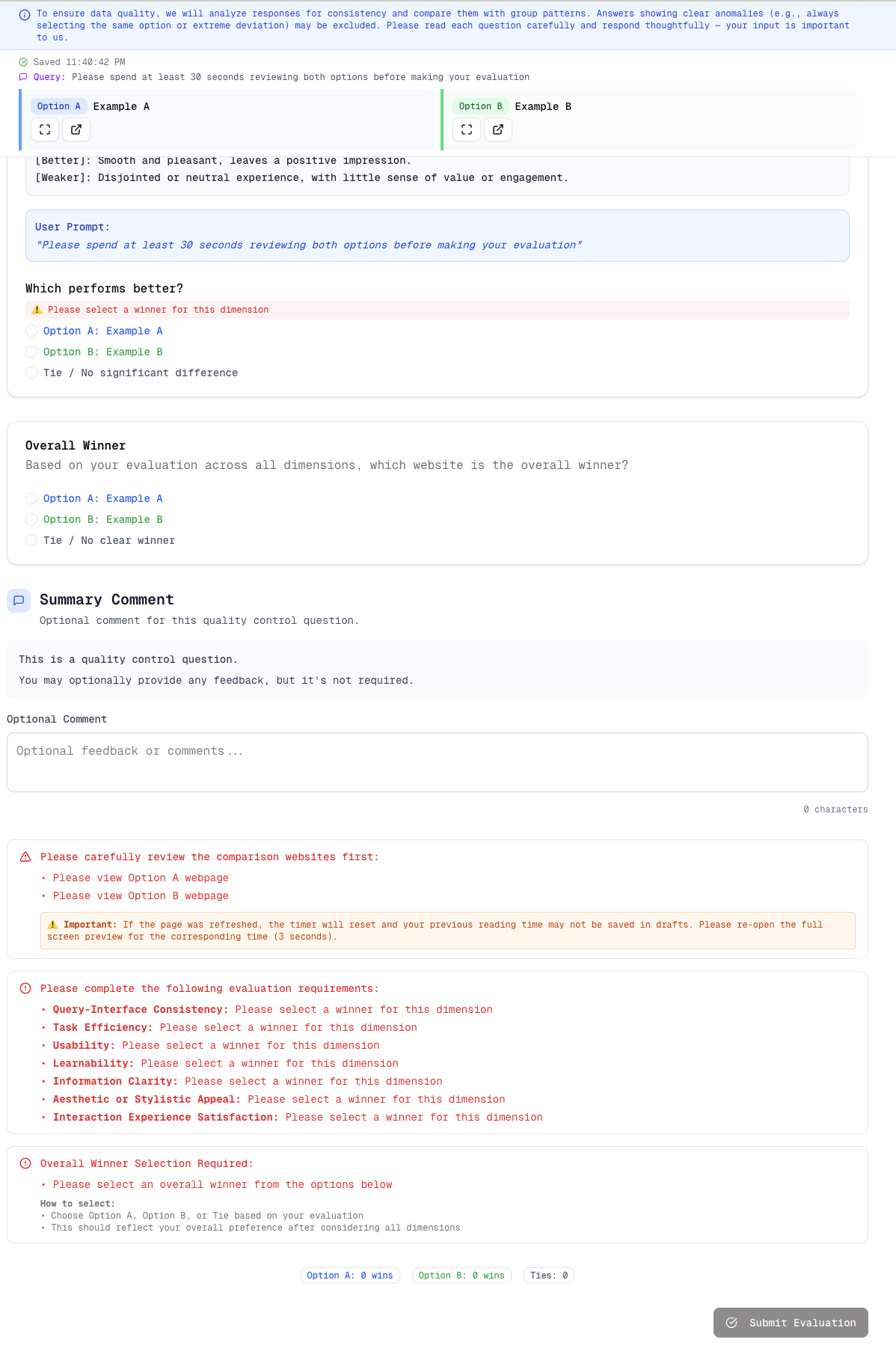

Evaluation Methodology

Multidimensional Assessment

The evaluation framework comprises:

Figure 6: Breakdown of query detail level and type, illustrating GenUI's advantage in interactive and detailed queries.

Results

Ablation and Analysis

Ablation studies isolate the contributions of structured representation, iterative refinement, and adaptive reward functions:

- Structured vs. Natural Language Representation: Structured representations improve overall win rate from 13% to 17%.

- One-shot vs. Iterative Generation: Iterative refinement yields a +14% improvement in human preference.

- Static vs. Adaptive Reward: Adaptive rewards increase win rate by 17% over static heuristics.

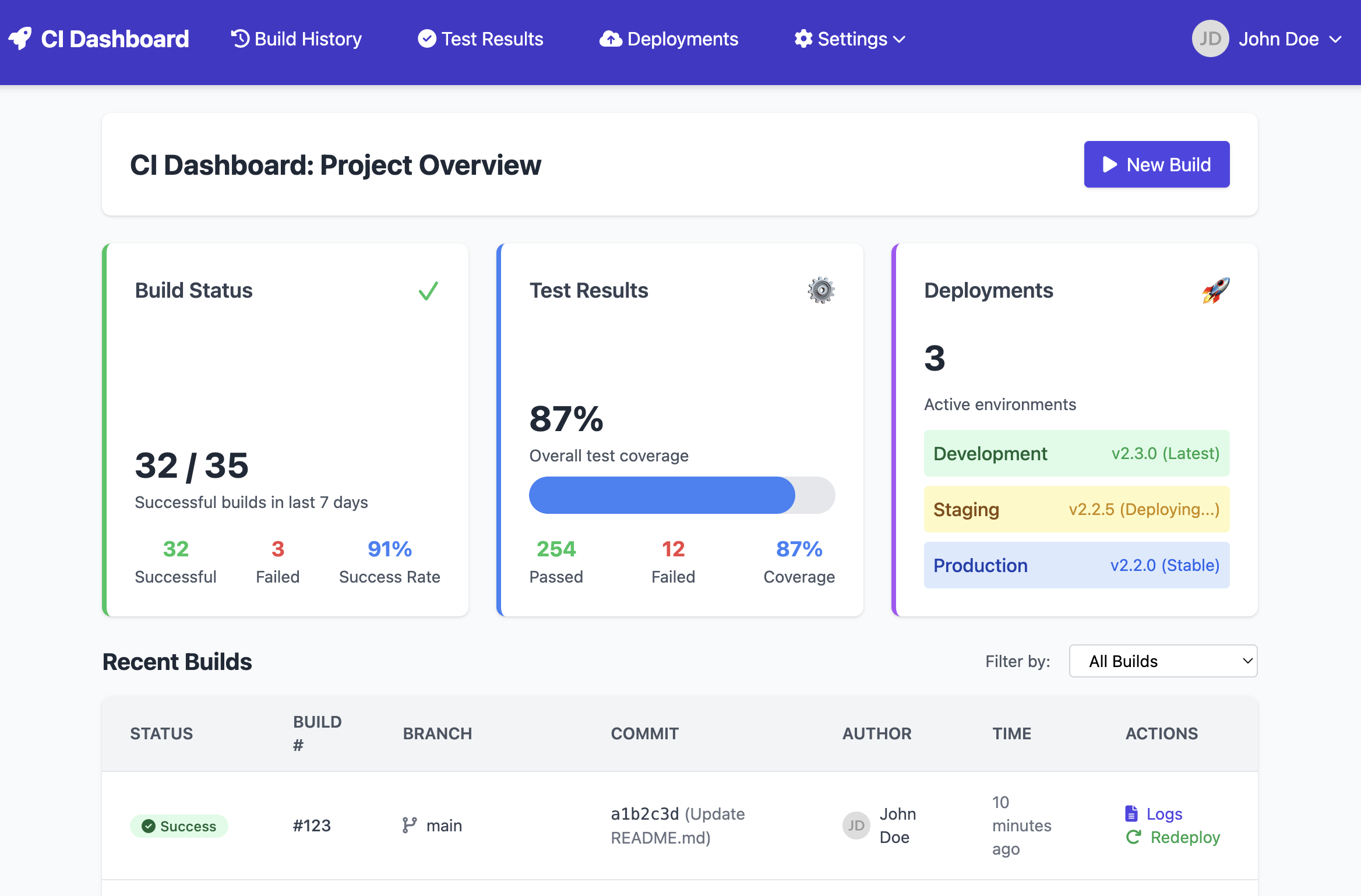

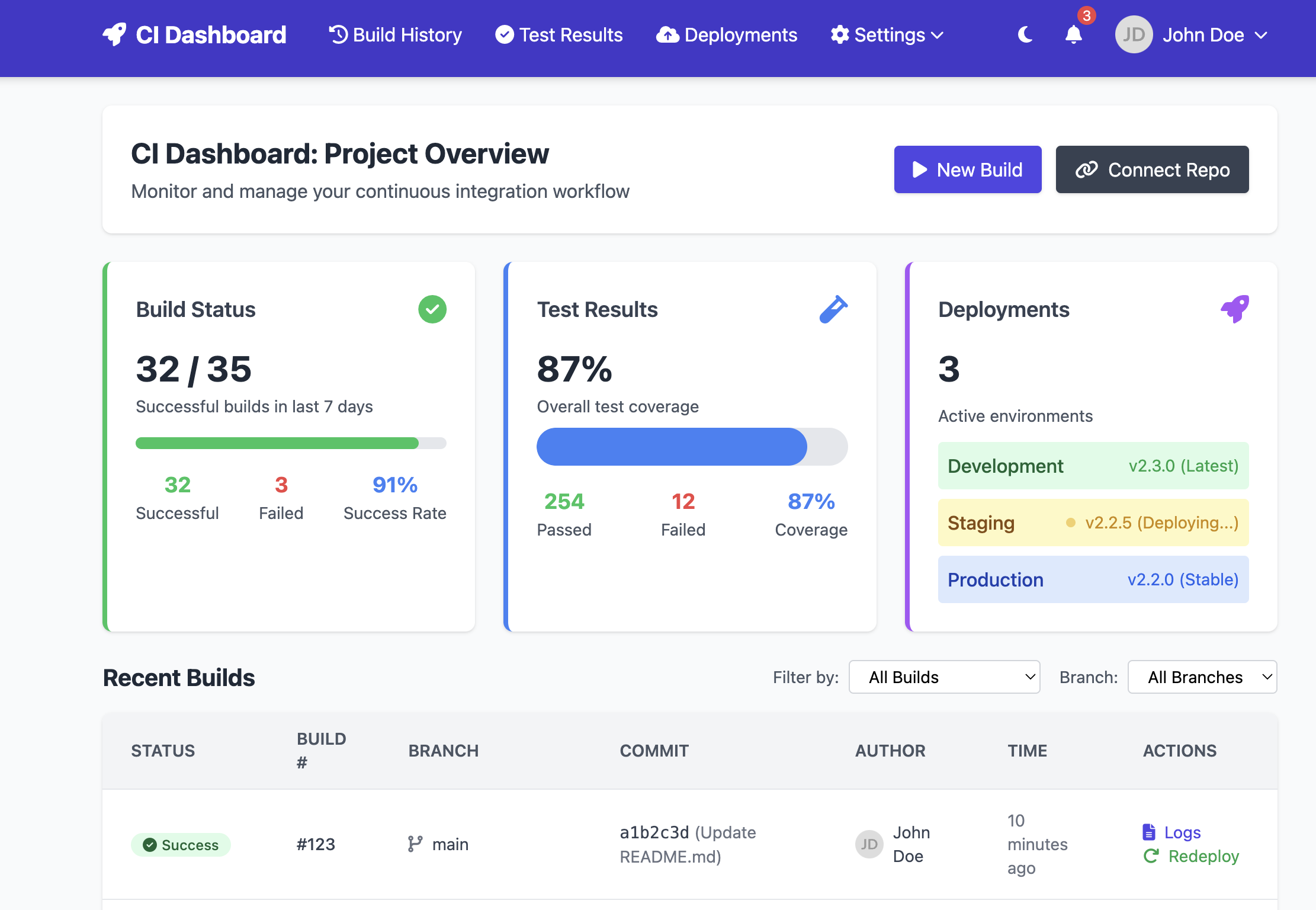

Figure 8: Iteration 1—basic CI dashboard with limited interaction.

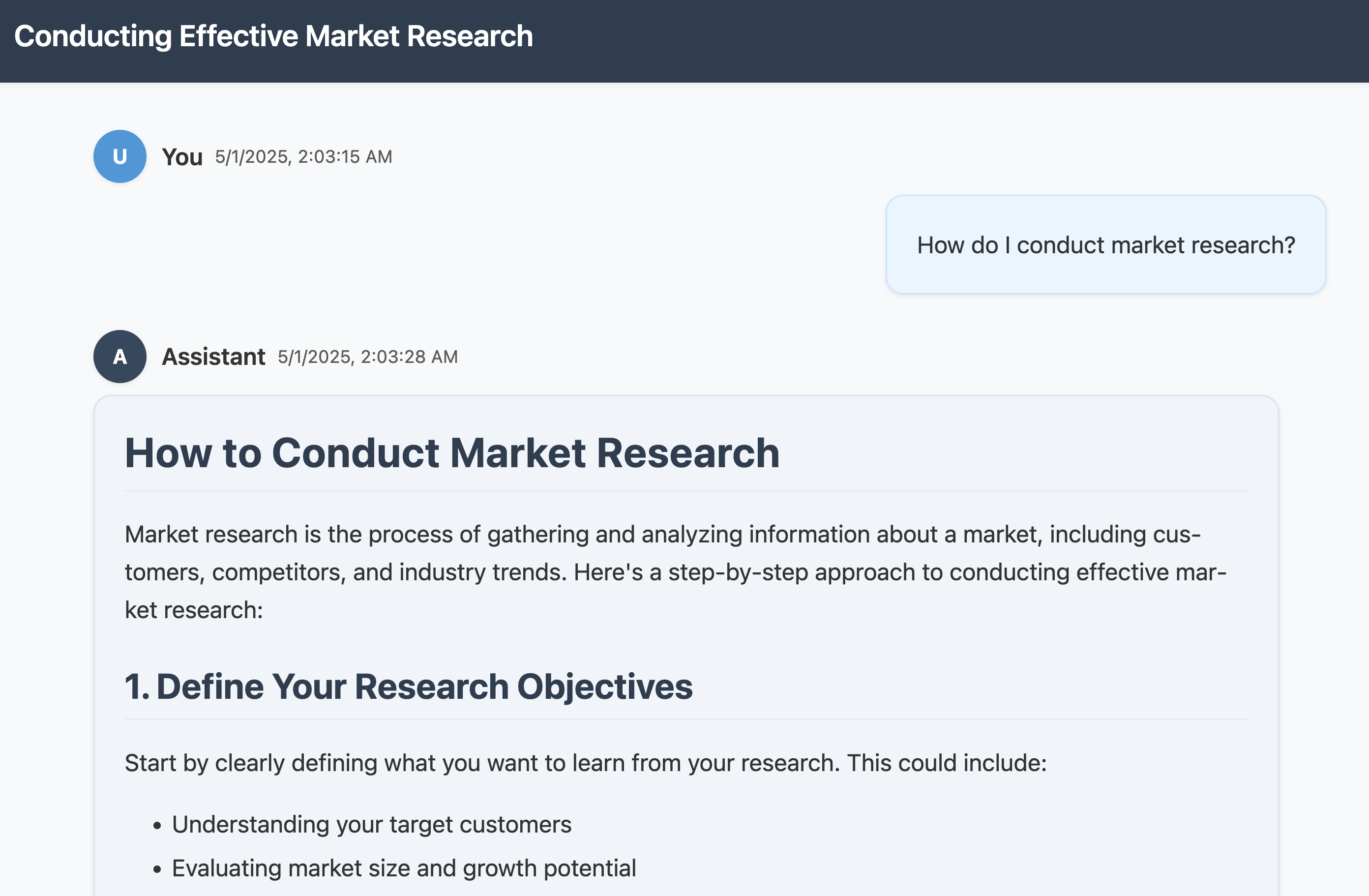

Figure 9: ConvUI—linear text presentation lacking visual hierarchy.

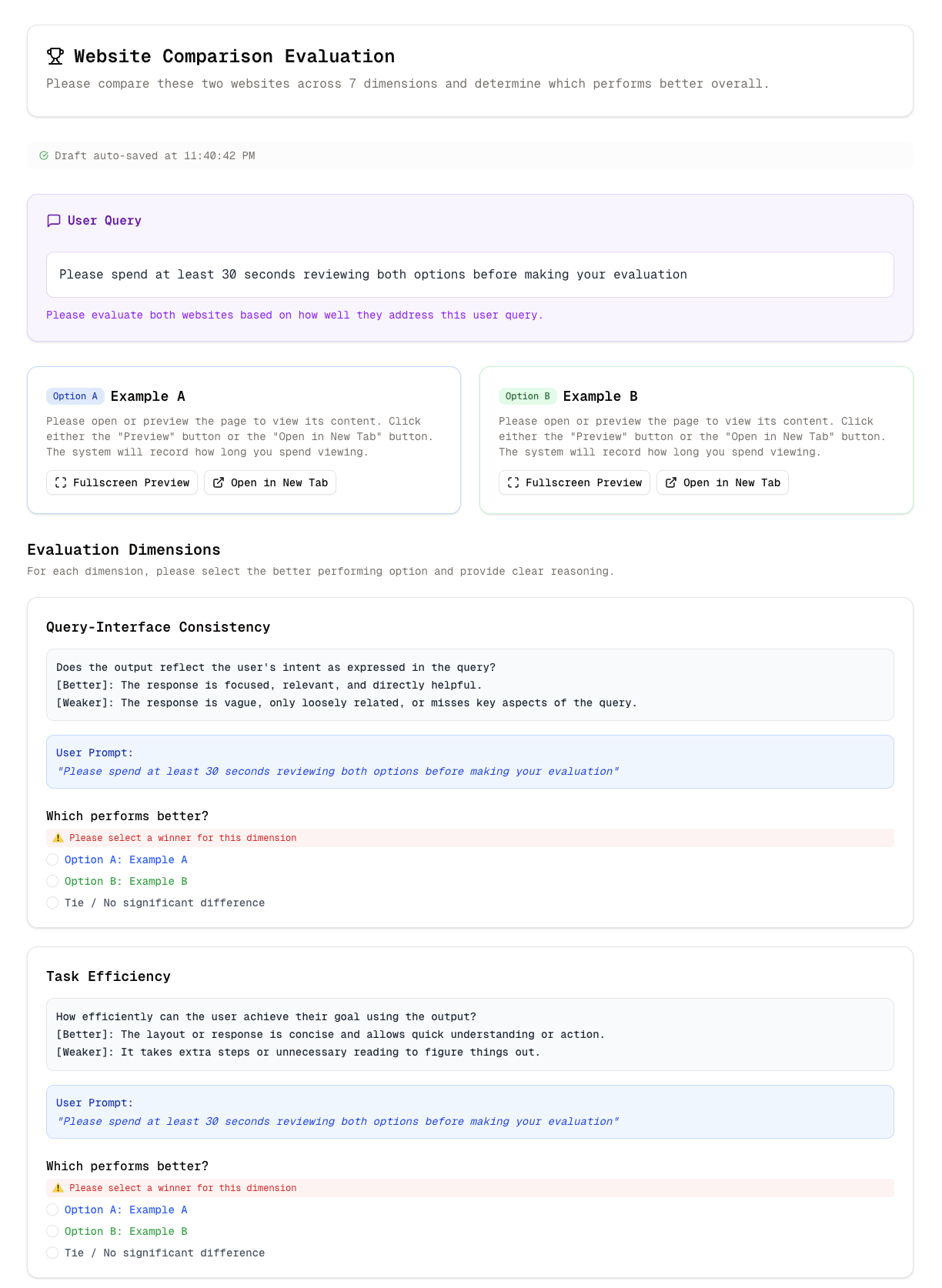

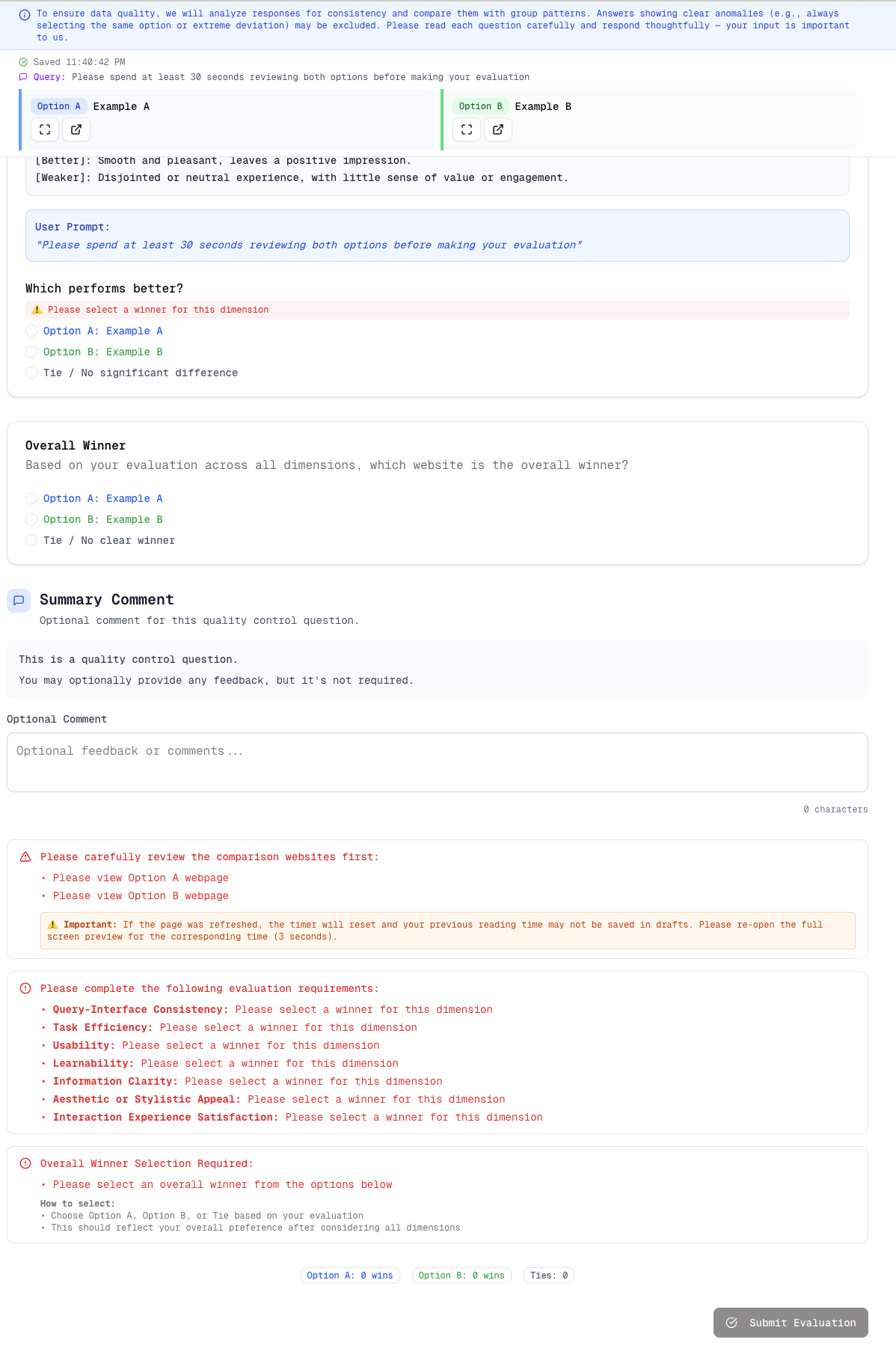

Figure 10: Human Evaluation Questionnaire Interface (a), used for systematic annotation.

Human Preference Analysis

Qualitative analysis of annotator comments reveals:

- Cognitive Offloading: Of the annotators who mentioned cognitive load, 78.5% preferred GenUI, especially in complex, concept-heavy scenarios.

- Visual Structure and Trust: 86.5% preferred GenUI for perceived credibility and professionalism, attributed to modular layouts and clear hierarchies.

Figure 11: Human Evaluation Questionnaire Interface (b), supporting detailed feedback collection.

Figure 12: Human Evaluation Questionnaire Interface (c), enabling multidimensional assessment.

Implementation Considerations

- Backend Limitations: The current system supports only HTML/JS frontends, restricting backend logic and complex workflows.

- Latency: Iterative refinement introduces latency (up to several minutes), which may be prohibitive for real-time applications.

- Interaction Classifier: The system generates interfaces for all queries, even when unnecessary; a classifier for interaction necessity is suggested for future work.

- Evaluation Scope: Benchmarks are controlled; open-ended, real-world studies are needed for broader validation.

Implications and Future Directions

The generative interface paradigm shows its clearest advantages in domains and tasks characterized by information density, complexity, and the need for interaction. The structured, iterative approach enables LLMs to synthesize UIs that reduce cognitive load, enhance usability, and improve user satisfaction. Theoretical implications include the formalization of query-to-interface mapping and the integration of FSMs for UI logic. Practically, this work points toward LLM-powered adaptive systems in education, business analytics, and software engineering.

Future research should address backend integration, latency reduction, multimodal input, domain-specific templates, and collaborative multi-user environments. The development of classifiers for interaction necessity and open-ended user studies will further refine deployment strategies and real-world applicability.

Conclusion

Generative Interfaces for LLMs represent a significant advancement in human-AI interaction, enabling LLMs to move beyond static text responses to synthesize adaptive, interactive UIs tailored to user goals. The framework’s structured representations, iterative refinement, and adaptive evaluation yield superior performance across functional, interactive, and emotional dimensions, particularly in complex and information-rich domains. While limitations remain in backend support and latency, the paradigm offers a robust foundation for future research and deployment in adaptive, user-centered AI systems.