- The paper introduces GENESIS, which integrates semantic and episodic memory via dual VAEs and a retrieval-augmented generation mechanism.

- It demonstrates capacity-controlled reconstruction and generalization, quantifying memory fidelity through rate-distortion analysis and diversity metrics.

- The model predicts gist-based distortions and creative recombination in memory, tying both phenomena to capacity constraints on encoding.

GENESIS: A Generative Model of Episodic–Semantic Interaction

Introduction and Theoretical Context

The GENESIS model addresses a central problem in cognitive neuroscience: the computational integration of semantic and episodic memory systems. Traditional frameworks, such as the Complementary Learning Systems (CLS) theory, posit a division of labor between rapid hippocampal encoding (episodic) and gradual cortical learning (semantic). However, such frameworks often treat the two systems as independent and fail to account for empirical phenomena such as semantic intrusions, gist-based distortions, and the constructive recombination of episodic content. GENESIS proposes a unified, generative architecture that formalizes memory as the interaction between two limited-capacity VAEs (a Cortical-VAE for semantic learning and a Hippocampal-VAE for episodic encoding) embedded within a retrieval-augmented generation (RAG) framework.

Model Architecture

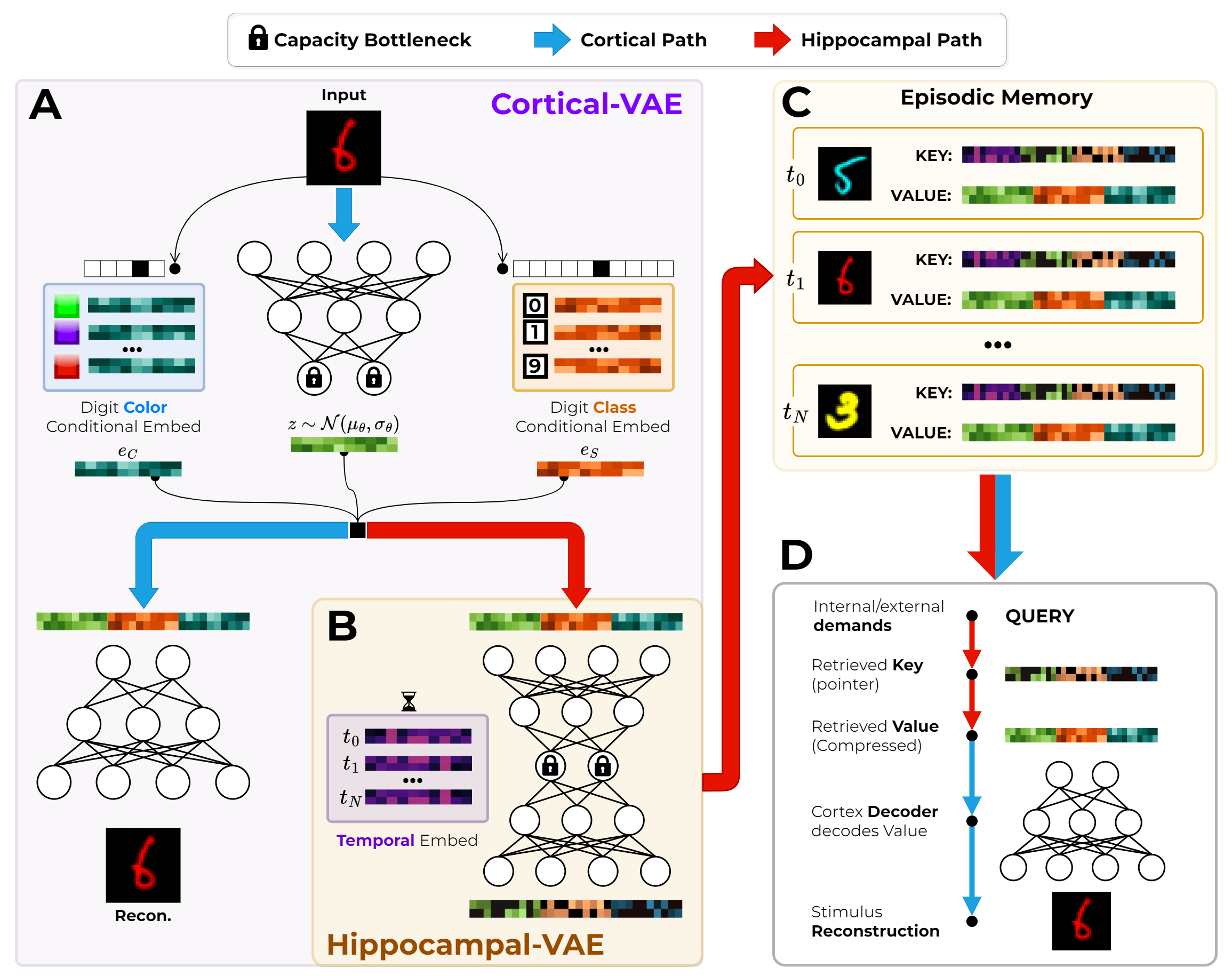

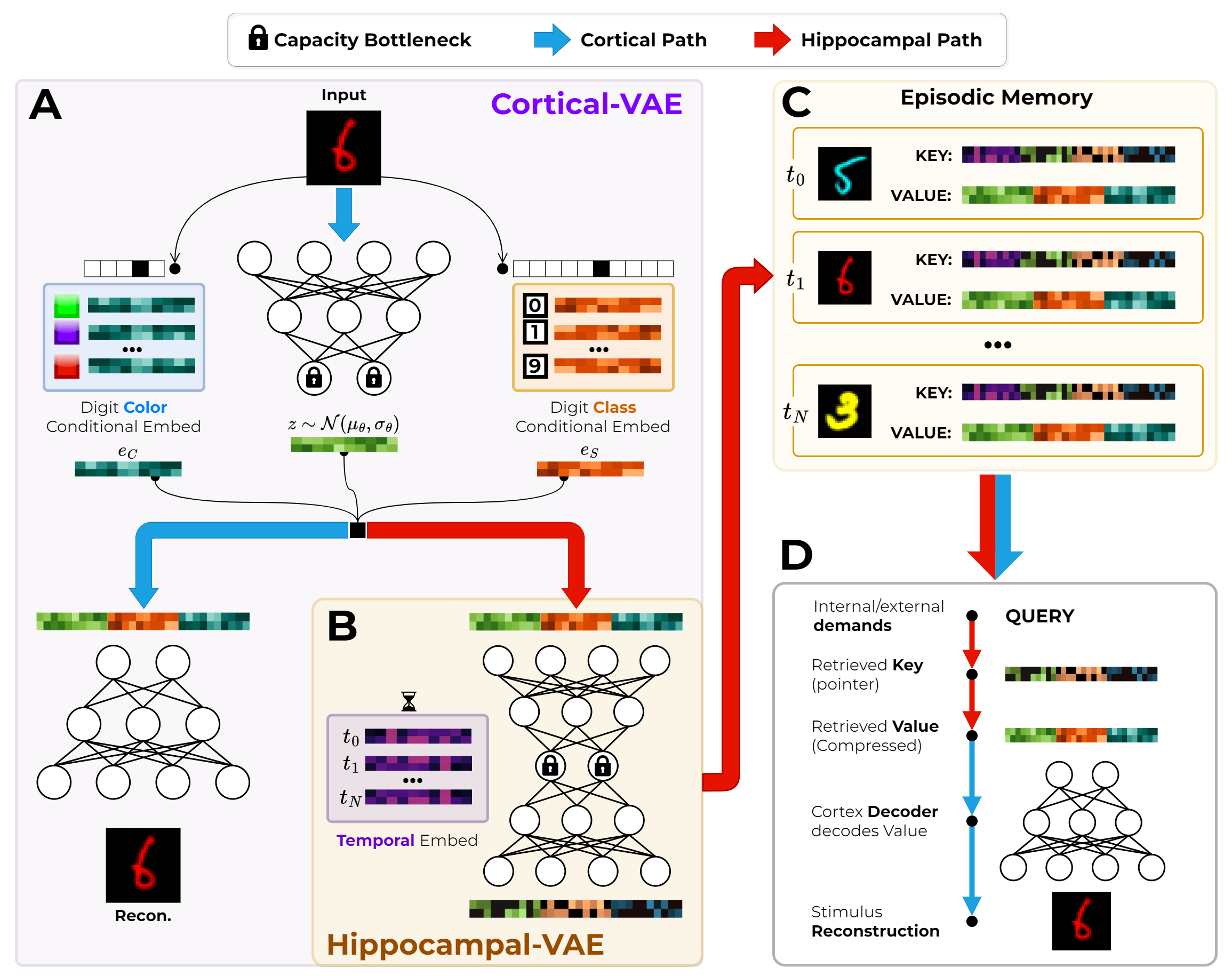

GENESIS consists of three principal components:

- Cortical-VAE: A conditional β-VAE trained on colored MNIST digits, producing a latent embedding [z, eS, eC] that concatenates a Gaussian latent z, a digit embedding eS, and a color embedding eC. This module models semantic memory, supporting statistical learning and generalization.

- Hippocampal-VAE: A capacity-limited β-VAE that compresses the Cortical-VAE embeddings, optionally incorporating temporal embeddings to form episode-specific keys. This models episodic memory, supporting rapid encoding and retrieval.

- Episodic Memory (RAG): A retrieval-augmented memory storing key-value pairs, where keys are compressed item+temporal embeddings and values are Cortical-VAE item embeddings. Retrieval is performed via query-key similarity, and reconstruction is achieved by decoding retrieved values through the Cortical-VAE.

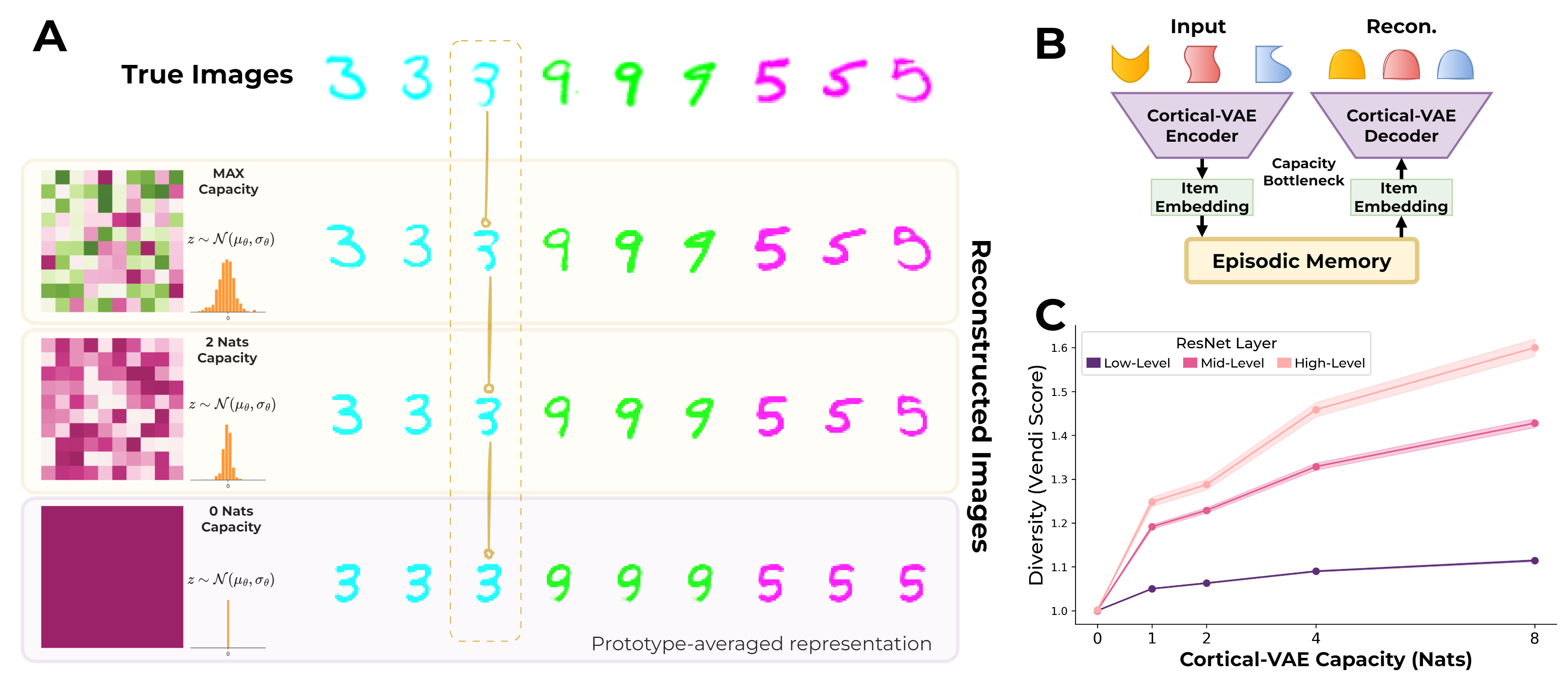

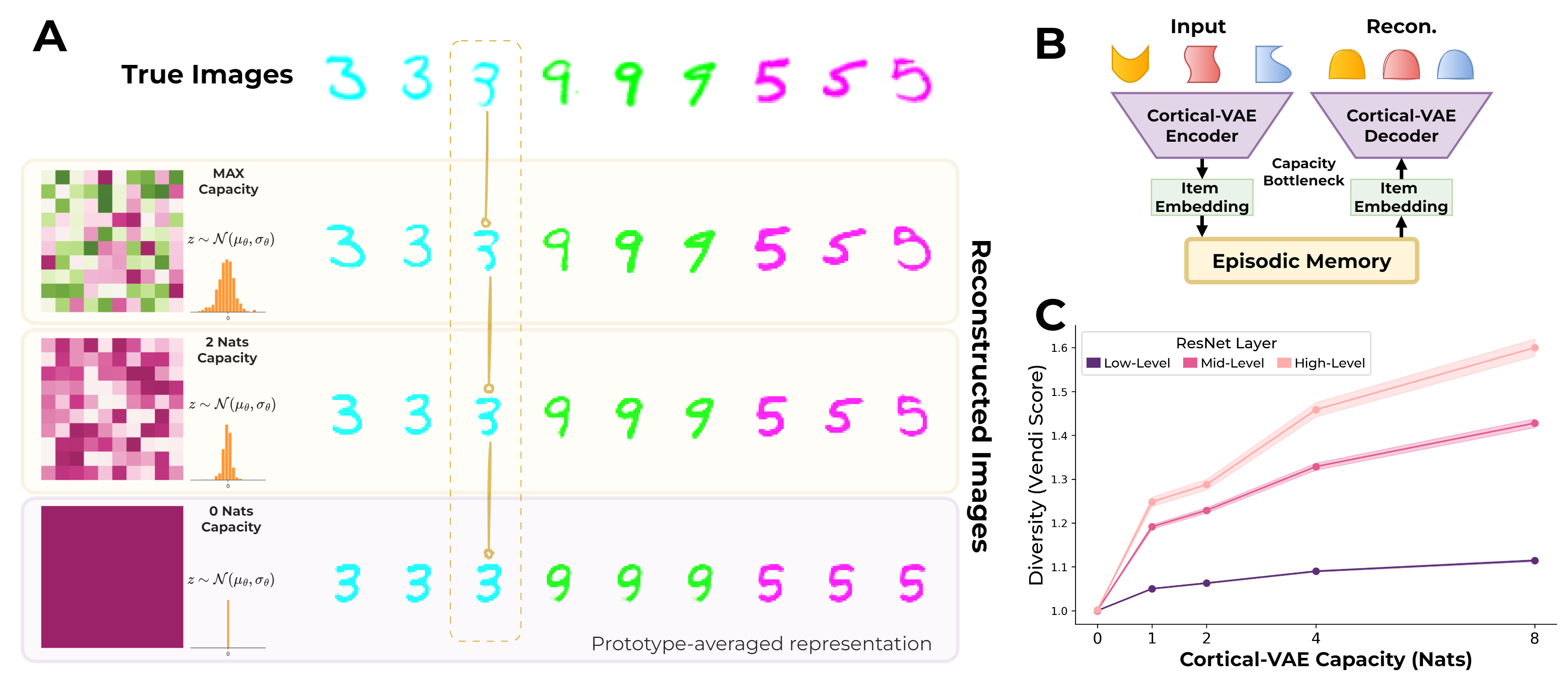

Figure 1: GENESIS architecture: Cortical-VAE encodes semantic representations, Hippocampal-VAE compresses for episodic storage, and RAG stores key-value pairs for retrieval and reconstruction.

This architecture enforces a bidirectional dependency: episodic encoding and recall are mediated by semantic representations, and semantic processing is shaped by episodic replay and recombination.
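The key-value storage and similarity-based retrieval described above can be sketched in a few lines. This is a minimal illustration rather than the authors' implementation: the class name `EpisodicStore`, the use of cosine similarity, and the toy vectors are all assumptions, and the real keys and values would come from the Hippocampal-VAE and Cortical-VAE encoders respectively.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class EpisodicStore:
    """Toy key-value memory (hypothetical name): keys index episodes,
    values hold the item embeddings that would later be decoded."""

    def __init__(self):
        self.keys = []
        self.values = []

    def write(self, key, value):
        self.keys.append(list(key))
        self.values.append(list(value))

    def read(self, query):
        """Return (index, value) of the stored key most similar to the query."""
        if not self.keys:
            raise LookupError("memory is empty")
        sims = [cosine(query, k) for k in self.keys]
        best = max(range(len(sims)), key=sims.__getitem__)
        return best, self.values[best]


store = EpisodicStore()
store.write(key=[1.0, 0.0], value=[0.2, 0.9, 0.1])  # episode 0
store.write(key=[0.0, 1.0], value=[0.7, 0.1, 0.4])  # episode 1
index, value = store.read(query=[0.1, 0.9])         # noisy cue for episode 1
print(index, value)
```

In the full model, the retrieved value would then be passed through the Cortical-VAE decoder to produce the reconstruction; that step is omitted here.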

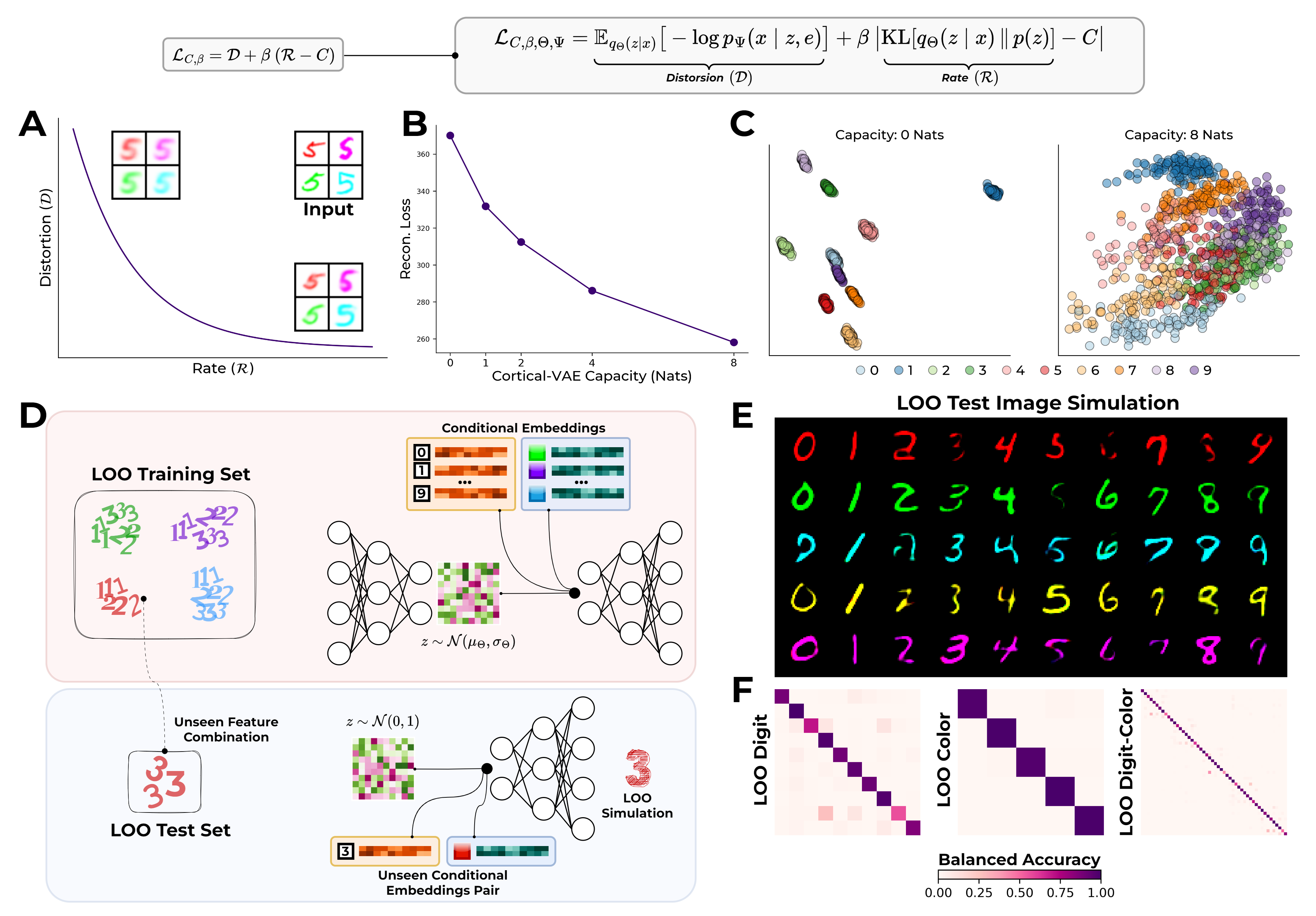

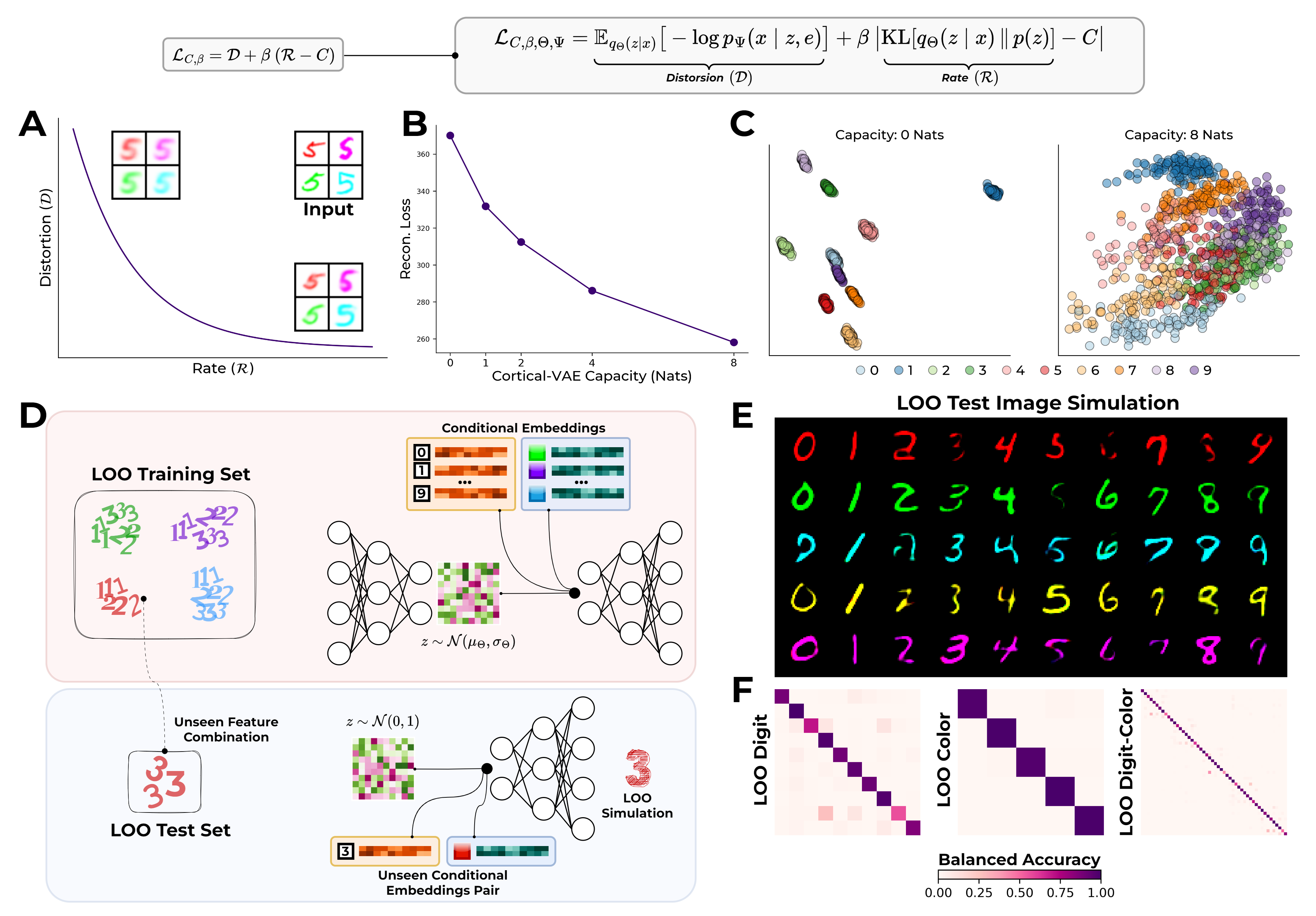

Semantic Memory: Statistical Learning, Capacity, and Generalization

The Cortical-VAE demonstrates robust statistical learning and compositional generalization. Under high capacity, it accurately reconstructs colored MNIST digits and generalizes to novel digit-color pairs via a Leave-One-(Pair)-Out (LOO) protocol. The model's performance degrades gracefully with reduced capacity, following a rate-distortion curve as predicted by information theory. Notably, low-capacity regimes induce a collapse of latent representations toward category prototypes, reducing within-category variability and increasing self-similarity.

Figure 2: Semantic learning and generalization: (A) Rate-distortion curve; (B) Reconstruction accuracy vs. capacity; (C) Latent geometry under low/high capacity; (D-F) LOO generalization and classification accuracy for novel digit-color pairs.

This behavior aligns with empirical findings on semantic memory: generalization is supported by compositional latent structure, and resource constraints induce prototype effects.
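For readers unfamiliar with the rate-distortion reading of a β-VAE, the standard objective is reproduced below. The summary does not state the exact loss or how capacity is controlled in GENESIS, so this is the generic, unconditional form, and treating β as the capacity knob is an assumption.

$$
\mathcal{L}_{\beta}(x) \;=\; \underbrace{\mathbb{E}_{q(z \mid x)}\big[-\log p(x \mid z)\big]}_{\text{distortion } D} \;+\; \beta \, \underbrace{D_{\mathrm{KL}}\big(q(z \mid x) \,\|\, p(z)\big)}_{\text{rate } R}
$$

Here $q(z \mid x)$ is the encoder, $p(x \mid z)$ the decoder, and $p(z)$ the prior. Increasing $\beta$ penalizes the rate term more heavily, which lowers the information carried by the latent code and raises reconstruction error; sweeping $\beta$ traces out a rate-distortion curve of the kind shown in Figure 2A.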

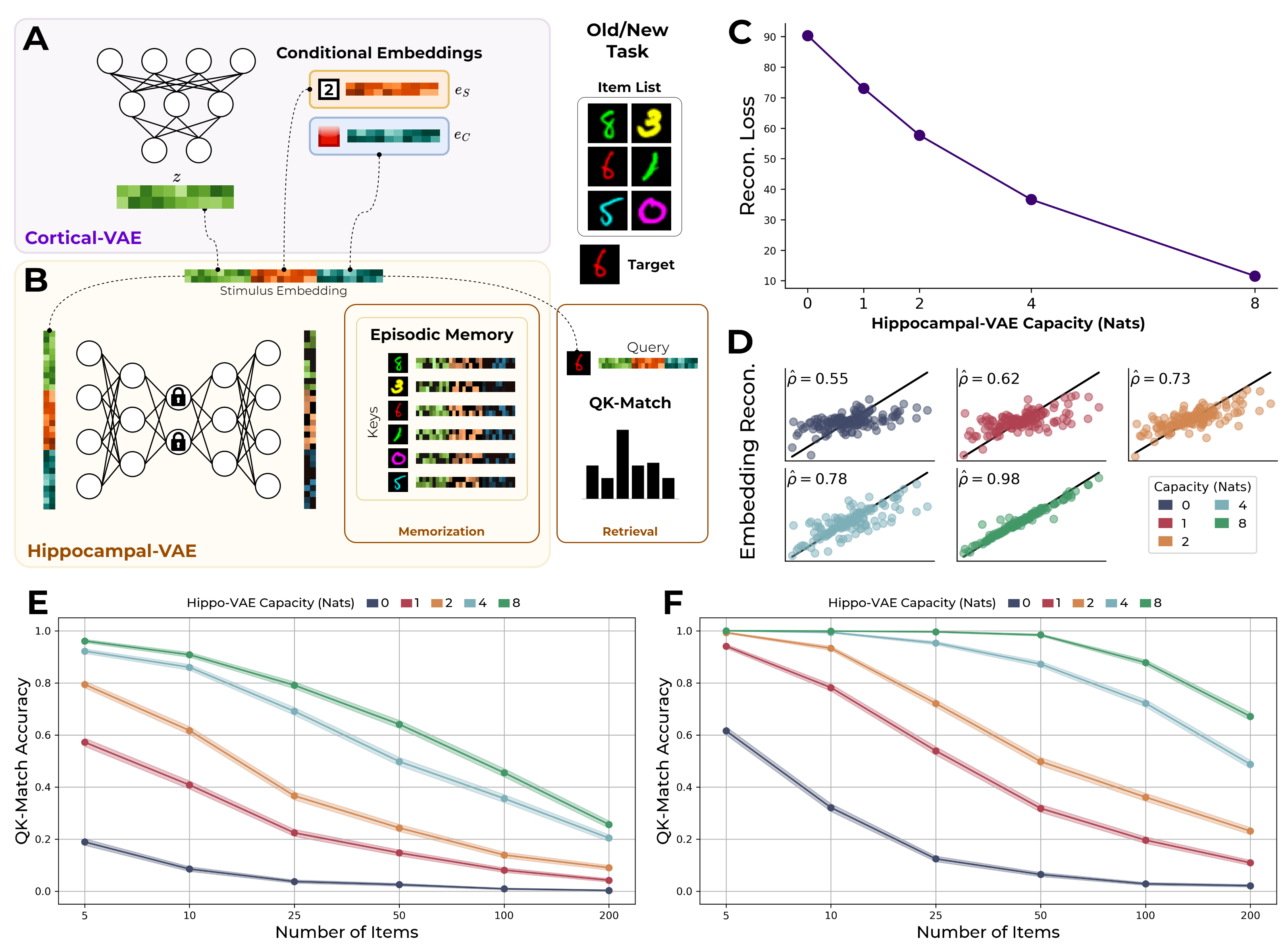

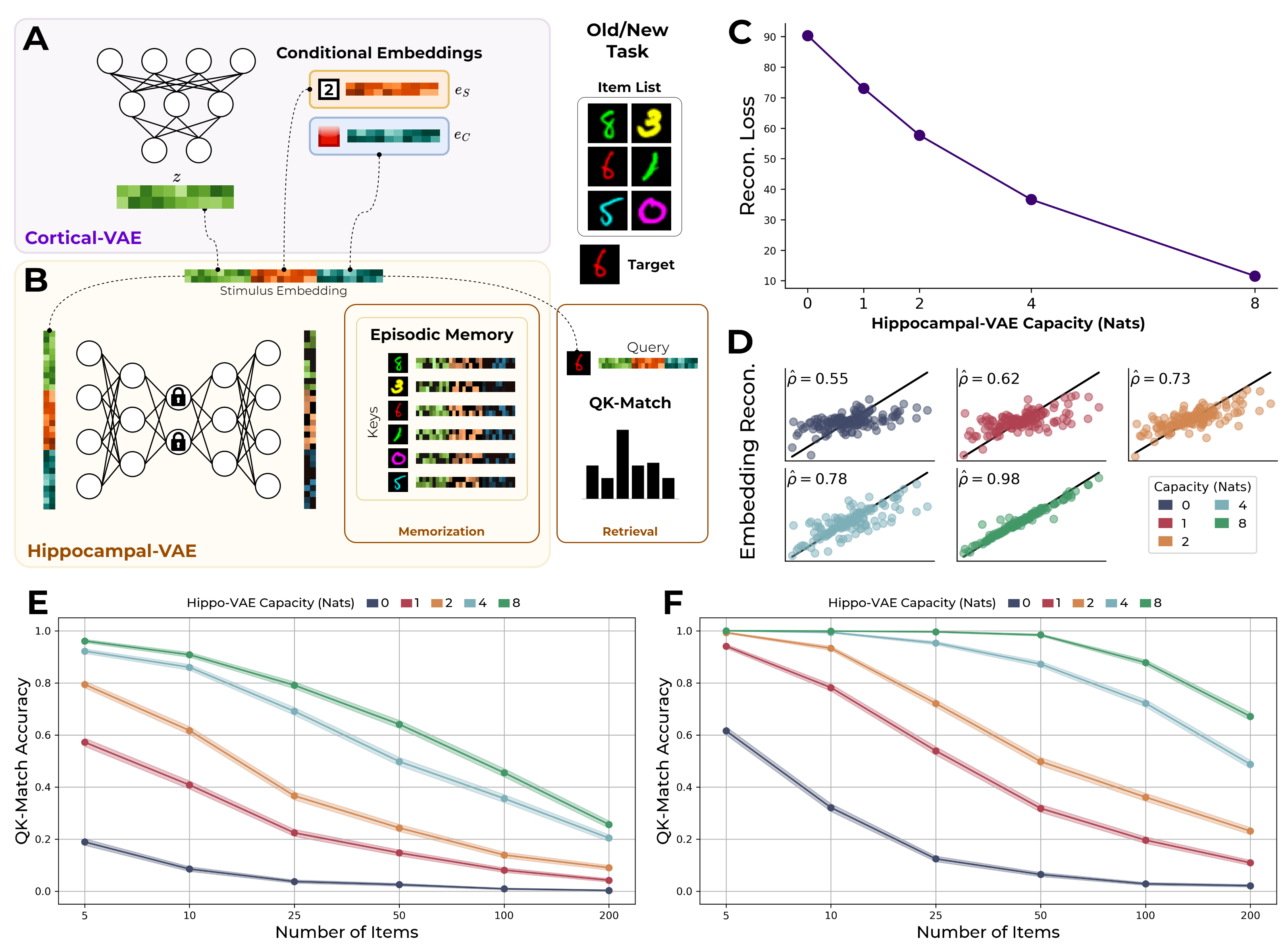

Episodic Memory: Recognition and Serial Recall

The Hippocampal-VAE, in conjunction with the RAG, supports recognition and serial recall tasks. Recognition is implemented as a query-key matching process, with accuracy modulated by both the capacity of the Hippocampal-VAE and the number of stored items. As capacity decreases, both baseline accuracy and robustness to increasing list length decline, reflecting reduced discriminability among compressed keys.

Figure 3: Recognition memory: (A) Encoding pipeline; (B) Query-key matching; (C-D) Reconstruction error vs. capacity; (E-F) Recognition accuracy (top-1/top-3) as a function of list size and capacity.
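The dependence of recognition on list size and key fidelity can be explored with a small simulation. The sketch below is an assumption-laden stand-in: it replaces the Hippocampal-VAE with additive Gaussian noise on random keys (the `noise_sd` parameter is hypothetical, as are the dimensions and trial counts) and scores top-k recognition by cosine similarity.

```python
import math
import random


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k_accuracy(list_size, noise_sd, k=1, dim=16, trials=200, seed=0):
    """Fraction of noisy probes whose true key ranks among the k best matches."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        keys = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(list_size)]
        target = rng.randrange(list_size)
        # Assumption: lossy compression is approximated by additive noise.
        probe = [x + rng.gauss(0, noise_sd) for x in keys[target]]
        ranked = sorted(range(list_size),
                        key=lambda i: cosine(probe, keys[i]),
                        reverse=True)
        hits += target in ranked[:k]
    return hits / trials


for n in (4, 16, 64):
    print(n,
          top_k_accuracy(n, noise_sd=0.0),
          top_k_accuracy(n, noise_sd=2.0),
          top_k_accuracy(n, noise_sd=2.0, k=3))
```

Because the random seed is fixed, top-3 accuracy is computed on the same probes as top-1 accuracy and can never fall below it. The absolute numbers depend entirely on the toy noise model and should not be compared with the paper's results.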

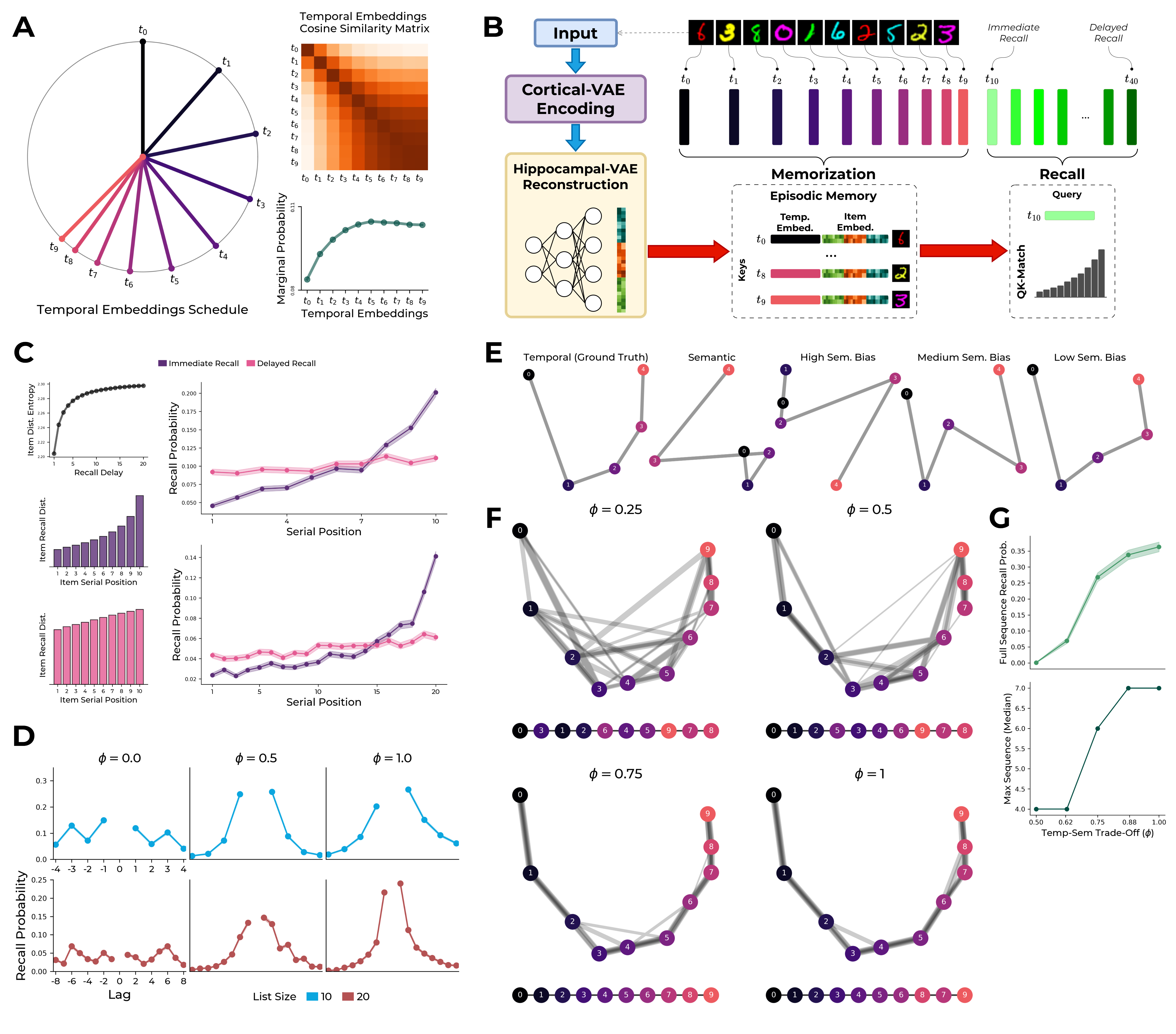

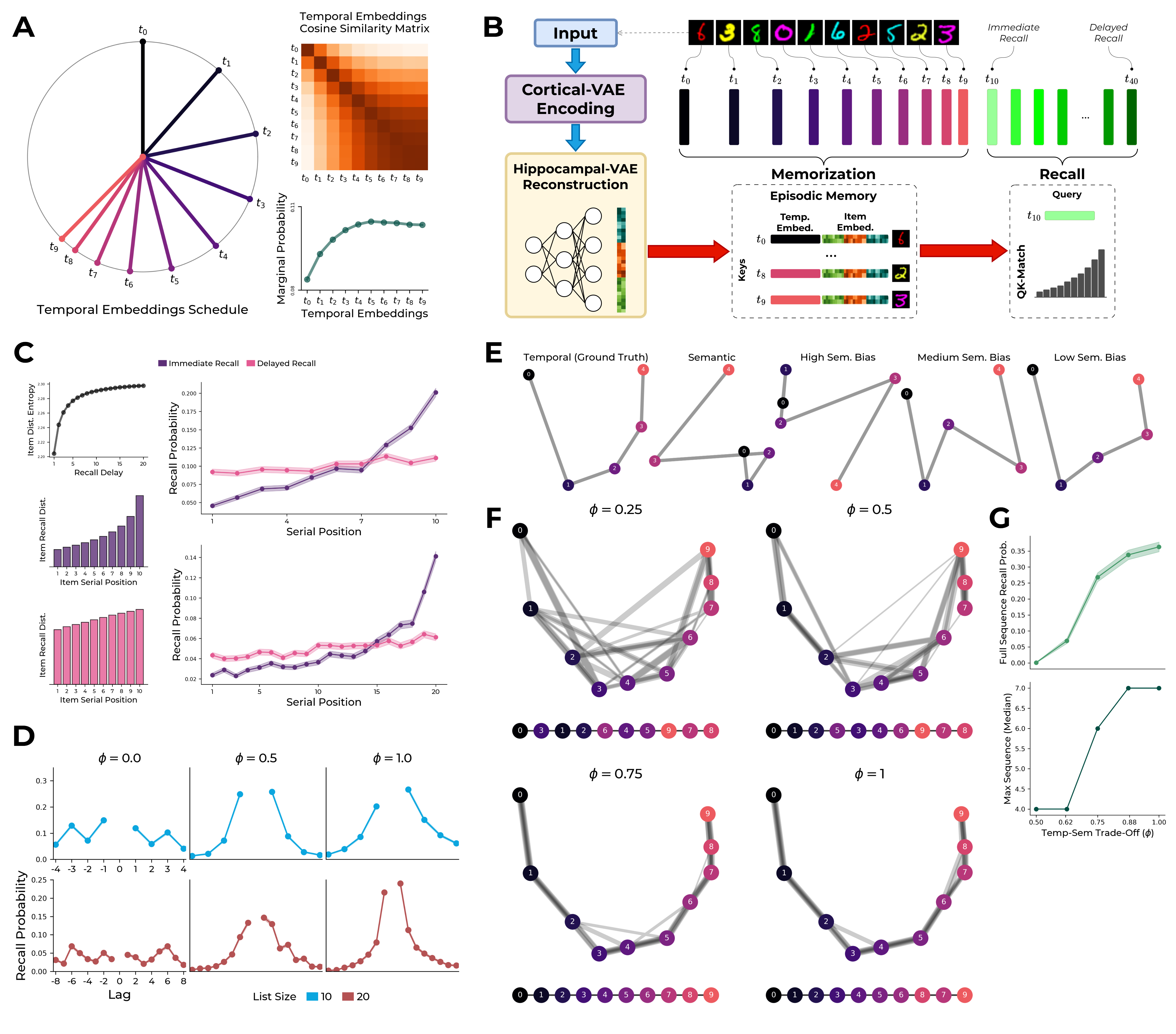

Serial recall is modeled by augmenting keys with temporal embeddings, generating a structured temporal manifold. The model reproduces recency effects, their attenuation with delayed recall, and the forward bias in recall transitions. The parameter ϕ controls the weighting of temporal vs. semantic information, modulating the prevalence of serial order effects and semantic intrusions.

Figure 4: Serial recall: (A-B) Temporal embedding schedule and usage; (C) Recency effect modulation; (D) Forward effect; (E-F) Recall path geometry and sequential recall; (G) Sequence recall probability vs. ϕ.
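One way to picture the role of ϕ is as a mixing weight between the item part and the temporal part of each key. The sketch below assumes a concatenated key with weights (1 − ϕ) and ϕ and a Gaussian-bump positional code so that neighboring positions resemble each other; neither choice is taken from the paper, and the function names are hypothetical.

```python
import math


def unit(v):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else list(v)


def make_key(item, position, length, phi):
    """Concatenate item and temporal parts, weighted by (1 - phi) and phi."""
    # Assumed temporal code: a Gaussian bump centered on the serial position.
    temporal = [math.exp(-0.5 * (position - j) ** 2) for j in range(length)]
    return [(1.0 - phi) * x for x in unit(item)] + [phi * t for t in unit(temporal)]


def recall(query_item, query_position, items, phi):
    """Index of the stored key with the largest dot product to the query key."""
    length = len(items)
    keys = [make_key(it, pos, length, phi) for pos, it in enumerate(items)]
    query = make_key(query_item, query_position, length, phi)
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    return max(range(length), key=scores.__getitem__)


items = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
# Cue: content resembling item 0, but the temporal context of position 1.
temporal_choice = recall([1.0, 0.0], 1, items, phi=0.9)
semantic_choice = recall([1.0, 0.0], 1, items, phi=0.1)
print(temporal_choice, semantic_choice)
```

With these toy values, the high-ϕ cue retrieves the item stored at position 1 (serial order dominates), while the low-ϕ cue retrieves item 0, the closest match in content. The latter is the kind of content-driven retrieval error that the text describes as a semantic intrusion.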

Gist-Based Distortion and Capacity-Limited Encoding

A critical prediction of GENESIS is that capacity limitations in the Cortical-VAE induce gist-based distortions in episodic recall. When encoding capacity is low, reconstructions of episodic memories converge toward semantic prototypes, losing idiosyncratic details. This is quantified by a reduction in the Vendi diversity score over ResNet feature maps, indicating increased self-similarity among reconstructed items.

Figure 5: Gist-based distortion: (A) Reconstructions at varying capacities; (B) Simulation pipeline; (C) Diversity (Vendi) score vs. capacity.

This mechanism provides a normative account of semantic intrusions and prototype effects in episodic memory, linking them to resource constraints.
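For reference, the Vendi score used above as a diversity measure has a compact definition. Given $n$ items and a positive semidefinite similarity kernel $k$ with $k(x, x) = 1$, let $K_{ij} = k(x_i, x_j)$ and let $\lambda_1, \dots, \lambda_n$ be the eigenvalues of $K/n$. Then

$$
\mathrm{VS}(x_1, \dots, x_n) \;=\; \exp\!\Big(-\sum_{i=1}^{n} \lambda_i \log \lambda_i\Big), \qquad 0 \log 0 := 0 .
$$

The score ranges from 1 (all items identical under $k$) to $n$ (all items mutually dissimilar), so it can be read as an effective number of distinct items. Which kernel the authors apply to the ResNet features is not stated in this summary; cosine similarity would be one natural assumption.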

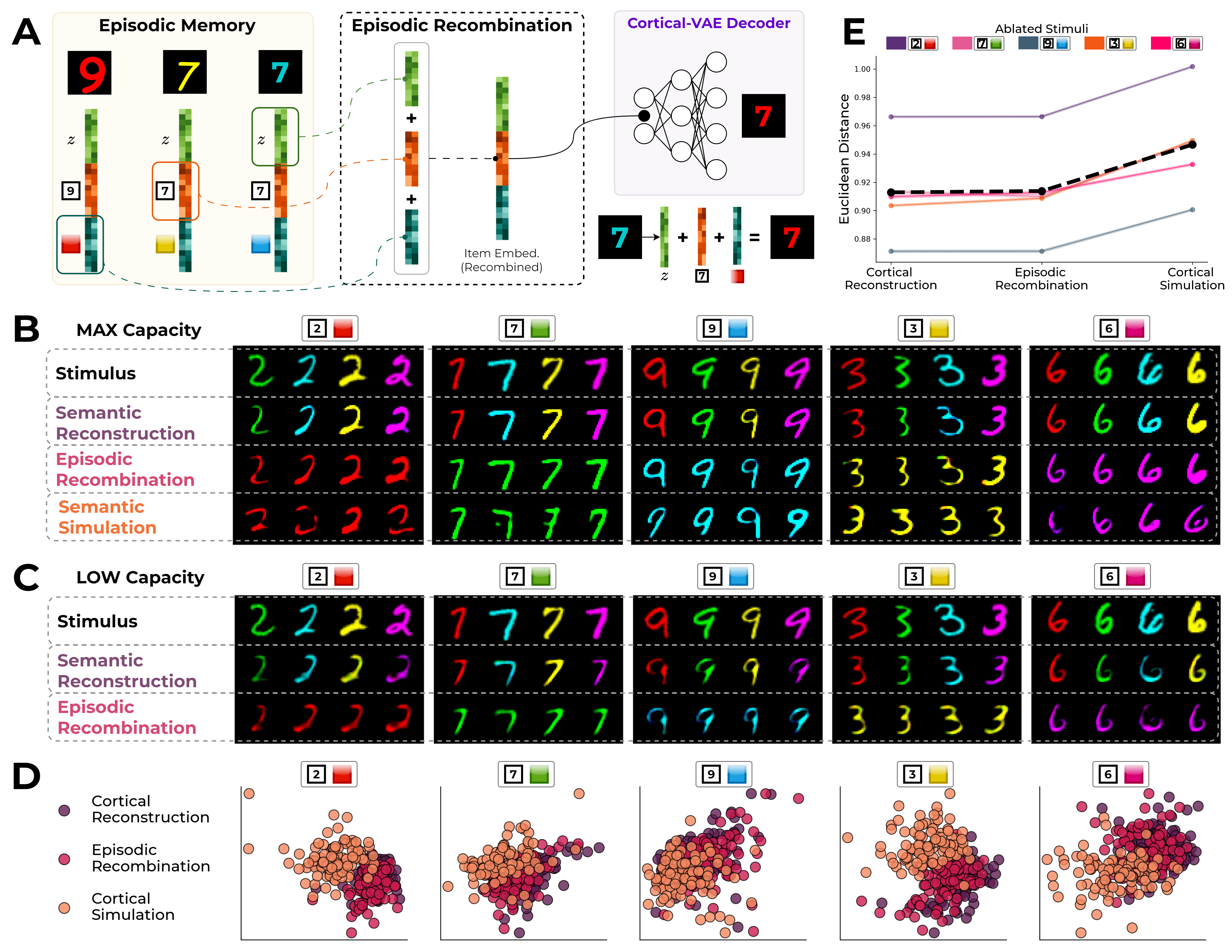

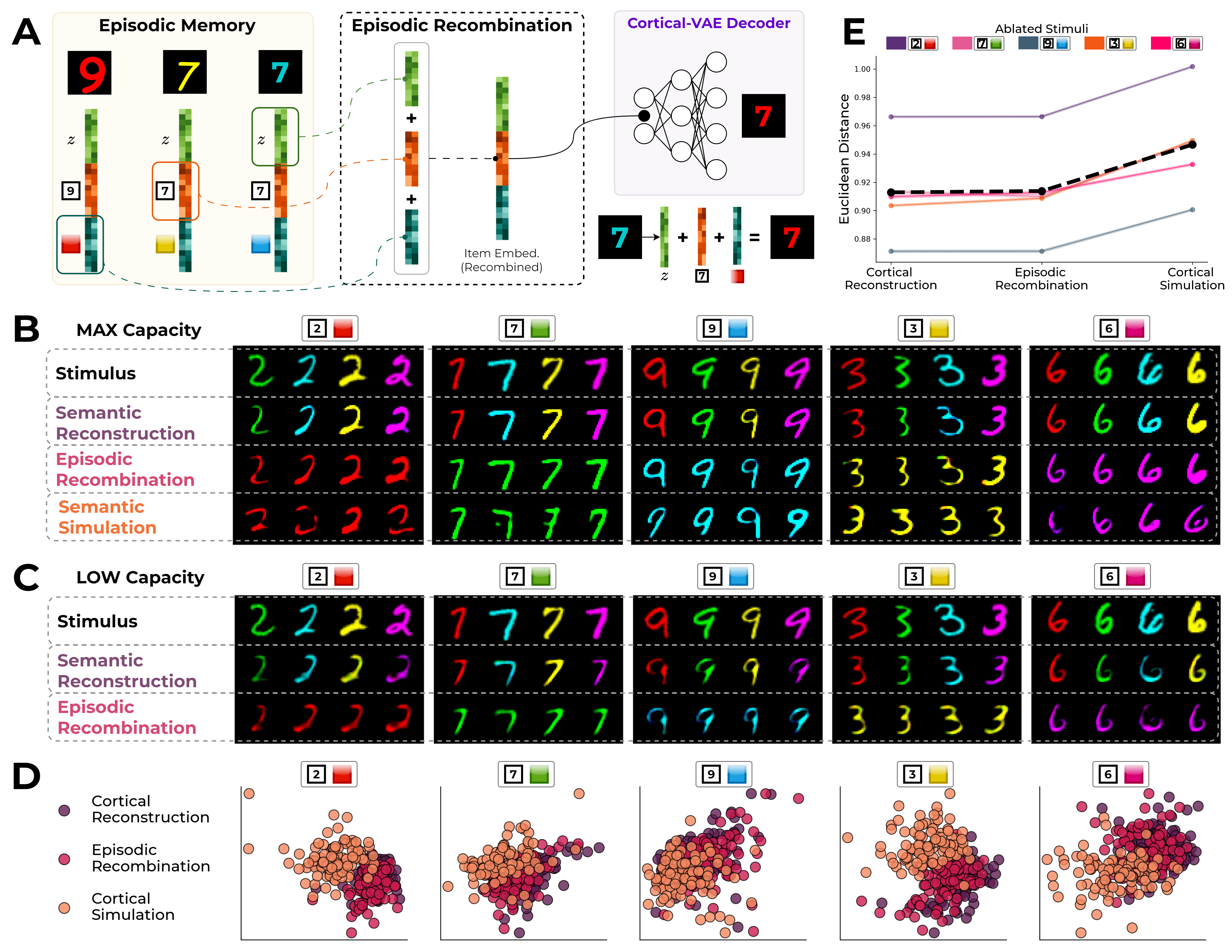

Constructive Episodic Simulation: Replay and Recombination

GENESIS supports constructive episodic simulation by recombining latent components from different episodic memories. By assembling new embeddings from existing z, eS, and eC components, the model generates novel, structurally faithful items not encountered during training. Episodic recombination yields reconstructions that are closer to original stimuli than those generated by unconstrained semantic simulation, as measured by Euclidean distance in feature space and multidimensional scaling (MDS) of ResNet embeddings.

Figure 6: Episodic recombination: (A) Recombination procedure; (B) Comparison of reconstruction, recombination, and simulation; (C) Low-capacity recombination; (D) MDS of generated images; (E) Reconstruction fidelity metrics.
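The recombination procedure amounts to mixing the z, eS, and eC components of stored embeddings into a new embedding that is then decoded. A minimal sketch follows, assuming each stored value is a triple of small vectors; the `Embedding` class, the `recombine` helper, and the toy numbers are hypothetical, and the decoding step through the Cortical-VAE is omitted.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Embedding:
    """One stored item embedding [z, eS, eC] (toy dimensions, assumed)."""
    z: Tuple[float, ...]    # Gaussian latent
    e_s: Tuple[float, ...]  # digit embedding (eS)
    e_c: Tuple[float, ...]  # color embedding (eC)


def recombine(z_from: Embedding, digit_from: Embedding,
              color_from: Embedding) -> Embedding:
    """Assemble a new embedding from components of existing memories."""
    return Embedding(z=z_from.z, e_s=digit_from.e_s, e_c=color_from.e_c)


def as_vector(e: Embedding) -> List[float]:
    """Flatten to the concatenated form a decoder would consume."""
    return list(e.z) + list(e.e_s) + list(e.e_c)


red_three = Embedding(z=(0.1, -0.4), e_s=(0.0, 1.0), e_c=(1.0, 0.0))
blue_seven = Embedding(z=(0.8, 0.3), e_s=(1.0, 0.0), e_c=(0.0, 1.0))

# A "blue three" that was never stored: style and digit from one memory,
# color from another.
novel = recombine(z_from=red_three, digit_from=red_three, color_from=blue_seven)
print(as_vector(novel))
```

Because every component comes from a stored episode, the recombined embedding stays close to previously experienced items, which is consistent with the reported advantage of recombination over unconstrained semantic simulation.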

Capacity limitations degrade the fidelity of recombined episodes, leading to increased self-similarity and loss of detail, paralleling age-related declines in episodic simulation.

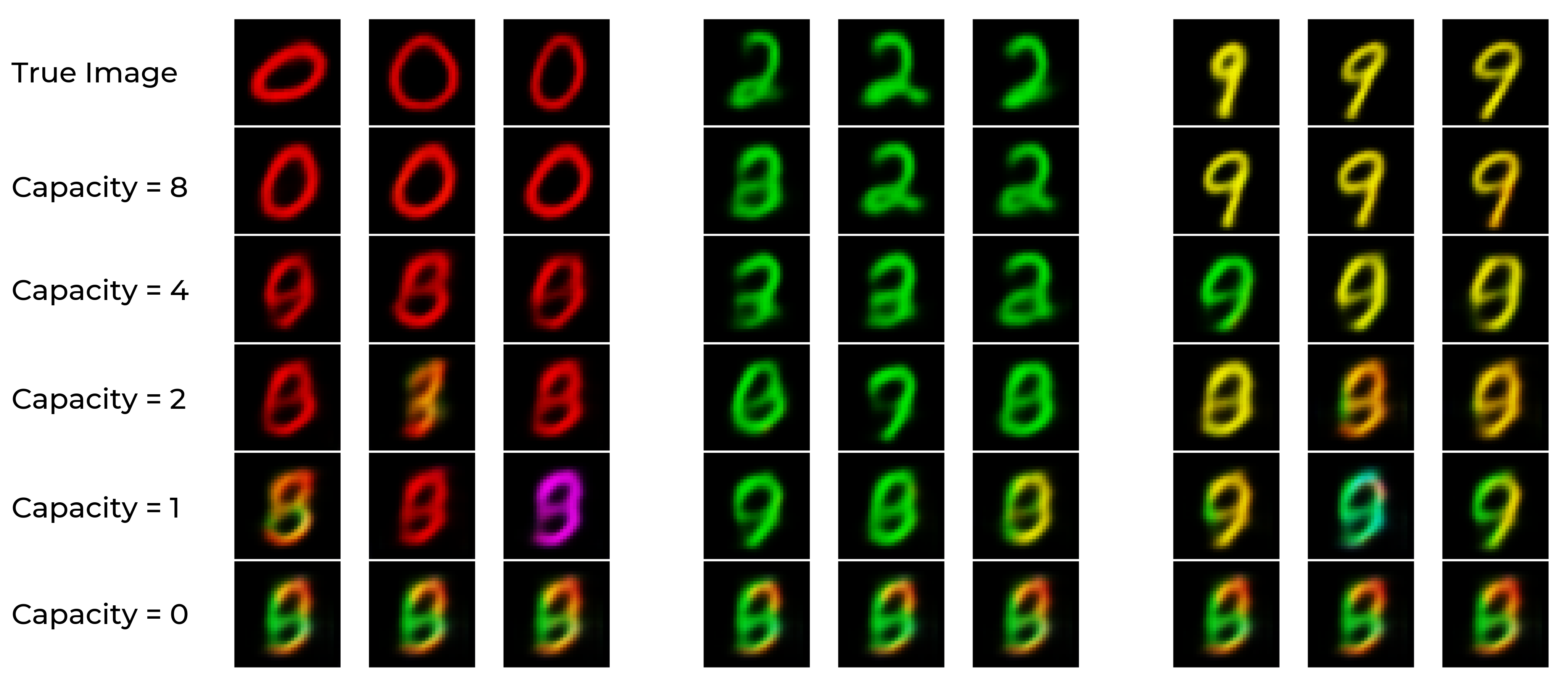

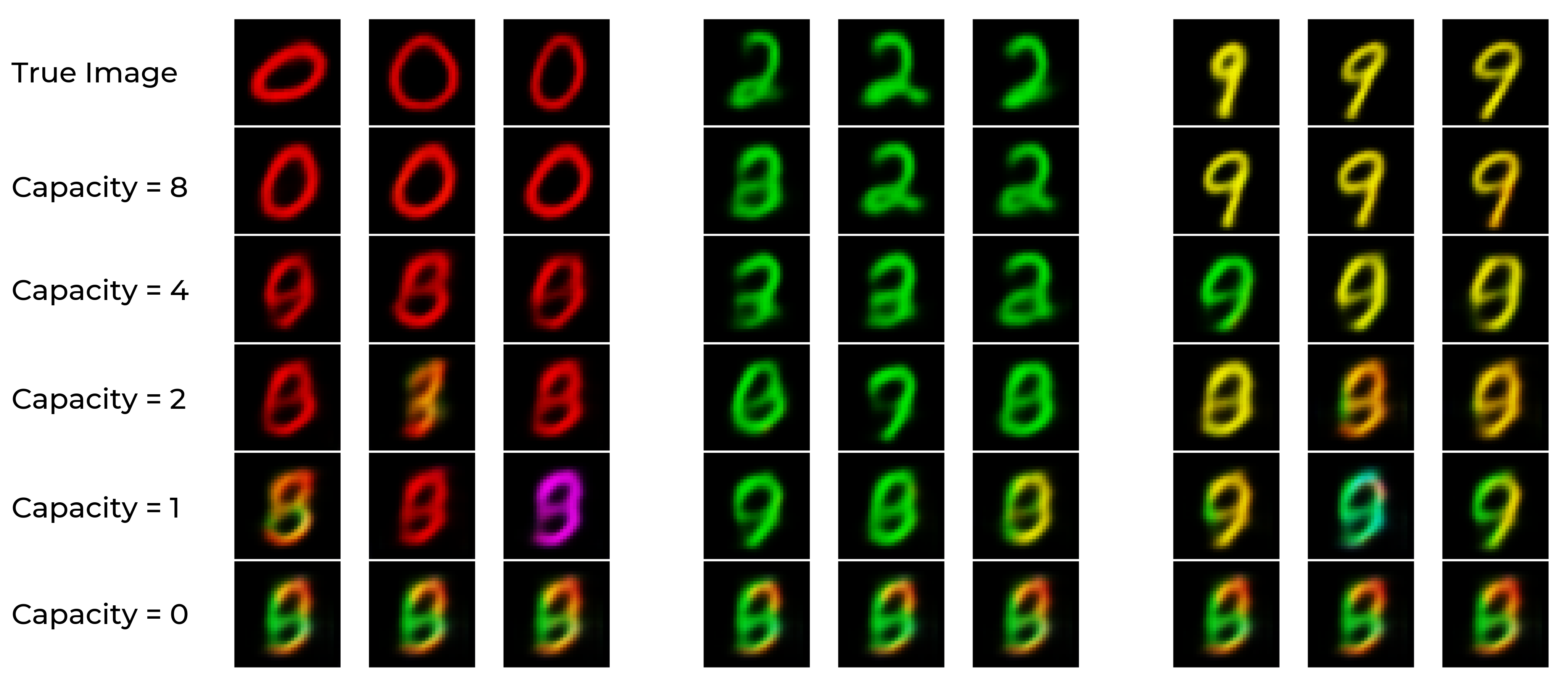

Lossy Compression and Hallucination

When both keys and values in episodic memory are subject to lossy compression (e.g., via the Hippocampal-VAE), reconstructions become increasingly chimeric, distorting both semantic and perceptual features.

Figure 7: Reconstructions from embeddings compressed at different Hippocampal-VAE capacities, illustrating progressive loss of detail and emergence of chimeric images.

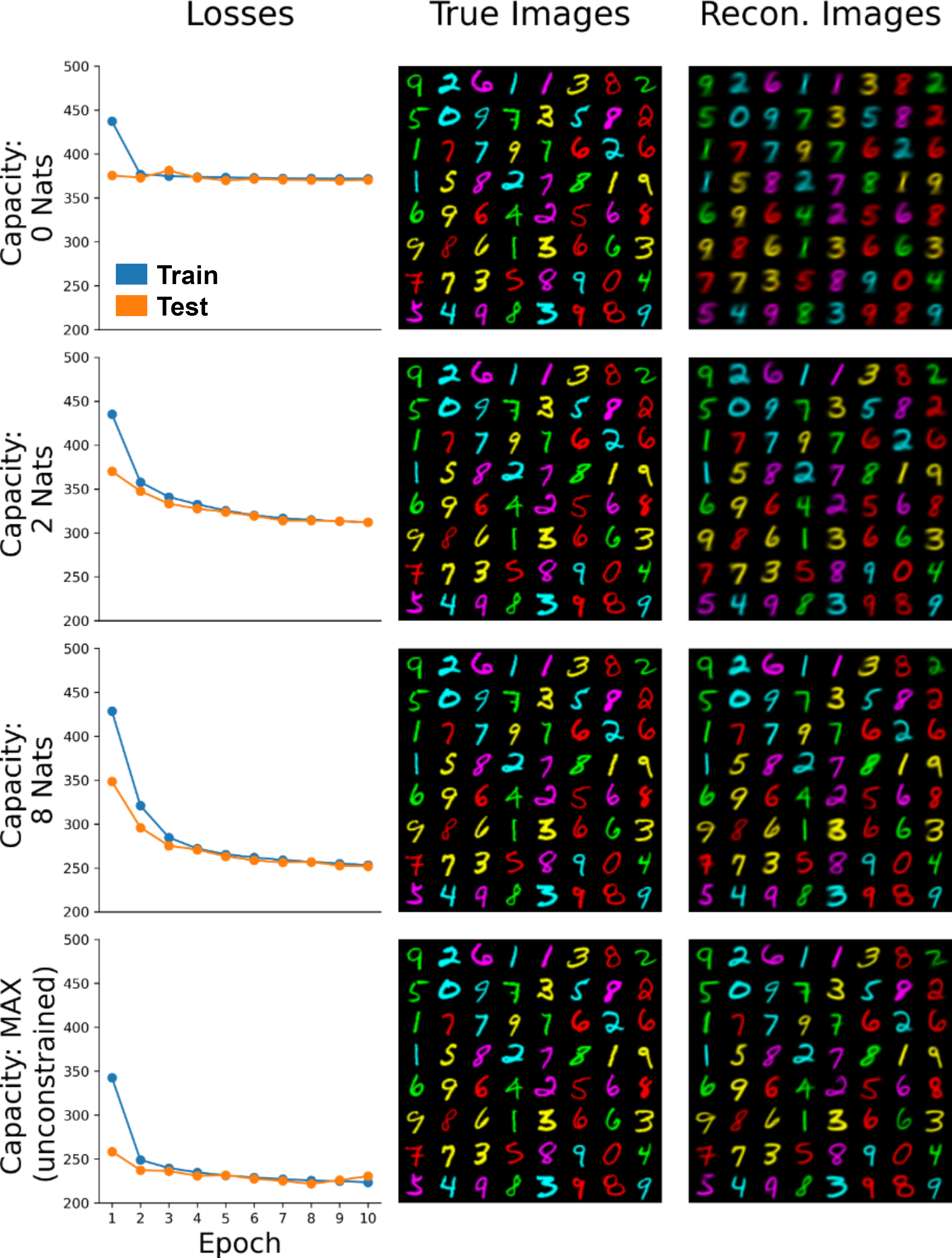

Training and Implementation Details

Implications and Future Directions

GENESIS advances a unified computational account of declarative memory, integrating semantic and episodic processes within a generative, resource-bounded framework. The model provides mechanistic explanations for a range of empirical phenomena, including:

- The trade-off between memory fidelity and capacity, formalized via rate-distortion theory.

- The emergence of semantic intrusions and gist-based distortions as a function of encoding constraints.

- The capacity for constructive episodic simulation via recombination of latent components.

- The dissociation between retrieval success (hippocampal capacity) and reconstruction fidelity (cortical capacity).

The model generates several testable predictions, such as distinct behavioral and neural signatures of compression in semantic vs. episodic subsystems, and the modulation of creative recombination by semantic similarity and resource constraints. Extensions could incorporate co-training of semantic and episodic modules, biologically plausible memory formation mechanisms, adaptive RAG components, and more realistic event segmentation and recall dynamics.

Conclusion

GENESIS provides a principled, generative framework for understanding the interaction of semantic and episodic memory under resource constraints. By modeling memory as the interaction of two limited-capacity generative systems within a retrieval-augmented architecture, the model accounts for a broad spectrum of empirical findings and offers a foundation for future theoretical and empirical work on the constructive, resource-bounded nature of human memory.