- The paper introduces LSHN, a model that integrates continuous Hopfield attractor dynamics with an autoencoder to achieve robust semantic association and retrieval.

- It reports retrieval accuracies of 0.982 on MNIST and 0.726 on CIFAR-10 with 1024 neurons when recalling 1000 stored half-masked images, and it remains robust under additive Gaussian noise.

- The model leverages neurobiological principles mimicking hippocampal CA3 dynamics to enhance episodic memory simulation and spatial structure recovery.

Latent Structured Hopfield Network for Semantic Association and Retrieval

Introduction and Motivation

The Latent Structured Hopfield Network (LSHN) addresses the challenge of scalable, biologically plausible associative memory for semantic association and episodic recall. While large-scale pretrained models have advanced semantic memory modeling, the mechanisms underlying the dynamic binding of semantic elements into episodic traces—mirroring hippocampal CA3 attractor dynamics—remain insufficiently explored. LSHN integrates continuous Hopfield attractor dynamics into an autoencoder framework, enabling robust, end-to-end trainable associative memory that is both scalable and neurobiologically inspired.

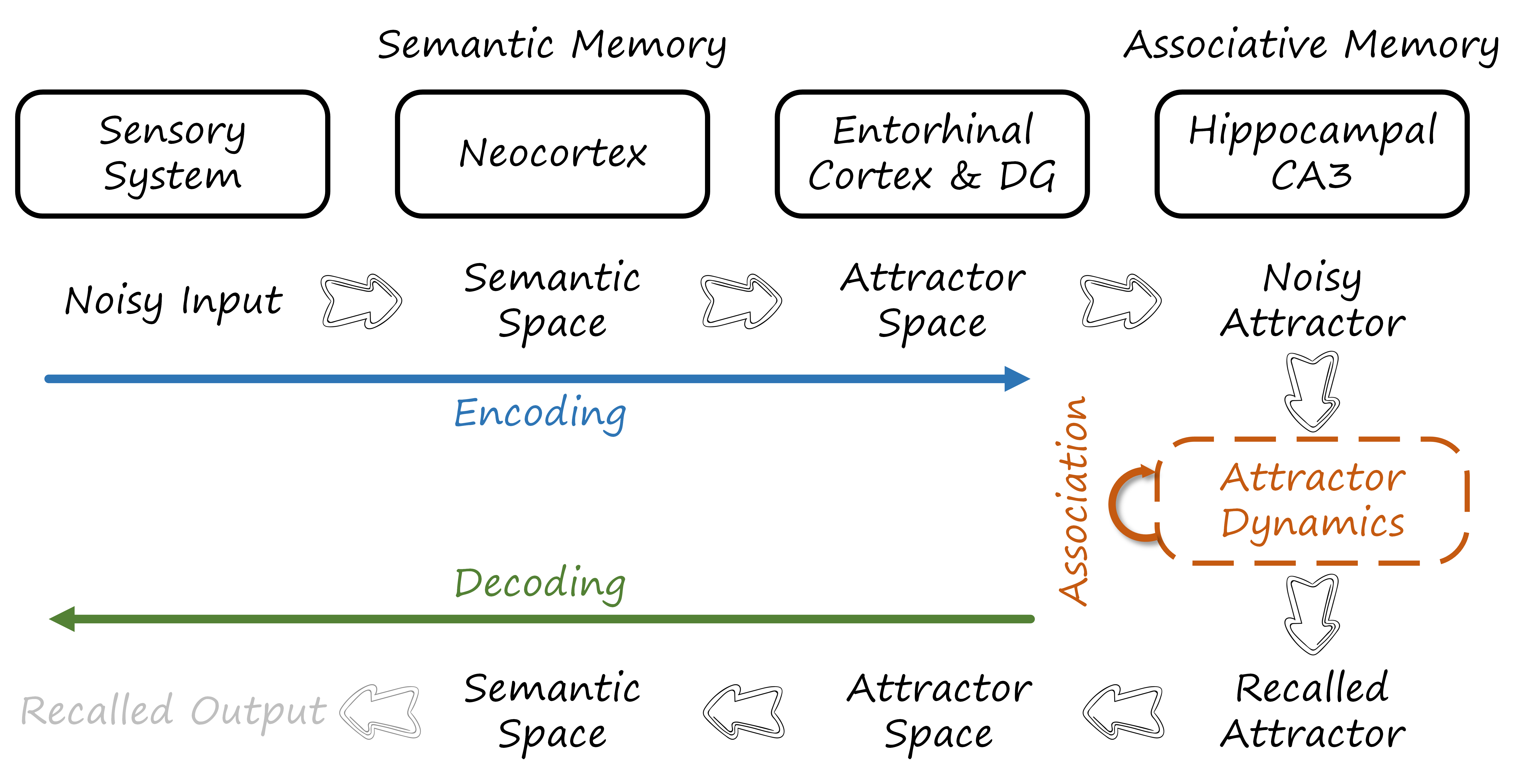

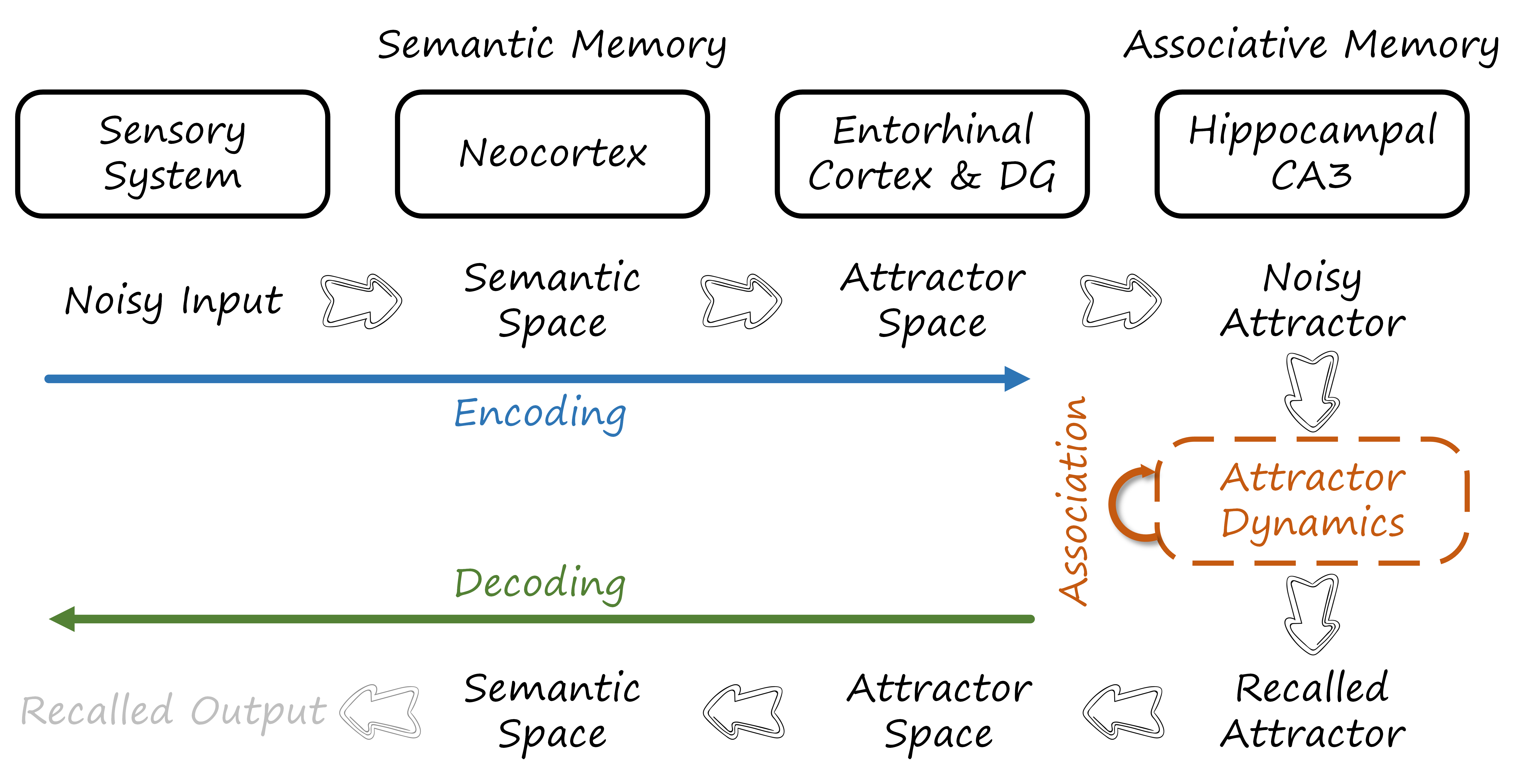

Figure 1: Overview of semantic and associative memory, mapping neocortical encoding, entorhinal relay, and hippocampal CA3 attractor dynamics.

Model Architecture

LSHN is structured as a three-stage system, each component corresponding to a distinct neuroanatomical substrate:

- Semantic Encoder (E): Maps input data (e.g., images) into a compact latent semantic space, constrained to [−1,1] via tanh activation, emulating neocortical sensory encoding.

- Latent Hopfield Association: Implements continuous-time Hopfield dynamics in the latent space, simulating CA3 attractor convergence. The network state $v$ evolves under symmetric weights $w_{i,j}$ and external input $I_i$, with a clipping function to enforce bounded activations.

- Decoder (D): Reconstructs the perceptual input from the attractor-refined latent vector, modeling entorhinal and neocortical decoding.

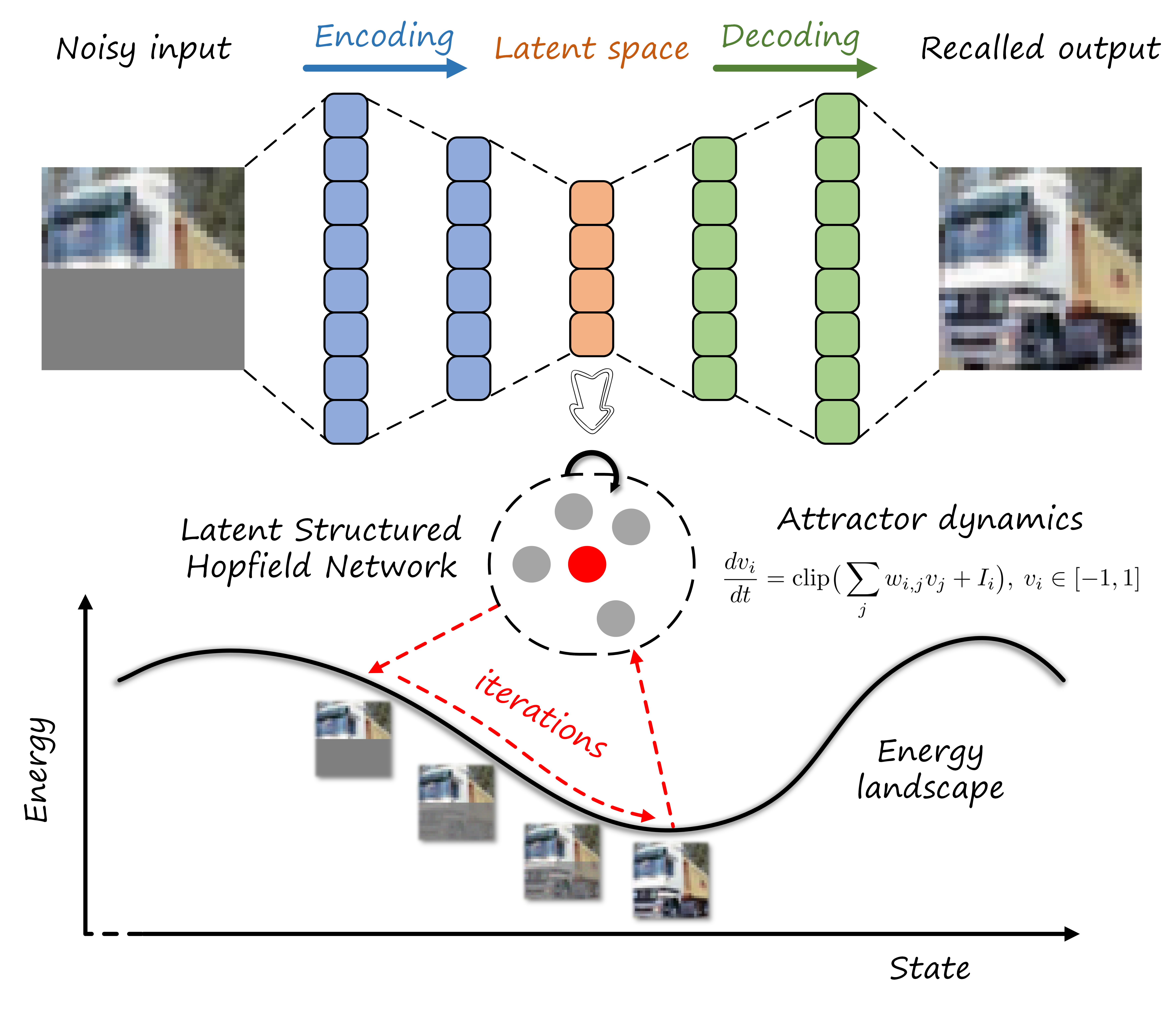

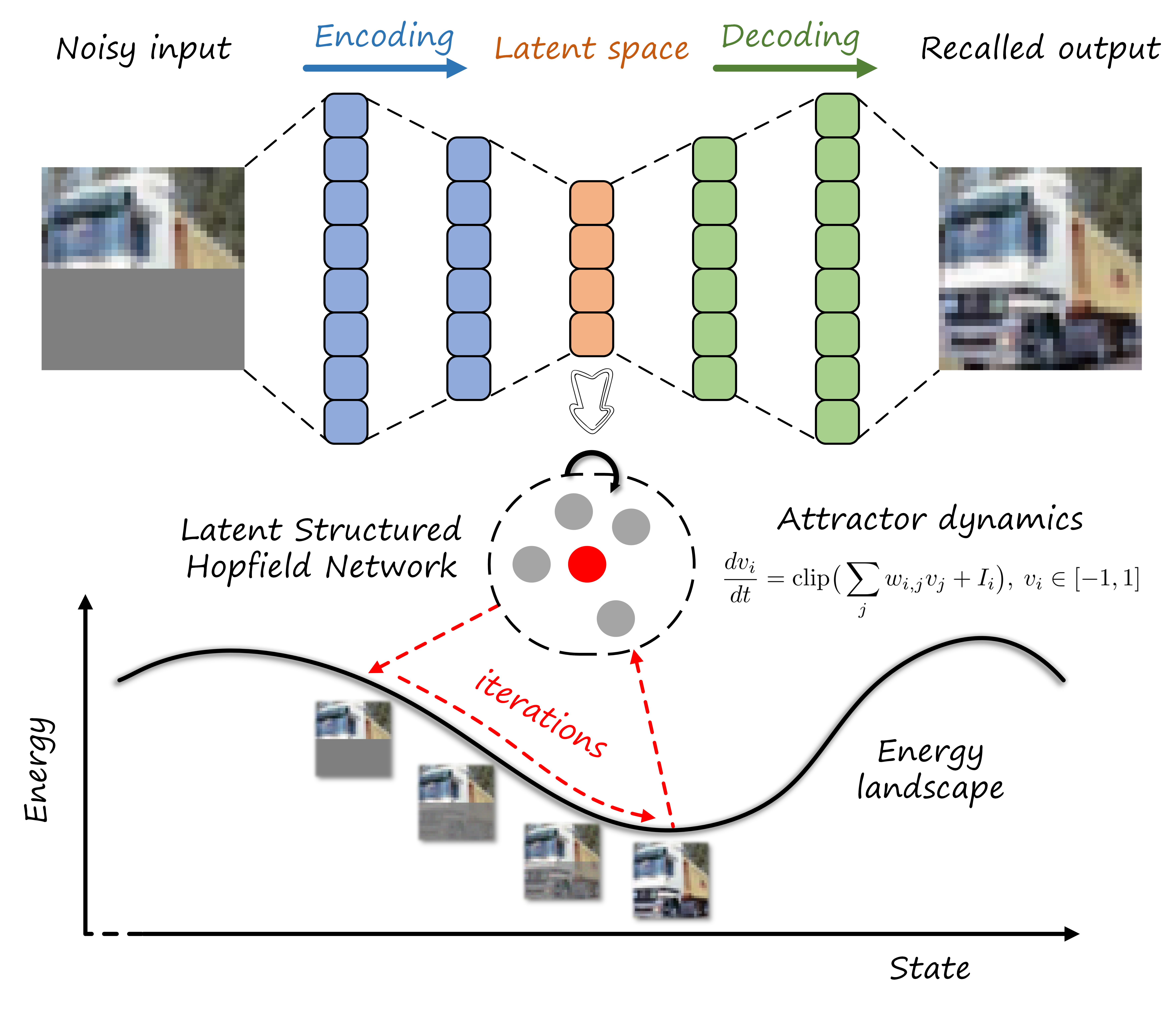

Figure 2: Diagram of the Latent Structured Hopfield Network, showing the encoder, Hopfield-based associative memory, and decoder modules.

The attractor dynamics are discretized for RNN implementation:

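$$v_i[t+1] = \operatorname{clamp}\Big(v_i[t] + \sum_j w_{i,j}\, v_j[t] + I_i\Big), \qquad \operatorname{clamp}(x) = \min(\max(x, -1), 1)$$

with $v[0] = E(x)$ or $v[0] = E(x_{\text{noisy}})$.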
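
The update rule above can be sketched in a few lines of plain Python. This is a minimal illustration, not the authors' implementation: the function names, the toy pattern, and the outer-product (Hebbian-style) weights are assumptions made here only to obtain a symmetric matrix without training, whereas LSHN learns its weights by gradient descent.

```python
def clamp(x, lo=-1.0, hi=1.0):
    """Clip x to [lo, hi], i.e. clamp(x) = min(max(x, -1), 1)."""
    return min(max(x, lo), hi)


def hopfield_step(v, w, ext):
    """One discrete update: v_i <- clamp(v_i + sum_j w_ij * v_j + I_i)."""
    n = len(v)
    return [
        clamp(v[i] + sum(w[i][j] * v[j] for j in range(n)) + ext[i])
        for i in range(n)
    ]


def run_dynamics(v0, w, ext, steps):
    """Apply the update a fixed number of times, starting from v0."""
    v = list(v0)
    for _ in range(steps):
        v = hopfield_step(v, w, ext)
    return v


# Toy setup (hypothetical values): one stored pattern, symmetric weights
# built with an outer-product rule and a zero diagonal.
pattern = [1.0, -1.0, 1.0, -1.0]
n = len(pattern)
w = [[0.0 if i == j else pattern[i] * pattern[j] / n for j in range(n)]
     for i in range(n)]
ext = [0.0] * n                  # no external input in this toy run
v0 = [0.9, -0.2, -0.3, -0.8]     # stands in for E(x_noisy); third unit has the wrong sign
recovered = run_dynamics(v0, w, ext, steps=10)
print(recovered)
```

In this toy run the corrupted start state is pulled back to the stored pattern within a few steps, which is the pattern-completion behaviour the attractor stage is meant to provide.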

The global loss combines autoencoder reconstruction, binary latent regularization, attractor convergence, and associative retrieval objectives:

$$L = L_{\text{AE}} + L_{\text{BL}} + L_{\text{attr}} + L_{\text{asso}}$$
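
The summary names the four terms but does not give their formulas, so the sketch below only shows how such an unweighted sum might be assembled. Every term definition here is an assumption chosen for illustration (mean squared error for reconstruction, convergence, and retrieval, and a penalty that pushes latent units toward ±1), and all function and argument names are hypothetical.

```python
def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def binary_latent_penalty(z):
    """Assumed form: zero when every latent unit sits at -1 or +1."""
    return sum((1.0 - abs(x)) ** 2 for x in z) / len(z)


def total_loss(x, x_recon, z, z_after_step, z_retrieved, z_target):
    """Unweighted sum of the four terms, mirroring L = L_AE + L_BL + L_attr + L_asso."""
    l_ae = mse(x_recon, x)                # autoencoder reconstruction (assumed MSE)
    l_bl = binary_latent_penalty(z)       # binary latent regularization (assumed form)
    l_attr = mse(z_after_step, z)         # a stored code should be a fixed point (assumed)
    l_asso = mse(z_retrieved, z_target)   # a corrupted cue should reach its target (assumed)
    return l_ae + l_bl + l_attr + l_asso


# Hypothetical values: perfect reconstruction and retrieval, but latents at 0
# instead of +/-1, so only the binary penalty contributes.
loss = total_loss(
    x=[0.5, 0.2], x_recon=[0.5, 0.2],
    z=[0.0, 0.0], z_after_step=[0.0, 0.0],
    z_retrieved=[0.0, 0.0], z_target=[0.0, 0.0],
)
print(loss)
```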

Experimental Evaluation

LSHN is evaluated on MNIST and CIFAR-10 for pattern completion under occlusion (half-masked images) and additive Gaussian noise. The model is compared against the Differential Neural Dictionary (DND) and Hebbian LSHN variants.
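
The two corruption types can be illustrated with a small, self-contained sketch. Which half of the image is masked, the fill value, and the accuracy metric (nearest stored pattern by squared distance) are not specified in this summary, so they are assumptions here, and the helper names are hypothetical.

```python
import random


def mask_half(image, fill=0.0):
    """Occlude the bottom half of an image given as a list of rows (assumed layout)."""
    keep = len(image) // 2
    return [list(row) if r < keep else [fill] * len(row)
            for r, row in enumerate(image)]


def add_gaussian_noise(image, sigma, rng):
    """Add independent zero-mean Gaussian noise with standard deviation sigma."""
    return [[px + rng.gauss(0.0, sigma) for px in row] for row in image]


def nearest_index(query, stored):
    """Index of the stored vector closest to query in squared L2 distance."""
    def dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(range(len(stored)), key=lambda k: dist(query, stored[k]))


def retrieval_accuracy(outputs, stored):
    """Assumed metric: fraction of outputs whose nearest stored pattern is their own source."""
    hits = sum(nearest_index(out, stored) == k for k, out in enumerate(outputs))
    return hits / len(outputs)


rng = random.Random(0)                      # seeded for repeatability
image = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
masked = mask_half(image)                   # occlusion cue
noisy = add_gaussian_noise(image, 0.1, rng) # noise cue
```

In a full evaluation, the masked or noisy image would be encoded, passed through the attractor dynamics, and the result compared with the stored patterns.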

Key empirical findings:

- LSHN achieves perfect or near-perfect recall for half-masked images with up to 100 stored patterns and maintains high accuracy as the number of stored patterns increases.

- Retrieval accuracy improves monotonically with the number of neurons, demonstrating scalability.

- Under increasing Gaussian noise, LSHN consistently outperforms DND and Hebbian LSHN, with attractor dynamics reliably converging to correct patterns for moderate noise levels.

Notable numerical results:

- On MNIST, LSHN with 1024 neurons achieves 0.982 retrieval accuracy for 1000 stored half-masked images.

- On CIFAR-10, LSHN with 1024 neurons achieves 0.726 retrieval accuracy for 1000 stored half-masked images, substantially outperforming DND and Hebbian baselines.

Latent Space Association and Autoencoder Quality

Attractor learning is performed in the latent space, not directly in the data space. The quality of the autoencoder critically affects overall performance. When evaluation is performed using autoencoder reconstructions as targets, retrieval metrics (MSE, SSIM) improve significantly, indicating that further improvements in autoencoder training could yield additional gains in associative memory performance.
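
A small numerical sketch may make the distinction between the two evaluation targets concrete. The values are made up for illustration, only MSE is shown (SSIM needs an image library), and `evaluate_retrieval` is a hypothetical helper rather than part of the paper's code.

```python
def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def evaluate_retrieval(retrieved, original, autoencoded):
    """Score a retrieved output against the raw input and against D(E(x))."""
    return {
        "vs_original": mse(retrieved, original),
        "vs_autoencoder": mse(retrieved, autoencoded),
    }


# Hypothetical data: the autoencoder is slightly lossy, and the attractor
# stage recovers the stored latent code exactly, so the decoded output equals
# the autoencoder reconstruction but not the raw input.
original = [0.0, 1.0, 0.0, 1.0]
autoencoded = [0.1, 0.9, 0.1, 0.9]   # stands in for D(E(x))
retrieved = [0.1, 0.9, 0.1, 0.9]     # decoded output after perfect latent retrieval
scores = evaluate_retrieval(retrieved, original, autoencoded)
print(scores)
```

Here the residual error against the original comes entirely from the autoencoder, which is why a better autoencoder would be expected to raise the measured retrieval quality.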

Episodic Memory Simulation in Realistic Environments

LSHN is integrated into the Vision-Language Episodic Memory (VLEM) pipeline and evaluated on the EpiGibson 3D simulation environment. The model is compared to VLEM's original attractor network.

Key results:

- LSHN matches VLEM in current event prediction and significantly outperforms it in next event prediction, especially under noise.

- Visualization of attractor trajectories reveals that LSHN captures the true spatial structure of the environment, whereas VLEM fails to do so.

Implementation Considerations

Training and Optimization:

- LSHN is trained end-to-end with gradient descent, enabling efficient learning of both autoencoder and attractor network parameters.

- The Hopfield dynamics are implemented as an RNN with a fixed number of iterations during training (e.g., 10) and extended iterations during inference (e.g., 1000) to ensure convergence.
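
The difference between a short training unroll and a long inference run can be sketched with an early-stopping loop. This is an illustration under assumptions: the tolerance-based stopping test, the function names, and the two-neuron toy weights are all hypothetical, and the summary does not say whether the authors stop early or always run the full budget.

```python
def step(v, w, ext):
    """One clamped update: v_i <- clamp(v_i + sum_j w_ij * v_j + I_i)."""
    n = len(v)
    return [
        min(max(v[i] + sum(w[i][j] * v[j] for j in range(n)) + ext[i], -1.0), 1.0)
        for i in range(n)
    ]


def iterate(v0, w, ext, max_steps, tol=1e-6):
    """Run up to max_steps updates; stop once the largest change falls below tol."""
    v = list(v0)
    for t in range(1, max_steps + 1):
        nxt = step(v, w, ext)
        if max(abs(a - b) for a, b in zip(nxt, v)) < tol:
            return nxt, t, True
        v = nxt
    return v, max_steps, False


# Toy network (hypothetical): weak symmetric coupling, so the state grows slowly.
w = [[0.0, 0.05], [0.05, 0.0]]
ext = [0.0, 0.0]
v0 = [0.1, 0.1]

_, steps_short, ok_short = iterate(v0, w, ext, max_steps=10)    # training-style budget
state, steps_long, ok_long = iterate(v0, w, ext, max_steps=1000)  # inference-style budget
print(ok_short, steps_short, ok_long, steps_long)
```

With the short budget the state has not yet settled, while the long budget lets it reach a fixed point well before the limit, which is the motivation for extending the iteration count at inference time.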

Resource Requirements:

- Memory and compute scale with the number of neurons in the latent Hopfield network. Empirically, increasing neuron count yields monotonic improvements in recall capacity and robustness.

- The model is compatible with standard deep learning frameworks and can be deployed on GPU hardware.
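
As a rough guide to how cost grows with neuron count, the arithmetic below assumes a dense N×N weight matrix stored in 32-bit floats and counts the diagonal among the free parameters of the symmetric matrix. These are assumptions for illustration; the summary does not state the storage format or whether self-connections are used.

```python
def hopfield_weight_stats(n_neurons, bytes_per_weight=4):
    """Back-of-the-envelope sizes for a dense symmetric weight matrix (assumed layout)."""
    dense = n_neurons * n_neurons                # entries in the full matrix
    free = n_neurons * (n_neurons + 1) // 2      # upper triangle including the diagonal
    return {
        "dense_weights": dense,
        "free_parameters": free,
        "dense_bytes": dense * bytes_per_weight,
        "multiply_adds_per_step": dense,         # one matrix-vector product per update
    }


for n in (256, 512, 1024):
    stats = hopfield_weight_stats(n)
    print(n, stats["dense_weights"], stats["dense_bytes"] / 2**20, "MiB")
```

Under these assumptions, both storage and per-step compute grow quadratically with the number of neurons, and a 1024-neuron network needs about 4 MiB for its weights.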

Limitations:

- The model's biological plausibility is limited by architectural simplifications and the use of gradient-based optimization.

- Performance on more complex, real-world data and in online, continual learning scenarios remains to be validated.

- Training and inference efficiency could be further optimized for real-time applications.

Theoretical and Practical Implications

LSHN provides a computational framework for studying the dynamic binding of semantic elements into episodic traces, grounded in hippocampal attractor dynamics. The model bridges the gap between biologically inspired associative memory and scalable, trainable architectures suitable for modern AI tasks. Its robust performance under occlusion and noise, as well as its ability to capture spatial structure in realistic environments, suggests utility for memory-augmented agents, continual learning, and cognitive modeling.

Future directions include:

- Scaling to larger, more diverse datasets and multimodal inputs.

- Incorporating additional neurobiological constraints (e.g., sparse coding, modularity).

- Exploring online and lifelong learning settings.

- Integrating with large-scale pretrained models for enhanced semantic encoding.

Conclusion

The Latent Structured Hopfield Network advances the state of associative memory modeling by integrating continuous Hopfield attractor dynamics into a scalable, end-to-end trainable autoencoder framework. The model demonstrates strong empirical performance on standard and realistic episodic memory tasks, with clear advantages in recall capacity, robustness, and spatial structure learning. LSHN offers a promising direction for both computational neuroscience and memory-augmented AI systems, with open challenges in scaling, biological fidelity, and real-world deployment.