- The paper presents FSGlove, which integrates dense IMU sensors and a differentiable calibration framework to model joint kinematics, hand shape, and sensor misalignment.

- It demonstrates high precision, with joint angle errors below 2.7° and mesh reconstruction errors ≤3.6 mm, outperforming comparable commercial gloves.

- The system's open-source, cost-effective design and compatibility with VR and robotics frameworks offer significant potential for advanced teleoperation and dexterous manipulation research.

FSGlove: An Inertial-Based Hand Tracking System with Shape-Aware Calibration

Introduction and Motivation

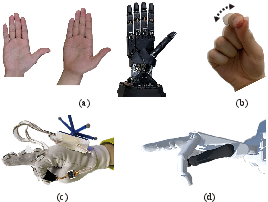

FSGlove addresses the limitations of existing hand motion capture (MoCap) systems, which typically offer restricted degrees of freedom (DoFs) and lack personalized hand shape modeling. These constraints hinder accurate data collection for applications in robotics, VR, and biomechanics, especially in contact-rich manipulation tasks. FSGlove introduces a high-DoF (up to 48 DoFs) inertial-based glove system, integrating dense IMU instrumentation and a differentiable calibration framework (DiffHCal) to simultaneously resolve joint kinematics, hand shape, and sensor misalignment. This unified approach enables anatomically consistent hand modeling and bridges the gap between human dexterity and robotic imitation.

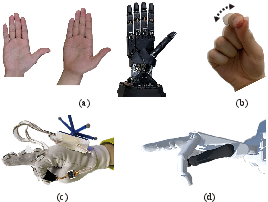

Figure 1: Hand shape variation across subjects and robotic hands, and the failure of motion transfer between heterogeneous shapes even with joint mapping.

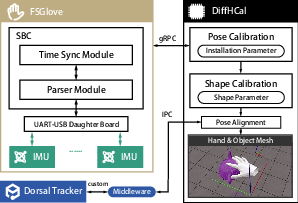

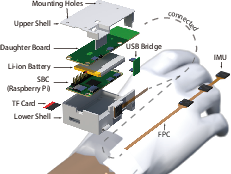

System Architecture and Hardware Design

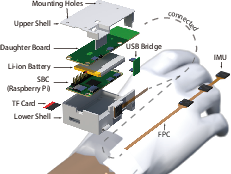

FSGlove comprises three subsystems: the glove for finger kinematics, a dorsal tracker for global hand translation, and a data acquisition suite for sensor fusion and visualization. The glove uses 16 IMUs (three per finger plus one on the dorsum), soldered onto a custom flexible printed circuit (FPC) and mounted on a stretchable nylon glove. A Raspberry Pi Zero 2W serves as the single-board computer (SBC), interfacing with a custom USB-UART daughter board that provides 16 UART ports, one per IMU. The system is powered by a 1100 mAh Li-ion battery, which allows two hours of untethered operation, and costs \$426 in total.

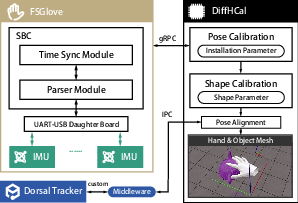

Figure 2: System architecture showing IMU data acquisition, calibration via DiffHCal, and output of hand-object mesh.

Figure 3: Glove hardware design with IMUs, FPC, Raspberry Pi Zero 2W, and custom daughter board.

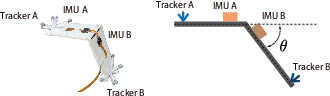

FSGlove supports multiple dorsal tracking modalities, including optical (Nokov MoCap) and VR (HTC Vive), ensuring compatibility with diverse motion capture ecosystems.

Figure 4: FSGlove's broad compatibility with optical and VR tracking systems.

Differentiable Calibration: DiffHCal

DiffHCal is a differentiable, shape-aware calibration pipeline built on the MANO hand model. It simultaneously calibrates joint kinematics, hand shape, and sensor misalignment using a minimal set of reference poses. Pose calibration uses three standard poses (rest, x-axis rotation, y-axis rotation), from which the transformation and correction matrices for IMU-to-phalanx alignment are estimated. Shape calibration uses touch-based reference poses: MANO's shape parameters (β) are optimized with a differentiable loss that minimizes the contact error between the mesh vertices that should be touching.

Figure 5: Calibration protocol with three pose calibration poses and fingertip-to-thumb contact for shape calibration.

The calibration process is fully differentiable, allowing for efficient optimization and integration into standard glove workflows without additional user burden.
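As a rough illustration of why differentiability matters here, the sketch below fits digit lengths by gradient descent on a squared thumb-to-fingertip contact gap. It is a planar toy under strong assumptions, not MANO and not the authors' DiffHCal: each digit is a single rigid segment, the thumb root sits at the origin, and the per-digit lengths stand in for the shape parameters β. The helpers `contact_loss_and_grad`, `fit_lengths`, and `synth_touch` and all numeric values are hypothetical.

```python
import math

def contact_loss_and_grad(lengths, roots, thumb_dirs, finger_dirs):
    """Squared thumb-to-fingertip gap summed over touch poses, plus its gradient.

    lengths[0] is the thumb length; lengths[i + 1] is the length of finger i.
    The thumb root sits at the origin; roots[i] is the root of finger i.
    """
    a = lengths[0]
    loss, grad = 0.0, [0.0] * len(lengths)
    for i, (p, u, v) in enumerate(zip(roots, thumb_dirs, finger_dirs)):
        b = lengths[i + 1]
        # Residual: thumb tip (a * u) minus fingertip (p + b * v).
        rx = a * u[0] - (p[0] + b * v[0])
        ry = a * u[1] - (p[1] + b * v[1])
        loss += rx * rx + ry * ry
        grad[0] += 2.0 * (rx * u[0] + ry * u[1])
        grad[i + 1] -= 2.0 * (rx * v[0] + ry * v[1])
    return loss, grad

def fit_lengths(init, roots, thumb_dirs, finger_dirs, lr=0.05, steps=2000):
    """Plain gradient descent on the contact loss (toy stand-in for shape fitting)."""
    lengths = list(init)
    for _ in range(steps):
        _, grad = contact_loss_and_grad(lengths, roots, thumb_dirs, finger_dirs)
        lengths = [l - lr * g for l, g in zip(lengths, grad)]
    return lengths

def synth_touch(root, thumb_len, finger_len):
    """Synthetic touch pose: unit directions of thumb and finger at contact."""
    d = math.hypot(root[0], root[1])
    ex, ey = root[0] / d, root[1] / d
    # Contact point = intersection of two circles (thumb reach, finger reach).
    x = (thumb_len ** 2 - finger_len ** 2 + d ** 2) / (2.0 * d)
    h = math.sqrt(thumb_len ** 2 - x ** 2)
    cx, cy = x * ex - h * ey, x * ey + h * ex
    u = (cx / thumb_len, cy / thumb_len)
    v = ((cx - root[0]) / finger_len, (cy - root[1]) / finger_len)
    return u, v

# Assumed ground-truth lengths in centimetres (illustrative values only).
true_lengths = [6.0, 7.0, 8.0, 7.5, 6.0]
roots = [(5.0, 0.0), (0.0, 6.0), (-7.0, 0.0), (0.0, -8.0)]
poses = [synth_touch(r, true_lengths[0], b) for r, b in zip(roots, true_lengths[1:])]
thumb_dirs = [u for u, _ in poses]
finger_dirs = [v for _, v in poses]

# Start from a generic hand and let the contact constraints personalize it.
estimated = fit_lengths([5.0] * 5, roots, thumb_dirs, finger_dirs)
print([round(l, 3) for l in estimated])
```

Because the loss is a smooth function of the lengths, the same loop carries over in principle to any differentiable hand model: only the forward kinematics and the gradient computation (typically automatic differentiation) change.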

Experimental Evaluation

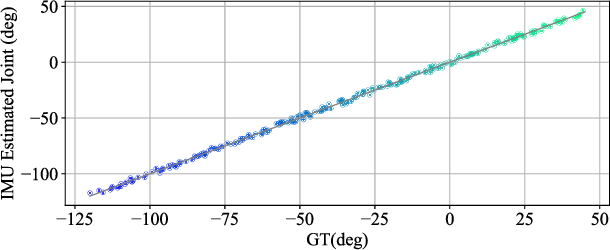

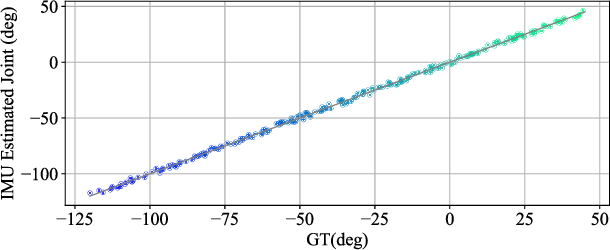

Joint Angle Accuracy

Single-joint measurement experiments, using Nokov optical MoCap as ground truth, show a joint angle error of less than 2.7° (std 1.8°), with negligible non-linearity and consistent accuracy across joint angles.
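The summary does not spell out how a joint angle is obtained from the IMU readings or how the error statistics are aggregated. The following is a minimal sketch of one common approach, assuming each segment's orientation is available as a unit quaternion in (w, x, y, z) order and that the joint angle is the rotation angle between adjacent segments. All function names are hypothetical.

```python
import math
import statistics

def q_conj(q):
    """Conjugate, which is the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)

def q_mul(a, b):
    """Hamilton product of two quaternions in (w, x, y, z) order."""
    w1, x1, y1, z1 = a
    w2, x2, y2, z2 = b
    return (
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    )

def q_axis_angle(axis, deg):
    """Unit quaternion for a rotation of `deg` degrees about `axis`."""
    n = math.sqrt(sum(c * c for c in axis))
    half = math.radians(deg) / 2.0
    s = math.sin(half) / n
    return (math.cos(half), axis[0] * s, axis[1] * s, axis[2] * s)

def joint_angle_deg(q_parent, q_child):
    """Rotation angle of the child segment relative to its parent, in degrees."""
    w, x, y, z = q_mul(q_conj(q_parent), q_child)
    return math.degrees(2.0 * math.atan2(math.sqrt(x * x + y * y + z * z), abs(w)))

def error_stats(measured, reference):
    """Mean and (population) standard deviation of the absolute angle error."""
    errs = [abs(m - r) for m, r in zip(measured, reference)]
    return statistics.mean(errs), statistics.pstdev(errs)

# Parent segment yawed by 30 degrees; child additionally flexed by 40 degrees.
q_parent = q_axis_angle((0.0, 0.0, 1.0), 30.0)
q_child = q_mul(q_parent, q_axis_angle((1.0, 0.0, 0.0), 40.0))
angle = joint_angle_deg(q_parent, q_child)
print(angle)
```

Working with the relative rotation makes the result independent of the global hand orientation, which is why the shared 30° yaw in the example does not affect the recovered flexion.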

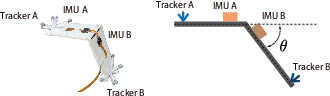

Figure 6: Experimental setup for single joint error measurement using optical ground truth.

Figure 7: IMU angle output closely matches Nokov MoCap measurements, confirming high joint accuracy.

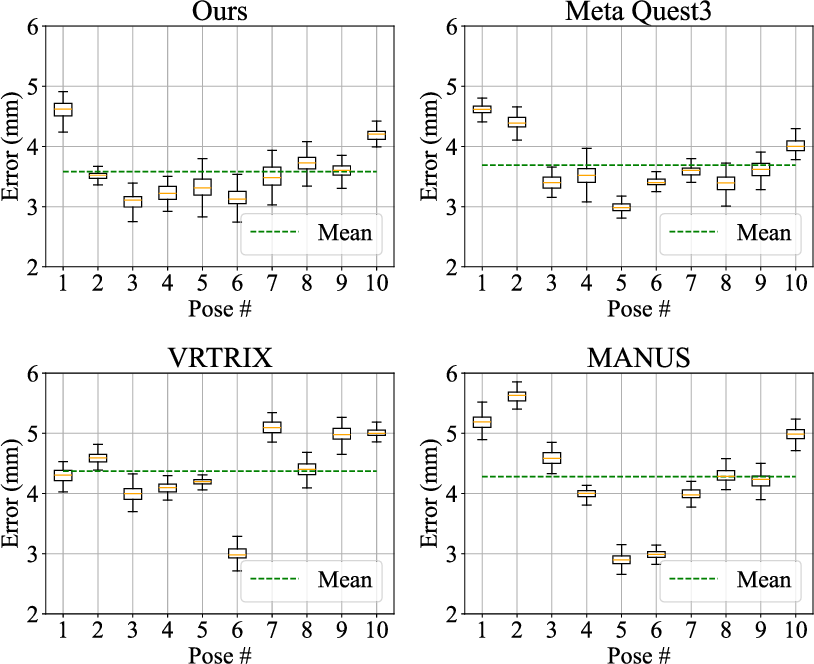

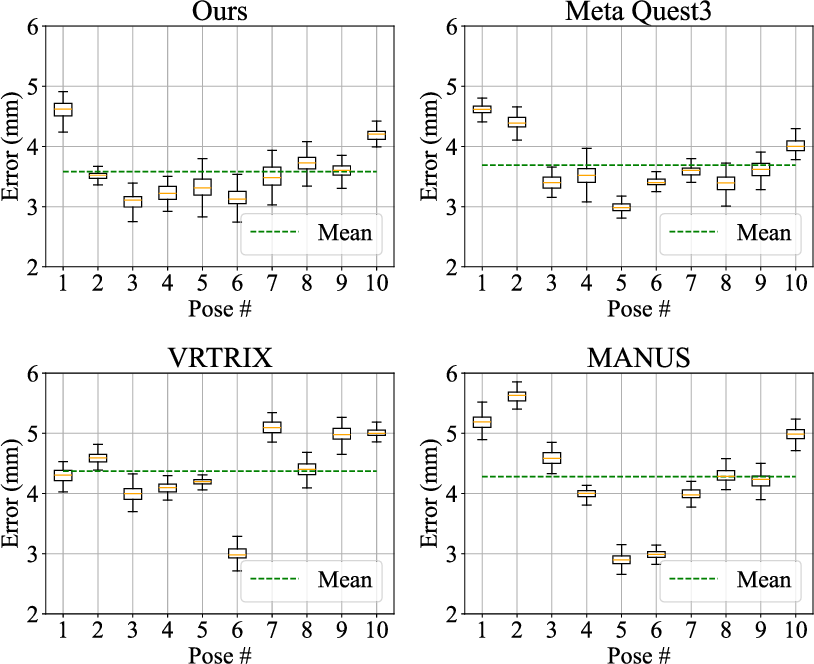

Shape Reconstruction

FSGlove achieves a mean mesh error of ≤3.6 mm in shape reconstruction, comparable to Meta Quest3 and better than the VRTRIX and MANUS gloves. Ground truth comes from Photoneo MotionCam depth images, with the hand segmented by GroundedSAM, and Chamfer Distance serves as the error metric.
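Chamfer Distance has several variants (squared or unsquared, summed or averaged), and the summary does not say which one the evaluation uses. The sketch below assumes the symmetric, unsquared form that averages the two mean nearest-neighbour distances. `chamfer_distance` and the sample points are hypothetical.

```python
import math

def chamfer_distance(a, b):
    """Symmetric Chamfer distance between two point sets.

    Assumed variant: average of the mean nearest-neighbour Euclidean
    distance from a to b and from b to a.
    """
    def mean_nn(src, dst):
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)

    return 0.5 * (mean_nn(a, b) + mean_nn(b, a))

# Illustrative points in millimetres: reconstructed mesh vertices vs. a depth scan.
mesh_pts = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
scan_pts = [(0.0, 0.0, 1.0), (10.0, 0.0, -1.0), (0.0, 10.0, 2.0)]
print(chamfer_distance(mesh_pts, scan_pts))
```

This brute-force version compares every pair of points, so it is only suitable for small sets. For full-resolution depth clouds, a spatial index such as a k-d tree would be the usual choice.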

Figure 8: Quantitative results of shape reconstruction, showing FSGlove's superior performance over commercial gloves.

Figure 9: Qualitative results of hand shape reconstruction across diverse poses.

Fingertip Pinch Tracking

FSGlove attains the lowest average fingertip positional error (15.7 mm), outperforming Meta Quest3, VRTRIX, and MANUS. Notably, FSGlove achieves the best accuracy for the index and ring fingers, which are critical for grasping and manipulation tasks.

Hand-Object Interaction Consistency

FSGlove demonstrates superior hand-object interaction fidelity, with a mean positional error of ≤20 mm, outperforming commercial alternatives. The system maintains anatomically plausible contact states during manipulation, validated across five objects from the ContactPose dataset.

Implementation Considerations

FSGlove's open-source hardware and software design facilitates replication and integration into existing VR and robotics frameworks. The system operates at a 100 Hz sampling rate, with a total latency of 24 ms (glove) and 40 ms (display), supporting real-time applications. IMU drift is negligible for short-term data collection, and daily recalibration mitigates glove stretching effects. The cost-effective design (\$426) democratizes access to high-fidelity hand tracking.

Implications and Future Directions

FSGlove advances the state of the art in hand MoCap by unifying high-DoF kinematic tracking and personalized shape modeling in a single, open-source system. The differentiable calibration framework enables robust adaptation to individual hand anthropometry and sensor misalignment, both critical for contact-rich manipulation and teleoperation. Future work includes enhancing IMU precision for sub-degree tracking, dynamic adaptation to soft-tissue deformation, and integration of tactile feedback. Extending DiffHCal to support federated learning could enable automated, personalized calibration. Applications in surgical robotics, immersive teleoperation, and large-scale data collection for imitation learning are promising directions.

Conclusion

FSGlove introduces a high-DoF, inertial-based hand tracking system with shape-aware, differentiable calibration, achieving state-of-the-art accuracy in joint kinematics, shape reconstruction, and contact fidelity. Its open-source design and compatibility with VR and robotics ecosystems enable broad adoption and further research. The system's robust performance and extensibility position it as a foundational tool for advancing dexterous manipulation, teleoperation, and embodied AI.