OSMO: Open-Source Tactile Glove for Human-to-Robot Skill Transfer (2512.08920v1)

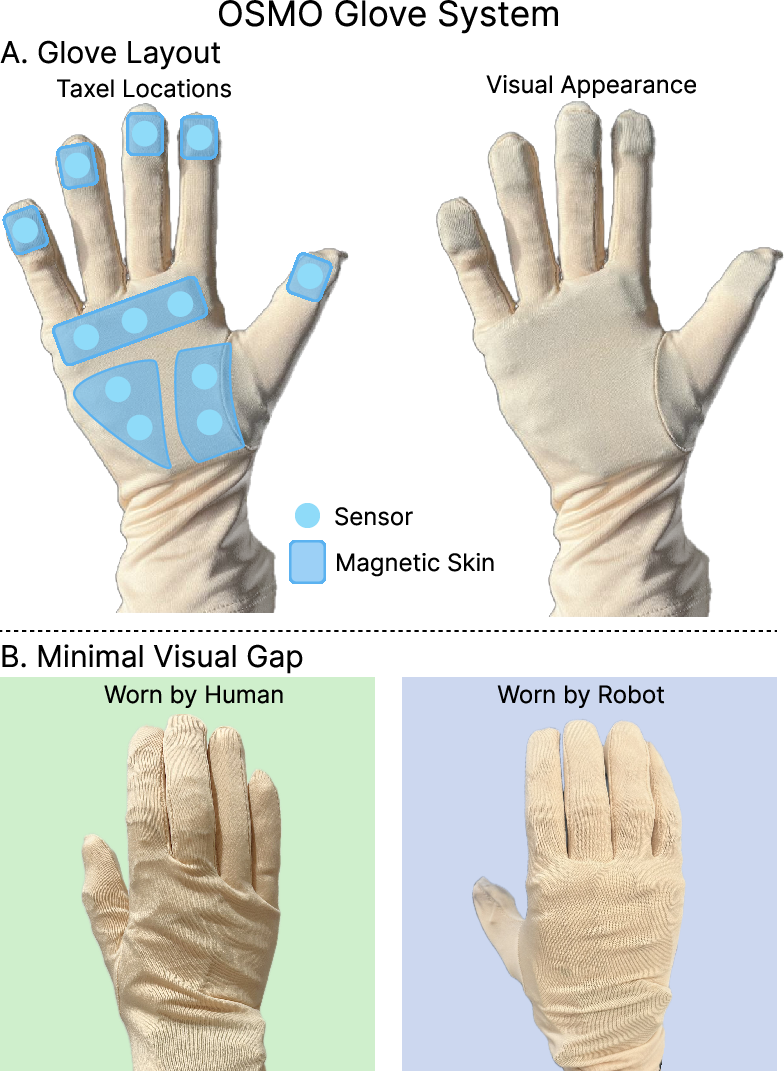

Abstract: Human video demonstrations provide abundant training data for learning robot policies, but video alone cannot capture the rich contact signals critical for mastering manipulation. We introduce OSMO, an open-source wearable tactile glove designed for human-to-robot skill transfer. The glove features 12 three-axis tactile sensors across the fingertips and palm and is designed to be compatible with state-of-the-art hand-tracking methods for in-the-wild data collection. We demonstrate that a robot policy trained exclusively on human demonstrations collected with OSMO, without any real robot data, is capable of executing a challenging contact-rich manipulation task. By equipping both the human and the robot with the same glove, OSMO minimizes the visual and tactile embodiment gap, enabling the transfer of continuous shear and normal force feedback while avoiding the need for image inpainting or other vision-based force inference. On a real-world wiping task requiring sustained contact pressure, our tactile-aware policy achieves a 72% success rate, outperforming vision-only baselines by eliminating contact-related failure modes. We release complete hardware designs, firmware, and assembly instructions to support community adoption.


Explain it Like I'm 14

What this paper is about

This paper introduces OSMO, a thin, wearable “tactile” glove that acts like a skin for both people and robots. It captures how hard and in what direction the hand is pressing or sliding on objects. The big idea is to let robots learn hands-on skills by “watching and feeling” how humans do tasks, not just by looking at videos. The team shows that a robot can learn a contact-heavy task—wiping a whiteboard—using only human demonstrations collected with this glove, and then perform the task successfully using the same kind of glove on its own hand.

The main questions the researchers asked

- Can a simple, flexible glove collect the important “feel” information (forces) that videos miss?

- Can a robot learn a real, contact-rich skill (like wiping with steady pressure) using only human data gathered with this glove?

- If both the human and the robot wear the same glove, does it make learning and transferring skills easier and more reliable?

How the glove and learning system work (in everyday language)

Think of the glove as a lightweight “smart skin”:

- What the sensors feel: Each fingertip and parts of the palm have tiny magnetic sensors that can detect:

- Normal force: pushing straight in, like pressing a button.

- Shear force: sideways force, like rubbing or sliding when you wipe.

- Why force matters: In many tasks, you can’t tell by sight how hard someone is pressing. Two videos can look the same even if one person presses lightly and another presses firmly. The glove captures that hidden “feel” information.
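Here is a minimal sketch of how one three-axis reading could be split into the normal and shear parts described above. It assumes each taxel's z axis points along the contact surface normal. The paper does not spell out this taxel-to-hand frame mapping. The glove also reports raw magnetic-field changes in µT rather than calibrated newtons. The function and type names are hypothetical.

```python
import math
from typing import NamedTuple, Tuple


class ContactComponents(NamedTuple):
    normal: float           # component along the ASSUMED surface normal (taxel z axis)
    shear_x: float          # in-plane component along taxel x
    shear_y: float          # in-plane component along taxel y
    shear_mag: float        # magnitude of the in-plane (shear) vector
    shear_angle_deg: float  # direction of shear within the taxel's x-y plane


def split_normal_shear(reading_xyz: Tuple[float, float, float]) -> ContactComponents:
    """Split a 3-axis taxel reading into normal and shear parts.

    Hypothetical helper: assumes z is the surface normal and keeps the input's
    units (raw µT here, not calibrated force).
    """
    x, y, z = reading_xyz
    shear_mag = math.hypot(x, y)
    angle = math.degrees(math.atan2(y, x)) if shear_mag > 0 else 0.0
    return ContactComponents(z, x, y, shear_mag, angle)


# A wiping stroke: mostly pressing (z) with some sideways drag in x and y.
print(split_normal_shear((3.0, 4.0, 12.0)))
```

The shear magnitude here is 5.0, the length of the (3, 4) in-plane vector. The normal component is the z value, 12.0.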

How the sensing works, simply:

- Imagine a compass needle moving when a magnet gets close. The glove uses a similar principle: small magnetic patches deform when pressed, and nearby magnetic-field sensors (magnetometers) measure how the field changes. From those changes, the system can estimate force along three directions.

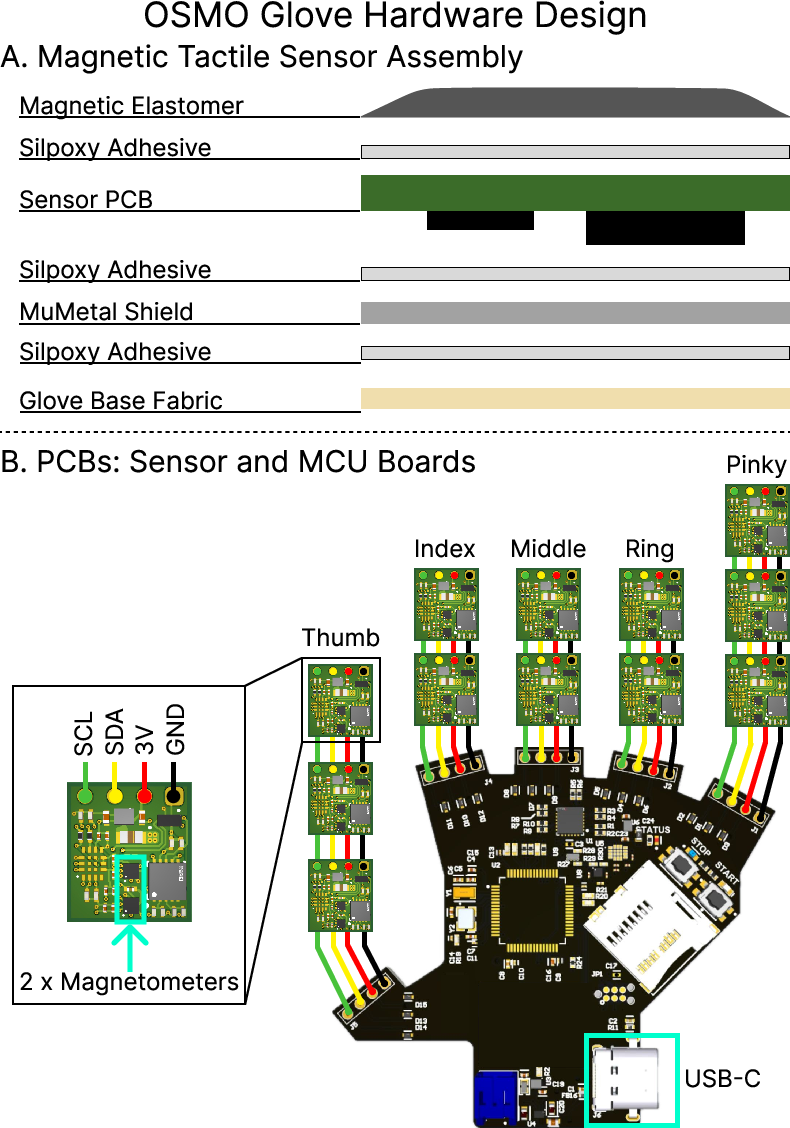

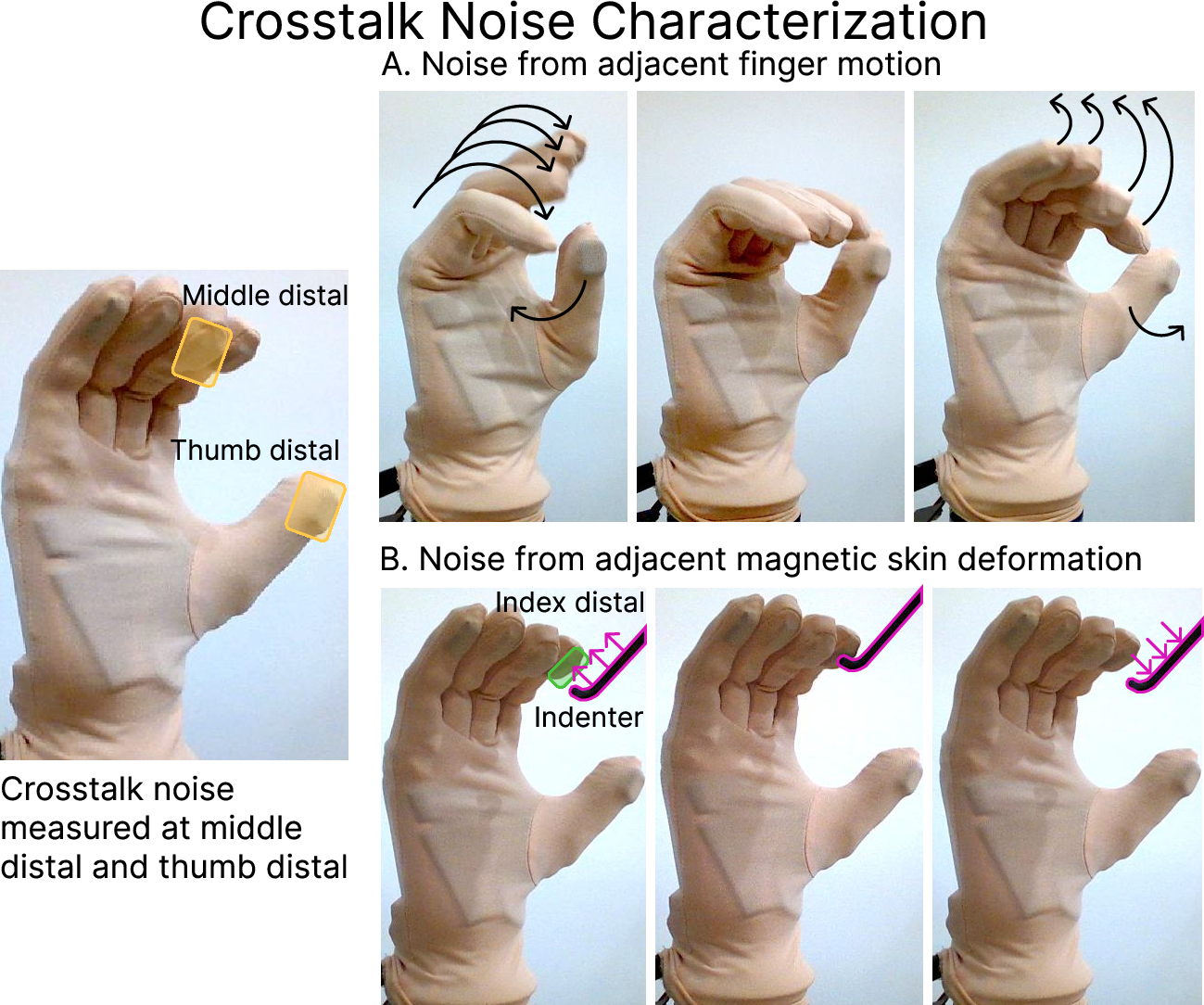

- Preventing “sensor chatter” (crosstalk): With many magnets close together, sensors can “hear” their neighbors and get noisy. The team reduced this interference in two ways:

- MuMetal shielding: like putting walls between rooms so sounds don’t leak.

- Differential sensing: using two magnetometers per spot and subtracting their readings, similar to noise-canceling headphones that remove background hum.

- These steps made the signals noticeably cleaner when fingers moved or neighboring sensors were pressed. One trade-off: the shielding increases noise on the Z axis.
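Below is a toy simulation of the differential idea. It assumes a single axis, equal coupling of the disturbance into both magnetometers, and made-up noise levels; none of these numbers come from the paper. No contact is applied, so any output is interference. A lower RMS value is therefore better; RMS in µT is the noise metric the paper reports. For differential sensing to keep real contact signals, contact must change the two magnetometers' readings by different amounts. This sketch does not model that part.

```python
import math
import random
from typing import List, Tuple


def rms(values: List[float]) -> float:
    """Root-mean-squared value of a signal (used here as the noise metric, in µT)."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def simulate(n: int = 2000, seed: int = 0) -> Tuple[float, float]:
    """Compare single-magnetometer and differential traces under a common-mode disturbance.

    All magnitudes are hypothetical; no contact is applied, so any output is interference.
    """
    rng = random.Random(seed)
    single, differential = [], []
    for _ in range(n):
        # Disturbance seen equally by both magnetometers (e.g. a neighbouring
        # taxel's magnet or a drifting ambient field).
        common = rng.gauss(0.0, 5.0)
        near = common + rng.gauss(0.0, 0.3)  # magnetometer next to the elastomer
        far = common + rng.gauss(0.0, 0.3)   # reference magnetometer
        single.append(near)
        differential.append(near - far)      # the common term cancels
    return rms(single), rms(differential)


single_rms, diff_rms = simulate()
print(f"single: {single_rms:.2f} µT, differential: {diff_rms:.2f} µT")
```

Subtraction removes the shared disturbance but adds the two sensors' independent noise. This trade pays off when interference dominates the sensors' own noise floor.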

Collecting human demonstrations:

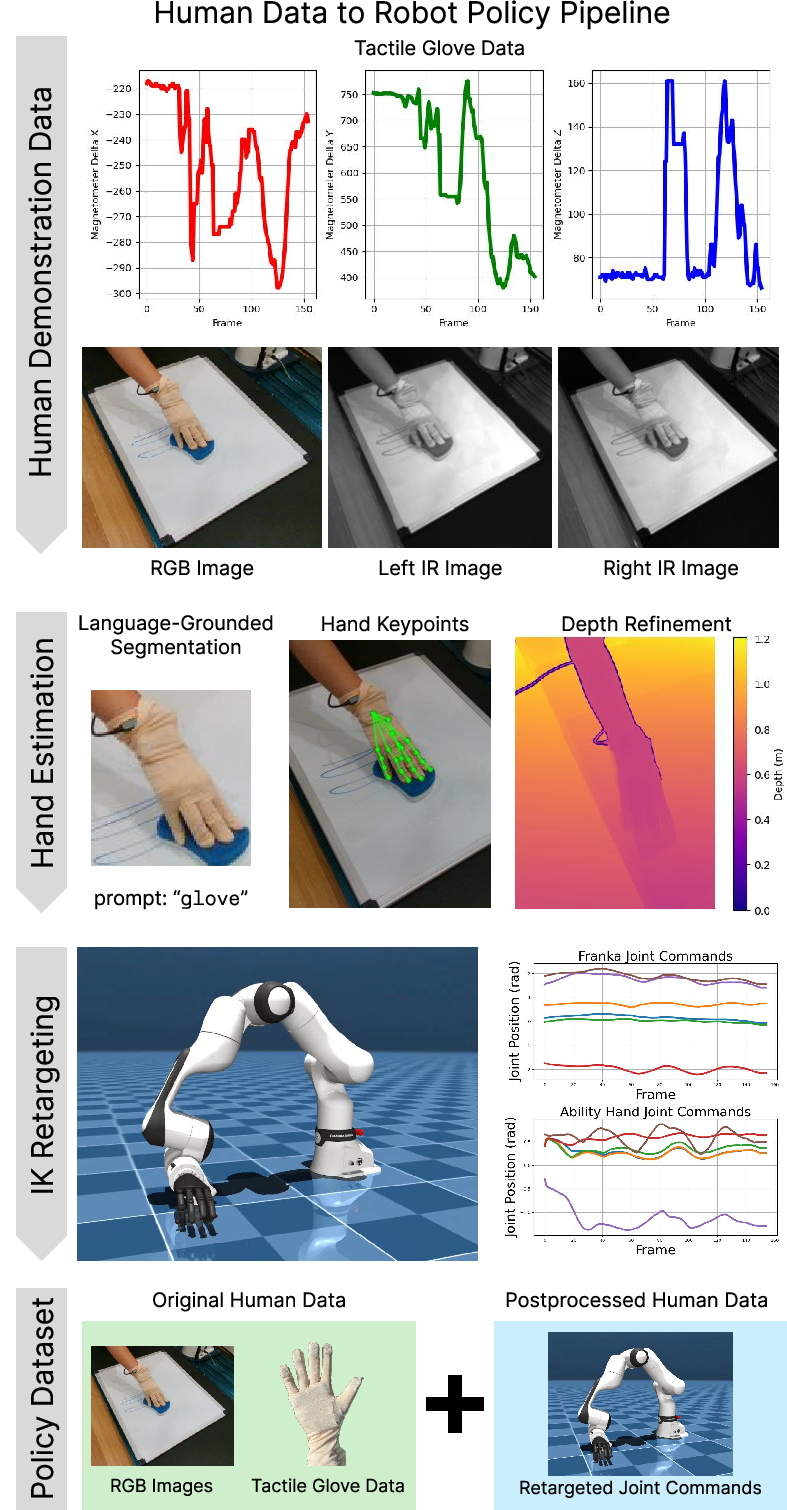

- A person wears the glove and performs the task (wiping) while a camera records the scene.

- Hand pose (where the fingers and wrist are) is estimated from video using existing hand-tracking tools. When depth is available, they refine positions so they’re more accurate.
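One simple way depth can refine a vision-only hand estimate works in two steps. First, back-project the masked depth pixels into a 3D point cloud with the pinhole camera model. Then translate the predicted keypoints so their centroid matches the cloud's. This is an illustrative stand-in that assumes metric depth, known intrinsics (`fx, fy, cx, cy`), and translation-only alignment. The paper's actual SAM2 + HaMeR + FoundationStereo pipeline may align the two differently. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def backproject(depth: Sequence[Sequence[float]], mask: Sequence[Sequence[bool]],
                fx: float, fy: float, cx: float, cy: float) -> List[Point3]:
    """Pinhole back-projection of masked pixels (depth in metres) to camera-frame 3D points."""
    cloud = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if mask[v][u] and z > 0:  # skip pixels outside the hand mask or without depth
                cloud.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return cloud


def centroid(points: Sequence[Point3]) -> Point3:
    n = len(points)
    return (sum(p[0] for p in points) / n,
            sum(p[1] for p in points) / n,
            sum(p[2] for p in points) / n)


def align_keypoints(keypoints: Sequence[Point3], cloud: Sequence[Point3]) -> List[Point3]:
    """Hypothetical refinement: shift keypoints so their centroid matches the hand point cloud."""
    kc, pc = centroid(keypoints), centroid(cloud)
    dx, dy, dz = pc[0] - kc[0], pc[1] - kc[1], pc[2] - kc[2]
    return [(x + dx, y + dy, z + dz) for x, y, z in keypoints]
```

Translation-only alignment is crude. The camera sees only the hand's front surface, so the cloud centroid sits slightly in front of the skeleton's. A fuller approach would fit the hand mesh to the cloud.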

Turning human motion into robot commands:

- The robot has a hand similar in size to a human’s. The system uses inverse kinematics (think of it as solving a puzzle that tells the robot what joint angles it needs to place its fingertips where the human’s were).

- The team pairs the human’s video and glove data with the robot’s retargeted joint positions to build a training set.
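To make the IK "puzzle" concrete, here is a damped least-squares sketch for a toy planar finger with two joints. The paper solves IK for the full arm and hand with the Mink solver in MuJoCo. This self-contained example only illustrates the same idea: repeatedly nudge the joint angles to shrink the fingertip error. It does not use Mink's API. Link lengths, damping, and step limits are hypothetical.

```python
import math
from typing import Tuple


def fingertip(q1: float, q2: float, l1: float, l2: float) -> Tuple[float, float]:
    """Forward kinematics of a planar two-link finger (angles in radians, lengths in metres)."""
    return (l1 * math.cos(q1) + l2 * math.cos(q1 + q2),
            l1 * math.sin(q1) + l2 * math.sin(q1 + q2))


def solve_ik(target: Tuple[float, float], l1: float = 0.05, l2: float = 0.04,
             q: Tuple[float, float] = (0.3, 0.3), damping: float = 1e-3,
             max_step: float = 0.002, iters: int = 500) -> Tuple[float, float]:
    """Damped least-squares IK: repeatedly apply dq = J^T (J J^T + λ²I)^-1 e."""
    q1, q2 = q
    lam2 = damping ** 2
    for _ in range(iters):
        x, y = fingertip(q1, q2, l1, l2)
        ex, ey = target[0] - x, target[1] - y
        err = math.hypot(ex, ey)
        if err < 1e-9:
            break
        if err > max_step:  # clamp the task-space step so the linearisation stays accurate
            ex, ey = ex * max_step / err, ey * max_step / err
        s1, c1 = math.sin(q1), math.cos(q1)
        s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
        # Jacobian rows: d(x)/d(q1, q2) and d(y)/d(q1, q2).
        j11, j12 = -l1 * s1 - l2 * s12, -l2 * s12
        j21, j22 = l1 * c1 + l2 * c12, l2 * c12
        # Solve the symmetric 2x2 system (J J^T + λ²I) w = e, then dq = J^T w.
        a11 = j11 * j11 + j12 * j12 + lam2
        a12 = j11 * j21 + j12 * j22
        a22 = j21 * j21 + j22 * j22 + lam2
        det = a11 * a22 - a12 * a12
        w1 = (a22 * ex - a12 * ey) / det
        w2 = (-a12 * ex + a11 * ey) / det
        q1 += j11 * w1 + j21 * w2
        q2 += j12 * w1 + j22 * w2
    return q1, q2
```

The damping term λ² keeps steps bounded near straight-finger (singular) poses. Clamping the task-space step keeps each update in the range where the linear Jacobian approximation holds.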

Teaching the robot (the policy):

- The robot learns a “policy,” which is a rule for what to do next based on what it sees and feels.

- Inputs during training: the human’s video images and tactile signals from the glove; targets are the robot joint positions computed from the human movements.

- The learning model is a “diffusion policy.” In simple terms, it learns to plan smooth action sequences by starting from random noise and repeatedly refining it, like starting with a blurry path and sharpening it step by step.

- At test time, the robot wears the same glove, looks through the same kind of camera, and uses its own tactile readings to guide its motions.
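The refine-from-noise loop can be sketched with standard DDPM ancestral sampling over 100 steps, the step count the paper reports. Two assumptions apply. First, the linear beta schedule from 1e-4 to 0.02 is the original DDPM default, not a value taken from this paper. Second, the trained denoising network (in the paper, a U-Net conditioned on image and tactile features) is replaced by an oracle that already knows the clean action. The loop therefore shows only the mechanics of denoising.

```python
import math
import random
from typing import List


def linear_betas(num_steps: int = 100, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """Linear DDPM noise schedule (original DDPM defaults, ASSUMED here)."""
    return [beta_start + (beta_end - beta_start) * i / (num_steps - 1) for i in range(num_steps)]


def sample_action(target: List[float], num_steps: int = 100, seed: int = 0) -> List[float]:
    """DDPM reverse process with an ORACLE noise predictor (stand-in for a trained network)."""
    rng = random.Random(seed)
    betas = linear_betas(num_steps)
    alphas = [1.0 - b for b in betas]
    alpha_bars, running = [], 1.0
    for a in alphas:
        running *= a
        alpha_bars.append(running)

    def predict_noise(x_t: List[float], t: int) -> List[float]:
        # Exact noise implied by x_t and the known clean action; a policy would predict this.
        ab = alpha_bars[t]
        return [(xi - math.sqrt(ab) * x0) / math.sqrt(1.0 - ab) for xi, x0 in zip(x_t, target)]

    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from pure Gaussian noise
    for t in reversed(range(num_steps)):
        eps = predict_noise(x, t)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        mean = [(xi - coef * ei) / math.sqrt(alphas[t]) for xi, ei in zip(x, eps)]
        if t > 0:
            # Posterior variance: (1 - abar_{t-1}) / (1 - abar_t) * beta_t.
            var = (1.0 - alpha_bars[t - 1]) / (1.0 - alpha_bars[t]) * betas[t]
            x = [m + math.sqrt(var) * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean  # no noise is added on the final step
    return x


print(sample_action([0.2, -0.1, 0.5]))  # hypothetical joint targets
```

With the oracle, the final step returns the target up to floating-point error. A learned policy instead predicts the noise from its observations, and that is where vision and touch enter.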

What the researchers found and why it matters

Key results on a real robot wiping task:

- The robot needed to keep steady pressure while wiping—a perfect case where “feel” is crucial.

- Policies tested:

- Only the robot’s own joint info (proprioception; no vision or touch): about 27% success.

- Vision + joints (no touch): about 56% success.

- Vision + joints + tactile glove: about 72% success.

- Why the tactile glove helped:

- With touch, the robot pressed with more consistent pressure and avoided losing grip on the sponge.

- Vision-only methods often failed because the camera can’t tell exactly how hard the robot is pushing.

Other important findings:

- Using the same glove on humans and robots reduces the “appearance gap,” so the robot doesn’t need fancy image editing to translate human videos into robot-compatible views.

- The glove’s crosstalk-reduction design made multi-sensor data more reliable, which is important when many sensors are packed into a small glove.

What this could change going forward

- Better robot skills from everyday human demos: Because the glove is open-source and designed to work with common hand trackers and cameras, more people can collect useful data. This could speed up robots learning hands-on tasks at home, in labs, or in factories.

- Force-aware learning becomes practical: Adding “feel” to video makes it easier for robots to learn tasks that depend on pressure and friction, like wiping, scrubbing, tightening lids, or handling delicate objects without crushing them.

- Easier human-to-robot transfer: Sharing the same glove for humans and robots shrinks the gap between how humans perform tasks and how robots should perform them.

Quick takeaways

- What’s new: A low-profile, open-source tactile glove that senses both push and slide forces and works on both human and robot hands.

- Why it’s needed: Videos alone can’t tell how hard you’re pressing; touch fills in that missing piece.

- What it proved: A robot trained only on human glove data can perform a real, contact-heavy task better than vision-only methods.

- Why it’s exciting: It opens the door to teaching robots practical, touch-dependent skills using simple, widely available tools.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a single, concrete list of what remains missing, uncertain, or unexplored, framed so future researchers can act on it:

- Absolute force calibration: the policy and analyses use raw magnetic flux density (µT). There is no per-taxel calibration to 3D force (N) across the glove, and no characterization of nonlinearity, hysteresis, temperature drift, or rate dependence under typical manipulation.

- Coordinate alignment: the paper does not define or validate mappings from each taxel’s XYZ axes to the human/robot hand frames, making shear vs. normal force interpretation and cross-embodiment transfer ambiguous.

- Crosstalk under realistic human use: crosstalk mitigation is evaluated on a robot hand with simple motions; it remains unknown how well shielding + differential sensing suppresses interference during complex, rapid human manipulation, full-hand contact, and multi-finger coordination.

- Z-axis noise trade-off: MuMetal reduces XY noise but increases Z-axis noise. No algorithmic compensation, adaptive filtering, or alternative designs are explored to recover Z-axis fidelity.

- Environmental magnetic interference: there is no measurement or mitigation for external magnetic disturbances (robot motors, ferromagnetic objects, tools, variable Earth field) or soft-/hard-iron effects during typical lab/household use.

- Latency and bandwidth: tactile sampling at 25 Hz and action updates at 2 Hz are low for contact-rich control; the end-to-end latency budget (sensing-to-actuation) is not quantified, nor are higher-rate control or reflex loops evaluated.

- Durability and wearability: long-term stability of soft magnets, adhesives (Silpoxy), tinsel wires, and MuMetal under repeated donning/doffing, sweat, moisture, impacts, and cleaning is not assessed; no tests of glove comfort, fatigue, or skin irritation.

- Manufacturability and accessibility: the requirement for pulse magnetization at 2 kV and waterjet-cut shielding may limit replication; low-cost fabrication alternatives, QA tolerances, and community reproducibility studies are missing.

- Sensor layout optimization: fingertips have one taxel each. There is no study of denser, wraparound, or multi-taxel designs that could localize contact points, measure slip, or capture distributed pressure across the phalanges and palm.

- Palm utility: palm sensors are omitted for the wiping task; the value of palm coverage for stabilization, power grasps, and varied tasks remains unevaluated, including taxel placement trade-offs.

- Onboard IMU fusion: each PCB includes an IMU, but the paper does not integrate IMU signals with vision/magnetometers for occlusion-robust hand pose or magneto-inertial tactile fusion; fusion architectures and calibration procedures are open.

- Hand pose accuracy: there is no quantitative error analysis of the vision-based hand pose pipeline (SAM2+HaMeR+stereo) under occlusion, motion blur, depth ambiguity, and specular surfaces, and no study of how its errors affect retargeting and policy outcomes.

- Egocentric, in-the-wild deployments: while compatibility with AR/VR devices is shown visually, the pipeline is not demonstrated end-to-end with moving egocentric cameras (extrinsic drift, head motion), nor is robustness to out-of-lab environments tested.

- Calibration robustness: the pipeline assumes known intrinsics and extrinsics; sensitivity analyses (to calibration error, time-varying extrinsics, or camera repositioning) and auto-calibration strategies are absent.

- Safety in contact tasks: collision checks were disabled for fingertips and wrist against the ground; contact-aware IK, compliance control, and safety constraints for deployment are not addressed.

- User diversity and fit: the dataset’s number of demonstrators is not specified; generalization across hand sizes, glove fit, wearing variability, and left vs. right hand use is unexplored.

- Object/environment diversity: generalization is not tested across sponges, surfaces (rough/smooth/wet), friction/stiffness variations, lighting changes, or different wiping targets beyond two marker patterns.

- Task breadth: only one unimanual wiping task is evaluated; multi-finger dexterity, in-hand manipulation, tool-use, bimanual coordination (two gloves), and task switching are open.

- Modality ablations: there is no tactile-only baseline and no systematic ablation of each modality’s contribution (vision, proprioception, tactile) or modality-gating strategies under occlusion.

- Visual encoder adaptation: DINOv2 is frozen; effects of fine-tuning, domain adaptation to robot viewpoints, and robustness to background/camera changes are untested.

- Tactile representation learning: tactile inputs are raw differential µT; pretraining (e.g., contrastive touch-to-touch), supervised force mapping, contact-state/slip classifiers, or temporal tactile transformers are not explored.

- Action frequency and control design: chunked diffusion actions at 2 Hz may be too coarse for pressure regulation; hierarchical controllers, high-frequency tactile servoing, and hybrid reflex/planning strategies are not evaluated.

- Dataset scale and efficiency: 140 demonstrations (~2 hours) are used; scaling laws, data augmentation, and data efficiency gains from tactile signals (vs. vision-only) are not quantified.

- Cross-robot transfer: deployment is shown on the Ability Hand; portability to other anthropomorphic hands (Inspire, Sharpa), required mechanical adaptations, and per-robot sensor calibration are not demonstrated.

- Taxel selection ablations: the paper uses only five fingertip taxels during training/deployment; systematic ablations on which taxels matter (including palm) and how many are needed for performance are missing.

- Success metrics: pixel erasure is a coarse proxy for contact quality; pressure consistency metrics, contact duration, slip events, and sponge retention are not quantified; statistical significance and confidence intervals over more rollouts are absent.

- Failure analysis tooling: qualitative failures (insufficient pressure, sponge loss) are noted but not automatically detected or categorized; event detection pipelines and corrective behaviors are open.

- Interference with other wearables: Manus glove compatibility is claimed but not stress-tested; rigorous electromagnetic and mechanical interference studies with common wearable systems and robot actuators are lacking.

- Power and connectivity: the glove requires USB-C tethering; wireless streaming, battery operation, and synchronization robustness (clock drift, packet loss) in mobile, in-the-wild settings are unaddressed.
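To illustrate the absolute-force-calibration gap, a per-axis linear calibration from raw µT to newtons could be fit by ordinary least squares against a reference sensor such as a load cell. This procedure is hypothetical and not from the paper. A single gain and offset per axis ignores cross-axis coupling, nonlinearity, hysteresis, and temperature drift, which are exactly the effects the missing characterization would need to measure.

```python
import math
from typing import List, Tuple


def fit_linear(signal_ut: List[float], force_n: List[float]) -> Tuple[float, float]:
    """Hypothetical calibration: least-squares fit of force ≈ gain * signal + offset for one taxel axis."""
    n = len(signal_ut)
    mean_x = sum(signal_ut) / n
    mean_y = sum(force_n) / n
    sxx = sum((x - mean_x) ** 2 for x in signal_ut)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(signal_ut, force_n))
    gain = sxy / sxx                 # N per µT
    offset = mean_y - gain * mean_x  # N
    return gain, offset


def residual_rms(signal_ut: List[float], force_n: List[float], gain: float, offset: float) -> float:
    """RMS fit error in newtons; large or structured residuals hint at nonlinearity or hysteresis."""
    errs = [y - (gain * x + offset) for x, y in zip(signal_ut, force_n)]
    return math.sqrt(sum(e * e for e in errs) / len(errs))
```

In use, the inputs are paired readings: each glove sample in µT alongside a simultaneous reference force in N. Loading and unloading sweeps should be fit separately to expose hysteresis.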

Glossary

- Action chunk: A short sequence of target actions predicted together by a policy. "outputs an action chunk~\cite{zhao2023learning} consisting of target joint positions for both the arm and hand."

- Adam: A stochastic gradient-based optimizer commonly used for training neural networks. "We optimize the policy using Adam~\cite{kingma2014adam}."

- Affordances: Action possibilities offered by an object or environment, often used to model hand-object interactions. "Other studies learn explicit representations for hand-object interactions such as affordances \cite{liu2025egozero, sunil2025reactive, bahl2022human, goyal2022human}."

- Crosstalk: Unwanted interference where one sensor’s signal affects another sensor’s measurements. "Scaling from single sensor implementations to 12 closely-spaced magnetic sensors introduces significant crosstalk."

- DDPM scheduler: The sampling schedule used in Denoising Diffusion Probabilistic Models for iterative denoising. "We adopt the DDPM scheduler~\cite{ho2020denoising} with 100 denoising steps during both training and deployment."

- Differential sensing: Technique that subtracts paired sensor readings to suppress common-mode noise. "a dual magnetometer differential sensing layout per taxel to reduce common-mode noise."

- DINOv2: A self-supervised vision transformer used for robust image feature extraction. "a DINOv2~\cite{oquab2023dinov2} encoder to extract image features."

- Diffusion policy: A policy class that generates action sequences by denoising latent trajectories conditioned on observations. "We represent this policy as a diffusion policy~\cite{chi2024diffusionpolicy}"

- Domain gap: Distribution mismatch between data sources (e.g., human vs. robot visuals) that can hinder transfer. "greatly reduces the visual domain gap"

- Dyn-HaMR: A vision model for dynamic hand mesh reconstruction from RGB videos. "off-the-shelf vision models (e.g., HaMeR~\cite{pavlakos2024reconstructing}, Dyn-HaMR~\cite{yu2025dynhamr}) for processing generic RGB videos."

- Egocentric video: First-person video captured from the demonstrator’s viewpoint, often via wearable cameras. "wearable devices for egocentric video such as Aria 2 smart glasses"

- Embodiment gap: Differences between human and robot bodies that complicate skill transfer. "minimizes the visual and tactile embodiment gap"

- Extrinsics: Camera parameters describing its pose relative to the world or another sensor. "the camera intrinsics and extrinsics for both RGB and IR sensors are known"

- FiLM: Feature-wise Linear Modulation; a conditioning method that modulates neural network activations based on context. "using FiLM~\cite{perez2018film}-based conditioning"

- FoundationStereo: A learning-based stereo depth estimator used to compute depth from paired infrared images. "compute stereo depth from the RealSense IR pair using FoundationStereo~\cite{wen2025foundationstereo}"

- HaMeR: A model for reconstructing 3D human hand meshes and keypoints from RGB images. "HaMeR is then applied to the cropped hand image to obtain 3D keypoints"

- I2C: A multi-drop serial communication bus used to link microcontrollers and sensors. "The 12 sensor PCBs communicate with an STM32 microcontroller via I2C"

- Image inpainting: Filling or synthesizing missing image regions to remove artifacts or occlusions. "avoiding the need for image inpainting"

- Intrinsics: Camera parameters describing internal geometry (e.g., focal length, principal point). "the camera intrinsics and extrinsics for both RGB and IR sensors are known"

- Inverse kinematics (IK): Computing robot joint configurations that achieve desired end-effector poses. "using an off-the-shelf IK solver~\cite{Zakka_Mink_Python_inverse_2025} in MuJoCo."

- Kinematic retargeting: Mapping human motion trajectories to robot kinematics while respecting constraints. "human-to-robot kinematic retargeting methods \cite{pan2025spider, adeniji2025feel, yin2025geometric}"

- Latent trajectories: Hidden representations of sequence evolution used by diffusion models during denoising. "denoising latent trajectories conditioned on observations."

- Magnetometer: A sensor that measures magnetic field strength and direction. "the magnetometers measure changes in magnetic flux"

- Magnetic elastomer: A soft polymer infused with magnetic particles whose deformation alters nearby magnetic fields. "soft magnetic elastomers"

- Magnetic flux: The amount of magnetic field passing through a given area, measured here by magnetometers. "the magnetometers measure changes in magnetic flux"

- MEMS-based architecture: Micro-Electro-Mechanical Systems design enabling small, adaptable sensing devices. "Their MEMS-based architecture naturally adapts to different form factors and surfaces."

- Microtesla (µT): A unit of magnetic flux density used to quantify tactile sensor signals and noise. "Noise is quantified as root-mean-squared (RMS) magnetic field variation in microtesla (µT), where lower is better."

- MuMetal: A high-permeability alloy used for magnetic shielding to attenuate external fields. "MuMetal shielding integrated into the sensor architecture"

- Normal force: Force perpendicular to a contact surface, critical for grasping and pressing tasks. "measure both shear and normal forces"

- Optical flow: Apparent motion of image intensities used to infer deformation and force in vision-based tactile sensing. "deriving shear and normal forces from optical flow."

- Optical tactile sensors: Tactile sensors that use embedded cameras to observe gel deformation and infer forces. "Optical tactile sensors are the most common; they achieve high spatial resolution by embedding cameras to observe a deformable gel, deriving shear and normal forces from optical flow."

- Point cloud: A set of 3D points representing surfaces, used here to refine hand pose estimates. "combine the depth map with the SAM2 mask to form a hand point cloud."

- Proprioception: Internal sensing of the robot’s joint states (e.g., positions, velocities). "Proprioception & 27.12 ± 32.38"

- Pulse magnetizer: A device that applies a pulsed magnetic field to magnetize materials. "axially magnetized in a pulse magnetizer for 8 seconds at ."

- RMS (root-mean-squared): A statistical measure of signal magnitude used to quantify noise. "Noise is quantified as root-mean-squared (RMS) magnetic field variation in microtesla (µT)"

- Rollout: A complete execution of a policy in the environment to evaluate performance. "Real robot policy rollouts with the Psyonic Ability Hand and Franka robot arm."

- ROS2: Robot Operating System 2; a middleware framework for robotics communication and tooling. "native Python and ROS2 interfaces supporting time-synchronized sensor packets."

- ROS2 bag files: Recorded logs of ROS2 message streams used for dataset collection and replay. "All data streams are logged as ROS2 bag files at 25 Hz."

- SAM2: Segment Anything Model 2; used to extract hand masks from images. "A hand mask is first extracted using SAM2~\cite{ravi2024sam}"

- Savitzky–Golay filter: A polynomial smoothing filter for denoising trajectories while preserving shape. "Fingertip and wrist trajectories are smoothed using a Savitzky-Golay filter~\cite{savitzky1964smoothing}."

- Shear force: Force parallel to a contact surface, important for sliding and wiping interactions. "measure both shear and normal forces"

- Stereo depth: Depth estimated from two cameras with known geometry via stereo matching. "compute stereo depth from the RealSense IR pair using FoundationStereo~\cite{wen2025foundationstereo}"

- Taxel: A single tactile sensing element within a tactile array. "We equip the glove with 12 taxels on the fingertips and palm"

- Tinsel wire: Thin, flexible conductive wire used to route signals in wearable electronics. "The sensors are wired in series with tinsel wire to the shared microcontroller"

- U-Net: An encoder–decoder neural network architecture commonly used for image-to-image tasks and denoising. "a U-Net~\cite{ronneberger2015u} denoising network."
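For the Savitzky–Golay entry, here is a minimal sketch with a 5-sample window and a quadratic fit. It uses the standard convolution coefficients (-3, 12, 17, 12, -3)/35. The window length and polynomial order the paper uses are not stated here, so both are assumptions. In practice, `scipy.signal.savgol_filter(x, window_length=5, polyorder=2)` does the same job and also handles the edges.

```python
from typing import List

# Standard 5-point, 2nd-order Savitzky-Golay smoothing coefficients (normalised by 35).
SG5_QUADRATIC = (-3, 12, 17, 12, -3)


def savgol5(samples: List[float]) -> List[float]:
    """Smooth a 1-D trajectory with a 5-point quadratic Savitzky-Golay filter.

    Each interior point becomes the value of a least-squares quadratic fitted to
    its 5-sample window. The two samples at each end are left unchanged, a
    simplification; library implementations fit the edges instead.
    """
    out = list(samples)
    for i in range(2, len(samples) - 2):
        window = samples[i - 2:i + 3]
        out[i] = sum(c * v for c, v in zip(SG5_QUADRATIC, window)) / 35.0
    return out
```

Apply it separately to each coordinate of a fingertip or wrist trajectory. Because the local fit is quadratic, smooth curves pass through unchanged while sample-to-sample jitter is attenuated.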

Practical Applications

Immediate Applications

Below are concrete applications that can be deployed now, building directly on the paper’s released hardware, firmware, and policy-training pipeline.

- Tactile-aware “teach-by-demonstration” for contact-rich tasks (manufacturing, facilities, robotics integration)

- What: Use OSMO to record in-situ human demonstrations (e.g., wiping, polishing, sanding, deburring, insertion) and train a diffusion policy that transfers directly to a robot hand wearing the same glove. The paper’s wiping task (72% success) is an immediately replicable template.

- Sectors: Robotics, manufacturing, automotive, electronics assembly, facilities cleaning.

- Tools/Products/Workflows: OSMO glove kit + ROS2 bagging; RealSense camera; HaMeR + SAM2 + FoundationStereo hand pose pipeline; Mink inverse kinematics in MuJoCo; DINOv2 + diffusion policy training; deployment on Franka + Psyonic Ability Hand (or similar).

- Assumptions/Dependencies: Reachable shared workspace; camera intrinsics/extrinsics known; anthropomorphic hand form factor; consistent glove fit on human and robot; magnetometer crosstalk managed (MuMetal + dual-magnetometer design); compliance with site safety.

- Rapid, low-code robot policy authoring for service cleaning and finishing (commercial cleaning, hospitality, retail)

- What: Teach robots to apply consistent pressure in long-contact actions (wipe tables/whiteboards, clean glass, buff fixtures) via human demos without robot-side data collection.

- Sectors: Service robotics, cleaning services, hospitality, retail.

- Tools/Products/Workflows: “Record demo → auto-retarget → train diffusion policy → deploy” pipeline; prebuilt ROS2 nodes and policy scripts; off-the-shelf cameras and AR/VR headsets for tracking.

- Assumptions/Dependencies: Surface/material variability within training distribution; simple grasping fixture (e.g., sponge) reproducibly mounted on fingertips; stable camera mounting.

- Force-aware skill capture for R&D and QA (research labs, product testing, HRI evaluation)

- What: Collect synchronized RGB and tri-axial tactile signals (shear + normal) from natural human manipulation to quantify and replay “force signatures” for tasks (e.g., button feel, dial rotation, latch closure).

- Sectors: Academia, consumer product QA, industrial design, HRI research.

- Tools/Products/Workflows: OSMO data schema (RGB + tactile + pose); analysis notebooks for force profile visualization; benchmark suites for contact failure modes.

- Assumptions/Dependencies: Known calibration; repeatable test rigs; material fixtures mimicking real products.

- Open-source tactile dataset generation for contact-rich learning (robotics ML, perception, representation learning)

- What: Create public, standardized visuo-tactile datasets for manipulation tasks to train/benchmark policies, perception, and cross-modal representations.

- Sectors: Academia, robotics startups, ML research.

- Tools/Products/Workflows: Project’s released hardware/firmware; ROS2 logging; dataset format with joint targets from IK; baselines with DINOv2 + diffusion policy; crosstalk-mitigation reference design.

- Assumptions/Dependencies: Data licensing and privacy clearance; reproducible sensor fabrication; consistent task protocols.

- Teleoperation + learning-from-demonstration hybrid for fragile/deformable objects (pilot deployments)

- What: Combine operator demonstrations with tactile streams to stabilize contact actions (e.g., wiping, squeezing, tool guidance) and refine an autonomous policy from those demos.

- Sectors: Warehousing, fulfillment, light manufacturing, lab automation.

- Tools/Products/Workflows: Teleop interface + OSMO glove; online/offline policy improvement; safety interlocks with Franka or similar.

- Assumptions/Dependencies: Reliable low-latency comms for teleop; anthropomorphic end-effector; basic operator training.

- Classroom and maker education modules in tactile sensing, fabrication, and robot learning (STEM, engineering curricula)

- What: Use OSMO’s open-source designs to teach magnetic tactile sensing, crosstalk mitigation (MuMetal + differential sensing), sensor integration, ROS2, and policy learning.

- Sectors: Education (undergraduate/graduate robotics, mechatronics), maker communities.

- Tools/Products/Workflows: Course kits; lab exercises (build a sensor; calibrate; collect demos; train a policy); evaluation on simple wiping/polishing setups.

- Assumptions/Dependencies: Access to 3D printing, waterjet for MuMetal, STM32 toolchain; component supply (BMM350, BHI360).

- Hand-tracking robustness studies and sensor fusion benchmarks (vision + inertial + tactile)

- What: Immediately reproduce the paper’s hand-tracking pipeline and evaluate failure modes under occlusion; prototype simple fusion with the glove’s onboard IMUs (even before full algorithmic integration).

- Sectors: AR/VR, tracking, robotics perception.

- Tools/Products/Workflows: HaMeR + SAM2 + stereo depth alignment; quantitative “pose drift under occlusion” tests with tactile/IMU correlates.

- Assumptions/Dependencies: Stable stereo rig; synchronized clocks; consistent glove visual appearance to minimize domain shift.

- Retargeting templates for anthropomorphic hands (integration services)

- What: Adapt the provided IK retargeting to Inspire/Sharpa/Psyonic/other anthropomorphic hands and common arms (UR, Franka), enabling plug-and-play workflows for new customers.

- Sectors: System integration, robotics platforms.

- Tools/Products/Workflows: MuJoCo + Mink inverse kinematics templates; URDF import scripts; minimal collision checks for fingertips in contact-rich tasks.

- Assumptions/Dependencies: Reasonable kinematic match to human hand; tuned safety constraints per platform.

- Data-driven coaching tools for grip and contact technique (skill training, sports/music R&D)

- What: Use the glove to visualize grip force, pressure distribution, and shear during task practice (e.g., wiping motions, tool handling) for training or ergonomics analysis.

- Sectors: Occupational training, ergonomics, sports/musical instrument R&D.

- Tools/Products/Workflows: Real-time dashboards; force heatmaps; session replay; basic thresholds/alerts for unsafe pressure.

- Assumptions/Dependencies: No medical claims; careful interpretation of magnetic-field-to-force correlation (paper uses raw μT).
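For the coaching dashboards above, a debounced threshold alert on a taxel's normal-axis signal might look like the sketch below. The threshold is hypothetical and expressed in raw µT, because the paper does not calibrate signals to force. Requiring several consecutive samples (about 0.2 s at the 25 Hz logging rate) avoids alerts on single noisy readings.

```python
from dataclasses import dataclass, field


@dataclass
class PressureAlert:
    """Hypothetical debounced over-pressure alert for one taxel's normal-axis signal."""
    threshold_ut: float   # hypothetical limit, in raw µT (not a calibrated force)
    min_samples: int = 5  # 5 consecutive samples ≈ 0.2 s at 25 Hz
    _run: int = field(default=0, init=False, repr=False)  # current above-threshold run length

    def update(self, normal_ut: float) -> bool:
        """Feed one sample; True once the signal has exceeded the threshold for min_samples in a row."""
        self._run = self._run + 1 if normal_ut > self.threshold_ut else 0
        return self._run >= self.min_samples
```

A dashboard would create one instance per taxel and call `update` on every incoming tactile packet. It would raise a warning while the method returns `True`.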

Long-Term Applications

These opportunities require further research, scaling, validation, or productization beyond the current paper.

- General-purpose dexterous policies trained at scale from in-the-wild human visuo-tactile demos

- What: Collect large, diverse in-the-wild datasets (AR/VR headsets + dual OSMO gloves) to train foundation policies that generalize across tasks (tool use, insertions, folding, cooking prep).

- Sectors: Household/service robotics, manufacturing, logistics.

- Tools/Products/Workflows: Cross-embodiment skill models; policy distillation; multi-task diffusion/transformer policies; continuous tactile conditioning at deployment.

- Assumptions/Dependencies: Robust cross-scene calibration; domain randomization/augmentation; policy safety and verification; scalable data infrastructure.

- Bimanual, multi-finger coordination with two tactile gloves on human and robot

- What: Extend Glove2Robot to two hands for assembly, cloth manipulation, container opening, cable routing.

- Sectors: Manufacturing, e-commerce fulfillment, service robotics.

- Tools/Products/Workflows: Dual-glove synchronization; bimanual IK/retargeting; contact planners using shear/normal cues; bi-arm policy architectures.

- Assumptions/Dependencies: Precise coordination and collision safety; robust tracking under severe occlusions; stable tactile data rates.

- Prosthetics and assistive manipulation enhanced by tactile learning from human demonstrations

- What: Train prosthetic control policies that leverage tactile cues for secure grasp, slip detection, and consistent pressure during ADLs (activities of daily living).

- Sectors: Healthcare, rehabilitation, prosthetics.

- Tools/Products/Workflows: User-specific policy adaptation; safety-critical controllers; clinical evaluation; integration with EMG/myoelectric signals and glove IMUs.

- Assumptions/Dependencies: Regulatory approvals; clinical trials; user comfort and hygiene; durable medical-grade materials; on-device inference constraints.

- Standardized visuo-tactile datasets, benchmarks, and representation learning across sensors

- What: Establish community benchmarks for contact-rich manipulation and pretraining (e.g., tactile transformers) that transfer across magnetic, optical, and resistive skins.

- Sectors: Academia, standards bodies, robotics consortia.

- Tools/Products/Workflows: Dataset specs (synchronization, metadata, calibration); benchmark tasks and metrics (contact stability, slip, force tracking); sensor-agnostic encoders.

- Assumptions/Dependencies: Cross-lab reproducibility; IP/data-sharing agreements; sensor calibration standards.

- Consumer “teach your robot” products for home tasks

- What: End-user kits where homeowners demonstrate wiping, scrubbing, or tool use; the system trains a policy and deploys on a home robot hand.

- Sectors: Consumer robotics, smart home.

- Tools/Products/Workflows: Simplified UI; auto-calibration; cloud/on-device training; safety envelopes; fail-safe monitoring.

- Assumptions/Dependencies: Affordable anthropomorphic end-effectors; robust home SLAM + calibration; liability and safety certifications.

- IMU-tactile-vision fusion for occlusion-robust hand tracking and control

- What: Fuse the glove’s onboard IMUs with vision to maintain accurate fingertip/palm pose through occlusions; reduce reliance on perfect line-of-sight.

- Sectors: AR/VR tracking, robotics perception, teleoperation.

- Tools/Products/Workflows: Probabilistic sensor fusion; self-supervised alignment of IMU/tactile/vision; robustified retargeting pipelines.

- Assumptions/Dependencies: Accurate time sync; IMU bias handling; learned fusion models validated on diverse tasks.

- Higher-resolution fingertip and palm sensing for precise contact localization and slip prediction

- What: Denser, wraparound taxel layouts for fingertip/palm to enable fine contact localization, slip onset detection, and tactile servoing.

- Sectors: Precision assembly, electronics handling, surgical robotics R&D.

- Tools/Products/Workflows: New PCB/gel designs; improved crosstalk suppression; high-bandwidth drivers; tactile servo control loops.

- Assumptions/Dependencies: Manufacturability at scale; robustness to wear/tear; EMI/EMC compliance.

- Safety, data governance, and open hardware standards for tactile human-robot demonstration

- What: Policy and standards around safe use of wearables in industrial settings, tactile data governance (biometric and contact traces), and conformance tests for tactile skins.

- Sectors: Policy/regulatory, occupational safety, standards organizations.

- Tools/Products/Workflows: Best-practice guides (magnetic materials, shielding, EHS); dataset anonymization standards; certification procedures for tactile arrays.

- Assumptions/Dependencies: Multi-stakeholder consensus; harmonization with existing robot safety norms (ISO/ANSI); legal frameworks for data ownership and privacy.

- Simulation and digital twins with realistic tactile physics

- What: Tactile-aware simulators calibrated from OSMO data to accelerate policy training and reduce real-world data needs.

- Sectors: Software tools, industrial digital twins, robotics R&D.

- Tools/Products/Workflows: Parameter identification from magnetometer (μT) streams; synthetic tactile rendering; sim-to-real pipelines for force-aware skills.

- Assumptions/Dependencies: Accurate soft-contact models; transferability of tactile features; validation on real tasks.

- ROI-driven deployment playbooks for factories and warehouses

- What: Business processes to quantify savings from reduced robot programming time and fewer contact-related failures (scrap, rework).

- Sectors: Manufacturing ops, operations research, finance (CapEx justification).

- Tools/Products/Workflows: Pilot-to-scale templates; KPIs (programming hours saved, success rate, throughput, rework rate); cost models for glove + anthropomorphic hand adoption.

- Assumptions/Dependencies: Stable task mix with repetitive contact actions; staff trained in basic demonstration capture; integration with existing MES/QMS.

- Telepresence with autonomous contact micro-skills

- What: Telepresence robots that offload routine contact subtasks (e.g., wiping, pressing, aligning) to onboard tactile-aware policies while the operator handles high-level navigation and decisions.

- Sectors: Field service, labs, healthcare support services.

- Tools/Products/Workflows: Shared autonomy; intent recognition; safety monitors; tactile fallback behaviors.

- Assumptions/Dependencies: Reliable connectivity; human-in-the-loop oversight; robust failure detection and recovery.
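The probabilistic sensor fusion listed under IMU-tactile-vision fusion can be illustrated with a minimal sketch: a scalar Kalman filter that tracks one joint angle by integrating an IMU angular rate and corrects that estimate whenever the vision hand tracker returns a measurement. This is a generic textbook technique, not the method used with OSMO; the class `AngleKF`, the helper `fuse`, and the noise values `q` and `r` are hypothetical, and tracking full fingertip and palm poses would require a 3D orientation filter.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class AngleKF:
    """Scalar Kalman filter for one joint angle in radians (hypothetical sketch)."""
    angle: float = 0.0  # current angle estimate
    var: float = 1.0    # variance of the estimate
    q: float = 1e-4     # process noise added per IMU step (models gyro drift)
    r: float = 1e-2     # variance of a vision-based angle measurement

    def predict(self, gyro_rate: float, dt: float) -> None:
        """Propagate the estimate with the IMU angular rate; uncertainty grows."""
        self.angle += gyro_rate * dt
        self.var += self.q

    def update(self, vision_angle: Optional[float]) -> None:
        """Correct with a vision measurement; None means the hand is occluded."""
        if vision_angle is None:
            return
        gain = self.var / (self.var + self.r)
        self.angle += gain * (vision_angle - self.angle)
        self.var *= 1.0 - gain


def fuse(samples: Sequence[Tuple[float, Optional[float]]], dt: float,
         kf: AngleKF) -> List[float]:
    """Run predict/update for each (gyro_rate, vision_angle) sample; return estimates."""
    estimates = []
    for gyro_rate, vision_angle in samples:
        kf.predict(gyro_rate, dt)
        kf.update(vision_angle)
        estimates.append(kf.angle)
    return estimates


# Usage: vision drops out for two frames; the IMU keeps the estimate moving.
samples = [(1.0, 0.01), (1.0, None), (1.0, None), (1.0, 0.04)]
print(fuse(samples, dt=0.01, kf=AngleKF()))
```

During occlusion the filter coasts on the IMU while its variance grows, so the next vision measurement is weighted more heavily; this is the behavior that reduces reliance on perfect line-of-sight.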

General feasibility dependencies across applications:

- Hardware supply: Availability of BMM350 magnetometers and BHI360 IMUs, MuMetal shielding sheets, STM32 MCUs, and silicone with magnetic micro-particles.

- Calibration: Camera calibration (intrinsics/extrinsics), time synchronization across sensors, and consistent glove fit on both human and robot.

- Embodiment: Best results with anthropomorphic hands; non-anthropomorphic grippers may require redesigned retargeting/policy formats.

- Environment: Magnetic interference and variation in the Earth's magnetic field are managed by shielding and differential sensing, but may need site-specific tuning.

- Safety and compliance: Industrial EHS policies, EMC/EMI considerations, and privacy/data-governance for human demonstration capture.
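The differential sensing mentioned under Environment can be sketched as follows. This assumes a reference magnetometer that sees the same ambient field as the taxel magnetometers but not the magnetized gel, and that the reference and taxels share one orientation so the Earth's field appears identically in each. `DifferentialTaxels` is a hypothetical helper, not part of the OSMO firmware; readings are 3-axis vectors in μT.

```python
from typing import List, Sequence

Vec3 = Sequence[float]  # one 3-axis magnetometer reading in microtesla (uT)


def _sub(a: Vec3, b: Vec3) -> List[float]:
    """Component-wise a - b for 3-axis vectors."""
    return [x - y for x, y in zip(a, b)]


class DifferentialTaxels:
    """Hypothetical helper: removes the ambient field using a reference magnetometer."""

    def __init__(self, n_taxels: int) -> None:
        self.n_taxels = n_taxels
        # Per-taxel offset of (taxel - reference) with no contact; zero until calibrated.
        self.baseline: List[List[float]] = [[0.0, 0.0, 0.0] for _ in range(n_taxels)]

    def calibrate(self, taxel_frames: Sequence[Sequence[Vec3]],
                  ref_frames: Sequence[Vec3]) -> None:
        """Average (taxel - reference) over frames recorded with the glove out of contact."""
        if not ref_frames or len(taxel_frames) != len(ref_frames):
            raise ValueError("need matching, non-empty taxel and reference frames")
        sums = [[0.0, 0.0, 0.0] for _ in range(self.n_taxels)]
        for frame, ref in zip(taxel_frames, ref_frames):
            for i, taxel in enumerate(frame):
                for k, value in enumerate(_sub(taxel, ref)):
                    sums[i][k] += value
        count = len(ref_frames)
        self.baseline = [[s / count for s in row] for row in sums]

    def read(self, frame: Sequence[Vec3], ref: Vec3) -> List[List[float]]:
        """Contact signal per taxel: (taxel - reference) minus the no-contact baseline."""
        return [_sub(_sub(taxel, ref), base) for taxel, base in zip(frame, self.baseline)]


# Usage: one no-contact frame with a 50 uT ambient field along x.
taxels = DifferentialTaxels(n_taxels=2)
taxels.calibrate([[[50.0, 0.0, 10.0], [50.0, 0.0, 20.0]]], [[50.0, 0.0, 0.0]])
# Ambient field drifts to 45 uT; taxel 0 also sees +3 uT along x from contact.
print(taxels.read([[48.0, 0.0, 10.0], [45.0, 0.0, 20.0]], [45.0, 0.0, 0.0]))
# → [[3.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
```

Subtracting the reference cancels any field component common to both sensors, such as a drift in the ambient field; it cannot cancel local interference that reaches the taxels but not the reference, which is where shielding and site-specific tuning come in.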
