- The paper introduces an energy-based framework that uses logits from the penultimate layer to better detect hallucinations in LLMs.

- It leverages a Boltzmann-distribution-inspired approach to assess and quantify model uncertainty, surpassing traditional entropy measures.

- Experimental results show improved AUROC and AUPR metrics, validating the method’s robustness in both high and low semantic diversity scenarios.

Semantic Energy: Detecting LLM Hallucination Beyond Entropy

The paper "Semantic Energy: Detecting LLM Hallucination Beyond Entropy" introduces a novel uncertainty estimation framework designed to address the limitations of traditional entropy-based methods in detecting hallucinations in LLMs. This research explores the inherent confidence of LLMs by operating on logits from the penultimate layer rather than relying on post-softmax probabilities. This essay will discuss the motivations, methods, experiments, and implications of the Semantic Energy framework proposed by the authors.

Introduction and Motivations

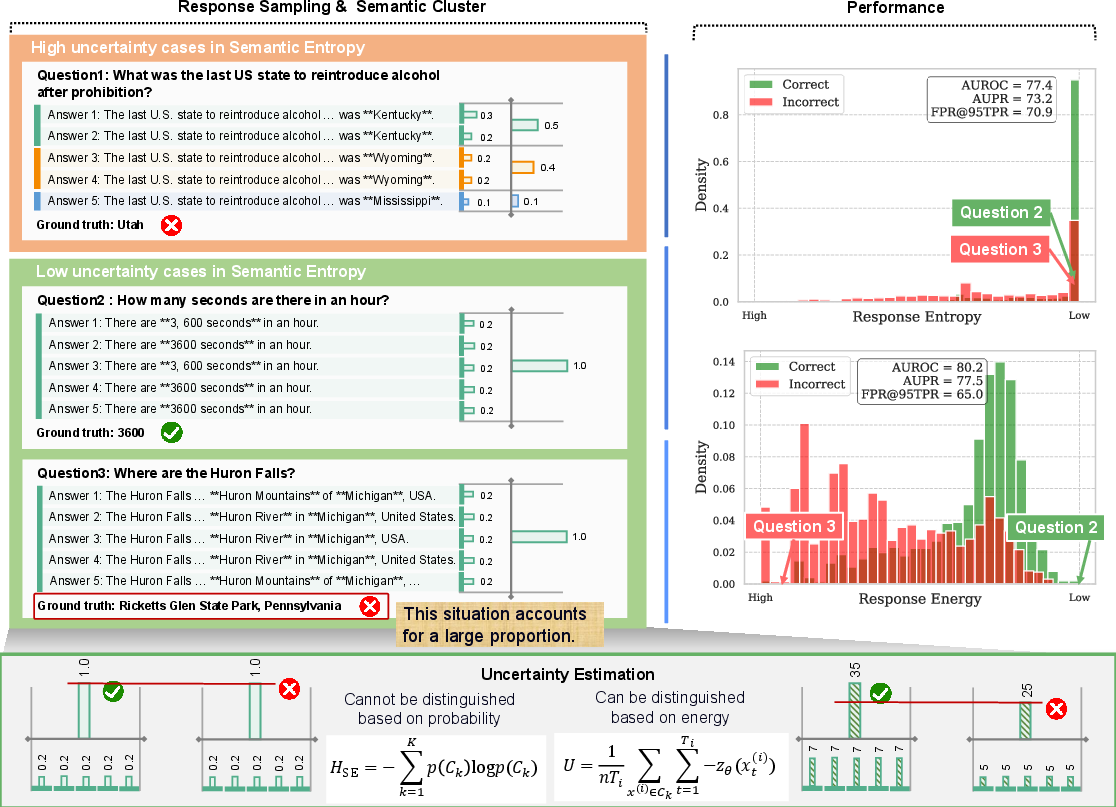

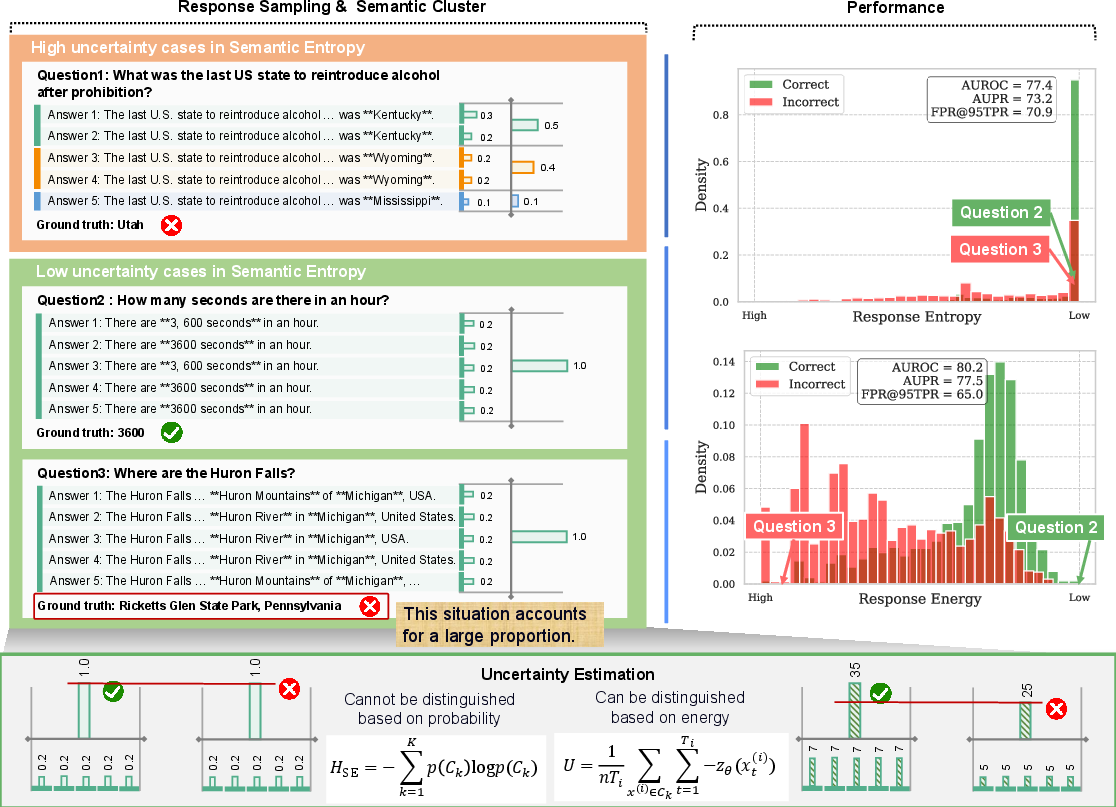

LLMs are now core components of many applications, yet they remain prone to hallucinations: fluent but incorrect outputs that can mislead users. Traditional uncertainty estimation approaches, such as semantic entropy, estimate uncertainty by measuring the semantic diversity of multiple sampled responses. These approaches break down in a specific scenario. When the sampled responses are semantically homogeneous but incorrect, semantic entropy reports low or even zero uncertainty, because it measures disagreement between samples and cannot capture the model's inherent uncertainty.

Semantic Entropy's effectiveness is limited because it relies on normalized (post-softmax) probabilities, which discard the magnitude information carried by the raw logits: adding the same constant to every logit leaves the softmax output unchanged. Semantic Energy is introduced to overcome this limitation by computing an energy score directly from logits, which the authors argue better reflects the model's epistemic uncertainty.
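The information loss from normalization can be seen with a toy example. The sketch below shows two logit vectors that produce identical softmax probabilities even though one is far more strongly activated. The logit values are made up for illustration, and treating a token's energy as its negative logit is an assumption of this sketch, not a definition taken from the paper.

```python
import math

def softmax(logits):
    # Subtract the max for numerical stability; this does not change the result.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for three candidate tokens: weakly vs strongly activated.
weak = [2.0, 1.0, 0.0]
strong = [12.0, 11.0, 10.0]

p_weak = softmax(weak)
p_strong = softmax(strong)

# The probabilities are identical, so any probability-based score
# (including entropy) cannot tell these two cases apart...
print(all(math.isclose(a, b) for a, b in zip(p_weak, p_strong)))  # True
# ...but the top token's logit, and hence its assumed energy -z, differs by 10.
print(-weak[0], -strong[0])  # -2.0 -12.0
```

Any score built only from `p_weak` or `p_strong` treats both cases the same, while a logit-based score can still separate them.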

Figure 1: An intuitive comparison between Semantic Entropy and Semantic Energy in their ability to characterize uncertainty.

Methodology: Semantic Energy

Semantic Energy uses an energy-based framework inspired by the Boltzmann distribution from thermodynamics, in which lower-energy states are more probable; by analogy, lower energy corresponds to higher-confidence predictions. Unlike probability-based approaches, Semantic Energy computes uncertainty directly from logits.
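For reference, the Boltzmann distribution assigns a state $i$ with energy $E_i$ the probability below, where $k$ is Boltzmann's constant and $T$ the temperature. Setting $E_i = -z_i$ for a logit $z_i$ and $kT = 1$ recovers the familiar softmax, which is why lower energy corresponds to higher probability. This correspondence is standard; the exact energy definition and any temperature scaling used by the authors should be checked against the paper itself.

```latex
p_i = \frac{\exp(-E_i / kT)}{\sum_j \exp(-E_j / kT)}
\qquad \text{with } E_i = -z_i,\ kT = 1: \qquad
p_i = \frac{\exp(z_i)}{\sum_j \exp(z_j)} = \mathrm{softmax}(z)_i
```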

Boltzmann Distribution

In this setting, each token's energy within a response is derived from its logit value. Token energies are then aggregated over the response to score the sequence as a whole. Semantic Energy treats lower-energy sequences as more reliable, since in the Boltzmann picture they correspond to more stable, less random states.
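A minimal sketch of the token-to-sequence step follows. It rests on two assumptions that are ours rather than confirmed by this summary: a token's energy is the negative of the logit of the token actually sampled, and a response's energy is the mean of its token energies. The function names `token_energy` and `sequence_energy` and the example logits are hypothetical.

```python
from typing import Sequence

def token_energy(logit: float) -> float:
    # Assumption: energy is the negative logit, so a larger logit means lower energy.
    return -logit

def sequence_energy(sampled_logits: Sequence[float]) -> float:
    # Assumption: average the token energies so that responses of
    # different lengths are comparable.
    if not sampled_logits:
        raise ValueError("a response needs at least one token")
    return sum(token_energy(z) for z in sampled_logits) / len(sampled_logits)

# Hypothetical logits of the sampled tokens in two responses.
confident = [24.0, 22.5, 25.5]
hesitant = [9.0, 11.0, 10.0]

print(sequence_energy(confident))  # -24.0
print(sequence_energy(hesitant))   # -10.0
```

Under these assumptions the strongly activated response gets the lower (more reliable) energy, even if both responses happen to have similar softmax probabilities.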

Implementation in LLMs

For a given prompt, multiple responses are sampled and grouped into semantic clusters by meaning. Each cluster's energy is then computed from logits rather than softmax probabilities, so it retains the magnitude information that normalization discards. This lets Semantic Energy separate reliable from unreliable responses even in cases where entropy-based methods fail.
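The clustering-plus-aggregation step can be sketched as follows. The semantic clustering itself (done in semantic-entropy work with a bidirectional entailment check) is assumed to have already produced one cluster label per response. Summing member energies per cluster is one plausible aggregation chosen for illustration, not necessarily the paper's exact formula, and all names and numbers are hypothetical.

```python
from collections import defaultdict

def cluster_energies(labels, energies):
    """Sum the sequence energies of the responses in each semantic cluster.

    labels[i] is the (hypothetical) cluster id of response i and energies[i]
    its sequence energy. Assuming energy = negative logit, confident responses
    have large negative energies, so clusters that are larger or whose members
    are more confident end up with lower total energy.
    """
    if len(labels) != len(energies):
        raise ValueError("labels and energies must have the same length")
    totals = defaultdict(float)
    for label, energy in zip(labels, energies):
        totals[label] += energy
    return dict(totals)

def response_uncertainty(labels, energies):
    # Each response inherits its cluster's energy: higher means less reliable.
    totals = cluster_energies(labels, energies)
    return [totals[label] for label in labels]

# Five hypothetical sampled answers: three agree on one meaning, two on another.
labels = ["paris", "paris", "paris", "lyon", "lyon"]
energies = [-24.0, -23.0, -25.0, -10.0, -12.0]

print(cluster_energies(labels, energies))      # {'paris': -72.0, 'lyon': -22.0}
print(response_uncertainty(labels, energies))  # [-72.0, -72.0, -72.0, -22.0, -22.0]
```

A design note on this choice: summing, rather than averaging, lets cluster size play the role that cluster probability mass plays in semantic entropy, while the logit magnitudes still contribute.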

Experimental Validation

Setup and Results

Experiments on a Chinese dataset (CSQA) and an English dataset (TriviaQA), using models such as Qwen3-8B and ERNIE-21B-A3B, demonstrate the effectiveness of Semantic Energy. The reported results show improvements in AUROC and AUPR over Semantic Entropy, supporting the claim that Semantic Energy is a more robust uncertainty estimator. The gains are largest in low-diversity scenarios, where entropy-based approaches give misleadingly confident assessments.
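AUROC, as used here, measures how well an uncertainty score ranks hallucinated answers above correct ones: it equals the probability that a randomly chosen hallucinated answer receives a higher uncertainty score than a randomly chosen correct one, with ties counted as one half. The pairwise sketch below computes this directly; the labels and scores are toy values, not results from the paper. In practice, scikit-learn's `roc_auc_score` computes the same quantity more efficiently, and `average_precision_score` gives an AUPR-style summary.

```python
def auroc(is_hallucination, scores):
    """Pairwise AUROC: P(score of a hallucination > score of a correct answer).

    is_hallucination[i] is True if answer i is wrong; scores[i] is its
    uncertainty (higher means less trustworthy). Ties count as 0.5.
    """
    pos = [s for s, y in zip(scores, is_hallucination) if y]
    neg = [s for s, y in zip(scores, is_hallucination) if not y]
    if not pos or not neg:
        raise ValueError("need at least one hallucinated and one correct answer")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

# Toy example: four answers, two of them hallucinated.
labels = [True, True, False, False]
scores = [-10.0, -30.0, -40.0, -30.0]
print(auroc(labels, scores))  # 0.875
```

A score that gives every answer the same value yields exactly 0.5, which is why a constant uncertainty estimate cannot rank answers at all.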

Ablation Studies

For questions where all sampled responses fall into a single semantic cluster, Semantic Entropy assigns zero uncertainty regardless of whether the shared answer is correct, so it cannot rank these cases at all. On this subset, Semantic Energy performed markedly better, improving AUROC by more than 13% where Semantic Entropy was misleadingly confident.
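The single-cluster failure case can be reproduced in a few lines. This sketch uses the discrete form of semantic entropy (entropy of the fraction of samples in each cluster) and an energy score assumed, for illustration only, to be the sum over responses of each response's mean negative logit. Both questions, their logits, and the helper names are hypothetical.

```python
import math
from collections import Counter

def discrete_semantic_entropy(labels):
    # Entropy of the empirical distribution over semantic clusters,
    # written as sum(p * log(1/p)).
    n = len(labels)
    return sum((c / n) * math.log(n / c) for c in Counter(labels).values())

def total_energy(per_response_logits):
    # Assumption: response energy = mean negative logit of its sampled tokens;
    # with a single cluster, the cluster energy is the sum over all responses.
    return sum(-sum(zs) / len(zs) for zs in per_response_logits)

# Two hypothetical questions; in each, all five samples give the same answer.
labels = ["a"] * 5
high_logits = [[25.0, 24.0]] * 5  # strongly activated
low_logits = [[8.0, 7.0]] * 5     # same agreement, weakly activated

print(discrete_semantic_entropy(labels))  # 0.0 (identical for both questions)
print(total_energy(high_logits), total_energy(low_logits))  # -122.5 -37.5
```

Semantic entropy is zero for both questions and cannot separate them, whereas the energy score is much higher (less confident) for the weakly activated one.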

These results suggest that Semantic Energy is more robust than probability-based scores and could serve as a general-purpose uncertainty estimator across datasets, including when the model's think mode is activated to separate its reasoning from the final answer.

Implications and Future Directions

Semantic Energy offers a compelling alternative to probability-based uncertainty estimation and highlights the value of logits for capturing a model's epistemic uncertainty. Future research could strengthen the framework by addressing limitations introduced by cross-entropy training, which does not directly constrain logit magnitudes because the loss is unchanged when the same constant is added to every logit, and by further exploring what logits can reveal about uncertainty. More broadly, energy-based assessment could inform other AI applications, improving model interpretability and robustness in dynamic real-world environments.

Conclusion

Semantic Energy is a valuable advance in detecting hallucinations in LLMs. By replacing probability-based entropy with an energy score derived from logits, the approach gives a more nuanced view of uncertainty and addresses a key limitation of existing methods. Although there is room for improvement, Semantic Energy meaningfully advances the characterization of LLM reliability, which could support more effective deployment in critical AI applications.