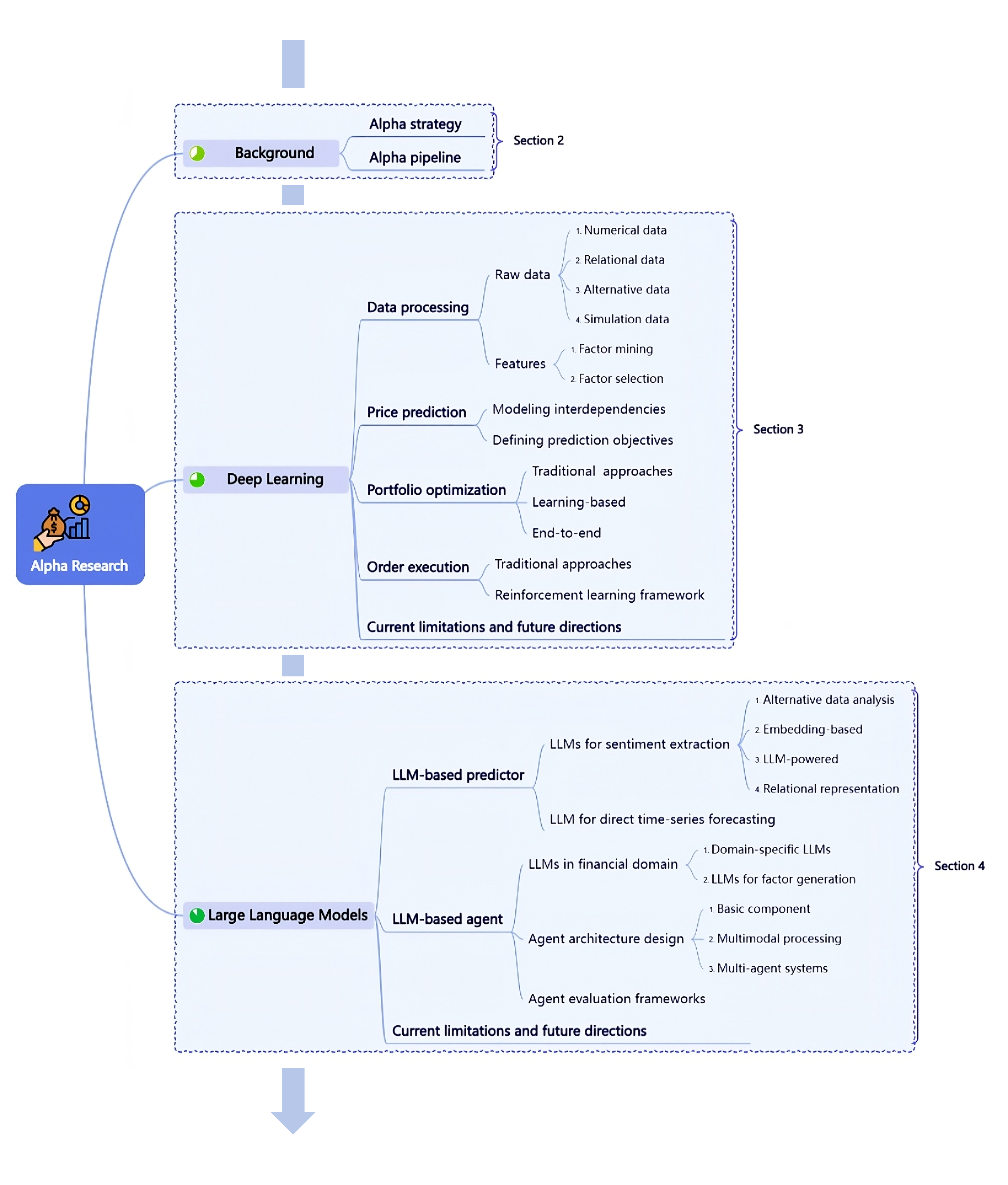

- The paper presents a comprehensive survey of AI progress in quantitative investment, documenting the shift from deep learning to LLM-powered autonomous pipelines.

- It details methodological innovations in data processing, predictive modeling, portfolio optimization, and order execution using deep learning and reinforcement learning.

- It highlights the integration of LLMs for sentiment extraction, causal reasoning, and multi-modal analysis, addressing both performance gains and practical challenges.

Survey of AI Progression in Quantitative Investment: From Deep Learning to LLM-Driven Autonomy

This essay reviews the conceptual and practical advances that follow from integrating deep learning (DL) and large language models (LLMs) into quantitative investment. Taking alpha strategy as the canonical use case, it covers methodological innovations across the investment pipeline (data handling, predictive modeling, portfolio construction, and execution) and discusses the shift from human-in-the-loop workflows to AI-autonomous pipelines. The following sections examine the current methodological spectrum, empirical results, and prospective research directions.

Alpha Strategy and the Quantitative Investment Pipeline

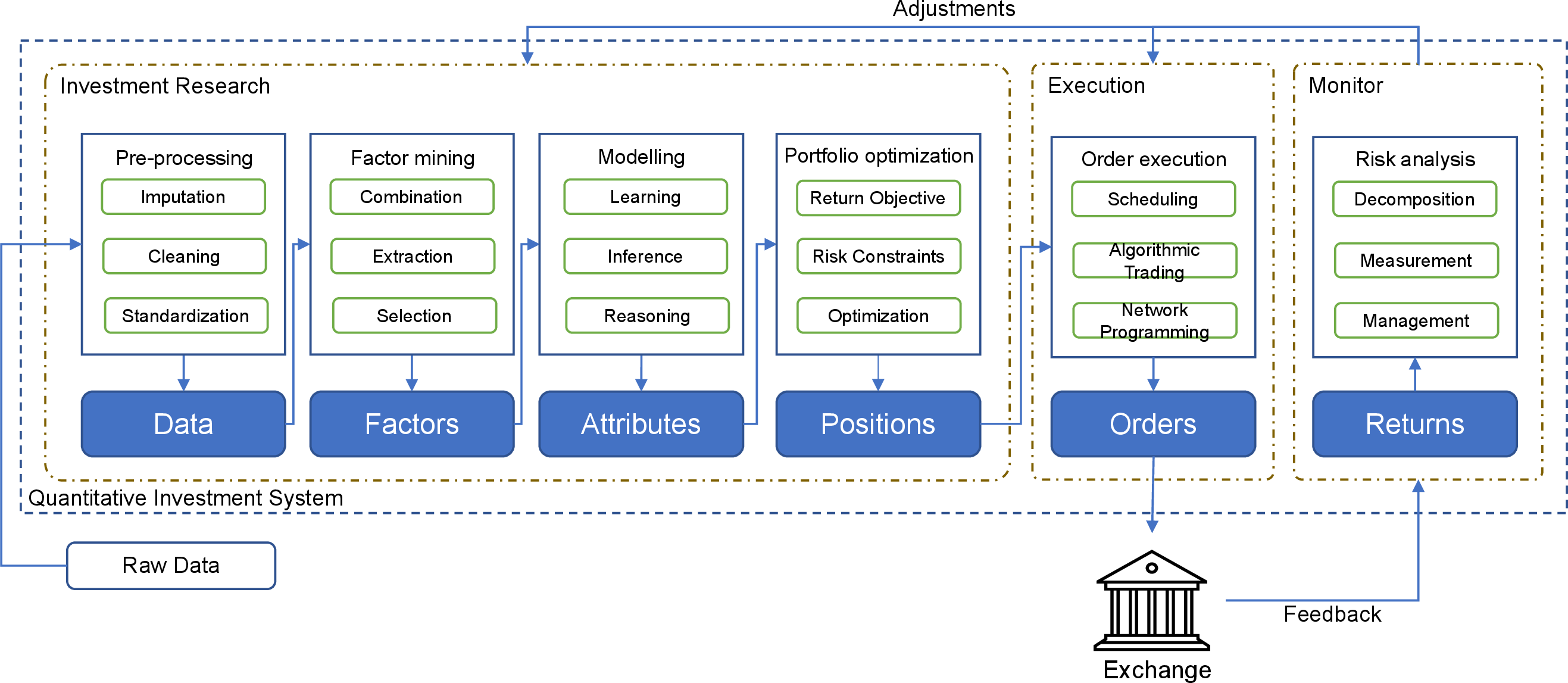

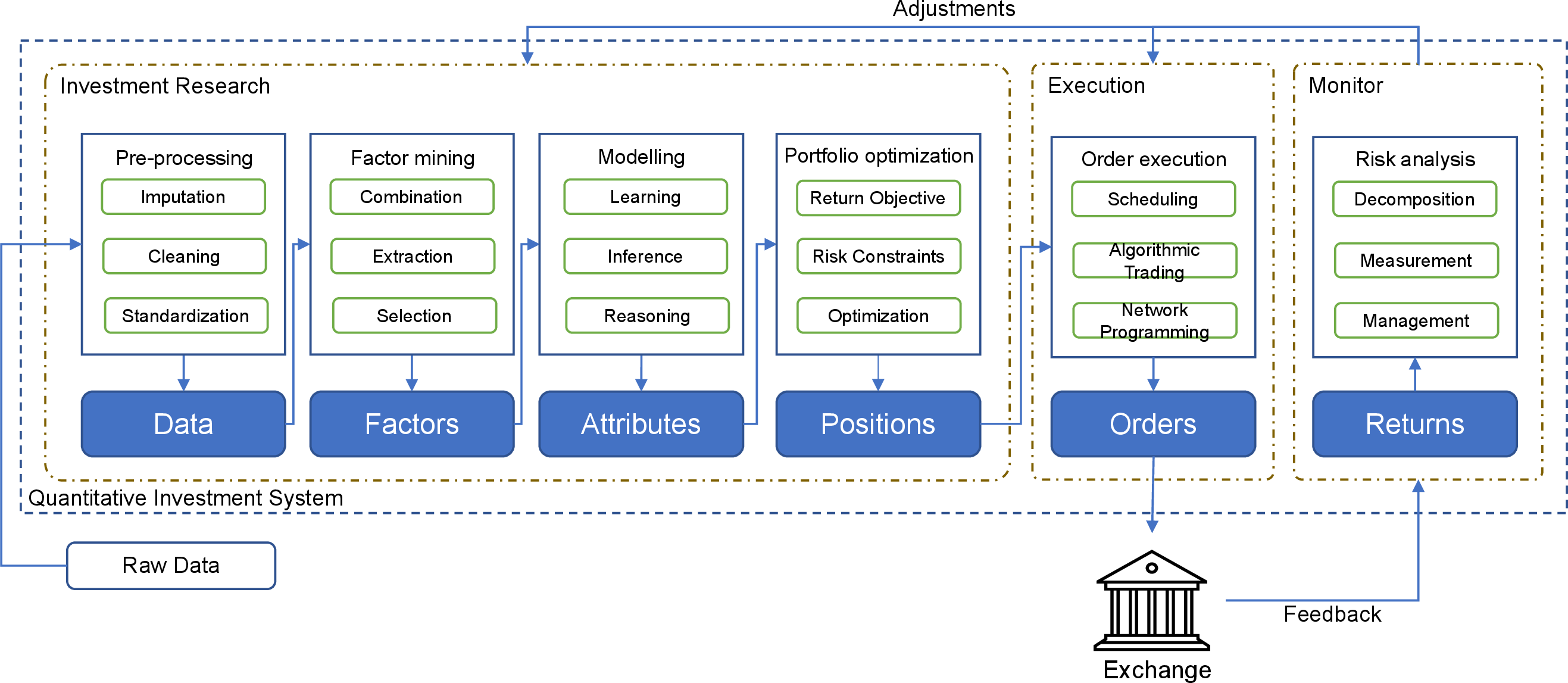

The alpha strategy framework serves as a fundamental organizing principle in systematic asset management, aiming to extract idiosyncratic returns beyond benchmark performance while managing exogenous risk. In practice, the pipeline for executing alpha strategies encompasses:

- Data Processing—Standardization and feature extraction from raw multi-modal, high dimensional, and often irregularly sampled financial data.

- Model Prediction—Forecasting asset-specific returns and risk features by leveraging representations extracted from observed data.

- Portfolio Optimization—Solving allocation problems over high-cardinality investment universes under constraints related to expected return, volatility, and transaction cost.

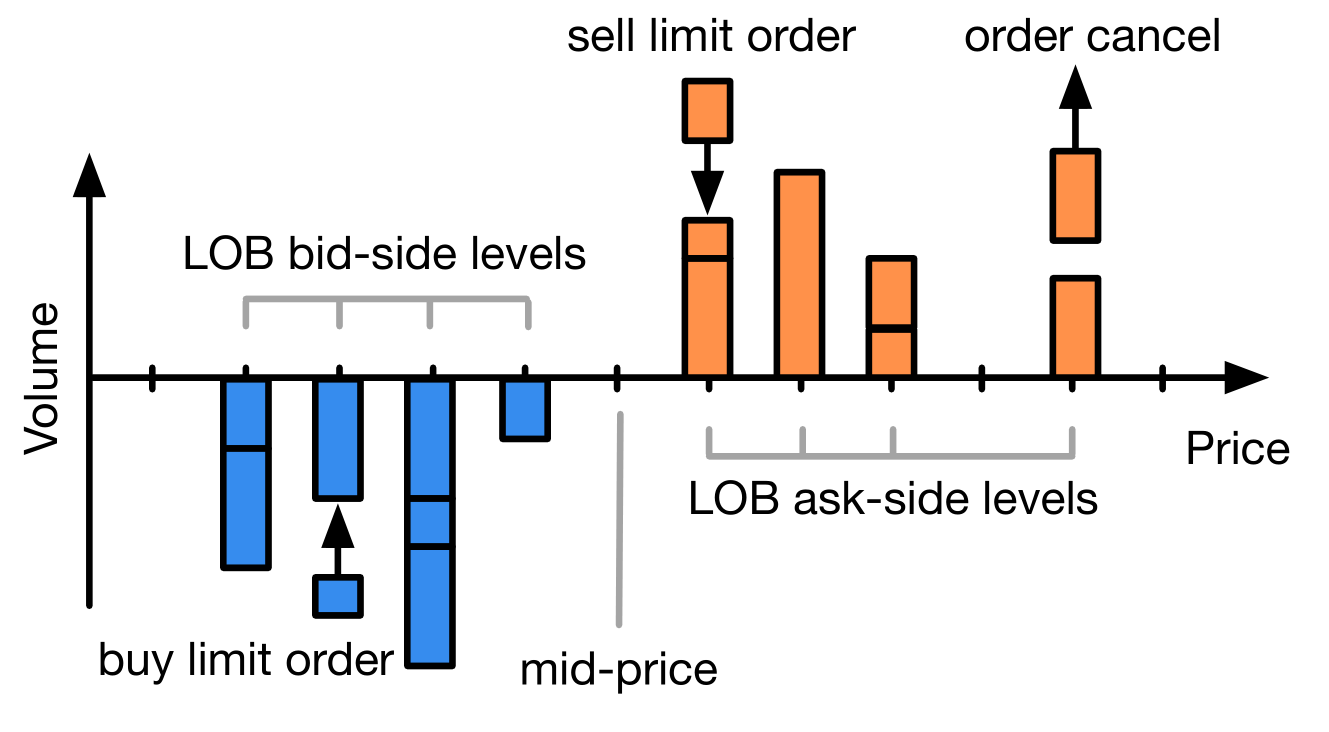

- Order Execution—Translating theoretical allocations to market orders with market impact-aware execution logic and feedback-based risk control.

Figure 1: A typical pipeline of quantitative investment.

Integration across these stages is crucial for minimizing error propagation and maximizing overall system performance. Feedback from execution and risk analysis informs adaptive revisions upstream.
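The four stages listed above can be sketched as a chain of functions. This is a minimal illustration, not the survey's method: the function names, the trailing-mean forecast, the proportional long-only weighting, and the `max_trade` cap are all assumptions chosen to keep the example small.

```python
from statistics import mean


def process_data(raw_prices):
    """Data processing: turn raw price series into simple period returns."""
    return {
        asset: [p1 / p0 - 1.0 for p0, p1 in zip(prices, prices[1:])]
        for asset, prices in raw_prices.items()
    }


def predict_returns(returns):
    """Model prediction: placeholder forecast equal to the trailing mean return."""
    return {asset: mean(series) for asset, series in returns.items()}


def optimize_portfolio(forecasts):
    """Portfolio optimization: long-only weights proportional to positive forecasts."""
    positive = {asset: max(f, 0.0) for asset, f in forecasts.items()}
    total = sum(positive.values())
    if total == 0.0:
        return {asset: 0.0 for asset in forecasts}
    return {asset: value / total for asset, value in positive.items()}


def execute_orders(target_weights, current_weights, max_trade=0.25):
    """Order execution: trade toward the target, capping each order's size."""
    orders = {}
    for asset, target in target_weights.items():
        delta = target - current_weights.get(asset, 0.0)
        orders[asset] = max(-max_trade, min(max_trade, delta))
    return orders


def run_pipeline(raw_prices, current_weights):
    """Chain the four stages; each stage consumes the previous stage's output."""
    returns = process_data(raw_prices)
    forecasts = predict_returns(returns)
    targets = optimize_portfolio(forecasts)
    return execute_orders(targets, current_weights)


orders = run_pipeline(
    {"AAA": [100.0, 102.0, 104.04], "BBB": [50.0, 49.0, 48.02]},
    current_weights={"AAA": 0.0, "BBB": 0.0},
)
print(orders)
```

Because each stage only sees the previous stage's output, an error in the forecast flows directly into the weights and orders, which is the error propagation the paragraph above refers to.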

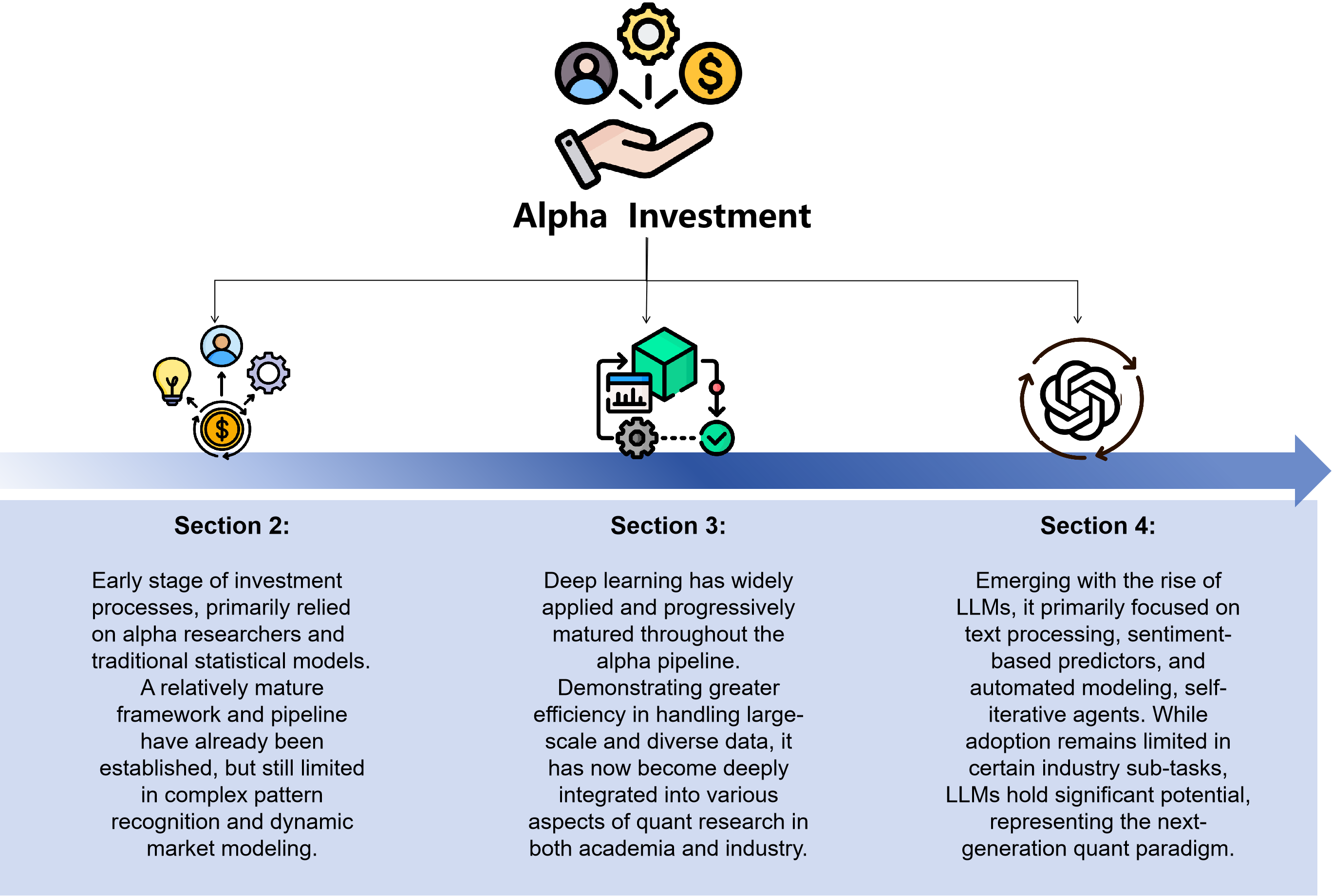

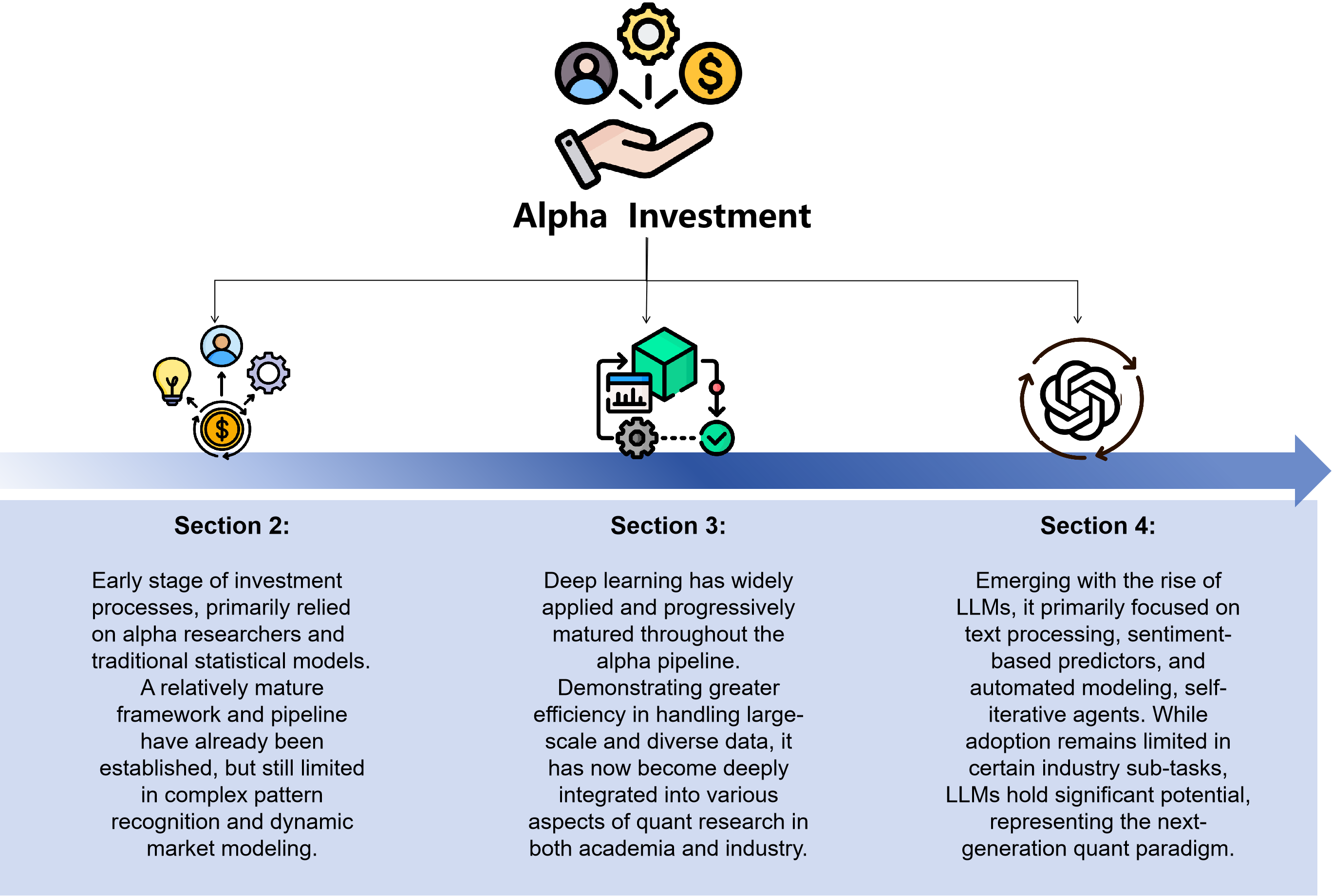

Evolution: From Traditional Methods to Deep Learning

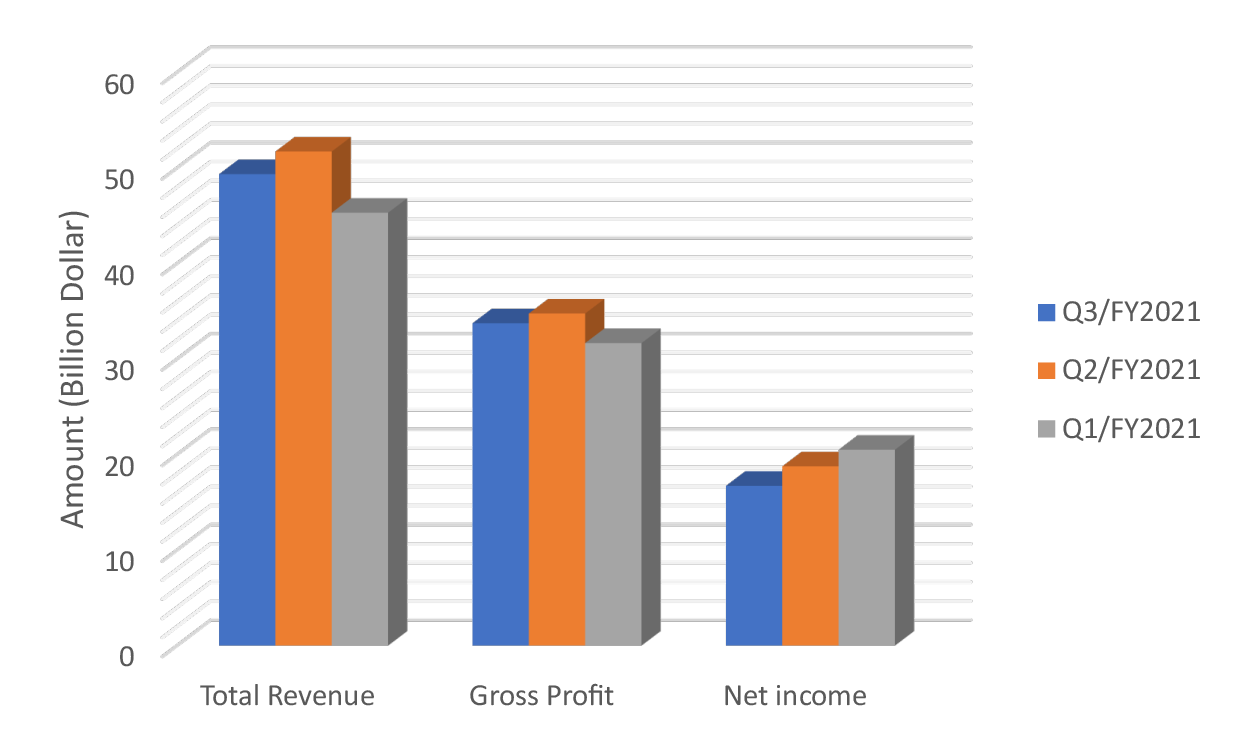

The field (Figure 2) has evolved from traditional statistical modeling, characterized by handcrafted feature engineering and fixed functional forms, to modern deep learning models that operate on raw or weakly processed data and can capture nonlinear dependencies and high-order interactions along both temporal and cross-sectional dimensions.

Figure 2: The evolutionary process of Alpha investment across different stages.

Deep Learning Architectures in the Alpha Pipeline

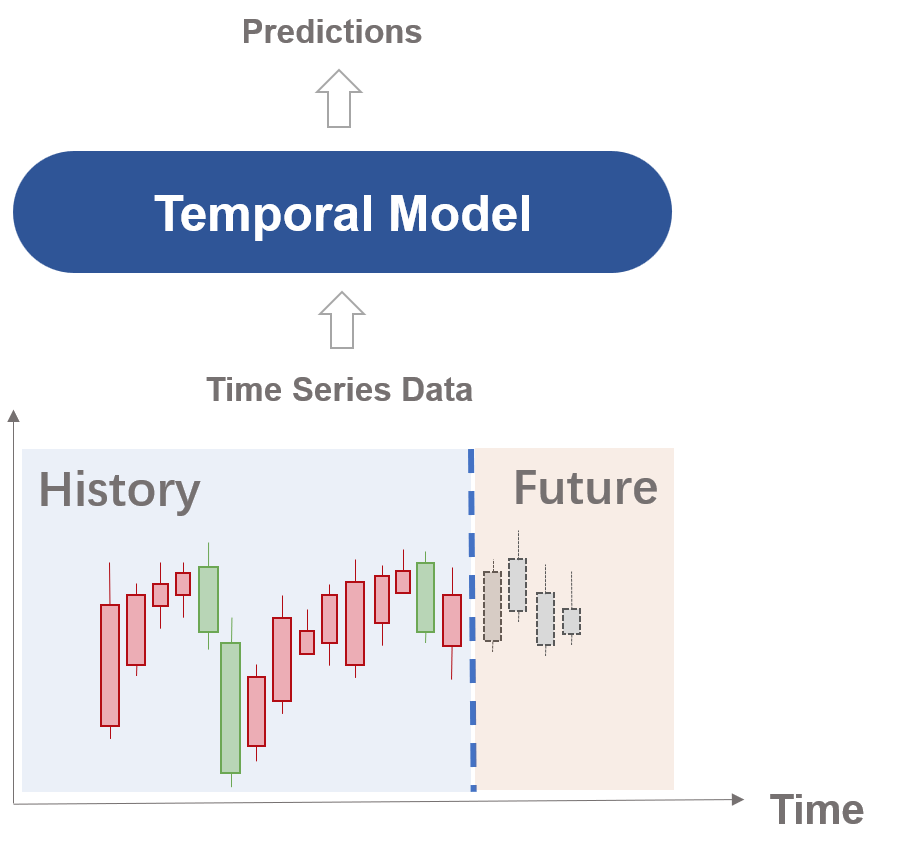

Temporal, Spatial, and Spatiotemporal Modeling

Data Modality and Simulation

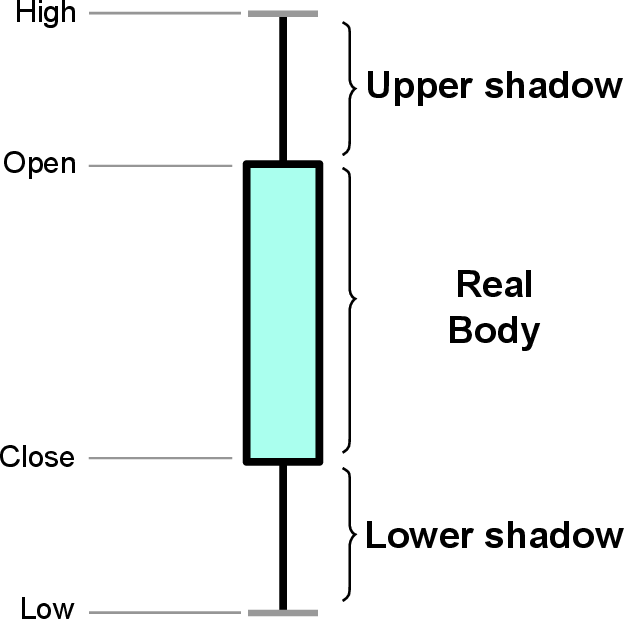

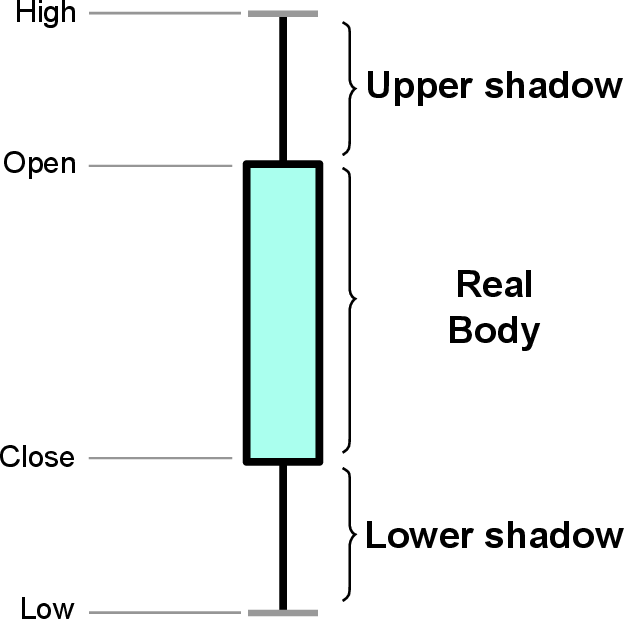

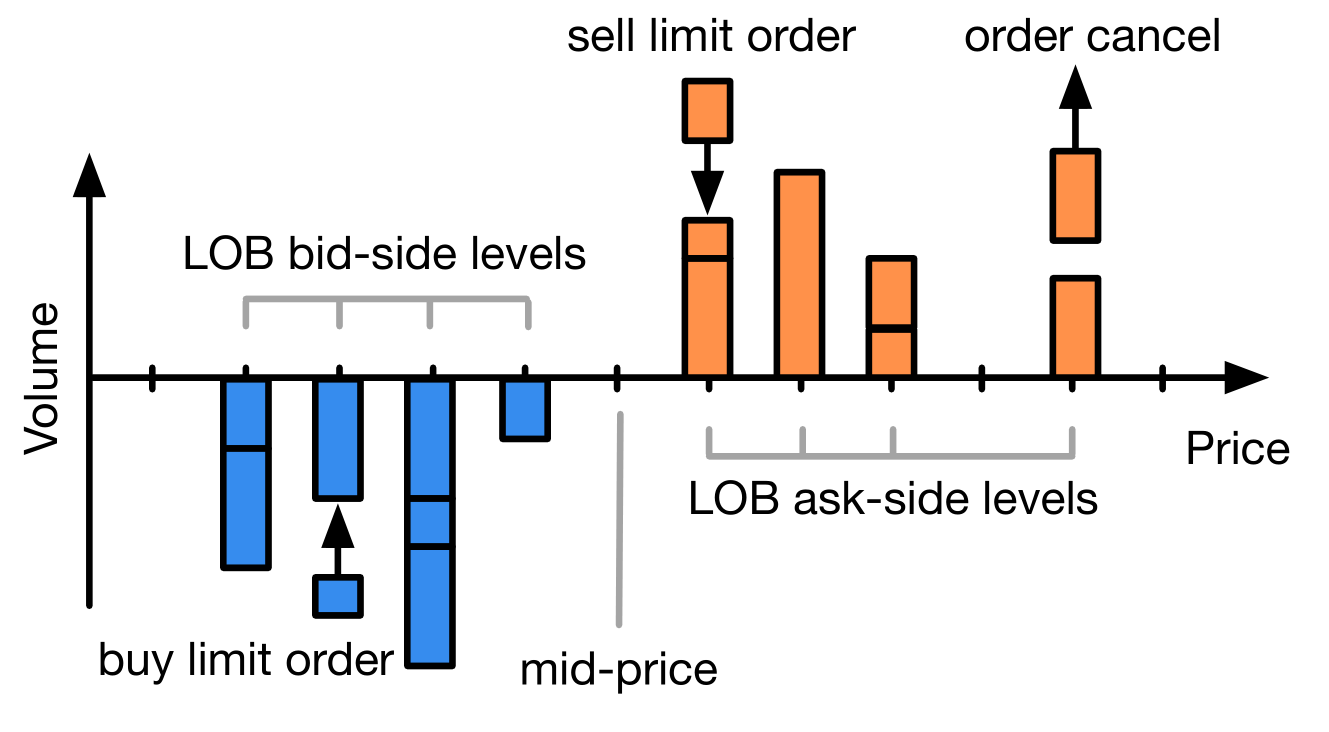

Numerical, relational, and alternative data (such as textual news or ESG signals) are now routinely fused (see Figure 5). Simulation data generated by rule-based multi-agent systems and by deep generative models (GANs, VAEs, diffusion models) supports robust model training and systematic stress testing [kannan2024review].
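To make the idea of rule-based multi-agent simulation concrete, here is a toy sketch with three stylized trader types (trend follower, fundamentalist, noise trader) whose net demand moves the price. The agent rules, coefficients, and the `shock_step`/`shock_size` stress parameters are illustrative assumptions and are not taken from any cited simulator.

```python
import math
import random


def simulate_prices(n_steps=250, start=100.0, fundamental=100.0,
                    shock_step=None, shock_size=0.0, seed=0):
    """Generate a synthetic price path from three rule-based agent types."""
    rng = random.Random(seed)  # seeded so that paths are reproducible
    prices = [start]
    for step in range(n_steps):
        price = prices[-1]
        previous = prices[-2] if len(prices) > 1 else price
        # Trend follower buys after a rise and sells after a fall.
        trend_demand = 0.3 * (price - previous) / previous
        # Fundamentalist trades toward an assumed fundamental value.
        value_demand = 0.05 * (fundamental - price) / price
        # Noise trader submits random demand.
        noise_demand = rng.gauss(0.0, 0.01)
        net_demand = trend_demand + value_demand + noise_demand
        # Optional exogenous shock for stress testing.
        if step == shock_step:
            net_demand += shock_size
        # Log-linear price impact keeps prices strictly positive.
        prices.append(price * math.exp(net_demand))
    return prices


baseline = simulate_prices(seed=42)
stressed = simulate_prices(seed=42, shock_step=100, shock_size=-0.20)
print(min(baseline), min(stressed))
```

Running the same seed with and without the shock gives two paths that are identical up to the shock, so a downstream model can be evaluated on a controlled stress scenario.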

Figure 6: Candlestick chart—a primary visualization for financial time series.

Portfolio and Execution Optimization

Deep learning has advanced both plug-in and end-to-end solutions for portfolio optimization. Deep reinforcement learning (RL) agents now directly optimize non-differentiable financial objectives (annualized return, Sharpe ratio, maximum drawdown), circumventing intermediary predictive targets. Notably, RL-based allocation policies are reported to deliver better empirical risk-adjusted returns than traditional plug-in estimators. For order execution, RL-based agents are reported to outperform Almgren-Chriss baselines in minimizing transaction cost and slippage under realistic market frictions [donnelly2022optimal].
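The three objectives named above are computed from a realized return series, which is why they can serve as episode-level rewards for an RL agent. The sketch below uses standard textbook definitions; the 252-period year, the use of simple returns, and the function names are assumptions of this example.

```python
import math
from statistics import mean, stdev


def annualized_return(returns, periods_per_year=252):
    """Geometric growth rate of the return series, scaled to one year."""
    growth = math.prod(1.0 + r for r in returns)
    return growth ** (periods_per_year / len(returns)) - 1.0


def sharpe_ratio(returns, risk_free=0.0, periods_per_year=252):
    """Mean excess return over its sample standard deviation, annualized."""
    excess = [r - risk_free for r in returns]
    spread = stdev(excess)  # needs at least two observations
    if spread == 0.0:
        return float("nan")
    return mean(excess) / spread * math.sqrt(periods_per_year)


def max_drawdown(returns):
    """Largest peak-to-trough loss of the compounded equity curve, as a fraction."""
    equity, peak, worst = 1.0, 1.0, 0.0
    for r in returns:
        equity *= 1.0 + r
        peak = max(peak, equity)
        worst = max(worst, 1.0 - equity / peak)
    return worst


daily = [0.010, -0.004, 0.007, -0.012, 0.003]
print(annualized_return(daily), sharpe_ratio(daily), max_drawdown(daily))
```

Maximum drawdown depends on the running peak of the whole path, so it cannot be decomposed into per-step losses, which is one reason it is usually handled as a reward signal instead of a differentiable training loss.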

LLMs in Quantitative Finance

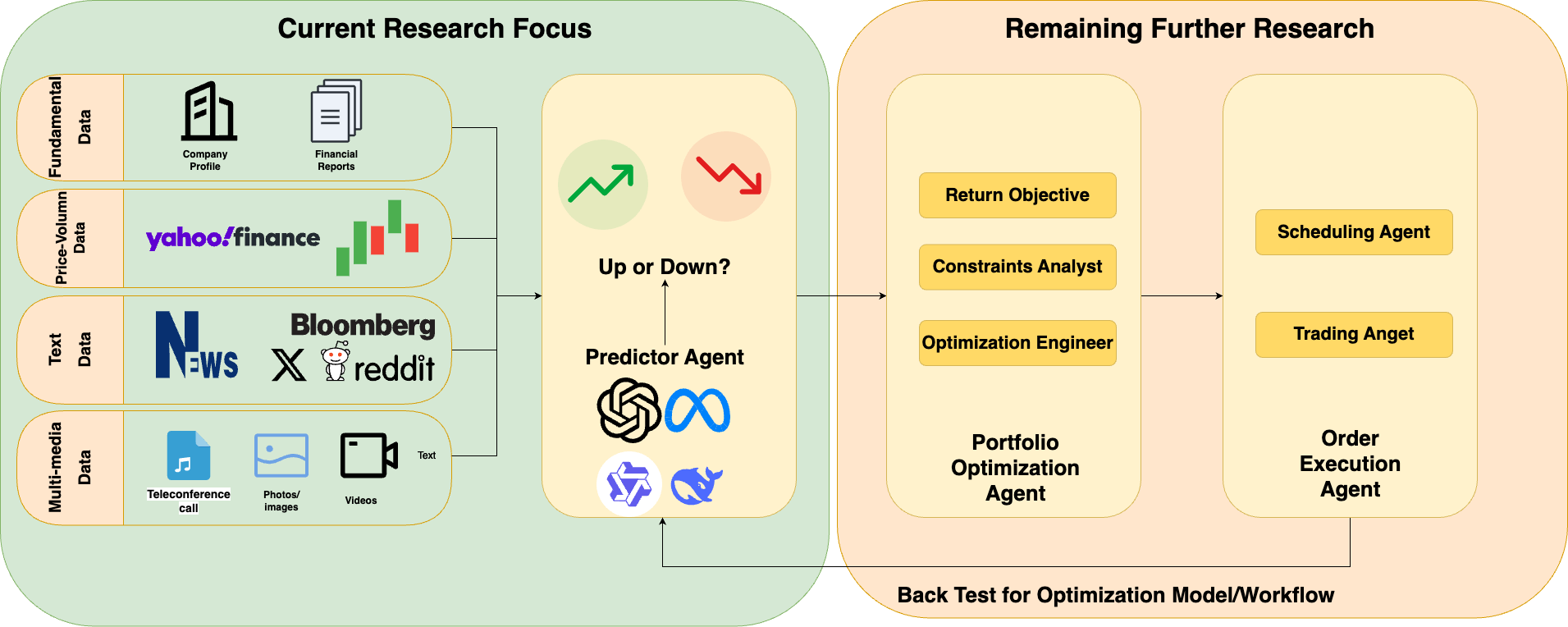

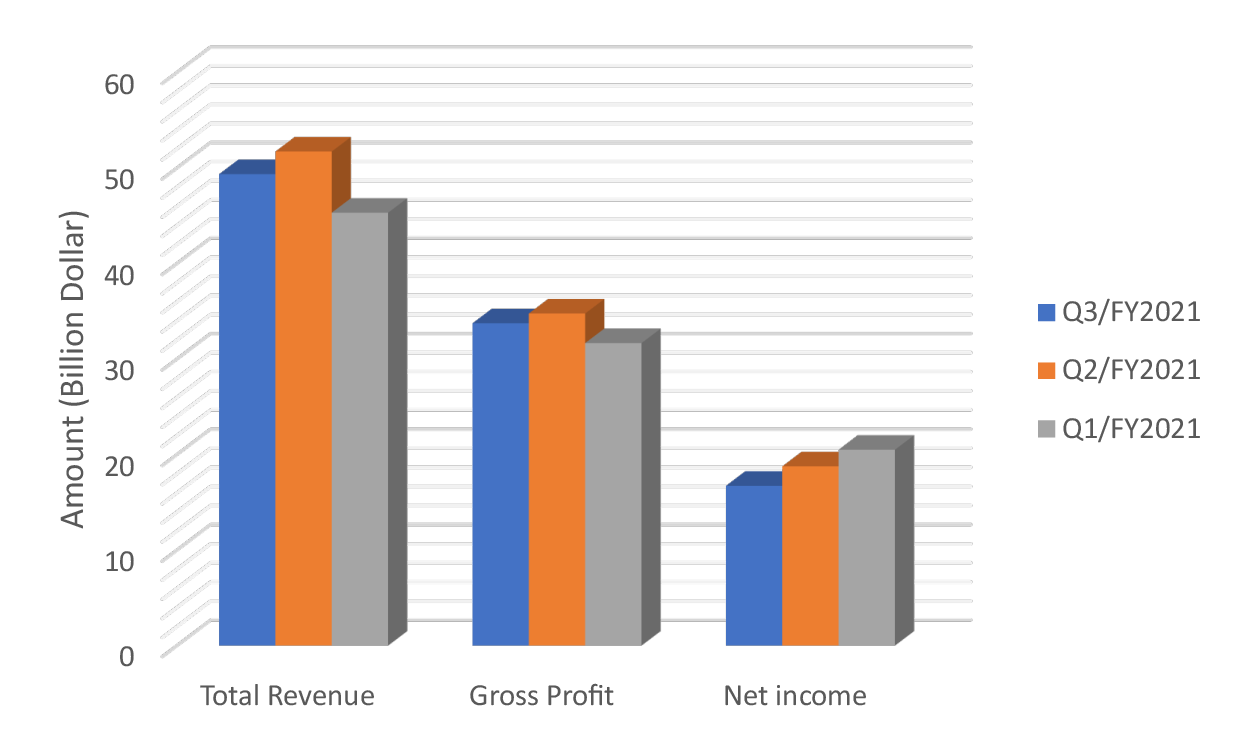

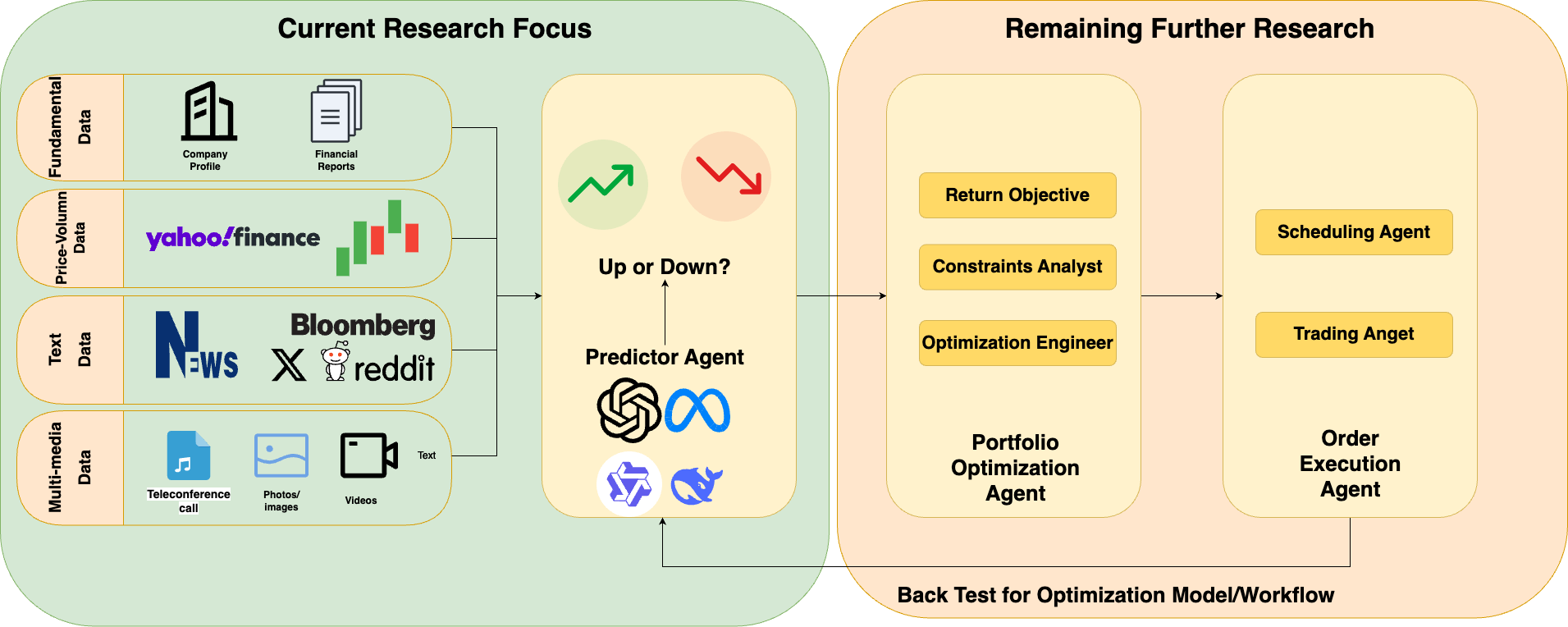

The advent of foundation LLMs (e.g., the GPT family, BloombergGPT [wu2023bloomberggpt], FinGPT [yang2023fingpt]), some of them trained or fine-tuned on large financial corpora, has introduced multi-modality, autonomous reasoning, and tool-using agents into the investment process (see Figure 7). Their involvement spans prediction, autonomous agents, and factor generation, as the following subsections describe.

Figure 7: Architecture overview of LLM-based quant agents. It has three parts: using data to predict price trends, optimizing asset choices, and making trades.

LLM-Based Predictors

Sentiment Extraction and Causal Representation

- Embedding-based LLM classifiers (e.g., FinBERT [araci2019finbert], FinLlama [konstantinidis2024finllama]) outperform lexicon and shallow ML baselines for sentiment extraction, directly improving factor quality for both single-asset and cross-sectional strategies.

- Prompted LLMs (ChatGPT, GPT-4) yield return-predictive sentiment and event signals from structured and unstructured news, with backtests demonstrating significant out-of-sample alpha across equities and FX [lopezlira2023chatgpt, zhang2023unveiling].

- Causal attention models (e.g., CMIN [luo2023cmin]) integrate LLM-extracted sentiment with inferred causal relationships, improving both interpretability and predictive power for event-driven trading.
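One way to see how sentiment scores can feed a cross-sectional strategy is the sketch below. It assumes that per-headline scores in [-1, 1] have already been produced by some upstream classifier (no model is called here), then averages them per asset, standardizes across assets, and scales to a dollar-neutral long-short portfolio. The function names and the weighting rule are illustrative assumptions.

```python
from statistics import mean, pstdev


def aggregate_sentiment(headline_scores):
    """Average the headline-level scores for each asset that has any news."""
    return {asset: mean(scores) for asset, scores in headline_scores.items() if scores}


def cross_sectional_zscore(values):
    """Standardize a per-asset signal across the investment universe."""
    data = list(values.values())
    center, spread = mean(data), pstdev(data)
    if spread == 0.0:
        return {asset: 0.0 for asset in values}
    return {asset: (v - center) / spread for asset, v in values.items()}


def long_short_weights(zscores):
    """Scale z-scores so that absolute weights sum to one (gross exposure of 1)."""
    gross = sum(abs(z) for z in zscores.values())
    if gross == 0.0:
        return {asset: 0.0 for asset in zscores}
    return {asset: z / gross for asset, z in zscores.items()}


# Hypothetical classifier outputs for three tickers.
scores = {"AAA": [0.8, 0.6], "BBB": [0.0], "CCC": [-0.7, -0.7]}
weights = long_short_weights(cross_sectional_zscore(aggregate_sentiment(scores)))
print(weights)
```

Standardizing across assets removes any market-wide sentiment level, so the resulting positions express only relative views between assets.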

Time-Series Forecasting

- LLMs reprogrammed for time series forecasting (e.g., TIME-LLM [jin2024timellm], S2IP-LLM [pan2024s2ip]) match or exceed conventional statistical models and supervised learning approaches in zero-shot and few-shot settings [gruver2023zeroshot]. They also enable multi-modal integration (e.g., textual, numerical, and audio features, as in RiskLabs [cao2024risklabs]) and generate human-interpretable rationales for predictions, which is critical for regulated financial applications.
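Zero-shot forecasting with a text-only LLM requires turning numbers into text and parsing the completion back. The sketch below shows one simple serialization under stated assumptions: values are rescaled by their maximum absolute value and written with fixed precision. It is loosely in the spirit of such approaches and is not the encoding of any cited method; the prompt wording and function names are hypothetical, and no model is called.

```python
def encode_series(values, decimals=2):
    """Rescale values and render them as a comma-separated string."""
    scale = max(abs(v) for v in values) or 1.0  # fall back to 1.0 for all-zero input
    tokens = [f"{v / scale:.{decimals}f}" for v in values]
    return ", ".join(tokens), scale


def decode_series(text, scale):
    """Parse a comma-separated completion back into the original units."""
    return [float(token) * scale for token in text.split(",") if token.strip()]


def build_prompt(values, horizon, decimals=2):
    """Assemble a hypothetical forecasting prompt from a numeric history."""
    encoded, scale = encode_series(values, decimals)
    prompt = (
        f"Continue the sequence with {horizon} more values in the same format:\n"
        f"{encoded},"
    )
    return prompt, scale


history = [100.0, 50.0, 25.0]
prompt, scale = build_prompt(history, horizon=2)
print(prompt)
# A model's text completion would then be parsed with decode_series(completion, scale).
```

Fixed precision bounds the number of tokens per value, and keeping the scale factor outside the prompt lets the parsed output be mapped back to price or return units.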

LLM Agents: Autonomy, Memory, and Multi-Agent Systems

- Modular, multi-component agent frameworks (e.g., FinMem [yu2024finmem], FinAgent [zhang2024FinAgent], FinVision [fatemi2024finvision]) operationalize workflow automation, integrating layered memory, character-driven profiling, dynamic tool use, and multi-source perception.

- Collaborative multi-agent systems (e.g., FinRobot [yang2024finrobot], FINCON [yu2025fincon], TradingAgents [xiao2024tradingagents]) replicate institutional structures (managers, analysts, traders) for robust portfolio management and risk control, producing superior cumulative return, Sharpe ratio, and drawdown performance relative to deep RL and classical baseline agents.

- Public benchmarks (InvestorBench [li2024investorbench]) show proprietary LLM-based agents outperforming open-source and prior domain-specific models in volatile market conditions, especially for multi-asset, multi-modal tasks.
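The layered memory mentioned for agent frameworks above can be illustrated with a toy retrieval routine. This sketch assumes each memory item lives in a short, mid, or long layer with its own decay half-life, and ranks items by the sum of recency, keyword relevance, and an importance score assigned upstream. The half-lives, the additive scoring rule, and all names are hypothetical and are not taken from any cited framework.

```python
from dataclasses import dataclass

# Illustrative decay half-lives per memory layer, in days.
HALF_LIFE_DAYS = {"short": 3.0, "mid": 30.0, "long": 180.0}


@dataclass
class MemoryItem:
    text: str
    day: int           # day index on which the event was stored
    importance: float  # in [0, 1], assumed to be assigned upstream
    layer: str         # "short", "mid", or "long"


def score(item, today, query_terms):
    """Sum of exponential recency decay, keyword overlap, and importance."""
    age = today - item.day
    recency = 0.5 ** (age / HALF_LIFE_DAYS[item.layer])
    words = set(item.text.lower().split())
    relevance = len(words & query_terms) / max(len(query_terms), 1)
    return recency + relevance + item.importance


def retrieve(memory, today, query, k=2):
    """Return the k highest-scoring memory items for a query."""
    terms = set(query.lower().split())
    return sorted(memory, key=lambda m: score(m, today, terms), reverse=True)[:k]


memory = [
    MemoryItem("earnings beat guidance raised", day=99, importance=0.9, layer="short"),
    MemoryItem("ceo interview on podcast", day=40, importance=0.1, layer="short"),
    MemoryItem("annual report earnings growth", day=10, importance=0.6, layer="long"),
]
top = retrieve(memory, today=100, query="earnings guidance")
print([item.text for item in top])
```

With layer-specific half-lives, a 90-day-old annual report in the long layer still outranks a 60-day-old item in the short layer, which is the behavior a layered design is meant to provide.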

LLMs for Direct Factor and Knowledge-Driven Generation

- Factor mining with LLMs (Alpha-GPT [wang2023alphagpt], GPT’s Idea of Stock Factors [cheng2024gpt]) automates the generation of alpha signals by interactive code synthesis, iterative domain-driven refinement, and cross-modal reasoning, surpassing symbolic and ML-only approaches in both factor novelty and diversity.

- Recent proposals apply LLM-based neuro-symbolic search, coupling reasoning and optimization, to encode domain priors and improve robustness and interpretability in feature construction.
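Whatever generates a candidate factor, it still has to be screened. A common screening statistic is the rank information coefficient (rank IC), the Spearman correlation between factor values and subsequent returns across assets. The text above does not specify an evaluation metric, so its use here is an assumption, as are the function names.

```python
import math
from statistics import mean


def ranks(values):
    """Assign 1-based ranks, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation; returns 0.0 when either input is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0.0 or vy == 0.0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def rank_ic(factor_values, forward_returns):
    """Spearman correlation between a factor and next-period returns."""
    return pearson(ranks(factor_values), ranks(forward_returns))


# Hypothetical cross-section of four assets.
factor = [0.1, 0.4, 0.2, 0.3]
forward = [0.01, 0.03, 0.00, 0.02]
print(rank_ic(factor, forward))
```

Using ranks makes the statistic insensitive to outliers in either the factor or the returns, which matters when candidate factors are produced automatically and have arbitrary scales.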

Empirical Strengths, Limitations, and Open Problems

Empirical Evidence:

- DL and RL-based pipelines achieve strong risk-adjusted returns (Sharpe ratios > 2.0 in certain multi-asset RL and agent systems) and robust outperformance in diverse markets and stress scenarios.

- Agent frameworks integrating LLMs and multi-modal reasoning report improvements in cumulative return (CR > 80% in single-asset, CR > 100% in portfolio-level backtests) and dramatically reduced maximum drawdown under adversarial market shifts.

Limitations:

Implications and Future Research Directions

- Hybrid Architectures—Continued development of neuro-symbolic and hybrid agent architectures with explicit partitioning of tasks between natural language reasoning (problem decomposition, hypothesis generation, reporting) and numerical/ML modules (risk, allocation, execution).

- Market-Aware Sentiment and Causal Reasoning—LLM fine-tuning and reinforcement learning based on asset price reactions to news, iterative self-critique, and structural estimation should be prioritized for closing the gap between linguistic and economic sentiment.

- Real-Time, Multi-Agent Systems—Building composable multi-agent orchestration platforms with adaptive role assignment and feedback-driven learning, and exploiting explicit communication protocols to synthesize insights across agents handling technical, fundamental, and alternative data.

- AutoML and Continual Learning—Automating hyperparameter tuning, feature search, and meta-learning to maintain model and pipeline robustness under regime change, while integrating continual learning strategies to mitigate catastrophic forgetting.

- Interpretability and Auditing—Task-specific explanation systems should link agent outputs to time-localized, signal-traceable rationales, facilitating compliance and model risk management.

- Evaluation and Benchmarking—Expansion of public benchmarks, simulation environments, and shared datasets that reflect realistic capital, liquidity, and information constraints is essential for empirical progress.

Conclusion

This survey establishes that the AI-driven transformation of quantitative investment is characterized by rapidly expanding methodological diversity and practical sophistication. Deep learning models have significantly expanded the function approximation and feature extraction capacity within the traditional pipeline, enhancing alpha generation and portfolio construction. LLMs, and their integration into agent-based autonomous workflows, enable new levels of reasoning, multi-modal data fusion, and decision automation, though practical and methodological challenges persist at the intersection of language, numeracy, and market microstructure.

The full realization of agent-based, AI-autonomous investment workflows will require advances in real-time multi-agent reasoning, hybrid neuro-symbolic optimization, pipeline integration, and empirical benchmarking under realistic constraints. These developments will define the next phase of research in both the academic and institutional application of AI in financial markets.