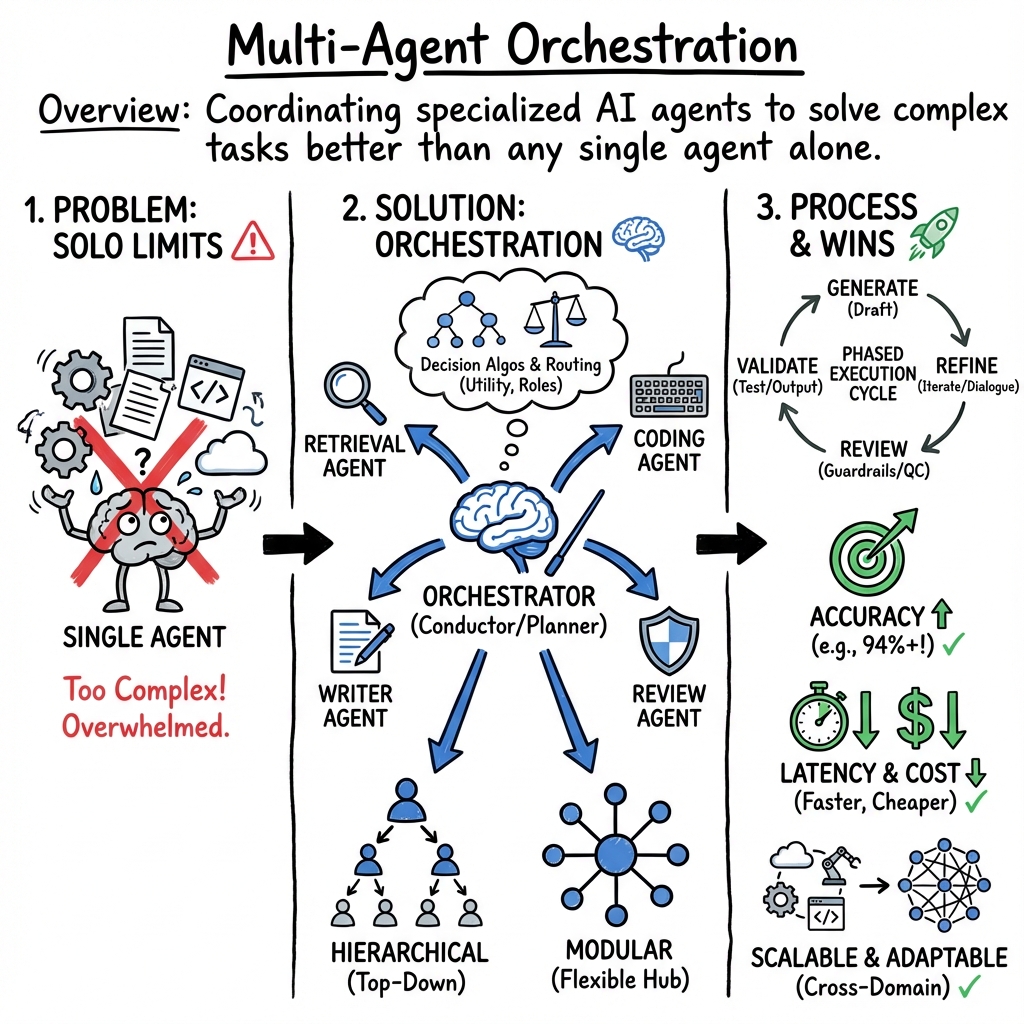

Multi-Agent Orchestration in AI Systems

- Multi-agent orchestration is the coordinated control and role allocation of heterogeneous AI agents using hierarchical, centralized, or modular designs.

- It leverages formal optimization, Bayesian and neural models, and finite-state workflows to dynamically assign tasks while balancing accuracy, cost, and latency.

- Its applications span software engineering, network operations, cloud management, and scientific coding, delivering measurable improvements in efficiency and scalability.

Multi-agent orchestration is the coordinated control, interaction, and role allocation of multiple autonomous agents—typically heterogeneous AI agents or LLM-empowered agents—to achieve complex tasks that exceed the capabilities of isolated agents. Orchestration leverages structured frameworks, algorithmic decision making, architectural modularity, and formal optimization to enable systems that are adaptive, scalable, and robust across diverse domains including process modeling (Lin et al., 2024), cross-domain network operations (Xu et al., 2024), real-time chat (Shrimal et al., 2024), question-answering retrieval (Seabra et al., 2024), cloud resource management (Yao et al., 14 Aug 2025), and scientific coding (Raghavan et al., 9 Oct 2025).

1. Architectural Paradigms and Modular Design

Multi-agent orchestration frameworks commonly adopt one of three architectures: hierarchical, centralized, or fully modular. A typical hierarchical design features a high-level planning agent or orchestrator that decomposes user objectives into explicit sub-goals delegated to specialized sub-agents, each optimized for domain-specific operations such as data retrieval, analysis, or tool control (Zhang et al., 14 Jun 2025, Guo et al., 14 Sep 2025). This modularity enables extensibility and independent upgrading of components, both key requirements for general-purpose task solving.
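The delegation pattern described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the design of any cited framework: `SubGoal`, `plan`, and `Orchestrator` are hypothetical names, and the planner is a fixed stub where a real system would prompt an LLM.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical sub-agent type: receives a delegated instruction, returns a result.
SubAgent = Callable[[str], str]


@dataclass
class SubGoal:
    skill: str        # which specialist should handle it, e.g. "retrieval"
    instruction: str  # the delegated sub-task


def plan(objective: str) -> list[SubGoal]:
    """Hypothetical planner stub: always emits a retrieve -> analyze decomposition.
    A real orchestrator would generate this decomposition with an LLM."""
    return [
        SubGoal("retrieval", f"find sources for: {objective}"),
        SubGoal("analysis", f"summarize findings for: {objective}"),
    ]


class Orchestrator:
    def __init__(self) -> None:
        self.agents: dict[str, SubAgent] = {}

    def register(self, skill: str, agent: SubAgent) -> None:
        # Specialists can be added or swapped without touching the planner.
        self.agents[skill] = agent

    def run(self, objective: str) -> list[str]:
        results = []
        for goal in plan(objective):
            agent = self.agents.get(goal.skill)
            if agent is None:
                raise KeyError(f"no sub-agent registered for skill {goal.skill!r}")
            results.append(agent(goal.instruction))
        return results


orch = Orchestrator()
orch.register("retrieval", lambda task: f"[docs] {task}")
orch.register("analysis", lambda task: f"[summary] {task}")
print(orch.run("LLM routing"))
```

Because the orchestrator looks up specialists only by skill name, upgrading or adding a sub-agent is a single `register` call.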

Centralized paradigms may use a reinforcement learning-trained “puppeteer” that sequentially schedules agent activations, supporting dynamically evolving, compact, and often cyclic reasoning structures that minimize computational cost and coordination overhead while adapting as agents and tasks scale (Dang et al., 26 May 2025).
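A puppeteer-style scheduler can be pictured as a loop in which a policy repeatedly picks the next agent to activate, or decides to stop. In this hypothetical sketch, a hand-written rule stands in for the reinforcement-learning-trained policy of Dang et al.; the agent names, the shared list of notes, and the draft/critique cycle are illustrative assumptions.

```python
from typing import Callable

# Hypothetical agents: each reads the shared state (a list of notes) and returns a new note.
AgentFn = Callable[[list[str]], str]

AGENTS: dict[str, AgentFn] = {
    "draft": lambda notes: "draft v" + str(sum(n.startswith("draft") for n in notes) + 1),
    "critique": lambda notes: "critique of " + notes[-1],
}


def policy(notes: list[str]) -> str:
    """Stand-in for a learned scheduling policy (hand-written here).
    Rule: draft, critique, redraft once, then stop."""
    drafts = sum(n.startswith("draft") for n in notes)
    if not notes or notes[-1].startswith("critique"):
        return "draft" if drafts < 2 else "STOP"
    return "critique" if drafts < 2 else "STOP"


def puppeteer(max_steps: int = 10) -> list[str]:
    notes: list[str] = []
    for _ in range(max_steps):  # hard budget bounds coordination cost
        choice = policy(notes)
        if choice == "STOP":
            break
        notes.append(AGENTS[choice](notes))  # activate one agent per step
    return notes


print(puppeteer())  # → ['draft v1', 'critique of draft v1', 'draft v2']
```

The activation sequence revisits the drafting agent, which is the kind of compact cyclic structure a learned scheduler can produce; `max_steps` caps cost even if a policy keeps cycling.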

Alternatively, fully modular frameworks (e.g., AgentOrchestra, Gradientsys) separate the orchestration layer (planning, scheduling) from the agent and tool-control layers, allowing new agents, task types, or latent tool capabilities to be integrated seamlessly (Zhang et al., 14 Jun 2025, Song et al., 9 Jul 2025). Typed protocols (e.g., the Model Context Protocol (MCP) in Gradientsys) standardize communication and runtime introspection across agent boundaries.
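The separation between an orchestration layer and an agent layer can be illustrated with a typed interface. This sketch assumes a hypothetical `Request`/`Response` envelope and a structural `Agent` protocol; it is not the Model Context Protocol's actual message format, and all names are invented for illustration.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Request:
    """Hypothetical typed envelope (NOT the real MCP schema)."""
    task_type: str
    payload: str


@dataclass(frozen=True)
class Response:
    agent: str
    content: str


class Agent(Protocol):
    # Any object with these members can be plugged in; no inheritance required.
    name: str
    task_types: frozenset[str]

    def handle(self, req: Request) -> Response: ...


@dataclass
class UppercaseAgent:
    name: str
    task_types: frozenset[str]

    def handle(self, req: Request) -> Response:
        return Response(self.name, req.payload.upper())


class Registry:
    """Orchestration layer: depends only on the Agent interface, not on agent internals."""

    def __init__(self) -> None:
        self._by_type: dict[str, Agent] = {}

    def register(self, agent: Agent) -> None:
        for task_type in agent.task_types:
            self._by_type[task_type] = agent

    def dispatch(self, req: Request) -> Response:
        return self._by_type[req.task_type].handle(req)  # KeyError if no agent handles it


reg = Registry()
reg.register(UppercaseAgent("shouter", frozenset({"format"})))
print(reg.dispatch(Request("format", "hello")))  # → Response(agent='shouter', content='HELLO')
```

Supporting a new task type means registering another conforming agent; `Registry` itself does not change.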

2. Orchestration Algorithms and Decision Principles

Agent selection, task assignment, and routing frequently employ formal mathematical models that balance performance metrics (accuracy, completeness, relevance) and resource constraints (latency, cost, capacity, or privacy requirements).

- Utility-based orchestration leverages real-time Bayesian estimation of agent correctness (using, e.g., Dirichlet and Beta-Binomial models) to optimize empirical utility functions that consider both task success probability and agent inference cost (Bhatt et al., 17 Mar 2025). A decision function $U_i = f(\hat{P}_i, C_i)$, increasing in the estimated correctness $\hat{P}_i$ of agent $i$ and decreasing in its cost $C_i$, formalizes this trade-off, allowing the orchestrator to dynamically select the agent $i^* = \arg\max_i U_i$.

- Supervised and neural orchestration models extend this paradigm by encoding tasks and agent histories as feature vectors, with a selection model (usually a neural network) outputting agent suitability distributions and confidence estimates (Agrawal et al., 3 May 2025).

- Role-based and tiered reasoning frameworks employ finite-state machines (FSMs) or workflow graphs to encode valid execution and error-handling sequences, enabling dynamic routing and adaptive replanning in environments that demand rapid execution and robust error recovery (Guo et al., 14 Sep 2025).

- Hierarchical planning can involve multi-level reasoning, where planning agents delegate subtasks to dynamically instantiated role-specific agents, which may in turn invoke lower-level inference or execution agents (Hou et al., 17 May 2025). Monte Carlo Tree Search (MCTS) methods systematically explore the agentic action space to find optimal reasoning trajectories.

- Privacy and knowledge base-aware systems incorporate dynamic, decentralized probing mechanisms: when static agent descriptions are insufficient, sub-agents perform internal knowledge base searches and emit only privacy-preserving ACK signals, which are aggregated and cached by the orchestrator for future routing, maintaining both data confidentiality and real-time adaptability (Trombino et al., 23 Sep 2025).
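Utility-based selection can be sketched as follows. This is a minimal illustration under stated assumptions: each agent has a Beta(1, 1) prior on correctness (the two-outcome special case of a Dirichlet) updated with observed successes and failures, its per-call cost is known, and the utility is a hypothetical linear trade-off $U_i = \hat{P}_i - \lambda C_i$, where $\hat{P}_i$ is the posterior-mean correctness and $C_i$ the cost. The linear form and the weight `lam` are illustrative choices, not necessarily the utility used by Bhatt et al.

```python
from dataclasses import dataclass


@dataclass
class AgentStats:
    name: str
    cost: float  # per-call inference cost (assumed known)
    successes: int = 0
    failures: int = 0

    def p_correct(self, alpha: float = 1.0, beta: float = 1.0) -> float:
        """Posterior mean of a Beta(alpha, beta) prior after Bernoulli outcomes
        (Beta-Binomial conjugacy)."""
        n = self.successes + self.failures
        return (alpha + self.successes) / (alpha + beta + n)

    def update(self, correct: bool) -> None:
        # Real-time update after each graded task.
        if correct:
            self.successes += 1
        else:
            self.failures += 1


def utility(agent: AgentStats, lam: float) -> float:
    # Hypothetical linear trade-off: reward expected correctness, penalize cost.
    return agent.p_correct() - lam * agent.cost


def select(agents: list[AgentStats], lam: float) -> AgentStats:
    return max(agents, key=lambda a: utility(a, lam))


small = AgentStats("small-model", cost=0.1, successes=7, failures=3)  # P ≈ 0.667
large = AgentStats("large-model", cost=1.0, successes=9, failures=1)  # P ≈ 0.833
print(select([small, large], lam=0.5).name)   # → small-model (cost dominates)
print(select([small, large], lam=0.05).name)  # → large-model (accuracy dominates)
```

The expensive, more accurate agent is chosen only when its extra expected correctness outweighs the weighted cost difference, which is the behavior the utility-based formulation aims for.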

3. Orchestration Phases and Communication Mechanisms

Orchestration processes typically unfold in explicit phases, each targeting a different class of reasoning or error mode:

1. Generation

The orchestrator or a specialized generation agent produces initial artifacts (e.g., process models, test plans) from user input, leveraging LLM-driven prompt engineering that combines knowledge injection, few-shot examples, retrieval augmentation, and chain-of-thought reasoning (Lin et al., 2024, Song et al., 9 Jul 2025).

2. Refinement and Iterative Reasoning

Agents engage in multi-turn interaction to iteratively refine artifacts, disambiguate vague content, and incorporate additional context or sub-goal outputs. This may include inter-agent dialogues, feedback loops grounded in evaluation scores or reward shaping, and iterative context enrichment through hybrid vector-graph retrieval (Ke et al., 29 Sep 2025, Hariharan et al., 12 Oct 2025).

3. Reviewing, Quality Control, and Guardrails

Dedicated agents or evaluators systematically probe for semantic or syntactic hallucinations, logical inconsistencies, or format errors. Robust guardrail mechanisms—explicit schema checks, static rule embedding, or automated reflection prompts—are deployed to correct errors and guide recovery (Shrimal et al., 2024, Guo et al., 14 Sep 2025).

4. Testing and Output Validation

External tools or API interfaces are called to validate the conformance of generated outputs to predefined standards (e.g., BPMN models checked for format errors). Observability layers stream intermediate results, thoughts, and tool calls for transparency and debuggability (Song et al., 9 Jul 2025).
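The four phases can be chained into a single pipeline. In this hypothetical sketch every phase is a stub function standing in for an LLM-backed agent or external tool; the evaluator score, the refinement step, the schema in `REQUIRED_KEYS`, and the JSON round-trip check used as the validator are all illustrative assumptions.

```python
import json


def generate(request: str) -> dict:
    # Phase 1 (generation): produce an initial artifact, here a tiny test plan.
    return {"title": request, "steps": ["open app"]}


def score(artifact: dict) -> float:
    # Hypothetical evaluator: more steps yield a higher score, capped at 1.0.
    return min(1.0, len(artifact["steps"]) / 3)


def refine(artifact: dict) -> dict:
    # Phase 2 (refinement): one turn adds one more step of detail.
    n = len(artifact["steps"]) + 1
    return {**artifact, "steps": artifact["steps"] + [f"check step {n}"]}


REQUIRED_KEYS = {"title": str, "steps": list}


def review(artifact: dict) -> list[str]:
    # Phase 3 (guardrail): explicit schema check; returns error messages.
    return [f"bad or missing field: {key}" for key, typ in REQUIRED_KEYS.items()
            if not isinstance(artifact.get(key), typ)]


def validate(artifact: dict) -> bool:
    # Phase 4 (validation): stand-in for an external checker; here it only
    # confirms the artifact survives a JSON round trip unchanged.
    return json.loads(json.dumps(artifact)) == artifact


def orchestrate(request: str, threshold: float = 1.0, max_turns: int = 5) -> dict:
    artifact = generate(request)
    for _ in range(max_turns):  # feedback loop driven by the evaluation score
        if score(artifact) >= threshold:
            break
        artifact = refine(artifact)
    errors = review(artifact)
    if errors:
        raise ValueError("; ".join(errors))
    if not validate(artifact):
        raise ValueError("validation failed")
    return artifact


print(orchestrate("login flow"))
```

Keeping each phase a separate function mirrors the idea that each phase targets a different error mode and can be swapped for a dedicated agent or tool.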

4. Performance, Efficiency, and Accuracy Metrics

Empirical evaluations demonstrate substantial improvements over single-agent or flat multi-agent baselines in dimensions including:

- Accuracy: Orchestrated systems reach up to 94.8% accuracy in applied domains such as enterprise software testing artifact generation (Hariharan et al., 12 Oct 2025) and scientific coding benchmarks (Raghavan et al., 9 Oct 2025), greatly exceeding basic retrieval-augmented baselines.

- Latency and Efficiency: Systems such as MARCO and Gradientsys achieve 44.91% reductions in operational latency and a 4.5× decrease in API cost by leveraging modular orchestration, dynamic task assignment, and parallel agent execution (Shrimal et al., 2024, Song et al., 9 Jul 2025).

- Generalization and Adaptability: Architectures built around role-based modeling, modular agent registration, and centralized training with decentralized execution scale to large, heterogeneous environments, maintaining effective coordination and robustness under high concurrency and partial observability (Yao et al., 14 Aug 2025).

- Resource Utilization: Optimal agent selection strategies (especially those incorporating cost and availability constraints) ensure that high-accuracy agents are employed only when their utility justifies the expense, which translates directly into resource savings (Bhatt et al., 17 Mar 2025, Dang et al., 26 May 2025).
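The latency and cost figures reported for MARCO and Gradientsys are stated on different scales (a percentage reduction versus a multiplicative decrease). Putting both on the same basis, with $L$ denoting latency and $C$ API cost:

$$
L_{\text{new}} = (1 - 0.4491)\,L_{\text{old}} = 0.5509\,L_{\text{old}},
\qquad
C_{\text{new}} = \frac{C_{\text{old}}}{4.5} \approx 0.222\,C_{\text{old}}
$$

So a 4.5× cost decrease corresponds to roughly a 77.8% cost reduction, and a 44.91% latency reduction corresponds to a speedup of about $1/0.5509 \approx 1.82\times$.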

5. Specialized Communication and Cognitive Coordination

Advanced frameworks introduce adaptive, knowledge-aware communication:

- Collaborator Knowledge Models (CKM): Each agent maintains a real-time latent model of its collaborators’ cognitive states, updated via recurrent neural encoders. Cognitive gap analyses quantify divergences, enabling adaptive communication policies that select content, detail level, and strategy for inter-agent messaging (Zhang et al., 5 Sep 2025).

- Active Inference and Reflective Benchmarking: Orchestrators monitor agent-to-agent and agent-to-environment interactions, dynamically optimizing behavior via free-energy-based metrics that balance exploration and exploitation, leading to emergent self-coordination (Beckenbauer et al., 6 Sep 2025).

- Reasoning-Aware Prompt Orchestration: The approach is formalized via prompt templates, reasoning context vectors, and capability matrices; agent states evolve according to distributed consensus protocols with mathematically guaranteed convergence under certain step-size constraints. This preserves logical consistency and context as prompts transition across agents (Dhrif, 30 Sep 2025).
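To illustrate what step-size-constrained convergence means, here is standard linear average consensus, a textbook protocol swapped in for illustration rather than the specific protocol of Dhrif (2025). Each agent holds a context vector and moves toward its neighbors' vectors with step size `eps`; on a connected undirected graph, `0 < eps < 1/Δ` (where Δ is the largest number of neighbors any agent has) is a sufficient condition for every agent to converge to the average. The graph and vectors below are hypothetical.

```python
Vector = list[float]


def consensus_step(states: list[Vector], neighbors: dict[int, list[int]], eps: float) -> list[Vector]:
    """One synchronous update: x_i <- x_i + eps * sum over neighbors j of (x_j - x_i)."""
    return [
        [xi + eps * sum(states[j][k] - xi for j in neighbors[i]) for k, xi in enumerate(x)]
        for i, x in enumerate(states)
    ]


def run_consensus(states: list[Vector], neighbors: dict[int, list[int]],
                  eps: float, steps: int = 200) -> list[Vector]:
    max_degree = max(len(nbrs) for nbrs in neighbors.values())
    # Sufficient step-size condition for a connected undirected graph
    # (neighbor lists must be symmetric so the average is preserved).
    if not 0 < eps < 1 / max_degree:
        raise ValueError(f"eps must lie in (0, {1 / max_degree}) for this graph")
    for _ in range(steps):
        states = consensus_step(states, neighbors, eps)
    return states


# Hypothetical example: three agents on a path 0 - 1 - 2, each with a 2-D context vector.
initial = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
path = {0: [1], 1: [0, 2], 2: [1]}
final = run_consensus(initial, path, eps=0.3)
print([[round(v, 6) for v in x] for x in final])  # → [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
```

With `eps=0.3` below the bound of 0.5 for this path graph, all three agents agree on the average vector; choosing a step size outside the bound is rejected before any update runs.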

6. Application Domains and Real-World Impact

Multi-agent orchestration frameworks have been empirically validated in a wide array of domains:

- Software Engineering and Process Modeling: Automation of process model generation, test artifacts, and quality engineering procedures with state-of-the-art gains in efficiency and model quality (Lin et al., 2024, Hariharan et al., 12 Oct 2025).

- Network Operations and Robotics: Cross-domain orchestration of tasks spanning digital networks and physical systems, facilitated by LLM-driven agent coordination and real-time optimization models (Xu et al., 2024).

- Cloud-Scale Scheduling and Resource Management: Multi-agent reinforcement learning with heterogeneous, role-based agents enables adaptive orchestration in high-concurrency, high-dimensional resource allocation problems, yielding improvements in system fairness, stability, and convergence speed (Yao et al., 14 Aug 2025).

- Complex QA and Scientific Coding: Modular orchestration blends dynamic routing, hybrid retrieval, and consensus mechanisms to provide robust, context-rich answers or usable scientific code even when evidence is incomplete or tasks require stepwise decomposition (Seabra et al., 2024, Raghavan et al., 9 Oct 2025).

- Desktop Automation and Long-Horizon Planning: FSM-driven routing and self-emergent attention-inspired coordination enable robust sequential execution and error recovery in desktop automation and path planning tasks (Guo et al., 14 Sep 2025, Beckenbauer et al., 6 Sep 2025).
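FSM-driven routing with error recovery can be sketched as an explicit transition table that rejects any execution sequence it does not list. The states, the retry limit, and the `outcomes` list that simulates verification results are hypothetical; this is not the state machine used by Guo et al.

```python
from enum import Enum, auto


class State(Enum):
    PLAN = auto()
    ACT = auto()
    VERIFY = auto()
    RECOVER = auto()
    DONE = auto()
    FAILED = auto()


# Allowed transitions; any other move is rejected as an invalid execution sequence.
TRANSITIONS: dict[State, set[State]] = {
    State.PLAN: {State.ACT},
    State.ACT: {State.VERIFY},
    State.VERIFY: {State.DONE, State.RECOVER},
    State.RECOVER: {State.ACT, State.FAILED},
}


def run_fsm(outcomes: list[bool], max_retries: int = 2) -> list[State]:
    """outcomes[i] simulates whether the i-th action attempt passes verification."""
    state, trace, attempt, retries = State.PLAN, [State.PLAN], 0, 0
    while state not in (State.DONE, State.FAILED):
        if state is State.PLAN:
            nxt = State.ACT
        elif state is State.ACT:
            nxt = State.VERIFY
        elif state is State.VERIFY:
            ok = outcomes[attempt]
            attempt += 1
            nxt = State.DONE if ok else State.RECOVER
        else:  # RECOVER: retry the action, or give up after max_retries
            retries += 1
            nxt = State.ACT if retries <= max_retries else State.FAILED
        if nxt not in TRANSITIONS[state]:
            raise RuntimeError(f"illegal transition {state.name} -> {nxt.name}")
        state = nxt
        trace.append(state)
    return trace


print([s.name for s in run_fsm([False, True])])
# → ['PLAN', 'ACT', 'VERIFY', 'RECOVER', 'ACT', 'VERIFY', 'DONE']
```

A failed verification routes through `RECOVER` back to `ACT` instead of aborting, and the retry limit guarantees the sequence terminates.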

7. Open Challenges and Future Directions

Although significant progress has been made, limitations remain. Performance degrades when agent transition chains become excessively long (beyond ~10 transitions in complex reasoning orchestration (Dhrif, 30 Sep 2025)), and memory demands grow with the volume of coordination metadata. Trade-offs among orchestration accuracy, system latency, and operational cost motivate further research into more generalizable, resource-efficient, and theoretically grounded policies.

Open challenges include optimizing computational overhead in team initialization (Tian et al., 23 Sep 2025), integrating richer objective metrics (e.g., reliability, human feedback), and developing more advanced, privacy-aware orchestration mechanisms with dynamic knowledge alignment and semantic caching (Trombino et al., 23 Sep 2025).

Expanded application to multi-modal domains (e.g., visual quality assurance in agriculture (Ke et al., 29 Sep 2025), multimodal research assistants (Zhang et al., 14 Jun 2025)) and the incorporation of reinforcement learning, self-reflective scheduling, and knowledge base probing indicate promising directions for future research.

Multi-agent orchestration represents a formal, principled approach to managing complex, heterogeneous agent systems, characterized by modular architecture, probabilistic and neural selection algorithms, robust communication, and demonstrated scalability and efficiency across diverse real-world domains.