- The paper introduces a framework that uses stochastic sampling strategies, MC-dropout and additive Gaussian noise (AGN), to enable parallel test-time scaling of latent reasoning models.

- It proposes a latent reward model with a contrastive objective to aggregate diverse latent trajectories effectively.

- Experimental results demonstrate improved exploration and aggregation, outperforming traditional majority voting methods.

Parallel Test-Time Scaling for Latent Reasoning Models

Introduction

The paper "Parallel Test-Time Scaling for Latent Reasoning Models" (2510.07745) presents a framework for bringing Parallel Test-Time Scaling (TTS) to latent reasoning models, with the goal of making LLMs more efficient and effective. In token-based models, parallel TTS samples multiple chains of thought in parallel and combines them with aggregation strategies such as voting or search. Latent reasoning, where reasoning unfolds in continuous vector spaces, makes this harder for two reasons: there is no obvious way to sample in a continuous space, and there are no token-level probabilities to support sophisticated trajectory aggregation.

Figure 1: Token-based Sampling

Methodology

Stochastic Strategies

The approach introduces two stochastic sampling strategies for scaling latent reasoning models: Monte Carlo Dropout (MC-dropout) and Additive Gaussian Noise (AGN). MC-dropout keeps dropout active during inference, so each forward pass uses a slightly different sub-network; this captures epistemic uncertainty, i.e. variability in the model's knowledge. AGN instead injects isotropic Gaussian noise into the latent thoughts, simulating aleatoric uncertainty.
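The two sampling strategies can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the paper's implementation: the toy step function `latent_step` (a fixed random linear layer followed by `tanh`), the sizes `D` and `T`, and the helper `sample_trajectories` are hypothetical stand-ins for a real latent reasoning model such as COCONUT. Setting `p > 0` keeps dropout active at inference (MC-dropout), while `sigma > 0` adds isotropic Gaussian noise to each latent thought (AGN).

```python
import numpy as np

# Hypothetical toy "latent reasoning model": each step maps the current latent
# thought h_t to h_{t+1} = tanh(W @ h_t). Sizes and weights are illustrative only.
D = 16  # latent dimension (assumed)
T = 4   # number of latent reasoning steps (assumed)
W = np.random.default_rng(0).normal(scale=1.0 / np.sqrt(D), size=(D, D))


def latent_step(h, rng, p=0.0, sigma=0.0):
    """One latent step with optional MC-dropout (rate p) and AGN (scale sigma)."""
    if p > 0.0:
        # MC-dropout: dropout stays on at inference. Inverted dropout zeroes each
        # unit with probability p and rescales survivors by 1 / (1 - p).
        keep = rng.random(h.shape) >= p
        h = h * keep / (1.0 - p)
    h_next = np.tanh(W @ h)
    if sigma > 0.0:
        # AGN: add isotropic Gaussian noise sigma * eps, eps ~ N(0, I).
        h_next = h_next + sigma * rng.standard_normal(h_next.shape)
    return h_next


def sample_trajectories(h0, n, p=0.0, sigma=0.0, seed=0):
    """Roll out n stochastic latent trajectories; returns an array of shape (n, T, D)."""
    rng = np.random.default_rng(seed)
    trajs = np.empty((n, T, D))
    for i in range(n):
        h = h0
        for t in range(T):
            h = latent_step(h, rng, p=p, sigma=sigma)
            trajs[i, t] = h
    return trajs


h0 = np.ones(D) / np.sqrt(D)
mc = sample_trajectories(h0, n=8, p=0.2)       # MC-dropout rollouts
agn = sample_trajectories(h0, n=8, sigma=0.3)  # AGN rollouts
det = sample_trajectories(h0, n=8)             # no noise: all 8 rollouts identical
```

Sweeping `p` or `sigma` controls how far apart the N trajectories spread, which is the knob varied in Figure 3.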

Figure 2: GSM-Test

These sampling strategies make it possible to generate multiple latent trajectories for the same input, each representing a distinct reasoning path with its own degree of uncertainty.

Latent Reward Model

For aggregation, the paper proposes the Latent Reward Model (LatentRM), trained with a step-wise contrastive objective to score individual latent reasoning steps. A trajectory's overall score is the sum of its step scores; this sum serves as a proxy for trajectory quality and guides decision-making in the latent space.
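Step-wise scoring and trajectory-level summation can be illustrated with a toy reward head. The source does not spell out the contrastive loss, so this sketch assumes an InfoNCE-style objective: at one step, K candidate latent thoughts are scored, one is labeled positive, and the loss is the negative log-softmax of the positive's score. The linear weights `w` and the functions `step_scores`, `trajectory_score`, `stepwise_contrastive_loss`, and `contrastive_grad` are hypothetical; a practical LatentRM would presumably be a learned network conditioned on the question and the preceding steps.

```python
import numpy as np


def step_scores(traj, w):
    """Hypothetical linear reward head: r_t = w . h_t for each step of a (T, D) trajectory."""
    return traj @ w


def trajectory_score(traj, w):
    """Trajectory-level score: the sum of step-wise scores, used as a quality proxy."""
    return float(step_scores(traj, w).sum())


def stepwise_contrastive_loss(candidates, pos_idx, w):
    """Assumed InfoNCE-style loss for one step.

    candidates: (K, D) alternative latent thoughts at the same step.
    Returns -log softmax(scores)[pos_idx]."""
    s = candidates @ w
    s = s - s.max()  # shift for numerical stability
    log_probs = s - np.log(np.exp(s).sum())
    return float(-log_probs[pos_idx])


def contrastive_grad(candidates, pos_idx, w):
    """Gradient of the loss above w.r.t. w: sum_k (softmax_k - onehot_k) * c_k."""
    s = candidates @ w
    probs = np.exp(s - s.max())
    probs /= probs.sum()
    probs[pos_idx] -= 1.0
    return candidates.T @ probs


# Toy training on one step: K = 4 candidates, candidate 0 labeled positive.
rng = np.random.default_rng(0)
cands = rng.standard_normal((4, 8))
w = np.zeros(8)
loss_before = stepwise_contrastive_loss(cands, 0, w)  # equals log(4) when w = 0
for _ in range(200):
    w -= 0.05 * contrastive_grad(cands, 0, w)  # plain gradient descent
loss_after = stepwise_contrastive_loss(cands, 0, w)
```

Training pushes the positive step's score above its competitors; at inference only `trajectory_score` is needed to rank complete trajectories.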

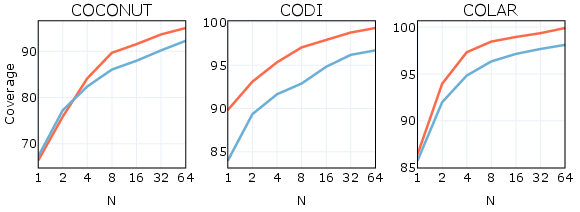

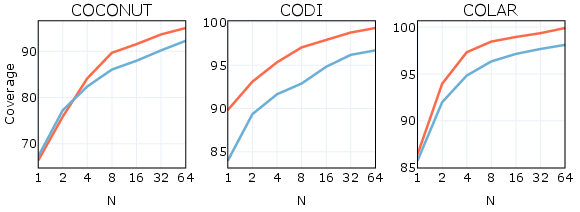

Figure 3: Coverage versus diversity for MC-dropout (red) and AGN (blue) with N ∈ {4, 8, 16, 32}, sweeping p and σ to span a range of diversity values.

Experimental Results

Sampling Dynamics

Experiments show that both MC-dropout and AGN scale well as compute increases, while exhibiting distinct exploration dynamics in latent space. MC-dropout tends to produce structured exploration that progressively branches into less conventional solutions, whereas AGN spreads trajectories broadly, yielding more diverse expansion.

Figure 4: Diversity of latent trajectories across reasoning steps on GSM-Test with COCONUT. Left: MC-dropout (p = 0.1 to 0.5). Right: AGN (σ = 0.1 to 0.5).
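Figures 3 and 4 rely on two quantities, per-step diversity and coverage. The sketch below shows one plausible way to compute them; both definitions are assumptions, since the source does not give the exact formulas: diversity as the mean pairwise cosine distance among the N latent thoughts at each step, and coverage as the fraction of problems for which at least one of the N trajectories is correct. `stepwise_diversity` and `coverage` are hypothetical helper names.

```python
import numpy as np


def stepwise_diversity(trajs):
    """Mean pairwise cosine distance among N trajectories at each step.

    trajs: (N, T, D) latent trajectories. Returns an array of shape (T,).
    Assumed metric; the paper's exact diversity measure may differ."""
    n, num_steps, _ = trajs.shape
    unit = trajs / np.linalg.norm(trajs, axis=-1, keepdims=True)
    off_diag = ~np.eye(n, dtype=bool)  # exclude each trajectory's similarity to itself
    div = np.empty(num_steps)
    for t in range(num_steps):
        sims = unit[:, t] @ unit[:, t].T  # (N, N) cosine similarities at step t
        div[t] = 1.0 - sims[off_diag].mean()
    return div


def coverage(correct):
    """Fraction of problems where at least one of the N trajectories is correct.

    correct: (num_problems, N) boolean array."""
    return float(np.asarray(correct).any(axis=1).mean())


# Identical trajectories have zero diversity; orthogonal ones have diversity 1.
same = np.ones((4, 3, 5))
ortho = np.zeros((2, 3, 2))
ortho[0, :, 0] = 1.0
ortho[1, :, 1] = 1.0
cov = coverage([[True, False], [False, False], [False, True]])  # 2 of 3 problems covered
```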

LatentRM proved effective for trajectory selection under both best-of-N and beam search, outperforming traditional aggregation methods such as majority voting. This indicates that LatentRM helps identify and aggregate high-quality trajectories in latent models, supporting its use for improving LLM performance through parallel TTS.
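The two aggregation modes in which LatentRM is evaluated, best-of-N and beam search, and the majority-voting baseline can be sketched generically. This is an illustrative sketch, not the paper's code: `score_fn` and `step_score_fn` stand in for LatentRM (summed trajectory score and single-step score respectively), `toy_step` stands in for one stochastic latent step such as MC-dropout or AGN, and all function names are hypothetical.

```python
from collections import Counter

import numpy as np


def majority_vote(answers):
    """Baseline: most frequent final answer (ties broken by first occurrence)."""
    return Counter(answers).most_common(1)[0][0]


def best_of_n(trajs, answers, score_fn):
    """Return the answer of the trajectory with the highest reward-model score."""
    scores = [score_fn(tr) for tr in trajs]
    return answers[int(np.argmax(scores))]


def beam_search(h0, step_fn, step_score_fn, num_steps, beam_width, expand, rng):
    """Step-level beam search in latent space.

    Each beam is extended with `expand` stochastic next steps; candidates are
    ranked by cumulative (summed) step score and the top `beam_width` survive.
    Returns (score, path) for the best final beam."""
    beams = [(0.0, [h0])]
    for _ in range(num_steps):
        candidates = []
        for score, path in beams:
            for _ in range(expand):
                h_next = step_fn(path[-1], rng)
                candidates.append((score + step_score_fn(h_next), path + [h_next]))
        candidates.sort(key=lambda c: c[0], reverse=True)  # sort on score only
        beams = candidates[:beam_width]
    return beams[0]


def score_fn(tr):
    """Stand-in for a summed LatentRM trajectory score."""
    return float(tr.sum())


def toy_step(h, rng):
    """Toy AGN-like latent step: add Gaussian noise to the current thought."""
    return h + rng.normal(scale=0.5, size=h.shape)


def step_score_fn(h):
    """Toy step scorer standing in for LatentRM's per-step score."""
    return float(h.sum())


# The majority answer and the reward-selected answer can differ.
answers = ["3", "3", "7"]
trajs = [np.array([[0.1]]), np.array([[0.2]]), np.array([[0.9]])]
voted = majority_vote(answers)                  # "3"
selected = best_of_n(trajs, answers, score_fn)  # "7"

rng = np.random.default_rng(0)
best_score, best_path = beam_search(np.zeros(3), toy_step, step_score_fn,
                                    num_steps=4, beam_width=2, expand=3, rng=rng)
```

Best-of-N ranks only complete trajectories, whereas beam search uses step scores to prune partial trajectories early and spend compute on the most promising branches.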

Figure 5: Easy

Ablation studies show that both LatentRM's contrastive supervision and its use of stochastic rollouts are critical to its trajectory aggregation performance.

Figure 6: GSM-Test

Conclusion

The framework extends parallel TTS to latent reasoning models, showing the potential for scalable and efficient parallel inference in continuous vector spaces. By addressing the two main obstacles, sampling and aggregation, the study opens the way to more sophisticated latent reasoning strategies and, potentially, to more dynamic and adaptive inference in AI systems.

Future research could integrate stochastic sampling and aggregation into a reinforcement learning framework, allowing models to learn exploration strategies and to allocate compute adaptively across reasoning tasks. Such work could turn latent reasoning from a static inference procedure into an adaptive process across diverse applications.