- The paper presents a modular, open-source framework combining LLMs with behavior trees to translate natural language into interpretable robot actions.

- It builds on a ROS2-based architecture with domain-specific plugins and achieves success rates above 90% across diverse human-robot interaction scenarios.

- The system emphasizes transparency through a hierarchical BT design and real-time failure reasoning, enabling scalable and extensible robot control.

Interpretable Robot Control via Structured Behavior Trees and LLMs

Introduction

The integration of large language models (LLMs) into robotic control systems has enabled more natural and flexible human-robot interaction (HRI), but bridging the gap between high-level natural language instructions and low-level, interpretable robot behaviors remains a significant challenge. This paper presents a modular, open-source framework that combines LLM-driven natural language understanding with structured behavior trees (BTs) to enable interpretable, robust, and scalable robot control. The system is robot-agnostic, supports multimodal HRI, and emphasizes transparency and extensibility in both perception and control.

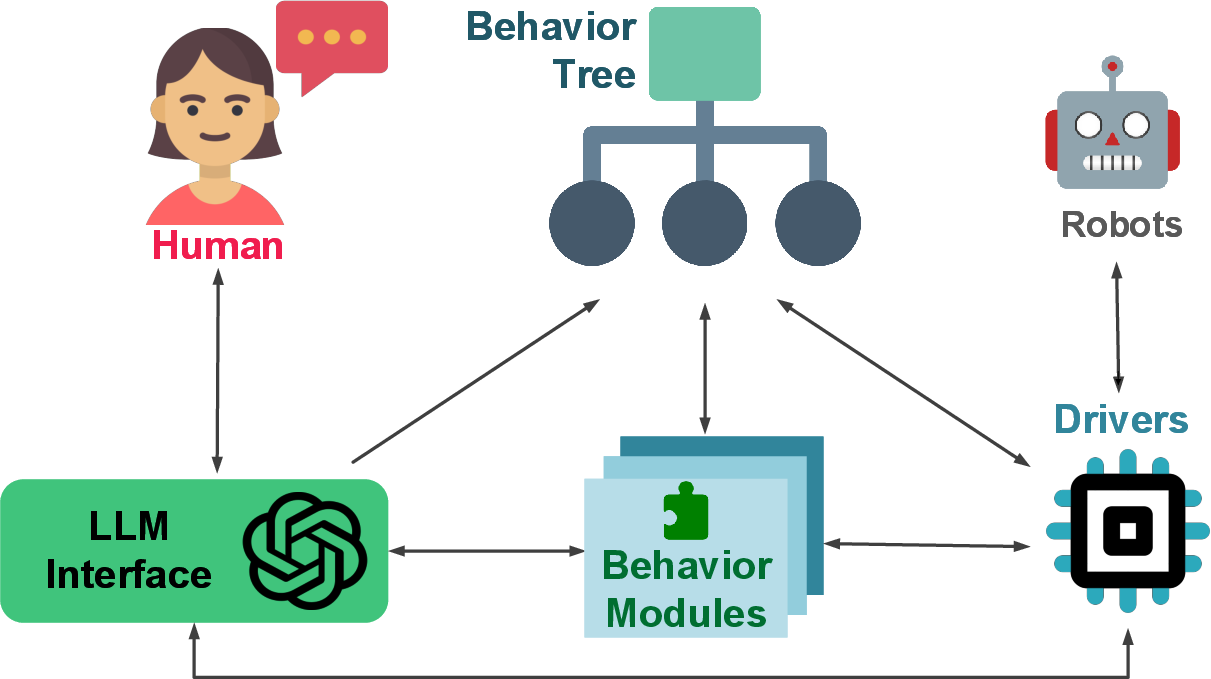

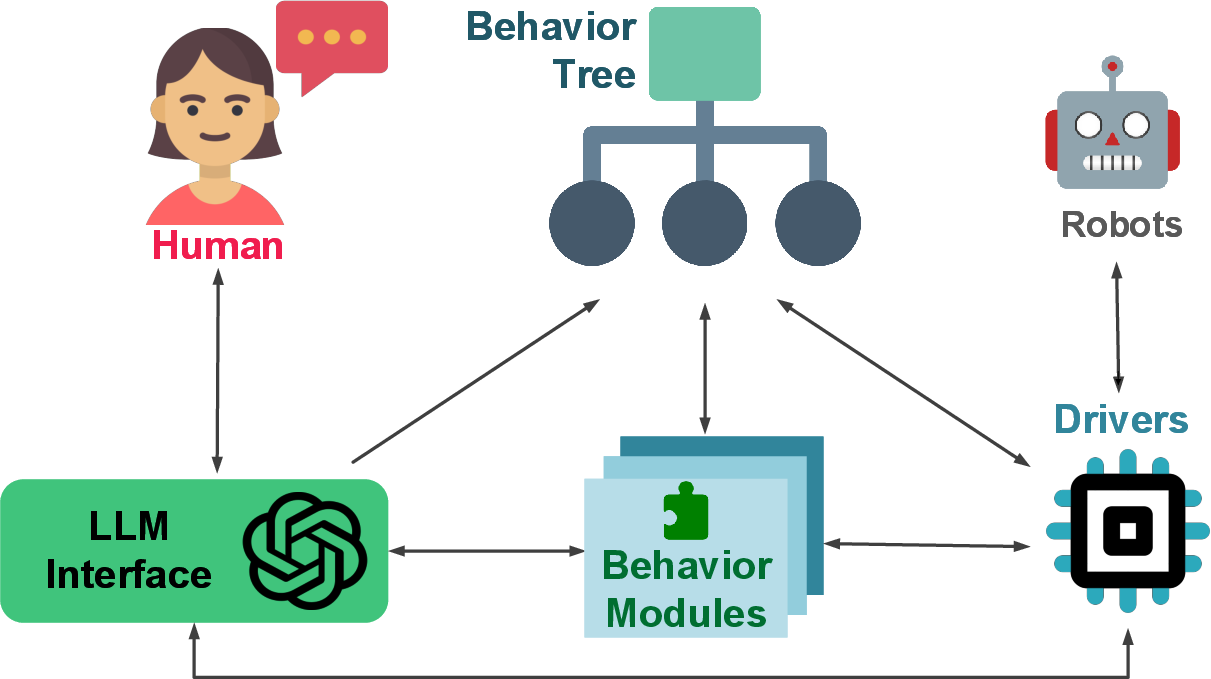

Figure 1: High-level overview of the proposed LLM-driven robotic control system, where a user interacts with the system through natural language, interpreted by an LLM to guide robot behavior via a modular control structure.

System Architecture

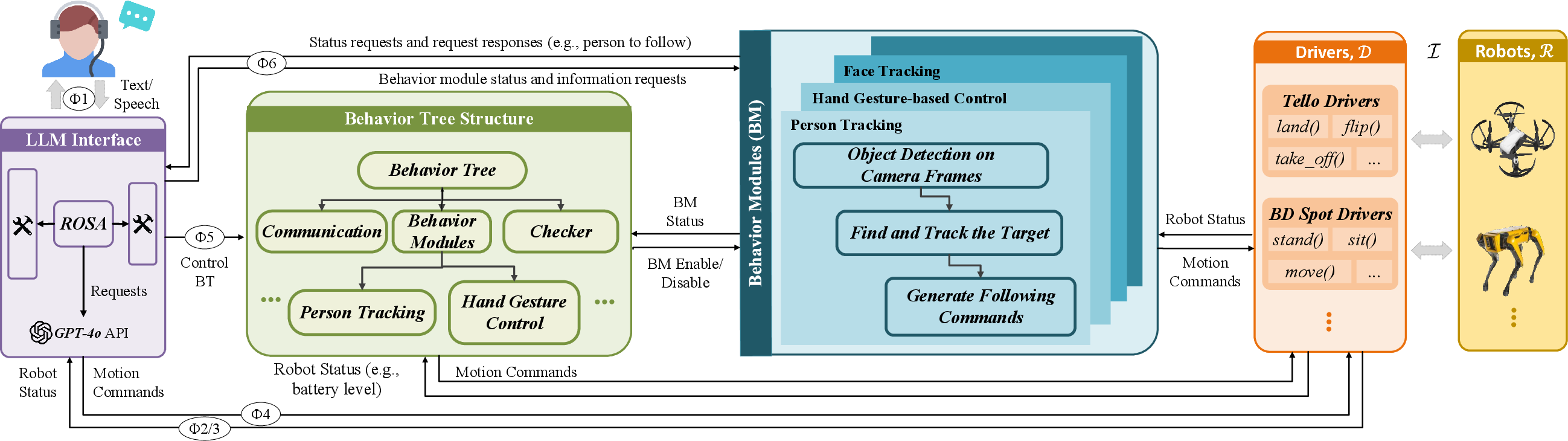

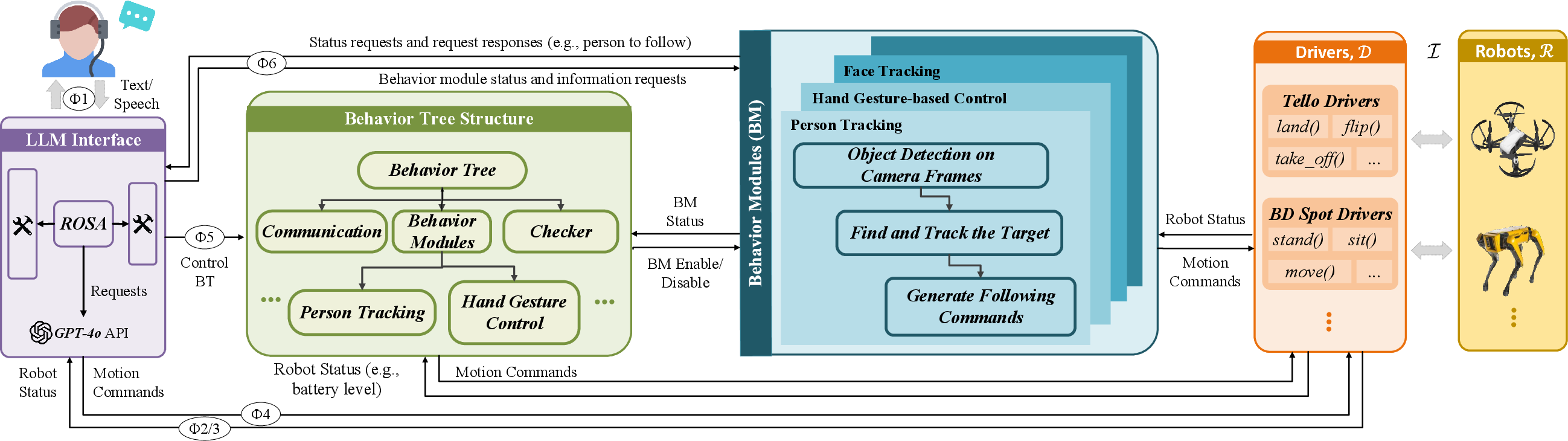

The proposed framework is built on ROS2 and extends the ROSA architecture to support BT-based control. The architecture consists of several key modules:

- LLM Interface: Utilizes GPT-4o for one-shot prompt-based interpretation of user commands, mapping them to structured actions or behavior modules.

- Behavior Tree Core: Implements hierarchical, tick-based execution logic, enabling real-time, reactive, and interpretable control flow.

- Behavior Modules (Plugins): Encapsulate domain-specific capabilities (e.g., person tracking, hand gesture recognition) as ROS2 nodes, triggered by the BT.

- Drivers: Abstract hardware-specific operations, supporting both aerial (DJI Tello) and legged (Boston Dynamics Spot) robots.
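The split between behavior modules and drivers can be illustrated with a small Python sketch: a plugin is written once against an abstract driver interface and then runs unchanged on either platform. All names here (`Driver`, `TelloDriver`, `SpotDriver`, `PluginRegistry`, `move`, `step_forward`) are hypothetical and are not the framework's API; the real plugins run as ROS2 nodes, which this sketch replaces with plain function calls, and the driver stubs return strings instead of calling any robot SDK.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field


class Driver(ABC):
    """Hypothetical hardware abstraction: every platform exposes the same motion call."""

    @abstractmethod
    def move(self, forward_m: float, yaw_deg: float) -> str:
        ...


class TelloDriver(Driver):
    # Stub only: a real driver would issue commands to the drone.
    def move(self, forward_m, yaw_deg):
        return f"tello: forward {forward_m:.1f} m, yaw {yaw_deg:.0f} deg"


class SpotDriver(Driver):
    # Stub only: a real driver would issue commands to the legged robot.
    def move(self, forward_m, yaw_deg):
        return f"spot: walk {forward_m:.1f} m, turn {yaw_deg:.0f} deg"


@dataclass
class PluginRegistry:
    """Maps behavior-module names to callables that only see the Driver interface."""
    driver: Driver
    plugins: dict = field(default_factory=dict)

    def register(self, name, fn):
        self.plugins[name] = fn

    def trigger(self, name, **kwargs):
        if name not in self.plugins:
            raise KeyError(f"no plugin named {name!r}")
        return self.plugins[name](self.driver, **kwargs)


def step_forward(driver: Driver, distance_m: float = 1.0) -> str:
    # Example behavior module: platform-independent, delegates motion to the driver.
    return driver.move(distance_m, 0.0)


# The same plugin runs unchanged on both platforms.
for drv in (TelloDriver(), SpotDriver()):
    registry = PluginRegistry(drv)
    registry.register("step_forward", step_forward)
    print(registry.trigger("step_forward", distance_m=0.5))
```

Adding a new robot in this sketch means writing one more `Driver` subclass; no plugin code changes, which mirrors the independence of plugins and drivers described above.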

Figure 2: The outline of the proposed system architecture. An LLM interprets natural language instructions from the human, interfacing with a behavior tree to coordinate modular plugins that control the robot’s actions.

Figure 3: A detailed overview of the proposed system architecture, depicting the integration of LLM-based language understanding, behavior tree core, plugin, and driver modules. Arrow labels indicate the interaction category (Φ1 to Φ6) corresponding to evaluation scenarios.

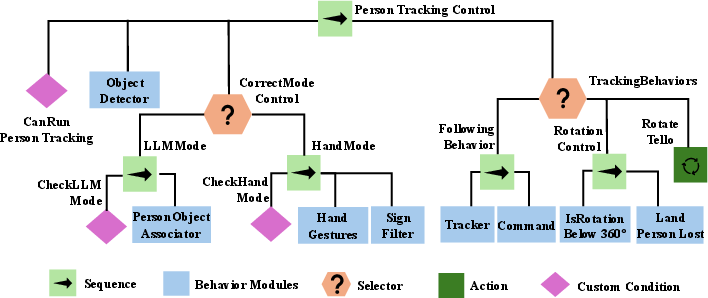

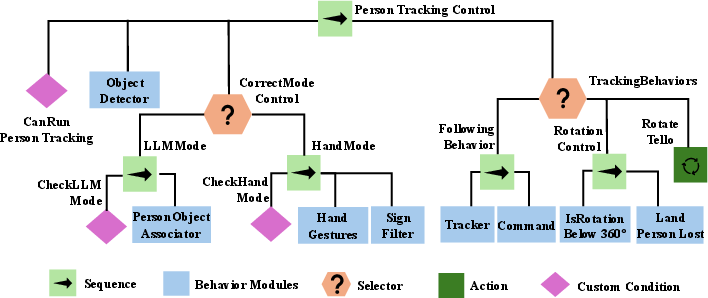

Behavior Tree Design

The BT is central to the system, providing hierarchical task decomposition, fallback mechanisms, and conditional execution. Each node in the BT corresponds to a behavior module or a control logic element, and the tree is traversed in discrete ticks, updating node statuses and adapting to dynamic conditions.

Figure 4: Structure of the sample behavior tree used for system evaluation, showing the hierarchical arrangement of execution nodes that manage robot behaviors.
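The tick-based semantics described above can be made concrete with a minimal behavior tree in plain Python. This is a generic sketch, not the paper's implementation (which runs inside ROS2): the node classes, the three status values, and the person-following example tree are illustrative assumptions chosen to show a fallback mechanism and conditional execution.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    RUNNING = "running"


class Action:
    """Leaf node: calls fn(blackboard), which returns a Status."""

    def __init__(self, name, fn):
        self.name, self.fn = name, fn

    def tick(self, bb):
        return self.fn(bb)


class Condition(Action):
    """Leaf node: maps a boolean check to SUCCESS or FAILURE."""

    def tick(self, bb):
        return Status.SUCCESS if self.fn(bb) else Status.FAILURE


class Sequence:
    """Succeeds only if every child succeeds; returns early on FAILURE or RUNNING."""

    def __init__(self, *children):
        self.children = children

    def tick(self, bb):
        for child in self.children:
            status = child.tick(bb)
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS


class Fallback:
    """Tries children in order; returns early on SUCCESS or RUNNING."""

    def __init__(self, *children):
        self.children = children

    def tick(self, bb):
        for child in self.children:
            status = child.tick(bb)
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE


def follow_person(bb):
    bb["log"].append("follow")
    return Status.RUNNING  # continuous behavior: never completes by itself


def search_for_person(bb):
    bb["log"].append("search")
    return Status.RUNNING


# Hypothetical tree: follow the person if visible, otherwise search.
root = Fallback(
    Sequence(
        Condition("person_visible", lambda bb: bb["person_visible"]),
        Action("follow_person", follow_person),
    ),
    Action("search_for_person", search_for_person),
)

blackboard = {"person_visible": False, "log": []}
root.tick(blackboard)                # condition fails, so the fallback runs the search
blackboard["person_visible"] = True
root.tick(blackboard)                # the same tree now follows the person
print(blackboard["log"])
```

Because both composites restart from their first child on every tick, the condition is re-checked continuously, so the tree switches from searching to following as soon as the world changes, without any replanning step.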

Multimodal and Autonomous Behavior Selection

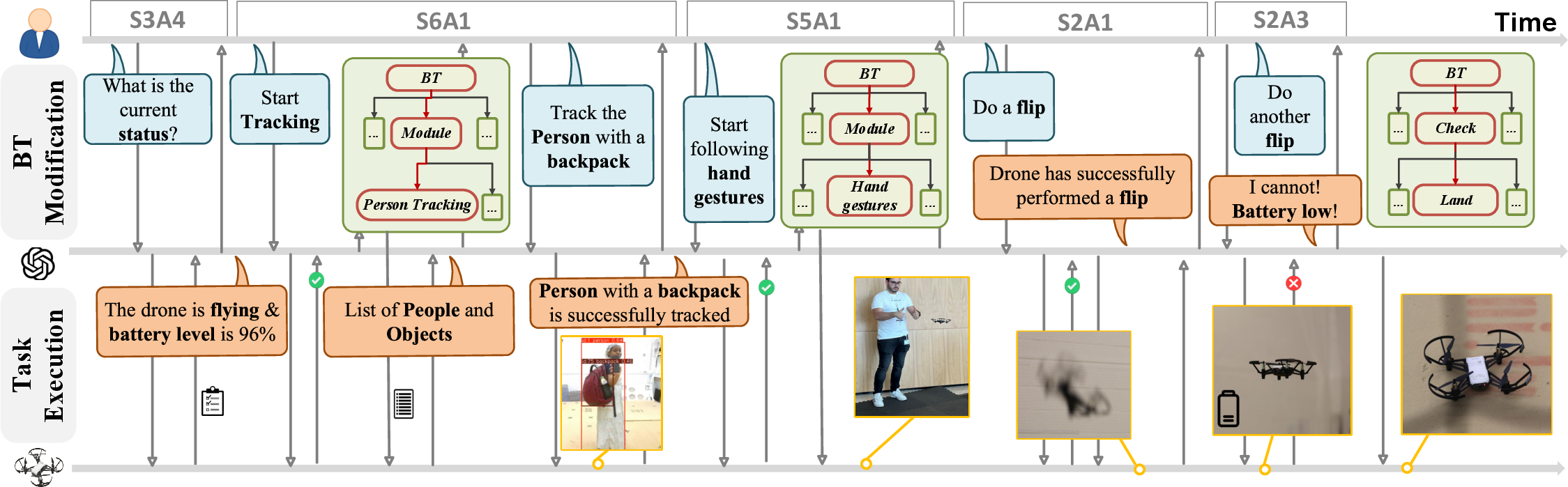

The LLM interface accepts text input and, at the architectural level, voice input, with a unified pipeline for semantic parsing, context-aware behavior selection, and failure reasoning. The system autonomously selects and triggers behavior modules based on interpreted intent, robot state, and environmental context, decoupling high-level cognition from low-level execution.
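One way to picture this pipeline is a one-shot prompt that includes the behavior catalog and robot state, followed by validation of the model's structured reply before anything is dispatched. The sketch below assumes a JSON reply of the form `{"behavior": ..., "params": {...}}`; the catalog, prompt wording, and function names are hypothetical. In the paper the failure explanations are generated by the LLM, whereas this sketch substitutes fixed strings, and the GPT-4o call is replaced by canned replies so the code runs offline.

```python
import json

# Hypothetical behavior catalog: name -> required parameters and accepted types.
BEHAVIORS = {
    "takeoff": {},
    "move": {"direction": str, "distance_m": (int, float)},
    "follow_person": {},
}

# A single worked example makes this a one-shot prompt.
ONE_SHOT_EXAMPLE = (
    'User: "go forward two meters"\n'
    'Assistant: {"behavior": "move", '
    '"params": {"direction": "forward", "distance_m": 2.0}}'
)


def build_prompt(command: str, robot_state: dict) -> str:
    """Combine the behavior catalog, robot state, one example, and the new command."""
    return (
        "Map the user's command to exactly one behavior, answering in JSON.\n"
        f"Available behaviors: {sorted(BEHAVIORS)}\n"
        f"Robot state: {json.dumps(robot_state)}\n"
        f"{ONE_SHOT_EXAMPLE}\n"
        f'User: "{command}"\nAssistant:'
    )


def select_behavior(llm_reply: str):
    """Validate a JSON reply; return (name, params) or (None, explanation)."""
    try:
        msg = json.loads(llm_reply)
    except json.JSONDecodeError:
        return None, "The model reply was not valid JSON."
    if not isinstance(msg, dict):
        return None, "The model reply was not a JSON object."
    name = msg.get("behavior")
    if not isinstance(name, str) or name not in BEHAVIORS:
        return None, f"Unsupported action: {name!r} is not an available behavior."
    params = msg.get("params", {})
    if not isinstance(params, dict):
        return None, f"Parameters for {name!r} must be a JSON object."
    for key, expected in BEHAVIORS[name].items():
        if not isinstance(params.get(key), expected):
            return None, f"Missing or invalid parameter {key!r} for {name!r}."
    return name, params


prompt = build_prompt("move left one meter", {"airborne": True, "battery_pct": 80})
# In the real system the prompt would be sent to GPT-4o; canned replies stand in here.
print(select_behavior('{"behavior": "move", "params": {"direction": "left", "distance_m": 1}}'))
print(select_behavior('{"behavior": "backflip", "params": {}}'))
```

Validating the reply against the catalog before dispatch keeps unsupported or under-specified commands from ever reaching the behavior tree; they are turned into user feedback instead.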

Implementation and Evaluation

Experimental Setup

- Platforms: DJI Tello drone and Boston Dynamics Spot robot, both equipped with vision sensors.

- Software: Python, ROS2 Humble, OpenAI GPT-4o API, YOLO11 for real-time object detection.

- Scenarios: Diverse HRI tasks, including unsupported actions, context-aware responses, system inquiries, motion commands, plugin switching, and vision-based interactions.

Evaluation Metrics

- Success Rate: Binary (success/failure) outcome recorded separately for the cognition, dispatch, and execution stages, averaged over 10 runs per scenario.

- Response Latency: Measured for LLM cognition, dispatch, and execution stages.
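The success-rate metric reduces to simple bookkeeping: record a boolean per stage per run, average over the runs of each scenario, then average over scenarios. The sketch below assumes a macro-average (every scenario weighted equally); the paper's exact aggregation, including how stages are combined into its end-to-end figure, is not reproduced here, so stages are reported separately. The run data is synthetic and is not taken from the paper.

```python
from dataclasses import dataclass
from statistics import mean

STAGES = ("cognition", "dispatch", "execution")


@dataclass
class Run:
    scenario: str
    outcome: dict  # stage name -> bool, the binary success of that stage in this run


def stage_success_rates(runs):
    """Per scenario and stage: fraction of runs in which that stage succeeded."""
    grouped = {}
    for run in runs:
        grouped.setdefault(run.scenario, []).append(run)
    return {
        scenario: {s: mean(1.0 if r.outcome[s] else 0.0 for r in rs) for s in STAGES}
        for scenario, rs in grouped.items()
    }


def average_over_scenarios(rates):
    """Macro-average (an assumption): every scenario counts equally in the overall figure."""
    return {s: mean(per_stage[s] for per_stage in rates.values()) for s in STAGES}


# Synthetic data (not the paper's results): 10 runs of one scenario in which
# run 3 fails at dispatch and therefore also at execution.
runs = [
    Run("motion_command", {"cognition": True, "dispatch": i != 3, "execution": i != 3})
    for i in range(10)
]
rates = stage_success_rates(runs)
print(rates["motion_command"])
print(average_over_scenarios(rates))
```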

Quantitative Results

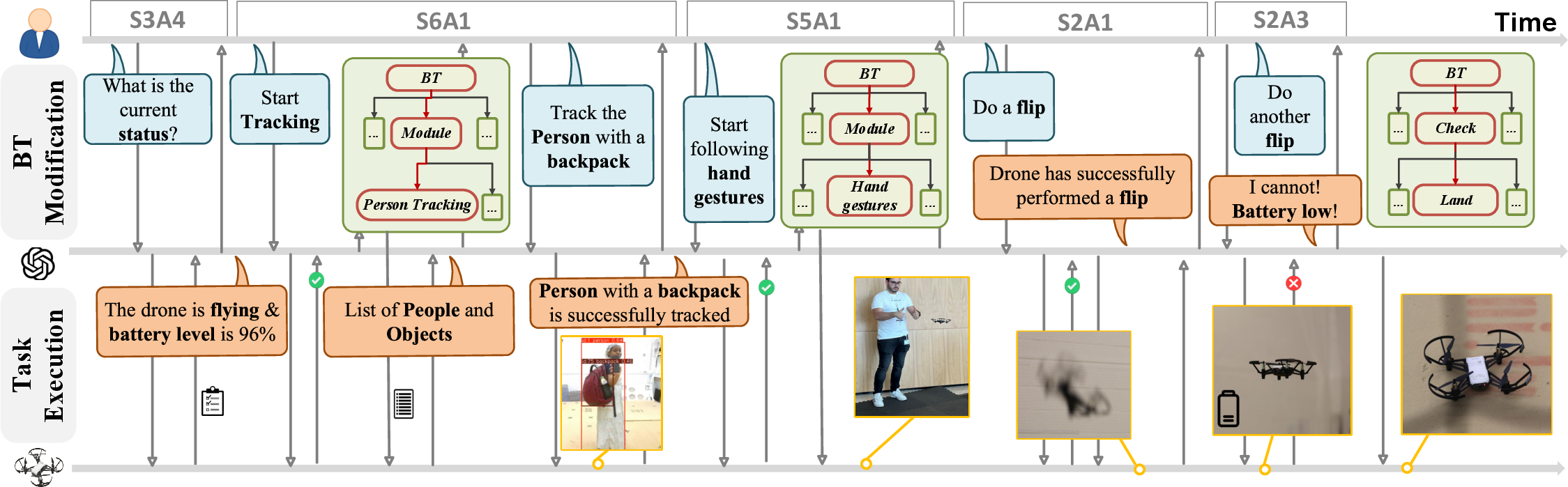

The system achieved an average cognition accuracy of 0.93, dispatch accuracy of 0.92, execution accuracy of 0.95, and an overall end-to-end success rate of 0.94 across all scenarios. Eleven out of twenty scenarios achieved perfect success rates, with the remainder above 0.80 except for cases involving ambiguous or under-specified commands.

- LLM Cognition Accuracy: Near-perfect except for motion commands requiring precise parameter extraction.

- Dispatch Accuracy: High, with minor failures due to incomplete routing or ambiguous context.

- Execution Success: Strongly correlated with system state awareness and context; lower for complex motion or plugin switching on legged robots.

- LLM Cognition Latency: Dominant contributor, typically 2–8 seconds, depending on prompt complexity and LLM response length.

- Dispatch Latency: Negligible in most cases (<1 ms), except for scenarios involving extended perception or plugin activation.

- Execution Time: Platform-dependent; unbounded for continuous tasks (e.g., person following) and longer for legged robots due to slower locomotion.
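Per-stage latency can be measured by timing each stage separately with a monotonic clock. Since continuous behaviors never finish on their own, the sketch below gives execution a time budget and reports `None` when the budget runs out; that budget, the polling interval, and the stub stage functions are assumptions for illustration, not details from the paper.

```python
import time
from typing import Callable


def timed(fn: Callable, *args):
    """Run fn(*args) and return (result, elapsed seconds) using a monotonic clock."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


def run_pipeline(command, cognize, dispatch, execute, exec_budget_s=30.0):
    """Time cognition, dispatch, and execution separately.

    execute(handle) is polled until it returns True (behavior finished) or the
    budget expires; continuous behaviors such as person following never finish.
    """
    intent, t_cog = timed(cognize, command)
    handle, t_disp = timed(dispatch, intent)
    start = time.perf_counter()
    finished = False
    while time.perf_counter() - start < exec_budget_s:
        if execute(handle):
            finished = True
            break
        time.sleep(0.01)
    t_exec = time.perf_counter() - start
    return {
        "cognition_s": t_cog,
        "dispatch_s": t_disp,
        "execution_s": t_exec if finished else None,  # None: unbounded within budget
    }


# Stub stages: a one-shot task and a continuous task that never reports completion.
oneshot = run_pipeline("take off", lambda c: {"behavior": "takeoff"},
                       lambda intent: intent["behavior"], lambda h: True, exec_budget_s=1.0)
continuous = run_pipeline("follow me", lambda c: {"behavior": "follow_person"},
                          lambda intent: intent["behavior"], lambda h: False, exec_budget_s=0.05)
print(oneshot)
print(continuous["execution_s"])  # None: budget exhausted
```

With a real LLM call in place of the `cognize` stub, the cognition entry would dominate, consistent with the 2–8 second range reported above.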

Qualitative Analysis

Figure 5: High-level state-flow diagram of the proposed end-to-end HRI system, highlighting command interpretation and execution states.

The system demonstrates robust handling of natural language instructions, context-aware decision-making, and dynamic switching between control modalities. Failure reasoning is integrated, providing user feedback when commands are infeasible or ambiguous. The BT structure ensures interpretable, traceable execution, and the modular design allows for straightforward extension with new behaviors or input modalities.
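The state flow in Figure 5 can be approximated as a small transition table. The states and event names below are hypothetical and only mirror the behavior described in the text (interpretation, execution, and feedback for infeasible or ambiguous commands); in particular, letting a new command preempt a running behavior is an assumption, not something the paper specifies.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    INTERPRETING = auto()  # LLM cognition
    DISPATCHING = auto()   # routing the intent to a behavior module
    EXECUTING = auto()     # behavior tree ticking the selected behavior
    FEEDBACK = auto()      # explaining an infeasible or ambiguous command


# (current state, event) -> next state; all event names are hypothetical.
TRANSITIONS = {
    (State.IDLE, "command"): State.INTERPRETING,
    (State.INTERPRETING, "understood"): State.DISPATCHING,
    (State.INTERPRETING, "infeasible"): State.FEEDBACK,
    (State.INTERPRETING, "ambiguous"): State.FEEDBACK,
    (State.DISPATCHING, "dispatched"): State.EXECUTING,
    (State.DISPATCHING, "no_handler"): State.FEEDBACK,
    (State.EXECUTING, "done"): State.IDLE,
    (State.EXECUTING, "failed"): State.FEEDBACK,
    (State.EXECUTING, "command"): State.INTERPRETING,  # assumed: new command preempts
    (State.FEEDBACK, "reported"): State.IDLE,
}


def step(state, event):
    """Return the next state; events with no matching transition leave it unchanged."""
    return TRANSITIONS.get((state, event), state)


def trace(events, state=State.IDLE):
    """Replay a sequence of events and return the names of the visited states."""
    path = [state]
    for event in events:
        state = step(state, event)
        path.append(state)
    return [s.name for s in path]


print(trace(["command", "understood", "dispatched", "done"]))
print(trace(["command", "ambiguous", "reported"]))
```

Keeping the transitions in one table makes every reachable state explicit, which is the same traceability argument the text makes for the behavior tree.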

Discussion

The framework addresses several limitations of prior LLM-robot integration approaches:

- Interpretability: BTs provide transparent, hierarchical control logic, facilitating debugging and user trust.

- Modularity and Scalability: Plugins and drivers can be added or modified independently, supporting diverse robots and tasks.

- Real-Time Adaptation: Tick-based BT execution and autonomous behavior selection enable responsive adaptation to changing environments and user intent.

- Failure Reasoning: LLM-generated explanations improve transparency and user experience in failure cases.

However, the system's performance is bounded by the LLM's semantic understanding and the reliability of perception modules. Ambiguous or under-specified commands can lead to suboptimal behavior selection or require clarification. The reliance on external APIs (e.g., OpenAI) introduces latency and potential privacy concerns. The current implementation is limited to single-robot scenarios and primarily text-based HRI, though the architecture supports future multimodal and multi-robot extensions.

Implications and Future Directions

This work demonstrates the feasibility of combining LLMs with structured BTs for interpretable, robust, and extensible robot control in real-world HRI. The open-source release facilitates reproducibility and further research. Future developments should focus on:

- Benchmarking with Diverse LLMs: Evaluating the impact of different LLM backends and prompt engineering strategies on system performance.

- Multi-Robot Coordination: Extending the BT and plugin architecture to support collaborative, distributed task execution.

- Enhanced Multimodality: Integrating additional input modalities (e.g., vision, speech, gestures) and perception-driven behaviors.

- Dynamic BT Adaptation: Enabling real-time BT modification and learning from user feedback or environmental changes.

Conclusion

The integration of LLMs with structured behavior trees provides a practical and interpretable approach to natural language-driven robot control. The proposed framework achieves high success rates in both semantic interpretation and task execution across diverse HRI scenarios, with strong modularity and extensibility. The work lays a foundation for future research in scalable, transparent, and adaptive robot control systems leveraging advances in LLMs and structured decision-making architectures.