- The paper introduces an LLM-based adaptive compensator that uses prompt engineering to design controllers aligning unknown system dynamics with a reference model.

- The proposed methodology outperforms traditional adaptive schemes with superior tracking performance, reduced overshoot, and rapid convergence under various disturbances.

- The framework eliminates the need for explicit system modeling by leveraging natural language prompts, enabling seamless integration with legacy and multi-DoF robotic systems.

LLMs-Guided Adaptive Compensator: A Framework for Adaptive Control with LLMs

Introduction

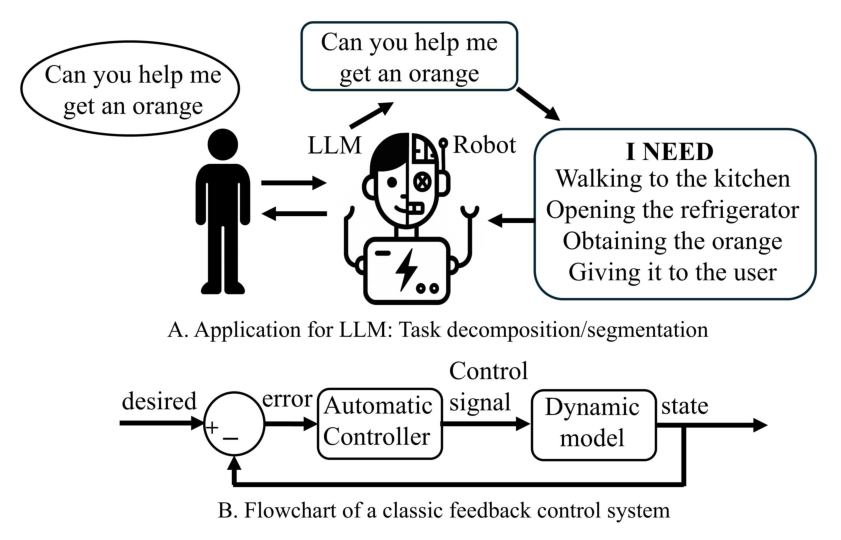

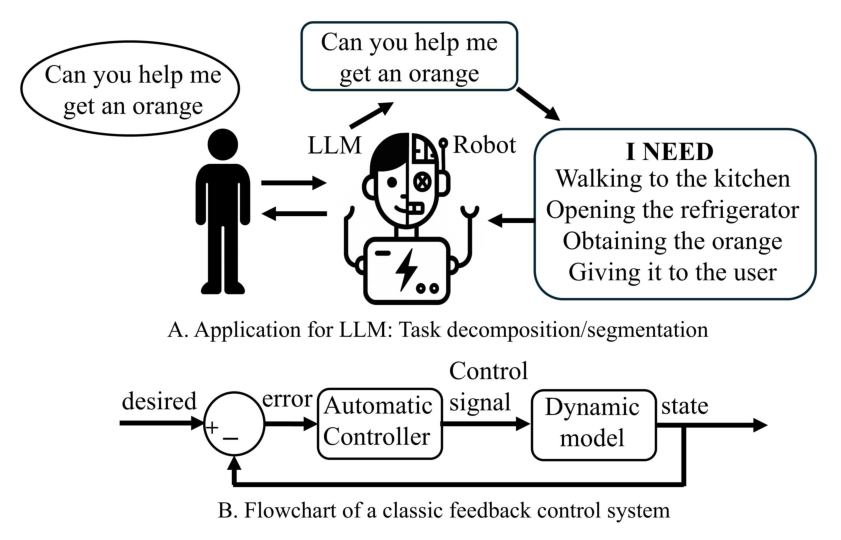

The integration of large language models (LLMs) into robotics has predominantly focused on high-level reasoning, task decomposition, and multimodal perception. However, their application to low-level feedback control, particularly adaptive control, remains nascent. This paper introduces a novel LLMs-guided adaptive compensator framework, which leverages LLMs to enhance the adaptivity of existing feedback controllers by synthesizing compensators that align the response of unknown systems with that of a reference model. The approach is inspired by Model Reference Adaptive Control (MRAC) but departs from traditional methods by using the LLMs' reasoning capabilities to design compensators through prompt engineering, rather than relying on explicit system modeling or gain tuning.

Figure 1: Trends in LLM-based robotics and feedback control, highlighting the shift from high-level reasoning to low-level adaptive control.

Methodology

LLMs-Guided Adaptive Compensator Framework

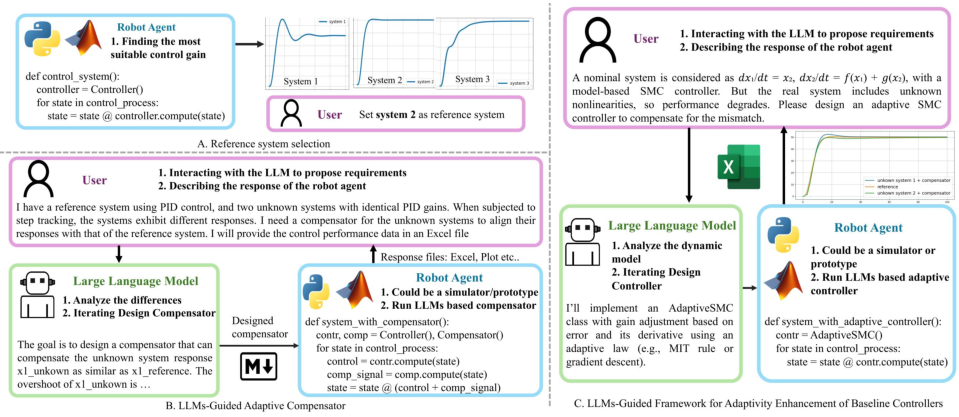

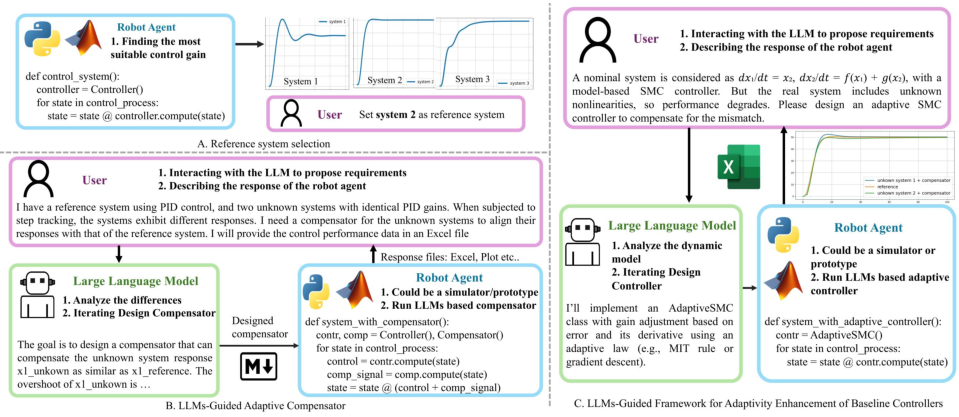

The proposed framework consists of three main stages: reference system selection, compensator design via LLM prompting, and iterative closed-loop refinement. The user first defines a reference system with desirable dynamic properties, typically through trial-and-error or established design heuristics. The LLM is then prompted with both the reference and unknown system responses, along with qualitative descriptors of performance discrepancies. The LLM outputs a compensator, $\phi_c$, which augments the existing controller $u_0(t)$:

$$u(t) = u_0(t) + \phi_c\big(u_{\text{base}},\, y_{\text{desired}},\, y(t),\, y_r(t),\, k\big)$$
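To make the augmentation concrete, here is a minimal simulation sketch under stated assumptions: a hypothetical first-order "unknown" plant, a first-order reference model, an existing proportional controller playing the role of $u_0$, and a hypothetical compensator `phi_c` that feeds back the reference-model error $y_r - y$. None of these values or the compensator form come from the paper, and $k$ is assumed here to be the sample index.

```python
def phi_c(u_base, y_desired, y, y_r, k, k_c=5.0):
    """Hypothetical compensator: proportional feedback on the reference-model error.

    Only y and y_r are used; u_base, y_desired and k are accepted so the
    argument list mirrors phi_c in the text (k is assumed to be the sample index).
    """
    return k_c * (y_r - y)


def simulate(use_compensator, steps=2000, dt=0.005):
    """Euler simulation of u = u0 + phi_c; returns the integrated squared error y - y_r."""
    a, b = 0.5, 2.0        # hypothetical "unknown" plant: dy/dt = -a*y + b*u
    a_r, b_r = 4.0, 4.0    # hypothetical reference model: dy_r/dt = -a_r*y_r + b_r*r
    kp, r = 1.0, 1.0       # existing proportional controller and step setpoint
    y = y_r = 0.0
    ise = 0.0
    for k in range(steps):
        u0 = kp * (r - y)  # existing controller output, left unchanged
        u = u0 + (phi_c(u0, r, y, y_r, k) if use_compensator else 0.0)
        y += dt * (-a * y + b * u)
        y_r += dt * (-a_r * y_r + b_r * r)
        ise += dt * (y - y_r) ** 2
    return ise


print(simulate(False), simulate(True))
```

Comparing `simulate(True)` with `simulate(False)` shows the integrated squared deviation from the reference shrinking once the compensator is added, while `u0` itself is never modified.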

This process is iteratively refined based on observed system responses, forming a closed-loop human-in-the-loop design cycle.
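Each iteration of this loop starts from a description of how the two responses differ. A minimal sketch, assuming both step responses are sampled on a common time grid and the step target is positive: `step_metrics` and `build_prompt` are hypothetical helpers (not from the paper) that turn overshoot and settling-time differences into qualitative text for the prompt; the LLM call itself is omitted.

```python
def step_metrics(t, y, target, band=0.02):
    """Percent overshoot and settling time into a +/- band*target tube (assumes target > 0)."""
    overshoot = max(0.0, (max(y) - target) / target * 100.0)
    tol = band * target
    settled_at = None
    for ti, yi in zip(t, y):
        if abs(yi - target) > tol:
            settled_at = None      # outside the tube: not settled yet
        elif settled_at is None:
            settled_at = ti        # first sample of the current stay inside the tube
    return overshoot, (settled_at if settled_at is not None else float("inf"))


def build_prompt(t, y_ref, y_unknown, target):
    """Compose a hypothetical prompt contrasting the two responses in plain language."""
    os_r, ts_r = step_metrics(t, y_ref, target)
    os_u, ts_u = step_metrics(t, y_unknown, target)
    notes = []
    if os_u > os_r + 1.0:
        notes.append("the unknown system overshoots more than the reference")
    if ts_u > ts_r:
        notes.append("the unknown system settles more slowly than the reference")
    if not notes:
        notes.append("the unknown system already matches the reference closely")
    return (
        f"Reference response: overshoot {os_r:.1f}%, settling time {ts_r:.2f} s.\n"
        f"Unknown-system response: overshoot {os_u:.1f}%, settling time {ts_u:.2f} s.\n"
        "Observed discrepancies: " + "; ".join(notes) + ".\n"
        "Propose a compensator phi_c(u_base, y_desired, y, y_r, k) to add to the "
        "existing controller output u0 so that the unknown system tracks the reference."
    )


t = [0, 1, 2, 3, 4]
print(build_prompt(t, [0, 0.8, 1.0, 1.0, 1.0], [0, 1.2, 0.9, 1.0, 1.0], 1.0))
```

After the LLM returns a compensator and the closed loop is re-run, the new response is fed back through the same helpers, closing the prompt-compensate-evaluate cycle.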

Figure 2: Flowchart of LLMs-Guided adaptive compensator and LLMs-Guided adaptive controller, illustrating the iterative prompt-compensate-evaluate loop.

Comparison: LLMs-Guided Adaptive Controller

A baseline is established by prompting the LLM to design an adaptive controller from scratch, given explicit system dynamics. This approach, while theoretically appealing, is shown to be less practical due to increased reasoning complexity and the need for detailed system models.

Theoretical Analysis: Lyapunov-Based Generalization

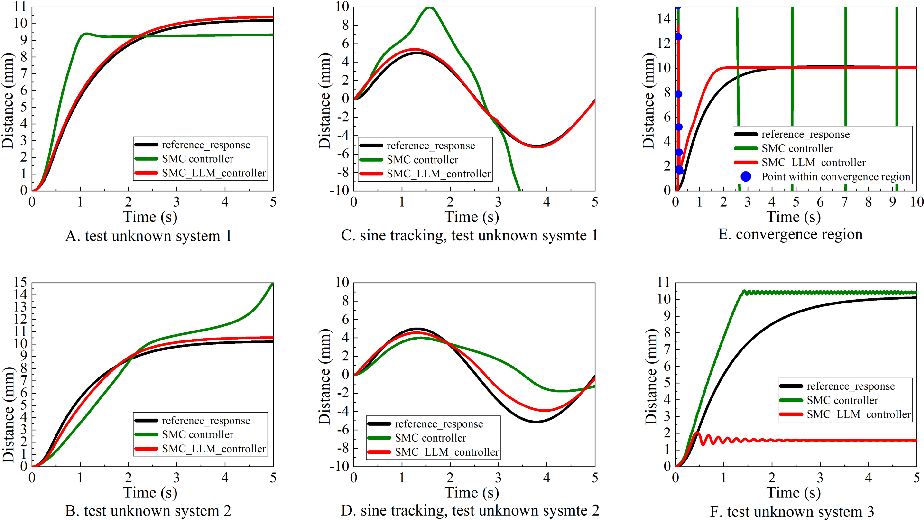

A Lyapunov-based analysis is conducted to assess the generalizability and stability of the LLMs-guided adaptive compensator. The compensator is synthesized in a zero-shot manner and applied to a family of nonlinear, time-varying systems whose dynamics differ from the reference. The analysis demonstrates that, under certain structural assumptions (namely, that the systems have error dynamics similar to those of the reference), the compensator ensures regional asymptotic stability. The convergence region is determined by the dynamic discrepancy between the unknown and reference systems.
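For intuition, a minimal scalar illustration (an assumed toy case, not the paper's derivation): suppose the compensated tracking error $e = y - y_r$ obeys $\dot e = -\lambda e + \delta(e,t)$ with $\lambda > 0$, where $\delta$ lumps the residual mismatch between the unknown and reference dynamics and is assumed to satisfy $|\delta(e,t)| \le \kappa e^2$ for some $\kappa > 0$. With $V = \tfrac12 e^2$:

$$
\dot V = e\,\dot e = -\lambda e^2 + e\,\delta(e,t) \le -\lambda e^2 + \kappa |e|^3 = -e^2\left(\lambda - \kappa |e|\right)
$$

so $\dot V < 0$ whenever $0 < |e| < \lambda/\kappa$. Since $|e|$ can only decrease inside this interval, trajectories starting there remain there and converge to $e = 0$. The stability is regional, and the guaranteed region $|e| < \lambda/\kappa$ shrinks as the discrepancy bound $\kappa$ grows, mirroring the dependence on dynamic discrepancy described in the analysis.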

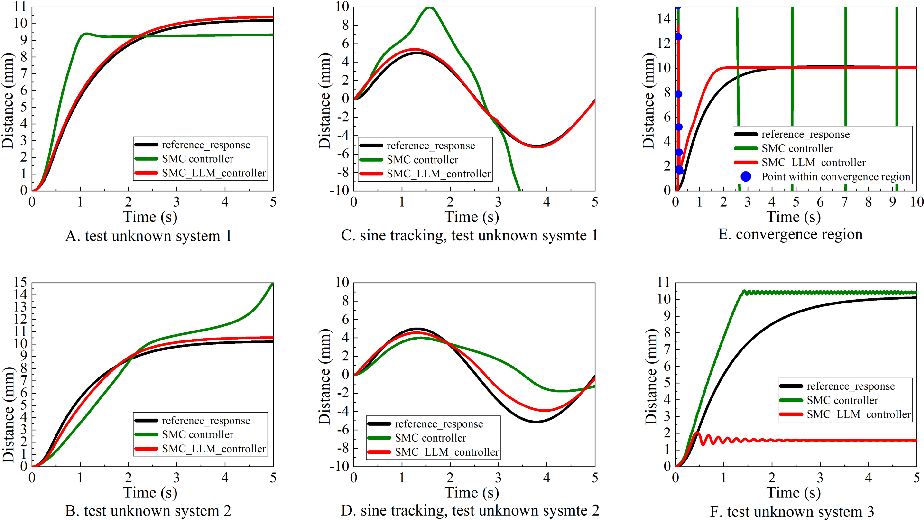

Figure 3: LLMs-guided adaptive compensator validation across multiple nonlinear systems, including disturbance rejection and tracking tasks.

Empirical results confirm that the compensator is effective for systems conforming to the assumed structure, but fails when the structural assumptions are violated, highlighting the importance of prompt formulation and system similarity.
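The structural caveat can be reproduced in a toy experiment. Assuming a hypothetical first-order plant $\dot y = -a y + b u$, an existing P controller, and one fixed compensator on the reference-model error (all values illustrative, not from the paper), the compensator keeps the deviation small across plants with $b > 0$, while a plant with reversed input direction ($b < 0$), which violates the assumed structure, diverges under the same compensator.

```python
def final_tracking_error(a, b, k_c=5.0, kp=1.0, steps=2000, dt=0.005):
    """Apply one fixed compensator to dy/dt = -a*y + b*u; return |y - y_r| at the end."""
    a_r, b_r, r = 4.0, 4.0, 1.0            # hypothetical reference model and setpoint
    y = y_r = 0.0
    for _ in range(steps):
        u = kp * (r - y) + k_c * (y_r - y)  # existing P controller + fixed compensator
        y += dt * (-a * y + b * u)
        y_r += dt * (-a_r * y_r + b_r * r)
        if abs(y) > 1e6:                    # treat blow-up as failure
            return float("inf")
    return abs(y - y_r)


conforming = [(0.5, 2.0), (1.0, 3.0), (0.2, 1.5)]   # same input direction as assumed
violating = (0.5, -2.0)                             # reversed input direction
print([final_tracking_error(a, b) for a, b in conforming])
print(final_tracking_error(*violating))
```

The conforming plants end within a few percent of the reference, whereas the sign-reversed plant turns the same compensation into positive feedback and diverges.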

Experimental Evaluation

Two representative robotic platforms are used for evaluation:

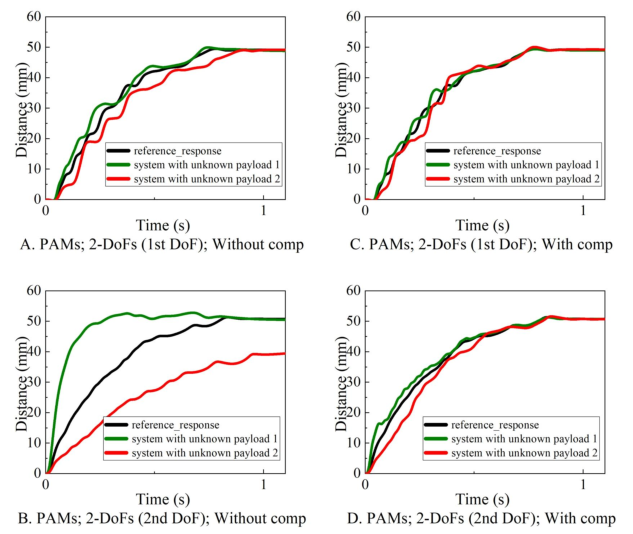

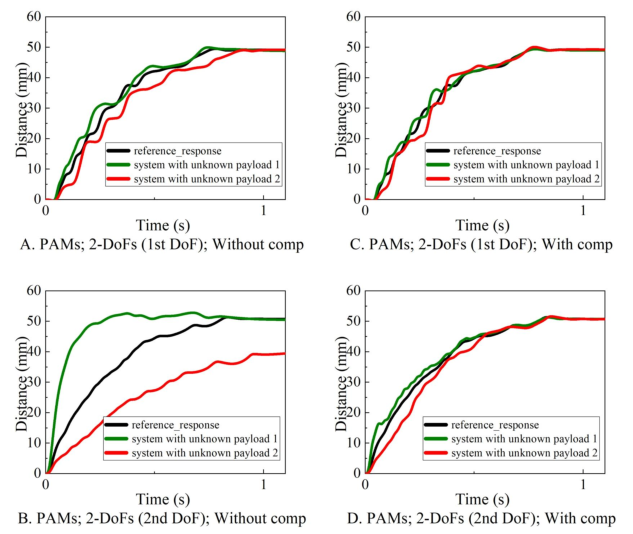

- Type 1: 2-DoF McKibben PAMs-driven robot arm, characterized by strong nonlinearity, hysteresis, and dynamic coupling.

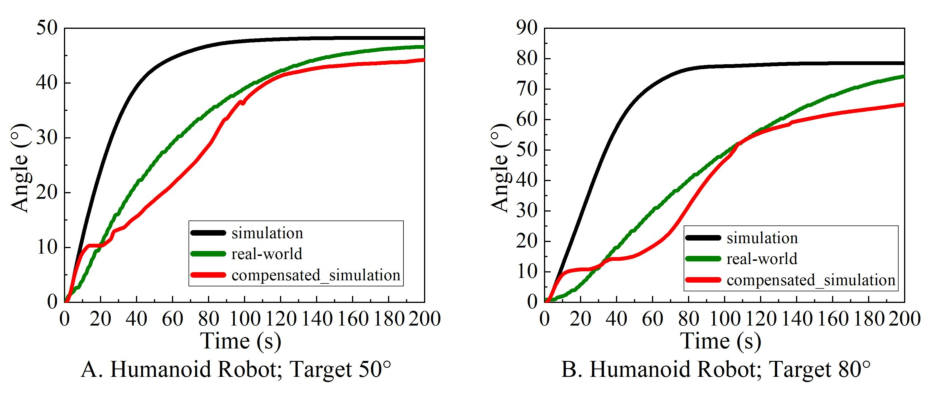

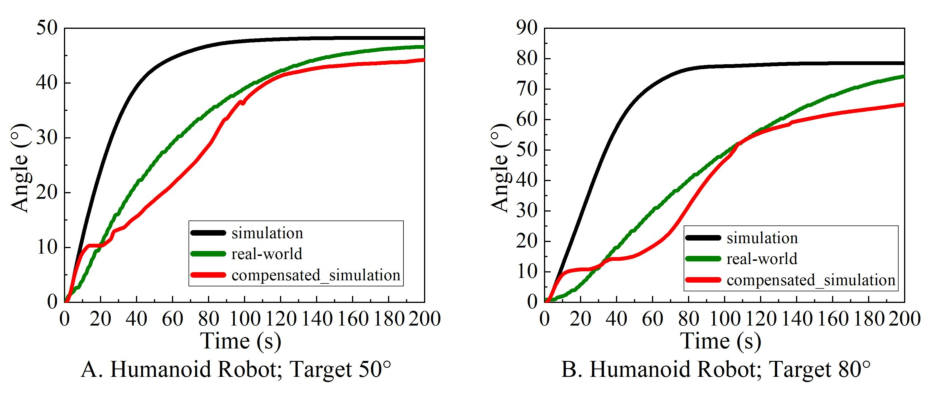

- Type 2: Commercial humanoid robot (Unitree H1_2), used to address sim-to-real transfer challenges.

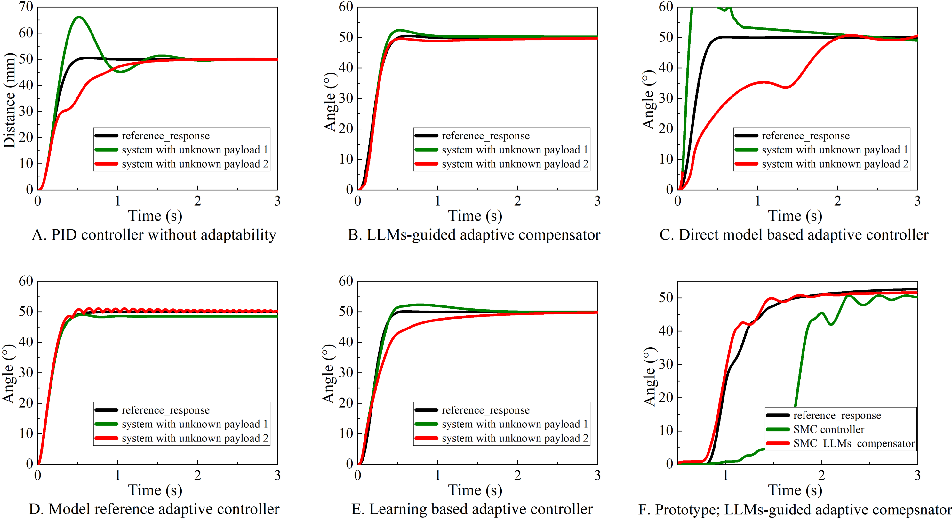

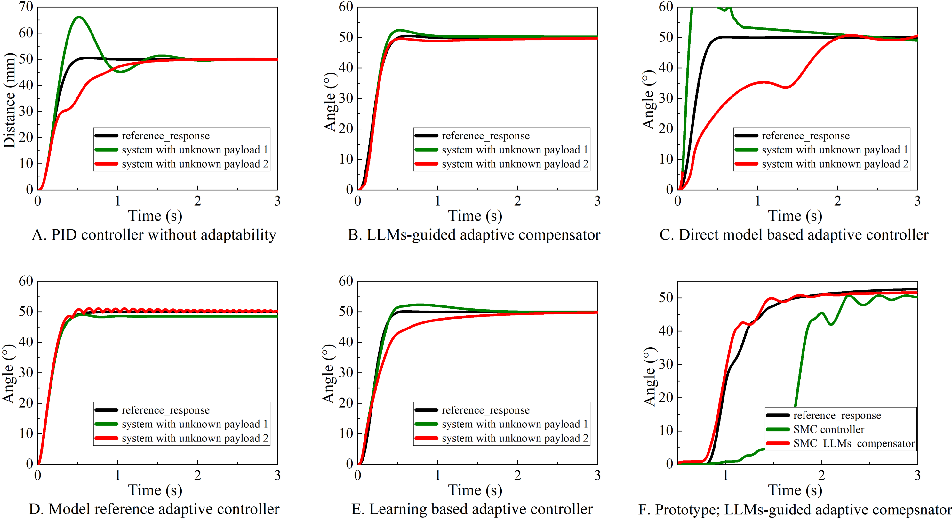

Controllers compared include direct model-based adaptive controllers, MRAC, learning-based adaptive controllers (GAN-based PID), LLMs-guided adaptive controller, and the proposed LLMs-guided adaptive compensator.

Results

Figure 4: Response under different adaptive controllers, demonstrating the superior consistency and robustness of the LLMs-guided adaptive compensator.

The LLMs-guided adaptive compensator consistently outperforms traditional adaptive controllers and learning-based methods in both simulation and real-world experiments. Key findings include:

- Superior tracking performance under varying payloads and disturbances, with minimal overshoot and rapid convergence.

- Reduced design complexity: No explicit modeling, adaptive law derivation, or large-scale data training required.

- High usability: Compensator design is achieved via natural language prompts and system responses, eliminating manual parameter tuning.

Figure 5: Response of the 2-DoF PAMs-driven robot arm, showing effective compensation and decoupling under payload variation.

Figure 6: Response of the humanoid robot, demonstrating sim-to-real compensation via indirect target adjustment.

The compensator also demonstrates effective decoupling in multi-DoF systems and adaptability in closed-architecture platforms where direct control signal modification is infeasible.
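Indirect target adjustment can be sketched as an outer loop that modifies only the commanded target. In this minimal sketch, the black-box inner loop is assumed to behave like $\dot y = \alpha(g c - y)$ with an unknown gain $g \ne 1$ (standing in for, e.g., a sim-to-real mismatch), and a hypothetical integral update of the offset $\phi$ on the reference-model error stands in for an LLM-designed rule; neither comes from the paper.

```python
def track_with_target_adjustment(adjust, steps=4000, dt=0.005, ki=2.0):
    """Outer loop that only edits the target c sent to a black-box controller; returns final y."""
    alpha, g = 3.0, 0.8   # hypothetical black-box closed loop: dy/dt = alpha*(g*c - y)
    a_r = 3.0             # hypothetical reference model: dy_r/dt = a_r*(r - y_r)
    r = 1.0               # desired target
    y = y_r = phi = 0.0
    for _ in range(steps):
        c = r + phi if adjust else r     # adjusted target; inner controller is untouched
        y += dt * alpha * (g * c - y)
        y_r += dt * a_r * (r - y_r)
        if adjust:
            phi += dt * ki * (y_r - y)   # integral action on the reference-model error
    return y


print(track_with_target_adjustment(True), track_with_target_adjustment(False))
```

With adjustment the output settles at the desired target of 1.0 (the offset converges to $r/g - r = 0.25$), whereas the unadjusted command leaves the output at $g\,r = 0.8$; the inner controller is never modified.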

Reasoning Path Analysis

A detailed analysis of the LLMs' reasoning paths reveals two distinct strategies:

- Compensator Design: System-level feature analysis, focusing on observable discrepancies (e.g., overshoot, settling time) and inferring physical mechanisms to propose compensation strategies.

- Adaptive Controller Design: Theory-driven, model-based reasoning, involving error definition, Lyapunov function construction, and adaptation law derivation.
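For contrast with the compensator route, the theory-driven path (error definition, Lyapunov function, adaptation law) can be illustrated with the textbook Lyapunov-based MRAC for a first-order plant. This is a standard sketch, not the LLM's output or the paper's baseline; the plant $\dot y = a y + b u$ with unknown $a$, $b$ and known $b > 0$, the reference model $\dot y_m = -a_m y_m + b_m r$, and all numeric values are assumptions. With $e = y - y_m$, control $u = \theta_r r - \theta_y y$, and $V = \tfrac12 e^2 + \tfrac{b}{2\gamma}(\tilde\theta_r^2 + \tilde\theta_y^2)$, the update laws $\dot\theta_r = -\gamma e r$ and $\dot\theta_y = \gamma e y$ give $\dot V = -a_m e^2 \le 0$.

```python
def mrac_first_order(T=100.0, dt=1e-3, gamma=2.0):
    """Lyapunov-based MRAC on dy/dt = a*y + b*u; returns |e| at every step."""
    a, b = 1.0, 3.0          # plant (unknown to the controller; only b > 0 is used)
    a_m, b_m = 2.0, 2.0      # reference model dy_m/dt = -a_m*y_m + b_m*r
    y = y_m = 0.0
    th_r = th_y = 0.0        # adaptive gains, ideally b_m/b and (a + a_m)/b
    errs = []
    for i in range(int(round(T / dt))):
        t = i * dt
        r = 1.0 if (t % 10.0) < 5.0 else -1.0   # square-wave reference
        e = y - y_m
        u = th_r * r - th_y * y
        # adaptation laws chosen so that dV/dt = -a_m * e**2
        th_r += dt * (-gamma * e * r)
        th_y += dt * (gamma * e * y)
        y += dt * (a * y + b * u)
        y_m += dt * (-a_m * y_m + b_m * r)
        errs.append(abs(e))
    return errs


errs = mrac_first_order()
window = 10000  # last/first 10 s at dt = 1e-3
print(max(errs[:window]), max(errs[-window:]))
```

Even this simple case needs an assumed plant structure, a Lyapunov candidate, and a sign assumption on $b$, which illustrates why the controller-design path is more model-dependent than the compensator path.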

The compensator design process is more interpretable, structured, and less reliant on explicit system models, making it more practical for real-world deployment.

Implications and Future Directions

The LLMs-guided adaptive compensator framework demonstrates that LLMs can be effectively leveraged for low-level adaptive control without explicit modeling or gain tuning. This approach offers several practical advantages:

- Deployability: Can be integrated into legacy systems without modifying existing controllers.

- Safety: Non-intrusive, as it operates via compensator augmentation or indirect target adjustment.

- Generalizability: Zero-shot prompting enables application across a range of systems with similar error dynamics.

Theoretical implications include the potential for prompt-driven control synthesis, where LLMs act as reasoning engines that bridge the gap between high-level task descriptions and low-level control adaptation. Future research may explore automated prompt generation, integration with multimodal perception, and extension to broader classes of nonlinear and hybrid systems.

Conclusion

This work establishes a new paradigm for adaptive control by leveraging LLMs as compensator designers, guided by prompt engineering and reference-based reasoning. The LLMs-guided adaptive compensator achieves robust, generalizable, and interpretable control performance across diverse robotic platforms, with significantly reduced design complexity. The findings suggest that LLMs can play a substantive role in the future of automatic control, particularly in scenarios where explicit modeling is infeasible or costly, and where rapid adaptation to system uncertainties is required.