VISTA-OCR: Towards generative and interactive end to end OCR models (2504.03621v1)

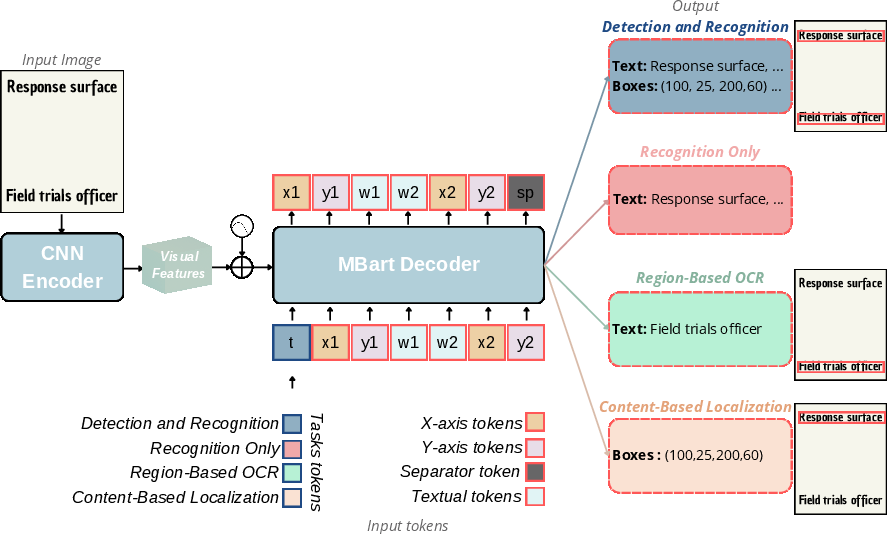

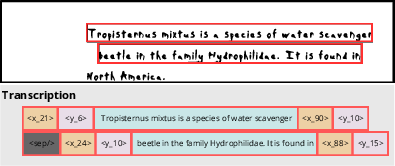

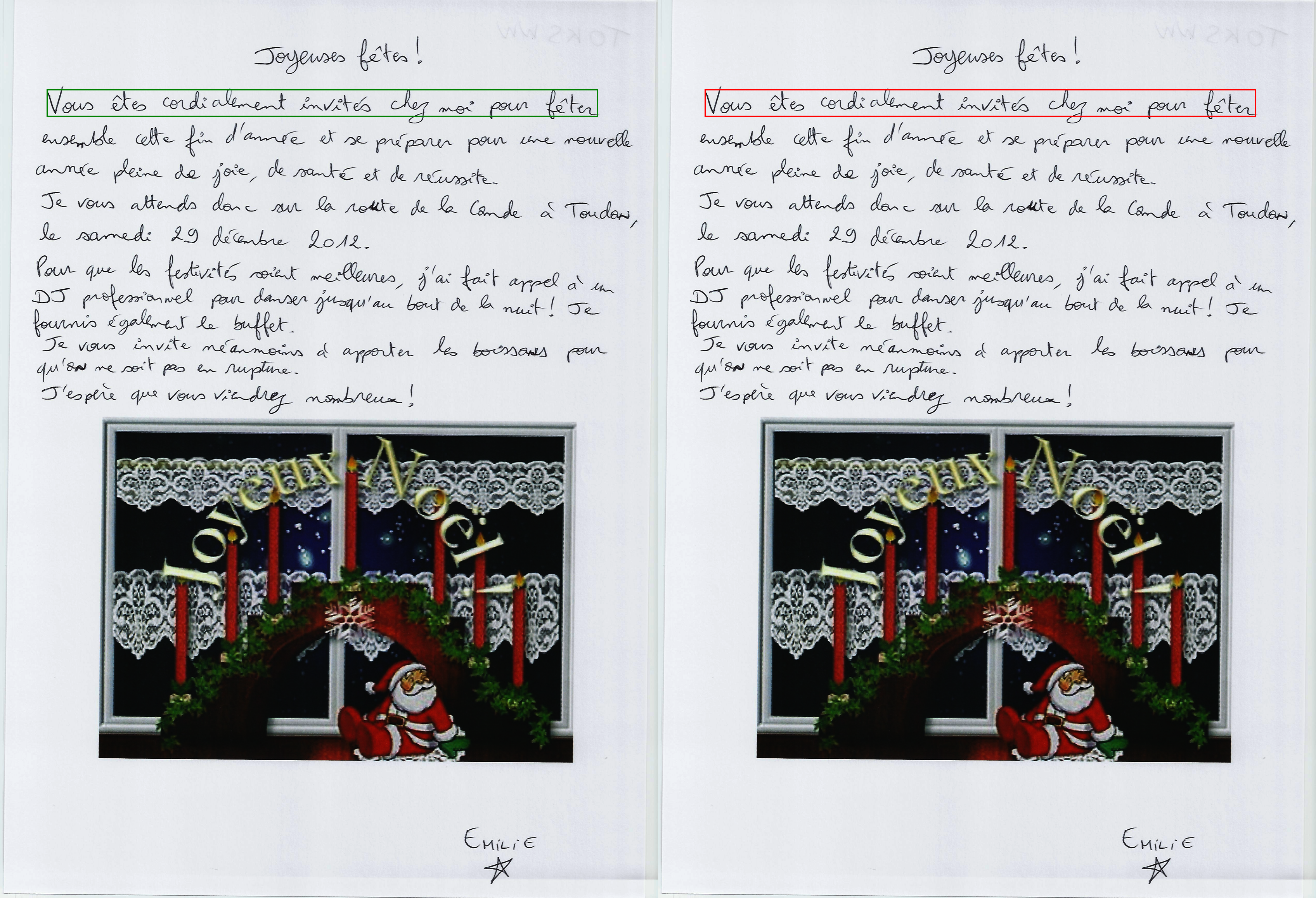

Abstract: We introduce \textbf{VISTA-OCR} (Vision and Spatially-aware Text Analysis OCR), a lightweight architecture that unifies text detection and recognition within a single generative model. Unlike conventional methods that require separate branches with dedicated parameters for text recognition and detection, our approach leverages a Transformer decoder to sequentially generate text transcriptions and their spatial coordinates in a unified branch. Built on an encoder-decoder architecture, VISTA-OCR is progressively trained, starting with the visual feature extraction phase, followed by multitask learning with multimodal token generation. To address the increasing demand for versatile OCR systems capable of advanced tasks, such as content-based text localization \ref{content_based_localization}, we introduce new prompt-controllable OCR tasks during pre-training.To enhance the model's capabilities, we built a new dataset composed of real-world examples enriched with bounding box annotations and synthetic samples. Although recent Vision LLMs (VLLMs) can efficiently perform these tasks, their high computational cost remains a barrier for practical deployment. In contrast, our VISTA$_{\text{omni}}$ variant processes both handwritten and printed documents with only 150M parameters, interactively, by prompting. Extensive experiments on multiple datasets demonstrate that VISTA-OCR achieves better performance compared to state-of-the-art specialized models on standard OCR tasks while showing strong potential for more sophisticated OCR applications, addressing the growing need for interactive OCR systems. All code and annotations for VISTA-OCR will be made publicly available upon acceptance.

Sponsor

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Collections

Sign up for free to add this paper to one or more collections.