Transferring General Multimodal Pretrained Models to Text Recognition: An Expert Overview

The paper "Transferring General Multimodal Pretrained Models to Text Recognition" approaches optical character recognition (OCR) by transferring a general multimodal pretrained model. The authors introduce OFA-OCR, which recasts text recognition as an image captioning task and finetunes a vision-language pretrained model on it directly. The method does not depend on the large-scale annotated or synthetic datasets traditionally used for OCR pretraining, yet it attains state-of-the-art performance on Chinese text recognition benchmarks.

Methodology

OFA-OCR builds on OFA (One-For-All), a unified multimodal pretrained model. It recasts text recognition as a sequence-to-sequence task akin to image captioning: given an image, the model generates the text it contains. The pretrained model is finetuned directly on text recognition datasets, with no domain-specific OCR pretraining.
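To make the task framing concrete, the following minimal sketch wraps an OCR label as a captioning-style generation target. It is an illustration under stated assumptions, not the authors' implementation: the instruction string, the `Seq2SeqSample` type, the file name, and the character-level tokenization are all hypothetical, and a real model would use its own prompt and tokenizer.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical instruction; this overview does not state the exact prompt used.
INSTRUCTION = "What is the text in the image?"
BOS, EOS = "<s>", "</s>"


@dataclass
class Seq2SeqSample:
    image_path: str           # input image containing the text to read
    source_text: str          # instruction given to the encoder with the image
    target_tokens: List[str]  # decoder target: the transcription, token by token


def to_captioning_sample(image_path: str, transcription: str) -> Seq2SeqSample:
    """Wrap an OCR label as a captioning-style generation target."""
    # Character-level splitting is an illustrative choice that suits Chinese
    # text; it stands in for whatever tokenizer the model actually uses.
    tokens = [BOS] + list(transcription) + [EOS]
    return Seq2SeqSample(image_path, INSTRUCTION, tokens)


# Hypothetical file name and label, for illustration only.
sample = to_captioning_sample("line_0001.png", "你好世界")
print(sample.source_text)
print(sample.target_tokens)
```

Framed this way, recognition needs no OCR-specific output head: the decoder that generates captions generates the transcription instead.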

The model uses a Transformer encoder-decoder architecture. Adaptors, namely visual backbones for images and word embeddings for text, bring the different modalities into a form the Transformer can process, with information represented as discrete tokens suitable for sequence generation. The variant used, OFA-Chinese, is pretrained on large general-domain datasets, including LAION-5B and translated datasets, and so learns general multimodal features that transfer to OCR.
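The role of the adaptors can be illustrated with a toy sketch in pure Python. Everything here is a simplified assumption rather than the OFA implementation: flattened pixel patches stand in for a learned visual backbone, a small lookup table stands in for trained word embeddings, and the function names are hypothetical. The point is only that both modalities end up in one sequence of equally sized vectors that an encoder could consume.

```python
from typing import Dict, List

Vector = List[float]


def image_adaptor(image: List[List[float]], patch: int) -> List[Vector]:
    """Split an H x W grayscale image into flattened patch x patch vectors.

    Stand-in for a visual backbone: a real model would apply learned layers.
    """
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height - patch + 1, patch):
        for left in range(0, width - patch + 1, patch):
            patches.append([image[top + r][left + c]
                            for r in range(patch) for c in range(patch)])
    return patches


def text_adaptor(tokens: List[str], table: Dict[str, Vector]) -> List[Vector]:
    """Look up one embedding vector per token (stand-in for word embeddings)."""
    return [table[token] for token in tokens]


def encoder_input(image: List[List[float]], tokens: List[str],
                  table: Dict[str, Vector], patch: int = 2) -> List[Vector]:
    """Concatenate image and text features into a single input sequence."""
    return image_adaptor(image, patch) + text_adaptor(tokens, table)


# Toy inputs: a 2 x 4 image and a two-token instruction with 4-dim embeddings.
image = [[0.0, 0.1, 0.2, 0.3],
         [0.4, 0.5, 0.6, 0.7]]
table = {"read": [1.0, 0.0, 0.0, 0.0], "text": [0.0, 1.0, 0.0, 0.0]}
sequence = encoder_input(image, ["read", "text"], table)
print(len(sequence), [len(vector) for vector in sequence])
```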

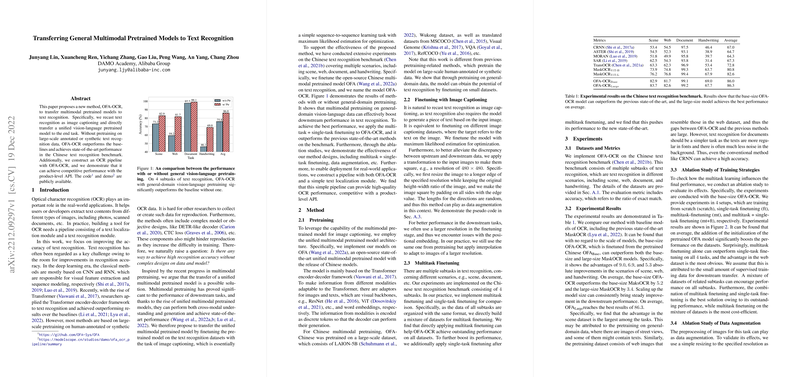

Experimental Results

OFA-OCR is evaluated on multiple tasks in the Chinese text recognition benchmark, which covers scene, web, document, and handwriting recognition. Both the base and large versions of OFA-OCR outperform previous state-of-the-art models. For instance, the base model surpasses MaskOCR by absolute margins of 9.0 points on scene recognition and 6.9 points on web recognition. These results indicate that general multimodal pretraining can improve downstream performance even in text-intensive applications.
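An absolute improvement is the plain difference between two scores, in percentage points. The sketch below shows the arithmetic on made-up predictions. It assumes an exact-match accuracy over text lines as the score, which this overview does not confirm as the benchmark's metric; the function names and data are hypothetical and unrelated to the reported results.

```python
from typing import Sequence


def line_accuracy(predictions: Sequence[str], references: Sequence[str]) -> float:
    """Percentage of lines whose prediction matches the reference exactly."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have equal length")
    if not references:
        raise ValueError("need at least one sample")
    correct = sum(p == r for p, r in zip(predictions, references))
    return 100.0 * correct / len(references)


def absolute_improvement(new_score: float, baseline_score: float) -> float:
    """Difference in percentage points, not a relative (ratio) gain."""
    return new_score - baseline_score


# Toy data, invented for illustration only.
references = ["北京", "上海", "广州", "深圳"]
baseline_predictions = ["北京", "上诲", "广州", "深训"]  # 2 of 4 correct
new_predictions = ["北京", "上海", "广州", "深训"]       # 3 of 4 correct

baseline = line_accuracy(baseline_predictions, references)
improved = line_accuracy(new_predictions, references)
print(baseline, improved, absolute_improvement(improved, baseline))  # 50.0 75.0 25.0
```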

Ablation Studies and Practical Implications

The authors run several ablation studies to isolate the contributions of different training strategies. Multitask finetuning, in particular, significantly improves performance across the subtasks. This suggests that the more diverse training data obtained by mixing the subtasks exploits the model's generalization ability more effectively than single-task finetuning does. The paper also addresses deployment: the authors build a detection-then-recognition pipeline and report results competitive with product-level APIs.
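One simple way to realize multitask finetuning is to pool the samples of every subtask and shuffle them, so that each batch can mix scenarios. The sketch below does that with the standard library. It is an assumption about how such a mixture could be built, not the authors' procedure: the sampling scheme, function names, file names, and labels are all hypothetical.

```python
import random
from typing import Dict, Iterator, List, Tuple

Sample = Tuple[str, str]             # (image path, transcription)
TaggedSample = Tuple[str, str, str]  # (task name, image path, transcription)


def build_multitask_mixture(datasets: Dict[str, List[Sample]],
                            seed: int = 0) -> List[TaggedSample]:
    """Pool all subtask samples into one list and shuffle it reproducibly."""
    mixture = [(task, image, text)
               for task, samples in datasets.items()
               for image, text in samples]
    random.Random(seed).shuffle(mixture)
    return mixture


def batches(mixture: List[TaggedSample], batch_size: int) -> Iterator[List[TaggedSample]]:
    """Yield consecutive batches; the last one may be smaller."""
    for start in range(0, len(mixture), batch_size):
        yield mixture[start:start + batch_size]


# Toy datasets with invented file names and labels.
datasets = {
    "scene": [("scene_0.png", "出口"), ("scene_1.png", "停车场")],
    "web": [("web_0.png", "限时优惠")],
    "document": [("doc_0.png", "第一章"), ("doc_1.png", "摘要")],
    "handwriting": [("hw_0.png", "谢谢")],
}

mixture = build_multitask_mixture(datasets)
for batch in batches(mixture, batch_size=4):
    print([task for task, _, _ in batch])
```

Single-task finetuning corresponds to passing a dictionary with one entry, which makes the two settings easy to compare in an ablation.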

Implications and Future Directions

The work has notable implications for the field of text recognition. By removing the traditional reliance on extensive OCR-specific datasets, OFA-OCR offers a more accessible approach for researchers hindered by data collection challenges. It also invites further exploration of multimodal pretrained models in domain-specific applications beyond text recognition.

Future research could explore model compression or distillation to reduce the computational cost of deploying large Transformer models. Extending the adaptation strategy to other languages and scripts could also broaden the applicability of similar approaches.

Conclusion

The paper makes a compelling case that general multimodal pretrained models can be transferred to specific tasks such as text recognition without elaborate domain-specific pretraining. OFA-OCR shows that reframing a task to fit a unified model's existing capabilities can yield state-of-the-art results. Beyond the benchmark gains, the approach offers a template for adapting general pretrained models to other real-world applications.