Visual Grounding Task

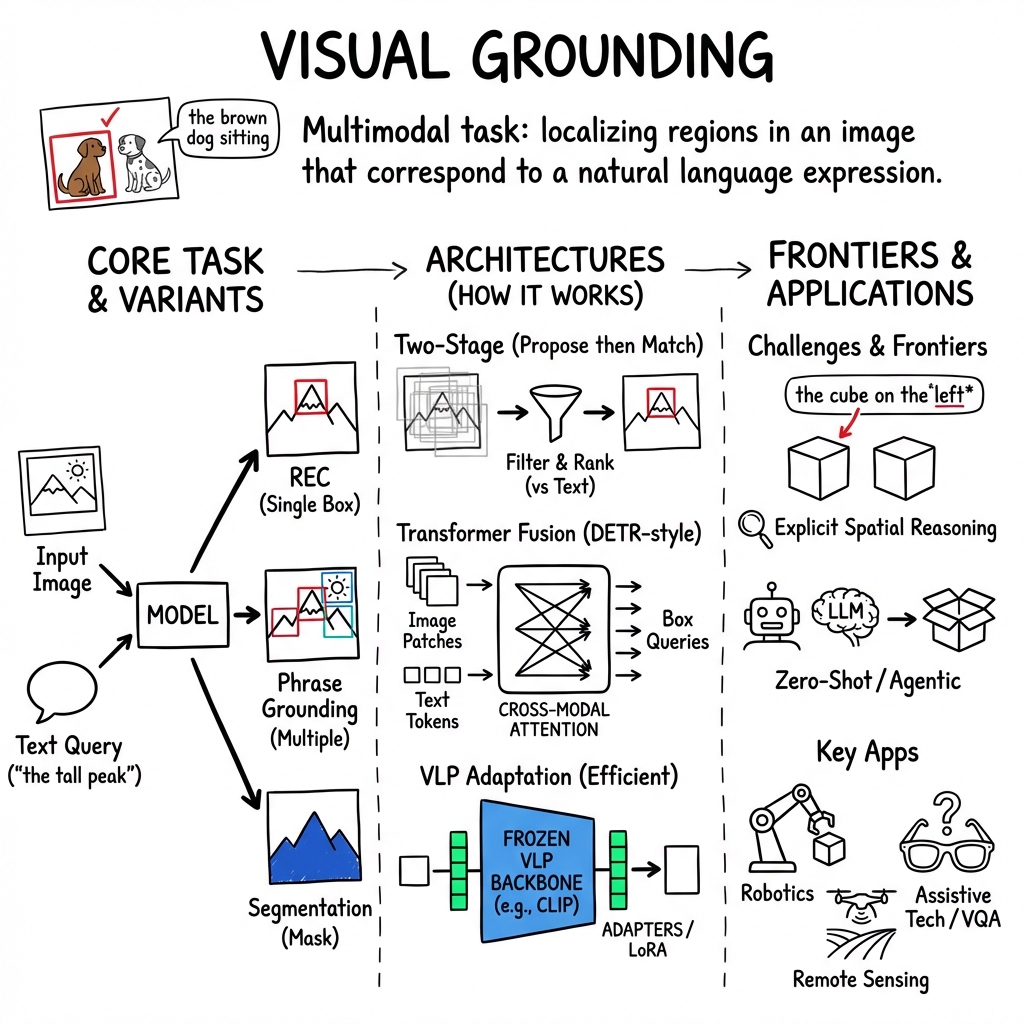

- Visual grounding is the multimodal task of localizing image regions that correspond to natural language descriptions.

- It spans diverse methodologies, including two-stage proposal-based models, one-stage dense-anchor models, transformer-based models, and vision-language pre-training.

- Key challenges include dataset saturation, robustness under ambiguous conditions, and the need for efficient real-time deployment.

Visual grounding is the multimodal task of localizing one or more regions in an image that correspond to a natural language referring expression. It underpins a range of high-level vision-language tasks, including referring expression comprehension, phrase grounding, segmentation, and visually grounded question answering. Visual grounding research has advanced rapidly, encompassing diverse architectural regimes, robust evaluation protocols, large-scale benchmarks, and applications that span explicit spatial reasoning, embodied perception, and cross-domain grounding in remote sensing, robotics, and video (Xiao et al., 2024, Liu et al., 10 Apr 2025, Zhou et al., 2024).

1. Formal Task Definition and Core Variants

Given an image $I$ and a referring expression $T$, a visual grounding model produces a set of bounding boxes $\mathcal{B} = \{b_1, \dots, b_K\}$. In the classic single-target setting ($K = 1$), the desired output is a single box $b$ aligning with the region most semantically consistent with $T$ (Xiao et al., 2024). When $K = 0$ or $K > 1$, the task generalizes to support multi-object, zero-object, and open-world query scenarios (generalized visual grounding).

Major subtypes include:

- Referring Expression Comprehension (REC): $K = 1$; localize the unique region matching the query phrase.

- Phrase Grounding: Given a sentence and a set of entity phrases, ground every phrase to its respective region(s).

- Referring Expression Segmentation (RES): Return a pixel-wise binary mask for the referent instead of a bounding box.

- Generalized REC: Allow $K \geq 0$ referents per query, evaluating both set-level precision and the correct handling of 'no referent' cases (Xiao et al., 2024).

Evaluation metrics typically involve Intersection-over-Union (IoU): a predicted box $\hat{b}$ counts as correct when $\mathrm{IoU}(\hat{b}, b) \geq 0.5$ with the ground-truth box $b$. Generalized settings replace accuracy at an IoU threshold with Precision@F1 for set-based outputs, and add N-acc to quantify correct 'no target' predictions (Xiao et al., 2024).
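The generalized metrics can be made concrete with a small sketch. It assumes boxes in `(x1, y1, x2, y2)` format that have already been filtered by a confidence threshold, and it counts true positives with greedy one-to-one matching at IoU of at least 0.5; official gRefCOCO evaluation code may match differently. Under the reading used here, a query counts toward Precision@F1 only if its per-query F1 equals 1, and an empty prediction on a no-target query scores F1 = 1. All function names are illustrative.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection-over-Union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def sample_f1(pred: Sequence[Box], gt: Sequence[Box], thr: float = 0.5) -> float:
    """Per-query F1 using greedy one-to-one matching at IoU >= thr (assumed matching rule)."""
    if not pred and not gt:
        return 1.0  # correctly predicted 'no target'
    if not pred or not gt:
        return 0.0
    # All prediction/ground-truth pairs, highest IoU first.
    pairs = sorted(((iou(p, g), i, j) for i, p in enumerate(pred) for j, g in enumerate(gt)),
                   reverse=True)
    used_p, used_g, tp = set(), set(), 0
    for score, i, j in pairs:
        if score < thr:
            break
        if i not in used_p and j not in used_g:
            used_p.add(i)
            used_g.add(j)
            tp += 1
    fp, fn = len(pred) - tp, len(gt) - tp
    return 2 * tp / (2 * tp + fp + fn)


def precision_at_f1(preds: List[List[Box]], gts: List[List[Box]], thr: float = 0.5) -> float:
    """Fraction of queries whose per-query F1 is exactly 1."""
    hits = sum(sample_f1(p, g, thr) == 1.0 for p, g in zip(preds, gts))
    return hits / len(gts)


def n_acc(preds: List[List[Box]], gts: List[List[Box]]) -> float:
    """Fraction of no-target queries for which the model predicts no box at all."""
    no_target = [p for p, g in zip(preds, gts) if not g]
    if not no_target:
        return float("nan")
    return sum(len(p) == 0 for p in no_target) / len(no_target)
```

For example, a query with one correct box plus one spurious box has F1 = 2/3 and therefore does not count toward Precision@F1, even though it would pass a single-box accuracy check.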

2. Model Architectures and Methodological Regimes

Visual grounding methods can be broadly grouped as follows (Xiao et al., 2024):

- Two-Stage Region Proposal: Generate candidate boxes with a region proposal network; each is scored against the referring expression via a learned matching function. Training uses cross-entropy or contrastive loss over proposals, and regression losses for box refinement.

- One-Stage Dense-Anchor: Early fusion of textual information into detection backbones (e.g., YOLO/SSD), directly regressing per-anchor box offsets and confidence scores in a single unified pass.

- Transformer-Based: DETR-style architectures fuse vision and language streams via encoder-decoder attention; a fixed set of queries is used for box (and occasionally mask) prediction (Li et al., 2021, Xiao et al., 2024). Variants introduce dynamic fusion, explicit query/phrase pooling, or multi-scale hierarchical attention (Liu et al., 10 Apr 2025, Xiao et al., 2024).

- VLP Transfer and Adapterization: Vision-language pre-trained models (e.g., CLIP, BEiT-3, ALBEF) are adapted for grounding via lightweight fusion adapters, prompt tuning, or low-rank adaptation (LoRA/HiLoRA), with or without frozen backbones (Xiao et al., 2024, Zhou et al., 2024).

- Grounding-Oriented Pre-Training: Models such as MDETR or GLIP pre-train cross-modal encoders on region-phrase pairs at scale, fusing detection and grounding supervision into unified objectives; strong transfer to downstream grounding is typical (Xiao et al., 2024).
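A minimal sketch of the two-stage matching step follows, assuming proposal features and a sentence embedding have already been produced by some region proposal network and text encoder (both out of scope here). The projections `w_v` and `w_t` stand in for learned weights; cosine similarity with a temperature is one common choice of matching function, not the only one, and the box-refinement regression loss is omitted.

```python
import numpy as np


def score_proposals(region_feats: np.ndarray, text_emb: np.ndarray,
                    w_v: np.ndarray, w_t: np.ndarray, temperature: float = 0.1) -> np.ndarray:
    """Matching logits between N candidate boxes and one referring expression.

    region_feats: (N, d_v) pooled features of the proposals.
    text_emb:     (d_t,) sentence embedding of the expression.
    w_v, w_t:     (d_v, d) and (d_t, d) projections into a shared space (hypothetical learned weights).
    """
    v = region_feats @ w_v                              # (N, d)
    t = text_emb @ w_t                                  # (d,)
    v = v / np.linalg.norm(v, axis=1, keepdims=True)    # unit-normalize each proposal
    t = t / np.linalg.norm(t)
    return (v @ t) / temperature                        # (N,) cosine similarity / temperature


def proposal_cross_entropy(logits: np.ndarray, target_idx: int) -> float:
    """Softmax cross-entropy over proposals; target_idx marks the proposal matching the ground truth."""
    shifted = logits - logits.max()                     # subtract max for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum())
    return float(-log_probs[target_idx])
```

At inference the proposal with the largest logit is returned as the referent; during training the cross-entropy is minimized with respect to the projections (and, optionally, the encoders).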

Emerging strategies exploit generative multimodal models, agentic reasoning across open-vocabulary detectors and LLMs, multimodal conditional adaptation of backbone weights, and diffusion denoising frameworks for iterative cross-modal alignment (Shen et al., 2023, Yao et al., 2024, Luo et al., 24 Nov 2025, Chen et al., 2023).

3. Benchmarks, Data Regimes, and Evaluation Metrics

Key public benchmarks include (Xiao et al., 2024):

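- RefCOCO, RefCOCO+, RefCOCOg: MS COCO images with referring expressions. RefCOCO and RefCOCO+ use short expressions (roughly 3–4 words), while RefCOCOg uses longer ones (8+ words). RefCOCO and RefCOCO+ use the standard train/val/testA/testB splits.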

- Flickr30k Entities: Phrase-level mapping for 31.7k images, 456k noun phrase–box pairs.

- ReferItGame: Short phrases over SAIAPR images.

- Generalized and Domain-Specific Datasets: gRefCOCO for zero-, single-, and multi-target open-world queries, GigaGround for gigapixel images, AVVG/GeoGround for remote sensing with oriented bounding boxes (OBB) and masks, WaterVG for unmanned surface vehicle (USV) perception, AerialVG for airborne imagery, and ScanRefer/SUNRefer for RGB-D and 3D scenes (Liu et al., 10 Apr 2025, Zhou et al., 2024, Guan et al., 2024).

Evaluation metrics are standardized:

- Acc@0.5: For bounding boxes, the fraction of queries with IoU ≥ 0.5 between prediction and ground truth.

- mIoU: Mean IoU for pixel-level mask outputs (RES).

- Precision@F1, N-acc: For generalized tasks.

- AP@IoU: For segmentation or detection variants.

- Speed (FPS, GFLOPs): For real-time deployment scenarios (Guan et al., 2024).
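The two most common accuracy metrics reduce to a few lines. This sketch assumes one predicted and one ground-truth box per query in `(x1, y1, x2, y2)` format for Acc@0.5, and binary masks of equal shape for mIoU; treating two empty masks as a perfect match is an assumed convention, and the function names are illustrative.

```python
import numpy as np


def box_iou(a, b) -> float:
    """IoU of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def acc_at_iou(pred_boxes, gt_boxes, thr: float = 0.5) -> float:
    """Acc@thr: fraction of queries whose predicted box has IoU >= thr with the ground truth."""
    hits = [box_iou(p, g) >= thr for p, g in zip(pred_boxes, gt_boxes)]
    return float(np.mean(hits))


def mask_iou(pred: np.ndarray, gt: np.ndarray) -> float:
    """IoU between two binary masks of the same shape."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 1.0  # both masks empty: treated as a perfect match (assumed convention)
    return float(np.logical_and(pred, gt).sum() / union)


def mean_mask_iou(pred_masks, gt_masks) -> float:
    """mIoU: average of per-query mask IoUs."""
    return float(np.mean([mask_iou(p, g) for p, g in zip(pred_masks, gt_masks)]))
```

For instance, two queries where one box matches exactly and the other overlaps its target with IoU 1/3 give Acc@0.5 = 0.5.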

4. Specialized Extensions and Domain Adaptations

Spatial Reasoning and Relation-Aware Grounding

Complex scenes with object crowding, occlusion, or uniform object appearances (e.g., aerial, remote, or medical imagery) necessitate explicit modeling of spatial relations ("above," "between") and relation-aware box ranking. Methods have introduced relation-aware modules, pairwise reasoning blocks, and hierarchical or multi-scale attention to support disambiguation (Liu et al., 10 Apr 2025, Zhou et al., 2024).
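Relation modules typically consume pairwise geometric features between candidate boxes. The sketch below computes one common encoding (center offsets normalized by the reference box size, plus log size ratios) and feeds it to a hand-written scorer for a "left of" relation. The scorer is a toy stand-in for a learned relation-aware ranking module, and it assumes the expression has already been parsed into a relation and an anchor object; `pairwise_geometry` and `rank_left_of` are hypothetical names.

```python
import numpy as np


def pairwise_geometry(boxes: np.ndarray) -> np.ndarray:
    """(N, 4) boxes (x1, y1, x2, y2) -> (N, N, 4) features of box j relative to box i.

    Features: (dx / w_i, dy / h_i, log(w_j / w_i), log(h_j / h_i)), where dx, dy are center offsets.
    """
    cx = (boxes[:, 0] + boxes[:, 2]) / 2
    cy = (boxes[:, 1] + boxes[:, 3]) / 2
    w = boxes[:, 2] - boxes[:, 0]
    h = boxes[:, 3] - boxes[:, 1]
    dx = (cx[None, :] - cx[:, None]) / w[:, None]
    dy = (cy[None, :] - cy[:, None]) / h[:, None]
    dw = np.log(w[None, :] / w[:, None])
    dh = np.log(h[None, :] / h[:, None])
    return np.stack([dx, dy, dw, dh], axis=-1)


def rank_left_of(boxes: np.ndarray, anchor_idx: int) -> np.ndarray:
    """Toy ranking for 'X left of <anchor>': boxes further left of the anchor rank first."""
    geo = pairwise_geometry(boxes)
    scores = -geo[anchor_idx, :, 0]   # negative horizontal offset from the anchor = further left
    scores[anchor_idx] = -np.inf      # the anchor cannot be its own referent
    return np.argsort(-scores)        # candidate indices, best first
```

A learned module would replace the hand-set score with a network over these features and the relation phrase embedding, which is what lets it handle relations such as "between" that a single offset cannot express.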

Multimodal and Morphological Adaptation

To address diverse scene semantics and support task generalization, modern models employ adaptive mechanisms such as:

- Multi-modal Conditional Adaptation: Data-dependent low-rank weight updates in CNNs/Transformers steered by multi-modal fused embeddings, focusing attention on text-relevant regions per sample (Yao et al., 2024, Xiao et al., 2024).

- Hierarchical Cross-Modal Bridges: Progressive update of vision and language layers via staged LoRA to avoid catastrophic drift when adapting large pre-trained models to highly localized grounding supervision (Xiao et al., 2024).
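The idea of a data-dependent low-rank weight update can be sketched as a linear layer whose LoRA-style update `B @ diag(g) @ A` is gated per sample by coefficients `g` computed from a fused image-text embedding. This is a generic illustration under assumed shapes (`W` frozen; `A`, `B`, `G` trainable), not the published multi-modal conditional adaptation or HiLoRA architecture; the `tanh` gating and the class name are arbitrary, hypothetical choices.

```python
import numpy as np


class ConditionalLowRankLinear:
    """y = x (W + B diag(g(e)) A)^T, where g(e) depends on a fused multimodal embedding e.

    Shapes: W (d_out, d_in), A (r, d_in), B (d_out, r), G (r, d_e).
    """

    def __init__(self, d_in: int, d_out: int, rank: int, d_embed: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W = rng.normal(scale=d_in ** -0.5, size=(d_out, d_in))     # stand-in for a frozen pre-trained weight
        self.A = rng.normal(scale=d_in ** -0.5, size=(rank, d_in))
        self.B = np.zeros((d_out, rank))                                # zero init, as in LoRA: starts as the frozen layer
        self.G = rng.normal(scale=d_embed ** -0.5, size=(rank, d_embed))

    def __call__(self, x: np.ndarray, fused_embed: np.ndarray) -> np.ndarray:
        """x: (n, d_in) visual tokens; fused_embed: (d_e,) text-conditioned vector for this sample."""
        gate = np.tanh(self.G @ fused_embed)          # (r,) per-sample coefficients
        delta = self.B @ (gate[:, None] * self.A)     # (d_out, d_in) sample-specific low-rank update
        return x @ (self.W + delta).T                 # (n, d_out)
```

Because `B` starts at zero, the layer initially reproduces the frozen weight exactly; only after training does the update steer features differently for different expressions.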

Few-shot, Reference, Sensor-Fusion, and Zero-Shot Grounding

- Few-shot and Reference-based: Hybrid vision/LLM pipelines consult a database of reference images and masks for each object instance, which supports robust grounding even among highly similar instances (Lu et al., 2 Apr 2025).

- Multi-sensor (RGB+Radar) Fusion: Enables robust grounding using multiple modalities, important for embodied agents and robotics in complex or adverse conditions (Guan et al., 2024).

- Zero-shot and Training-Free: Combining open-vocabulary detectors, VLP models, and agentic LLMs yields strong grounding without any explicit fine-tuning, reaching up to 65% accuracy on REC tasks in standard VG benchmarks (Shen et al., 2023, Luo et al., 24 Nov 2025). These approaches exploit model compositionality, explicit reasoning steps, and human-interpretable decision traces.
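A training-free pipeline of this kind can be sketched as propose-then-rerank. Here `detect` and `score` are hypothetical callables standing in for an open-vocabulary detector and a frozen vision-language model (for example, a CLIP-style image-text similarity on the cropped region); extracting `head_noun` from the expression, which agentic systems may delegate to an LLM, is assumed to happen upstream. The `min_score` threshold, which lets the function return no box, is likewise an assumption.

```python
from typing import Callable, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
# Hypothetical interfaces, not a real library API:
Detector = Callable[[object, str], List[Box]]        # (image, category text) -> candidate boxes
RegionScorer = Callable[[object, Box, str], float]   # (image, box, expression) -> similarity


def zero_shot_ground(image, expression: str, head_noun: str,
                     detect: Detector, score: RegionScorer,
                     min_score: float = 0.0) -> Optional[Box]:
    """Propose boxes for the head noun, then re-rank them against the full expression."""
    candidates = detect(image, head_noun)
    if not candidates:
        return None  # nothing of the named category was found
    best_score, best_box = max(((score(image, b, expression), b) for b in candidates),
                               key=lambda pair: pair[0])
    return best_box if best_score >= min_score else None


# Toy stand-ins so the sketch runs end to end.
def toy_detect(image, category: str) -> List[Box]:
    return [(0, 0, 10, 10), (20, 0, 30, 10)]


def toy_score(image, box: Box, expression: str) -> float:
    # Pretend the vision-language model prefers the right-hand box for "right" expressions.
    return 0.9 if "right" in expression and box[0] > 15 else 0.1
```

With these stubs, "the dog on the right" selects the box starting at x = 20; no component is fine-tuned, and each intermediate step (candidate list, per-box score) can be inspected, which is the source of the interpretability these systems advertise.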

5. Current Challenges, Limitations, and Research Frontiers

Key open directions include (Xiao et al., 2024):

- Dataset Saturation and Domain Shift: RefCOCO/+/g appear saturated, with simple object categories and low linguistic diversity; expanding benchmarks toward richer language, zero/multi-target queries, and cross-modality settings is essential.

- Grounding Faithfulness and Robustness: Standard accuracy is insufficient; metrics must also capture attention faithfulness and region overlap, and support out-of-distribution (OOD) diagnostics.

- Efficient Real-time Systems: Streaming and edge deployment demand lightweight, low-latency architectures (e.g., MobileNetV4 and the prompt-guided radar fusion of NanoMVG) (Guan et al., 2024).

- Explicit Spatial/Relational Reasoning: Robustness in dense, ambiguous, or high-resolution domains (aerial, surveillance, medical, remote) requires incorporation of explicit spatial and relational modules (Liu et al., 10 Apr 2025, Zhou et al., 2024).

- Unsupervised and Weakly-Supervised Grounding: Self-supervised region/phrase alignment, agentic and modular zero-shot systems, and diffusion-motivated generative approaches are evolving to minimize reliance on dense manual annotations (Chen et al., 2023, Shen et al., 2023, Luo et al., 24 Nov 2025).

- Interpretability and Stepwise Explanation: Recent agentic LLM+MLLM architectures provide explicit reasoning chains, supporting transparency and human verification at each step of region selection (Luo et al., 24 Nov 2025).

6. Applications and Societal Impact

Visual grounding is integral to:

- Robotics and Embodied AI: Language-conditioned robotic control, pick-and-place, navigation, and human–robot collaboration.

- Assistive Tech and VQA: Support for visually impaired users via grounded question answering and evidence highlighting (Chen et al., 2022).

- AR/VR, UI, Medical Imaging: Grounding instructions for interactive interfaces, clinical navigation, and real-time user interaction (Xiao et al., 2024).

- Remote Sensing and Safety: Aerial and satellite imagery analysis for surveillance, vehicular traffic, and environmental monitoring (Zhou et al., 2024).

Societal challenges include robustness under ambiguity, adversarial language, and generalization across domain shifts.

Visual grounding continues to evolve as a central multimodal challenge in vision-language research, driving advances in model architectures, scalable supervision, robust evaluation, and open-world operation (Xiao et al., 2024, Liu et al., 10 Apr 2025, Luo et al., 24 Nov 2025, Shen et al., 2023).