Generalizable Visual Grounding

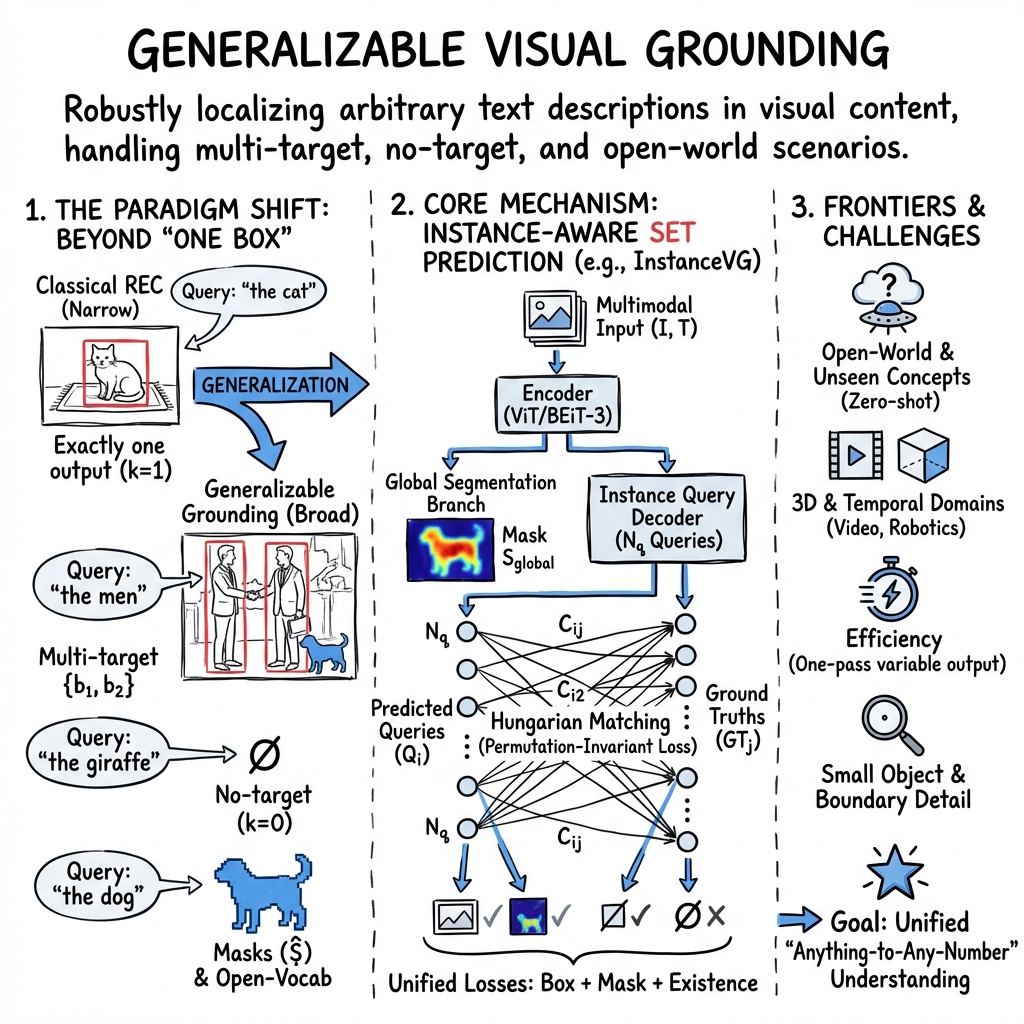

- Generalizable Visual Grounding is defined as the ability to accurately localize arbitrary textual descriptions in visual content across diverse scenarios, granularities, and domains.

- It employs instance-aware query-matching, hierarchical feature fusion, and open-vocabulary detection to produce variable-length outputs, including bounding boxes and pixel-wise masks.

- Recent frameworks, exemplified by InstanceVG, achieve high REC accuracy and robust generalization through unified losses, multimodal training, and cross-granularity consistency.

Generalizable visual grounding is the ability of a vision–language system to robustly and accurately localize an arbitrary textual description in visual content, across a wide range of referential scenarios, domains, target granularities (e.g., from bounding boxes to pixel-wise masks), and with minimal dependence on the number or category of referents. Contemporary research traces the evolution of generalizable visual grounding from classical single-object referring expression comprehension (REC) to frameworks that must handle multi-target, no-target, zero-shot, and open-world settings, as well as rapidly shifting domains (including natural images, video, remote sensing, medical imaging, art, and 3D environments).

1. Formal Definitions and Task Generalization

Classical visual grounding (REC, phrase grounding) presumes each input query localizes exactly one entity per image; the ground-truth output is a single bounding box (or mask). Generalizable visual grounding, by contrast, accommodates:

- Multi-target grounding: an expression may refer to several disjoint regions ("the two men"), so the output is a variable-length set $\{b_i\}_{i=1}^{N}$ with $N > 1$.

- No-target cases: some queries refer to no visible object ("the zebra in a car park"), so the output set is empty ($N = 0$).

- Fine-grained segmentation: generalization to pixel-wise or instance-level masks ($m_i \in \{0,1\}^{H \times W}$) instead of coarse boxes.

- Open-vocabulary or open-domain settings: queries reference objects or concepts unseen during training.

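Given an image $I$ and a free-form expression $T$, the grounding model is

$$f_\theta : (I, T) \mapsto \{(b_i, m_i)\}_{i=1}^{N}, \quad N \geq 0,$$

where $b_i$ is a bounding box, $m_i$ is the corresponding mask (when segmentation is required), and $N = 0$ denotes the no-target case.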

This generalized formulation underlies recent work in generalized referring expression comprehension (GREC), generalized referring expression segmentation (GRES), and zero-shot 3D visual grounding (Xiao et al., 2024, Dai et al., 17 Sep 2025, Li et al., 28 May 2025).
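The output contract implied by this formulation can be sketched as a small data structure. This is only an illustration: the names `Referent`, `GroundingResult`, and `describe`, and the `(x1, y1, x2, y2)` box convention, are assumptions made here and do not come from any of the cited systems.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Hypothetical box convention: (x1, y1, x2, y2) in pixel coordinates.
Box = Tuple[float, float, float, float]


@dataclass
class Referent:
    """One grounded instance: a box plus an optional binary mask."""
    box: Box
    mask: Optional[List[List[int]]] = None  # H x W grid of 0/1 values


@dataclass
class GroundingResult:
    """Variable-length output: zero, one, or many referents."""
    referents: List[Referent] = field(default_factory=list)

    @property
    def is_no_target(self) -> bool:
        # N = 0: the expression refers to nothing visible.
        return len(self.referents) == 0

    @property
    def is_multi_target(self) -> bool:
        # N > 1: the expression refers to several instances.
        return len(self.referents) > 1


def describe(result: GroundingResult) -> str:
    """Name the referential scenario a result falls into."""
    if result.is_no_target:
        return "no-target"
    if result.is_multi_target:
        return f"multi-target ({len(result.referents)})"
    return "single-target"


two_men = GroundingResult([Referent((10, 20, 60, 200)), Referent((80, 25, 130, 210))])
print(describe(GroundingResult()))  # empty set for a no-target query
print(describe(two_men))
```

Classical REC corresponds to the special case in which `referents` always has length one.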

2. Core Architectural Paradigms

Recent architectures align with several design principles to achieve generalizability:

- Instance-aware Query-matching:

- DETR-style set prediction: Use object queries matched, via permutation-invariant losses (Hungarian matching), to an arbitrary number ($N$) of true referents.

- Example: InstanceVG (Dai et al., 17 Sep 2025) assigns each instance query a prior spatial point and enforces one-to-one consistency across points, boxes, and masks (see section 5).

- Hierarchical and Cross-modal Feature Fusion:

- Systems such as SimVG (Dai et al., 2024) and HCG-LVLM (Guo et al., 23 Aug 2025) decouple multimodal feature fusion (learned at pre-training scale) from task-specific reasoning heads, allowing both fast lightweight inference and robust context integration.

- Hierarchical or cascaded fusion (global-to-local) boosts robustness to spatial ambiguity and rare queries.

- Open-vocabulary and Modular Pipelines:

- Use of open-vocabulary object detectors (OVD; e.g., Detic, GLIP, OWL-ViT) in tandem with cross-modal similarity heads enables grounding on unseen categories (Shen et al., 2023, Li et al., 3 Aug 2025).

- Modular pipelines dissociate grounding "what" (object identity) from "where" (spatial localization), as in compositional or graph-based approaches (Rajabi et al., 2023, Liu et al., 2018).

- 3D and Multimodal Alignment:

- Approaches such as SeeGround (Li et al., 28 May 2025) and OpenMap (Li et al., 3 Aug 2025) synchronize rendered 2D/3D views, spatially-enriched text, and query-adaptive prompts for generalizing to 3D navigation and embodied scenarios.

- Emergent and Supervision-free Grounding:

- Large multimodal models trained on weak or instruction-only data can exhibit "emergent" grounding capacities, extractable via attention map analysis and external segmentation heads (e.g., attend-and-segment with SAM) (Cao et al., 2024).
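To illustrate the open-vocabulary pattern listed above, the sketch below scores class-agnostic region proposals against a text embedding by cosine similarity and keeps every proposal above a threshold. It assumes the embeddings have already been produced by some detector and text encoder; the function names, the toy vectors, and the threshold value are hypothetical.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def ground_by_similarity(
    text_emb: Sequence[float],
    region_embs: List[Sequence[float]],
    threshold: float = 0.5,  # assumed value; would be tuned on validation data
) -> List[int]:
    """Return indices of proposals whose similarity to the text meets the threshold.

    The result may be empty (no-target) or contain several indices (multi-target).
    """
    return [
        i for i, region in enumerate(region_embs)
        if cosine(text_emb, region) >= threshold
    ]


# Toy 2-D embeddings standing in for real detector and text-encoder outputs.
regions = [[0.9, 0.1], [0.0, 1.0], [0.8, 0.2]]
print(ground_by_similarity([1.0, 0.0], regions))   # two proposals match the query
print(ground_by_similarity([-1.0, 0.0], regions))  # nothing matches: empty set
```

Because the selection is a threshold rather than an argmax, the same head can return zero, one, or several regions without any change to the pipeline.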

3. Learning Objectives and Inference Mechanisms

Generalizable grounding frameworks unify localization, segmentation, and discrimination losses, frequently using permutation-invariant objectives:

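- Hungarian Matching Cost: predictions are assigned to ground-truth instances by the permutation $\sigma$ that minimizes the total pairwise cost,

$$\hat{\sigma} = \arg\min_{\sigma} \sum_{i=1}^{N} \mathcal{C}_{\text{match}}\big(y_i, \hat{y}_{\sigma(i)}\big),$$

where $y_i$ is the $i$-th ground-truth instance, $\hat{y}_{\sigma(i)}$ is the prediction assigned to it, and $\mathcal{C}_{\text{match}}$ combines semantic, box, and point-location terms, as in InstanceVG (Dai et al., 17 Sep 2025).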

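- Joint and Consistency Losses: the training objective is a weighted sum of task losses,

$$\mathcal{L} = \lambda_{\text{det}}\mathcal{L}_{\text{det}} + \lambda_{\text{seg}}\mathcal{L}_{\text{seg}} + \lambda_{\text{con}}\mathcal{L}_{\text{con}} + \lambda_{\text{exist}}\mathcal{L}_{\text{exist}},$$

which integrates detection, segmentation, instance-level consistency, and existence discrimination.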

- Text-Mask and Prompt-based Supervision:

- Multi-format outputs (boxes, OBBs, masks) via text-serialization, e.g., run-length encoded masks for GeoGround (Zhou et al., 2024).

- Auxiliary tasks (geometry-guided, prompt-assisted), enforcing consistency across outputs of differing granularity.

- Per-object Open-vocab Alignment:

- Grounding as dot-product (CLIP/ViT) or cross-attention between text and class-agnostic segment/mask embeddings (Qi et al., 2024).

- Contrastive and Consistency-based Learning:

- Use of region–text contrastive objectives across layers (Xiao et al., 2024), or contrastive consistency losses in local-vs-global alignment (Guo et al., 23 Aug 2025).
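The one-to-one assignment behind a Hungarian matching cost can be sketched as follows. To stay dependency-free, this example finds the minimum-cost assignment by brute-force enumeration rather than the Hungarian algorithm itself (in practice a solver such as SciPy's `linear_sum_assignment` is commonly used). The cost terms, their unit weights, and the dictionary layout are assumptions for illustration, not InstanceVG's actual definitions.

```python
import math
from itertools import permutations
from typing import Dict, List


def pair_cost(query: dict, target: dict,
              w_cls: float = 1.0, w_box: float = 1.0, w_pt: float = 1.0) -> float:
    """Hypothetical matching cost: semantic + box + point-location terms."""
    cls_cost = -query["score"]  # confident queries are cheaper to match
    box_cost = sum(abs(a - b) for a, b in zip(query["box"], target["box"]))  # L1
    pt_cost = math.dist(query["point"], target["center"])  # Euclidean distance
    return w_cls * cls_cost + w_box * box_cost + w_pt * pt_cost


def match(queries: List[dict], targets: List[dict]) -> Dict[int, int]:
    """Return {query_index: target_index} minimizing the total cost.

    Brute force over assignments; only suitable for a handful of instances.
    """
    if not targets:
        return {}  # no-target sample: every query is a negative
    if len(targets) > len(queries):
        raise ValueError("need at least as many queries as targets")
    best_cost, best_perm = math.inf, None
    for perm in permutations(range(len(queries)), len(targets)):
        cost = sum(pair_cost(queries[q], targets[t]) for t, q in enumerate(perm))
        if cost < best_cost:
            best_cost, best_perm = cost, perm
    return {q: t for t, q in enumerate(best_perm)}


targets = [
    {"box": (0.1, 0.1, 0.3, 0.3), "center": (0.2, 0.2)},
    {"box": (0.6, 0.6, 0.9, 0.9), "center": (0.75, 0.75)},
]
queries = [
    {"score": 0.9, "box": (0.6, 0.6, 0.9, 0.9), "point": (0.75, 0.75)},
    {"score": 0.2, "box": (0.4, 0.4, 0.5, 0.5), "point": (0.45, 0.45)},
    {"score": 0.8, "box": (0.1, 0.1, 0.3, 0.3), "point": (0.2, 0.2)},
]
assignment = match(queries, targets)
print(assignment)
```

Queries left out of the returned mapping are treated as negatives, which is how a fixed number of queries can represent a variable number of referents.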

4. Evaluation Protocols and Benchmark Datasets

Evaluation of generalizable grounding must accommodate variable cardinality, empty-set predictions, and segmentation accuracy. Key benchmarks and protocol features include:

| Task/Metric | Dataset Reference | Specifics |
|---|---|---|
| GREC Precision@(F1=1, IoU≥0.5), N-acc | gRefCOCO, Ref-ZOM, D³ | Multi/zero-target, exact set-match |
| GRES gIoU, cIoU, mIoU, oIoU | gRefCOCO, R-RefCOCO | Instance/pixel-level, empty-target |
| Classical REC/RES | RefCOCO/+/g | Box/mask accuracy @ IoU≥0.5 |
| 3DVG Acc@0.25/0.50 | ScanRefer, Nr3D | 3D box accuracy, open-vocab |
| Panoptic Narrative Grounding (PNG) AR | COCO-PNG | Mask recall (all mentions) |

Modern state-of-the-art models report >96% REC accuracy and >87% RES mIoU (InstanceVG-L; RefCOCO val), as well as strong gains in generalized and multi-target settings (e.g., InstanceVG: +11.4% F1 on GREC, +4.9% gIoU on GRES vs. prior SOTA) (Dai et al., 17 Sep 2025).
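As a rough sketch of how the GREC-style metrics in the table can be computed, the code below counts a sample as correct only when predictions and ground-truth boxes match one-to-one at IoU ≥ 0.5 (so F1 = 1), and reports N-acc as the fraction of no-target samples for which nothing was predicted. The greedy matching is a simplification assumed here; official evaluation scripts may resolve ambiguous matches differently.

```python
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def sample_is_correct(pred: List[Box], gt: List[Box], iou_thr: float = 0.5) -> bool:
    """True when F1 == 1: each prediction matches a distinct ground-truth box."""
    if len(pred) != len(gt):
        return False  # a missed or extra box makes F1 < 1
    unmatched = list(range(len(gt)))
    for p in pred:
        best_j, best_iou = None, iou_thr
        for j in unmatched:
            iou = box_iou(p, gt[j])
            if iou >= best_iou:
                best_j, best_iou = j, iou
        if best_j is None:
            return False
        unmatched.remove(best_j)  # greedy one-to-one assignment
    return True  # also covers the empty/empty no-target case


def grec_metrics(samples: List[Tuple[List[Box], List[Box]]]) -> dict:
    """samples: non-empty list of (predicted_boxes, ground_truth_boxes) pairs."""
    correct = sum(sample_is_correct(p, g) for p, g in samples)
    no_target = [p for p, g in samples if not g]
    n_acc: Optional[float] = (
        sum(1 for p in no_target if not p) / len(no_target) if no_target else None
    )
    return {"precision_f1": correct / len(samples), "n_acc": n_acc}


samples = [
    ([(0, 0, 10, 10)], [(0, 0, 10, 10)]),                    # exact single match
    ([], []),                                                # no-target, handled
    ([(0, 0, 5, 5)], []),                                    # no-target, false positive
    ([(0, 0, 10, 10)], [(0, 0, 10, 10), (20, 20, 30, 30)]),  # one referent missed
]
metrics = grec_metrics(samples)
print(metrics)
```

Unlike classical REC accuracy, this sample-level criterion penalizes a missed or spurious box even when the other boxes are localized perfectly.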

5. Exemplary Framework: Instance-aware Joint Learning

InstanceVG (Dai et al., 17 Sep 2025) provides a canonical instantiation of multi-task, instance-aware joint training for generalized grounding:

- Multi-modality Encoder: BEiT-3 transformer encodes both visual and textual tokens, producing feature maps $F_v$ (image) and $F_t$ (text).

- Parallel Prediction Branches:

- Global semantic segmentation: SimFPN + U-Net decoder, yielding a mask and target-existence score.

- Instance perception: Point-prior decoder generates instance queries with reference points via L2-norm text filtering, cross-attention heatmaps, and diversity-constrained greedy sampling.

- Point-guided Instance Matching:

- Each instance query is matched to ground-truth objects via a cost that includes semantic, box, and point-location terms.

- Mask losses are enforced per-instance (not just globally), correcting the semantic-segmentation bias.

- Cross-granularity Consistency:

- Ensures the predicted point, box, and mask for each query are mutually consistent, with instance mask loss also applied to negative queries to suppress spurious predictions.

The overall design—joint prediction, one-to-one instance matching, unified losses—facilitates high-accuracy, consistent grounding across REC, RES, GREC, and GRES benchmarks.
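The diversity-constrained greedy sampling step of the point-prior decoder is not specified in detail here. One plausible reading, sketched below, picks heatmap peaks in descending order and rejects any candidate closer than a minimum distance to an already chosen point. The function name `sample_points`, the Euclidean distance rule, and the parameters `k` and `min_dist` are assumptions, not the published procedure.

```python
import math
from typing import List, Tuple


def sample_points(heatmap: List[List[float]], k: int,
                  min_dist: float) -> List[Tuple[int, int]]:
    """Greedily pick up to k (row, col) cells with the highest responses,
    keeping every pair of chosen points at least min_dist apart."""
    cells = sorted(
        ((value, r, c)
         for r, row in enumerate(heatmap)
         for c, value in enumerate(row)),
        key=lambda cell: -cell[0],  # strongest response first
    )
    chosen: List[Tuple[int, int]] = []
    for _, r, c in cells:
        if len(chosen) == k:
            break
        # Diversity constraint: skip candidates too close to an earlier pick.
        if all(math.hypot(r - pr, c - pc) >= min_dist for pr, pc in chosen):
            chosen.append((r, c))
    return chosen


# Toy text-to-image attention heatmap with two separated peaks.
heatmap = [
    [0.9, 0.8, 0.1],
    [0.2, 0.1, 0.1],
    [0.1, 0.1, 0.7],
]
points = sample_points(heatmap, k=2, min_dist=2.0)
print(points)
```

In the toy heatmap the second-strongest cell sits next to the first peak and is skipped, so the two reference points land on different candidate instances rather than on the same one.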

6. Challenging Aspects and Limitations

Current obstacles for robust generalizability include:

- Task and output inconsistency: Detection and segmentation are often handled by separate branches, and GRES is frequently treated as pure semantic segmentation without instance awareness.

- No-target discrimination: Recognizing empty-set queries ("none") and suppressing false positives remain difficult.

- Domain shift and low-resolution artifacts: Small-object recall is degraded by visual token downsampling, patch compression, and ViT backbone limitations.

- Efficiency: Achieving variable-cardinality outputs in a single pass remains computationally demanding, especially for video or 3D scenarios.

- Dataset limitations: Shortage of large, unbiased, multi-domain generalized grounding corpora with fine-grained and open-vocabulary labels (Xiao et al., 2024).

7. Future Directions

Emerging trends and research goals, informed by recent surveys and empirical analyses, include:

- Multi-level feature fusion: Integrating cross-scale visual features to improve small-object localization and instance boundary detail.

- Enhanced pre-training tasks: Region-phrase matching at scale, synthetic and paraphrased captions, self-supervised region discovery.

- Unified architectures: One-tower or LLM-based backbones that output arbitrary-size sets or panoptic signals via text-based serialization.

- Human-in-the-loop generalization: Active/online learning for ambiguous or out-of-vocab queries, calibration for no-target detection.

- 3D and temporal generalization: Extending to sequential data (video streams, autonomous driving, robotics), requiring tracking, viewpoint adaptation, and spatio-temporal tube grounding (Li et al., 28 May 2025, Li et al., 3 Aug 2025, Feng et al., 2021).

- Open-world and zero-shot transfer: Decoupling segmentation from grounding (e.g., GELLA), and leveraging open-vocab CLIP-style features to unlock new entities and domains without explicit fine-tuning (Qi et al., 2024, Cao et al., 2024).

Generalizable visual grounding, as a research focus, targets "anything-to-any-number" referential understanding, integrating multi-level, multi-modal, and open-vocabulary reasoning under unified, instance-aware, and scalable frameworks.