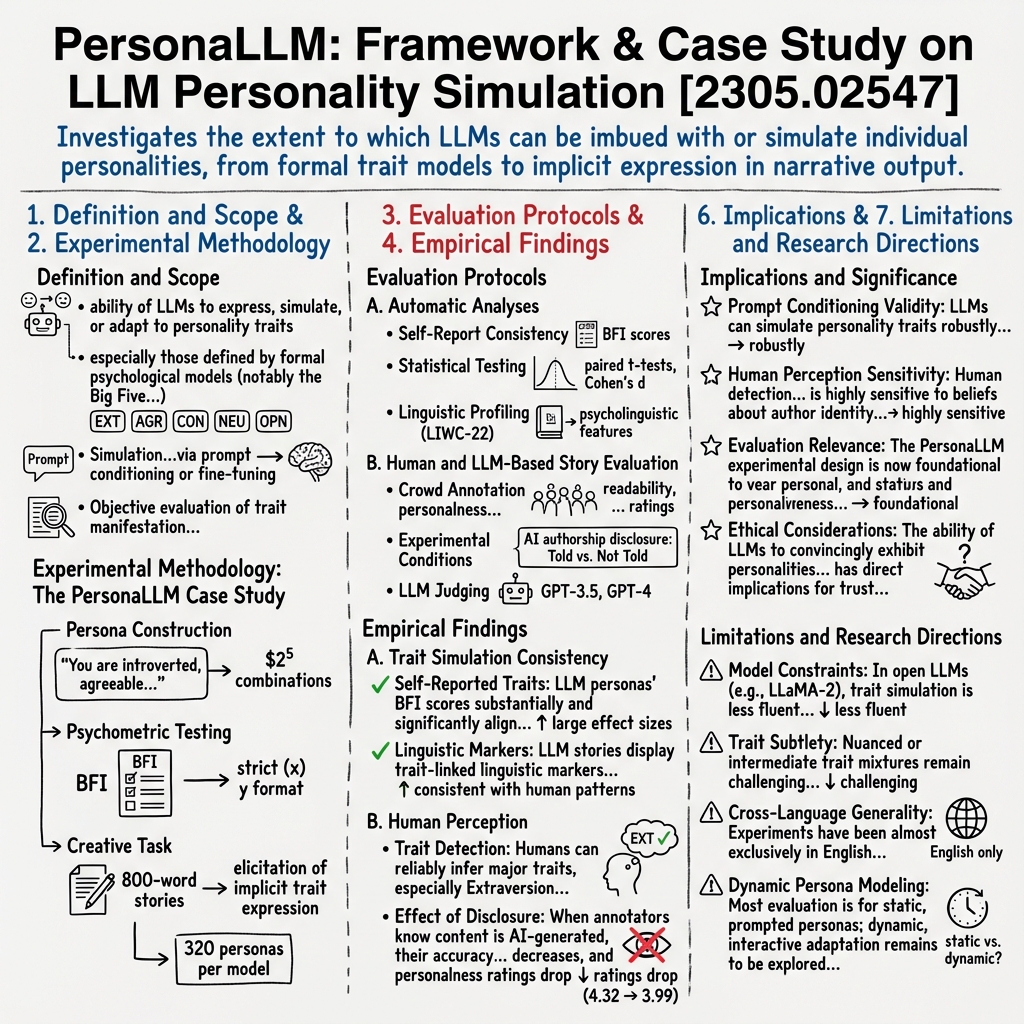

PersonaLLM: Personality Simulation in LLMs

- PersonaLLM is a framework focused on simulating personality traits in LLM outputs using prompt conditioning and standardized psychometric tools like the Big Five.

- It employs rigorous methodologies, including persona construction, statistical testing, and linguistic profiling to benchmark personality simulation.

- Empirical evaluations show that while LLMs can align with assigned traits, biases in human detection and model limitations persist.

PersonaLLM refers to both a broad conceptual framework in LLM research and a specific case study on evaluating personality trait expression in LLM outputs. The overarching topic encompasses methods, benchmarks, and theoretical perspectives on the extent to which LLMs can be imbued with or simulate individual personalities—whether for user modeling, system role-playing, or assessment of socio-empathic behavior.

1. Definition and Scope

PersonaLLM is primarily concerned with the ability of LLMs to express, simulate, or adapt to personality traits, especially those defined by formal psychological models (notably the Big Five: Extraversion, Agreeableness, Conscientiousness, Neuroticism, Openness to Experience). The nomenclature "PersonaLLM" was formalized in the first comprehensive case study investigating LLM personality simulation using forced trait assignment and standardized psychometric instruments (Jiang et al., 2023).

The concept covers:

- Simulation of personality by LLMs via prompt conditioning or fine-tuning,

- Objective evaluation of trait manifestation in LLM-generated outputs,

- The intersection of LLM persona simulation with user personalization paradigms and conversational agent design.

2. Experimental Methodology: The PersonaLLM Case Study

The seminal PersonaLLM study proposes a rigorous experimental approach to investigating personality simulation in LLMs:

- Persona Construction: LLMs (GPT-3.5, GPT-4) are conditioned via prompts specifying binary Big Five values (e.g., “You are introverted, agreeable…”). To cover the full trait space, all 2^5 = 32 combinations of the five binary traits are instantiated, with multiple random seeds producing 10 samples per combination (320 personas per model).

- Psychometric Testing: Each persona completes the 44-item Big Five Inventory (BFI), responding strictly in the “(x) y” format for each Likert statement.

- Creative Task: Personas write 800-word stories triggered by the prompt: “Please share a personal story in 800 words. Do not explicitly mention your personality traits in the story.” This instruction is used to elicit implicit trait expression in naturalistic, narrative output.
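
The persona construction step above can be sketched as follows. This is a minimal illustration, not the study's actual code: the adjective pairs in `TRAIT_POLES`, the prompt wording, and the function name `build_persona_prompts` are assumptions made for the example. Only the counts (32 combinations, 10 samples each, 320 personas per model) come from the description above.

```python
from itertools import product

# Hypothetical adjective pairs (low pole, high pole) for each Big Five trait.
# The study's exact prompt wording may differ.
TRAIT_POLES = {
    "extraversion": ("introverted", "extroverted"),
    "agreeableness": ("antagonistic", "agreeable"),
    "conscientiousness": ("unconscientious", "conscientious"),
    "neuroticism": ("emotionally stable", "neurotic"),
    "openness": ("closed to experience", "open to experience"),
}


def build_persona_prompts(samples_per_type=10):
    """Enumerate every binary trait combination and repeat it per sample."""
    personas = []
    for levels in product((0, 1), repeat=len(TRAIT_POLES)):
        adjectives = [
            poles[level] for poles, level in zip(TRAIT_POLES.values(), levels)
        ]
        prompt = "You are " + ", ".join(adjectives) + "."
        for sample_id in range(samples_per_type):
            personas.append(
                {
                    "levels": dict(zip(TRAIT_POLES, levels)),
                    "sample_id": sample_id,
                    "prompt": prompt,
                }
            )
    return personas


personas = build_persona_prompts()
print(len(personas))           # total personas for one model
print(personas[0]["prompt"])   # prompt for the all-low combination
```

Each dictionary keeps the assigned binary levels alongside the prompt, so later analyses can compare the assignment with measured trait scores.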

3. Evaluation Protocols

A. Automatic Analyses

- Self-Report Consistency: BFI responses from each LLM persona are aggregated and trait scores are computed.

- Statistical Testing: Paired t-tests and Cohen’s d effect sizes quantify the correspondence between prompted assignment and model-inferred trait.

- Linguistic Profiling: LIWC-22 (Linguistic Inquiry and Word Count) is applied to all stories to extract 81 psycholinguistic features; point-biserial correlations between trait assignment and LIWC features are computed and compared with those obtained from a human-authored corpus (Essays dataset).
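
As a rough sketch of the self-report analysis, the snippet below scores one trait from Likert responses and computes Cohen's d between personas assigned the high and low pole of that trait. Several details are assumptions for illustration: the 1 to 5 response scale with reverse-keyed items flipped as `6 - x`, the pooled-standard-deviation form of Cohen's d, the item numbers, and the toy scores. None of these are taken from the study's data.

```python
from statistics import mean, variance


def score_trait(responses, items, reverse_items=()):
    """Average 1-5 Likert responses over a trait's items.

    Reverse-keyed items are flipped as 6 - x (assumes a 5-point scale).
    """
    values = [
        6 - responses[i] if i in reverse_items else responses[i] for i in items
    ]
    return mean(values)


def cohens_d(group_a, group_b):
    """Cohen's d using the pooled sample standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / pooled_var ** 0.5


# Hypothetical responses for a two-item trait; item 2 is reverse-keyed.
example_responses = {1: 5, 2: 1}
print(score_trait(example_responses, items=[1, 2], reverse_items={2}))

# Toy trait scores for personas assigned "high" vs "low" on one trait.
high_scores = [4.0, 5.0, 6.0]
low_scores = [1.0, 2.0, 3.0]
print(cohens_d(high_scores, low_scores))
```

A large positive d means the personas assigned the high pole report much higher scores than those assigned the low pole, which is the pattern the study looks for.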

B. Human and LLM-Based Story Evaluation

- Crowd Annotation: Five native English-speaking annotators (recruited via Prolific, US-based) rate stories for readability, personalness, redundancy, cohesiveness, likeability, and believability (1–5 Likert scale). Each annotator also infers the author’s Big Five traits from the text.

- Experimental Conditions: Raters are either told or not told that the author is an LLM. Trait inference and story ratings are compared for both conditions to assess the effect of AI authorship disclosure.

- Majority Voting: Aggregates trait predictions for story-level majority accuracy.

- LLM Judging: GPT-3.5 and GPT-4 are also used as automated raters (zero-temperature for reproducibility) using the same rating templates.
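
The difference between individual accuracy and story-level majority accuracy can be illustrated with a small sketch. The label strings, the function names, and the toy annotations are hypothetical. The example assumes binary labels and an odd number of annotators per story, so ties cannot occur.

```python
from collections import Counter


def majority_vote(labels):
    """Return the most frequent label among one story's annotators."""
    return Counter(labels).most_common(1)[0][0]


def individual_accuracy(annotations, assigned):
    """Fraction of all single annotator judgments matching the assigned trait."""
    correct = sum(
        label == truth
        for labels, truth in zip(annotations, assigned)
        for label in labels
    )
    total = sum(len(labels) for labels in annotations)
    return correct / total


def majority_accuracy(annotations, assigned):
    """Fraction of stories whose majority label matches the assigned trait."""
    hits = sum(
        majority_vote(labels) == truth
        for labels, truth in zip(annotations, assigned)
    )
    return hits / len(assigned)


# Toy data: three stories, five annotators each, one trait (e.g. Extraversion).
annotations = [
    ["high", "high", "low", "high", "low"],
    ["low", "low", "low", "high", "low"],
    ["high", "low", "low", "high", "low"],
]
assigned = ["high", "low", "high"]

print(individual_accuracy(annotations, assigned))
print(majority_accuracy(annotations, assigned))
```

Majority voting can exceed individual accuracy because isolated annotator errors are outvoted, which matches the gap between the two figures reported for Extraversion in the findings.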

4. Empirical Findings

A. Trait Simulation Consistency

- Self-Reported Traits: LLM personas’ BFI scores substantially and significantly align with their assigned trait values, with large effect sizes (GPT-4 Cohen’s d: Extraversion=5.47, Agreeableness=4.22, etc.).

- Linguistic Markers: LLM stories display trait-linked linguistic markers consistent with human patterns—e.g., Extraversion aligning with positive affect and social affiliation words; Neuroticism with mental health and negative emotion vocabulary.

- GPT-4 exhibits closer alignment with human baselines, particularly for Conscientiousness and Openness.

B. Human Perception

- Trait Detection: Humans can reliably infer major traits, especially Extraversion (individual accuracy 0.68, majority vote 0.84), and to a lesser degree, Agreeableness. Performance drops for more subtle traits (e.g., Openness).

- Effect of Disclosure: When annotators know content is AI-generated, their accuracy in trait detection decreases, and personalness ratings drop (from 4.32 to 3.99 on a 5-point scale).

- Correlation with Model BFI: Spearman's ρ: Extraversion (0.64), Agreeableness (0.33), demonstrating significant trait-perception alignment.

- AI Evaluation Bias: GPT-4 as judge shows self-preference, rating its own stories as more likeable and personal than humans do.
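
For readers unfamiliar with the rank correlation mentioned above, the following is a minimal, dependency-free sketch of Spearman's ρ: values are converted to average ranks (ties share a rank) and a Pearson correlation is computed on the ranks. The function names and the toy numbers are illustrative assumptions and do not reproduce the study's data.

```python
from statistics import mean


def average_ranks(values):
    """Assign 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = shared_rank
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy example: persona BFI scores vs. human-perceived trait ratings.
bfi_scores = [1.5, 2.0, 3.5, 4.5]
perceived = [2, 1, 4, 5]
print(spearman_rho(bfi_scores, perceived))
```

In practice a statistics library would normally be used instead; the hand-written version is only meant to make the computation explicit.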

C. Comparison with Human Authored Stories

- Readability and Style: LLM stories are rated as highly readable and cohesive by both human and LLM judges, but are viewed as less “personal” and likeable compared to human-authored ones.

- Stereotypicality: LLMs sometimes exhibit over-saturation of prototypical trait markers relative to humans, reflecting both the strengths and limitations of prompt-based persona conditioning.

5. Representative Patterns and Linguistic Correlates

A sample of trait–linguistic feature relationships (via LIWC correlation analysis):

| Trait | Positive Correlates (LLM, Humans) | Negative Correlates |
|---|---|---|
| EXT | Social/affiliation lexicon, positive affect words | Solitary/introverted terms |
| AGR | Prosocial lexicon, cooperative tone | Conflict, rejection words |
| CON | Achievement, work, order | Neglect, chaos |
| NEU | Anxiety, negative emotion, health | Stability, positive tone |
| OPN | Curiosity, insight, vision-related | Conventional, factual style |

6. Implications and Significance

- Prompt Conditioning Validity: LLMs can simulate personality traits robustly at the behavioral and linguistic level with simple prompt assignment, albeit with nuances in depth and realism compared to humans.

- Human Perception Sensitivity: Human detection of personality traits in text is highly sensitive to beliefs about author identity; AI-authored stories are seen as less “personable” when disclosure is present.

- Evaluation Relevance: The PersonaLLM experimental design is now foundational for assessing LLMs' capacity for personality simulation and for benchmarking "personification" tasks in conversational AI.

- Ethical Considerations: The ability of LLMs to convincingly exhibit personalities (especially when not disclosed as AI) has direct implications for trust, authenticity, and user interaction in socio-empathic AI deployments.

7. Limitations and Research Directions

- Model Constraints: In open LLMs (e.g., LLaMA-2), trait simulation is less fluent and marked by unnatural outputs compared to closed LLMs (GPT-4).

- Trait Subtlety: Nuanced or intermediate trait mixtures remain challenging for both detection and reliable simulation.

- Cross-Language Generality: Experiments have been almost exclusively in English; cross-linguistic generalization is untested.

- Dynamic Persona Modeling: Most evaluation is for static, prompted personas; dynamic, interactive adaptation remains to be explored fully.

PersonaLLM, as defined in Jiang et al. (2023), establishes that contemporary LLMs can simulate major personality traits through prompt engineering, both explicitly in self-report and implicitly in generated text. The framework’s empirical and psycholinguistic methodologies provide a robust template for subsequent research into computational personification, trait-adaptive conversational AI, and the ethical frontiers of human-AI interaction.