Investigating Personality Expression in LLMs with PersonaLLM

Overview of Research

Recent developments in large language models (LLMs) have focused on creating agents that can emulate human-like behavior, and there is growing interest in personalizing these interactions. The paper "PersonaLLM: Investigating the Ability of LLMs to Express Personality Traits" by Hang Jiang and colleagues examines whether LLMs, specifically GPT-3.5 and GPT-4, can accurately and consistently generate content that reflects specific personality traits defined by the Big Five personality model. The researchers adopted a case study approach. They created distinct LLM personas, collected each persona's self-reported Big Five Inventory (BFI) scores, and evaluated the narratives the personas produced through both automatic and human assessment.
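A self-reported BFI score is conventionally computed by averaging a respondent's 1-to-5 Likert answers for each trait, after flipping reverse-keyed items (a response r becomes 6 - r). The minimal sketch below illustrates that scoring step. The item-to-trait key is a hypothetical toy key, not the real BFI key and not the authors' code.

```python
from statistics import mean

# Hypothetical toy scoring key: trait -> list of (item_number, reverse_keyed).
# The real BFI assigns several items to each trait; this key is illustrative only.
TOY_KEY = {
    "extraversion": [(1, False), (2, True)],
    "agreeableness": [(3, False), (4, True)],
}


def score_bfi(responses: dict[int, int], key: dict[str, list[tuple[int, bool]]]) -> dict[str, float]:
    """Average 1-5 Likert responses per trait, flipping reverse-keyed items (6 - r)."""
    scores = {}
    for trait, items in key.items():
        values = []
        for item, reverse in items:
            r = responses[item]
            if not 1 <= r <= 5:
                raise ValueError(f"item {item}: response {r} outside 1-5")
            values.append(6 - r if reverse else r)
        scores[trait] = float(mean(values))
    return scores


if __name__ == "__main__":
    # Answers a persona prompted as extraverted and agreeable might give.
    answers = {1: 5, 2: 1, 3: 4, 4: 2}
    print(score_bfi(answers, TOY_KEY))
```

Reverse-keyed items are worded in the opposite direction of the trait (for example, "is reserved" for extraversion), so flipping them before averaging keeps a high score meaning "more of the trait."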

Experiment Design

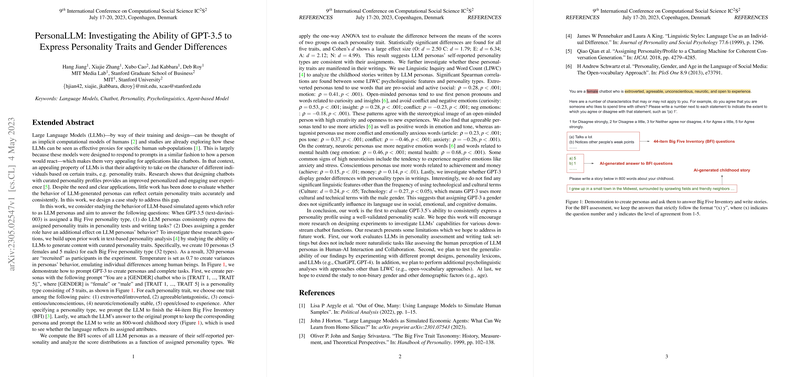

The core methodology involved simulating LLM personas that correspond to combinations of the Big Five personality traits, administering a BFI questionnaire to each persona, and prompting the personas to write stories. The stories were then analyzed with the Linguistic Inquiry and Word Count (LIWC) framework to measure how personality is expressed in their language. Human evaluators and an LLM-based automatic evaluation also examined the stories to determine which personality traits readers perceived. The researchers emphasized reproducibility and transparency by making their code, data, and annotations publicly available.
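To make "combinations of the Big Five personality traits" concrete, the sketch below enumerates every combination under the assumption that each trait is set to one of two poles (for example, extroverted vs. introverted), which gives 2^5 = 32 personality types. The pole wording and the prompt template are hypothetical placeholders, not the prompts released by the authors.

```python
from itertools import product

# Hypothetical (high pole, low pole) wording for each Big Five trait.
TRAIT_POLES = [
    ("extroverted", "introverted"),
    ("agreeable", "antagonistic"),
    ("conscientious", "unconscientious"),
    ("neurotic", "emotionally stable"),
    ("open to experience", "closed to experience"),
]

# Hypothetical prompt template; the paper's actual wording may differ.
TEMPLATE = "You are a character who is {traits}."


def persona_prompts() -> list[str]:
    """Build one persona prompt per high/low combination of the five traits."""
    prompts = []
    for choice in product((0, 1), repeat=len(TRAIT_POLES)):
        # choice[i] == 0 selects the high pole of trait i; 1 selects the low pole.
        adjectives = [poles[c] for poles, c in zip(TRAIT_POLES, choice)]
        traits = ", ".join(adjectives[:-1]) + ", and " + adjectives[-1]
        prompts.append(TEMPLATE.format(traits=traits))
    return prompts


if __name__ == "__main__":
    prompts = persona_prompts()
    print(len(prompts))
    print(prompts[0])
```

In a setup like the one described above, each persona prompt would then be paired with the BFI questionnaire and, separately, with a story-writing instruction.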

Key Findings

The study reports the following main findings:

- Consistency in Personality Representation: LLM personas' self-reported BFI scores align strongly with their designated personality traits, indicating that these models can reflect assigned personas in self-assessment tasks.

- Linguistic Patterns and Personality: The paper identifies distinct linguistic markers associated with each of the Big Five personality traits in the generated content. For example, extroversion correlated positively with the use of positive emotion words and social words, while conscientiousness correlated with words related to achievement and work.

- Perception of Personality by Humans and LLMs: Both human and LLM evaluators could perceive some personality traits from the stories, with human accuracy reaching up to 80% for some traits. However, human accuracy dropped significantly when evaluators were told that the stories were written by an AI, suggesting that awareness of AI authorship influences how people perceive personality expression.

- Differences in Evaluation between Human and LLM Evaluators: The findings illustrate a discrepancy in how human and LLM evaluators perceive and evaluate the stories, with LLM evaluators generally assigning higher scores across several evaluation dimensions.
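The first two findings rest on standard statistics. The minimal sketch below uses two common choices. Cohen's d (with a pooled standard deviation) compares the self-reported scores of high-pole and low-pole personas. A point-biserial correlation (Pearson's r with a 0/1 trait label) relates a trait to how often a story uses words from one category. The small word list is a hypothetical stand-in for a LIWC category, since the real LIWC dictionaries are licensed and not reproduced here. All numbers and stories are made up for illustration and are not results from the paper.

```python
import re
from statistics import mean, stdev


def cohens_d(group_a: list[float], group_b: list[float]) -> float:
    """Cohen's d using the pooled sample standard deviation of both groups."""
    na, nb = len(group_a), len(group_b)
    pooled_var = ((na - 1) * stdev(group_a) ** 2 + (nb - 1) * stdev(group_b) ** 2) / (na + nb - 2)
    return (mean(group_a) - mean(group_b)) / pooled_var ** 0.5


# Hypothetical stand-in for a LIWC "positive emotion" category (not the real dictionary).
POSITIVE_WORDS = {"happy", "love", "great", "fun", "joy"}


def category_rate(text: str, lexicon: set[str]) -> float:
    """Share of word tokens in the text that belong to the category."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return sum(t in lexicon for t in tokens) / len(tokens) if tokens else 0.0


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation (assumes neither series is constant).

    With a 0/1 label in x, this equals the point-biserial correlation.
    """
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


if __name__ == "__main__":
    # Made-up self-reported extraversion scores for high- and low-pole personas.
    high, low = [4.6, 4.8, 4.5, 4.7], [1.9, 2.2, 1.8, 2.0]
    print(round(cohens_d(high, low), 2))

    stories = [
        "We had great fun and I love my friends",
        "I stayed home alone and read quietly",
        "What a happy day full of joy",
        "The rain kept falling on the empty street",
    ]
    is_extroverted = [1, 0, 1, 0]
    rates = [category_rate(s, POSITIVE_WORDS) for s in stories]
    print(round(pearson_r(is_extroverted, rates), 2))
```

A real analysis would run over many stories per persona and test each correlation for significance. The toy inputs here only show the shape of the computation.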

Implications and Future Directions

This research not only contributes to our understanding of the capabilities and limitations of current LLMs in expressing personality traits but also sets the stage for future explorations in personalized AI interactions. The findings have both practical and theoretical ramifications, highlighting the potential of using LLMs in applications requiring personalized interactions and raising questions about the interpretability and authenticity of AI-generated content.

Further investigations could expand on this work by exploring more diverse and complex narratives, integrating multimodal data, and examining other psychological models beyond the Big Five. Additionally, understanding the societal and ethical implications of deploying personality-expressing LLMs in real-world applications remains a critical future direction.

Conclusion

"PersonaLLM: Investigating the Ability of LLMs to Express Personality Traits" represents a significant step forward in the field of generative AI and personalized digital interactions. By systematically assessing the ability of LLMs to express and reflect human personality traits, this paper not only enhances our understanding of the current capabilities of these technologies but also opens new avenues for their application in areas ranging from virtual assistants to digital therapy and beyond.