OpenVLA: Open Source VLA for Robotics

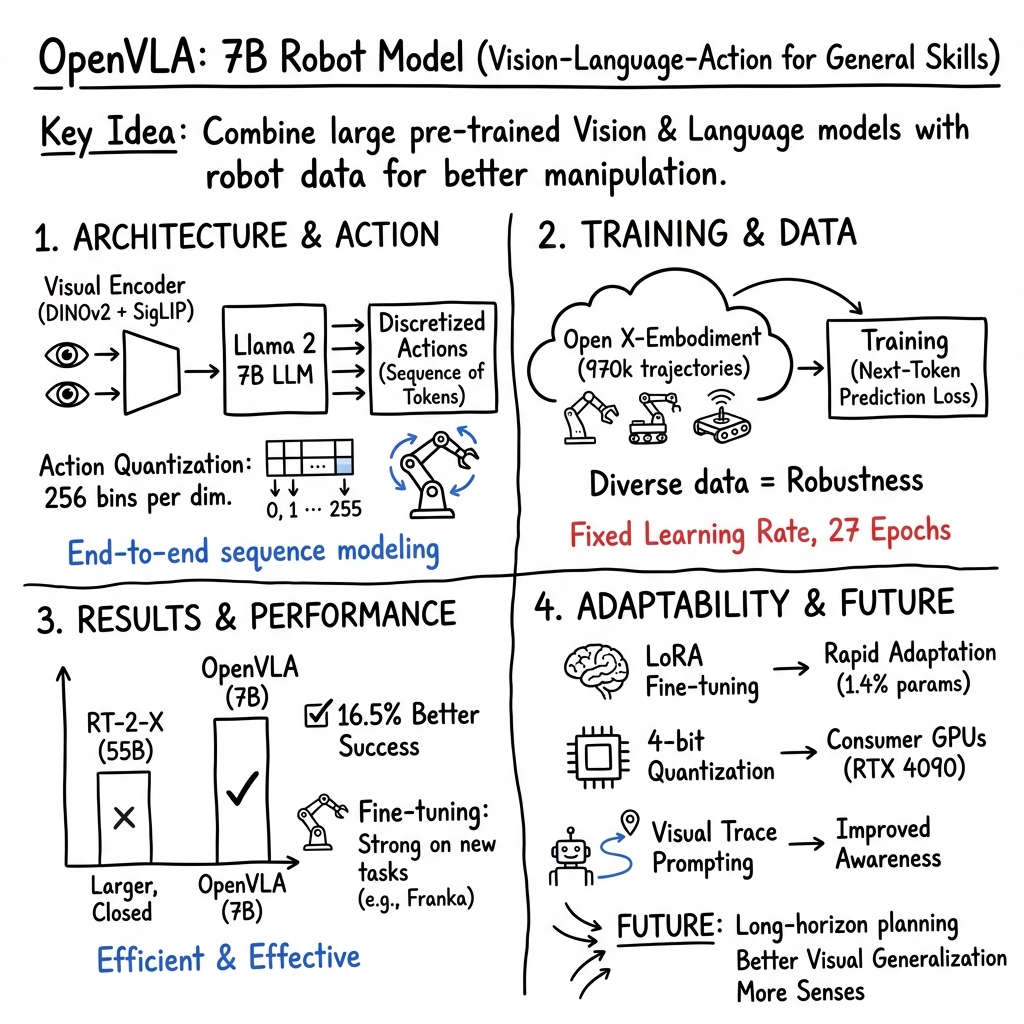

- OpenVLA is a 7-billion-parameter Vision-Language-Action model that combines pretrained visual encoders and a large language model with real-world robot data for generalist manipulation.

- The model is trained on nearly 1 million robot trajectories spanning 70+ domains, represents actions as discrete tokens, and supports efficient LoRA fine-tuning.

- Empirical results show that OpenVLA achieves higher success rates and greater robustness in multi-object tasks, outperforming larger closed models and standard imitation learners.

OpenVLA is a 7-billion-parameter open-source Vision-Language-Action (VLA) model designed to advance generalist robot manipulation by combining vision and language models pretrained on internet-scale data with extensive real-world robot demonstration data. Its architecture, training methodology, and empirical performance establish new baselines in robustness, generalization, and computational efficiency for robotic policy learning.

1. Architecture and Action Decoding

OpenVLA integrates a Llama 2 7B LLM backbone with a visual encoder that fuses features from DINOv2 (for spatial fidelity) and SigLIP (for semantic discrimination). Each incoming RGB image is split into patches; both encoders process the image, and their per-patch feature vectors are concatenated channel-wise. These fused patch features are projected into the LLM embedding space by a two-layer MLP projector, so each patch becomes one input token. The model processes the resulting sequence of vision (patch-as-token), language, and discretized action tokens and is trained with an autoregressive next-token prediction loss.
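The fusion-and-projection step can be sketched with toy dimensions. This is a minimal illustration, not the OpenVLA implementation: it assumes tiny hypothetical feature sizes (real DINOv2, SigLIP, and Llama 2 embeddings are far wider), random placeholder weights, and a GELU nonlinearity between the two MLP layers. The function names are hypothetical.

```python
import math
import random

def gelu(x: float) -> float:
    # Exact GELU using the Gaussian CDF via erf (nonlinearity choice is an assumption).
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def linear(x, weight, bias):
    # weight has shape (out_dim, in_dim); x has length in_dim.
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]

def random_layer(in_dim, out_dim, rng):
    # Placeholder weights; a trained projector would load learned values.
    weight = [[rng.gauss(0.0, 0.02) for _ in range(in_dim)] for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weight, bias

def project_patches(dino_feats, siglip_feats, layer1, layer2):
    """Fuse per-patch features channel-wise, then map each patch into the LLM embedding space."""
    tokens = []
    for d, s in zip(dino_feats, siglip_feats):
        fused = d + s  # list concatenation = channel-wise concat of the two encoders
        hidden = [gelu(h) for h in linear(fused, *layer1)]
        tokens.append(linear(hidden, *layer2))
    return tokens

# Hypothetical toy sizes: 4 patches, 3-dim DINOv2 and 5-dim SigLIP features, 8-dim LLM embeddings.
rng = random.Random(0)
num_patches, dino_dim, siglip_dim, llm_dim = 4, 3, 5, 8
dino = [[rng.random() for _ in range(dino_dim)] for _ in range(num_patches)]
siglip = [[rng.random() for _ in range(siglip_dim)] for _ in range(num_patches)]
layer1 = random_layer(dino_dim + siglip_dim, llm_dim, rng)
layer2 = random_layer(llm_dim, llm_dim, rng)

vision_tokens = project_patches(dino, siglip, layer1, layer2)
print(len(vision_tokens), len(vision_tokens[0]))
```

Each patch yields one embedding, which occupies one position in the LLM input sequence alongside the language and action tokens.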

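Actions, which originally reside in a continuous space $\mathcal{A} \subseteq \mathbb{R}^D$ (e.g., 3D position, orientation, gripper open/close), are discretized into 256 bins per dimension. For dimension $i$, the bins uniformly divide the interval between the 1st and 99th percentiles of that dimension in the training data, $[q^{(i)}_{0.01}, q^{(i)}_{0.99}]$, which keeps the discretization robust to outlier actions:

$$
b_i = \min\left( \left\lfloor 256 \cdot \frac{\operatorname{clip}\left(a_i,\, q^{(i)}_{0.01},\, q^{(i)}_{0.99}\right) - q^{(i)}_{0.01}}{q^{(i)}_{0.99} - q^{(i)}_{0.01}} \right\rfloor,\ 255 \right), \qquad b_i \in \{0, \dots, 255\}.
$$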

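Because the Llama tokenizer reserves only 100 special tokens, too few for 256 new action tokens, OpenVLA overwrites the 256 least frequently used tokens in the Llama vocabulary with action tokens. Standard next-token prediction therefore directly yields control outputs. For a $D$-dimensional action vector $a = (a_1, \dots, a_D)$, each $a_i$ is discretized independently, and the resulting $D$ action tokens are predicted autoregressively as a single sequence.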
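A minimal sketch of the per-dimension discretization and its inverse, assuming uniform bins between per-dimension percentile bounds and a 32,000-token vocabulary whose last 256 IDs are reused for action bins. The exact bin-to-token-ID mapping, the example bounds, and the helper names are illustrative assumptions rather than the OpenVLA code; decoding returns each bin's center.

```python
from typing import List, Sequence

N_BINS = 256
VOCAB_SIZE = 32_000  # Llama 2 tokenizer vocabulary size
# Assumption: bin k maps to token ID VOCAB_SIZE - N_BINS + k (the last 256 IDs).
ACTION_TOKEN_OFFSET = VOCAB_SIZE - N_BINS

def encode_action(action: Sequence[float], low: Sequence[float], high: Sequence[float]) -> List[int]:
    """Map each continuous action dimension to one of N_BINS uniform bins, then to a token ID."""
    token_ids = []
    for a, lo, hi in zip(action, low, high):
        a = min(max(a, lo), hi)                        # clip outliers to the percentile bounds
        frac = (a - lo) / (hi - lo)                    # normalize to [0, 1]
        bin_idx = min(int(frac * N_BINS), N_BINS - 1)  # uniform bin index in [0, 255]
        token_ids.append(ACTION_TOKEN_OFFSET + bin_idx)
    return token_ids

def decode_action(token_ids: Sequence[int], low: Sequence[float], high: Sequence[float]) -> List[float]:
    """Invert the mapping by returning the center of each predicted bin."""
    action = []
    for tok, lo, hi in zip(token_ids, low, high):
        bin_idx = tok - ACTION_TOKEN_OFFSET
        width = (hi - lo) / N_BINS
        action.append(lo + (bin_idx + 0.5) * width)
    return action

# Hypothetical 7-D action (xyz delta, rotation delta, gripper); bounds stand in for 1st/99th percentiles.
low = [-0.05] * 6 + [0.0]
high = [0.05] * 6 + [1.0]
action = [0.01, -0.02, 0.0, 0.03, -0.05, 0.049, 1.0]
tokens = encode_action(action, low, high)
recovered = decode_action(tokens, low, high)
print(tokens)
```

The round trip loses at most half a bin width per dimension, which is the precision cost of treating actions as tokens.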

2. Training Methodology and Data Diversity

OpenVLA is fine-tuned on 970,000 real-world robot manipulation trajectories sourced from more than 70 domains within the Open X-Embodiment dataset. This data encompasses a wide range of embodiments (e.g., WidowX, Franka, mobile platforms), sensory layouts, and task variations. The model's training pipeline applies rigorous episode curation, stratified sampling, and explicit filtering of degenerate transitions (e.g., all-zero actions).
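The filtering and sampling steps can be illustrated with a small sketch. It drops all-zero action transitions from an episode and then draws training examples from datasets in proportion to per-dataset weights, which is one simple way to stratify sampling by data source. The dataset names, weights, and helper functions are hypothetical placeholders, not the actual Open X-Embodiment mixture used by OpenVLA.

```python
import random
from typing import Dict, List, Sequence

Transition = Dict[str, List[float]]

def drop_zero_actions(episode: Sequence[Transition]) -> List[Transition]:
    """Remove degenerate transitions whose action vector is entirely zero."""
    return [step for step in episode if any(a != 0.0 for a in step["action"])]

def sample_datasets(weights: Dict[str, float], num_samples: int, seed: int = 0) -> List[str]:
    """Draw dataset names in proportion to (unnormalized) mixture weights."""
    rng = random.Random(seed)
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=num_samples)

episode = [{"action": [0.0] * 7}, {"action": [0.1] + [0.0] * 6}, {"action": [0.0] * 7}]
clean = drop_zero_actions(episode)

# Hypothetical names and weights; a zero weight excludes a dataset entirely.
mixture = {"dataset_a": 1.0, "dataset_b": 2.0, "dataset_c": 0.0}
draws = sample_datasets(mixture, 1000)
print(len(clean), {name: draws.count(name) for name in mixture})
```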

Training uses a fixed learning rate, the same one used for pretraining the underlying VLM, and runs for 27 epochs. The objective is the standard next-token cross-entropy loss over the joint sequence of vision, language, and action tokens. To balance task- and embodiment-specific distributions, the training mixture is reweighted following the data-mixture approach of the Octo policy.
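A minimal sketch of the cross-entropy objective over an already-shifted token sequence. The boolean mask is an assumption added for illustration: it lets a training loop restrict the loss to a subset of positions (for example, only the action tokens) while the model still conditions on the full vision-language-action sequence. Pure Python is used for readability; a real training loop would use a framework's batched cross-entropy.

```python
import math
from typing import Sequence

def masked_next_token_loss(logits: Sequence[Sequence[float]], targets: Sequence[int],
                           loss_mask: Sequence[bool]) -> float:
    """Mean cross-entropy over positions where loss_mask is True.

    logits[t] scores the token expected at position t; targets are already shifted.
    """
    total, count = 0.0, 0
    for row, target, keep in zip(logits, targets, loss_mask):
        if not keep:
            continue
        m = max(row)
        log_z = m + math.log(sum(math.exp(v - m) for v in row))  # stable log-sum-exp
        total += log_z - row[target]                             # -log softmax(row)[target]
        count += 1
    if count == 0:
        raise ValueError("loss_mask selects no positions")
    return total / count

# Toy example: vocabulary of 4; the first position is a language token excluded from the loss.
logits = [[2.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 5.0]]
targets = [0, 1, 3]
mask = [False, True, True]
loss = masked_next_token_loss(logits, targets, mask)
print(round(loss, 4))
```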

3. Comparative Performance and Empirical Results

OpenVLA achieves superior results across diverse manipulation tasks and platforms:

- Outperforms the closed RT-2-X by an absolute 16.5% in task success rate despite having far fewer parameters (7B vs. 55B).

- Surpasses generalist policies such as RT-1-X and Octo, particularly in multi-object environments and tasks that require grounding language instructions in the visual scene.

- When fine-tuned for new setups (Franka-Tabletop, Franka-DROID), OpenVLA performs strongly and outperforms from-scratch imitation learning methods such as Diffusion Policy by 20.4%.

Performance on benchmark suites such as the LIBERO and TOTO tasks shows consistently low normalized error rates except in outlier domains, supporting the model’s robustness but also highlighting some specialization to task distributions observed during training (Guruprasad et al., 2024).

4. Generalization and Fine-Tuning Strategies

OpenVLA demonstrates notable adaptability:

- Both full-model and parameter-efficient fine-tuning (e.g., Low-Rank Adaptation, LoRA) enable rapid adaptation. LoRA updates only 1.4% of all parameters yet matches the performance of full fine-tuning, which makes adaptation feasible on limited hardware.

- The model’s strong grounding in multi-object and multi-instruction settings is empirically validated by paired scene evaluations, where OpenVLA reliably responds to varied scene contexts and linguistic instructions.

- Quantization down to 4 bits enables inference on consumer GPUs (e.g., RTX 4090) with a negligible drop in downstream task success.
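To see why LoRA touches only a small fraction of the weights, consider a single linear layer. LoRA freezes the pretrained weight $W \in \mathbb{R}^{d_{out} \times d_{in}}$ and learns a low-rank update $BA$ with $B \in \mathbb{R}^{d_{out} \times r}$ and $A \in \mathbb{R}^{r \times d_{in}}$, adding $r(d_{in} + d_{out})$ trainable parameters. The sketch below computes that count and applies the forward pass $y = Wx + \frac{\alpha}{r} BAx$. The layer size and the rank of 32 are illustrative assumptions; the per-layer fraction is not meant to reproduce the whole-model 1.4% figure, which depends on which layers are adapted.

```python
from typing import List, Sequence

Matrix = List[List[float]]

def matvec(m: Sequence[Sequence[float]], x: Sequence[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, x)) for row in m]

def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in the low-rank factors A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)

def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Sequence[float], alpha: float) -> List[float]:
    """y = W x + (alpha / r) * B (A x); W stays frozen, only A and B are trained."""
    rank = len(A)
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / rank) * u for b, u in zip(base, update)]

# One 4096 x 4096 projection at a hypothetical rank of 32.
full = 4096 * 4096
lora = lora_trainable_params(4096, 4096, 32)
print(lora, full, f"{100 * lora / full:.2f}%")
```

Standard LoRA initializes $B$ to zero, so the adapted layer starts out identical to the pretrained one and fine-tuning departs from it gradually.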

Visual trace prompting, introduced in TraceVLA (which overlays visual state-action histories on the input image), further improves spatial-temporal awareness, delivering up to 10% higher success in simulation and a 3.5× improvement on physical robots (Zheng et al., 2024).
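A minimal sketch of the overlay idea, assuming point tracks (pixel positions of tracked points over recent timesteps) are already available from some tracker. The grayscale list-of-lists image and the helper names are hypothetical, and TraceVLA's actual rendering and prompt format may differ. Each track is drawn as a polyline using Bresenham's line algorithm.

```python
from typing import List, Sequence, Tuple

Point = Tuple[int, int]  # (row, col) pixel coordinates

def bresenham(p0: Point, p1: Point) -> List[Point]:
    """Integer pixels on the segment from p0 to p1 (Bresenham's line algorithm)."""
    (r0, c0), (r1, c1) = p0, p1
    dr, dc = abs(r1 - r0), -abs(c1 - c0)
    sr = 1 if r0 < r1 else -1
    sc = 1 if c0 < c1 else -1
    err = dr + dc
    points = []
    while True:
        points.append((r0, c0))
        if (r0, c0) == (r1, c1):
            break
        e2 = 2 * err
        if e2 >= dc:
            err += dc
            r0 += sr
        if e2 <= dr:
            err += dr
            c0 += sc
    return points

def overlay_traces(image: List[List[int]], tracks: Sequence[Sequence[Point]], value: int = 255) -> List[List[int]]:
    """Return a copy of a grayscale image with each point track drawn as a polyline."""
    out = [row[:] for row in image]
    height, width = len(out), len(out[0])
    for track in tracks:
        for p0, p1 in zip(track, track[1:]):
            for r, c in bresenham(p0, p1):
                if 0 <= r < height and 0 <= c < width:
                    out[r][c] = value
    return out

image = [[0] * 5 for _ in range(5)]
traced = overlay_traces(image, [[(0, 0), (2, 2), (4, 4)]])
for row in traced:
    print(row)
```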

5. Computational Efficiency and Accessibility

Efficient computational design underpins OpenVLA:

- Inference requires about 15 GB of GPU memory in bfloat16 precision; quantization reduces this enough to fit on commodity GPUs.

- LoRA fine-tuning completes in practical timeframes (10–15 hours on a single A100 GPU).

- The open PyTorch codebase supports multi-node training, mixed precision, FlashAttention, and FSDP, and integrates with Hugging Face's AutoModel interface.

- Model checkpoints, fine-tuning notebooks, and training scripts are publicly released.
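A back-of-the-envelope check of the memory figures: weight memory is roughly parameter count × bits per parameter / 8. The sketch assumes about 7.5 billion parameters (the Llama 2 7B backbone plus the vision encoders and projector; the exact count is an assumption here) and counts weights only. Activations, the KV cache, and any modules left unquantized add to the measured footprint.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Weights-only memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

NUM_PARAMS = 7.5e9  # assumption: ~7B Llama 2 backbone plus vision encoders and projector

for label, bits in [("bfloat16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label:>8}: {weight_memory_gb(NUM_PARAMS, bits):.2f} GB")
```

The bfloat16 estimate lines up with the ~15 GB inference figure, and 4-bit weights alone need under 4 GB, well within the 24 GB of an RTX 4090.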

ADP (Action-aware Dynamic Pruning) further improves efficiency by adaptively pruning visual tokens according to robot motion phases and task semantics, yielding a 1.35× speedup and a 25.8% improvement in action success rate in OpenVLA-OFT settings (Pei et al., 26 Sep 2025).
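The sketch below is a hypothetical illustration of motion-aware visual-token pruning, not the ADP algorithm. It assumes each visual token already has an importance score (for example, derived from attention) and uses an invented gate that prunes more aggressively when recent end-effector motion is large and keeps every token during fine motion. The threshold, keep ratios, and function names are all assumptions.

```python
import math
from typing import List, Sequence

def keep_ratio_from_motion(recent_deltas: Sequence[Sequence[float]], threshold: float,
                           coarse_ratio: float = 0.5, fine_ratio: float = 1.0) -> float:
    """Hypothetical gate: prune during large (coarse) motions, keep all tokens during fine motion."""
    mean_speed = sum(math.sqrt(sum(d * d for d in delta)) for delta in recent_deltas) / len(recent_deltas)
    return coarse_ratio if mean_speed > threshold else fine_ratio

def prune_visual_tokens(scores: Sequence[float], keep_ratio: float) -> List[int]:
    """Indices of the highest-scoring visual tokens, returned in their original order."""
    k = max(1, round(len(scores) * keep_ratio))
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    return sorted(top)

scores = [0.1, 0.9, 0.3, 0.7, 0.2, 0.8]          # hypothetical per-token importance scores
fast = [[0.05, 0.0, 0.0], [0.04, 0.03, 0.0]]     # large end-effector deltas
slow = [[0.001, 0.0, 0.0]]                       # small end-effector deltas
print(prune_visual_tokens(scores, keep_ratio_from_motion(fast, threshold=0.01)))
print(prune_visual_tokens(scores, keep_ratio_from_motion(slow, threshold=0.01)))
```

Returning kept indices in their original order preserves the spatial layout of the surviving patch tokens in the sequence.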

6. Interpretability and Symbolic Integration

Experimental probing of OpenVLA’s LLM backbone reveals robust internal encoding of symbolic states (object relations, action status):

- Linear probes on hidden layers achieve 0.90 accuracy in decoding symbolic predicates, indicating that high-level environmental structures are accessible from activation vectors (Lu et al., 6 Feb 2025).

- Integration with cognitive architectures (e.g., DIARC) for real-time symbolic state monitoring is demonstrated, providing hybrid systems that combine pattern recognition and logical reasoning.
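A linear probe is simply a linear classifier trained on frozen hidden states. The sketch below fits a logistic-regression probe by full-batch gradient descent on synthetic 4-D "activations" in which a binary predicate is linearly encoded by construction, then reports held-out accuracy. The data, dimensions, and hyperparameters are hypothetical; this is not the probing setup of Lu et al.

```python
import math
import random
from typing import List, Sequence, Tuple

def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_linear_probe(xs: Sequence[Sequence[float]], ys: Sequence[int],
                       lr: float = 0.5, epochs: int = 200) -> Tuple[List[float], float]:
    """Fit logistic-regression weights on frozen activations with full-batch gradient descent."""
    dim, n = len(xs[0]), len(xs)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y  # dBCE/dz
            for j in range(dim):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def probe_accuracy(w: Sequence[float], b: float, xs, ys) -> float:
    preds = [1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0 for x in xs]
    return sum(p == y for p, y in zip(preds, ys)) / len(ys)

# Synthetic "activations" (hypothetical): the predicate shifts vectors along a fixed direction.
rng = random.Random(0)
direction = [1.0, -1.0, 0.5, 0.0]
xs, ys = [], []
for _ in range(200):
    y = 1 if rng.random() < 0.5 else 0
    sign = 1.0 if y else -1.0
    xs.append([rng.gauss(0.0, 0.5) + sign * d for d in direction])
    ys.append(y)

w, b = train_linear_probe(xs[:150], ys[:150])
print(f"held-out probe accuracy: {probe_accuracy(w, b, xs[150:], ys[150:]):.2f}")
```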

Mechanistic interpretability analyses show that specific transformer neurons correspond to semantic directions (e.g., “fast”, “up”), and activation steering can causally modulate robot behavior at inference time, yielding real-time behavioral control without retraining (Häon et al., 30 Aug 2025).
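Activation steering of this kind amounts to adding a scaled direction vector to a hidden state during the forward pass, leaving the weights untouched. The minimal sketch below builds the direction as a difference of mean activations between two behaviors, a common way to construct steering vectors that is not necessarily the procedure used by Häon et al.; all values and names are hypothetical.

```python
from typing import List, Sequence

def mean_vector(vectors: Sequence[Sequence[float]]) -> List[float]:
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def steering_direction(pos_acts: Sequence[Sequence[float]],
                       neg_acts: Sequence[Sequence[float]]) -> List[float]:
    """Difference of mean activations between two behaviors (e.g. 'fast' vs. 'slow' prompts)."""
    return [p - q for p, q in zip(mean_vector(pos_acts), mean_vector(neg_acts))]

def steer(hidden: Sequence[float], direction: Sequence[float], alpha: float) -> List[float]:
    """Shift a hidden state along the steering direction at inference time; weights are unchanged."""
    return [h + alpha * d for h, d in zip(hidden, direction)]

pos = [[1.0, 2.0], [3.0, 2.0]]   # hypothetical activations for the target behavior
neg = [[0.0, 1.0], [0.0, 3.0]]   # hypothetical activations for the contrasting behavior
direction = steering_direction(pos, neg)
print(direction, steer([0.5, 0.5], direction, alpha=0.5))
```

The scale `alpha` controls the strength of the intervention; setting it to zero recovers the unmodified model.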

Recent studies indicate that OpenVLA encodes an emergent internal world model: probes reveal latent knowledge of state transitions consistent with Koopman operator theory, which emerges as training progresses and can be analyzed at scale with Sparse Autoencoders (Molinari et al., 29 Sep 2025).

7. Limitations, Extensions, and Future Work

Challenges and research directions include:

- Visual generalization: Catastrophic forgetting in vision backbones during fine-tuning can limit OOD performance; gradual backbone reversal (ReVLA) restores robust visual representations, improving OOD manipulation metrics by 77% (grasp) and 66% (lift) (Dey et al., 2024).

- Sequential, long-horizon manipulation: Agentic Robot, using Standardized Action Procedures (SAP) with explicit planning and verification modules, outperforms OpenVLA by 7.4% in LIBERO-long tasks and is more reliable in error recovery (Yang et al., 29 May 2025).

- Data scaling and domain adaptation: ReBot’s real-to-sim-to-real synthetic video pipeline improves OpenVLA’s success rates by 21.8% (simulation) and 20% (real world), addressing data scarcity and generalization (Fang et al., 15 Mar 2025).

- Training efficiency and tokenization: Object-agent-centric tokenization in Oat-VLA reduces the number of visual tokens by 90%, converges over 2× faster, and achieves higher success rates than OpenVLA in both simulation and real-world pick/place tasks (Bendikas et al., 28 Sep 2025).

- Failure recovery: The FailSafe framework systematically generates failures and corrective actions; when integrated, OpenVLA’s success rates increase by up to 22.6% and the policy generalizes across spatial, viewpoint, and embodiment variations (Lin et al., 2 Oct 2025).
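The backbone-reversal idea from the visual-generalization item can be illustrated as weight interpolation. This minimal sketch assumes reversal is implemented by progressively interpolating fine-tuned vision-backbone weights toward the original pretrained weights (a form of model merging); the linear schedule and helper names are hypothetical, and ReVLA's exact procedure may differ.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]

def merge(finetuned: Weights, original: Weights, lam: float) -> Weights:
    """Linear interpolation (1 - lam) * finetuned + lam * original, per parameter tensor."""
    return {name: [(1 - lam) * f + lam * o for f, o in zip(finetuned[name], original[name])]
            for name in finetuned}

def reversal_schedule(num_steps: int, final_lam: float = 1.0) -> List[float]:
    """Hypothetical linear schedule that moves lam from near 0 to final_lam over num_steps."""
    return [final_lam * (i + 1) / num_steps for i in range(num_steps)]

finetuned = {"w": [1.0, 3.0]}  # hypothetical fine-tuned backbone weights
original = {"w": [0.0, 1.0]}   # hypothetical pretrained backbone weights
for lam in reversal_schedule(4):
    print(lam, merge(finetuned, original, lam)["w"])
```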

Future directions include incorporating additional sensory modalities (e.g., proprioception, multiple camera views), increasing inference frequency to support high-rate control, and investigating co-training with broader internet-scale data. Advances in attention mechanisms, token-pruning strategies, and interpretability frameworks are expected to further support robust, transparent deployment in diverse robotic environments.

OpenVLA sets a foundational precedent in the design of scalable, adaptable, and interpretable VLA models for robotics, combining rigorous architectural choices, data-diverse training, and extensible open-source tooling, while maintaining a trajectory of ongoing innovation and research expansion.