Meta-Cognitive Controls in AI

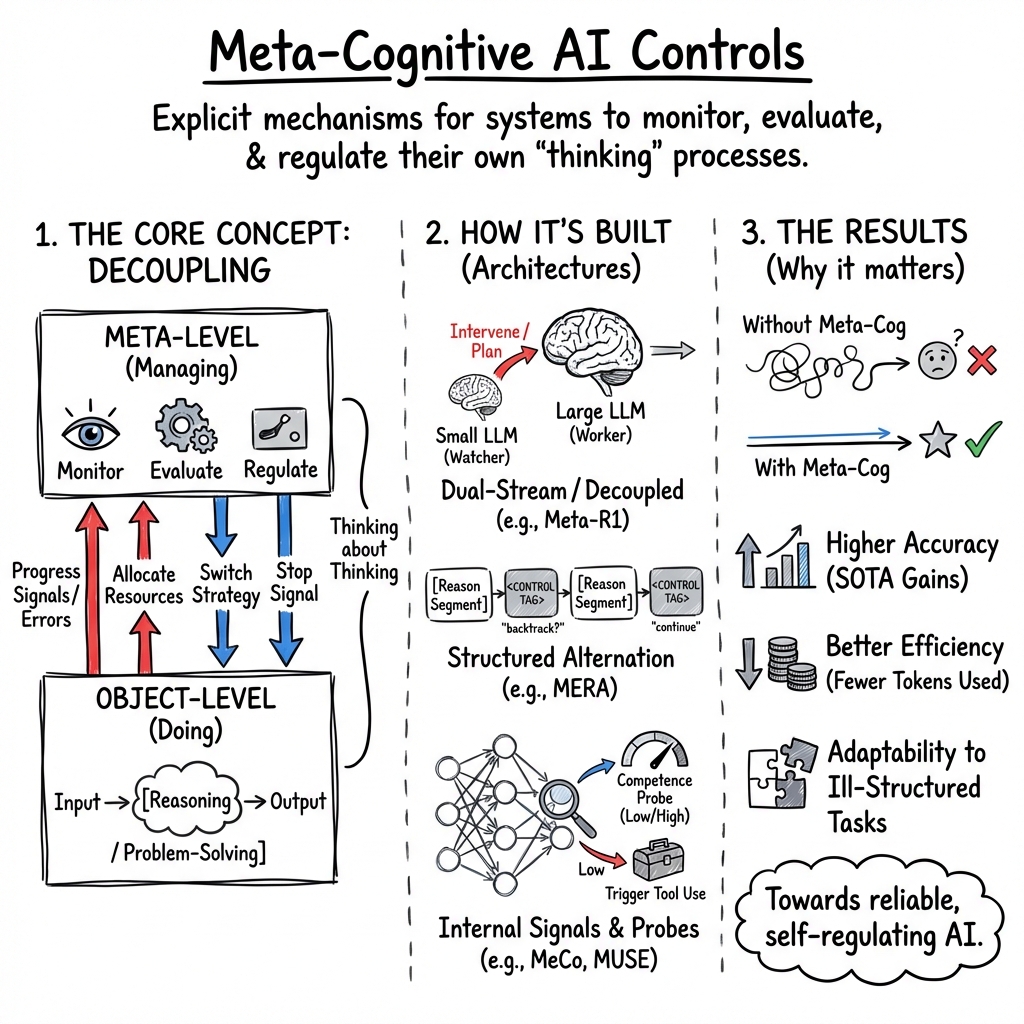

- Meta-Cognitive Controls are explicit mechanisms, separate from object-level reasoning, that enable AI systems to self-monitor and regulate their cognitive operations.

- They employ dual-level architectures, reinforcement learning, and probabilistic models to dynamically allocate resources, detect errors, and adapt strategies.

- These controls enhance performance by improving error detection, reducing token usage, and enabling efficient strategy switching in complex, multi-modal tasks.

Meta-cognitive controls are explicit mechanisms (architectural, algorithmic, or procedural) that enable an intelligent system to monitor, evaluate, and regulate its own cognitive processes. The notion originates in cognitive psychology, where metacognition is characterized as “thinking about thinking.” Meta-cognitive control separates “object-level” cognition (direct problem-solving or reasoning) from a “meta-level” layer. The meta-level layer allocates resources, monitors progress, diagnoses errors, issues corrective signals, and decides when to terminate or switch policies. Recent work formalizes meta-cognitive control across machine learning, reinforcement learning, knowledge editing, planning, hybrid-symbolic, and multi-modal architectures. This work reports gains in adaptivity, efficiency, robustness, and behavioral alignment with human reasoning.

1. Core Principles and Taxonomy

Meta-cognitive controls constitute an executive layer distinct from both the substrate representations and the elementary reasoning operations. Canonical meta-cognitive controls, summarized in recent taxonomies, notably include:

- Self-awareness: assessing the agent’s own knowledge state, uncertainty, and task solvability.

- Context awareness: monitoring external and situational factors (task, environment, agent roles) influencing the agent's behavior.

- Strategy selection: choosing reasoning or problem-solving strategies based on task requirements or internal/external feedback.

- Goal management: establishing, maintaining, and adaptively revising goals throughout a cognitive task.

- Evaluation: systematically assessing reasoning progress, efficiency, and correctness, issuing explicit or implicit signals for improvement or error detection.

These abstract controls are instantiated through architectural separations (object/meta modules), explicit meta-reasoning loops, introspective knowledge structures, competence predictors, or meta-level planners (Kargupta et al., 20 Nov 2025).
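The sketch below shows one way the five controls could sit in a layer that is separate from object-level reasoning. It maps each control to a method of a hypothetical `MetaLayer` interface and combines them in a single `regulate` pass. All class names, state keys, strategies, and signals are assumptions made for illustration. None of them is an interface from the cited works.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict

State = Dict[str, Any]


class MetaLayer(ABC):
    """Hypothetical interface: one method per canonical meta-cognitive control."""

    @abstractmethod
    def self_awareness(self, state: State) -> float:
        """Estimated probability that the task is solvable from the current knowledge state."""

    @abstractmethod
    def context_awareness(self, state: State) -> State:
        """External and situational factors (task, environment, agent roles)."""

    @abstractmethod
    def select_strategy(self, confidence: float, context: State) -> str:
        """Reasoning strategy chosen from self- and context-assessment."""

    @abstractmethod
    def manage_goals(self, state: State) -> str:
        """Current goal, possibly revised."""

    @abstractmethod
    def evaluate(self, state: State) -> str:
        """Control signal such as 'continue' or 'stop'."""

    def regulate(self, state: State) -> State:
        # One pass of the meta-level loop over an object-level state snapshot.
        confidence = self.self_awareness(state)
        context = self.context_awareness(state)
        return {
            "goal": self.manage_goals(state),
            "strategy": self.select_strategy(confidence, context),
            "signal": self.evaluate(state),
        }


class ToyMeta(MetaLayer):
    """Toy rules standing in for learned or prompted meta-level components."""

    def self_awareness(self, state: State) -> float:
        return 0.9 if state.get("seen_before") else 0.3

    def context_awareness(self, state: State) -> State:
        return {"tools": state.get("tools", [])}

    def select_strategy(self, confidence: float, context: State) -> str:
        if confidence >= 0.5:
            return "direct"
        return "use_tool" if context["tools"] else "decompose"

    def manage_goals(self, state: State) -> str:
        return state.get("goal", "answer")

    def evaluate(self, state: State) -> str:
        return "stop" if state.get("answer") else "continue"


decision = ToyMeta().regulate({"seen_before": False, "tools": ["calculator"]})
```

With all the controls behind one interface, a learned competence predictor could replace `self_awareness` without any change to the object-level reasoner.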

2. Architectural Paradigms for Meta-Cognitive Controls

Recent machine learning systems realize meta-cognitive regulation in several distinct forms:

a. Decoupled Reasoning and Control (Dual-Stream LLM Architectures)

Meta-R1 epitomizes architectural separation: an object-level LLM (e.g., DeepSeek-R1-Distill-Qwen-14B/32B) handles causal inference and chain-of-thought, while a smaller meta-level LLM (e.g., Qwen2.5-1.5B) monitors, regulates, and intervenes via planning, error detection, and adaptive termination. Explicit meta-cognitive stages are:

- Proactive Metacognitive Planning: schema activation, difficulty prediction, strategy/budget allocation.

- Online Regulation: stepwise monitoring, error diagnosis, and latent advice injection contingent on content or progress signals.

- Satisficing Termination: adaptive early stopping upon budget exhaustion or meta-level detection of solution readiness.

This cascaded, inference-time (training-free) orchestration enhances both answer quality and token efficiency (Dong et al., 24 Aug 2025).
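The three stages can be sketched as a training-free control loop around two model calls. This minimal sketch assumes plain Python functions stand in for the object-level and meta-level LLMs:

- The meta level plans a strategy and a token budget up front.
- It checks the trace every `check_every` steps and may inject advice.
- It stops the run when the budget is exhausted or when it judges the solution ready.

The function signatures, the whitespace token count, and the check schedule are hypothetical. They do not reproduce Meta-R1's prompts or interfaces.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# Hypothetical stand-ins for model calls; in practice each would wrap an LLM.
PlanFn = Callable[[str], Tuple[str, int]]                     # problem -> (strategy, token budget)
StepFn = Callable[[str, List[str], Optional[str]], str]       # (problem, trace, advice) -> next step
CheckFn = Callable[[List[str]], Tuple[Optional[str], bool]]   # trace -> (advice, solution ready?)


@dataclass
class CascadeResult:
    trace: List[str] = field(default_factory=list)
    tokens: int = 0
    stopped_by: str = ""


def metacognitive_cascade(problem: str, plan: PlanFn, step: StepFn, check: CheckFn,
                          check_every: int = 2, max_steps: int = 100) -> CascadeResult:
    result = CascadeResult()
    # Stage 1, proactive planning: the meta level picks a strategy and a budget.
    strategy, budget = plan(problem)
    advice: Optional[str] = strategy
    for _ in range(max_steps):
        # Object-level step, conditioned on any advice injected by the meta level.
        text = step(problem, result.trace, advice)
        advice = None
        result.trace.append(text)
        result.tokens += len(text.split())  # crude whitespace token count (assumption)
        # Stage 3, satisficing termination: stop once the budget is exhausted.
        if result.tokens >= budget:
            result.stopped_by = "budget"
            return result
        # Stage 2, online regulation: periodic monitoring and advice injection.
        if len(result.trace) % check_every == 0:
            advice, ready = check(result.trace)
            if ready:
                result.stopped_by = "ready"
                return result
    result.stopped_by = "max_steps"
    return result


def toy_plan(problem: str) -> Tuple[str, int]:
    return "decompose", 50


def toy_step(problem: str, trace: List[str], advice: Optional[str]) -> str:
    return f"step {len(trace) + 1}" + (f" [{advice}]" if advice else "")


def toy_check(trace: List[str]) -> Tuple[Optional[str], bool]:
    return None, len(trace) >= 4   # toy readiness test: four steps suffice


out = metacognitive_cascade("2 + 2 = ?", toy_plan, toy_step, toy_check)
```

In this toy run the planned strategy is injected only into the first step. The meta level then declares the solution ready after four steps, well before the 50-token budget runs out.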

b. Structured Reasoning-Control Separation (MERA, Policy Optimization)

MERA goes further by structurally alternating <reason> and <control> segments. It explicitly trains the model to emit stepwise self-evaluation tags (continue, backtrack, terminate). It then optimizes the regulatory policy with reinforcement learning applied to control tokens only, using Control-Segment Policy Optimization, which combines control-masking with Group Relative Policy Optimization. The key benefits are strong compression of overthinking traces and statistically significant accuracy gains (Ha et al., 6 Aug 2025).
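The core of control-masking fits in a few lines:

1. Compute group-relative advantages as in GRPO, normalizing each rollout's reward by the mean and standard deviation of its sampling group.
2. Sum the policy-gradient surrogate only over tokens inside control segments.

This is a simplified sketch under stated assumptions. It omits GRPO's importance-ratio clipping and KL penalty, and it assumes a per-token boolean mask marking `<control>` tokens. It also computes only a scalar loss; real training would get gradients from an autodiff framework. The function names are hypothetical.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    # GRPO-style normalization of each rollout's reward within its group.
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def control_masked_pg_loss(logprobs: List[List[float]], is_control: List[List[bool]],
                           rewards: List[float]) -> float:
    """REINFORCE-style surrogate restricted to control tokens.

    logprobs[i][t]   : log-probability of token t in rollout i
    is_control[i][t] : True if token t lies inside a <control> segment
    """
    advantages = group_relative_advantages(rewards)
    total, count = 0.0, 0
    for lp_seq, mask_seq, adv in zip(logprobs, is_control, advantages):
        for lp, is_ctrl in zip(lp_seq, mask_seq):
            if is_ctrl:               # reasoning tokens receive no update signal
                total += -adv * lp
                count += 1
    return total / max(count, 1)


rewards = [1.0, 0.0]                          # e.g. correct vs. incorrect final answer
logprobs = [[-1.0, -2.0], [-0.5, -3.0]]
is_control = [[False, True], [False, True]]   # second token of each rollout is a control tag
loss = control_masked_pg_loss(logprobs, is_control, rewards)
```

Changing the log-probabilities of reasoning tokens leaves this loss unchanged. That property confines the update to the control decisions.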

c. Meta-cognition via Rule-Based Controllers or Self-assessment Probes

Hybrid-AI approaches (EDCR) build symbolic or probabilistic meta-cognitive controllers atop neural perceptual backbones. Meta-cognitive error-detecting/correcting rules are induced without accessing model weights, flagging likely errors or relabeling outputs, governed by rigorous probabilistic precision/recall tradeoffs (Shakarian et al., 8 Feb 2025).
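The sketch below applies one error-detecting rule of the kind described above. It assumes binary labels for a single class and a hypothetical boolean condition that the rule keys on, for instance a disagreement signal from another model. The sketch retracts flagged positive predictions and compares precision and recall before and after. It applies a given rule and does not show EDCR's rule-induction step or its theory.

```python
from typing import List, Sequence, Tuple


def precision_recall(pred: Sequence[bool], truth: Sequence[bool]) -> Tuple[float, float]:
    tp = sum(p and t for p, t in zip(pred, truth))
    fp = sum(p and not t for p, t in zip(pred, truth))
    fn = sum(t and not p for p, t in zip(pred, truth))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def apply_detection_rule(pred: Sequence[bool], condition: Sequence[bool]) -> List[bool]:
    # Hypothetical rule: "predicted positive AND condition holds => likely error".
    # Only the outputs are relabeled; the underlying model's weights are never touched.
    return [p and not c for p, c in zip(pred, condition)]


truth = [True, True, True, False, False, False]
pred = [True, True, True, True, True, False]          # 3 true positives, 2 false positives
condition = [False, False, True, True, True, False]   # fires on 1 TP and both FPs

before = precision_recall(pred, truth)
after = precision_recall(apply_detection_rule(pred, condition), truth)
```

Here the rule raises precision from 0.6 to 1.0 and lowers recall from 1.0 to about 0.67. This precision-for-recall exchange is the tradeoff the probabilistic analysis is meant to characterize.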

Other methods derive meta-cognitive signals directly in representation space. They use contrastive activations to train a probe (e.g., a PCA direction) that predicts the model's internal self-assessment. The probe then triggers downstream modules, such as tool use in MeCo, only when the model detects its own limitations (Li et al., 18 Feb 2025).
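MeCo's exact probe-training procedure is not reproduced here. The sketch below works under stated assumptions, given paired hidden states from prompts where the model should be confident versus uncertain:

1. It takes a PCA-style direction of the paired differences. Here that is the top singular vector of the uncentered difference matrix, found by power iteration; standard PCA would center the differences first.
2. It orients the direction so that confident states score higher.
3. It calls a tool only when a new hidden state's projection falls below a threshold.

The two-dimensional vectors, the threshold value, and all function names are hypothetical.

```python
import math
import random
from typing import List

Vec = List[float]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def first_principal_direction(rows: List[Vec], iters: int = 200, seed: int = 0) -> Vec:
    # Power iteration on X^T X (uncentered), i.e. the top right singular vector of X.
    rng = random.Random(seed)
    v = [rng.gauss(0.0, 1.0) for _ in rows[0]]
    for _ in range(iters):
        proj = [dot(r, v) for r in rows]                       # X v
        w = [sum(p * r[j] for p, r in zip(proj, rows))         # X^T (X v)
             for j in range(len(v))]
        norm = math.sqrt(dot(w, w)) or 1.0
        v = [x / norm for x in w]
    return v


def fit_probe(confident: List[Vec], uncertain: List[Vec]) -> Vec:
    # Contrastive pairs: activation differences isolate the self-assessment signal.
    diffs = [[c - u for c, u in zip(cv, uv)] for cv, uv in zip(confident, uncertain)]
    d = first_principal_direction(diffs)
    # Orient the direction so confident states project higher than uncertain ones.
    if dot(d, [sum(col) for col in zip(*diffs)]) < 0:
        d = [-x for x in d]
    return d


def should_call_tool(hidden: Vec, direction: Vec, threshold: float) -> bool:
    # Gate: invoke the tool only when projected self-confidence is low.
    return dot(hidden, direction) < threshold


confident = [[2.0, 0.1], [3.0, -0.1], [2.5, 0.0]]
uncertain = [[0.0, 0.0], [0.5, 0.1], [0.2, -0.1]]
direction = fit_probe(confident, uncertain)
```

Because the probe is a single linear direction, the gate costs one dot product per query. This is also why, as noted below, it can be model- or distribution-specific.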

d. Dual-Memory and Meta-Control in RL

Multi-agent and quantum reinforcement learning frameworks (Q-ARDNS-Multi) realize meta-cognitive control through dual memory (short/long-term), meta-controllers that dynamically modulate learning rates, exploration, and curiosity based on running statistics (reward mean/variance, surprise), and explicit update rules for quantum policy parameters (Sousa, 2 Jun 2025). Actor–Critic agents can also employ the discrepancy between action-value and state-value as a pure internal metacognitive error signal, triggering confidence reporting and potential further search without needing external feedback (Schaeffer, 2021).
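Q-ARDNS-Multi's actual update rules involve meta-networks and quantum policy parameters, and they are not reproduced here. The classical sketch below only illustrates the general pattern described above:

- Recency-weighted reward mean and variance yield a surprise score.
- Hypothetical linear rules with clipping map that score to exploration, curiosity, and learning-rate settings.

The field names, constants, and mapping rules are all assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class MetaController:
    """Hypothetical classical meta-controller driven by running reward statistics."""
    decay: float = 0.9            # recency weight of the running statistics
    base_lr: float = 1e-3
    eps_min: float = 0.05         # exploration-rate bounds used for clipping
    eps_max: float = 0.5
    curiosity_scale: float = 0.1
    curiosity_max: float = 1.0
    mean: float = 0.0             # recency-weighted reward mean
    var: float = 1.0              # recency-weighted reward variance

    def update(self, reward: float) -> dict:
        # Surprise: deviation of the new reward from recent experience, in std units.
        surprise = abs(reward - self.mean) / math.sqrt(self.var + 1e-8)
        # Exponentially weighted mean and variance updates.
        delta = reward - self.mean
        self.mean += (1 - self.decay) * delta
        self.var = self.decay * self.var + (1 - self.decay) * delta * delta
        # Map surprise to hyperparameters with hypothetical linear rules, then clip.
        return {
            "surprise": surprise,
            "epsilon": min(self.eps_max, max(self.eps_min, self.eps_min * (1 + surprise))),
            "curiosity": min(self.curiosity_max, self.curiosity_scale * surprise),
            "lr": min(10 * self.base_lr, self.base_lr * (1 + surprise)),
        }


mc = MetaController()
calm = mc.update(0.0)    # reward matches expectations: minimal exploration
shock = mc.update(5.0)   # large deviation: more exploration and curiosity
```

Clipping keeps a single outlier reward from pushing exploration or the learning rate outside a fixed range. This loosely mirrors the stabilizing role attributed to the meta-controller.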

e. Competence-Aware and Competence-Regulative Loops

Self-awareness is formalized as a learned predictor (e.g., multinomial or neural head) estimating probability of task success; if competence is low, meta-regulation triggers strategy search or multiple rollout evaluation, selecting actions to maximize predicted competence. The MUSE framework demonstrates that coupling such signals with iterative policy selection drastically improves adaptation to out-of-distribution tasks (Valiente et al., 2024).
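A minimal sketch of a competence-regulative loop is shown below. It assumes a hypothetical per-strategy logistic head with hand-set weights. MUSE learns its predictor and may evaluate candidates with rollouts; here, candidates are scored directly by predicted competence. Meta-regulation is triggered only when the default strategy's predicted success falls below a threshold.

```python
import math
from typing import Dict, List, Sequence, Tuple

Weights = Dict[str, List[float]]


def competence(weights: Weights, strategy: str, features: Sequence[float]) -> float:
    # Hypothetical logistic head: estimated probability that `strategy` succeeds.
    z = sum(w * f for w, f in zip(weights[strategy], features))
    return 1.0 / (1.0 + math.exp(-z))


def select_strategy(weights: Weights, features: Sequence[float], default: str,
                    threshold: float = 0.5) -> Tuple[str, float]:
    p_default = competence(weights, default, features)
    if p_default >= threshold:
        # Self-assessed as competent: keep the default strategy, no extra search.
        return default, p_default
    # Low predicted competence: search the candidates and keep the most promising.
    scores = {s: competence(weights, s, features) for s in weights}
    best = max(scores, key=scores.get)
    return best, scores[best]


weights = {"greedy": [2.0, -3.0], "search": [0.5, 1.0]}
familiar = select_strategy(weights, [1.0, 0.0], default="greedy")  # greedy predicted to work
novel = select_strategy(weights, [1.0, 1.0], default="greedy")     # greedy predicted to fail
```

The extra search runs only on inputs where the predictor expects failure. This is one way to limit the per-step cost of competence-aware regulation.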

3. Mechanisms and Mathematical Formalisms

Meta-cognitive control mechanisms are mathematically formulated as:

- Cascaded calls between object- and meta-level modules: meta-level calls for planning and schema activation, and for error correction (Dong et al., 24 Aug 2025).

- Meta-controller updates: recency-weighted averages, variances, and novelty statistics inform updates to the learning-rate, curiosity, and exploration parameters via meta-networks and clipping (Sousa, 2 Jun 2025).

- Policy gradients and advantage computation are masked to control tokens only (MERA CSPO objective), focusing reward and update only where genuine meta-cognitive decisions occur (Ha et al., 6 Aug 2025).

- Game-theoretic credit assignment via (approximate) Shapley values over meta-knowledge units, with activations weighted for their marginal utility in knowledge editing (Fan et al., 6 Sep 2025).

- Probabilistic post-gating update equations for precision and recall of meta-cognitive gating or relabeling rules, providing theorems on necessary and sufficient conditions for net behavioral improvement (Shakarian et al., 8 Feb 2025).

- Graph-based test-time scaffolding creates explicit step-by-step meta-cognitive templates that can be dynamically injected into prompts, yielding substantial performance improvements, especially on ill-structured problems (Kargupta et al., 20 Nov 2025).
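Exact Shapley values require evaluating every subset of units, so they are usually approximated. One common approximation is permutation sampling, which averages each unit's marginal gain over random orderings; the cited work's estimator may differ. In knowledge editing, the utility could be an editing-quality score measured with only a subset of meta-knowledge units active. The utility function, unit names, and sample count below are hypothetical.

```python
import random
from typing import Callable, Dict, FrozenSet, List


def shapley_monte_carlo(units: List[str], utility: Callable[[FrozenSet[str]], float],
                        samples: int = 2000, seed: int = 0) -> Dict[str, float]:
    """Permutation-sampling estimate of each unit's Shapley value."""
    rng = random.Random(seed)
    totals = {u: 0.0 for u in units}
    for _ in range(samples):
        order = units[:]
        rng.shuffle(order)
        coalition: FrozenSet[str] = frozenset()
        prev = utility(coalition)
        for u in order:
            coalition = coalition | {u}
            value = utility(coalition)
            totals[u] += value - prev   # marginal contribution of u in this ordering
            prev = value
    return {u: t / samples for u, t in totals.items()}


# Toy utility: units "a" and "b" only help together; "c" is irrelevant.
def synergy(coalition: FrozenSet[str]) -> float:
    return 1.0 if {"a", "b"} <= coalition else 0.0


phi = shapley_monte_carlo(["a", "b", "c"], synergy)
```

The toy utility pays off only when both "a" and "b" are present. The estimate therefore splits the credit roughly evenly between them and gives none to "c". The estimates always sum to the utility of the full set.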

4. Applications Across Modalities and Problems

Large Language and Reasoning Models

- On GSM8K, AIME2024, and MATH500 benchmarks, Meta-R1 surpasses state-of-the-art by up to 27.3%, reduces token consumption by up to 32.7%, and improves root-scaled efficiency by up to 14.8% (Dong et al., 24 Aug 2025).

- Internalized meta-cognitive control (MERA) yields systematic compression of reasoning traces and accuracy gains (e.g., 76% accuracy with 4,680 tokens, outperforming both the original LRM and the best baselines) (Ha et al., 6 Aug 2025).

- Test-time meta-cognitive scaffolding boosts LLM accuracy on ill-structured reasoning tasks by 21.9–60.0% (Kargupta et al., 20 Nov 2025).

Multimodal and Knowledge Editing Systems

- The MIND framework for multimodal LLMs (MLLMs) integrates self-awareness, boundary monitoring, and reflective label refinement, achieving high scores on fidelity, adaptability, compliance, and clarity under the CogEdit benchmark, outperforming baselines on all meta-cognitive metrics (Fan et al., 6 Sep 2025).

Multi-Agent RL, Robotics, and Planning

- Q-ARDNS-Multi’s meta-cognitive adaptation stabilizes learning, improves efficiency (210 vs. 912 for SAC to reach the goal), and increases success rate (99.6% vs. 49.8%). It does so by tightly coupling reward statistics, the meta-controller, and quantum circuit dynamics (Sousa, 2 Jun 2025).

- Structured meta-cognitive control enables effective self-regulated learning in creative problem-solving robotics, partitioning phases for monitoring, strategy transition, and feedback loops to optimize exploration/exploitation tradeoff (Romero et al., 7 Aug 2025).

Knowledge Editing, Tool Use, and Self-Assessment

- Fine-tuning-free meta-cognition triggers (MeCo) detect self-assessment signals and drive adaptive tool invocation, yielding sharp reductions in false calls and non-calls, and improving raw accuracy on tool-use benchmarks by 4–12 percentage points (Li et al., 18 Feb 2025).

- EDCR-based probabilistic meta-controllers analytically characterize conditions for metacognitive gating to guarantee precision gain at explicit recall cost, underpinning robust rule learning across neural, symbolic, and hybrid-AI contexts (Shakarian et al., 8 Feb 2025).

5. Empirical Quantification and Transferability

Across the spectrum, meta-cognitive controls yield increases in accuracy, efficiency, task success, and adaptivity, accompanied by reductions in output length, error rate, or computation:

- Meta-R1: accuracy gain of +0.9–3.6% (up to 27.3% vs. SOTA), token savings of 24.2–32.7%, robust across model sizes and datasets (Dong et al., 24 Aug 2025).

- MIND: Adaptability +26 points, Boundary Compliance +12 points, Clarity@4 +4 points over best baselines (Fan et al., 6 Sep 2025).

- MedAlign: 11.85% F1 gain and 51.60% reduction in reasoning length compared to fixed-depth reasoning (Chen et al., 24 Oct 2025).

- MUSE: Self-awareness AUROC 0.95 (vs 0.63), success rate gain from 0–35% to 70–90% across RL and LLM-based tasks (Valiente et al., 2024).

- Heuristic or Rule-based Meta-cognition: MeCo improves LLM precision in tool use, EDCR-based meta-cognitive gating provably bounds tradeoffs in hybrid-AI settings (Li et al., 18 Feb 2025, Shakarian et al., 8 Feb 2025).

The reported gains are empirical and are measured against the baselines chosen in each study. They are often reported across multiple domains, with ablation studies isolating the meta-cognitive components.

6. Open Challenges and Future Directions

Current limitations and research challenges include:

- Transferability of meta-cognitive probes: Gating thresholds or linear directions are often model/distribution-specific; robust cross-domain generalization remains open (Li et al., 18 Feb 2025).

- Rule and Signal Consistency: Combining multiple, independently learned meta-cognitive rules requires conflict-resolution or global prioritization mechanisms (Shakarian et al., 8 Feb 2025).

- Computational and Sample Efficiency: Some meta-cognitive routines, especially with multiple look-ahead rollouts or large control networks, can increase per-step computation; more efficient batching, selective application, or horizon scaling is under investigation (Valiente et al., 2024).

- Scaling and Coordination: For multi-agent or federated learning, synchronizing meta-cognitive signaling across sites and reconciling local vs. global competence estimates is nontrivial (Chen et al., 24 Oct 2025).

- Bridging Human-Machine Behavioral Gaps: Structural analyses continue to reveal that LLMs, though capable of meta-cognitive behaviors, rarely deploy them spontaneously; design of scaffolding methods to activate latent controls is an active area (Kargupta et al., 20 Nov 2025).

A plausible implication is that principled scaffolding, explicit representation of meta-level goals, and continuous introspection loops are critical for deploying high-reliability, adaptive AI systems across both closed- and open-world tasks.

Table: Representative Meta-Cognitive Control Frameworks

| Framework | Domain/Modality | Meta-cognitive Mechanisms |
|---|---|---|
| Meta-R1 (Dong et al., 24 Aug 2025) | LLM Reasoning | Proactive planning, real-time regulation, satisficing termination |
| MIND (Fan et al., 6 Sep 2025) | Multimodal LLMs | Meta-memory self-awareness, Shapley boundary monitoring, reflective editing |
| MERA (Ha et al., 6 Aug 2025) | LLM Reasoning | Alternating reason/control, segment-wise RL, control-masking |
| Q-ARDNS-Multi (Sousa, 2 Jun 2025) | Multi-agent RL | Dual-memory gating, meta-controller, adaptive exploration, quantum modulation |
| MUSE (Valiente et al., 2024) | World-model RL, LLMs | Competence prediction, competence-aware rollouts, self-regulation |
| MeCo (Li et al., 18 Feb 2025) | LLM Tool Use | Linear-probe meta-cognitive gating in hidden space |

References

- (Dong et al., 24 Aug 2025)

- (Kargupta et al., 20 Nov 2025)

- (Ha et al., 6 Aug 2025)

- (Fan et al., 6 Sep 2025)

- (Chen et al., 24 Oct 2025)

- (Valiente et al., 2024)

- (Shakarian et al., 8 Feb 2025)

- (Sousa, 2 Jun 2025)

- (Li et al., 18 Feb 2025)

- (Schaeffer, 2021)

- (Cox et al., 2022)

In summary, meta-cognitive controls offer a unifying paradigm for self-regulation, adaptivity, and task generalization in artificial intelligence. In the studies surveyed here, architectures that expose explicit meta-level monitoring and regulation outperform purely object-level or “emergent” cognitive systems. The largest benefits are reported in ill-structured, novel, or multi-modal environments. Quantitative metrics, ablation findings, and mathematical theorems now support this intuition and offer blueprints for principled implementations across the AI landscape.