Layer-wise Probing Study

- Layer-wise probing is a method that uses lightweight classifiers to extract and quantify information from each neural network layer.

- It reveals how features progressively evolve from low-level to high-level abstraction, identifying key depth-specific patterns.

- This approach enables effective model diagnostics, architectural comparisons, and informed interventions for improved interpretability.

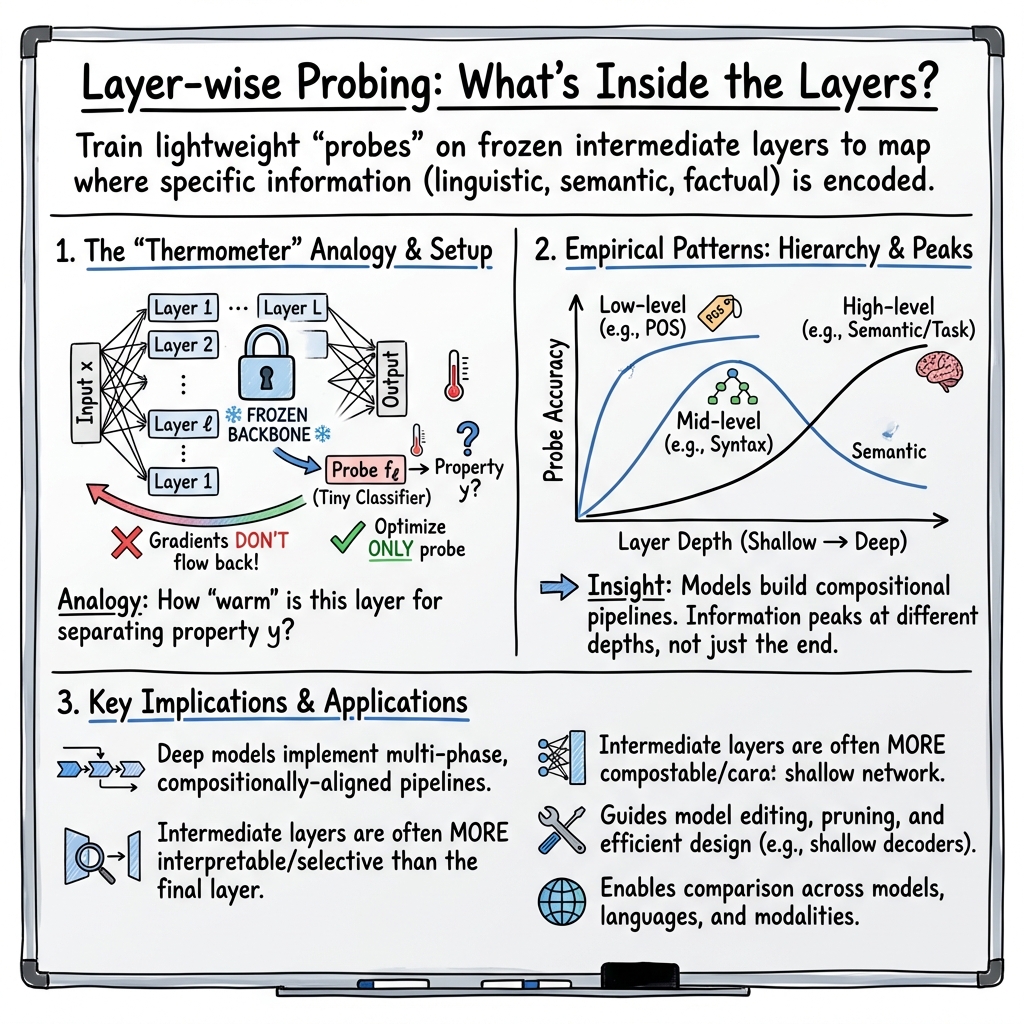

Layer-wise probing study refers to the systematic evaluation of neural network representations at each intermediate layer of a model by training lightweight classifiers or regressors—known as “probes”—to predict target properties (labels, features, or continuous variables) directly from these internal activations. By holding the backbone model parameters fixed and optimizing only the probe, researchers can quantitatively map where and how different types of information—linguistic, semantic, prosodic, factual, world-model, or task-specific—are encoded and transformed throughout the network. This paradigm has become foundational in the interpretability of deep models in language, vision, and speech, enabling fine-grained functional analysis of representational hierarchies, architecture comparisons, layer-wise specialization, and training dynamics.
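To make the setup concrete, the following Python sketch runs a toy frozen network, collects the hidden state of every layer, and fits one linear probe per layer. Everything here is an illustrative assumption. The random tanh MLP stands in for a pretrained backbone, and the helper names (`frozen_mlp_activations`, `fit_linear_probe`, `layerwise_probe`) are hypothetical. The probe is a closed-form ridge regression onto one-hot labels, chosen only because it needs no optimizer. The backbone weights are read but never updated.

```python
import numpy as np


def frozen_mlp_activations(x, weights):
    """Forward pass through a fixed tanh MLP, returning every layer's hidden state."""
    states = []
    h = x
    for w in weights:
        h = np.tanh(h @ w)  # weights are only read, never updated
        states.append(h)
    return states


def fit_linear_probe(h, y, n_classes, l2=1e-2):
    """Closed-form ridge regression onto one-hot labels (bias column penalized too, for simplicity)."""
    hb = np.hstack([h, np.ones((h.shape[0], 1))])
    targets = np.eye(n_classes)[y]
    gram = hb.T @ hb + l2 * np.eye(hb.shape[1])
    return np.linalg.solve(gram, hb.T @ targets)


def probe_accuracy(w, h, y):
    """Fraction of examples whose highest-scoring class matches the label."""
    hb = np.hstack([h, np.ones((h.shape[0], 1))])
    return float(np.mean(np.argmax(hb @ w, axis=1) == y))


def layerwise_probe(x_tr, y_tr, x_te, y_te, weights, n_classes):
    """Fit one probe per layer on the training split and score it on the test split."""
    train_states = frozen_mlp_activations(x_tr, weights)
    test_states = frozen_mlp_activations(x_te, weights)
    scores = []
    for h_tr, h_te in zip(train_states, test_states):
        w = fit_linear_probe(h_tr, y_tr, n_classes)
        scores.append(probe_accuracy(w, h_te, y_te))
    return scores


# Usage: a 4-layer random network probed for the sign of the first input feature.
rng = np.random.default_rng(0)
x = rng.normal(size=(600, 2))
y = (x[:, 0] > 0).astype(int)
weights = [rng.normal(scale=1 / np.sqrt(2), size=(2, 32))]
weights += [rng.normal(scale=1 / np.sqrt(32), size=(32, 32)) for _ in range(3)]
scores = layerwise_probe(x[:400], y[:400], x[400:], y[400:], weights, n_classes=2)
print([round(s, 3) for s in scores])
```

With a real model, the per-layer activations would come from the framework's own hidden-state outputs rather than `frozen_mlp_activations`; the list `scores` is the per-layer profile that later sections interpret.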

1. Definitions, Objectives, and Rationale

Layer-wise probing was formalized as an empirical methodology for mapping what information is linearly extractable from each layer of a deep model via post-hoc analysis. Classic works introduced the term “probe” for a simple classifier or regressor trained on the frozen representation $h_\ell$ output by layer $\ell$, with the key property that gradients do not flow into or alter the original model parameters (Alain et al., 2016). The typical scientific objectives are:

- Information Localization: Identify the depth(s) at which specific properties (e.g., syntax, semantic roles, task concepts) become accessible by a simple decision boundary.

- Hierarchical Organization: Chart the progression from low-level to high-level abstraction across layers, testing theoretical claims of hierarchical structure-building.

- Comparative Analysis: Measure and align the probing profiles across different pretraining objectives, architectures, languages, or fine-tuned variants to explain empirical performance differences (e.g., BERT vs. ELECTRA vs. XLNet (Fayyaz et al., 2021)).

- Diagnostic and Model Editing: Pinpoint “dead” layers, bottlenecks, or the optimal intervention depth for injection or manipulation of information (e.g., knowledge editing, style control).

- Task-dependence and Transferability: Determine which layer(s) should be tapped or frozen for a specific downstream application, maximizing performance and efficiency.

The underlying rationale is the “thermometer” analogy: like a thermometer inserted at a given depth, each probe takes a layer-resolved, task-specific reading (how linearly separable the quantities of interest are at that point) without perturbing the system it measures.

2. Methodological Frameworks and Probe Formulations

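The fundamental mathematical setup involves, for a network with $L$ layers, extracting the input–output pairs $\{(h_\ell(x_i), y_i)\}_{i=1}^{N}$ for each layer $\ell \in \{1, \dots, L\}$, where $h_\ell(x_i) \in \mathbb{R}^{d_\ell}$ is the hidden state of input $x_i$ at layer $\ell$ and $y_i$ the label. The primary probe types are: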

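Classification Probe:

$$p_\ell(y \mid h_\ell) = \operatorname{softmax}(W_\ell h_\ell + b_\ell)_y,$$

trained with cross-entropy loss,

$$\mathcal{L}_{\mathrm{CE}} = -\frac{1}{N}\sum_{i=1}^{N} \log p_\ell\big(y_i \mid h_\ell(x_i)\big)$$

(Alain et al., 2016, Fuente et al., 2024)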
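A minimal NumPy sketch of the classification probe above, assuming the activations for one layer have already been extracted into an array `h` of shape (N, d). Plain full-batch gradient descent on the mean cross-entropy is used for transparency. The learning rate, epoch count, and small L2 term are illustrative choices rather than values from the cited studies, and the function names are hypothetical.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def train_softmax_probe(h, y, n_classes, lr=0.5, epochs=300, l2=1e-3):
    """Multinomial logistic-regression probe on frozen features h of shape (N, d).

    Only W and b are updated; h is treated as fixed input data.
    """
    n, d = h.shape
    W = np.zeros((d, n_classes))
    b = np.zeros(n_classes)
    onehot = np.eye(n_classes)[y]
    for _ in range(epochs):
        p = softmax(h @ W + b)
        grad_logits = (p - onehot) / n  # gradient of the mean cross-entropy w.r.t. logits
        W -= lr * (h.T @ grad_logits + l2 * W)  # l2 * W is the gradient of (l2/2)||W||^2
        b -= lr * grad_logits.sum(axis=0)
    return W, b


def cross_entropy(W, b, h, y):
    """Mean negative log-probability assigned to the true class."""
    p = softmax(h @ W + b)
    return float(-np.mean(np.log(p[np.arange(len(y)), y] + 1e-12)))


# Usage on synthetic "activations": three Gaussian clusters in 5 dimensions.
rng = np.random.default_rng(1)
y = rng.integers(0, 3, size=300)
h = 3.0 * np.eye(3, 5)[y] + rng.normal(size=(300, 5))
W, b = train_softmax_probe(h, y, n_classes=3)
print(cross_entropy(W, b, h, y))
```

In practice the loss would be reported on held-out examples, since a probe evaluated on its own training data overstates how much information the layer exposes.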

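Regression Probe:

$$\hat{y} = w_\ell^{\top} h_\ell + b_\ell, \qquad \mathcal{L}_{\mathrm{MSE}} = \frac{1}{N}\sum_{i=1}^{N} \big(y_i - w_\ell^{\top} h_\ell(x_i) - b_\ell\big)^2$$

For regularization, L1 or L2 penalties on the probe weights ($\lambda \lVert \theta_\ell \rVert_1$, $\lambda \lVert \theta_\ell \rVert_2^2$, where $\theta_\ell$ denotes $W_\ell$ or $w_\ell$) are frequently added to the loss.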
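The regression probe with an L2 penalty has a closed-form solution, sketched below under the assumption that the bias is left unpenalized (handled by centering the data). `fit_ridge_probe` and `r_squared` are hypothetical helper names; held-out $R^2$ is the usual score for such probes.

```python
import numpy as np


def fit_ridge_probe(h, y, l2=1.0):
    """Minimize ||h w + b - y||^2 + l2 * ||w||^2 in closed form (bias not penalized)."""
    h_mean, y_mean = h.mean(axis=0), y.mean()
    hc, yc = h - h_mean, y - y_mean  # centering lets the bias be recovered afterwards
    w = np.linalg.solve(hc.T @ hc + l2 * np.eye(h.shape[1]), hc.T @ yc)
    b = y_mean - h_mean @ w
    return w, b


def r_squared(w, b, h, y):
    """Coefficient of determination of the probe's predictions."""
    resid = y - (h @ w + b)
    return float(1.0 - np.sum(resid ** 2) / np.sum((y - y.mean()) ** 2))


# Usage: a continuous target that is (noisily) linear in the activations.
rng = np.random.default_rng(2)
h = rng.normal(size=(400, 8))
y = h @ rng.normal(size=8) + 0.5 + 0.1 * rng.normal(size=400)
w, b = fit_ridge_probe(h[:300], y[:300])
print(r_squared(w, b, h[300:], y[300:]))
```

Increasing `l2` shrinks the weights toward zero, which trades probe expressiveness for stability when the layer width approaches the number of labeled examples.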

Training Protocols:

Probes are shallow (typically linear), trained with fixed hyperparameters and early stopping, and evaluated with accuracy, macro F1, $R^2$, or information-theoretic metrics (e.g., Minimum Description Length (Fayyaz et al., 2021), V-information (Ju et al., 2024, Ju et al., 14 Apr 2025)).
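MDL is often computed with an online (prequential) code: the first block of labels is transmitted with a uniform code, and each later block is encoded with a probe trained on all preceding examples. The sketch below assumes a small softmax probe, randomly ordered examples, and a fixed block schedule (`fractions`). These choices and the function names are illustrative assumptions, not the protocol of the cited works. A lower codelength, or equivalently a higher compression ratio, indicates that the labels are easier to extract from the layer.

```python
import numpy as np


def _softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def _with_bias(h):
    return np.hstack([h, np.ones((len(h), 1))])


def _train_probe(h, y, k, lr=0.5, epochs=200):
    """Small softmax probe trained by full-batch gradient descent (illustrative settings)."""
    hb = _with_bias(h)
    W = np.zeros((hb.shape[1], k))
    onehot = np.eye(k)[y]
    for _ in range(epochs):
        W -= lr * (hb.T @ (_softmax(hb @ W) - onehot)) / len(h)
    return W


def online_codelength(h, y, k, fractions=(0.1, 0.2, 0.4, 0.8, 1.0)):
    """Prequential codelength in bits; each block is coded by a probe fit on all earlier data."""
    n = len(y)
    cuts = [max(1, int(f * n)) for f in fractions]
    bits = cuts[0] * np.log2(k)  # first block: uniform code over k classes
    for start, end in zip(cuts[:-1], cuts[1:]):
        W = _train_probe(h[:start], y[:start], k)
        p = _softmax(_with_bias(h[start:end]) @ W)
        p_true = p[np.arange(end - start), y[start:end]]
        bits += float(-np.sum(np.log2(p_true + 1e-12)))
    return bits


def compression(h, y, k):
    """Uniform codelength divided by online codelength (higher = more extractable)."""
    return len(y) * np.log2(k) / online_codelength(h, y, k)


# Usage: labels that are encoded in the features versus the same labels shuffled.
rng = np.random.default_rng(3)
y = rng.integers(0, 3, size=500)
h = 3.0 * np.eye(3, 5)[y] + rng.normal(size=(500, 5))
print(compression(h, y, 3), compression(h, rng.permutation(y), 3))
```

Comparing real against shuffled labels is one way to check that a low codelength reflects structure in the representation rather than probe memorization.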

For sequence or span tasks, token-level features are aggregated (e.g., by mean or max pooling, or by attention-based pooling), and for multimodal or spatial models, feature selection may involve attentive pooling (Psomas et al., 11 Jun 2025).
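The aggregation step can be sketched as a single function over a (T, d) matrix of token states and a boolean span mask. In attentive probing the `query` vector would be a trainable probe parameter; here it is simply passed in, and the function name is hypothetical.

```python
import numpy as np


def pool_span(states, mask, mode="mean", query=None):
    """Reduce token states of shape (T, d) to one d-vector over the tokens where mask is True."""
    span = states[mask]
    if mode == "mean":
        return span.mean(axis=0)
    if mode == "max":
        return span.max(axis=0)
    if mode == "attention":
        scores = span @ query  # one relevance score per token
        weights = np.exp(scores - scores.max())
        weights /= weights.sum()  # softmax over the span's tokens
        return weights @ span
    raise ValueError(f"unknown pooling mode: {mode}")


# Usage: a three-token span inside a four-token sequence.
states = np.array([[1.0, 0.0], [3.0, 2.0], [5.0, -2.0], [100.0, 100.0]])
mask = np.array([True, True, True, False])
print(pool_span(states, mask, "mean"), pool_span(states, mask, "max"))
```

With a zero query, attention pooling reduces to mean pooling; learning the query lets the probe weight the tokens most informative for the property being probed.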

3. Empirical Patterns: Hierarchy, Peaks, Specialization

Layer-wise probing reliably uncovers distinctive depth-localized profiles reflecting the build-up, transformation, and specialization of model representations:

- Monotonic or Peaked Trajectories: In image models (ResNet, ViT), linear separability improves monotonically from shallow to deep layers (Alain et al., 2016, Psomas et al., 11 Jun 2025). In NLP and speech models, a non-monotonic “mid-layer peak” frequently appears—e.g., suprasegmental categories in wav2vec 2.0/HuBERT/WavLM peak at layers 7–9 (of 12) (Fuente et al., 2024), reinforced by findings in BERT for argument roles, with maximal probe accuracy at layers 3–6 (Kuznetsov et al., 2020).

- Task-specific Depth Allocation: NLP models encode word identity and POS earliest, followed by syntax (argument labels, dependency edges), then semantic or task-specific information (relations, QA facts, knowledge) (Aken et al., 2019, Chen et al., 2023, Someya et al., 27 Jun 2025). In addition, signals for high-resource languages concentrate in the deep layers of multilingual LLMs (Li et al., 2024).

- Objective-Driven Layer Dynamics: Pretraining (e.g., replaced token detection vs. language modeling) alters where information is stored: ELECTRA pushes task signal deeper, XLNet’s permuted LM leads to earlier peaks (Fayyaz et al., 2021). Fine-tuning can shift information earlier or repurpose upper layers; instruction-tuned LLMs produce crisper, more linearly separable clusters (Ju et al., 14 Apr 2025).

- Emergence of Computation-like Stages: In arithmetic and reasoning tasks (multi-digit addition, LLaMA probing), layers exhibit discrete “movement” stages—structure encoding, core computation (carry, partial sum), abstraction, and output formatting—mirroring human algorithmic steps (Yan, 9 Jun 2025, Chen et al., 2023, Gupta et al., 21 Oct 2025).
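Reading these trajectories off a probe sweep amounts to locating the best layer and checking whether accuracy keeps rising to the top or falls after an intermediate peak. The sketch below assumes one held-out score per layer (index 0 is the first layer) and uses an arbitrary tolerance `tol` to ignore small fluctuations. The shape labels it returns are illustrative, not a standard taxonomy.

```python
import numpy as np


def describe_profile(scores, tol=0.01):
    """Summarize per-layer probe scores (index 0 = first layer); tol is an arbitrary threshold."""
    scores = np.asarray(scores, dtype=float)
    peak = int(np.argmax(scores))
    monotonic = bool(np.all(np.diff(scores) >= -tol))  # never drops by more than tol
    drop_after_peak = scores[peak] - scores[-1]
    if monotonic:
        shape = "monotonic"
    elif 0 < peak < len(scores) - 1 and drop_after_peak > tol:
        shape = "mid-layer peak"
    else:
        shape = "non-monotonic"
    return {"peak_layer": peak + 1, "relative_depth": (peak + 1) / len(scores), "shape": shape}


# Usage: a 12-layer profile that peaks at layer 8.
profile = [0.30, 0.40, 0.50, 0.60, 0.70, 0.75, 0.80, 0.85, 0.80, 0.70, 0.60, 0.50]
print(describe_profile(profile))
```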

4. Representative Applications Across Modalities

Layer-wise probing has broad applications:

Speech and SSL Models:

Systematic probing of framewise or word-level representations in wav2vec 2.0, HuBERT, and WavLM reveals that abstract suprasegmental and syntactic features arise in contextual transformer layers, whereas raw convolutional outputs encode only local acoustics (Fuente et al., 2024, He et al., 19 Sep 2025, Saliba et al., 2024).

Language Models:

In BERT, probing recovers a pipeline: early layers for NER/POS, middle layers for syntax, and upper layers for semantic facts or NLI, with fine-tuning specializing the upper layers for the end task (Aken et al., 2019, Kuznetsov et al., 2020). In LLaMA, vertical analysis via multiple-choice tasks reveals that arithmetic, factual recall, and reasoning emerge at distinct depths, with the emergence points scaling roughly in proportion to overall model depth (Chen et al., 2023).

Vision and Multimodal Models:

For ViT and masked image models, attentive probing (e.g., efficient probing) outperforms linear classifiers, extracting semantic content stably from intermediate layers and enabling interpretable spatial attention (Psomas et al., 11 Jun 2025). In multimodal LLMs, probing discovers a universal four-stage solution pipeline: visual grounding, lexical integration, reasoning, and answer formatting (Yu et al., 27 Aug 2025).

World-models and Games:

In specialized settings (e.g., OthelloGPT), probes and sparse autoencoders quantify the compositional progression from board geometry (shallow) to dynamic, relational planning (middle), and task output (deep) (Du et al., 13 Jan 2025).

5. Cross-model, Cross-lingual, and Cross-task Insights

Layer-wise probing provides a platform for model comparison:

- Architectural Effects: Pretraining objectives and backbone design affect depth of information localization: ELECTRA stores information in upper layers, XLNet in earlier ones, as revealed via MDL probes (Fayyaz et al., 2021).

- Language and Resource Effects: Cross-lingual probing shows high-resource languages benefit from deep-layer specialization, with flat or weak signal in low-resource settings, motivating adapter-based depth intervention and data augmentation (Li et al., 2024).

- Formalism-sensitive Mapping: The peak probing layer for recoverable semantic roles or Proto-Roles in BERT depends systematically on annotation scheme—syntactically anchored formalisms (PropBank) peak mid-network, while decompositional semantic properties require deeper representations (Kuznetsov et al., 2020).

- Contextualization and Extraction: The accessibility of human-relevant semantic features (e.g., affective, experiential norms) peaks in mid-layers and is heavily contingent on the contextualization of probes—template or averaged sentence contexts outperform isolated tokens (Tikhomirova et al., 7 Jan 2026).
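Models of different depths (e.g., 12 versus 24 layers) are easier to compare once each profile is placed on a relative-depth axis. The following sketch is an illustrative assumption rather than the method of the cited works: it interpolates a per-layer profile onto a shared grid in [0, 1] and summarizes it by a score-weighted mean depth. All function names are hypothetical.

```python
import numpy as np


def relative_depths(n_layers):
    """Place layer l (1-indexed) of an n-layer model at relative depth l / n."""
    return np.arange(1, n_layers + 1) / n_layers


def to_relative_depth(scores, grid):
    """Linearly interpolate a per-layer profile onto a shared relative-depth grid."""
    scores = np.asarray(scores, dtype=float)
    return np.interp(grid, relative_depths(len(scores)), scores)


def weighted_depth(scores):
    """Score-weighted mean relative depth; larger values mean the signal sits deeper."""
    scores = np.asarray(scores, dtype=float)
    gain = scores - scores.min()  # measure improvement over the weakest layer
    if gain.sum() == 0:
        return float("nan")  # flat profile: no preferred depth
    return float(np.sum(gain * relative_depths(len(scores))) / gain.sum())


# Usage: a 4-layer model that peaks early versus a 6-layer model that peaks at the top.
early = [0.2, 0.9, 0.6, 0.5]
late = [0.2, 0.3, 0.4, 0.5, 0.7, 0.9]
grid = np.linspace(0.0, 1.0, 11)
print(to_relative_depth(early, grid))
print(to_relative_depth(late, grid))
print(weighted_depth(early), weighted_depth(late))
```

Grid points shallower than the first layer are clamped to the first layer's score by `np.interp`, so the low end of the grid should be read with care.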

6. Metrics, Visualization, and Interpretability

Quantitative and qualitative analyses include:

- Linearity Metrics: Macro-F1, accuracy, $R^2$, V-information, MDL, mutual information gap (MIG), and auxiliary selectivity/confidence scores.

- Visualization: Probing weight visualization (e.g., block-diagonal emergence for digits (Yan, 9 Jun 2025)), representational clustering via PCA/t-SNE, activation heatmaps of attentive probes across spatial or feature dimensions (Psomas et al., 11 Jun 2025).

- Diagnostic Use: Early-layer probe failure identifies training bottlenecks; shifts in representational similarity/probe accuracy guide fine-tuning and model surgery.

- Editing Directions: Probing hyperplanes define linear boundaries interpretable for intervention; their normals can be used for controlled editing (e.g., personality attribute flipping) (Ju et al., 14 Apr 2025, Ju et al., 2024).
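For a linear probe with weight vector $w$ and bias $b$, the smallest (L2) change that sets the probe score $w^\top h + b$ to a chosen value $s$ moves $h$ along the hyperplane normal: $h' = h + \frac{s - (w^\top h + b)}{\lVert w \rVert^2}\, w$. The sketch below implements that projection plus a `flip` helper that pushes a state to the opposite side of the boundary at a fixed margin. It is a generic illustration under these assumptions, not the editing procedure of the cited papers; the names and the default margin are hypothetical.

```python
import numpy as np


def edit_along_probe(h, w, b, target_score):
    """Smallest L2 change to h that makes the linear probe score w.h + b equal target_score."""
    current = float(h @ w + b)
    return h + (target_score - current) / float(w @ w) * w


def flip(h, w, b, margin=1.0):
    """Move h to the opposite side of the probe boundary, ending at score -margin * side."""
    side = np.sign(h @ w + b)  # +1 or -1; a state exactly on the boundary (0) stays at score 0
    return edit_along_probe(h, w, b, -side * margin)


# Usage with a hypothetical 3-dimensional probe.
w = np.array([2.0, -1.0, 0.5])
b = 0.3
h = np.array([1.0, 0.5, -2.0])  # probe score 0.8 (positive side)
h_edit = flip(h, w, b)
print(h_edit @ w + b)
```

Because the edit is parallel to $w$, it changes only the component of $h$ that this probe reads; whether downstream layers respond in the intended way is an empirical question the probe alone cannot answer.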

7. Theoretical and Practical Implications

The body of layer-wise probing research makes several high-impact contributions:

- Deep models implement multi-phase, compositionally aligned pipelines even under end-to-end training, with intermediate layers paralleling classic (linguistic, computational, algorithmic) processing stages.

- The final layer is rarely the optimal point for extracting abstract, human-aligned, or transferable features; intermediate layers are typically more selective and interpretable for probing (Tikhomirova et al., 7 Jan 2026).

- Probing enables comparisons of depth allocation, localization of emergent behavior, and identification of optimal points for pruning, freezing, or targeted intervention.

- Cross-modal and multilingual analyses suggest universality of layered hierarchies but also expose resource and architectural gaps.

- Probing non-invasively reveals the “computational anatomy” of deep models and guides not only interpretability but also efficient model design (e.g., deep encoders and shallow decoders in NMT (Xu et al., 2020), stage-wise dynamic reasoning (Gupta et al., 21 Oct 2025)).

By rigorously mapping the layer-wise informational topology of neural networks, probing studies constitute an indispensable bridge between black-box architectures and functional, interpretable, and efficient AI systems.