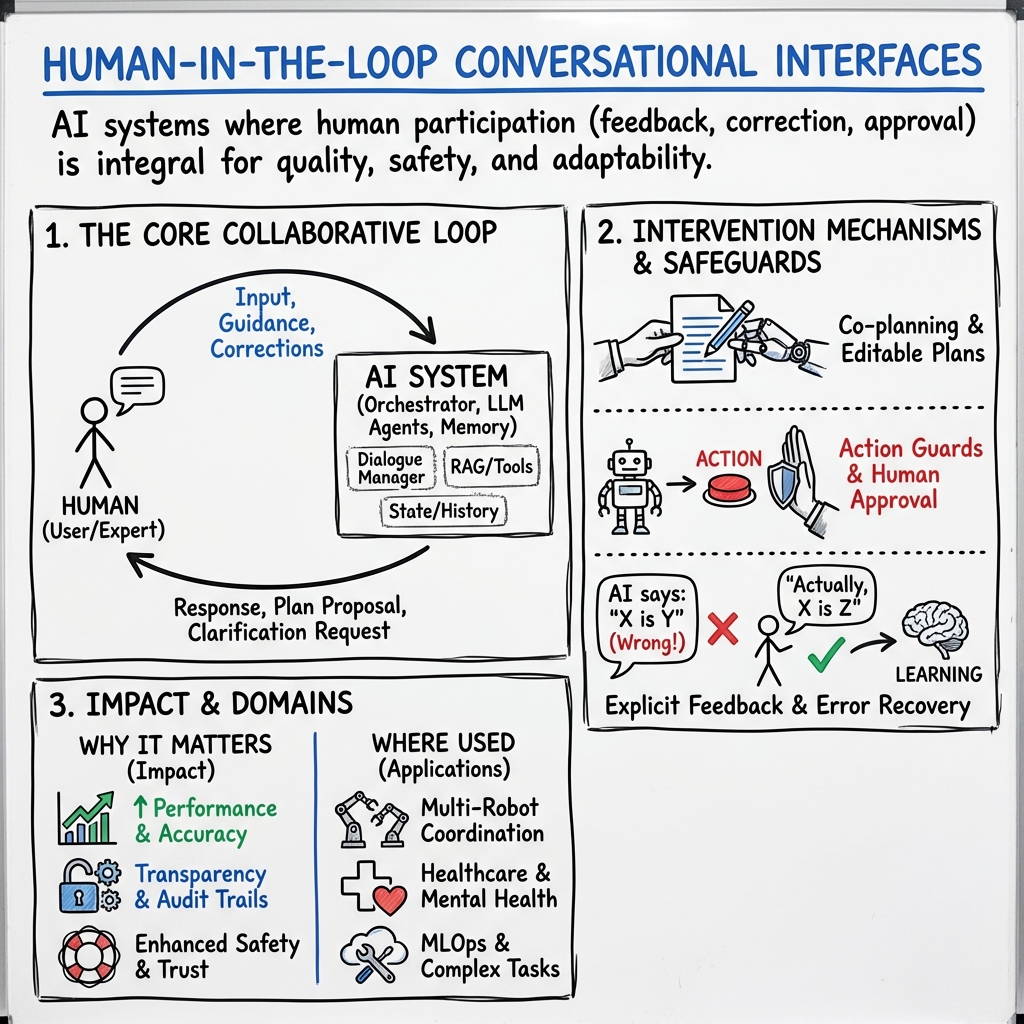

Human-in-the-Loop Conversational Interface

- Human-in-the-loop conversational interfaces are systems that blend human oversight with AI dialogue management to enhance adaptability, safety, and control.

- They rely on methods such as context-aware orchestration, dynamic feedback integration, and domain-specific safety protocols to improve performance and reliability.

- Practical applications span multi-robot coordination, healthcare, and MLOps, with reported efficiency gains and reduced error rates.

A human-in-the-loop conversational interface is an architectural paradigm in which human participants remain integral to the operation, quality assurance, and adaptability of AI-driven dialogue systems. These systems capitalize on human feedback, arbitration, correction, or co-planning to inject domain expertise, ethical judgment, safety constraints, and adaptive behavioral refinement beyond what fully autonomous agents can achieve. The approach spans diverse contexts—from task guidance, multi-robot coordination, MLOps, and pharmacologic modeling to mental-health care, explainable AI, and multimodal embodied interaction—each with bespoke workflow designs, evaluation metrics, and mathematical formulations.

1. Architectural Patterns and System Components

Human-in-the-loop conversational systems exhibit heterogeneous architectures tailored to the application domain and interaction regime, yet share core features:

- Interaction Front Ends: Graphical user interfaces (chat windows, plan editors, video streams) facilitate real-time, bidirectional exchanges between users and agents (Hunt et al., 2024, Manuvinakurike et al., 2022, Fatouros et al., 16 Apr 2025, Mozannar et al., 30 Jul 2025, Bazgir et al., 5 Dec 2025).

- Conversational Orchestrators: Middleware aggregates utterances, manages session context, routes requests to specialized agents or modules, and maintains global state via contextual memory (rolling vector embeddings, chat histories) (Hunt et al., 2024, Fatouros et al., 16 Apr 2025, Bazgir et al., 5 Dec 2025).

- Agent Controllers/Submodules: Specialized components such as LLM-based dialogue managers, retrieval-augmented generation (RAG) agents, planning agents, data brokers, task guidance wizards, and justification modules interact with both users and external APIs and services (Fatouros et al., 16 Apr 2025, Hunt et al., 2024, Manuvinakurike et al., 2022).

- State and Protocol Layering: Platforms leverage state machines to manage conversation phases (e.g., understanding/validation/action/error recovery), enforce constraint checks, or synchronize multimodal inputs and outputs (Bazgir et al., 5 Dec 2025, Preece et al., 2014, Arias-Russi et al., 31 Aug 2025).

- Extensibility: Protocols such as the Model Context Protocol (MCP) enable plug-and-play integration of new tools, permitting dynamic augmentation of agent capabilities (Mozannar et al., 30 Jul 2025).
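The phase-based state machines described above can be illustrated with a small transition table. This is a minimal sketch, assuming four phases named after the examples in the text (understanding, validation, action, error recovery) and hypothetical event names (`parsed`, `confirmed`, `rejected`, `succeeded`, `failed`, `recovered`); none of the cited platforms is claimed to use exactly these transitions.

```python
from enum import Enum, auto
from typing import Dict, List, Tuple


class Phase(Enum):
    UNDERSTANDING = auto()
    VALIDATION = auto()
    ACTION = auto()
    ERROR_RECOVERY = auto()


# Hypothetical transition table: (current phase, event) -> next phase.
TRANSITIONS: Dict[Tuple[Phase, str], Phase] = {
    (Phase.UNDERSTANDING, "parsed"): Phase.VALIDATION,
    (Phase.UNDERSTANDING, "failed"): Phase.ERROR_RECOVERY,
    (Phase.VALIDATION, "confirmed"): Phase.ACTION,        # user approves the interpretation
    (Phase.VALIDATION, "rejected"): Phase.UNDERSTANDING,  # user corrects it; re-parse
    (Phase.ACTION, "succeeded"): Phase.UNDERSTANDING,     # ready for the next request
    (Phase.ACTION, "failed"): Phase.ERROR_RECOVERY,
    (Phase.ERROR_RECOVERY, "recovered"): Phase.UNDERSTANDING,
}


class DialogueStateMachine:
    def __init__(self) -> None:
        self.phase = Phase.UNDERSTANDING
        self.history: List[Tuple[Phase, str, Phase]] = []

    def handle(self, event: str) -> Phase:
        """Advance on `event`; any unlisted (phase, event) pair falls back to error recovery."""
        nxt = TRANSITIONS.get((self.phase, event), Phase.ERROR_RECOVERY)
        self.history.append((self.phase, event, nxt))
        self.phase = nxt
        return nxt


# Usage: the user rejects the first interpretation, then confirms a corrected one.
sm = DialogueStateMachine()
for event in ["parsed", "rejected", "parsed", "confirmed", "succeeded"]:
    sm.handle(event)
print(sm.phase)  # Phase.UNDERSTANDING
```

Routing unknown events to error recovery is a deliberately conservative default: the agent never reaches the action phase without passing through validation, which is where the human confirms or corrects.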

| Layer | Example Component | Role |
|---|---|---|
| UI | Chat window, plan editor | User interaction, feedback |
| Orchestrator | Python/Chainlit backend | Session/context management |
| Specialized agent | KFP, RAG, Reasoning, Wizard | Domain/task-specific logic |
| External services | ROS2, Kubeflow, MinIO, LLM | Real-world actuation, computation |
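The orchestrator layer in the table can be sketched as a router that keeps a rolling window of recent turns and dispatches each utterance to a specialized agent. This is a minimal, hypothetical sketch: the keyword-based routing rule, the agent names (`kfp`, `rag`, `general`), and the window size are illustrative assumptions, whereas the cited systems use richer context (e.g., vector embeddings) and LLM-based routing.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Tuple

# An agent receives the user utterance plus recent context and returns a reply.
Agent = Callable[[str, List[Tuple[str, str]]], str]


class Orchestrator:
    def __init__(self, agents: Dict[str, Agent], routes: Dict[str, str],
                 default: str, window: int = 6) -> None:
        self.agents = agents
        self.routes = routes    # keyword -> agent name (hypothetical routing rule)
        self.default = default
        # Rolling session memory: the oldest turns drop out once `window` is reached.
        self.context: Deque[Tuple[str, str]] = deque(maxlen=window)

    def route(self, utterance: str) -> str:
        text = utterance.lower()
        for keyword, agent_name in self.routes.items():
            if keyword in text:
                return agent_name
        return self.default

    def handle(self, utterance: str) -> Tuple[str, str]:
        name = self.route(utterance)
        reply = self.agents[name](utterance, list(self.context))
        # Store both sides of the exchange so later turns see the session context.
        self.context.append(("user", utterance))
        self.context.append((name, reply))
        return name, reply


# Usage with stub agents standing in for pipeline, documentation, and fallback handlers.
agents: Dict[str, Agent] = {
    "kfp": lambda u, ctx: f"[pipeline agent] handling: {u}",
    "rag": lambda u, ctx: f"[docs agent] {len(ctx)} context turns available",
    "general": lambda u, ctx: "[general] How can I help?",
}
orc = Orchestrator(agents, routes={"pipeline": "kfp", "docs": "rag"}, default="general")
name, reply = orc.handle("Run the training pipeline")      # routed to "kfp"
name, reply = orc.handle("Where are the docs for MinIO?")  # routed to "rag"; sees 2 prior turns
```

Keeping the agents behind a single callable signature is what lets new specialized agents be added without changing the orchestrator itself.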

2. Dialogue Management, Feedback, and Intervention Mechanisms

Human-in-the-loop dialogue systems implement multiple forms of human participation:

- Co-planning and Editable Plans: Humans jointly craft or alter step-by-step agent plans, reviewing, correcting, and authorizing before execution (Mozannar et al., 30 Jul 2025).

- Direct Intervention: Human participants interject during task execution (e.g., by supplying constraints, clarifying ambiguous instructions, solving CAPTCHAs) (Hunt et al., 2024, Mozannar et al., 30 Jul 2025).

- Supervisory or Wizard Control: Human "wizards" (operators in Wizard-of-Oz setups) mediate agent responses, inject domain expertise, select utterances, and alternate with the agent in controlling navigation or conversation (Manuvinakurike et al., 2022, Arias-Russi et al., 31 Aug 2025).

- Feedback and Error Recovery: Systems ingest evaluative signals—ranging from numerical rewards or accept/reject choices to open-text feedback and slot-filling clarifications—to refine agent behavior and update internal state (Li et al., 2016, Bazgir et al., 5 Dec 2025, Preece et al., 2014).

- Action Guards and Safety Constraints: Pre-execution guards and user approval prompts mitigate risks of misaligned or irreversible actions (Mozannar et al., 30 Jul 2025).

- Long-Term Memory and Learning: Session traces and user corrections are stored and leveraged for continual improvement, plan retrieval, and context adaptation (Mozannar et al., 30 Jul 2025).
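Co-planning and action guards can be combined in a simple plan-then-approve loop: the agent drafts steps, a human may edit or delete them, and any step flagged as irreversible runs only after explicit approval. The sketch below is illustrative; the `Step` and `Plan` classes, the `irreversible` flag, and the `run`/`approve` callbacks are hypothetical names, not the API of the cited framework.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Step:
    description: str
    irreversible: bool = False  # hypothetical flag set by the planner or a guard


@dataclass
class Plan:
    steps: List[Step] = field(default_factory=list)

    def edit(self, index: int, description: str) -> None:
        """Human co-planning: rewrite a step before execution."""
        self.steps[index].description = description

    def remove(self, index: int) -> None:
        """Human co-planning: drop a step the agent should not take."""
        del self.steps[index]


def execute(plan: Plan, run: Callable[[Step], str],
            approve: Callable[[Step], bool]) -> List[str]:
    """Run steps in order; an irreversible step needs human approval first."""
    log: List[str] = []
    for step in plan.steps:
        if step.irreversible and not approve(step):
            log.append(f"BLOCKED: {step.description}")
            break  # stop so the human can revise the plan
        log.append(run(step))
    return log


# Usage: the human corrects step 1, then declines the payment step at execution time.
plan = Plan([Step("Search flights to Boston"),
             Step("Select cheapest fare"),
             Step("Pay with saved card", irreversible=True)])
plan.edit(1, "Select cheapest nonstop fare")
log = execute(plan, run=lambda s: f"done: {s.description}", approve=lambda s: False)
# log == ["done: Search flights to Boston",
#         "done: Select cheapest nonstop fare",
#         "BLOCKED: Pay with saved card"]
```

Halting at the first blocked step, rather than skipping it, keeps later steps from running on assumptions the human has just rejected.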

3. Methodologies for Quality, Adaptivity, and Reasoning

Distinct application areas motivate specialized methodologies:

- Task Guidance & Embodied Agents: Wizard-of-Oz interfaces fused with action segmentation, multimodal retrieval (CLIP, Sentence-BERT), semantic frame extraction, and slot-based question generation facilitate shared initiative, error correction, and causal online inference (Manuvinakurike et al., 2022, Arias-Russi et al., 31 Aug 2025).

- Argumentative Multi-Agent Planning: Decentralized, peer-to-peer planning via argument-style dialogue acts (PROPOSE, CHALLENGE, CLARIFY, ACCEPT) supports consensus negotiation and adaptation to live human constraint injection (Hunt et al., 2024).

- Explainable AI Decision Support: Conversational XAI architectures coordinate menu-driven and LLM-powered explanation modules (e.g., SHAP, PDP, MACE, Decision Trees, WhatIf) and inject evaluative scaffolding to compare model rationale to user-specified criteria (He et al., 29 Jan 2025).

- Graph Reasoning and Constraint Enforcement: Typed knowledge-graph manipulation, BFS-based parameter alignment, mass-balance checks, and iterative code generation underpin specialized conversational modeling workflows, as seen in quantitative systems pharmacology (QSP) platforms (Bazgir et al., 5 Dec 2025).

- Sensing and Fusion: Formal message-passing protocols carry the dialogue from natural-language (NL) inputs through a rule-based Controlled English (CE) representation, Bayesian fusion, and explainable rationale generation (Preece et al., 2014).
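The argument-style planning dialogue can be sketched as a loop in which a planner proposes assignments, each participant either accepts or challenges with a reason, and a human joins as one more participant whose injected constraint can block a proposal. This toy sketch rests on simplifying assumptions (a single proposer, constraints as predicates, a fixed candidate list) and omits the CLARIFY act for brevity; `negotiate` and the message format are hypothetical, not the protocol of the cited work.

```python
from enum import Enum
from typing import Callable, Dict, List, Optional, Tuple


class Act(Enum):
    PROPOSE = "PROPOSE"
    CHALLENGE = "CHALLENGE"
    ACCEPT = "ACCEPT"


Proposal = Dict[str, str]
# A constraint returns a reason string if it objects to a proposal, else None.
Constraint = Callable[[Proposal], Optional[str]]
Message = Tuple[str, Act, str]  # (speaker, dialogue act, content)


def negotiate(candidates: List[Proposal],
              peers: Dict[str, List[Constraint]]) -> Tuple[Optional[Proposal], List[Message]]:
    """Propose candidates in order; return the first one that every peer ACCEPTs."""
    transcript: List[Message] = []
    for proposal in candidates:
        transcript.append(("planner", Act.PROPOSE, str(proposal)))
        unanimous = True
        for peer, constraints in peers.items():
            reasons = [r for r in (c(proposal) for c in constraints) if r is not None]
            if reasons:
                unanimous = False
                transcript.append((peer, Act.CHALLENGE, "; ".join(reasons)))
            else:
                transcript.append((peer, Act.ACCEPT, ""))
        if unanimous:
            return proposal, transcript
    return None, transcript  # no consensus: escalate to the human operator


# Usage: robot_a objects to any assignment because of low battery, and a human
# injects the constraint "zone 3 is closed" as an additional participant.
peers: Dict[str, List[Constraint]] = {
    "robot_a": [lambda p: "battery low" if p["robot"] == "robot_a" else None],
    "robot_b": [],
}
peers["human"] = [lambda p: "zone 3 is closed" if p["zone"] == "3" else None]
candidates = [
    {"robot": "robot_a", "task": "patrol", "zone": "1"},
    {"robot": "robot_b", "task": "patrol", "zone": "3"},
    {"robot": "robot_b", "task": "patrol", "zone": "2"},
]
agreed, transcript = negotiate(candidates, peers)
# agreed == {"robot": "robot_b", "task": "patrol", "zone": "2"}
```

Keeping the full transcript of acts, rather than only the outcome, is what makes the negotiation auditable after the fact.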

4. Mathematical Formulations and Quantitative Evaluation

Rigorous mathematical frameworks underpin several subsystems:

- Contextual Embedding Updates: Session context evolves via concatenation and transformation of user utterances and agent responses (Fatouros et al., 16 Apr 2025).

- Quality-of-Information Fusion: Bayesian updates, reliability-weighted aggregation, and coreference resolution combine multimodal evidence (Preece et al., 2014).

- Reward-Based and Forward Prediction Learning: Hybrid objectives that combine an imitation loss with a textual-feedback prediction (forward prediction) loss drive online learning (Li et al., 2016).

- Unit, Mass-Balance, and Physiological Constraints: Dimensional analysis, stoichiometric-matrix (mass-balance) checks, and bounded-range enforcement maintain model fidelity during graph-edit workflows (Bazgir et al., 5 Dec 2025).

- Task Completion, Error Rate, and SUS Scores: Empirical evaluation metrics—mean rank, mean reciprocal rank, accuracy, switch/RAIR/RSR fractions, usability scores (SUS), and time-to-completion—quantify system efficacy and user experience (Chattopadhyay et al., 2017, Fatouros et al., 16 Apr 2025, Mozannar et al., 30 Jul 2025, Arias-Russi et al., 31 Aug 2025).
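Two of the formulations above can be made concrete. The sketch below shows (a) a reliability-weighted Bayesian update in log-odds form, one standard way to fuse binary reports from sources of differing reliability, and (b) mean rank and mean reciprocal rank computed from the rank of the target item. The conditional-independence and symmetric-noise assumptions, and the function names, are ours for illustration; the cited systems' exact fusion and scoring rules may differ.

```python
import math
from typing import Iterable, Sequence, Tuple


def fuse(prior: float, reports: Iterable[Tuple[bool, float]]) -> float:
    """Posterior P(H) from binary reports given as (says_true, reliability).

    Assumes 0 < prior < 1, 0 < reliability < 1, sources conditionally
    independent given H, and a source with reliability r reporting the
    correct value with probability r whether or not H holds.
    """
    log_odds = math.log(prior / (1.0 - prior))
    for says_true, r in reports:
        weight = math.log(r / (1.0 - r))  # 0 for an uninformative source (r = 0.5)
        log_odds += weight if says_true else -weight
    return 1.0 / (1.0 + math.exp(-log_odds))


def mean_rank_and_mrr(ranks: Sequence[int]) -> Tuple[float, float]:
    """Mean rank and mean reciprocal rank of the target item (rank 1 = best)."""
    mean_rank = sum(ranks) / len(ranks)
    mrr = sum(1.0 / r for r in ranks) / len(ranks)
    return mean_rank, mrr


# Usage: two sources support H, a fairly reliable one disputes it.
posterior = fuse(0.5, [(True, 0.9), (True, 0.6), (False, 0.7)])  # about 0.853
mr, mrr = mean_rank_and_mrr([1, 2, 4])  # about (2.333, 0.583)
```

Working in log-odds turns each report into an additive weight, so a source's influence scales with how far its reliability is from chance.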

5. Applications and Domain-Specific Instantiations

Human-in-the-loop conversational interfaces have been deployed in a spectrum of domains:

- Multi-Robot Systems: Orchestrated planning, role negotiation, and real-time replanning, coordinated through dialogue among robot peers and human operators (Hunt et al., 2024).

- Task Guidance: Procedural scaffolding for complex human tasks using video stream analysis, semantic structuring, and wizard mediation for multi-modal annotation (Manuvinakurike et al., 2022).

- Visual Conversational Games: Human-AI collaborative image-guessing games reveal mismatches between AI-alone benchmarks and true team performance (Chattopadhyay et al., 2017).

- Healthcare and Mental Health: Human-in-the-loop (HITL) architectures preserve safety and auditability, integrating AI companions with explicit human oversight or tool-assisted therapeutic expertise (Lukas, 5 Jan 2025, Arias-Russi et al., 31 Aug 2025).

- MLOps and Knowledge Work: Conversational MLOps assistants encapsulate orchestration, data, and documentation queries, lowering barriers to entry for non-expert users (Fatouros et al., 16 Apr 2025).

- Agentic Systems and Web Automation: Multi-agent frameworks adopt co-planning, co-tasking, verification, and memory mechanisms for safe, auditable tool use (Mozannar et al., 30 Jul 2025).

- Systems Pharmacology Modeling: Interactive graph editing, intent parsing, parameter alignment, and constrained code generation enable accessible, error-resistant QSP workflows (Bazgir et al., 5 Dec 2025).

- Conversational Sensing: Transparent translation between NL and CE, sensor fusion, and rationale replay advance explainability and collaborative situation awareness (Preece et al., 2014).

6. Impact, Usability, and Operational Challenges

Human-in-the-loop conversational interfaces have been reported to improve flexibility, accessibility, robustness, and safety:

- Performance: Reported efficiency gains of 50–60% over manual platforms, reductions in user errors of more than 50%, and broad usability across levels of technical expertise (Fatouros et al., 16 Apr 2025, Mozannar et al., 30 Jul 2025).

- Transparency: Persistent chat logs, actionable traces, rationale relaying, and explicit confirmation cycles render agentic actions auditable (Hunt et al., 2024, Preece et al., 2014).

- User Trust and Reliance: Conversational interfaces enhance user engagement and subjective trust, though the “illusion of explanatory depth” and over-reliance remain persistent challenges, particularly when explanations are powered by highly plausible LLM generations (He et al., 29 Jan 2025).

- Safety and Security: Layered action guards, sandboxing, explicit approval prompts, and domain whitelists mitigate autonomy-associated risks and adversarial manipulation (Mozannar et al., 30 Jul 2025).
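A domain whitelist layered with an approval prompt can be sketched as a guard called before every navigation. The function name `make_navigation_guard`, the subdomain-matching rule, and the restriction to `http`/`https` schemes are illustrative assumptions, not the guard implementation of the cited framework; only `urllib.parse.urlparse` is a real standard-library call.

```python
from typing import Callable, FrozenSet
from urllib.parse import urlparse


def make_navigation_guard(allowed: FrozenSet[str],
                          approve: Callable[[str], bool]) -> Callable[[str], bool]:
    """Return a guard that permits a URL if its host is whitelisted and
    otherwise escalates to an explicit human approval prompt."""
    def guard(url: str) -> bool:
        parsed = urlparse(url)
        if parsed.scheme not in ("http", "https"):
            return False  # e.g. file: or javascript: URLs are never allowed
        host = (parsed.hostname or "").lower()
        # Accept exact matches and true subdomains of whitelisted domains only.
        if any(host == d or host.endswith("." + d) for d in allowed):
            return True
        return approve(url)  # layer 2: ask the human
    return guard


# Usage: the stub approver stands in for a UI prompt and always declines.
guard = make_navigation_guard(frozenset({"example.com"}), approve=lambda url: False)
guard("https://docs.example.com/page")  # True (subdomain of a whitelisted domain)
guard("https://example.com.evil.net/")  # False (not whitelisted; human declined)
guard("file:///etc/passwd")             # False (scheme blocked before any prompt)
```

Matching on the parsed hostname, rather than on a substring of the raw URL, is what stops look-alike hosts such as `example.com.evil.net` from slipping through the whitelist.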

7. Future Directions and Design Guidelines

Continual evolution of HITL architectures is guided by:

- Mixed-Initiative and Adaptivity: Optimizing task handover protocols and scaffolding for seamless human-agent role alternation (e.g., co-planning, slot suggestions, back-and-forth clarifications) (Manuvinakurike et al., 2022, Mozannar et al., 30 Jul 2025).

- Context Awareness and Feedback Integration: Leveraging session memory, agent personalization, multimodal fusion, and feedback-driven retraining to adapt to user needs and environmental drift (Zhou et al., 10 Oct 2025, Manuvinakurike et al., 2022, Chattopadhyay et al., 2017).

- Explainability, Trust Calibration, and Auditability: Embedding cues for uncertainty, counterfactual reasoning, and provenance, aligning natural-language clarity with technical faithfulness (He et al., 29 Jan 2025, Preece et al., 2014, Lukas, 5 Jan 2025).

- Scalable and Modular Tooling: Protocol-based tool discovery, containerized agent processes, and open-source evaluation suites accelerate extensibility and reproducible research (Mozannar et al., 30 Jul 2025, Arias-Russi et al., 31 Aug 2025).

- Domain-Specific Safety and Ethics: Continuous monitoring, role-specific UI affordances, and staged deployment strategies underpin responsible integration in sensitive areas, such as health and public safety (Lukas, 5 Jan 2025, Arias-Russi et al., 31 Aug 2025).
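Protocol-based tool discovery can be illustrated, in-process, with a registry that tools join at runtime and that an agent queries before calling. This is a hypothetical analogue for illustration only: `ToolRegistry`, `register`, `describe`, and `call` are our names and do not reproduce the Model Context Protocol, which defines a client-server message protocol rather than a Python class.

```python
import inspect
from typing import Any, Callable, Dict, List


class ToolRegistry:
    """Plug-and-play registry: tools register themselves, and the agent
    discovers them at runtime instead of having them hard-coded."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}

    def register(self, fn: Callable[..., Any]) -> Callable[..., Any]:
        """Decorator that adds `fn` under its function name."""
        self._tools[fn.__name__] = fn
        return fn

    def describe(self) -> List[Dict[str, Any]]:
        """What an agent would see when it lists the available tools."""
        return [
            {"name": name,
             "doc": inspect.getdoc(fn) or "",
             "params": list(inspect.signature(fn).parameters)}
            for name, fn in sorted(self._tools.items())
        ]

    def call(self, name: str, **kwargs: Any) -> Any:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        # Raises TypeError early if the arguments do not match the signature.
        inspect.signature(self._tools[name]).bind(**kwargs)
        return self._tools[name](**kwargs)


# Usage: registering a tool makes it discoverable without changing agent code.
registry = ToolRegistry()


@registry.register
def word_count(text: str) -> int:
    """Count whitespace-separated words."""
    return len(text.split())


registry.describe()  # [{'name': 'word_count', 'doc': 'Count whitespace-separated words.', 'params': ['text']}]
registry.call("word_count", text="human in the loop")  # 4
```

Validating arguments against the signature before dispatch gives the agent (or the human reviewing its trace) a clear error instead of a failure deep inside the tool.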

In summary, human-in-the-loop conversational interfaces constitute an essential class of AI-driven collaborative systems, enabling rigorous control, adaptability, transparency, and robustness across task domains where autonomous agents alone remain insufficient or unsafe. The literature shows rapid progress in architectural diversity, evaluation methodology, usability, and impact, while highlighting ongoing challenges in calibrating reliance, ethical oversight, and longitudinal adaptation.