Advantage Design for RLVR: Strategies & Insights

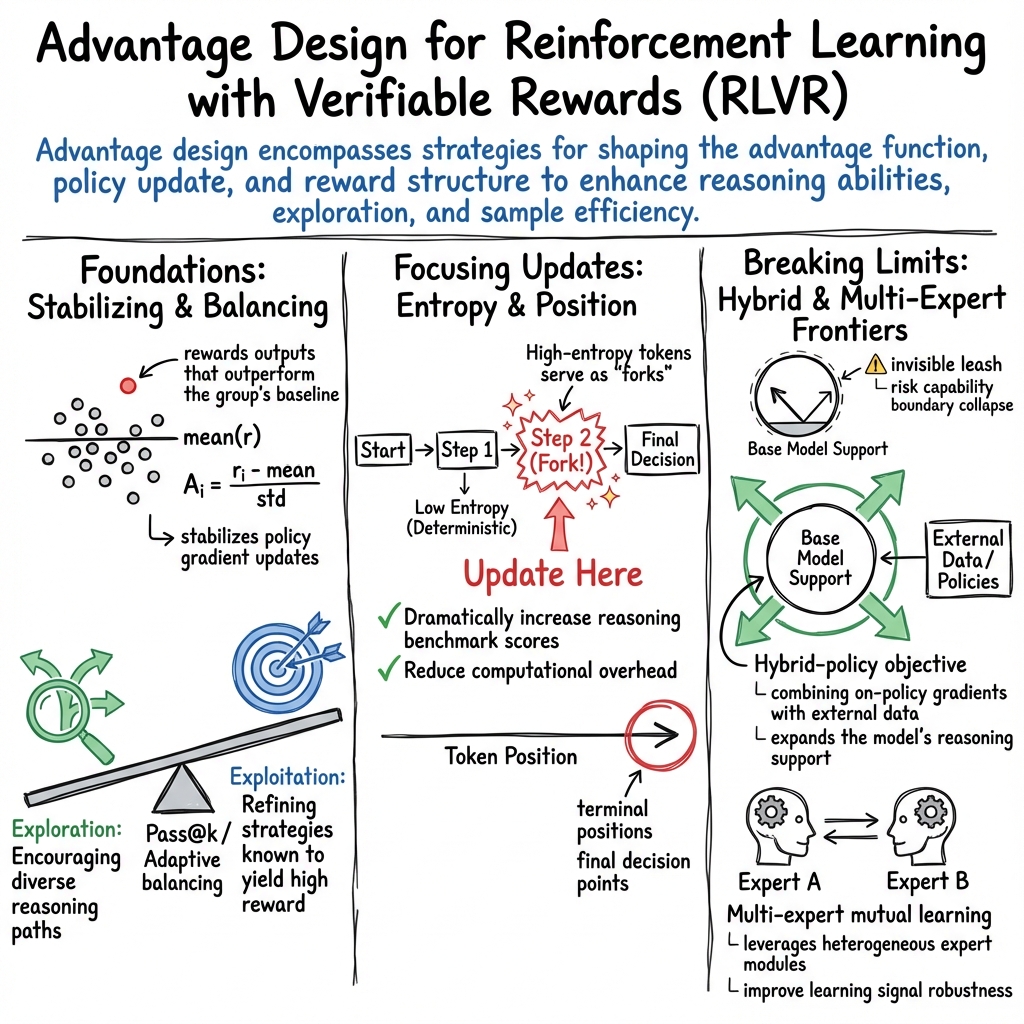

- Advantage design for RLVR is a set of reward shaping techniques that adjust token-level advantages to improve exploration, reasoning, and sample efficiency.

- The method employs entropy-aware token selection and Pass@k-based strategies to balance exploration and exploitation dynamically.

- Hybrid-policy and multi-expert architectures strengthen performance while mitigating capability collapse in advanced AI models.

Advantage design for Reinforcement Learning with Verifiable Rewards (RLVR) encompasses strategies for shaping the advantage function, policy update, and reward structure to enhance reasoning abilities, exploration, and sample efficiency in both language and vision models. The recent RLVR literature provides multiple orthogonal insights on advantage mechanisms, from token entropy-aware policies to hybrid policy optimization and structured multi-expert mutual learning. The following sections synthesize key principles, empirical results, and methodologies that characterize the state of advantage design in RLVR.

1. Foundations of Advantage Design in RLVR

RLVR systems employ an advantage function to weight RL policy updates, emphasizing outputs (or tokens) that are more valuable under the reward objective. The canonical group-normalized advantage is given by

$$A_i = \frac{r_i - \operatorname{mean}\left(\{r_j\}_{j=1}^{G}\right)}{\operatorname{std}\left(\{r_j\}_{j=1}^{G}\right)}$$

where $r_i$ denotes the verifiable reward for candidate output $o_i$ within a sampling group of $G$ outputs (Wang et al., 29 Apr 2025). This normalization stabilizes policy gradient updates and rewards outputs that outperform the group’s baseline, even in settings with sparse or binary feedback.
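As a minimal sketch of group normalization, assuming the common form in which each reward is standardized by the mean and standard deviation of its own sampling group, the computation for one prompt could look like the following. The function name and the `eps` stabilizer are illustrative assumptions, not taken from the cited work.

```python
from statistics import fmean, pstdev


def group_normalized_advantages(rewards, eps=1e-6):
    """Standardize verifiable rewards within one sampling group."""
    mean = fmean(rewards)
    std = pstdev(rewards)  # population standard deviation of the group
    # eps (an assumed stabilizer) avoids division by zero when every
    # response in the group receives the same reward.
    return [(r - mean) / (std + eps) for r in rewards]


# Four sampled responses to one prompt, scored by a binary verifier.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_normalized_advantages(rewards)
print(advantages)
```

Correct responses receive a positive advantage and incorrect ones a negative advantage of equal magnitude here; a group in which all responses get the same reward yields zero advantages and therefore no gradient signal.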

Advantage design in RLVR must reconcile several competing objectives:

- Exploration: Encouraging the model to traverse diverse reasoning paths, particularly those with low prior probability under the base policy.

- Exploitation: Refining and concentrating probability mass on reasoning strategies known to yield high reward.

- Sample Efficiency: Focusing the optimization on tokens, samples, or data points where learning potential is highest.

Modern RLVR research highlights that policy gradient loss, when paired with group-normalized or exploration-aware advantage calculation, implicitly regularizes the model and incentivizes the emergence of reasoning capabilities—often without explicit reasoning supervision (Wang et al., 29 Apr 2025, Zhang et al., 27 Feb 2025).

2. Entropy-Aware and Token-Level Advantage Strategies

Recent work reveals that only a minority of tokens in chain-of-thought (CoT) reasoning—typically the top 20% by entropy—serve as “forks” in the reasoning process, driving logical transitions and exploration (Wang et al., 2 Jun 2025). By explicitly restricting policy gradient updates to these high-entropy tokens, RLVR can:

- Raise reasoning benchmark scores, with the largest reported gains in larger LLMs.

- Improve generalization to out-of-domain tasks by focusing learning capacity on decision-critical moments.

- Reduce computational overhead by avoiding updates to deterministic (low-entropy) tokens.

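The selective gradient update can be formalized as

$$\mathcal{J}(\theta) = \mathbb{E}\left[\frac{1}{\sum_{i=1}^{G}|o_i|}\sum_{i=1}^{G}\sum_{t=1}^{|o_i|} \mathbb{I}\left[H_{i,t} \ge \tau_\rho\right]\,\ell_{i,t}(\theta)\right]$$

where $\ell_{i,t}(\theta)$ is the usual advantage-weighted (clipped) policy-gradient term for token $t$ of output $o_i$, $H_{i,t}$ is the entropy of the policy at that token, $\tau_\rho$ is the entropy threshold for the top-$\rho$ quantile (e.g., $\rho = 20\%$), and the indicator $\mathbb{I}[\cdot]$ masks updates to only the top quantile in entropy (Wang et al., 2 Jun 2025).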
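The masking step can be sketched as follows. This is a simplified illustration that computes the threshold over a single sequence and assumes per-token entropies are already available; the function names and the 20% default are assumptions for the example, and a real training loop would typically compute the threshold over a whole batch.

```python
import math


def token_entropy(probs):
    """Shannon entropy (in nats) of one next-token distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def high_entropy_mask(entropies, top_fraction=0.2):
    """Return 1 for tokens in the top `top_fraction` by entropy, else 0."""
    n_keep = max(1, math.ceil(top_fraction * len(entropies)))
    # Entropy of the n_keep-th most uncertain token acts as the threshold.
    threshold = sorted(entropies, reverse=True)[n_keep - 1]
    # Ties at the threshold are all kept, so slightly more than
    # n_keep tokens can pass the mask.
    return [1 if h >= threshold else 0 for h in entropies]


def masked_advantages(advantages, mask):
    """Zero out the advantage (and hence the gradient) of masked tokens."""
    return [a * m for a, m in zip(advantages, mask)]


entropies = [0.01, 1.2, 0.05, 0.02, 0.9, 0.03, 0.0, 0.04, 0.02, 0.01]
mask = high_entropy_mask(entropies, top_fraction=0.2)
token_advantages = masked_advantages([1.0] * len(entropies), mask)
```

Only the two highest-entropy positions keep a nonzero advantage in this example, so only those "fork" tokens would contribute to the policy gradient.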

Complementary to this are dual-token constraint approaches such as Archer (Wang et al., 21 Jul 2025), which apply weaker KL regularization and a wider clipping range to reasoning (high-entropy) tokens, while tightly constraining knowledge (low-entropy) tokens. This dual mechanism allows synchronous, role-aware updates, safeguarding core knowledge while progressively refining logical inference.
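A rough sketch of the dual-token idea, written as a per-token PPO-style clipped objective with a KL penalty, is shown below. The specific clip ranges and KL coefficients are hypothetical values chosen for illustration, and the function is an assumption about how such a constraint could be wired up rather than the Archer implementation.

```python
def dual_token_objective(ratio, advantage, kl, is_reasoning_token,
                         clip_reasoning=0.5, clip_knowledge=0.2,
                         beta_reasoning=0.0, beta_knowledge=0.1):
    """Per-token clipped surrogate with role-dependent constraints.

    ratio: new-policy / old-policy probability of the sampled token.
    kl: per-token KL estimate against the reference policy.
    All default hyperparameters are hypothetical.
    """
    if is_reasoning_token:   # high-entropy token: looser constraints
        eps, beta = clip_reasoning, beta_reasoning
    else:                    # low-entropy token: tighter constraints
        eps, beta = clip_knowledge, beta_knowledge
    clipped_ratio = min(max(ratio, 1.0 - eps), 1.0 + eps)
    surrogate = min(ratio * advantage, clipped_ratio * advantage)
    return surrogate - beta * kl


# The same probability ratio is clipped harder on a knowledge token.
reasoning_obj = dual_token_objective(1.4, 1.0, 0.1, True)
knowledge_obj = dual_token_objective(1.4, 1.0, 0.1, False)
```

With these assumed settings, the reasoning token keeps its full ratio of 1.4 while the knowledge token is clipped at 1.2 and additionally pays a KL penalty.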

3. Exploration-Exploitation Tradeoffs and Pass@k-Based Advantages

Classical RLVR schemes often use Pass@1 as the reward, incentivizing conservative exploitation. Pass@k training, by contrast, tunes the agent based on the probability that at least one out of $k$ rollouts is successful (Chen et al., 14 Aug 2025). Analytical derivation of the group-relative advantage for Pass@k yields:

- Efficient, low-variance gradient updates.

- Incentives for exploration in harder problem instances (where positive responses are rare).

- Adaptive balancing: On easy tasks, exploitation is favored; on hard tasks, exploration pressure is automatically higher.

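This analytical advantage can be written as

$$\hat{A}_{\mathrm{pos}} = \frac{1 - \bar{R}^{\mathrm{group}}}{\sigma^{\mathrm{group}}}, \qquad \bar{R}^{\mathrm{group}} = 1 - \frac{\binom{N_{\mathrm{neg}}}{k}}{\binom{N}{k}}, \qquad \sigma^{\mathrm{group}} = \sqrt{\bar{R}^{\mathrm{group}}\left(1 - \bar{R}^{\mathrm{group}}\right)}$$

for a positive response, with $\bar{R}^{\mathrm{group}}$ and $\sigma^{\mathrm{group}}$ derived from counts of correct/incorrect samples ($N$ rollouts in total, of which $N_{\mathrm{neg}}$ are incorrect). The strategy is extendable to adaptive advantage functions, where entropy or reward distribution can be used to dynamically adjust the balance between exploration-promoting and exploitation-promoting updates.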
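A worked sketch of the analytical Pass@k advantage follows. It assumes the setup in which groups of `k` responses are drawn without replacement from `n_rollouts` samples and a group is rewarded 1 if it contains at least one correct response; the mean and standard deviation of that binary group reward then follow from binomial coefficients. The function name and argument names are assumptions made for this example.

```python
from math import comb, sqrt


def passk_advantages(n_rollouts, n_neg, k):
    """Return (adv_pos, adv_neg) under a Pass@k group reward."""
    # Probability that a random k-subset contains at least one positive.
    mean_r = 1.0 - comb(n_neg, k) / comb(n_rollouts, k)
    std_r = sqrt(mean_r * (1.0 - mean_r))  # std of a binary reward
    if std_r == 0.0:
        # Every group succeeds or every group fails: no learning signal.
        return 0.0, 0.0
    # Any group containing a positive response has reward 1.
    adv_pos = (1.0 - mean_r) / std_r
    # A group containing a given negative response fails only if its
    # other k-1 members are also negative.
    p_fail = comb(n_neg - 1, k - 1) / comb(n_rollouts - 1, k - 1)
    adv_neg = (1.0 - p_fail - mean_r) / std_r
    return adv_pos, adv_neg


hard = passk_advantages(n_rollouts=8, n_neg=7, k=4)  # one correct sample
easy = passk_advantages(n_rollouts=8, n_neg=4, k=4)  # four correct samples
```

The positive-response advantage is larger on the hard prompt than on the easy one, which is the adaptive exploration pressure described above; with `k=1` the expressions reduce to the ordinary standardized binary reward.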

4. Instance- and Position-Specific Advantage Shaping

Empirical analyses across RLVR training stages indicate that learning efficiency is maximized by focusing updates on:

- Tokens in low-perplexity (low-PPL) outputs, corresponding to fluent and robust reasoning chains.

- High-entropy tokens at terminal positions, which often serve as final decision points.

Reward shaping methods formalize this intuition:

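- Perplexity-based advantage adjustment:

$$\tilde{A}_i = A_i \left(1 - \alpha\, w_{\mathrm{ppl}}(o_i)\right)$$

where $w_{\mathrm{ppl}}(o_i)$ is the standardized log-perplexity of response $o_i$ and $\alpha > 0$ controls the strength of the adjustment, so that lower-perplexity responses receive larger updates (Deng et al., 4 Aug 2025, Deng et al., 11 Aug 2025).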

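- Positional advantage shaping:

$$\tilde{A}_{i,t} = A_i + \operatorname{sign}(A_i)\,\gamma\,\sigma\!\left(d \cdot r_{i,t}\right)$$

with $r_{i,t}$ as a normalized token position, $\sigma$ the sigmoid function, $\gamma$ a bonus scale, and $d$ setting directionality (e.g., favoring late-sequence tokens). These mechanisms measurably improve reasoning accuracy by refining the most consequential tokens (Deng et al., 11 Aug 2025, Deng et al., 4 Aug 2025).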
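The two shaping rules can be sketched as below. The functional forms used here are assumptions for illustration: a multiplicative factor `1 - alpha * w` for the perplexity adjustment, where `w` is the standardized log-perplexity, and an additive sigmoid bonus over the normalized position for positional shaping. The hyperparameters `alpha`, `gamma`, and `d` are hypothetical.

```python
import math
from statistics import fmean, pstdev


def log_perplexity(token_logprobs):
    """Log-perplexity of a response: negative mean token log-probability."""
    return -fmean(token_logprobs)


def ppl_shaped_advantages(advantages, log_ppls, alpha=0.1, eps=1e-6):
    """Scale each response's advantage by its standardized log-PPL."""
    mu, sd = fmean(log_ppls), pstdev(log_ppls)
    weights = [(lp - mu) / (sd + eps) for lp in log_ppls]
    # Below-average perplexity (w < 0) enlarges the update.
    return [a * (1.0 - alpha * w) for a, w in zip(advantages, weights)]


def position_shaped_advantages(advantage, n_tokens, gamma=0.1, d=1.0):
    """Add a position-dependent bonus in the direction of the advantage."""
    sign = (advantage > 0) - (advantage < 0)
    shaped = []
    for t in range(n_tokens):
        r = (t + 1) / n_tokens                    # normalized position in (0, 1]
        bonus = gamma / (1.0 + math.exp(-d * r))  # gamma * sigmoid(d * r)
        shaped.append(advantage + sign * bonus)
    return shaped


by_ppl = ppl_shaped_advantages([1.0, 1.0], [0.5, 1.5])
by_pos = position_shaped_advantages(1.0, n_tokens=4, d=1.0)
```

With `d > 0` the bonus grows toward the end of the sequence, so late tokens receive the largest-magnitude advantage; a negative `d` would favor early tokens instead.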

5. Hybrid and Multi-Expert Advantage Architectures

On-policy RLVR tends to reinforce the model’s initial support, risking capability boundary collapse—i.e., the inability to discover correct answers never sampled by the base model (Dong et al., 31 Jul 2025, Wu et al., 20 Jul 2025). RL-PLUS and MEML-GRPO present hybrid solutions:

- RL-PLUS introduces a hybrid-policy objective, combining on-policy gradients with external data via multiple importance sampling (MIS), and an exploration-based advantage function that amplifies updates to correct but low-probability reasoning steps. This approach addresses distributional mismatch and consistently expands the model’s reasoning support beyond base boundaries (Dong et al., 31 Jul 2025).

- MEML-GRPO leverages heterogeneous expert modules, each guided by diverse system prompts. Inter-expert mutual learning (e.g., minimization of pairwise parameter differences) aggregates varied advantage perspectives to improve learning signal robustness, reward sparsity handling, and performance stability (Jia et al., 13 Aug 2025).

Table: Comparison of Key Advantage Design Paradigms

| Design Strategy | Mechanism | Main Empirical Benefit |
|---|---|---|
| High-entropy token focus | Update only "fork" tokens via entropy | Cost-effective, generalizes across domains (Wang et al., 2 Jun 2025) |
| Dual-token constraint | Separate exploration/exploitation | Stabilizes knowledge, promotes reasoning (Wang et al., 21 Jul 2025) |
| Pass@k/Adaptive advantage | Group reward on k candidates | Automates exploration-exploitation tradeoff (Chen et al., 14 Aug 2025) |
| Hybrid-policy and MIS | On-policy + external policy integration | Addresses boundary collapse, boosts OOD (Dong et al., 31 Jul 2025) |
| Multi-expert mutual learning | Prompt and gradient sharing among experts | Mitigates reward sparsity, improves convergence (Jia et al., 13 Aug 2025) |

6. Limits, Challenges, and Future Directions

Recent theoretical and empirical work shows that RLVR, absent explicit exploration, is fundamentally limited to enhancing support present in the base model ("invisible leash") (Wu et al., 20 Jul 2025). Answer-level diversity often contracts even as pass@1 increases. Solution proposals include:

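- Explicit exploration mechanisms, e.g., distribution mixing:

$$\pi_{\mathrm{mix}}(y \mid x) = (1 - \epsilon)\,\pi_\theta(y \mid x) + \epsilon\,\pi_{\mathrm{explore}}(y \mid x)$$

where $\pi_{\mathrm{explore}}$ is an explicit exploration policy and $\epsilon \in [0, 1]$ is the mixing weight.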

- Hybrid or off-policy data integration, as realized in RL-PLUS and MEML-GRPO, to inject probability mass into underrepresented regions.

- Task-adaptive and instance-/token-level advantage shaping, leveraging entropy, perplexity, and positional signals.

- Integrating curriculum or difficulty-aware learning schedules to ensure focus on the most informative training samples (Li et al., 23 Jul 2025, Deng et al., 4 Aug 2025).
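To make the distribution-mixing proposal in the list above concrete, the sketch below blends a policy's distribution over a small discrete answer set with a separate exploration distribution. The convex-combination form, the mixing weight `eps`, and the uniform exploration distribution are assumptions chosen for illustration.

```python
def mix_distributions(p_policy, p_explore, eps=0.1):
    """Convex mixture of a policy and an exploration distribution."""
    if not 0.0 <= eps <= 1.0:
        raise ValueError("eps must lie in [0, 1]")
    if len(p_policy) != len(p_explore):
        raise ValueError("distributions must share the same support")
    return [(1.0 - eps) * p + eps * q for p, q in zip(p_policy, p_explore)]


# The base policy puts all of its mass on the first answer, so on-policy
# sampling alone could never reach the other two.
p_policy = [1.0, 0.0, 0.0]
p_explore = [1.0 / 3.0] * 3   # assumed uniform exploration policy
mixed = mix_distributions(p_policy, p_explore, eps=0.1)
```

Answers outside the base policy's support now have nonzero sampling probability, which is the property needed to escape the support restriction described at the start of this section.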

In the cited studies, these techniques are reported to improve generalization, pass@k, OOD transfer, and adaptation speed in domains ranging from math and code to multimodal and agentic settings. However, advantage design in RLVR remains an open area, with ongoing research into fine-grained control of exploration-exploitation dynamics, verifier-free regimes, scaling trends, and hybrid data strategies.

7. Practical Implications

- For mathematical and logical reasoning, token entropy-based or Pass@k-driven advantage shaping enables model efficiency and broad generalization, even with limited data (Wang et al., 29 Apr 2025, Wang et al., 2 Jun 2025).

- In vision-language and robotics, compositional reward function design and group-normalized advantages encourage systematic reasoning while sidestepping the need for dense annotation (Song et al., 22 May 2025, Koksal et al., 29 Jul 2025).

- Hybrid-policy advantage approaches combined with mutual learning yield state-of-the-art or near-SOTA performance across diverse reasoning and generation benchmarks, with strong sample efficiency and robustness to reward sparsity (Dong et al., 31 Jul 2025, Jia et al., 13 Aug 2025).

- Careful advantage design mitigates capability collapse, improves exploration capacity, and supports adaptation to new tasks, domains, and modalities. Application of these principles underpins effective RLVR fine-tuning and deployment in contemporary AI systems.

In summary, the contemporary landscape of advantage design for RLVR covers entropy-aware token selection, group-relative and position-aware shaping, hybrid on- and off-policy integration, and multi-expert mutual learning. Collectively these innovations provide a rigorous toolkit for extracting, amplifying, and generalizing reasoning capabilities in LLMs, VLMs, and related architectures.