Active Perception Behaviors in AI

- Active Perception Behaviors are defined by a closed-loop perception–action cycle where agents actively gather sensory data to reduce uncertainty.

- They employ feedback-driven sensing and resource-aware control to adapt sensor placements and trajectories based on real-time observations.

- These strategies underpin robust performance in robotics and autonomous systems, enabling iterative policy refinement and efficient information acquisition.

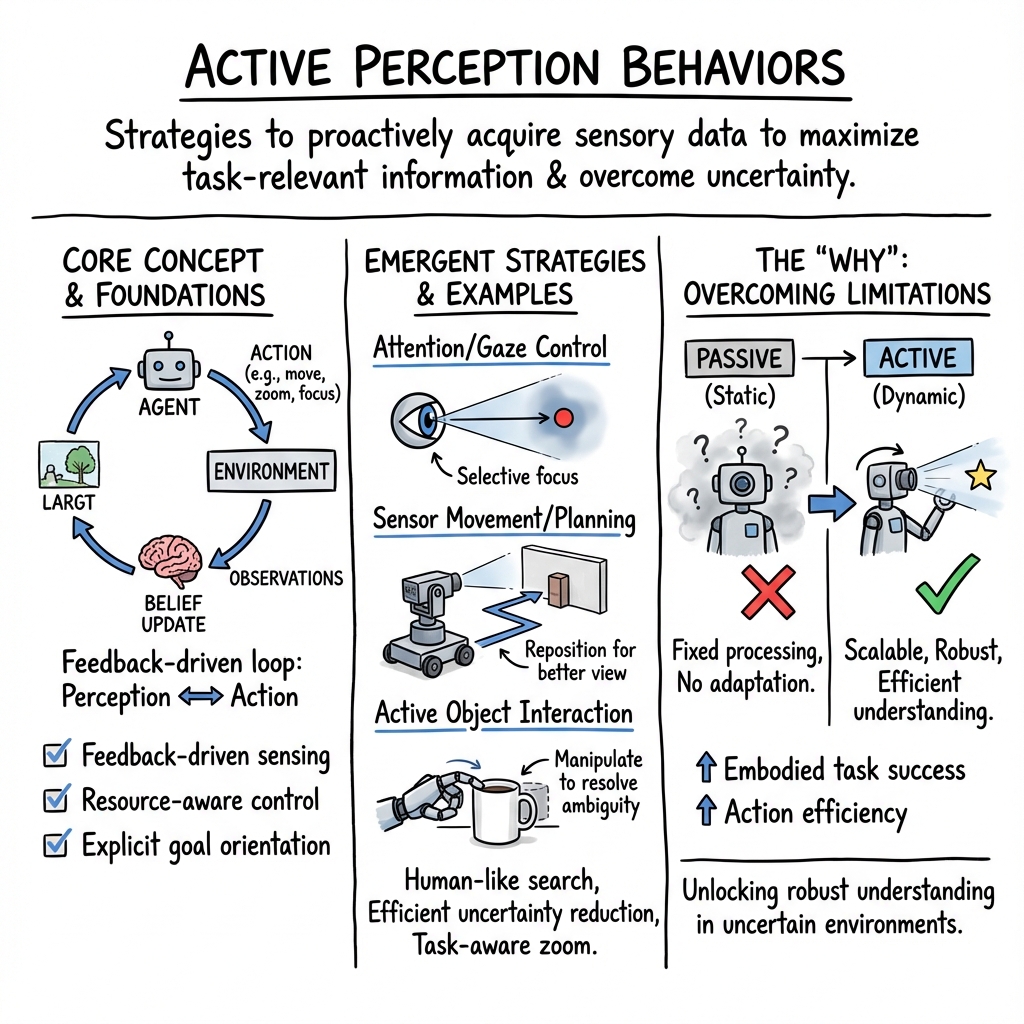

Active perception behaviors refer to the strategies and mechanisms by which intelligent agents—robotic or computational—proactively acquire sensory data to maximize task-relevant information and overcome uncertainty or ambiguity in their environment. Unlike passive perception, which processes whatever data are incidentally received, active perception entails closed-loop interaction between perception and action, enabling the system to adapt its sensing parameters, attention, or physical configuration to accomplish specific goals. This paradigm is foundational across embodied AI, robotics, and autonomous systems, where informed, purposeful sampling of the sensorium is critical for scalable, robust performance in open-world, partially observable, or dynamically changing settings (Li et al., 3 Dec 2025, Yang et al., 19 Nov 2025, Bajcsy et al., 2016).

1. Conceptual Foundations and Formal Structure

Active perception is characterized by a tightly coupled perception–action loop, wherein the agent (1) selects sensing actions (e.g., changing viewpoint, focus, zoom, sensor modality), (2) gathers new observations, and (3) updates its internal state or belief to guide subsequent actions. This differentiates it fundamentally from passive systems, which apply fixed processing to a static sensory input stream without feedback-driven adaptation (Li et al., 3 Dec 2025, Bajcsy et al., 2016). The key principles distinguishing active perception are:

- Feedback-driven sensing: Sensing actions are conditioned on the agent’s current belief or interpretation of the state.

- Resource-aware control: Sensor placement, time allocation, and computation are optimized in light of informational objectives and operational constraints.

- Explicit goal orientation: Sensing strategies are selected to maximize information gain, minimize uncertainty, or improve expected utility with respect to task-specific metrics.
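The three-step loop and the feedback-driven principle above can be made concrete with a toy search task. This is a minimal sketch and does not come from the cited works. It assumes a target hidden in one of several discrete cells and a detector with hypothetical detection and false-alarm probabilities. It also assumes a simple greedy policy that always looks at the currently most probable cell.

```python
import random

def bayes_update(belief, looked_at, detected, p_detect=0.9, p_false=0.1):
    """Return the posterior over target cells after one look.

    p_detect: P(detection | target is in the looked-at cell)  (assumed value)
    p_false:  P(detection | target is elsewhere)              (assumed value)
    """
    posterior = []
    for cell, prior in enumerate(belief):
        if cell == looked_at:
            likelihood = p_detect if detected else 1.0 - p_detect
        else:
            likelihood = p_false if detected else 1.0 - p_false
        posterior.append(prior * likelihood)
    total = sum(posterior)
    return [p / total for p in posterior]

def active_search(true_cell, n_cells=5, steps=10, p_detect=0.9, p_false=0.1, seed=0):
    """Run the perception-action loop against a simulated environment."""
    rng = random.Random(seed)
    belief = [1.0 / n_cells] * n_cells  # uniform initial belief
    for _ in range(steps):
        # (1) Select a sensing action conditioned on the current belief:
        #     here, greedily look at the most probable cell (an assumed policy).
        action = max(range(n_cells), key=lambda c: belief[c])
        # (2) Gather a noisy observation from the simulated environment.
        p_hit = p_detect if action == true_cell else p_false
        detected = rng.random() < p_hit
        # (3) Update the belief; the updated belief drives the next action.
        belief = bayes_update(belief, action, detected, p_detect, p_false)
    return belief
```

Step (1) reads the belief produced by step (3), so where the agent looks changes as evidence accumulates. That dependence is what distinguishes this loop from a fixed, open-loop scan of the cells.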

Mathematically, active perception is often formalized as a partially observable Markov decision process (POMDP) or as optimization over expected information gain, where the optimal action $a_t^*$ at time $t$ may be determined via

$$a_t^* = \arg\max_{a} \, \mathrm{IG}(\theta; a \mid \mathcal{D}_t),$$

with $\mathrm{IG}(\theta; a \mid \mathcal{D}_t)$ denoting the expected information gain about latent variables $\theta$ given action $a$ and dataset $\mathcal{D}_t$ (Li et al., 3 Dec 2025).
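Consider discrete latent variables $\theta$ and discrete observations $y$. In that case, the expected information gain of a sensing action $a$ given data $\mathcal{D}_t$ can be computed exactly as the prior entropy minus the expected posterior entropy, $H[\theta \mid \mathcal{D}_t] - \mathbb{E}_{y}\big[H[\theta \mid \mathcal{D}_t, a, y]\big]$. The sketch below computes this by enumerating observations and then takes the greedy argmax over actions. The action names and likelihood tables are hypothetical.

```python
import math

def entropy(p):
    """Shannon entropy in nats; zero-probability entries contribute nothing."""
    return -sum(x * math.log(x) for x in p if x > 0)

def expected_info_gain(prior, likelihood):
    """IG(theta; a | D_t) = H[theta] - E_y[H[theta | y]] for one action a.

    prior[i]         : p(theta_i | D_t)
    likelihood[i][y] : p(y | theta_i, a), a discrete sensor model
    """
    expected_post_entropy = 0.0
    for y in range(len(likelihood[0])):
        joint = [pr * lik[y] for pr, lik in zip(prior, likelihood)]
        p_y = sum(joint)  # predictive probability p(y | a, D_t)
        if p_y > 0:
            posterior = [j / p_y for j in joint]
            expected_post_entropy += p_y * entropy(posterior)
    return entropy(prior) - expected_post_entropy

def best_action(prior, actions):
    """Greedy next-best action: the action with maximal expected IG."""
    return max(actions, key=lambda a: expected_info_gain(prior, actions[a]))

# Hypothetical sensing actions over two hypotheses with two outcomes each.
prior = [0.5, 0.5]
actions = {
    "glance": [[0.6, 0.4], [0.4, 0.6]],        # weakly informative
    "zoom_in": [[0.95, 0.05], [0.05, 0.95]],   # highly informative
    "look_away": [[0.5, 0.5], [0.5, 0.5]],     # uninformative
}
choice = best_action(prior, actions)  # "zoom_in"
```

Enumerating observations is only tractable for small discrete models. Continuous or high-dimensional settings generally need sampling-based or closed-form (for example Gaussian) approximations.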

2. Taxonomy of Active Perception Behaviors

Active perception manifests in several canonical behavioral classes, each tied to specific sensorimotor or attention mechanisms and commonly instantiated in both biological systems and artificial agents (Li et al., 3 Dec 2025, Bajcsy et al., 2016, Yang et al., 19 Nov 2025):

- Attention/Gaze Control: Selective focusing of sensors or computational resources on spatial subregions of interest (e.g., foveated vision, saliency-driven saccades).

- Sensor Movement and Trajectory Planning: Physical repositioning of visual sensors (pan/tilt/zoom mechanisms, mobile platforms) to acquire more informative or less ambiguous views (Sripada et al., 2024).

- Active Object/Scene Interaction: Manipulation of objects or of the environment's configuration to reveal hidden features or resolve ambiguity (e.g., regrasping an object to obtain an unoccluded view).

- Multimodal Sensory Fusion: Dynamic integration and weighting of multiple sensory streams (vision, haptics, audio) to leverage complementary information.

- Selective Attention in State Space: Dynamic selection of which state variables or features to observe or process, trading off information value against sensing/computation cost (Ma et al., 2020).

These behaviors are not mutually exclusive and frequently coexist or co-adapt within complex perception-action pipelines.
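A simple, classical way to weight sensory streams by their informativeness is inverse-variance fusion of independent Gaussian estimates. The sketch assumes each modality reports a scalar estimate with a known noise variance. The vision and touch readings are hypothetical. The weights become dynamic when the reported variances change from one time step to the next.

```python
def fuse(estimates):
    """Inverse-variance fusion of independent Gaussian estimates.

    estimates: list of (mean, variance) pairs, one per sensory stream.
    Returns the fused (mean, variance); streams with lower variance
    receive proportionally larger weights.
    """
    precisions = [1.0 / var for _, var in estimates]
    total_precision = sum(precisions)
    mean = sum(p * m for p, (m, _) in zip(precisions, estimates)) / total_precision
    return mean, 1.0 / total_precision

# Hypothetical estimates of an object's position along one axis (metres).
vision = (2.0, 0.04)  # sharp view: low variance
touch = (2.3, 0.36)   # coarse haptic estimate: high variance
fused_mean, fused_var = fuse([vision, touch])
```

The fused estimate is pulled toward the low-variance visual reading (2.03, compared with 2.0 and 2.3). The fused variance (0.036) is smaller than that of either input.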

3. Algorithmic and Learning Frameworks

Modern approaches to active perception behaviors span classical optimal control, Bayesian inference, and contemporary deep learning paradigms. Core algorithmic themes include:

- Information-Theoretic Action Selection: Actions are chosen to maximize expected information gain, often quantified using mutual information or reduction in Shannon entropy of the belief state (Li et al., 3 Dec 2025, He et al., 2024).

- Bayesian and Belief State Updates: Recursive Bayesian filtering (e.g., Kalman and particle filters) propagates the agent’s state belief and quantifies its uncertainty, which in turn guides next-best-action planning (Sehr et al., 2020, Silva et al., 2021).

- POMDP and Reinforcement Learning Methods: Formulating active perception as a POMDP enables agents to deploy deep reinforcement learning (e.g., PPO, SAC) to synthesize policies that explicitly close the perception–action loop under partial observability (Yang et al., 19 Nov 2025, Hu et al., 1 Dec 2025, Luo et al., 18 May 2025).

- Hierarchical and Option-based Planning: Temporal abstraction via attention modes or action options (e.g., hierarchical planners) facilitates efficient focus and switching of sensing strategies (Ma et al., 2020).

- Tokenization and Policy Embedding: Recent models, such as EyeVLA, discretize continuous sensor control into action tokens and autoregressively generate action sequences conditioned on visual and linguistic context (Yang et al., 19 Nov 2025).
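When the belief is Gaussian, the Kalman measurement update gives the posterior variance in closed form before any measurement arrives. Next-best-sensor planning can therefore rank sensors by predicted uncertainty reduction. The sketch below assumes a scalar state, an identity observation model, and hypothetical sensors with made-up noise variances and costs. Subtracting a weighted cost is one simple way to express the information-versus-cost trade-off mentioned earlier for selective attention. It is not the formulation of any cited paper.

```python
import math

def kalman_update(mean, var, z, meas_var):
    """Scalar Kalman measurement update with an identity observation model."""
    gain = var / (var + meas_var)
    return mean + gain * (z - mean), (1.0 - gain) * var

def predicted_info_gain(var, meas_var):
    """Entropy reduction (nats) of a Gaussian belief from one measurement.

    The posterior variance does not depend on the measured value z, so
    this can be evaluated before the sensing action is taken.
    """
    post_var = var * meas_var / (var + meas_var)
    return 0.5 * math.log(var / post_var)

def choose_sensor(var, sensors, cost_weight=0.1):
    """Pick the sensor that maximises information gain minus weighted cost."""
    def score(name):
        meas_var, cost = sensors[name]
        return predicted_info_gain(var, meas_var) - cost_weight * cost
    return max(sensors, key=score)

# Hypothetical sensors: name -> (measurement noise variance, sensing cost)
sensors = {"wide_camera": (1.0, 0.1), "zoom_camera": (0.05, 2.0)}
```

With the default `cost_weight=0.1`, `choose_sensor(1.0, sensors)` prefers `zoom_camera`. Raising `cost_weight` to 1.0 makes the cheap `wide_camera` the better choice, because the zoom camera's larger information gain no longer outweighs its cost.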

Not only do these mechanisms enable the agent to select optimal viewpoints, zoom levels, or sensor configurations, but they also underpin the emergence of sophisticated search, tracking, and integrative behaviors in complex tasks (Xiong et al., 18 Jun 2025, Wang et al., 2024).
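The tokenization approach listed above can be illustrated with uniform binning of continuous camera controls. This sketch shows only the discretization step. It does not show how EyeVLA actually tokenizes actions or generates them autoregressively. The control ranges, the 256-bin resolution, and the function names are hypothetical.

```python
# Hypothetical control ranges and bin count (not EyeVLA's actual values).
RANGES = {"pan": (-180.0, 180.0), "tilt": (-90.0, 90.0), "zoom": (1.0, 10.0)}
AXES = ("pan", "tilt", "zoom")
N_BINS = 256

def to_token(value, lo, hi, n_bins=N_BINS):
    """Map a continuous control value to a bin index in [0, n_bins - 1]."""
    value = min(max(value, lo), hi)  # clip out-of-range commands
    idx = int((value - lo) / (hi - lo) * n_bins)
    return min(idx, n_bins - 1)  # value == hi falls into the last bin

def from_token(idx, lo, hi, n_bins=N_BINS):
    """Decode a bin index to the centre of its bin."""
    return lo + (idx + 0.5) * (hi - lo) / n_bins

def encode_action(pan, tilt, zoom):
    """Turn one pan/tilt/zoom command into a sequence of three tokens."""
    return [to_token(v, *RANGES[axis]) for axis, v in zip(AXES, (pan, tilt, zoom))]

def decode_action(tokens):
    """Invert encode_action up to quantisation error (half a bin width)."""
    return tuple(from_token(t, *RANGES[axis]) for axis, t in zip(AXES, tokens))
```

In a vision-language-action model, indices like these could be mapped to reserved entries in the token vocabulary. A language-model decoder could then emit camera commands as ordinary tokens. The bin count sets the trade-off between vocabulary size and control precision; here the pan resolution is about 1.4 degrees.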

4. Quantitative Evaluation and Benchmark Domains

Active perception behaviors are evaluated using diverse metrics specific to the target application and the nature of the environment:

- Action and Task Accuracy: Mean absolute error (MAE) for angle/zoom predictions, Intersection over Union (IoU) for bounding-box quality, and completion rate (CR) for perceptual task success (Yang et al., 19 Nov 2025).

- Information Gain and Coverage: Reduction in entropy or covariance of belief states; semantic object discovery rates; coverage of previously unobserved regions (He et al., 2024, Sehr et al., 2020).

- Efficiency Metrics: Number of sensing actions, path length, or computational cost per unit of information or task success (Wang et al., 2024, Zhu et al., 27 May 2025).

- Real-World Task Benchmarks: Robotic manipulation in occluded or cluttered environments (Hu et al., 1 Dec 2025, Xiong et al., 18 Jun 2025), dynamic landing and navigation for UAVs (Mateus et al., 2022, Ginargiros et al., 2023), visual question-answering under restricted fields of view (Wang et al., 2024), and scene exploration for semantic tasks (Sripada et al., 2024).
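The accuracy metrics in the first item follow standard definitions. This is a minimal sketch under three assumptions: angles or zoom levels are compared element-wise, boxes are given as `(x1, y1, x2, y2)` corner coordinates, and each episode's outcome is recorded as a boolean.

```python
def mae(pred, target):
    """Mean absolute error, e.g. over predicted pan/tilt angles or zoom levels."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)

def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def completion_rate(successes):
    """Fraction of episodes in which the perceptual task succeeded."""
    return sum(successes) / len(successes)
```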

A representative result is the marked improvement in embodied task success and action efficiency for policies that apply fine-grained pan/tilt/zoom control within reinforcement-optimized frameworks. EyeVLA, for example, reduces MAE by over 50% and raises the completion rate by 60 percentage points over baselines (Yang et al., 19 Nov 2025).

5. Empirical Insights and Emergent Strategies

Large-scale empirical studies reveal a broad range of emergent active perception strategies, including:

- Human-like search and exploratory behaviors: End-to-end RL agents develop head-sweeping, gaze-centering, and target localization routines analogous to human vision (Luo et al., 18 May 2025, Xiong et al., 18 Jun 2025).

- Efficient uncertainty reduction: Agents adaptively prioritize viewpoints or actions that maximally eliminate ambiguity, leading to non-trivial zig-zag or opportunistic trajectories in exploration tasks (He et al., 2024, Zaky et al., 2020).

- Task-aware zoom/shift control: Multimodal models learn to zoom on or shift to subregions that specifically resolve the information bottleneck for downstream reasoning tasks, demonstrated in MLLM-based VQA (Wang et al., 2024, Zhu et al., 27 May 2025).

- Viewpoint and attention diversity: Diversity-promoting objectives avoid repeated sampling of redundant or ambiguous subregions, enhancing coverage and downstream accuracy (Zhu et al., 27 May 2025).

- Modality-adaptive fusion: Systems integrate multi-sensor inputs, weighing them dynamically relative to contextual informativeness (Li et al., 3 Dec 2025).
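One simple way to realize a diversity-promoting objective is greedy selection that penalizes overlap with regions already chosen. This is an illustrative assumption, not the objective used by Zhu et al. Regions are represented as hypothetical sets of image-tile indices, and the relevance scores and penalty weight are made up.

```python
def jaccard(a, b):
    """Overlap between two regions, each represented as a set of tile indices."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def select_diverse(candidates, k, penalty=1.0):
    """Greedily choose up to k regions, trading relevance against redundancy.

    candidates: list of (relevance_score, set_of_tile_indices).
    At each step the chosen region maximises
        score - penalty * (max overlap with regions already chosen).
    """
    chosen = []
    remaining = list(range(len(candidates)))

    def marginal_value(i):
        score, tiles = candidates[i]
        redundancy = max((jaccard(tiles, candidates[j][1]) for j in chosen), default=0.0)
        return score - penalty * redundancy

    while remaining and len(chosen) < k:
        best = max(remaining, key=marginal_value)
        chosen.append(best)
        remaining.remove(best)
    return chosen

# Hypothetical candidate crops: two overlapping, one elsewhere in the image.
candidates = [(0.9, {0, 1, 2, 3}), (0.85, {1, 2, 3, 4}), (0.6, {10, 11})]
```

With the default penalty, `select_diverse(candidates, 2)` returns `[0, 2]`. It skips the second crop because that crop largely repeats the first. With `penalty=0.0` it returns `[0, 1]`, the two highest-scoring but redundant crops.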

A key finding across domains is that iterative policy refinement via RL or imitation learning (potentially leveraging privileged information during training) consistently yields robust, sample-efficient active perception compared to static or open-loop baselines (Hu et al., 1 Dec 2025).

6. Limitations, Open Challenges, and Prospects

Despite significant progress, several open challenges constrain the full realization of active perception:

- Real-time computation and planning: Solving full POMDPs or high-dimensional information gain problems in real time remains computationally intensive. Lightweight approximations and policy learning are active areas of research (Li et al., 3 Dec 2025).

- Sample and data efficiency: Sample complexity remains high in domains requiring complex exploration; few-shot and offline RL methods are being developed to address this (Zhu et al., 27 May 2025).

- Generalization and sim-to-real transfer: Active policies trained in simulation can degrade in real-world environments due to sensory noise, dynamic obstacles, or domain shift (Ginargiros et al., 2023).

- Multi-agent coordination and multi-modal fusion: Integrating asynchronous sensor data, synchronizing multi-agent actions, and fusing heterogeneous sensory streams pose architectural and algorithmic challenges (Li et al., 3 Dec 2025).

- Ethics, safety, and resource constraints: Balancing the benefits of active exploration with safety, privacy, and energy constraints is critical, especially in human-centered or safety-critical settings (Li et al., 3 Dec 2025, Bajcsy et al., 2016).

Future work is advancing toward: (1) collaborative and scalable multi-agent active perception; (2) seamless integration of information-theoretic criteria with end-to-end differentiable architectures; (3) standardization of ethical and operational frameworks for deployment; and (4) closing the semantic gap between high-level reasoning and low-level sensorimotor policies in vision-language-action models (Yang et al., 19 Nov 2025, Sripada et al., 2024, Zhu et al., 27 May 2025).

Active perception behaviors form the algorithmic core of next-generation embodied intelligence. Through the synthesis of mechanisms for viewpoint control, sensor adaptation, attention, and information-guided exploration, agents can transcend the limitations of passive sensing—unlocking scalable, efficient, and robust understanding in complex, dynamic, and uncertain environments (Li et al., 3 Dec 2025, Yang et al., 19 Nov 2025, Zhu et al., 27 May 2025, Xiong et al., 18 Jun 2025).