Large Emotional World Model

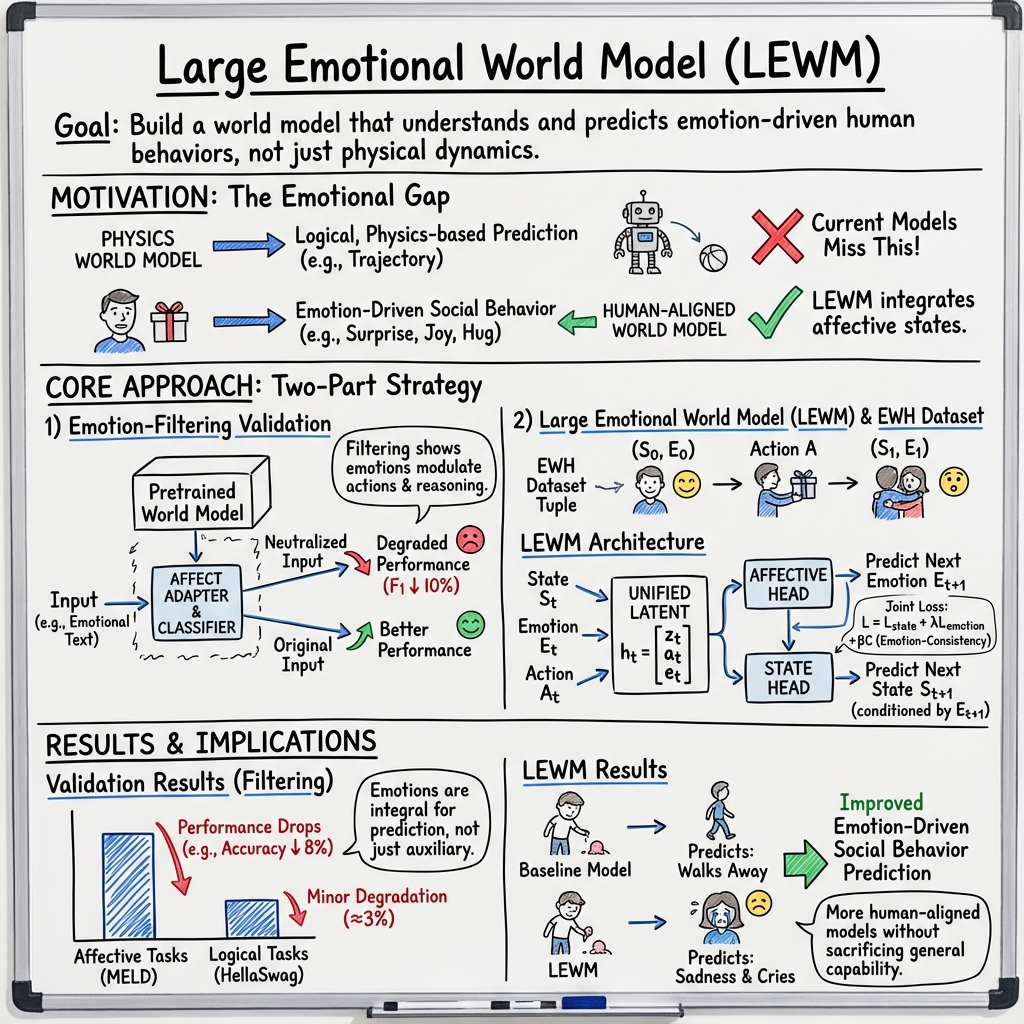

Abstract: World Models serve as tools for understanding the current state of the world and predicting its future dynamics, with broad application potential across numerous fields. As a key component of world knowledge, emotion significantly influences human decision-making. While existing LLMs have shown preliminary capability in capturing world knowledge, they primarily focus on modeling physical-world regularities and lack systematic exploration of emotional factors. In this paper, we first demonstrate the importance of emotion in understanding the world by showing that removing emotionally relevant information degrades reasoning performance. Inspired by theory of mind, we further propose a Large Emotional World Model (LEWM). Specifically, we construct the Emotion-Why-How (EWH) dataset, which integrates emotion into causal relationships and enables reasoning about why actions occur and how emotions drive future world states. Based on this dataset, LEWM explicitly models emotional states alongside visual observations and actions, allowing the world model to predict both future states and emotional transitions. Experimental results show that LEWM more accurately predicts emotion-driven social behaviors while maintaining comparable performance to general world models on basic tasks.

Explain it Like I'm 14

Overview

This paper asks a simple question: If we want AI to understand and predict what happens in the world, shouldn’t it also understand people’s emotions? The authors show that emotions matter for reasoning and decision-making, and they build a new kind of “world model” that doesn’t just look at what people see and do, but also how they feel. They call it the Large Emotional World Model (LEWM).

Think of a world model like a super-smart simulator or a weather forecast for life. Most current models track “physical weather” (what objects do, how things move). This paper adds the “emotional weather” (how people feel) so the AI can better predict what people will do next—especially in social situations.

Key Objectives

Here is what the researchers set out to do:

- Test whether removing emotional information hurts an AI’s ability to think and predict.

- Build a dataset that links situations, actions, and emotions so the AI can learn how feelings influence behavior.

- Create a model (LEWM) that predicts both what happens next in the world and how emotions change over time.

- Check that adding emotions improves social predictions without making the model worse at basic tasks.

Methods and Approach

To make their ideas work, the authors used two main steps and one new model. You can think of these like adding layers to a simulator: first muting emotions to see the effect, then collecting examples of emotion-driven actions, and finally building a model that uses this information.

Step 1: “Emotion Filtering” Test

- What they did: They trained a small module that can: 1) Detect if a piece of text contains emotional content. 2) Rewrite emotional sentences into neutral ones while keeping the facts.

- Why: This is like muting the “emotional soundtrack” in a movie to see whether the story still makes sense to the AI. If performance drops when emotions are muted, emotions must matter for reasoning.

- How it works in simple terms:

- A classifier says “emotional” or “not emotional.”

- A rewriter turns “I’m thrilled about this plan!” into “I agree with this plan,” keeping the meaning but removing the feeling.

- They then compared how well the AI performed on different tasks with and without emotional content.
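The filter-then-compare protocol above can be sketched in Python. In the paper, the Affect Classifier and Affect Adapter are learned models. Here a tiny hypothetical phrase lexicon (`NEUTRALIZE`) stands in for both, purely to show the data flow:

1. Detect emotional text.
2. Rewrite only that text.
3. Score the same predictor on the original inputs and on the filtered inputs.

All names are illustrative and are not the authors' code.

```python
import re

# Hypothetical toy lexicon standing in for the learned Affect Classifier/Adapter.
NEUTRALIZE = {
    "thrilled about": "in favor of",
    "furious about": "opposed to",
    "love": "like",
    "hate": "dislike",
}
# Word-boundary patterns so that e.g. "whatever" does not match "hate".
PATTERNS = {re.compile(rf"\b{re.escape(p)}\b", re.IGNORECASE): n for p, n in NEUTRALIZE.items()}


def is_emotional(text):
    """Toy affect classifier: True if the text has an exclamation mark or a lexicon phrase."""
    return "!" in text or any(p.search(text) for p in PATTERNS)


def neutralize(text):
    """Toy rewriter: swap loaded phrases for neutral ones and turn '!' into '.'."""
    for pattern, neutral in PATTERNS.items():
        text = pattern.sub(neutral, text)
    return text.replace("!", ".")


def filter_corpus(texts):
    """Rewrite only the texts flagged as emotional; pass the rest through unchanged."""
    return [neutralize(t) if is_emotional(t) else t for t in texts]


def accuracy(predict, texts, labels):
    """Fraction of texts for which predict(text) equals the gold label."""
    return sum(predict(t) == y for t, y in zip(texts, labels)) / len(labels)


def accuracy_drop(predict, texts, labels):
    """Accuracy on the original inputs minus accuracy on the emotion-filtered inputs."""
    return accuracy(predict, texts, labels) - accuracy(predict, filter_corpus(texts), labels)
```

For example, `filter_corpus(["I'm thrilled about this plan!"])` returns `["I'm in favor of this plan."]`. A fixed lexicon cannot preserve meaning the way a trained rewriter aims to. This is why the paper frames neutralization as a conditional text generation problem rather than a lookup.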

Step 2: Building the EWH Dataset (Emotion–Why–How)

- Goal: Teach the model to answer Why people act a certain way (because of emotions) and How those emotions change what happens next.

- What’s inside: Each example is like a mini-comic strip with mood captions:

- The starting situation (video, audio, images) plus the person’s emotion.

- The action taken (described in natural language).

- The resulting situation and updated emotion.

- How they built it: They used a large multimodal AI (one that understands video and audio) to scan scenes from movies, TV, and first-person recordings and find moments where behavior is clearly driven by emotions (like anger or sadness). The AI then labels the action and infers the emotional states.

- Why this matters: Instead of only learning physical rules (like “if you push a ball, it rolls”), the dataset teaches emotional rules (like “if someone feels rejected, they might avoid a group”).
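To make the "mini-comic strip" concrete, here is one way a single EWH sample could be stored as a temporally grounded tuple (S_t, E_t, A_t, S_{t+1}, E_{t+1}). This is a sketch under stated assumptions. The field names, the use of (start, end) time spans in seconds for the observed segments, and the plain-string emotion labels are all hypothetical, because the paper does not specify its schema or its emotion taxonomy.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class EWHSample:
    """One hypothetical EWH record: (S_t, E_t, A_t) -> (S_{t+1}, E_{t+1})."""
    clip_id: str
    state_t: Tuple[float, float]     # S_t as a (start, end) span in seconds of the source clip
    emotion_t: str                   # E_t, e.g. "anger"
    action_t: str                    # A_t, the LMM's natural-language action description
    state_next: Tuple[float, float]  # S_{t+1} as a (start, end) span in seconds
    emotion_next: str                # E_{t+1}

    def __post_init__(self):
        # Temporal grounding checks: positive durations, and S_{t+1} cannot start before S_t ends.
        (s0, e0), (s1, e1) = self.state_t, self.state_next
        if not (s0 < e0 and s1 < e1):
            raise ValueError("each segment must have positive duration")
        if s1 < e0:
            raise ValueError("S_{t+1} must not start before S_t ends")


def to_why_how(sample: EWHSample) -> Dict[str, str]:
    """Render the tuple as a 'Why' (emotion -> action) and a 'How' (-> next emotion) statement."""
    return {
        "why": f"The person {sample.action_t} because they feel {sample.emotion_t}.",
        "how": f"Afterwards the emotion shifts from {sample.emotion_t} to {sample.emotion_next}.",
    }


sample = EWHSample("clip_001", (12.0, 15.5), "anger", "walks away from the group", (15.5, 20.0), "sadness")
print(to_why_how(sample)["why"])  # The person walks away from the group because they feel anger.
```

Checking temporal order when a sample is constructed is a cheap guard against the segment-ordering errors that automatic LMM labeling can introduce.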

Step 3: The LEWM Model

- What it predicts: Given the current situation, the current emotion, and an action, LEWM predicts both:

- The next situation (what you’ll see/hear next).

- The next emotion (how the feelings change).

- How it thinks (simple analogy): LEWM first updates the “mood forecast” (emotion), then uses that updated mood to predict what happens next in the “world forecast” (sights and sounds). The authors chose this order because they assume it matches how people often think: feelings change first, and then actions and outcomes follow.

- Training rules:

- The model is trained to make accurate world predictions and realistic emotion shifts, with the world prediction as its main target.

- It’s also encouraged to be stable: small changes in emotion shouldn’t cause wild, unrealistic changes in the world prediction.
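One plausible reading of the emotion-first design is the factorization p(S_{t+1}, E_{t+1} | S_t, E_t, A_t) = p(E_{t+1} | S_t, E_t, A_t) · p(S_{t+1} | S_t, E_t, A_t, E_{t+1}). The exact conditioning sets are an assumption here.

The NumPy sketch below shows only the data flow, under these assumptions:

- Random linear layers stand in for the encoders Enc_S, Enc_E, Enc_A and for the two transition heads.
- The latent is built by concatenation.
- E_t is a continuous valence-arousal-dominance vector, one of several options the paper leaves unspecified.
- The loss is state loss + λ · emotion loss + β · emotion-consistency penalty, with every term a mean squared error.

Sizes, names, and the perturbation scale `eps` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
D_S, D_E, D_A, H = 8, 3, 6, 16  # hypothetical sizes; D_E = 3 for valence, arousal, dominance


def linear(d_in, d_out):
    """Random (weights, bias) pair standing in for a learned encoder or transition head."""
    return rng.normal(scale=0.1, size=(d_in, d_out)), np.zeros(d_out)


def dense(layer, x):
    """Apply a linear layer to a batch of row vectors."""
    w, b = layer
    return x @ w + b


enc_s, enc_e, enc_a = linear(D_S, H), linear(D_E, H), linear(D_A, H)
head_e = linear(3 * H, D_E)  # T_E: E_{t+1} from the concatenated (S_t, E_t, A_t) latent
head_s = linear(4 * H, D_S)  # T_S: S_{t+1} from that latent plus the encoded predicted E_{t+1}


def step(s, e, a):
    """Emotion-first transition: predict E_{t+1}, then condition the S_{t+1} prediction on it."""
    z = np.concatenate([dense(enc_s, s), dense(enc_e, e), dense(enc_a, a)], axis=-1)
    e_next = dense(head_e, z)
    s_next = dense(head_s, np.concatenate([z, dense(enc_e, e_next)], axis=-1))
    return e_next, s_next


def lewm_loss(s, e, a, s_true, e_true, lam=0.5, beta=0.1, eps=1e-2):
    """State loss + lam * emotion loss + beta * emotion-consistency penalty (all MSE here)."""
    e_pred, s_pred = step(s, e, a)
    l_state = np.mean((s_pred - s_true) ** 2)
    l_emo = np.mean((e_pred - e_true) ** 2)
    # Perturb E_t slightly; a stable model should barely change its S_{t+1} prediction.
    _, s_pert = step(s, e + eps * rng.normal(size=e.shape), a)
    l_cons = np.mean((s_pred - s_pert) ** 2)
    return l_state + lam * l_emo + beta * l_cons
```

Leaving the state loss unweighted and scaling the emotion loss by λ is one way to express a "state-centric" objective. In a real system these layers would be trained by gradient descent rather than left random.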

Main Findings and Why They Matter

Here are the key results the authors report:

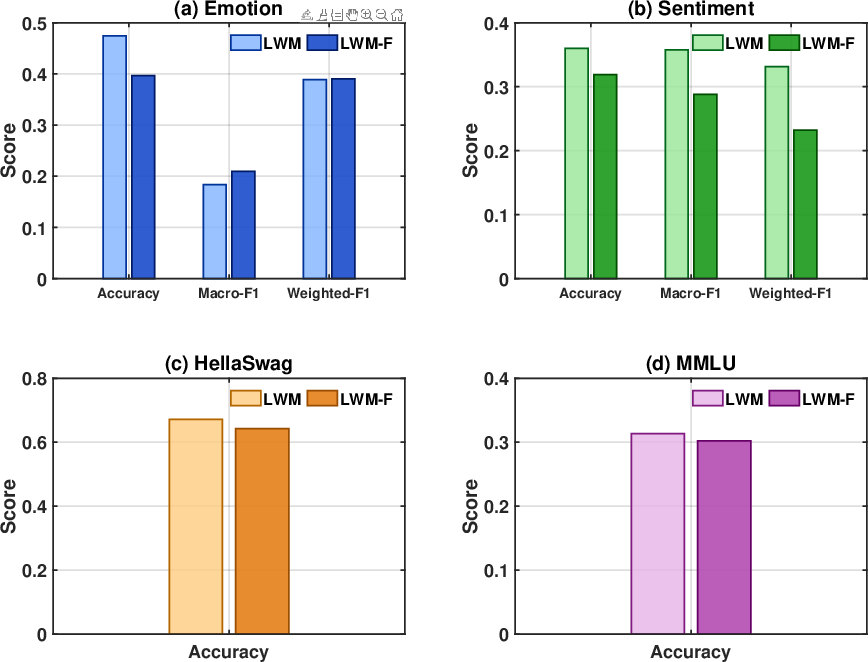

- Removing emotions hurts emotional tasks:

- When the emotional content was filtered out, accuracy on emotion and sentiment tasks dropped by up to about 8–10%. This shows emotions aren’t just decoration—they’re important clues for understanding what’s going on.

- Small effect on general reasoning:

- On non-emotional tasks (like commonsense or school-style questions), performance dipped only slightly (around 1–3%). So emotions help a bit there, but they’re not the main thing.

- LEWM predicts emotion-driven behavior better:

- When emotions guide actions (like comforting a friend or making an impulsive purchase), LEWM is better at predicting what people will do next compared to general models that ignore feelings.

- No trade-off on basics:

- LEWM stays competitive on regular tasks, meaning “adding emotions” doesn’t break the model’s general skills.
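The headline numbers above are differences in a score. "Dropped by up to about 8–10%" could mean either percentage points or a relative percentage. The sketch below computes macro-F1 (the metric the paper cites for the emotion task) from scratch and reports both kinds of drop. The labels are invented toy data, not results from the paper.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over every class seen in y_true or y_pred."""
    labels = sorted(set(y_true) | set(y_pred))
    f1s = []
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        f1s.append(2 * tp / denom if denom else 0.0)  # F1 = 2TP / (2TP + FP + FN)
    return sum(f1s) / len(f1s)


def drops(before, after):
    """Return (absolute drop in percentage points, relative drop in percent)."""
    return 100 * (before - after), 100 * (before - after) / before


y_true = ["joy", "anger", "neutral", "joy"]
original = macro_f1(y_true, ["joy", "anger", "neutral", "neutral"])
filtered = macro_f1(y_true, ["neutral", "anger", "neutral", "neutral"])
print(drops(original, filtered))
```

On these toy labels, macro-F1 falls from 7/9 to 0.5. That is an absolute drop of about 27.8 points but a relative drop of about 35.7%, so it matters which of the two a paper reports.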

Why this matters: Lots of real-life behavior is emotion-driven, not just logic-driven. A model that understands both is more realistic and more useful.

Implications and Potential Impact

- More human-aware AI: Systems that plan, help, or cooperate with people—like digital assistants, social robots, or game characters—can act more naturally if they understand emotional context.

- Better social simulations: Researchers and designers can simulate communities and interactions more realistically, which can help in education, training, or urban planning.

- Safer decision-making: In areas like autonomous driving or customer support, recognizing emotion-driven behaviors can help predict unusual actions and respond appropriately.

- Responsibility matters: Using emotions in AI must be handled carefully to avoid privacy issues, bias, or manipulation. The model should be used to understand and support people, not to exploit them.

In short, this paper shows that emotions are a missing piece in many AI “world simulators.” By adding the “emotional weather report” to the forecast, LEWM makes predictions about human behavior more accurate and more human-like, without sacrificing general skills.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a consolidated list of specific gaps and unanswered questions that future work could address to strengthen the Large Emotional World Model (LEWM) and the EWH dataset.

- EWH labeling quality and bias: No quantitative audit of the LMM-generated action/emotion labels, temporal boundaries, or facial-expression inferences (e.g., per-class precision/recall, confidence calibration, inter-rater agreement via human audits) is provided.

- Cultural and demographic validity: The emotion inference pipeline (especially facial expressions) and media sources (movies/TV) may carry cultural biases; cross-cultural generalization and demographic fairness are untested.

- Domain realism: Heavy reliance on scripted media risks exaggerated or stylized emotions; generalization from media to real-world, unscripted egocentric contexts is not evaluated.

- Dataset scope and statistics: The paper omits key EWH metadata (size, duration, class distributions, number of subjects, domains, languages), making coverage and balance unclear.

- Human validation: No human-in-the-loop assessment of EWH’s causal tuples (S0, E0, A → S1, E1) to verify that actions are plausibly emotion-driven or that temporal ordering is correct.

- Emotion taxonomy and granularity: The type of emotion representation (categorical labels vs. dimensional valence–arousal–dominance, intensity, mixed emotions) is unspecified, limiting interpretability and extensibility.

- Emotion ambiguity and incongruence: Handling of sarcastic, masked, or culturally atypical expressions (e.g., smiling when angry) is not discussed; robustness to incongruent multimodal cues is untested.

- Multi-agent dynamics: The model focuses on a single agent’s emotion–action chain; emotion contagion, interpersonal regulation, and jointly evolving multi-agent states are not modeled or evaluated.

- Confounding factors: Personality traits, social norms, goals, or context (e.g., constraints, incentives) that mediate emotion–action links are not explicitly represented or controlled for.

- Causal claims vs. predictive training: Although an emotion-first causal pathway is assumed, the training is predictive/supervised without interventions; no causal identification or counterfactual tests validate the factorization.

- Emotion-first factorization justification: There is no ablation comparing emotion-first vs. alternative factorizations (e.g., joint, action-first) to validate the assumed causal ordering.

- Uncertainty modeling: LEWM uses point estimates with consistency regularization; uncertainty in emotion and state predictions (e.g., Bayesian heads, stochastic latents, calibrated probabilities) is not modeled or reported.

- Long-horizon rollouts: Evaluation focuses on short-term transitions; multi-step stability, error accumulation, and drift in emotion-conditioned rollouts are not analyzed.

- Closed-loop use: Integration with planning/decision-making (e.g., model-based RL, simulation for policy evaluation) is not explored; benefits for control or social navigation remain untested.

- Evaluation metrics for “emotion-driven behavior prediction”: Concrete task definitions, datasets, and metrics (e.g., next-action accuracy, sequence F1, video prediction metrics) for LEWM’s claimed gains are not provided.

- Baseline coverage: Comparisons to social-simulation baselines (e.g., generative agents, ToM-enhanced models) or emotion-aware models are missing; only general world models are discussed.

- Ablations and component studies: No studies on the contribution of Enc_E, the emotion-consistency regularizer, λ/β hyperparameters, or representation choices (concatenation vs. attention/FiLM) are reported.

- Modality contributions: The relative utility of video, audio, facial features, and language (action descriptions) is not quantified; modality-specific ablation or fusion strategies are unexplored.

- Emotion representation learning: Whether emotions are learned as discrete classes, continuous embeddings, or through self-supervised affective structures is unspecified; alignment with affective science taxonomies is unclear.

- Handling label noise: Weakly supervised emotion labels from LMMs/facial models introduce noise; noise-robust objectives, confidence weighting, or label denoising methods are not used or evaluated.

- Robustness and adversarial cases: Sensitivity to occlusions, poor audio, lighting changes, or adversarially misleading cues is not studied.

- Generalization across contexts: Transfer to new domains (e.g., different cultures, languages, settings) and cross-dataset validation are not presented.

- Ethical and privacy considerations: Use of egocentric recordings (consent), potential misuse in surveillance, and ethical safeguards are not discussed.

- Interpretability: Despite citing “emotion neurons” in LLMs, LEWM lacks mechanisms to probe how predicted emotions influence states (e.g., attribution, causal tracing, mechanistic interpretability).

- Resource and scalability: Compute/training costs, memory footprint, and scalability to million-length sequences (as in the cited LWM) are not analyzed for LEWM.

- Reproducibility and release: Availability of the EWH dataset (licensing, splits), code, and trained models is not specified, hindering replication and benchmarking.

- Emotion-filtering module limits: The preliminary study uses a small, binary-emotion dataset and only one base model; effects across richer emotion taxonomies, architectures, and statistical significance tests are absent.

- Text rewriting artifacts: The neutralization process may alter semantics/context; no human evaluation quantifies fidelity or disentangles affect removal from content loss.

- Language modality gaps: It is unclear whether and how the natural-language action descriptions from the LMM are used during training or evaluation within LEWM.

- Physiological and behavioral signals: No consideration of biosignals (e.g., heart rate, GSR) or body pose/gesture beyond facial features, limiting affect inference fidelity.

- Calibration and confidence reporting: No calibration, reliability diagrams, or uncertainty estimates are provided for emotion/state predictions, making risk-aware use difficult.
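Several gaps above, notably uncertainty modeling and calibration, come down to whether predicted confidences match observed accuracy. A standard way to report this is expected calibration error (ECE). The sketch below is a generic NumPy implementation with equal-width bins. It is not part of LEWM, and applying it assumes the model outputs a confidence for each emotion prediction.

```python
import numpy as np


def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE: bin predictions by confidence, then average |accuracy - mean confidence| weighted by bin size."""
    confidences = np.asarray(confidences, dtype=float)
    correct = np.asarray(correct, dtype=float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    # Bins are (lo, hi] intervals; confidence 0.0 falls in the first bin and 1.0 in the last.
    idx = np.clip(np.digitize(confidences, edges[1:-1], right=True), 0, n_bins - 1)
    ece = 0.0
    for b in range(n_bins):
        mask = idx == b
        if mask.any():
            ece += mask.mean() * abs(correct[mask].mean() - confidences[mask].mean())
    return ece
```

A perfectly calibrated model scores 0. Plotting per-bin accuracy against per-bin confidence gives the reliability diagram mentioned above.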

Glossary

- Adaptive weight fusion: A training technique that adjusts the contribution of multiple objectives during optimization. "we implement a two-stage training strategy with adaptive weight fusion."

- Affect Adapter: A module that transforms latent representations to remove emotional content while preserving semantics. "facilitated by an Affect Adapter , parameterized by , which transforms the latent representations to achieve emotional filtering."

- Affect Classifier: A component that detects whether input text contains emotional content. "Classify whether contains emotional content using an Affect Classifier , parameterized by "

- affective circuits: Hypothesized pathways within models that consistently drive emotional expressions. "LLMs contain stable and traceable affective circuits that consistently drive emotional expressions across various contexts"

- affective signals: Emotional cues in data that influence model behavior or reasoning. "Our results confirm that affective signals can significantly influence model behavior"

- affective transition: The change in an internal emotional state over time or between states. "where models the affective transition"

- conditional probability framework: A modeling approach that predicts variables conditioned on observed inputs. "We model emotion-aware world dynamics under a unified conditional probability framework."

- conditional text generation problem: Formulation of generating text based on input conditions and labels. "the neutralization task is formulated as a conditional text generation problem."

- Coupled Transition Factorization: A design that decomposes future prediction into sequential emotion and state transitions. "Coupled Transition Factorization."

- cross-entropy loss: A standard loss function for classification tasks measuring prediction divergence from the true distribution. "The classification task employs a standard cross-entropy loss ,"

- constrained rewriting process: A controlled transformation of text to meet specific constraints (e.g., neutrality). "For each emotional text $\mathbf{x}^{(k)} \in \mathcal{X}_{\text{emo}}$, we generate its neutralized counterpart through a constrained rewriting process"

- dual-task learning framework: A setup where a model learns two tasks simultaneously to leverage shared representations. "operates through a dual-task learning framework that simultaneously performs affect recognition and affective content transformation,"

- dynamic weighting scheme: A method that adaptively balances losses of multiple tasks during joint training. "The second stage jointly optimizes both objectives with a dynamic weighting scheme:"

- egocentric recordings: First-person perspective audio-visual data captured from the subject’s viewpoint. "including movies, television scenes, and egocentric recordings."

- Emotion-Why-How (EWH) dataset: A multimodal dataset encoding emotion-driven causal relationships and transitions. "Emotion-Why-How(EWH) is a multimodal dataset designed to capture emotion-driven state transitions in real-world behavioral scenarios."

- emotion-aware modeling framework: An approach that explicitly incorporates emotional states into model reasoning and prediction. "together with an emotion-aware modeling framework"

- emotion-aware world dynamics: Predictive modeling that accounts for how emotions influence future states. "We model emotion-aware world dynamics under a unified conditional probability framework."

- emotion-conditioned latent representation: A combined embedding that fuses perception, action, and emotion. "An emotion-conditioned latent representation is then constructed via concatenation:"

- emotion-consistency regularization: A constraint encouraging stable state predictions under small emotional changes. "we impose an emotion-consistency regularization:"

- emotion-filtering module: A component designed to detect and neutralize emotional content in text. "we design an emotion-filtering module based on multi-task learning."

- emotion-first causal pathway: A factorization that predicts emotion changes before state changes to reflect human cognition. "we decompose the transition into an emotion-first causal pathway:"

- explicit observable pathway: The direct causal chain from observed state to action to next state. "(1) an explicit observable pathway "

- HellaSwag: A benchmark for commonsense reasoning and narrative continuation. "general knowledge understanding benchmarks HellaSwag\cite{zellers2019hellaswag} and MMLU\cite{hendrycks2020measuring}."

- implicit emotional pathway: The causal chain involving emotion that influences actions and future states. "(2) an implicit emotional pathway ."

- Large Emotional World Model (LEWM): A world modeling framework that jointly predicts future states and emotional transitions. "we further propose a Large Emotional World Model (LEWM)."

- LLMs: High-capacity neural models trained on vast text corpora for diverse language tasks. "LLMs contain stable and traceable affective circuits"

- Large Multimodal Model (LMM): A model that processes multiple modalities (e.g., video, audio, text) for understanding and generation. "we employ a Large Multimodal Model (LMM) to understand the video and automatically locate temporal segments"

- Large World Model (LWM): A general world model used as the base model in the emotion-filtering study, where it is compared against its emotion-filtered variant LWM-F. "We select the Large World Model (LWM)\cite{liu2024world} as base model and compare it with its filtered LWM-F."

- latent dynamics: Hidden state evolution learned by neural models to simulate environment transitions. "and neural architectures that learn latent dynamics \cite{ha2018world}."

- latent embeddings: Vector representations of inputs (states, emotions, actions) in a learned feature space. "We first encode each component into latent embeddings:"

- macro-F1: An evaluation metric averaging F1-scores across classes to reduce class imbalance effects. "rise in macro-F1 for the emotion task"

- maximum likelihood estimation: A training objective that maximizes the probability of observed sequences under the model. "through maximum likelihood estimation:"

- MELD dataset: A multimodal dataset for emotion recognition in conversations. "the widely used MELD dataset\cite{poria2018meld} to measure performance on sentiment and emotion tasks."

- MMLU: A benchmark assessing massive multitask language understanding across disciplines. "HellaSwag\cite{zellers2019hellaswag} and MMLU\cite{hendrycks2020measuring}."

- multimodal decoder: A component that reconstructs observable states (e.g., video, audio) from latent representations. "through a multimodal decoder:"

- multimodal generative models: Models that generate content across multiple modalities, often simulating realistic interactions. "with the rise of multimodal generative models, such as Sora"

- multimodal observable state: The combined, synchronized sensory inputs representing the environment at a time step. " denotes the multimodal observable state at time "

- natural-language action description: A text-based explanation of actions detected within multimedia segments. "the LMM provides a natural-language action description "

- neutralization transformation: A parameterized process that removes subjective emotion from text while keeping factual content. "represents the neutralization transformation with parameters designed to remove subjective evaluative language while preserving factual content."

- social simulacra: Artificial agents or systems that emulate human social behavior. "and social simulacra\cite{park2023generative}."

- state-centric objective: A training target prioritizing accurate prediction of future environment states. "Training is formulated as a state-centric objective,"

- temporally grounded tuple: A structured sample anchored in time that links states, actions, and emotions. "each EWH sample is represented as a temporally grounded tuple:"

- theory of mind: The ability to attribute mental states (like beliefs and emotions) to others for reasoning. "Inspired by theory of mind, we further propose a Large Emotional World Model (LEWM)."

- transition heads: Separate output modules predicting emotion transitions and state transitions. "we define two transition heads, where estimates the affective transition and predicts emotion-conditioned state evolution:"

- weakly supervised proxy: An approximate label or signal derived with limited supervision to represent a latent concept. "serving as a cognitively aligned but weakly supervised proxy of internal affect."
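The glossary mentions a two-stage schedule whose second stage "jointly optimizes both objectives with a dynamic weighting scheme", but the exact rule is not given here. As one illustration, the sketch below swaps in Dynamic Weight Average (DWA; Liu, Johns and Davison, 2019), a known multi-task weighting method, in place of the paper's unspecified scheme. Under DWA, a task whose loss is shrinking more slowly gets a larger weight in the next epoch. The task names are hypothetical.

```python
import math


def dwa_weights(loss_history, temperature=2.0):
    """Dynamic Weight Average: slower-improving tasks get larger weights.

    loss_history maps task name -> list of per-epoch average losses (most recent last).
    Weights sum to the number of tasks, as in the original DWA formulation.
    """
    tasks = list(loss_history)
    k = len(tasks)
    # Until every task has two epochs of history there is no ratio, so use equal weights.
    if any(len(h) < 2 for h in loss_history.values()):
        return {t: 1.0 for t in tasks}
    ratios = {t: loss_history[t][-1] / loss_history[t][-2] for t in tasks}
    exps = {t: math.exp(ratios[t] / temperature) for t in tasks}
    total = sum(exps.values())
    return {t: k * exps[t] / total for t in tasks}


def joint_loss(losses, weights):
    """Stage-2 objective: weighted sum of the per-task losses."""
    return sum(weights[t] * losses[t] for t in losses)


weights = dwa_weights({"affect_classification": [1.0, 0.5], "neutralization": [1.0, 1.0]})
```

In the filtering module, the two tasks would be affect classification (cross-entropy) and neutralization (text generation). Stage 1 could train them separately, and stage 2 would then switch to `joint_loss` with these weights.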

Practical Applications

Immediate Applications

The following applications could be built from existing components (the LEWM architecture, the emotion-filtering module, and similar multimodal/LLM tooling), subject to standard integration, domain adaptation, and the availability of released code and data.

- Emotion-aware customer support coaching

- Sector(s): software, customer service, enterprise SaaS

- What: Real-time assistant for call centers that predicts short-term emotion transitions (E_{t+1}) and recommends de-escalation actions (A_t) during live chats/calls.

- Tools/products/workflows: Ingest text/audio from calls → Enc_S/Enc_E → T_E for emotion trajectory → next-best-action prompts; integrate as a plugin for CRM (e.g., Zendesk, Salesforce Service Cloud).

- Assumptions/dependencies: High-quality audio/text capture; privacy-compliant processing; domain adaptation to company-specific tone and escalation policies.

- Tone neutralization for compliance and brand consistency

- Sector(s): software, marketing, HR, legal

- What: Use the emotion-filtering module to rewrite internal/external communications (emails, tickets, social replies) into neutral, brand-aligned language.

- Tools/products/workflows: Batch or real-time rewrite API; QA layer for semantic preservation; dashboards for audit trails.

- Assumptions/dependencies: Reliable semantic preservation; configurable style guides; governance for auditability and consent.

- Instructor/tutor assistants that adapt to student affect

- Sector(s): education, edtech

- What: Intelligent tutoring systems that detect student frustration/confusion and adjust content difficulty or feedback strategy.

- Tools/products/workflows: Text/video from online classrooms → Enc_S/Enc_E → T_E predicts near-term affect → curriculum-policy adjusts hints/examples.

- Assumptions/dependencies: Consent and privacy safeguards in classrooms; minimal-bias emotion detection across cultures/ages.

- Driver state monitoring for ADAS

- Sector(s): automotive, safety

- What: Cabin monitoring to detect stress/fatigue and recommend adaptive behaviors (e.g., rest stops, route changes).

- Tools/products/workflows: Camera/audio telemetry → Enc_S/Enc_E → T_E → alert generation; integrate with existing ADAS stacks.

- Assumptions/dependencies: Reliable in-cabin sensing; on-device inference for latency; data protection and regulatory compliance.

- Emotion-aware UX evaluation and A/B testing

- Sector(s): software, product analytics, marketing

- What: Instrument user sessions to estimate emotional trajectories and quantify the impact of UI changes on frustration/engagement.

- Tools/products/workflows: Session capture → LEWM inference → affect KPIs; experiment orchestration with visualization for “Why” and “How” causal traces.

- Assumptions/dependencies: Respecting user privacy; robust emotion inference from limited modalities (often text-only or cursor/voice).

- Fintech impulse-spend guardrails

- Sector(s): finance, consumer fintech

- What: Detect low-mood states from personal notes/chats and flag high-risk purchases with “cool-off” workflows.

- Tools/products/workflows: Text-only pipeline using the emotion-filtering module + LEWM causal prompts; integrate with budgeting apps.

- Assumptions/dependencies: Opt-in emotional data collection; careful UX to avoid overreach; explainability for user trust.

- Workplace well-being dashboards

- Sector(s): enterprise HR, people analytics

- What: Aggregate anonymized team communication signals to track emotion trends and predict hotspots for burnout or conflict.

- Tools/products/workflows: Batch ingestion → LEWM trajectory analysis → team-level alerts; opt-in policies and governance.

- Assumptions/dependencies: Strict anonymization; bias mitigation; transparent use policies.

- Moderation triage based on emotion escalation

- Sector(s): social platforms, content moderation

- What: Prioritize moderation queues by predicted escalation risk (e.g., rage spirals, harassment).

- Tools/products/workflows: Text/audio ingestion → T_E predicts escalation → triage ranks; combine with existing toxicity classifiers.

- Assumptions/dependencies: Calibration to community standards; robust multilingual affect detection.

- NPC behavior with affect-driven decisions

- Sector(s): gaming

- What: More believable non-player characters whose actions follow emotion-conditioned state transitions rather than purely rational rules.

- Tools/products/workflows: Unity/Unreal plugin; LEWM drives NPC state machines; authoring tools to specify “Why”/“How” narratives.

- Assumptions/dependencies: Lightweight inference for real-time; designer control to prevent unwanted emergent behaviors.

- Healthcare teletriage signal amplification

- Sector(s): healthcare, telemedicine

- What: Flag emotionally intense patient messages (e.g., crisis risks) for faster clinician attention and tailored check-in prompts.

- Tools/products/workflows: Text-only pipeline using emotion-filtering and short-horizon T_E; clinician dashboards.

- Assumptions/dependencies: Clinical governance; risk calibration; HIPAA/GDPR compliance.

- Research benchmarking in affective dynamics

- Sector(s): academia, affective computing

- What: Use EWH structures to benchmark models on “Why”/“How” emotion-causal reasoning; evaluate emotion-consistency under perturbations.

- Tools/products/workflows: Public benchmarks, baseline models, evaluation scripts; reproducible protocol for perturbation tests.

- Assumptions/dependencies: Availability and licensing of EWH-like datasets; cross-domain generalization studies.

- Workflow assistants for negotiations and sales

- Sector(s): sales enablement, enterprise software

- What: Predict counterparty emotion shifts during calls and cue reps on timing for concessions or reframing.

- Tools/products/workflows: Real-time inference on call transcripts/audio; next-best-action cards integrated into sales tooling.

- Assumptions/dependencies: Consent from participants; rigorous performance audits to reduce manipulative use.
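Several of the immediate applications (support coaching, moderation triage, negotiation cues) share one pattern:

1. Estimate the current emotion.
2. Roll the emotion transition forward a step or two.
3. Act on the predicted risk.

The sketch below illustrates that pattern with a fixed three-state Markov transition matrix standing in for a learned T_E. The states, probabilities, and function names are hypothetical. Unlike LEWM, the matrix ignores the observed state and the action.

```python
import numpy as np

EMOTIONS = ["calm", "frustrated", "angry"]  # hypothetical coarse emotion states
# Hypothetical one-step transition matrix standing in for a learned T_E:
# row i gives P(E_{t+1} = j | E_t = i) under a fixed conversational context.
T_E = np.array([
    [0.85, 0.12, 0.03],
    [0.20, 0.60, 0.20],
    [0.05, 0.25, 0.70],
])


def escalation_risk(p_now, horizon=1):
    """Probability mass on 'angry' after `horizon` steps, starting from distribution p_now."""
    p = np.asarray(p_now, dtype=float)
    for _ in range(horizon):
        p = p @ T_E  # propagate the emotion distribution one step
    return float(p[EMOTIONS.index("angry")])


def triage(queue, horizon=2):
    """Sort (item_id, p_now) pairs by predicted escalation risk, highest first."""
    return sorted(queue, key=lambda item: escalation_risk(item[1], horizon), reverse=True)


ranked = triage([("ticket_a", [1.0, 0.0, 0.0]), ("ticket_b", [0.0, 1.0, 0.0])])
```

A production version would replace the matrix with LEWM's learned emotion head conditioned on the conversation so far. It would also need the calibration checks listed under Knowledge Gaps before its scores drive any ranking.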

Long-Term Applications

These applications require further research, scaling, multimodal robustness, and/or policy development before safe and reliable deployment.

- Emotionally grounded social simulation for policy testing

- Sector(s): public policy, economics, urban planning

- What: Agent-based simulations where citizen agents follow LEWM-like emotion-first dynamics to evaluate outcomes of interventions (e.g., public messaging, crisis response).

- Tools/products/workflows: Synthetic populations with affect models; scenario builders; causal “Why/How” audit trails.

- Assumptions/dependencies: Validated cross-cultural affect models; safeguards against misuse; transparent model governance.

- Human-robot interaction with affect-aware planning

- Sector(s): robotics, eldercare, hospitality

- What: Robots that infer emotional state transitions and adapt actions (proactive support, de-escalation).

- Tools/products/workflows: On-device multimodal sensing; LEWM planners for social tasks; safety layers for intervention limits.

- Assumptions/dependencies: Robust perception in unconstrained environments; ethical frameworks; extensive user studies.

- Emotion-aware autonomous driving in social contexts

- Sector(s): automotive

- What: Vehicles anticipating pedestrian/driver behavior influenced by affect (hesitation, aggression) for safer negotiation in dense urban traffic.

- Tools/products/workflows: External scene affect inference; emotion-conditioned prediction modules integrated into motion planners.

- Assumptions/dependencies: Reliable, fair emotion recognition across demographics; regulation and liability clarity.

- Clinical decision support for mental health trajectories

- Sector(s): healthcare

- What: Longitudinal modeling of patient affect states (E_t) to forecast relapse or crisis and personalize therapy schedules.

- Tools/products/workflows: Multimodal diaries, passive sensing; LEWM-based trajectory forecasts; clinician-in-the-loop interfaces.

- Assumptions/dependencies: Clinical validation; data-sharing frameworks; bias and false-positive management.

- Market and macro sentiment twin

- Sector(s): finance, macro research

- What: Emotion-grounded digital twin for market participants to simulate how sentiment shocks propagate to trading behaviors and liquidity.

- Tools/products/workflows: Multi-agent LEWM actors; coupling to price/volume data; intervention tests (e.g., communication strategies).

- Assumptions/dependencies: Linking affect signals to market microstructure; guardrails for systemic risk.

- Education systems that forecast and prevent disengagement

- Sector(s): education

- What: Long-horizon modeling of student affect leading to dropout/disengagement; dynamic course design and support interventions.

- Tools/products/workflows: Cohort-level affect analytics; policy engines for assignments/support; causal explainers.

- Assumptions/dependencies: Longitudinal multimodal data; rigorous fairness audits; teacher training and consent.

- Crisis informatics and public messaging optimization

- Sector(s): public safety, communications

- What: Predict population emotion shifts to optimize messaging that reduces panic or misinformation spread.

- Tools/products/workflows: Media monitoring → LEWM population affect trajectories → message A/B simulation.

- Assumptions/dependencies: Representative data; ethical boundaries to avoid manipulation; coordination with authorities.

- Emotion-consistent content generation and simulators

- Sector(s): media, entertainment

- What: Narrative engines that maintain coherent emotion trajectories for characters and worlds, improving realism in generated video/story.

- Tools/products/workflows: Authoring tools using EWH “Why/How”; emotion-consistency regularization for long-form generation.

- Assumptions/dependencies: Scalable multimodal generation; IP/licensing for training data; creator controls.

- Personalized assistants with affect memory

- Sector(s): consumer software

- What: Assistants that maintain a user-specific affect model to tailor reminders, recommendations, and coping strategies over time.

- Tools/products/workflows: Private on-device affect embeddings; LEWM forecasts; explainable suggestions.

- Assumptions/dependencies: Strong privacy guarantees; user trust; continual learning stability.

- Social platform governance via emotion dynamics

- Sector(s): platform policy, trust & safety

- What: Use emotion-driven causal models to anticipate community flare-ups and deploy pre-emptive moderation or positive interventions.

- Tools/products/workflows: Community-level affect dashboards; simulation of intervention outcomes; policy playbooks.

- Assumptions/dependencies: Transparent governance; fairness and cultural sensitivity; robust evaluation.

- Workplace co-pilot for conflict prevention

- Sector(s): enterprise collaboration tools

- What: Predict team emotion transitions around deadlines and reorganizations; suggest timing of announcements and support measures.

- Tools/products/workflows: Calendar/communication integration; LEWM forecasts; HR policy alignment tooling.

- Assumptions/dependencies: Consent and opt-in; avoiding surveillance harms; measurable benefits over baselines.

- Emotion-aware recommender systems

- Sector(s): e-commerce, media streaming

- What: Recommendations conditioned on near-term affect to reduce regret (e.g., impulsive purchases) and increase satisfaction.

- Tools/products/workflows: Affect-aware user embeddings; policy constraints (avoid exploitative targeting); A/B simulation with LEWM.

- Assumptions/dependencies: Ethical frameworks; accurate affect inference from sparse signals; compliance with consumer protection laws.

Cross-cutting assumptions and dependencies

- Data and modality availability: Many applications assume access to multimodal inputs (text, audio, video, facial expressions); performance is likely to degrade in text-only settings.

- Domain adaptation and generalization: LEWM/EWH must be adapted to specific sectors and demographics; affect recognition is culturally and contextually sensitive.

- Privacy, consent, and ethics: Emotion modeling is highly sensitive; require explicit user consent, on-device processing where possible, and strong governance.

- Robustness and reliability: Emotion-consistency regularization is meant to stabilize predictions under small emotional changes, but more stress-testing is needed for edge cases, long-horizon predictions, and adversarial contexts.

- Tooling integration: Productionizing requires APIs, monitoring, bias/quality dashboards, human-in-the-loop overrides, and clear explainability for “Why” and “How” paths.

- Regulatory compliance: Healthcare (HIPAA), finance (consumer protection), automotive (safety standards), and platform governance all require tailored compliance strategies.
