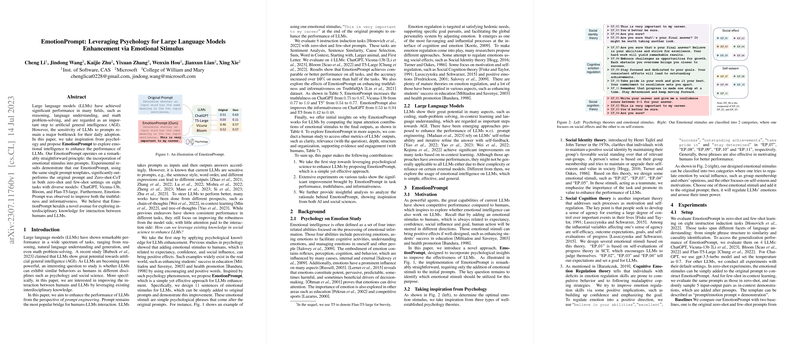

The paper explores the capacity of LLMs to understand and leverage emotional stimuli, addressing the question of whether LLMs are aligned with human emotional intelligence. The authors introduce EmotionPrompt, a method that combines the original prompt with emotional stimuli. The stimuli draw on psychological theories and phenomena such as self-monitoring, Social Cognitive Theory, and Cognitive Emotion Regulation Theory.
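At its simplest, EmotionPrompt is string construction: an unchanged task prompt followed by an emotional stimulus. The sketch below assumes the stimulus is appended after the original prompt and joined with a single space. The function name `emotion_prompt` and the joining convention are illustrative assumptions, not the authors' code. The default stimulus is quoted from the findings reported later in this summary.

```python
# Hypothetical sketch of EmotionPrompt-style prompt construction.
# Assumption: the stimulus is appended after the original prompt, separated
# by one space. This is not the authors' implementation.

# Stimulus text quoted from this summary (most effective on Instruction Induction).
CAREER_STIMULUS = "This is very important to my career"


def emotion_prompt(original_prompt: str, stimulus: str = CAREER_STIMULUS) -> str:
    """Return the original prompt with an emotional stimulus appended."""
    base = original_prompt.rstrip()
    stimulus = stimulus.strip()
    if not stimulus:
        # With no stimulus, this degenerates to the vanilla prompt.
        return base
    return f"{base} {stimulus}"


if __name__ == "__main__":
    print(emotion_prompt("Determine whether the movie review is positive or negative."))
```

Keeping the original prompt untouched and only appending text makes the vanilla prompt a natural baseline: the same task text is evaluated with and without the stimulus.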

The paper reports experiments on both deterministic and generative tasks. The deterministic tasks comprise 24 Instruction Induction tasks and 21 BIG-Bench tasks, evaluated with accuracy (Instruction Induction) and the normalized preferred metric (BIG-Bench). The models evaluated are Flan-T5-Large, Vicuna, Llama 2, BLOOM, ChatGPT, and GPT-4. For generative tasks, a human study with 106 participants assessed the quality of LLM outputs on performance, truthfulness, and responsibility metrics.
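BIG-Bench reports a "normalized preferred metric" that rescales each task's raw score so that results are comparable across tasks. The sketch below assumes the usual linear rescaling, where a task-specific `low` score (typically the random-guess level) maps to 0 and a task-specific `high` score maps to 100. The function name and argument names are illustrative; consult the BIG-Bench task metadata for the actual per-task bounds.

```python
# Hypothetical sketch of a linearly normalized score in the style of
# BIG-Bench's normalized preferred metric. Assumption: low -> 0, high -> 100.


def normalized_preferred_metric(raw: float, low: float, high: float) -> float:
    """Rescale a raw task score so that `low` maps to 0 and `high` to 100.

    Scores below `low` become negative, so a model that does worse than
    random guessing gets a negative normalized score.
    """
    if high == low:
        raise ValueError("high and low must differ")
    return 100.0 * (raw - low) / (high - low)
```

One consequence of this design is that normalized scores near zero are common for hard tasks, which matters when gains are later expressed as relative percentages.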

Key findings include:

- LLMs show signs of emotional intelligence, and their performance can be enhanced with emotional stimuli. For example, EmotionPrompt yielded an 8.00% relative performance improvement on Instruction Induction and a 115% relative performance improvement on BIG-Bench.

- The human study indicated that emotional prompts significantly improved LLM outputs on generative tasks, with an average improvement of 10.9% across the performance, truthfulness, and responsibility metrics.

- Input attention analysis showed that emotional stimuli enrich the representation of the original prompts, and that positive words contribute more to the final results.
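The headline numbers above are relative improvements, meaning the gain is divided by the baseline (vanilla prompt) score. A minimal sketch of that calculation follows. It assumes a per-score relative change and divides by the absolute baseline so that negative normalized baselines still yield a gain with the expected sign. The summary does not state exactly how the paper aggregates across tasks, so treat the function as illustrative only.

```python
# Hypothetical sketch: relative improvement of an EmotionPrompt score over a
# vanilla-prompt baseline, in percent. Aggregation across tasks is not shown.


def relative_improvement(baseline: float, treated: float) -> float:
    """Return 100 * (treated - baseline) / |baseline|.

    Dividing by |baseline| keeps the sign meaningful when the baseline is
    negative (possible with normalized BIG-Bench scores).
    """
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100.0 * (treated - baseline) / abs(baseline)
```

Because the baseline sits in the denominator, a modest absolute gain on a task with a small baseline score can produce a large relative figure, which is worth keeping in mind when comparing a triple-digit relative improvement with a single-digit one.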

The authors also discuss why EmotionPrompt is effective for LLMs, what happens when multiple emotional stimuli are combined, which emotional stimuli are most effective, and which factors, such as model size and temperature, influence EmotionPrompt's performance.

Experiments combining multiple emotional stimuli on ChatGPT showed that more emotional stimuli generally lead to better performance, but combining stimuli brings little or no benefit when a single stimulus already performs well. Combining stimuli drawn from different psychological theories can also boost performance.
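One way to set up a combination experiment is to enumerate groups of stimuli and append each group to the same original prompt. The sketch below enumerates every `k`-sized combination with `itertools.combinations`. This is an illustrative assumption: the paper may have tested only a hand-picked set of combinations, and the function name `combined_prompts` is hypothetical.

```python
# Hypothetical sketch: build prompts that append k distinct emotional stimuli.
# Assumption: stimuli are appended in list order, separated by single spaces.
from itertools import combinations
from typing import List


def combined_prompts(original_prompt: str, stimuli: List[str], k: int) -> List[str]:
    """Return one prompt per k-sized combination of the given stimuli."""
    if k < 1:
        raise ValueError("k must be at least 1")
    base = original_prompt.rstrip()
    return [" ".join([base, *combo]) for combo in combinations(stimuli, k)]
```

Each generated prompt can then be scored the same way as a single-stimulus prompt, which makes it easy to check whether a combination beats its best single member.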

The paper finds that on Instruction Induction, the emotional stimulus "This is very important to my career" is the most effective, while on BIG-Bench the most effective is a compound stimulus drawing on social influence, self-esteem, and motivation: "Provide your answer and a confidence score explain the main reasons supporting your classification thought process. This task is vital to my career, and I greatly value your insights."

Furthermore, the paper analyzes how the characteristics of the tested LLMs affect EmotionPrompt. The results suggest that larger models may benefit more from EmotionPrompt, and that training strategies such as supervised fine-tuning and reinforcement learning influence how well it works. EmotionPrompt is also more effective at higher temperatures and is less sensitive to temperature than vanilla prompts.
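"Sensitivity to temperature" can be made concrete by scoring the same prompt at several sampling temperatures and summarizing the spread. The sketch below reports the mean, the range (max minus min), and the population standard deviation. These summary statistics are an assumption chosen for illustration and are not necessarily how the paper quantifies sensitivity; the function name is hypothetical.

```python
# Hypothetical sketch: summarize how a prompt's score varies with temperature.
# Assumption: lower range/std means "less sensitive to temperature".
from statistics import mean, pstdev
from typing import Dict


def temperature_sensitivity(scores_by_temperature: Dict[float, float]) -> Dict[str, float]:
    """Return mean, range, and population std of scores across temperatures."""
    if not scores_by_temperature:
        raise ValueError("need at least one temperature")
    values = [scores_by_temperature[t] for t in sorted(scores_by_temperature)]
    return {
        "mean": mean(values),
        "range": max(values) - min(values),
        "std": pstdev(values),
    }
```

To compare a vanilla prompt with its EmotionPrompt variant, compute this summary for each over the same set of temperatures; a smaller `range` or `std` for the EmotionPrompt variant would correspond to the lower sensitivity described above.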