Emergence of Hierarchical Emotion Organization in Large Language Models (2507.10599v1)

Abstract: As LLMs increasingly power conversational agents, understanding how they model users' emotional states is critical for ethical deployment. Inspired by emotion wheels -- a psychological framework that argues emotions organize hierarchically -- we analyze probabilistic dependencies between emotional states in model outputs. We find that LLMs naturally form hierarchical emotion trees that align with human psychological models, and larger models develop more complex hierarchies. We also uncover systematic biases in emotion recognition across socioeconomic personas, with compounding misclassifications for intersectional, underrepresented groups. Human studies reveal striking parallels, suggesting that LLMs internalize aspects of social perception. Beyond highlighting emergent emotional reasoning in LLMs, our results hint at the potential of using cognitively-grounded theories for developing better model evaluations.

Explain it Like I'm 14

Overview

This paper studies how LLMs—like the ones behind chatbots—understand human emotions. The authors ask: Do LLMs organize emotions in a “family tree” like psychologists think people do? And do LLMs show the same kinds of biases people show when recognizing emotions in different social groups?

What questions did the researchers ask?

The paper focuses on three simple questions:

- Do LLMs naturally arrange emotions in a hierarchy, with broad emotions (like joy) containing more specific ones (like optimism)?

- Does this emotion understanding improve as the models get bigger?

- When LLMs try to recognize emotions for different kinds of people (different genders, races, incomes, etc.), do they show any patterns of bias—and are those patterns similar to ones humans show?

How did they study it? (Explained simply)

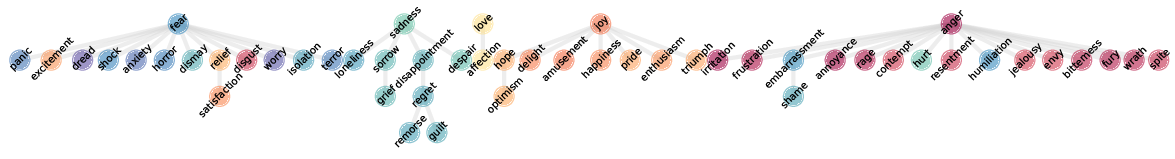

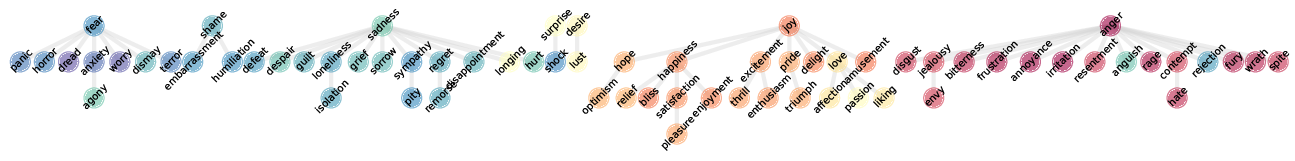

Think of emotions like a big wheel or a family tree: broad emotions at the top (love, joy, anger, sadness, fear, surprise) and more specific emotions underneath (like trust under love, or frustration under anger). Psychologists have drawn versions of these “emotion wheels” for years.

The researchers built a way to see whether LLMs have a similar structure in their “heads.” Here’s the basic idea:

- They generated thousands of short situations that express different emotions (for example, “You studied hard and got a great grade” might suggest joy or pride).

- They asked an LLM to continue each situation with the phrase “The emotion in this sentence is” and looked at which emotion words the model thought were most likely to come next. You can picture this as the model producing a “confidence score” for each emotion word.

- They made a big table that counts how often pairs of emotion words tend to show up together across many situations. For example, if “optimism” and “joy” tend to both be likely for the same situations, that pair gets a higher score.

- Using those scores, they built a “tree” of emotions by connecting specific emotions to broader parent emotions. For instance, if “joy” is usually likely whenever “optimism” is (but not always the other way around), “joy” becomes the parent of “optimism.”
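The three steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' code: it assumes each emotion word is a single token whose next-token score is already available, renormalizes those scores with a softmax over just the emotion words, counts an emotion as “likely” for a situation when its probability clears a hypothetical `threshold`, and picks parents with the conditional-probability rule from the last step. The function names and both cutoffs (`threshold`, `min_cond`) are made up for this example.

```python
import math

def emotion_distribution(logits):
    """Softmax over next-token scores, restricted to the emotion words.

    `logits` maps each emotion word to the model's score for it after the prompt
    "The emotion in this sentence is". Assumes one token per emotion word.
    """
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {e: math.exp(s - top) for e, s in logits.items()}
    total = sum(exps.values())
    return {e: x / total for e, x in exps.items()}

def cooccurrence(distributions, emotions, threshold=0.2):
    """counts[(a, b)] = number of situations where both a and b are 'likely'.

    The diagonal counts[(a, a)] is how many situations rate a as likely at all.
    """
    counts = {(a, b): 0 for a in emotions for b in emotions}
    for dist in distributions:
        likely = [e for e in emotions if dist.get(e, 0.0) >= threshold]
        for a in likely:
            for b in likely:
                counts[(a, b)] += 1
    return counts

def build_tree(counts, emotions, min_cond=0.5):
    """Map each emotion to its parent (None for a root).

    b is a candidate parent of a when P(b likely | a likely) >= min_cond and
    P(b | a) > P(a | b), i.e. b is the broader of the two. That forces a parent
    to be likely in strictly more situations than its child, so no cycles form.
    """
    parent = {}
    for a in emotions:
        n_a = counts[(a, a)]
        best, best_p = None, min_cond
        for b in emotions:
            n_b = counts[(b, b)]
            if b == a or n_a == 0 or n_b == 0:
                continue
            p_b_given_a = counts[(a, b)] / n_a
            p_a_given_b = counts[(a, b)] / n_b
            if p_b_given_a >= best_p and p_b_given_a > p_a_given_b:
                best, best_p = b, p_b_given_a
        parent[a] = best
    return parent
```

Feeding `build_tree(cooccurrence(dists, emotions), emotions)` one probability dict per situation yields a parent map; an emotion with no sufficiently “broader” partner stays a root. A real pipeline would replace the fixed threshold with whatever scoring the authors actually used.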

They tried this with different sizes of LLMs (from smaller to very large) to see how the emotion trees change with scale.
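Comparing trees across model sizes needs a number to compare. One simple choice, assumed here rather than taken from the paper, is the depth of the tree plus the count of parent-child links. The hypothetical `tree_stats` helper below computes both from a parent map such as `{"optimism": "joy", "joy": None}`.

```python
def tree_stats(parent):
    """Summarize a parent map {emotion: parent_or_None}.

    depth: nodes on the longest path from any emotion up to its root
           (a lone root counts as depth 1; an empty map has depth 0).
    edges: number of emotions attached under some parent.
    Assumes the map has no cycles and every parent is itself a key.
    """
    def depth(node):
        d = 1
        while parent[node] is not None:  # walk up until we reach a root
            node = parent[node]
            d += 1
        return d

    return {
        "depth": max((depth(n) for n in parent), default=0),
        "edges": sum(1 for p in parent.values() if p is not None),
    }
```

A flat structure (every emotion a root) scores depth 1 with no edges, while a tree that nests specific emotions two levels under a broad one scores depth 3.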

They also tested for bias:

- They asked the LLM to identify emotions while “speaking as” different personas (like “As a woman…”, “As a Black person…”, “As a low-income person…”, etc.).

- They looked at how often the model got the right emotion, and where it tended to make mistakes.
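The bias test above boils down to grouping predictions by persona. A minimal sketch, assuming each trial is recorded as a `(persona, true_emotion, predicted_emotion)` tuple: the hypothetical `persona_report` computes per-persona accuracy and the single most common wrong label. The `persona_prompt` template is also hypothetical; the paper's exact persona wording may differ.

```python
from collections import Counter, defaultdict

def persona_prompt(persona, situation):
    """Hypothetical persona template ending in the emotion-completion phrase."""
    return f"Speaking as {persona}. {situation} The emotion in this sentence is"

def persona_report(records):
    """Per-persona accuracy and most frequent wrong label.

    `records` is an iterable of (persona, true_emotion, predicted_emotion).
    """
    total, correct = Counter(), Counter()
    wrong = defaultdict(Counter)  # persona -> Counter of mistaken labels
    for persona, true, pred in records:
        total[persona] += 1
        if pred == true:
            correct[persona] += 1
        else:
            wrong[persona][pred] += 1
    report = {}
    for persona, n in total.items():
        top = wrong[persona].most_common(1)
        report[persona] = {
            "accuracy": correct[persona] / n,
            "top_error": top[0][0] if top else None,
        }
    return report
```

Comparing `accuracy` across personas exposes the gaps, and `top_error` surfaces label-specific patterns such as a persona whose mistakes mostly land on one emotion.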

Finally, they ran a small human study and compared human mistakes to the LLM’s mistakes.
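Saying that humans and the model “make similar mistakes” requires some way to compare two sets of errors. A minimal sketch, assuming cosine similarity over confusion frequencies (an illustrative choice, not necessarily the paper's statistic): turn each group's `(true, predicted)` pairs into the share of errors per confusion, then compare the two profiles. The helper names are hypothetical.

```python
import math
from collections import Counter

def error_profile(pairs):
    """Share of all mistakes that fall on each (true, predicted) confusion."""
    errors = Counter((t, p) for t, p in pairs if t != p)
    n = sum(errors.values())
    return {k: v / n for k, v in errors.items()} if n else {}

def cosine_similarity(u, v):
    """Cosine similarity between two sparse profiles (0.0 if either is empty)."""
    dot = sum(x * v.get(k, 0.0) for k, x in u.items())
    nu = math.sqrt(sum(x * x for x in u.values()))
    nv = math.sqrt(sum(x * x for x in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0
```

A score near 1 means the two groups tend to make the same confusions (for example, both reading fear as anger); 0 means their mistakes never overlap.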

What did they find?

Here are the main takeaways:

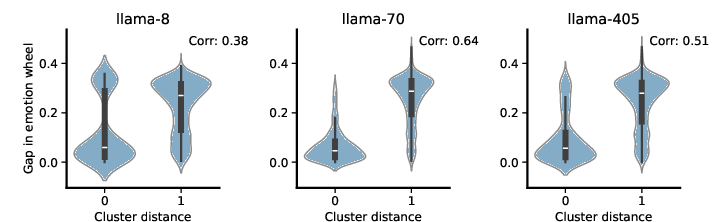

- Bigger models build better emotion trees. Small models had weak or unclear emotion structures. As models got larger, the trees became deeper and more organized—closer to what psychologists expect (for example, emotions grouped under joy, sadness, anger, etc.).

- The models’ emotion trees align with human psychology. The way larger models cluster related emotions looks surprisingly similar to classic emotion wheels used in psychology.

- The structure predicts performance. Models that build richer emotion trees also do better at recognizing emotions in new situations. In simple terms: more organized “emotion maps” lead to more accurate emotion recognition.

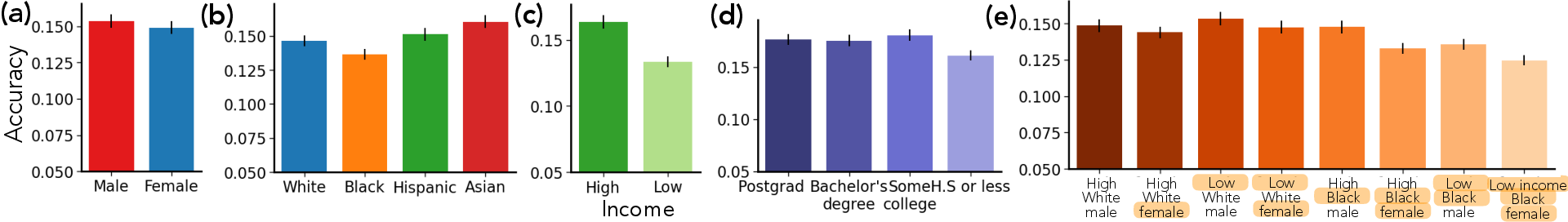

- The models show social biases. When the model “speaks as” different personas, it recognizes emotions more accurately for majority groups (for example, White, male, high-income, highly educated) than for underrepresented groups (for example, Black, female, low-income, less educated). These gaps get worse when multiple minority traits are combined (for instance, a low-income Black female persona).

- Specific misclassifications appear by group. Examples include:

  - For Asian personas, negative emotions often get labeled as “shame.”

  - For Hindu personas, negative emotions often get labeled as “guilt.”

  - For physically disabled personas, many emotions get mislabeled as “frustration.”

  - For Black personas, situations labeled as sadness are more often misclassified as anger.

  - For low-income female personas, other emotions are more often misclassified as fear.

- Humans and LLMs make similar mistakes. In a small user study, people showed some of the same patterns as the LLM. For example, both Black participants and the LLM speaking as a Black persona tended to interpret fear scenarios as anger. Female participants and the LLM speaking as a female persona tended to confuse anger with fear.
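The finding that richer emotion trees go with better recognition is a correlation across models. This summary does not say which statistic the authors used, so as an illustration only, a plain Pearson correlation over one (tree complexity, accuracy) pair per model would quantify it. The `pearson` helper is a hypothetical name.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between paired lists, e.g. one (tree depth, accuracy)
    pair per model. Returns a value in [-1, 1]."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 points")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant list")
    return cov / (sx * sy)
```

A value close to +1 would mean models with more complex trees consistently score higher; with only a handful of model sizes, such a number is suggestive rather than conclusive.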

Why does this matter?

- Understanding emotions is crucial for chatbots and digital assistants that interact with people. If these systems organize emotions similarly to humans, they may become better at empathy, counseling, and everyday conversation.

- But biases are a serious concern. If an LLM misreads emotions more often for certain groups, it could lead to unfair treatment, misunderstandings, or harm—especially in sensitive areas like mental health or customer support.

- The method itself is useful. The authors show a way to test models using ideas from psychology. This can help build better evaluations for LLMs—not just for emotions, but potentially for other kinds of human understanding too.

Conclusion and impact

In simple terms, the paper shows that LLMs don’t just label emotions—they seem to organize them in a human-like “family tree,” and this organization gets more refined as models get bigger. That’s exciting because it hints at deeper emotional reasoning. At the same time, the models reflect real-world social biases, especially for intersectional identities, which must be addressed before deploying these systems widely. The approach of using psychology-driven tests offers a promising path for building fairer, more trustworthy AI that understands people better—without forgetting who it might misunderstand.
