Meta-RL Induces Exploration in Language Agents (2512.16848v1)

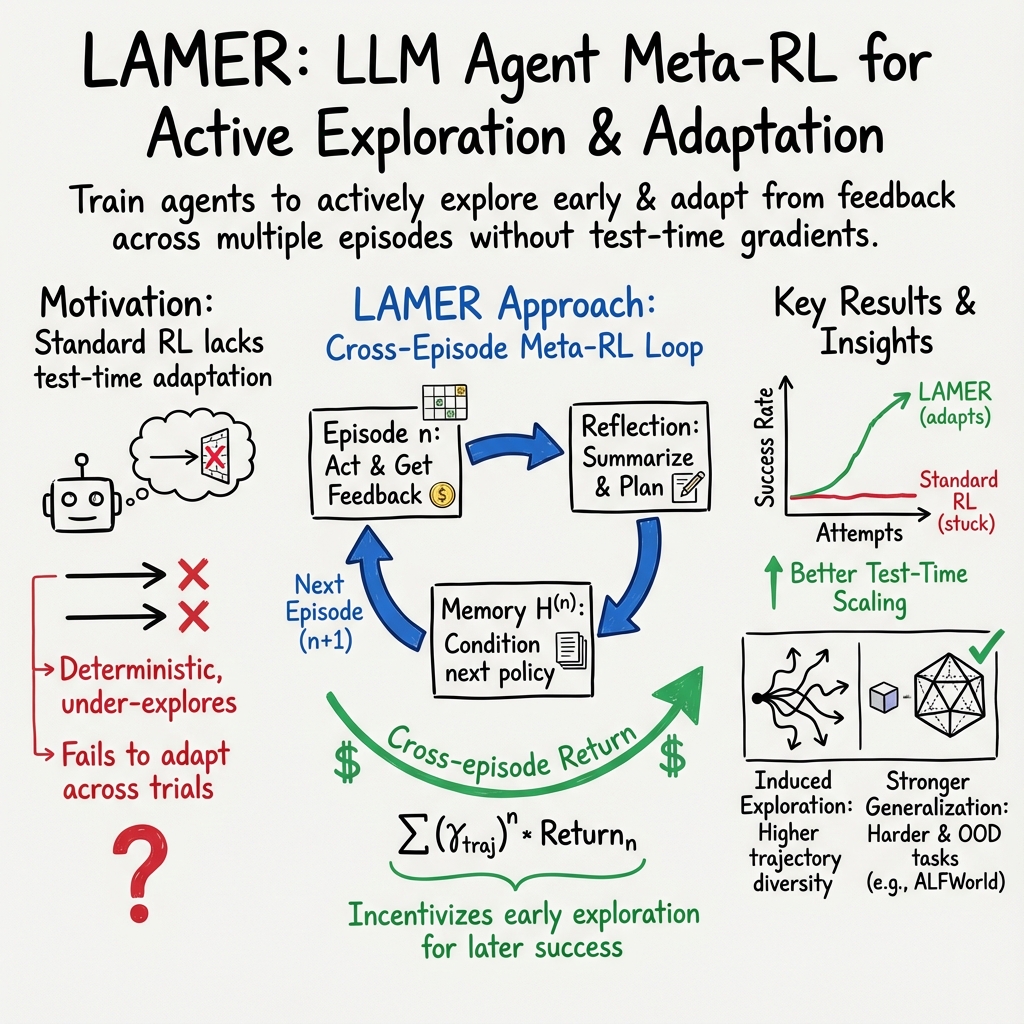

Abstract: Reinforcement learning (RL) has enabled the training of LLM agents to interact with the environment and to solve multi-turn long-horizon tasks. However, the RL-trained agents often struggle in tasks that require active exploration and fail to efficiently adapt from trial-and-error experiences. In this paper, we present LaMer, a general Meta-RL framework that enables LLM agents to actively explore and learn from the environment feedback at test time. LaMer consists of two key components: (i) a cross-episode training framework to encourage exploration and long-term rewards optimization; and (ii) in-context policy adaptation via reflection, allowing the agent to adapt their policy from task feedback signal without gradient update. Experiments across diverse environments show that LaMer significantly improves performance over RL baselines, with 11%, 14%, and 19% performance gains on Sokoban, MineSweeper and Webshop, respectively. Moreover, LaMer also demonstrates better generalization to more challenging or previously unseen tasks compared to the RL-trained agents. Overall, our results demonstrate that Meta-RL provides a principled approach to induce exploration in language agents, enabling more robust adaptation to novel environments through learned exploration strategies.

Explain it Like I'm 14

Overview

This paper is about teaching AI “language agents” (smart programs built on large language models, or LLMs) to explore better when solving multi-step tasks. The authors introduce a new training framework called LAMER that helps these agents actively try different strategies, learn from feedback, and improve on the fly, without needing to change their internal weights during testing.

Key Objectives

The paper asks simple, practical questions:

- How can we get LLM agents to explore more effectively when a task takes many steps and the outcome (success or failure) only becomes clear at the end?

- How can agents quickly adapt their behavior from feedback during testing, instead of sticking to a single fixed plan learned during training?

- Does teaching agents to “learn how to learn” (meta-reinforcement learning, or Meta-RL) make them better at new, harder, or unseen tasks?

How They Did It (Methods)

Reinforcement Learning (RL), in everyday terms

Think of RL like playing a game: you try an action, see what happens, and get points (rewards). Over time you learn which actions lead to more points. Traditional RL usually trains an agent to follow one policy (a fixed way of acting) that collects as much reward as possible within a single attempt.

Two key ideas in RL:

- Exploration: trying new or uncertain actions to discover better strategies (like testing different paths in a maze).

- Exploitation: using what you already know works to score points.
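To make the trade-off concrete, here is a minimal sketch of an epsilon-greedy rule on a toy slot-machine ("bandit") problem. It is a textbook illustration, not the method used in the paper; the function names `epsilon_greedy` and `run_bandit`, the payout probabilities, and the `epsilon` values are all assumptions chosen for the example.

```python
import random

def epsilon_greedy(estimates, epsilon, rng):
    """Pick an arm: explore with probability epsilon, otherwise exploit."""
    if rng.random() < epsilon:
        return rng.randrange(len(estimates))  # exploration: any arm at random
    # exploitation: the arm with the highest estimated value (first one on ties)
    return max(range(len(estimates)), key=lambda arm: estimates[arm])

def run_bandit(arm_probs, epsilon, steps, seed=0):
    """Play a Bernoulli bandit; return (value estimates, pull counts)."""
    rng = random.Random(seed)
    estimates = [0.0] * len(arm_probs)
    counts = [0] * len(arm_probs)
    for _ in range(steps):
        arm = epsilon_greedy(estimates, epsilon, rng)
        reward = 1.0 if rng.random() < arm_probs[arm] else 0.0
        counts[arm] += 1
        # incremental update of the running mean reward for this arm
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts

# Hypothetical payout probabilities; the last arm is the best one.
_, greedy_counts = run_bandit([0.2, 0.5, 0.8], epsilon=0.0, steps=100)
print(greedy_counts)  # [100, 0, 0]: pure exploitation never tries the other arms
```

With `epsilon=0.0` the agent keeps pulling the first arm and never discovers that another arm pays more, which is the kind of failure that exploration is meant to prevent.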

What is Meta-RL?

Meta-RL is “learning to learn.” Instead of just learning one good strategy, the agent learns an adaptable way to change its strategy quickly based on experience. It’s like teaching a student not just facts, but how to study effectively in any new class.

The LAMER Framework

LAMER combines two main components to boost exploration and adaptation:

- Cross-episode training:

  - Imagine tackling a tough puzzle in multiple tries (episodes). Early tries focus on exploring and collecting clues; later tries focus on using what you learned to solve it.

  - LAMER rewards the agent across tries, not just within a single try. By valuing multi-try performance, the agent learns when to explore first and when to exploit later.

  - A “discount factor” controls how much the agent cares about early vs. later episodes. Lower values push quick exploitation; higher values encourage more exploration first.

- In-context adaptation via reflection:

  - After each try, the agent writes a short “reflection” (a summary of what happened and a plan for next time).

  - These reflections are added to the agent’s context (its memory) so it can adjust its strategy on the next try, without changing its internal weights (no gradient updates).

  - Think of it like keeping a learning journal: “I clicked the wrong cells in Minesweeper; next time I’ll test safer spots first.”
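The effect of the cross-episode discount factor can be sketched in a few lines. The snippet assumes that the credit given to episode n is its own reward plus the discounted rewards of all later episodes in the same trial; the summary does not state the paper's exact formula, so this form, the function name `cross_episode_returns`, and the example rewards are assumptions for illustration.

```python
def cross_episode_returns(episode_rewards, gamma_traj):
    """For each episode n, compute sum over m >= n of gamma_traj**(m - n) * R_m."""
    if not 0.0 <= gamma_traj <= 1.0:
        raise ValueError("gamma_traj must lie in [0, 1]")
    returns = [0.0] * len(episode_rewards)
    running = 0.0
    # walk backwards so each episode reuses the return of the one after it
    for n in reversed(range(len(episode_rewards))):
        running = episode_rewards[n] + gamma_traj * running
        returns[n] = running
    return returns

# A made-up trial: two failed exploratory episodes, then a success.
rewards = [0.0, 0.0, 1.0]
print(cross_episode_returns(rewards, 0.0))  # [0.0, 0.0, 1.0]
print(cross_episode_returns(rewards, 0.5))  # [0.25, 0.5, 1.0]
print(cross_episode_returns(rewards, 1.0))  # [1.0, 1.0, 1.0]
```

Under this assumed form, a value of 0 gives failed early episodes no credit for the later success, while a value of 1 credits them fully, which matches the description that higher values encourage exploring first.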

Training and Evaluation

- Base models: Qwen3-4B (and also tested on Llama 3.1-8B in the appendix).

- Optimizer: Standard RL methods (e.g., PPO, GRPO, GiGPO), but the key novelty is the Meta-RL setup in LAMER.

- Tasks:

  - Sokoban (grid planning game)

  - Minesweeper (logical puzzle with hidden mines)

  - WebShop (text-based online shopping simulation)

  - ALFWorld (text-based tasks in a virtual home, like picking, cleaning, heating items)

- Performance measured as pass@k (success within 1, 2, or 3 tries).
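Critic-free optimizers such as GRPO, named in the list above, score each sampled rollout against the other rollouts in its group instead of using a learned value function. The sketch below shows that group-relative normalization in isolation. How LAMER feeds cross-episode returns into it is not described here, so the input values, the function name `group_relative_advantages`, and the choice of population standard deviation are assumptions.

```python
import statistics

def group_relative_advantages(returns, eps=1e-8):
    """Normalize each return by the mean and standard deviation of its group."""
    mean = statistics.fmean(returns)
    std = statistics.pstdev(returns)  # population std; implementations vary
    # eps avoids division by zero when every rollout received the same return
    return [(r - mean) / (std + eps) for r in returns]

# Four hypothetical rollouts of the same task: two succeed, two fail.
print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

Rollouts that beat the group average get a positive advantage and the rest get a negative one. When all rollouts score the same, every advantage is zero and the group contributes no learning signal.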

Main Findings and Why They Matter

Better performance through exploration

LAMER consistently beats standard RL across tasks:

- Sokoban: pass@3 improved to 55.9% (vs. 44.1% for the best RL baseline).

- Minesweeper: pass@3 jumped to 74.4% (19% absolute gain over RL).

- WebShop: pass@3 reached 89.1% (14% absolute gain over RL).

Even when LAMER starts similar or slightly lower on pass@1, it gains more across multiple tries (larger improvements from pass@1 to pass@3), showing stronger test-time scaling. This means the agent learns from mistakes and gets better with each attempt.
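As a concrete reading of the metric, the sketch below computes pass@k as the fraction of trials solved within the first k sequential attempts, following the "success within 1, 2, or 3 tries" definition above. The function name `pass_at_k` and the outcome data are made up for illustration and are not results from the paper.

```python
def pass_at_k(trial_outcomes, k):
    """Fraction of trials with at least one success among the first k attempts."""
    if k < 1:
        raise ValueError("k must be at least 1")
    if not trial_outcomes:
        raise ValueError("need at least one trial")
    solved = sum(1 for attempts in trial_outcomes if any(attempts[:k]))
    return solved / len(trial_outcomes)

# Hypothetical outcomes: one row per task, one entry per sequential attempt.
trials = [
    [False, False, True],   # solved on the third try
    [True, True, True],     # solved immediately
    [False, True, True],    # solved on the second try
    [False, False, False],  # never solved
]
for k in (1, 2, 3):
    print(k, pass_at_k(trials, k))  # 1 0.25, then 2 0.5, then 3 0.75
```

A large gap between pass@1 and pass@3, as in this toy data, is what the text calls strong test-time scaling: the later attempts succeed where the first one failed.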

More diverse, smarter strategies

Agents trained with Meta-RL kept a wider variety of action sequences (“trajectory diversity”) than standard RL, which often becomes too predictable. More diversity equals better exploration, which helps discover effective solutions.
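One simple way to quantify trajectory diversity is the Shannon entropy of the empirical distribution over sampled action sequences. The page describes the paper's measure as entropy-based but does not give the estimator, so treat the function `trajectory_entropy` and the toy action sequences below as assumptions.

```python
import math
from collections import Counter

def trajectory_entropy(trajectories):
    """Shannon entropy in bits of the empirical distribution over trajectories."""
    counts = Counter(tuple(t) for t in trajectories)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

# A collapsed policy repeats one action sequence; a diverse one varies.
collapsed = [["up", "left"]] * 4
diverse = [["up", "left"], ["up", "right"], ["down", "left"], ["down", "right"]]
print(trajectory_entropy(collapsed))  # zero: every sample is identical
print(trajectory_entropy(diverse))    # 2.0 bits: four equally likely sequences
```

Higher entropy means the agent spreads its samples over more distinct behaviors, which is the property the Meta-RL-trained agents are reported to retain.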

Generalization to harder tasks

LAMER-trained agents handled tougher versions of the same problems better than RL:

- Harder Sokoban (more boxes): around 10% higher success than RL in the hardest setting.

- Harder Minesweeper (more mines): around 5% higher success than RL in the hardest setting.

Generalization to unseen tasks

In ALFWorld, LAMER generalizes beyond the tasks it was trained on:

- Unseen tasks like “Cool” and “Pick Two”: LAMER improved success rates by 23% and ~14% over RL, respectively.

- It also performed better on familiar (in-distribution) tasks.

Practical knobs that matter

- The cross-episode discount factor controls exploration vs. exploitation. Different tasks benefit from different settings (e.g., Minesweeper liked a larger value, which promotes longer-term exploration).

- What you store in memory matters. Keeping only reflections (short, focused summaries) sometimes worked best—cleaner guidance led to more effective adaptation.
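The memory choice described above can be sketched as a small buffer that either keeps only reflections or keeps full trajectories as well. This is a hypothetical data structure for illustration, not the paper's implementation; the class name `EpisodeMemory`, its fields, and the prompt layout are all assumptions.

```python
from dataclasses import dataclass, field

@dataclass
class EpisodeMemory:
    """Hypothetical cross-episode memory that is rendered into the next prompt."""
    keep_trajectories: bool = False  # False = reflection-only memory
    reflections: list = field(default_factory=list)
    trajectories: list = field(default_factory=list)

    def add(self, trajectory_text, reflection_text):
        """Store the outcome of one finished episode."""
        self.reflections.append(reflection_text)
        if self.keep_trajectories:
            self.trajectories.append(trajectory_text)

    def render(self, task_prompt):
        """Build the context for the next episode; no weights are updated."""
        lines = [task_prompt]
        for i, reflection in enumerate(self.reflections, start=1):
            if self.keep_trajectories:
                lines.append(f"[Attempt {i} trajectory] {self.trajectories[i - 1]}")
            lines.append(f"[Attempt {i} reflection] {reflection}")
        return "\n".join(lines)

memory = EpisodeMemory()  # reflection-only by default
memory.add("clicked (0,0), hit a mine", "Test safer spots first.")
print(memory.render("Solve the Minesweeper board."))
```

Reflection-only memory keeps the prompt short and focused, which is one plausible reason it sometimes worked best. Setting `keep_trajectories=True` trades that brevity for more raw detail.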

Implications and Potential Impact

- Smarter language agents: LAMER helps build agents that don’t just follow fixed plans. They can explore, learn from feedback, and adapt immediately—more like how humans learn.

- Better performance with repeated tries: Agents improve more during testing, making them useful for complex real-world tasks where you often need a few attempts to succeed.

- Stronger generalization: Agents trained with LAMER handle harder and unseen scenarios more robustly.

- Efficient test-time compute: Instead of using massive models, better test-time strategies (like explore-then-exploit with reflection) can deliver big gains.

Limitations and Future Directions

- Training time: Because episodes in a trial depend on each other, they can’t be fully parallelized yet, making training slower than standard RL. Smarter sampling could speed this up.

- Composability: LAMER can likely be combined with stronger RL algorithms or better reasoning models to push performance even further.

- Toward generalist agents: Results suggest the approach can scale to more varied environments, inching closer to agents that adapt to completely novel tasks.

In short, LAMER teaches language agents how to explore wisely, learn from experience, and adapt quickly—making them more capable, flexible problem-solvers across many kinds of tasks.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a single, concise list of what remains missing, uncertain, or unexplored in the paper, phrased to enable concrete follow-up work:

- Scalability across model sizes and architectures: Does LAMER’s benefit persist or change with significantly larger/smaller LLMs and different instruction-tuned/backbone variants (beyond Qwen3-4B and limited Llama3.1-8B results)?

- Effect of “thinking” (deliberate Chain-of-Thought) vs “non-thinking” mode: How does enabling/controlling test-time reasoning depth interact with LAMER’s exploration and adaptation?

- Multimodal applicability: Validation on visual/UI environments (e.g., real browser GUIs, embodied vision) to test claims that LAMER “naturally applies” beyond text-only settings.

- Formal training of reflections: Precise mechanism for credit assignment to reflection generation (are reflections treated as actions with their own advantages, or only as context?), and token-level training details.

- Adaptive cross-episode discounting: Methods to learn or schedule the cross-episode discount factor γ_traj automatically per environment/task, rather than manual tuning.

- Contribution disentanglement: Controlled ablations to isolate gains from cross-episode credit assignment vs self-reflection vs sequential rollouts (e.g., RL+reflection without meta-RL objective).

- Training efficiency: Design and evaluation of asynchronous or parallelized episode sampling to mitigate ~2× training time overhead; profiling and optimization of reflection generation costs.

- Reflection robustness: Analysis and mitigation of hallucinated or misleading reflections (e.g., detection, verification, pruning), and their impact on adaptation.

- Exploration quality metrics: Beyond entropy/diversity, measure directed exploration (information gain, novelty, uncertainty reduction) and correlate it with downstream success.

- Sample efficiency: Learning curves vs number of trajectories; quantify data efficiency and label/reward efficiency compared to RL baselines.

- Safety/guardrails in web environments: Procedures to ensure safe exploration/exploitation (e.g., constraints, policy shields) and measure unintended behaviors.

- Stronger OOD generalization: Systematic evaluation on domains truly out of training distribution (beyond two ALFWorld categories), including drastically different task structures.

- Robustness to sparse/deceptive rewards: Performance under extreme sparsity, delayed signals, or deceptive feedback; required reward shaping or curriculum strategies.

- Reward design transparency: Detailed per-environment reward specification and sensitivity studies (terminal-only vs shaped per-step rewards).

- Hyperparameter sensitivity: Systematic sweeps on number of episodes per trial (N), group size, γ_traj, sampling temperatures, and their interactions.

- Test-time scaling beyond pass@3: Compute–performance tradeoffs for higher attempt budgets; adaptive stopping criteria and policies that decide when further episodes are worthwhile.

- Memory management strategies: Effects of context length, summarization, forgetting policies, and retrieval augmentation on adaptation efficacy and token efficiency.

- External memory/retrieval: Integrating tools like vector databases or episodic memory modules to structure cross-episode information and reflections.

- Optimizer/critic comparisons: Head-to-head evaluation across PPO/GRPO/GiGPO with/without critics; stability and variance analysis under the meta-RL objective.

- Gradient-based meta-learning baselines: Empirical comparison to MAML-style adaptation for LLM agents to assess trade-offs of in-context vs gradient inner loops.

- RLHF/verifier integration: Combining LAMER with human or automated reward models/verifiers; impact on exploration safety and sample efficiency.

- Baseline fairness with CoT: Re-run prompting baselines (ReAct/Reflexion) with enabled CoT/thought tokens to ensure fair comparisons under similar reasoning modes.

- Reproducibility details: Full release of prompts, seeds, environment configs, dataset sizes, and token budgets to enable exact replication of reported results.

- Theoretical guarantees: Analysis of convergence, bias/variance in cross-episode advantage estimation, and conditions under which exploration emerges from the objective.

- Reflection prompt design: Systematic study of reflection templates, structure (plans, checklists, error taxonomies), and whether to learn them end-to-end.

- Action/tooling richness: Evaluation with richer tool APIs (e.g., programmatic browser control, file systems) and how expanded action spaces affect exploration and adaptation.

- Episode reset assumptions: LAMER restarts from the same initial state—how does it perform in irreversible or dynamically changing environments that violate this assumption?

- Multi-agent settings: Extension to cooperative/competitive agents and whether cross-episode meta-RL induces coordinated exploration strategies.

- Online adaptation of meta-parameters: Mechanisms to adjust γ_traj and other meta-parameters on-the-fly for a new task distribution without retraining.

Glossary

- Advantage estimation: A technique in policy optimization that measures how much better an action is compared to a baseline, guiding gradient updates. "a more advanced advantage estimation strategy"

- ALFWorld: A text-based embodied environment benchmark for household tasks used to evaluate language agents. "ALFWorld provides text-based embodied environments."

- Cross-episode credit assignment: Allocating rewards across multiple episodes within a trial to encourage learning from earlier exploration. "Trajectory discount factor γ_traj is used for cross-episode credit assignment."

- Discounted return: The cumulative reward over time, where a reward received t steps in the future is multiplied by the discount factor raised to the power t, so near-term rewards weigh more. "The objective of reinforcement learning is to maximize the expected discounted return:"

- Explore-then-exploit strategy: A policy that first gathers information through exploration and then uses it to maximize rewards. "This explore-then-exploit strategy implemented by the agent is itself an RL algorithm"

- Exploration–exploitation trade-off: The balance between trying new actions to gain information and using known actions to maximize rewards. "reaching a better trade-off between exploration and exploitation."

- GiGPO (Group-in-Group Policy Optimization): A critic-free policy optimization method tailored for LLM agents that structures training groups hierarchically. "critic-free approaches such GRPO (Shao et al., 2024) and GiGPO (Feng et al., 2025)."

- GRPO (Group Relative Policy Optimization): A critic-free policy optimization algorithm that estimates each trajectory's advantage by comparing its reward with the rewards of the other trajectories sampled in the same group. "critic-free approaches such GRPO (Shao et al., 2024) and GiGPO (Feng et al., 2025)."

- In-context learning: The ability of LLMs to adapt behavior based on information provided in the prompt without parameter updates. "naturally leveraging LLMs' in-context learning abilities."

- Inner loop (meta-RL): The adaptation process within meta-learning that encodes an RL algorithm for rapid task-specific learning. "it involves an inner-loop that represents an RL algorithm (i.e., an adaptation strategy) by itself"

- Markov decision process (MDP): A formal framework for sequential decision-making defined by states, actions, transitions, and rewards. "This process can be formulated as a Markov decision process M = (S, A, P, R, γ_step)"

- Meta reinforcement learning (Meta-RL): A paradigm where agents learn to quickly adapt to new tasks by training across task distributions. "Meta reinforcement learning (Meta-RL) (Beck et al., 2025) focuses on 'learning to reinforcement learn' in order to rapidly adapt to new environments."

- Meta-parameters: The higher-level parameters optimized by meta-learning so that inner-loop adaptation becomes effective across tasks. "optimizes meta-parameters, such that the agent can solve new tasks quickly."

- MineSweeper: A partially observable logical deduction game used as an environment to assess exploration and planning. "MineSweeper is a board game about logical deduction on hidden cells."

- Outer loop (meta-RL): The optimization of meta-parameters that shape the inner-loop adaptation strategy across multiple tasks. "together with an outer-loop that updates the meta-parameters so that the inner loop becomes more effective across many tasks."

- Pass@k: A metric reporting success rates under k attempts, reflecting test-time scaling and exploration benefits. "For each method, we report the success rates under 1, 2, and 3 attempts (i.e., pass@1, pass@2, and pass@3, respectively)."

- Policy gradient: A family of RL methods that optimize policies directly via gradients of expected returns. "can be optimized with standard policy gradient methods."

- Proximal Policy Optimization (PPO): A widely used on-policy RL algorithm that stabilizes training by constraining policy updates. "The framework is compatible with widely used optimizers such PPO (Schulman et al., 2017)"

- ReAct: A prompting strategy for LLM agents that interleaves reasoning with actions using in-context examples. "ReAct (Yao et al., 2023b) prompts LLMs with in-context examples to generate both textual actions and reasoning thoughts."

- Reflexion: A method where agents reflect on previous episodes and use a memory of reflections to guide future actions. "Reflexion (Shinn et al., 2023) extends this principle to the multi-episode setting, where the agent verbally reflects on the last episode and maintains their own reflection buffer for the next episodes."

- Reflection buffer: A memory component storing the agent’s self-reflections across episodes to inform subsequent decisions. "maintains their own reflection buffer for the next episodes."

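- RLOO (REINFORCE Leave-One-Out): A REINFORCE-style policy-gradient method that uses the average reward of the other sampled trajectories as the baseline for each sample; it appears here as one of the RL baselines for training LLM agents. "RL methods (PPO (Schulman et al., 2017), RLOO (Ahmadian et al., 2024), GRPO (Shao et al., 2024), and GiGPO (Feng et al., 2025))"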

- Self-reflection: The agent’s process of generating feedback and plans based on prior attempts to adapt its policy in-context. "we propose a self-reflection based strategy to adapt the policy in-context"

- Sokoban: A classic grid-based planning game used as a fully observable environment for evaluating agents. "Sokoban is a classic grid-based game on planning where the environment is fully observable."

- Test-time compute: The computational budget allocated at inference, which can be strategically spent on exploration and adaptation. "This is essentially a better way of spending test-time compute"

- Test-time scaling: Improvement in performance as the number of attempts or inference-time computation increases. "Meta-RL exhibits stronger test-time scaling."

- Text-based embodied environment: An interactive setting where agents perform embodied actions described and controlled via text. "As a text-based embodied environment, ALFWorld contains 6 categories of common household activities"

- Trajectory diversity: The variety of distinct action-state sequences an agent produces, often linked to exploration behavior. "Trajectory diversity of base and trained models."

- Trajectory discount factor (γ_traj): A discount applied across episodes in a trial to prioritize long-term returns and exploration. "where γ_traj ∈ [0, 1] is the cross-episode discount factor."

- Webshop: A benchmark simulating web-based shopping tasks for evaluating language agents in partially observable environments. "Webshop simulates realistic web-based shopping tasks"
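The two discount factors in the glossary can be written side by side. The following is a sketch in assumed notation rather than the paper's exact equations: $r_t$ is the reward at step $t$ of an episode of length $T$, $\tau^{(m)}$ is the $m$-th episode of a trial with $N$ episodes, and $R(\tau^{(m)})$ is that episode's total reward.

```latex
% Within-episode objective: expected step-discounted return
J(\pi) = \mathbb{E}_{\tau \sim \pi}\left[ \sum_{t=0}^{T-1} \gamma_{\text{step}}^{\,t} \, r_t \right]

% Assumed cross-episode return credited to episode n of a trial
G^{(n)} = \sum_{m=n}^{N} \gamma_{\text{traj}}^{\,m-n} \, R\big(\tau^{(m)}\big),
\qquad \gamma_{\text{traj}} \in [0, 1]
```

In this form, $\gamma_{\text{traj}} = 0$ reduces to ordinary single-episode RL, while larger values let an early exploratory episode share in the reward of later episodes.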

Practical Applications

Practical Applications of LAMER (Meta-RL for Language Agents)

Below are actionable applications derived from the paper’s findings, methods, and innovations (cross-episode Meta-RL; in-context policy adaptation via reflection; exploration–exploitation tuning with the trajectory discount factor γ_traj; improved test-time scaling and diversity; better generalization to harder and out-of-distribution tasks). Each item notes sectors, potential tools/workflows, and feasibility assumptions or dependencies.

Immediate Applications

The following use cases can be deployed now, especially in text-based or simulated environments with observable feedback loops.

- Exploration-first web shopping assistant

- Sectors: e-commerce, software (RPA), search and recommendation

- Use case: An agent that iteratively explores product pages and filters in early attempts, then exploits findings to complete purchases or retrieve optimal items in later attempts (aligned with Webshop gains: +14% over RL).

- Tools/workflows: “LAMER WebShop Agent SDK”; pass@k compute scheduler to allocate attempts; reflection buffer to log and reuse search strategies; γ_traj knob for exploration depth.

- Assumptions/dependencies: Access to structured web environments and feedback signals (success/failure); guardrails for compliance (privacy, ToS); stable LLM (e.g., Qwen3/Llama) and context window limits.

- Customer support workflow navigator

- Sectors: enterprise software, CX/CRM

- Use case: Agents that explore alternative support flows across systems (ticketing, knowledge bases), reflect on failed paths, and adapt in subsequent episodes to resolve cases faster.

- Tools/workflows: Reflection-only memory summarization for concise guidance (shown to outperform trajectory+reflection in ablation); exploration budget manager using γ_traj; audit-ready reflection logs.

- Assumptions/dependencies: Multi-turn, partially observable enterprise environments with clear reward signals (resolution status); IT integration and data access permissions.

- Automated UI testing and QA for complex apps

- Sectors: software engineering, QA

- Use case: Agents explore diverse UI paths to uncover edge cases, then exploit known paths to reproduce and verify bugs; measure trajectory diversity to detect regression in exploration.

- Tools/workflows: Trajectory diversity monitor (entropy-based as in paper); cross-episode credit assignment to prioritize discovering new paths in early runs; pass@k CI step.

- Assumptions/dependencies: Instrumented test environments that surface outcomes/rewards; safe sandboxing; consistency of UI states across episodes.

- Multi-step information retrieval and browsing

- Sectors: knowledge management, enterprise search

- Use case: Multi-episode browsing agents that explore sources and evidence, reflect to summarize, and exploit in the final attempt for high-confidence answers.

- Tools/workflows: Reflection buffer tuned for concise guidance; exploration controller γ_traj adapted per task (e.g., higher γ_traj for sparse signals); pass@k routing when uncertainty is high.

- Assumptions/dependencies: Access to content repositories and feedback (relevance, answer verification); prompt engineering for reflection quality.

- Agent evaluation and benchmarking in academia

- Sectors: research, evaluation

- Use case: Use LAMER to study exploration–exploitation strategies in partially observable tasks (ALFWorld, Minesweeper, Sokoban), including OOD generalization.

- Tools/workflows: Reusable Meta-RL training scripts; standardized pass@k metrics; entropy-based diversity diagnostics; γ_traj sweeps for environment-specific tuning.

- Assumptions/dependencies: Public benchmarks/simulators; reproducible seed management; compute budget for sequential episode sampling.

- Test-time compute optimization in ML operations

- Sectors: ML engineering, platform ops

- Use case: Operationalize pass@k policies so agents spend test-time compute on exploration in earlier attempts and exploitation in later ones; amortize compute via learned in-context adaptation.

- Tools/workflows: “Exploration Budget Manager” tied to γ_traj; attempt-aware routing; reflection-based adaptation without gradients to reduce runtime costs.

- Assumptions/dependencies: Clear success metrics; cost controls; monitoring for diminishing returns across attempts.

- Safety, compliance, and audit-ready agent operations

- Sectors: policy, risk & compliance, governance

- Use case: Use reflection logs and cross-episode memory as interpretable artifacts for audits; enforce exploration limits via γ_traj; implement guardrails for high-risk actions.

- Tools/workflows: Reflection log archiving; exploration budget caps; policy templates defining pass@k ceilings; red-team tests focused on exploration behaviors.

- Assumptions/dependencies: Organizational policies on agent autonomy; PII/security constraints; ability to disable or sandbox actions.

- Puzzle/game coaching and testing

- Sectors: education (logic training), gaming QA

- Use case: Agents that coach users through multi-step puzzles (Sokoban, Minesweeper) by exploring solution branches and reflecting on failures to propose improved strategies.

- Tools/workflows: Reflection-only hints; exploration controllers; pass@k interactive coaching modes (attempts become structured lessons).

- Assumptions/dependencies: Low-stakes environments; access to clear reward signals (win/loss); prompt designs that prioritize pedagogical feedback.

- Synthetic data and trajectory generation for training

- Sectors: ML data engineering

- Use case: Generate diverse interaction traces and reflections for downstream training (SFT/RLHF) using Meta-RL’s higher trajectory diversity compared to RL.

- Tools/workflows: Diversity-aware samplers; reflection summarization pipelines; labeling workflows that use exploration-phase trajectories.

- Assumptions/dependencies: Quality controls for synthetic data; coverage measurements; compatibility with desired training objectives.

Long-Term Applications

These require further research, scaling, multimodal integration, infrastructure, or regulatory readiness before deployment.

- Household service robots with explore–then–exploit policies

- Sectors: robotics, smart home

- Use case: Robots explore new environments (mapping, object search) in early episodes and exploit refined plans to complete tasks (pick/place, clean, heat/cool) in later episodes (ALFWorld analogues).

- Tools/workflows: Multimodal Meta-RL (vision, proprioception); in-context reflection via language for planning updates; cross-episode reward shaping.

- Assumptions/dependencies: Reliable sensors; real-time safety; sim-to-real transfer; robust feedback signals; regulatory and safety certifications.

- Warehouse and industrial automation

- Sectors: logistics, manufacturing

- Use case: Agents/robots adapt to new layouts and inventory states by exploring task options (routes, grasp strategies) and exploiting learned plans for throughput gains.

- Tools/workflows: Async trial orchestration to mitigate sequential training costs; reflection memory distillation into compact policies; γ_traj tuned for sparse rewards.

- Assumptions/dependencies: High-fidelity simulators; integration with WMS/robot control stacks; safety interlocks; large-scale compute.

- Clinical decision support with constrained exploration

- Sectors: healthcare

- Use case: Multi-episode triage or diagnostic reasoning where early exploration gathers patient history/evidence and later episodes consolidate decisions; reflection logs for physician review.

- Tools/workflows: Verified reflection buffers; exploration caps; pass@k with clinician-in-the-loop; uncertainty-aware γ_traj policies.

- Assumptions/dependencies: Regulatory approval; medical-grade data privacy; risk-managed exploration; validated reward models aligned to outcomes.

- Finance: strategy exploration and exploitation

- Sectors: finance, algorithmic trading, risk management

- Use case: Agents explore candidate strategies across episodes using γ_traj to balance near-term and long-term rewards, then exploit high-confidence strategies; reflections form audit trails.

- Tools/workflows: Backtesting simulators with episodic feedback; exploration governance gates; reflection-based reports for compliance.

- Assumptions/dependencies: Market risk controls; latency; robust simulation fidelity; strict compliance requirements.

- Energy systems optimization

- Sectors: energy, utilities

- Use case: Multi-episode exploration of grid control policies (demand response, storage dispatch), then exploitation of stable configurations under varying conditions.

- Tools/workflows: Simulator-driven Meta-RL; pass@k scenario runs; γ_traj tuned for long-horizon stability.

- Assumptions/dependencies: Accurate simulators; safety and reliability constraints; integration with SCADA; measurable rewards (efficiency, stability).

- Governance frameworks for autonomous agents’ exploration

- Sectors: policy, standards

- Use case: Define exploration budgets, pass@k ceilings, and audit requirements for deployed agents; standardize reflection logging for interpretability.

- Tools/workflows: “Exploration Governance Policy Pack”; monitoring dashboards for trajectory diversity and test-time compute usage.

- Assumptions/dependencies: Cross-industry consensus; regulatory buy-in; enforceable technical controls.

- Generalist agent training services (Meta-RL fine-tuning)

- Sectors: AI platforms, B2B services

- Use case: Offer training-as-a-service that uses cross-episode Meta-RL and reflection-based in-context adaptation to produce agents that generalize across domains (as shown in ALFWorld OOD tasks).

- Tools/workflows: Meta-RL pipelines compatible with PPO/GRPO/GiGPO; selective memory strategies (reflection-only); γ_traj auto-tuning by environment.

- Assumptions/dependencies: Diverse multi-task datasets; scalable trial orchestration; customer-specific reward definitions.

- Asynchronous and distributed trial orchestration

- Sectors: AI infrastructure

- Use case: Overcome sequential rollout bottlenecks by parallelizing trials and asynchronously managing episode dependencies to reduce the 2× training time overhead noted in the paper.

- Tools/workflows: Trial DAG scheduling; memory synchronization; off-policy credit assignment for cross-episode rewards.

- Assumptions/dependencies: Engineering investment; correctness guarantees; monitoring for data races or inconsistent memories.

- Multimodal Meta-RL for embodied and vision-centric tasks

- Sectors: robotics, AR/VR, autonomous systems

- Use case: Extend in-context exploration/adaptation to settings with images/video/sensor input, enabling agents to learn exploration strategies from richer feedback.

- Tools/workflows: Multimodal reflection prompts; memory compression; curriculum over task difficulty (as in harder Sokoban/Minesweeper).

- Assumptions/dependencies: Large-context multimodal models; efficient memory management; standardized multimodal rewards.

- Low-resource, offline, self-improving assistants

- Sectors: consumer tech, public sector

- Use case: Assistants that adapt across attempts via reflection without gradient updates, suitable for devices with limited compute or intermittent connectivity (amortized test-time compute).

- Tools/workflows: Reflection-only memory profiles; small-model Meta-RL fine-tuning; pass@k scheduling under battery/network constraints.

- Assumptions/dependencies: Compact LLMs; robust local inference; careful exploration caps to avoid user-friction.
