- The paper introduces a teacher-free, self-resampling regime that simulates inference errors by degrading history frames during training.

- The methodology employs dynamic history routing with top-k selection to limit attention scope, reducing computational cost while preserving temporal coherence.

- Empirical results demonstrate improved temporal consistency and competitive visual quality compared to teacher-forced and distillation-based approaches.

End-to-End Teacher-Free Autoregressive Video Diffusion via Self-Resampling

Introduction

Autoregressive video diffusion models are a promising route to world simulation. They model sequential dynamics under strict temporal causality, which is essential for predictive modeling in domains such as interactive simulation, physical reasoning, and real-time content creation. Despite their strong representational capacity, autoregressive video generators suffer from exposure bias, a train-test discrepancy. During training they condition on ground-truth histories, but at inference they must condition on their own imperfect outputs. This mismatch causes errors to accumulate, which shows up as severe quality degradation in long-horizon video synthesis.

Recent post-training techniques, such as distillation from bidirectional teachers or adversarial training with discriminators, partially address exposure bias. However, they require external models and complicate scalable training. This work introduces a teacher-free, end-to-end alternative in which autoregressive video diffusion models are trained robustly from scratch with a novel self-resampling regime. The method simulates inference-time model errors by degrading history frames during training and conditions predictions on these imperfect contexts. In addition, a memory-efficient dynamic history routing mechanism restricts attention to the most relevant historical frames. This keeps the attention cost per generated frame nearly constant and makes long-video synthesis scalable.

Methodology

Error Simulation via Self-Resampling

Autoregressive video diffusion models factorize generation over frames, $p(x^{1:N} \mid c) = \prod_{i=1}^{N} p(x^{i} \mid x^{<i}, c)$, and synthesize each frame by reversing a stochastic noising process (intra-frame denoising). Conventional teacher forcing trains on perfect (ground-truth) histories. This leaves the model sensitive to its own errors, which then compound along the autoregressive loop.
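The exposure-bias argument can be made concrete with a toy Python sketch. This is not the paper's model: frames are scalars, and a hypothetical `toy_model` predicts the next frame from its history with a small fixed error. Under teacher forcing, each prediction conditions on the ground-truth prefix $x^{<i}$, so the error stays constant. In an inference-style rollout, each prediction conditions on the model's own outputs, so the error compounds.

```python
import numpy as np

def toy_model(history: np.ndarray, bias: float = 0.01) -> float:
    """Hypothetical next-frame predictor for scalar 'frames': assumes the true
    dynamics add 1.0 per frame and makes a fixed error of `bias` each step."""
    return float(history[-1] + 1.0 + bias)

def teacher_forced_errors(gt: np.ndarray) -> np.ndarray:
    # Training-style conditioning: frame i sees the ground-truth prefix x^{<i}.
    preds = np.array([toy_model(gt[:i]) for i in range(1, len(gt))])
    return np.abs(preds - gt[1:])

def rollout_errors(gt: np.ndarray) -> np.ndarray:
    # Inference-style conditioning: frame 0 is given, and every later frame
    # sees the model's own previous outputs.
    frames = [float(gt[0])]
    for _ in range(1, len(gt)):
        frames.append(toy_model(np.array(frames)))
    return np.abs(np.array(frames[1:]) - gt[1:])

gt = np.arange(10, dtype=float)  # ground-truth frames x^i = i
print(teacher_forced_errors(gt).round(3))  # stays at 0.01 for every frame
print(rollout_errors(gt).round(3))         # grows by 0.01 per frame
```

Real models make errors that vary in direction and magnitude rather than following a constant bias, but the compounding mechanism is the same.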

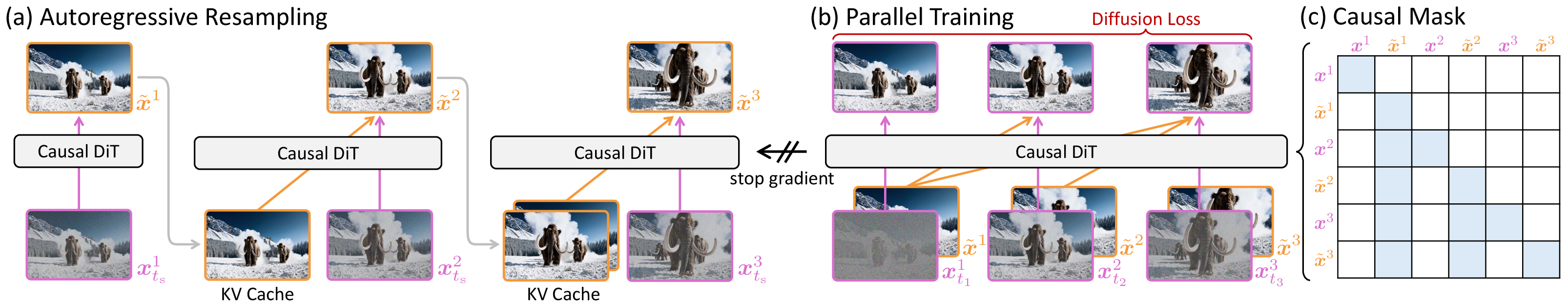

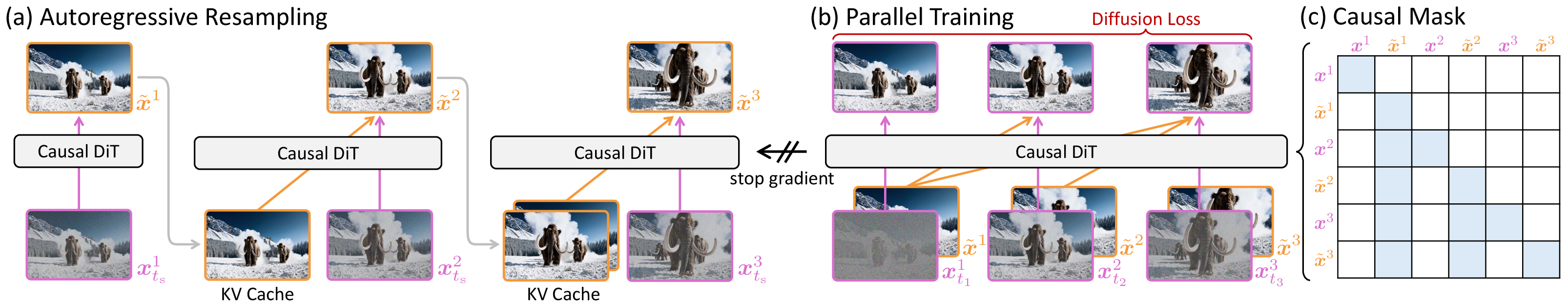

To mitigate this, the framework performs self-resampling:

- During training, ground-truth frames are corrupted at a randomly sampled denoising timestep $t_s$, which is drawn from a logit-normal distribution and then shifted to balance simulation strength.

- The current online model is used to complete denoising and produce a degraded frame, which replaces the history input for subsequent prediction.

- This autoregressive resampling is performed in parallel over the full sequence using sparse causal masks to enforce strict causality.

- The error simulation is detached from gradient backpropagation to prevent shortcut learning and ensure robustness against both intra-frame detail loss and inter-frame drift.
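The steps above can be sketched in NumPy. This is a minimal illustration that relies on several assumptions not taken from the paper:

- Corruption follows a rectified-flow convention, $x_t = (1 - t)\,x + t\,\epsilon$, for a clean frame $x$.
- The shift uses the mapping $t \mapsto s t / (1 + (s - 1) t)$, popularized by rectified-flow models such as Stable Diffusion 3.
- Denoising takes a few deterministic Euler steps.
- `toy_x0_predictor` is a hypothetical stand-in for the online model.

For readability, frames are resampled one at a time. The method itself batches this over the full sequence with causal masks.

```python
import numpy as np

def sample_ts(rng, n, mu=0.0, sigma=1.0, shift=1.0):
    """Logit-normal timesteps t = sigmoid(N(mu, sigma^2)), followed by an
    assumed shift mapping; shift > 1 pushes t toward 1 (stronger corruption)."""
    u = 1.0 / (1.0 + np.exp(-rng.normal(mu, sigma, size=n)))
    return shift * u / (1.0 + (shift - 1.0) * u)

def toy_x0_predictor(x_t, t, history):
    """Hypothetical stand-in for the online model's clean-frame estimate.
    Deliberately imperfect: blends the noisy input with the last history frame."""
    prev = history[-1] if len(history) else np.zeros_like(x_t)
    return (1.0 - t) * x_t + t * prev

def complete_denoising(x_clean, t_s, history, rng, num_steps=4):
    # Corrupt the ground-truth frame to level t_s (assumed convention
    # x_t = (1 - t) * x + t * eps), then let the model denoise it to t = 0.
    eps = rng.standard_normal(x_clean.shape)
    x_t = (1.0 - t_s) * x_clean + t_s * eps
    ts = np.linspace(t_s, 0.0, num_steps + 1)
    for t, t_next in zip(ts[:-1], ts[1:]):
        x0_hat = toy_x0_predictor(x_t, t, history)
        eps_hat = (x_t - (1.0 - t) * x0_hat) / max(t, 1e-8)
        x_t = (1.0 - t_next) * x0_hat + t_next * eps_hat  # Euler step
    return x_t

def self_resample_history(gt_frames, rng, shift=1.0):
    """Autoregressive self-resampling: frame i is degraded while conditioning
    on the already-degraded frames < i. In an autodiff framework this would
    run under no-grad / stop-gradient; NumPy has no autograd to detach."""
    t_s = sample_ts(rng, len(gt_frames), shift=shift)
    degraded = []
    for i, x_clean in enumerate(gt_frames):
        degraded.append(complete_denoising(x_clean, t_s[i], degraded, rng))
    return np.stack(degraded), t_s

rng = np.random.default_rng(0)
gt_frames = rng.standard_normal((8, 4, 4))  # 8 toy frames of 4x4 latents
history, t_s = self_resample_history(gt_frames, rng, shift=3.0)
print(history.shape, t_s.round(2))
```

One plausible reading of the stop-gradient is that, because the degraded history carries no gradient, the model cannot learn to shape its own resampled histories so they are easier to predict from. It can only learn to cope with them.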

Self-resampling lets the model learn to correct errors. The prediction targets remain the ground-truth frames, while the conditioning histories are error-perturbed. Training begins with a teacher-forcing warmup that stabilizes convergence and then switches to self-resampling.

Figure 1: Core architectural components of self-resampling: error simulation on histories, parallel frame-level diffusion training, and sparse causal masking for strict causality.
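The following is a minimal sketch of a sparse causal mask for parallel training. It assumes, without confirmation from the source, that the N degraded history frames and the N noisy target frames are concatenated into one sequence of 2N frames with T tokens each. Under this layout, noisy frame i may attend only to its own tokens and to history frames with index below i. This means no target sees itself or any future frame through the history. The function name is hypothetical.

```python
import numpy as np

def sparse_causal_mask(num_frames: int, tokens_per_frame: int) -> np.ndarray:
    """Boolean attention mask (True = may attend) for an assumed training
    layout [history_0..history_{N-1}, noisy_0..noisy_{N-1}], T tokens per frame."""
    N, T = num_frames, tokens_per_frame
    frame_mask = np.zeros((2 * N, 2 * N), dtype=bool)
    for j in range(N):
        # History frame j: causal attention over history frames 0..j.
        frame_mask[j, : j + 1] = True
    for i in range(N):
        q = N + i
        frame_mask[q, :i] = True  # noisy frame i sees history frames < i only
        frame_mask[q, q] = True   # ...and itself (full attention within the frame)
    # Expand every frame-level entry into a T x T block of token entries.
    return frame_mask.repeat(T, axis=0).repeat(T, axis=1)

mask = sparse_causal_mask(num_frames=3, tokens_per_frame=2)
print(mask.astype(int))
```

Because the whole sequence is processed in one pass under this mask, every frame's diffusion loss can be computed in parallel while strict causality holds for each prediction.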

Efficient History Routing

During autoregressive rollout, the cost of attending to the history grows linearly with the number of generated frames. To reduce this cost, dense causal attention is replaced or augmented with dynamic history routing:

- For each query token, a top-k selection mechanism computes relevance scores (dot product between query and mean-pooled key descriptors) and restricts attention to the most pertinent history frames.

- Routing operates in a head-wise and token-wise fashion to maintain a high effective receptive field.

- The implementation computes the intra-frame attention branch and the routed sparse inter-frame branch separately, fuses them via log-sum-exp aggregation, and uses FlashAttention for memory efficiency.
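The routing and fusion steps can be illustrated for a single query token in a single head with a NumPy sketch:

1. Each history frame is scored by the dot product between the query and that frame's mean-pooled keys.
2. The top-k frames are kept.
3. The intra-frame and routed inter-frame branches are attended separately.
4. The two results are merged using their log-sum-exp values.

Function names are hypothetical. Plain NumPy stands in for the fused FlashAttention kernels the implementation uses.

```python
import numpy as np

def attend(q, k, v):
    """Single-query softmax attention over keys k (n, d) and values v (n, d).
    Returns the output (d,) and the log-sum-exp of the scaled logits."""
    logits = k @ q / np.sqrt(q.shape[-1])
    m = logits.max()
    w = np.exp(logits - m)
    return (w @ v) / w.sum(), m + np.log(w.sum())

def merge_lse(o1, lse1, o2, lse2):
    """Merge two attention results computed over disjoint key sets."""
    lse = np.logaddexp(lse1, lse2)
    return np.exp(lse1 - lse) * o1 + np.exp(lse2 - lse) * o2

def routed_attention(q, cur_k, cur_v, hist_k, hist_v, top_k):
    """q: (d,) query; cur_k, cur_v: (T, d) current frame;
    hist_k, hist_v: (F, T, d) history frames. Returns (output, selected frames)."""
    d = q.shape[-1]
    # Intra-frame branch: the query always attends to its own frame.
    o_intra, lse_intra = attend(q, cur_k, cur_v)
    top_k = min(top_k, hist_k.shape[0])
    if top_k == 0:
        return o_intra, np.array([], dtype=int)
    # Relevance of each history frame: q . mean-pooled keys of that frame.
    scores = hist_k.mean(axis=1) @ q
    selected = np.argsort(-scores)[:top_k]
    # Routed inter-frame branch over the tokens of the selected frames only.
    o_inter, lse_inter = attend(q, hist_k[selected].reshape(-1, d),
                                hist_v[selected].reshape(-1, d))
    return merge_lse(o_intra, lse_intra, o_inter, lse_inter), selected

rng = np.random.default_rng(0)
d, T, F = 8, 4, 20
q = rng.standard_normal(d)
cur_k, cur_v = rng.standard_normal((T, d)), rng.standard_normal((T, d))
hist_k, hist_v = rng.standard_normal((F, T, d)), rng.standard_normal((F, T, d))
out, selected = routed_attention(q, cur_k, cur_v, hist_k, hist_v, top_k=5)
print(sorted(selected.tolist()), out.shape)
```

The two key sets are disjoint. As a result, the log-sum-exp merge reproduces ordinary softmax attention over the current frame plus the selected frames, so each branch can run as a separate attention call. In a multi-head model, each head and each query would make its own selection, matching the head-wise and token-wise routing described above.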

This approach achieves substantial sparsity (for example, 75% when the top 5 of 20 history frames are selected) without notable quality loss. It also preserves global temporal consistency better than sliding-window attention, especially in minute-scale generation.
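The sparsity figure follows from simple counting: selecting k of F history frames skips a fraction 1 - k/F of them, so 5 of 20 gives 75%. The short sketch below uses a hypothetical tokens-per-frame value. It shows why routed attention keeps the number of keys per query bounded as the history grows, whereas dense causal attention grows linearly.

```python
from typing import Optional

def routing_sparsity(top_k: int, num_history_frames: int) -> float:
    """Fraction of history frames that top-k routing skips for one query."""
    return 1.0 - top_k / num_history_frames

def keys_per_query(history_frames: int, tokens_per_frame: int,
                   top_k: Optional[int] = None) -> int:
    """Keys one query attends to: its own frame plus either every history
    frame (dense causal attention, top_k=None) or only the top_k routed ones."""
    attended = history_frames if top_k is None else min(top_k, history_frames)
    return (attended + 1) * tokens_per_frame

print(routing_sparsity(5, 20))  # 0.75

TOKENS_PER_FRAME = 1000  # hypothetical number of latent tokens per frame
for history in (20, 100, 400):
    dense = keys_per_query(history, TOKENS_PER_FRAME)
    routed = keys_per_query(history, TOKENS_PER_FRAME, top_k=5)
    print(f"history={history:4d}  dense keys={dense:7d}  routed keys={routed:5d}")
```

Routing still scores every history frame. However, that costs one d-dimensional dot product per frame descriptor rather than one per token, which is why the overall cost is better described as nearly constant than as constant.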

Empirical Results

Comparative Analysis

The method is benchmarked against recent autoregressive video generation systems, including strict-causal and relaxed-causal baselines as well as distillation-based post-training methods:

- Visual inspection shows reduced artifact accumulation and preserved temporal semantics under strict autoregressive rollout.

- Quantitative evaluation on VBench metrics (temporal quality, visual quality, and text alignment) shows that the method achieves superior temporal consistency and competitive visual and text scores across all segments of the 15-second videos. It outperforms teacher-forced and distillation-based models of similar or larger scale.

Notably, models that rely on bidirectional teacher distillation (e.g., LongLive, Self Forcing) violate strict causality in physical reasoning cases such as milk pouring, which is attributable to temporal leakage during distillation. In contrast, the self-resampling framework maintains monotonic semantic progressions that respect physical laws.

Ablation and Analysis

The ablation studies show that:

- Autoregressive self-resampling yields higher generation quality than parallel resampling or naive noise augmentation.

- Timestep shifting parameters regulate the robustness-quality trade-off; moderate values optimally suppress error accumulation without incurring content drift.

- Dynamic routing yields attention patterns combining sliding-window and attention-sink characteristics, distributing context selection adaptively over frame history according to content requirements.

Further experiments show that performance degrades only negligibly as top-k routing sparsity increases and that the model remains robust when the history context is reduced. Both properties are crucial for scaling generative video models under computational constraints.

Implications and Future Directions

The teacher-free self-resampling approach provides a scalable solution to the exposure bias problem in autoregressive video diffusion. It achieves:

- End-to-end training from scratch, without external teachers or discriminators.

- Strict adherence to causal dependencies, suitable for downstream tasks that require faithful prediction of future physical states and interactive synthesis.

- Scalable long-horizon video generation with efficient memory design via content-based dynamic routing.

Current limitations include inference latency due to multi-step denoising and dual sequence processing requirements. These may be alleviated by integrating accelerated solvers, one-step distillation, or architectural streamlining inspired by recent adversarial post-training.

Theoretically, the approach advances unified training strategies for autoregressive generative models under imperfect histories. Practically, it opens the door for minute-scale or real-time interactive video synthesis on resource-constrained hardware. Future prospects include combining teacher-free self-resampling with multi-agent world modeling, advanced hardware-aligned sparse attention, and interactive video editing applications.

Conclusion

This work presents a robust, teacher-free autoregressive video diffusion model that simulates the train-test mismatch via self-resampling and uses dynamic history routing for scalable attention. The method achieves strong temporal consistency, visual quality comparable to the best distillation baselines, and the strict causality needed for predictive simulation. It provides a practical foundation for next-generation video generative modeling in research and industry.

Reference: "End-to-End Training for Autoregressive Video Diffusion via Self-Resampling" (2512.15702)