- The paper's main contribution is the DynamicGen pipeline that integrates metric reconstruction, multi-object recovery, and hierarchical captioning for accurate 4D world modeling.

- It reports superior performance in video depth, camera pose, and segmentation tasks, outperforming baselines on datasets like Sintel and TUM-dynamics.

- The framework bridges the synthetic-real gap with scalable in-the-wild data, driving advancements in embodied AI and 4D vision-language models.

DynamicVerse: A Physically-Aware Multimodal Framework for 4D World Modeling

Motivation and Problem Statement

Scaling physically-grounded, multi-modal 4D scene understanding remains a persistent bottleneck for embodied perception, vision-language modeling, and physically-realistic digital twins. The absence of large, truly “world-scale,” semantically-rich 4D datasets with metric geometry, motion, and detailed captions constrains the development of generalist AI models capable of operating on dynamic, real-world video. Most existing datasets are constrained to controlled settings (e.g., indoor, driving), synthetic content, or lack critical annotations (such as metric depth, camera intrinsics/extrinsics, or detailed language grounding). DynamicVerse addresses this gap by introducing an automated scalable pipeline—DynamicGen—for extracting holistic 4D multi-modal representations from in-the-wild monocular video while delivering a large-scale, richly annotated benchmark dataset.

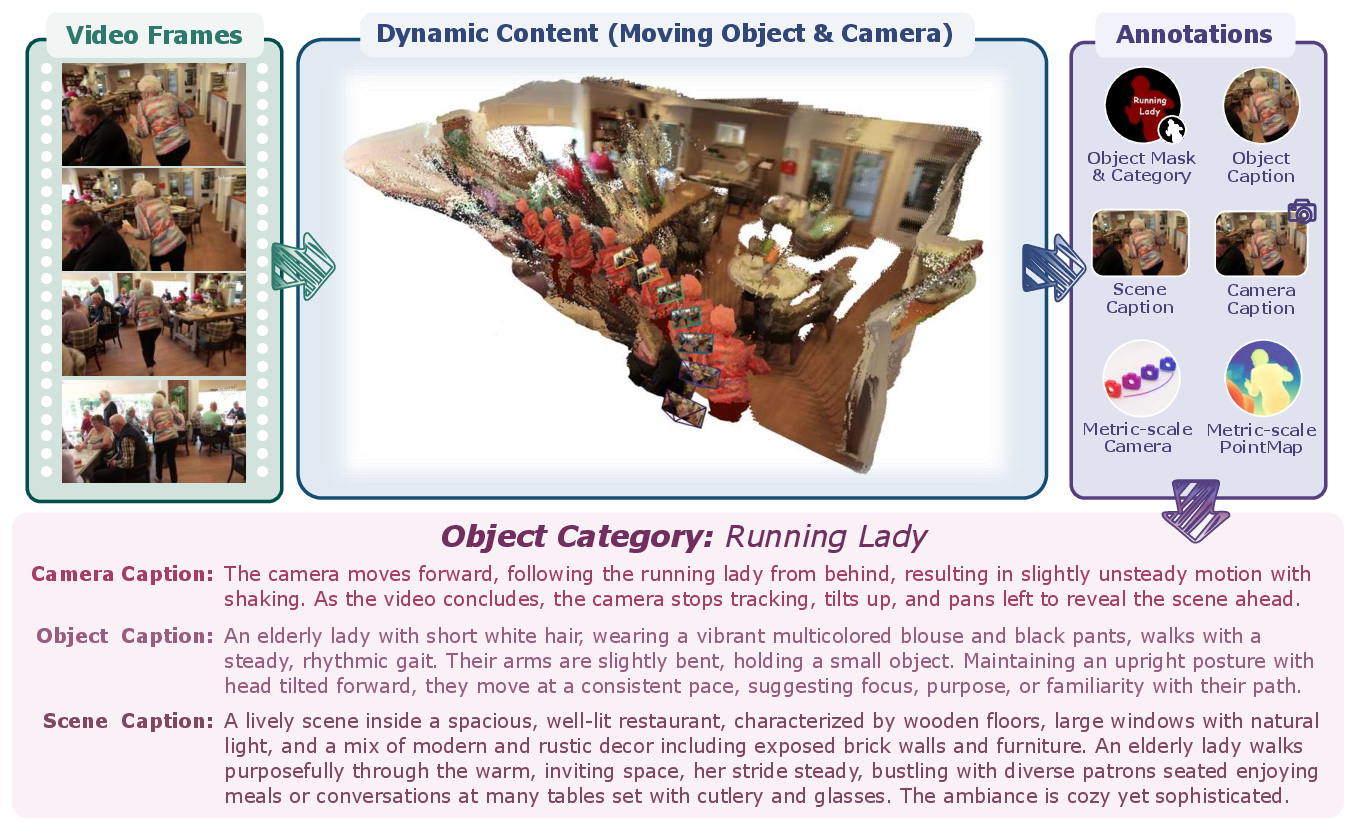

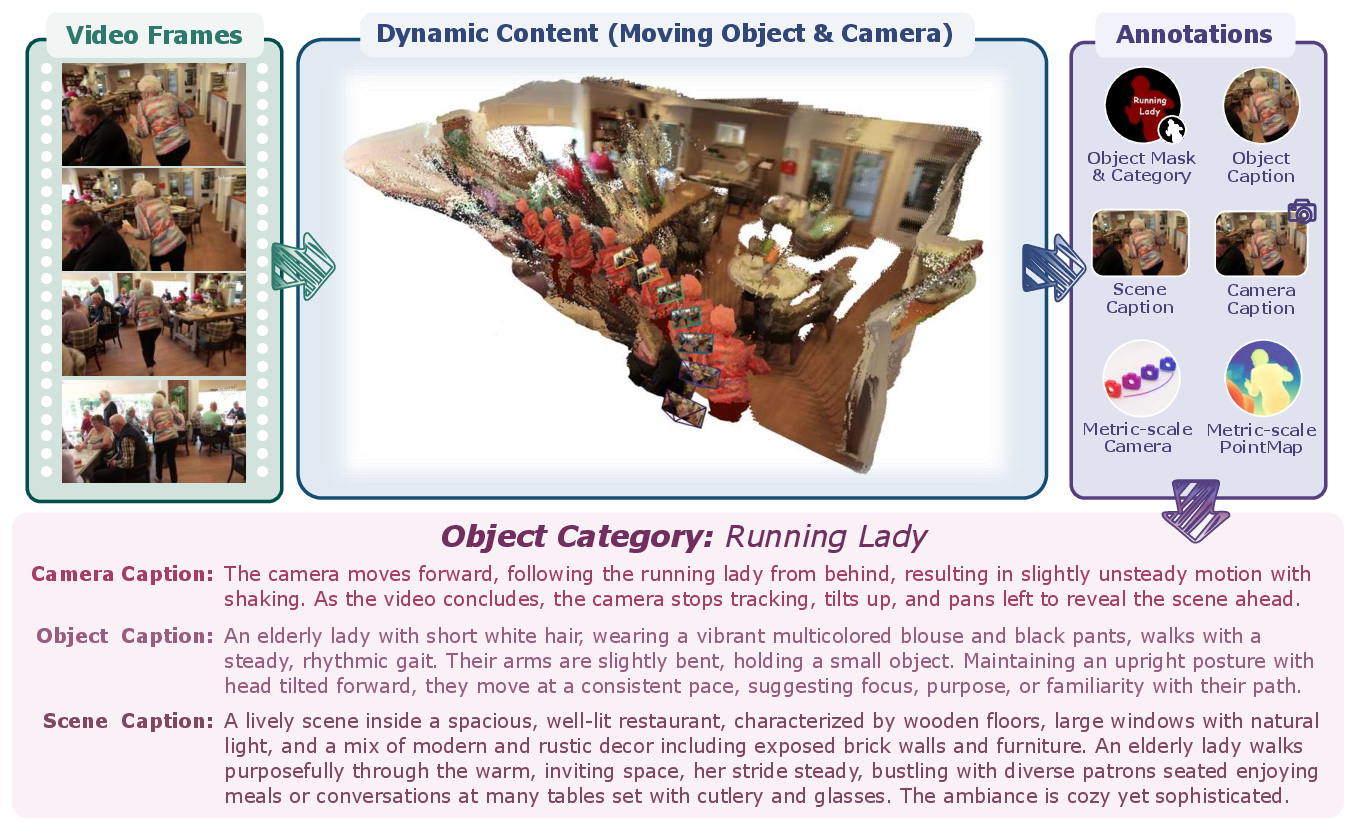

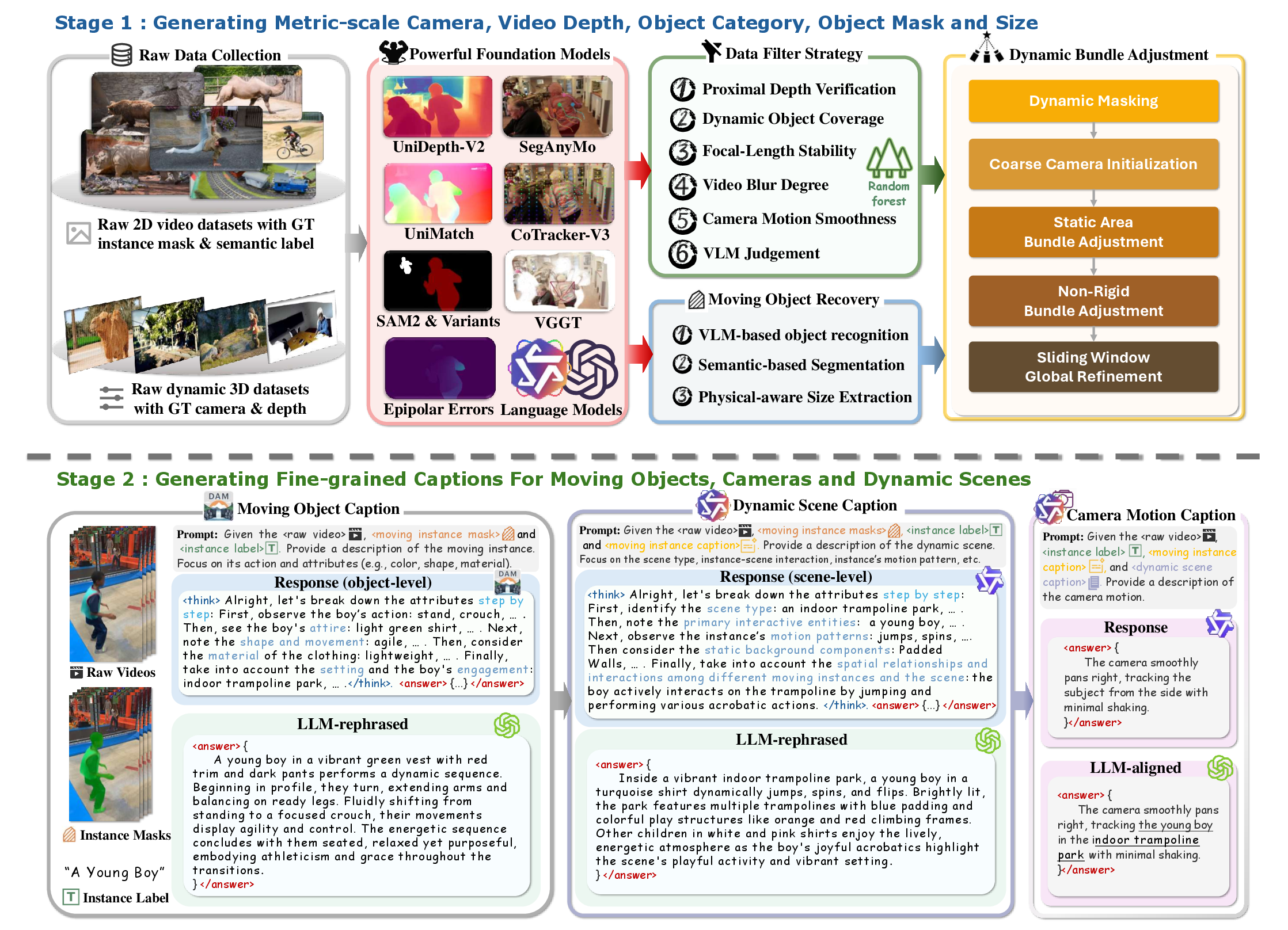

Figure 1: The overview of physically-aware multi-modal world modeling framework DynamicVerse.

DynamicVerse Framework: Data Generation and Curation

DynamicGen, the core pipeline of DynamicVerse, operationalizes a physically-aware 4D world modeling system by combining metric reconstruction, dense multi-object recovery, and hierarchical semantic captioning.

Data Sources and Scale:

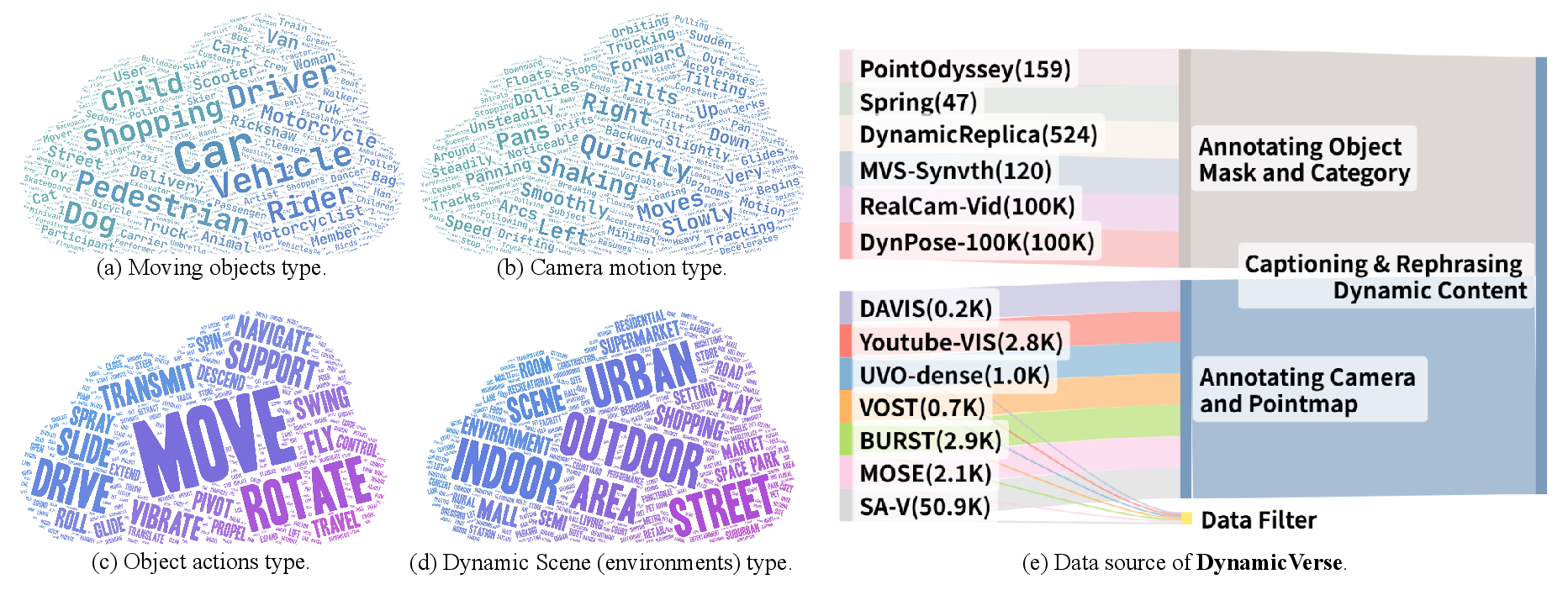

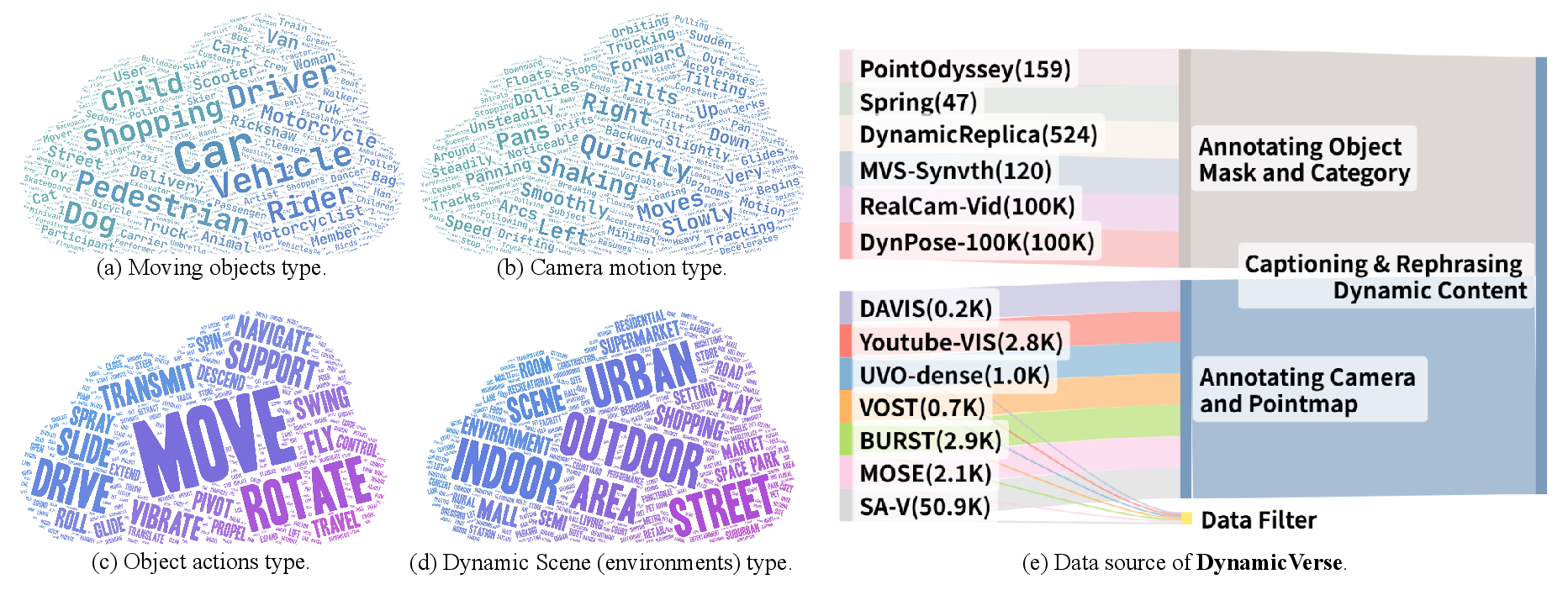

DynamicVerse aggregates over 100K web-scale and synthetic 4D video sequences, yielding 800K+ annotated instance masks and 10M+ frames. The diverse sources ensure broad scene and motion coverage and facilitate generalization for downstream foundation models.

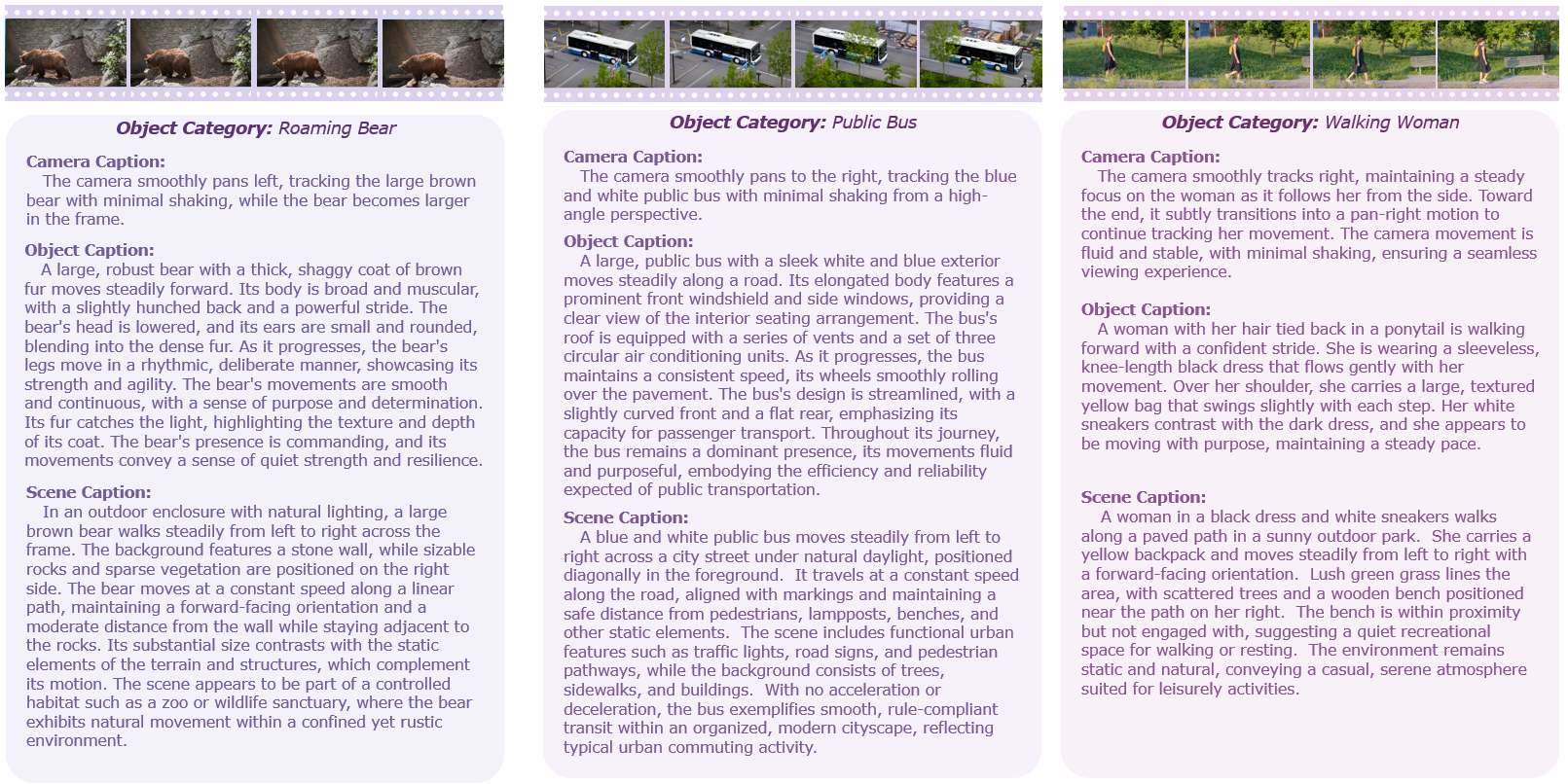

Figure 2: The statistics and data source of DynamicVerse.

DynamicGen Pipeline Structure:

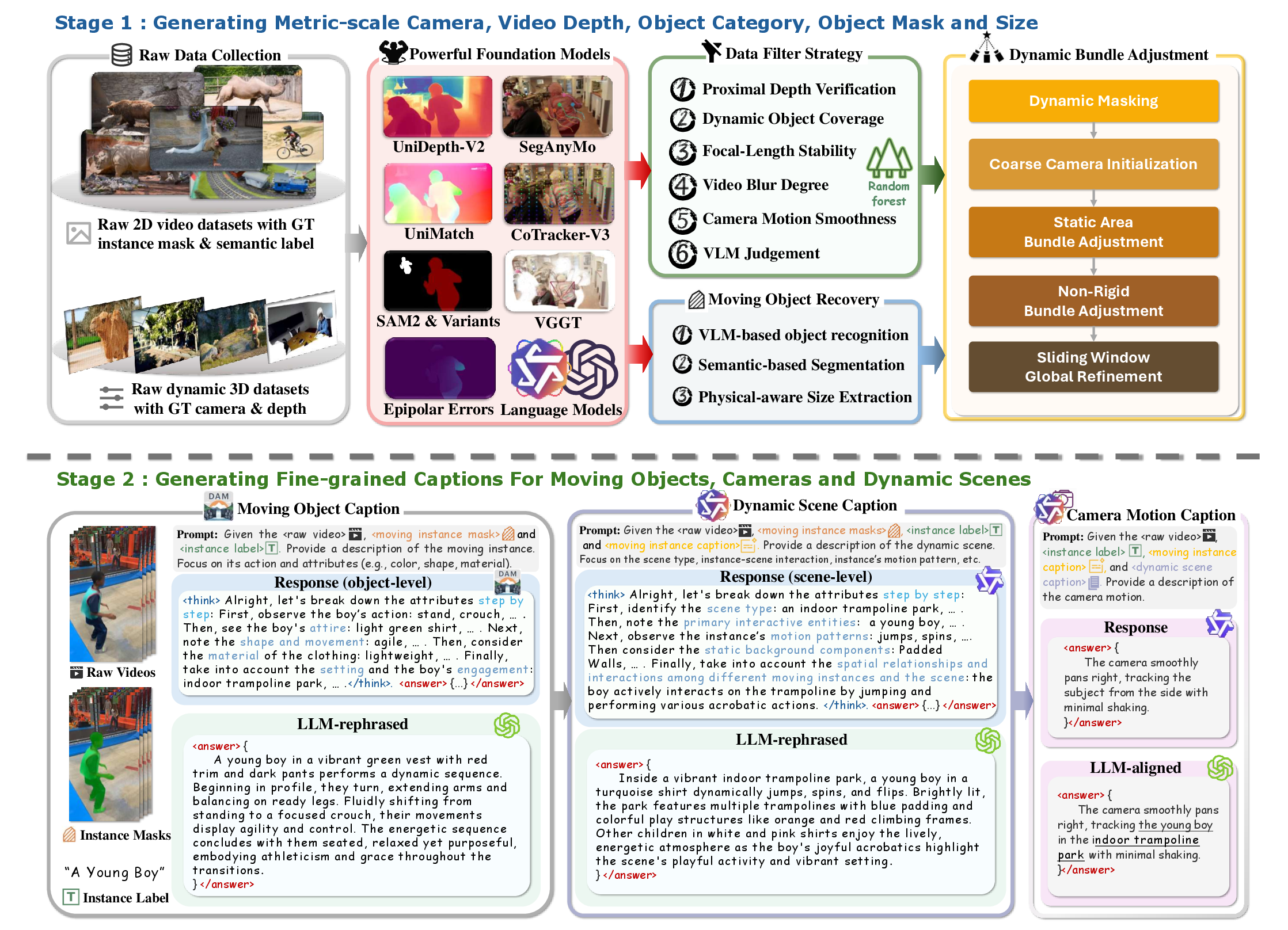

- Video Curation and Filtering: Extensive filtering is required to ensure data utility. DynamicGen trains a Random Forest video quality predictor on metrics such as depth statistics, blur, focal stability, and camera motion. VLM-based categorical exclusion further refines the curated pool.

- Foundation Model-Annotated Masks and Semantics: Qwen2.5-VL and SA2VA are used in tandem: Qwen2.5-VL proposes object categories and tracks dynamic actors, which are then tightly segmented by SA2VA. The system incorporates per-frame metric-scale depth via UniDepthv2, as well as geometric and tracking features via CoTracker3 and UniMatch.

- Physically-Consistent 4D Geometry Recovery: DynamicGen employs a multi-stage bundle adjustment strategy, segmenting static and non-rigid dynamic points, aligning depth, pose, and scene geometry with global optimization. Unique to this approach is the use of hierarchical dynamic masking and sliding-window flow-based refinement, which ensures both local and global consistency across diverse video content.

- Hierarchical Captioning:

Grounded captions are generated at three semantic levels: (i) object (via DAM), (ii) scene (via structured LLM prompting to Qwen2.5-VL), and (iii) camera motion (yielding trajectory-level descriptions via specialized VLMs). Chain-of-Thought (CoT) LLM prompting and iterative human-in-the-loop review ensure fluency, relevance, and detail.

Figure 3: The physically-aware multi-modal 4D data generation pipeline DynamicGen.

Benchmarks and Results

DynamicVerse establishes new state-of-the-art results on classic physically-grounded video tasks: depth estimation, camera pose estimation, and camera intrinsics estimation.

Video Depth Estimation

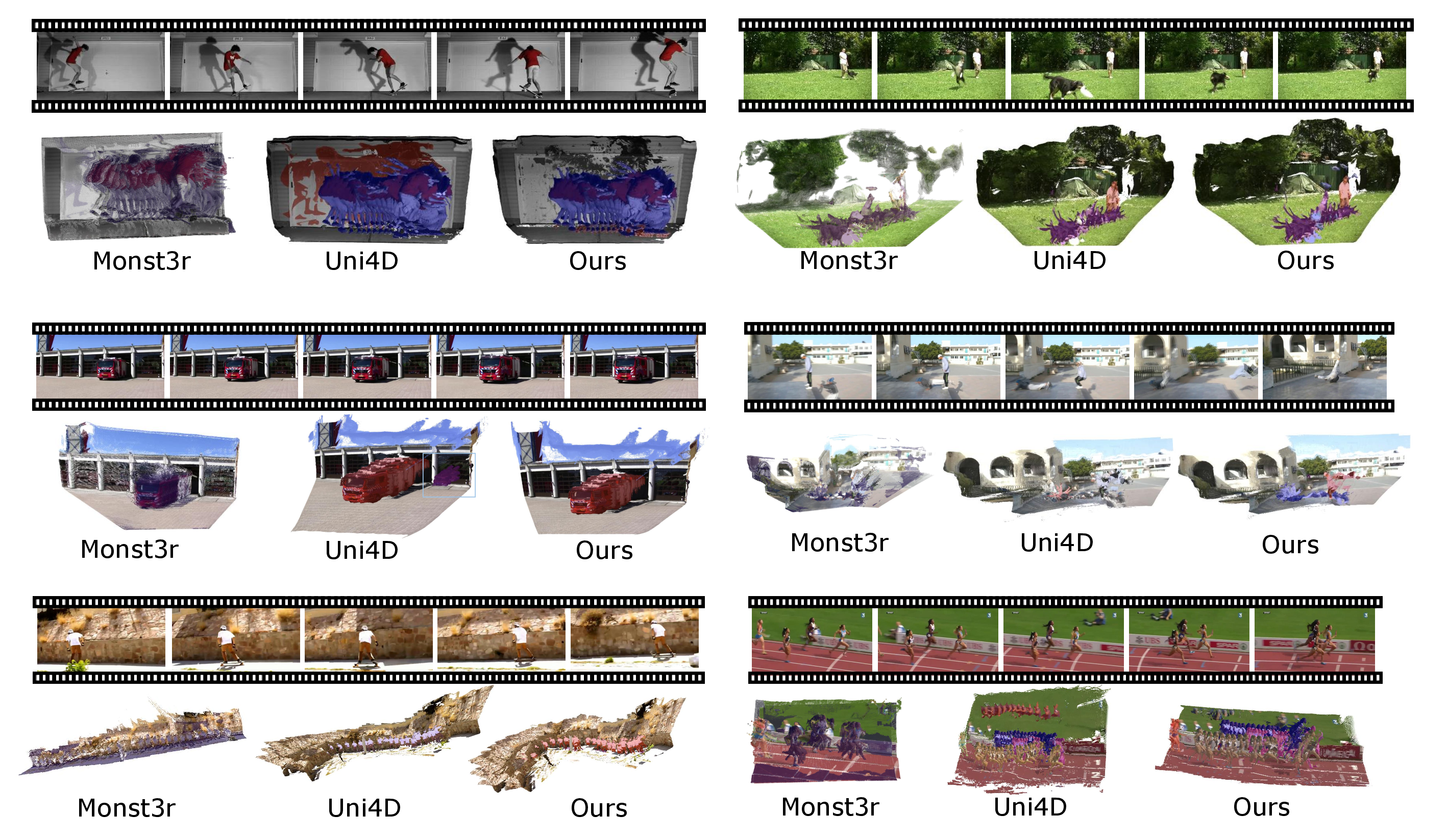

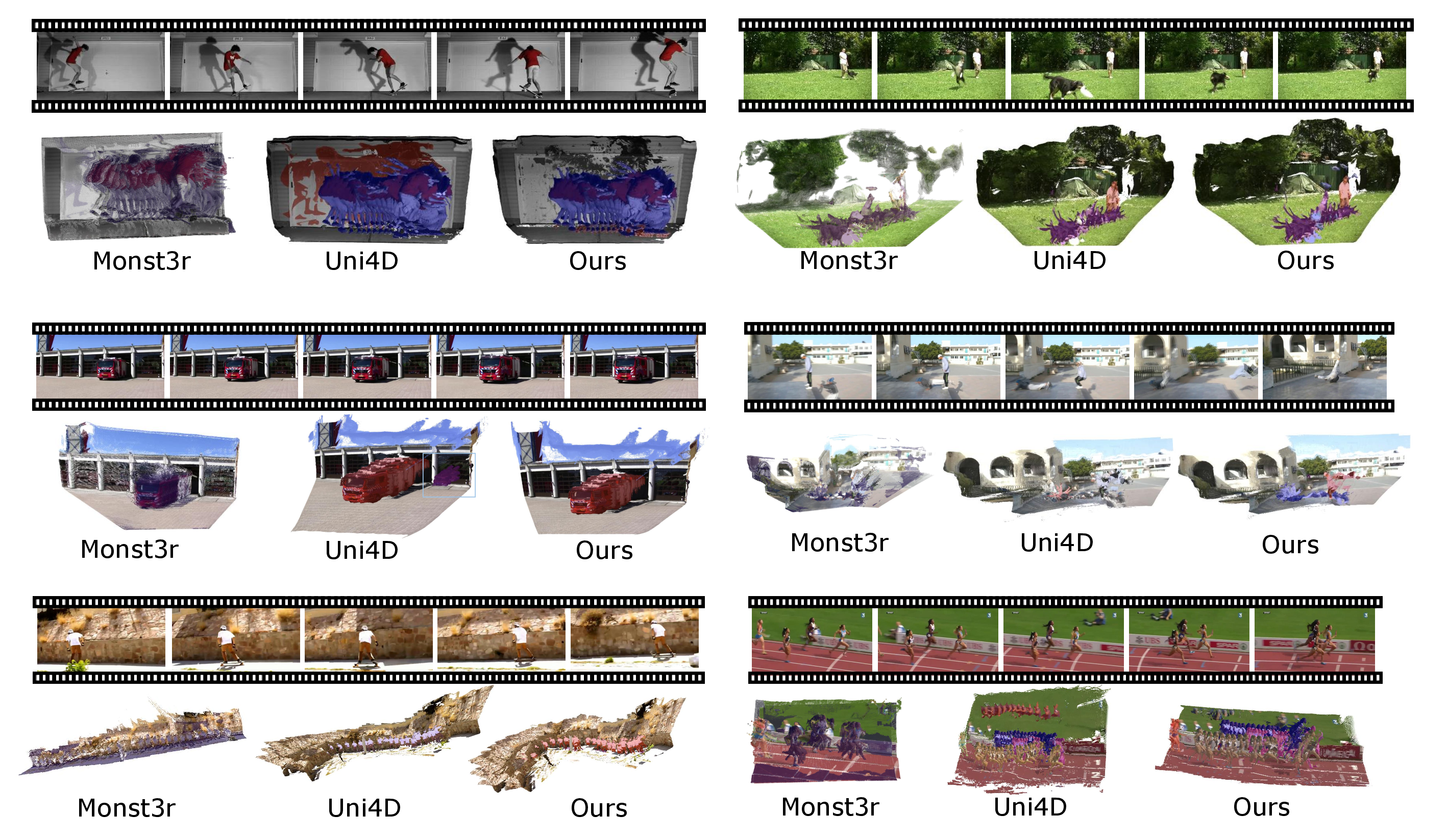

DynamicGen demonstrates superior performance versus strong baselines (e.g., MonST3R, Uni4D, Depth-Pro, Metric3D, DepthCrafter) on both Sintel and KITTI. On Sintel, it achieves an absolute relative error of 0.205 and δ1.25 of 72.9, outperforming all alternatives in both absolute and geometric consistency metrics. Qualitatively, reconstructions are denser, less noisy, and more accurately capture dynamic object geometry compared to prior methods.

Figure 4: Visual comparisons of 4D reconstruction on in-the-wild data.

Camera Pose and Intrinsics Estimation

DynamicGen sets a new standard across absolute trajectory error (ATE), relative translation, and rotation errors on both synthetic (Sintel) and real-world (TUM-dynamics) video. On Sintel, it yields ATE of 0.108 and RPE (rotation) of 0.282, outperforming both classic and learning-based approaches such as DPVO, LEAP-VO, Robust-CVD, and MonST3R. For focal length/imaging intrinsics, DynamicGen exhibits lower absolute and relative error compared to strong single-image and multi-frame methods (e.g., UniDepth, Dust3r).

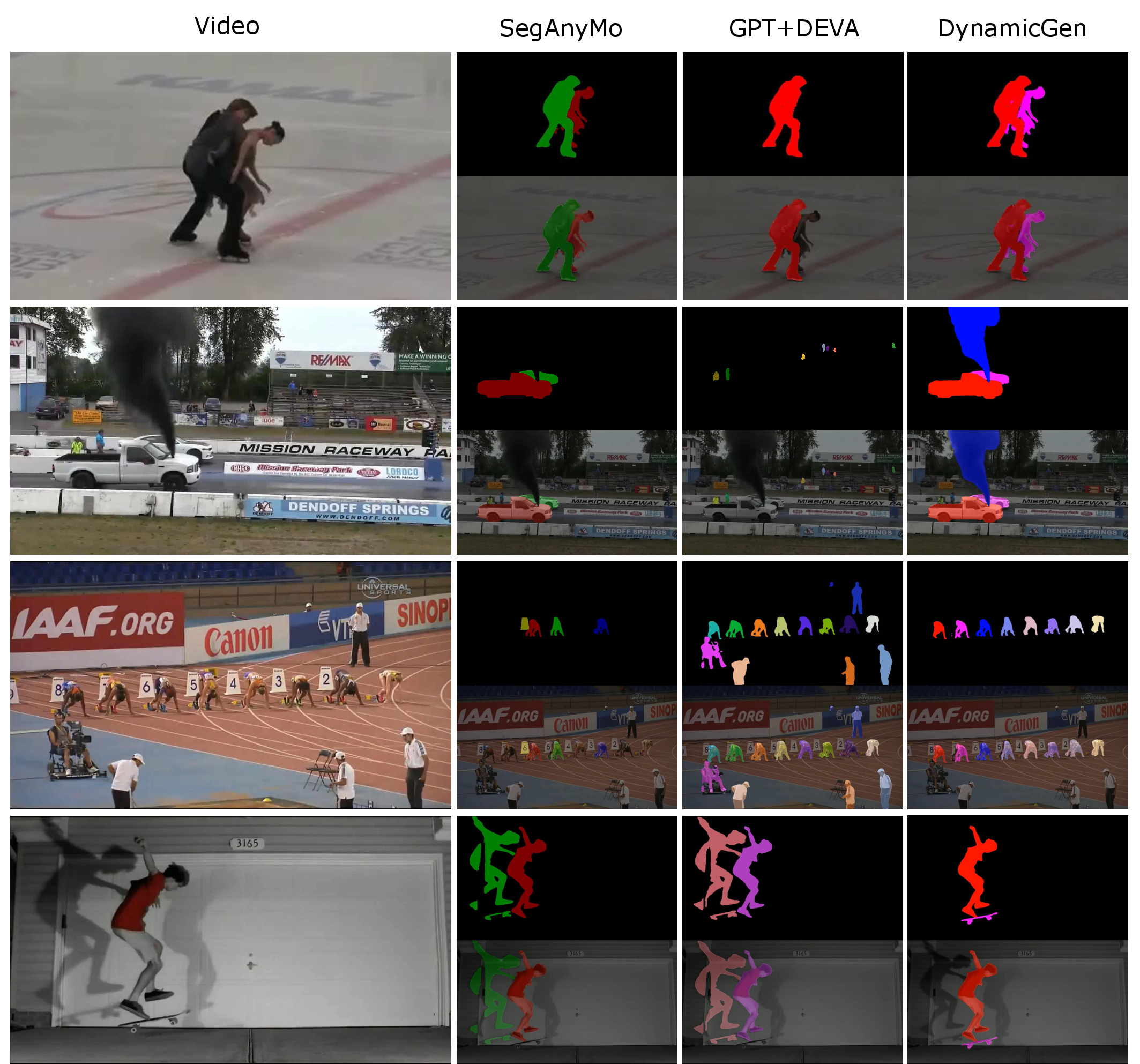

Physically-Aware Segmentation

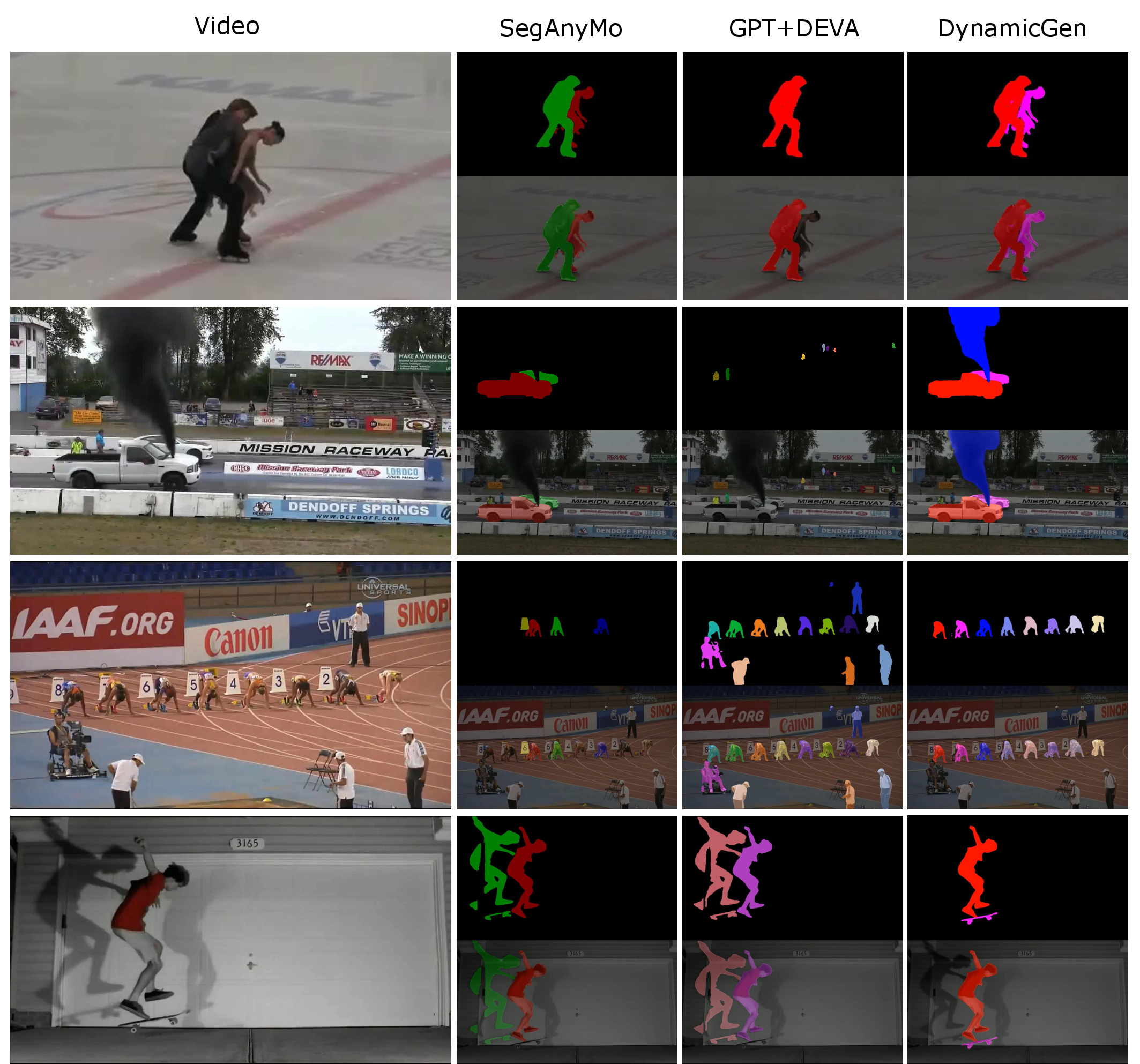

Leveraging both 2D and geometric priors, DynamicVerse delivers improved moving object segmentation, showcasing robust, interpretable masks.

Figure 5: Qualitative Results of Moving Object Segmentation on Youtube-VIS, indicating superior instance mask precision compared to prior work.

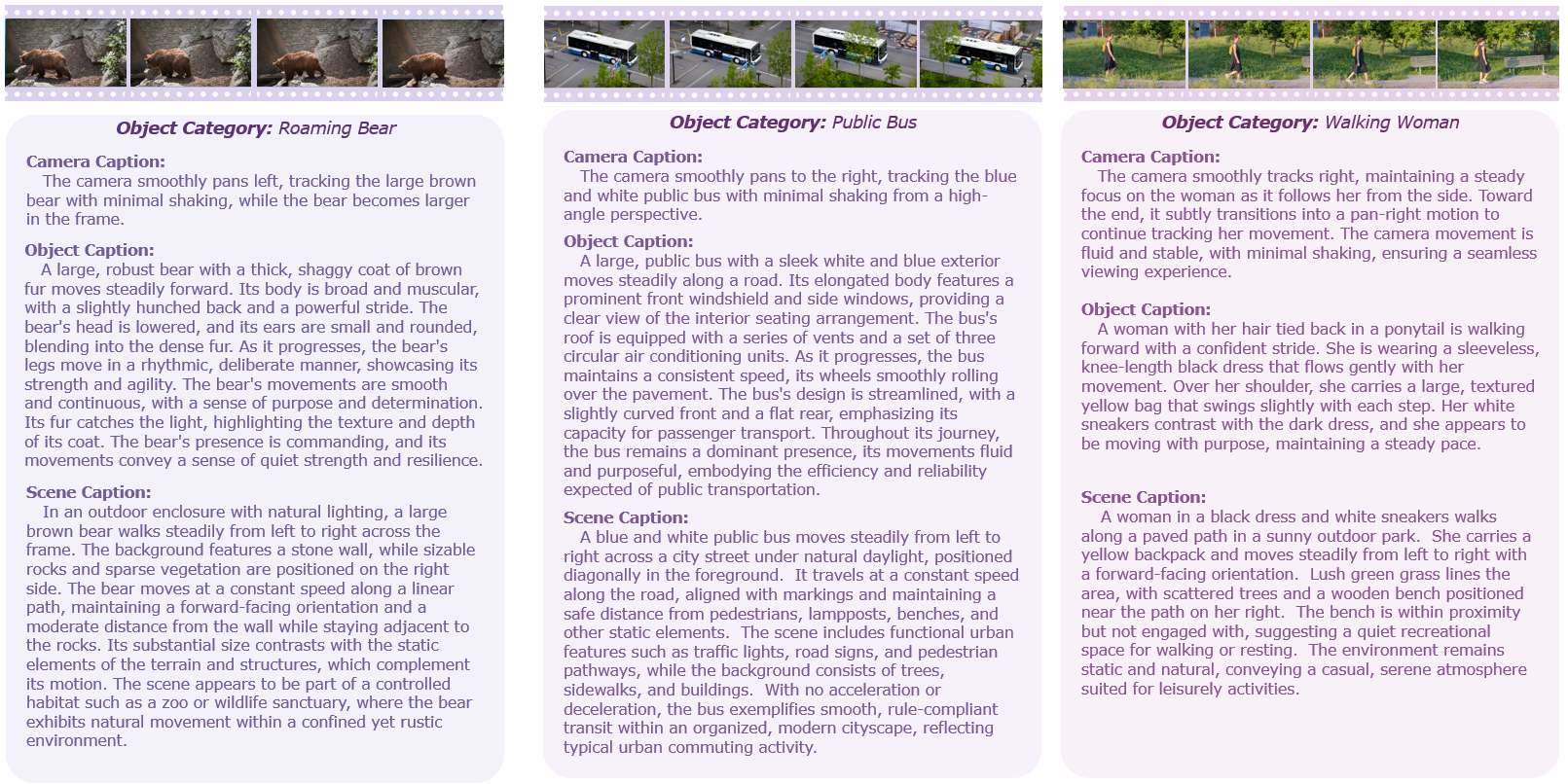

Hierarchical Captioning

In both human and LLM-as-Judge evaluations (via G-VEval), captions generated by DynamicGen are more accurate, relevant, complete, and concise compared to direct vision-language outputs or prior datasets. Heavy use of hierarchical prompting, per-object grounding, and CoT-based refinement results in highly actionable, contextually nuanced semantic annotations.

Figure 6: Examples captions on DAVIS dataset, demonstrating object, scene, and camera motion caption granularity.

Practical and Theoretical Implications

DynamicVerse’s scalable and physically-aware methodology has several critical implications:

Limitations and Future Directions

Despite substantial progress, DynamicVerse inherits several limitations:

- Data Bias and Noise: In-the-wild video data still introduces annotation noise and class imbalance, which may bias learning and impair generalization to tail scenarios.

- Computational Overheads: Processing remains costly due to reliance on large foundation models and multi-stage optimization, which limits real-time or resource-constrained deployment.

- Coverage Limits: Even with its scale, DynamicVerse cannot exhaustively model all real-world dynamics or scene structures, especially in extreme occlusion or highly non-rigid scenarios.

Future work should address domain-specific generalization, more efficient annotation by leveraging accompanying sensor data where available, and integration with reinforcement-learning-based embodied agents for closed-loop evaluation and data augmentation.

Conclusion

DynamicVerse, through its DynamicGen pipeline, delivers both a novel methodology for physically-aware 4D multi-modal data extraction and a substantive benchmark dataset. Experimental evidence confirms state-of-the-art performance in core spatial-temporal vision tasks and establishes a scalable framework for holistic 4D understanding. The generality and annotation richness of DynamicVerse make it a critical resource for 4D scene understanding, driving broader advances in foundation models, embodied intelligence, and language-grounded scene reasoning.

Figure 8: DynamicVerse dataset, highlighting annotation density and multimodal diversity.