- The paper demonstrates that structured prompting improves model evaluation by raising accuracy (+4% on average) and reducing output variability.

- It uses DSPy to generate multiple prompt variants, revealing prompt-induced confounding that reverses rankings on benchmark leaderboards.

- Cost-effectiveness analysis identifies Zero-Shot CoT as the most cost-effective strategy, capturing most of the accuracy gain with minimal token overhead.

Structured Prompting for Robust Holistic Evaluation of LLMs

Introduction

The paper "Structured Prompting Enables More Robust, Holistic Evaluation of LLMs" (2511.20836) presents a rigorous empirical evaluation of structured prompting techniques within the HELM framework, quantifying their effects on evaluation robustness for state-of-the-art LLMs. By integrating declarative and automatically optimized prompts via DSPy, the authors address two deficiencies of conventional fixed-prompt leaderboard benchmarks: the underestimation of performance ceilings and prompt-induced confounding in model comparisons.

Methodological Framework

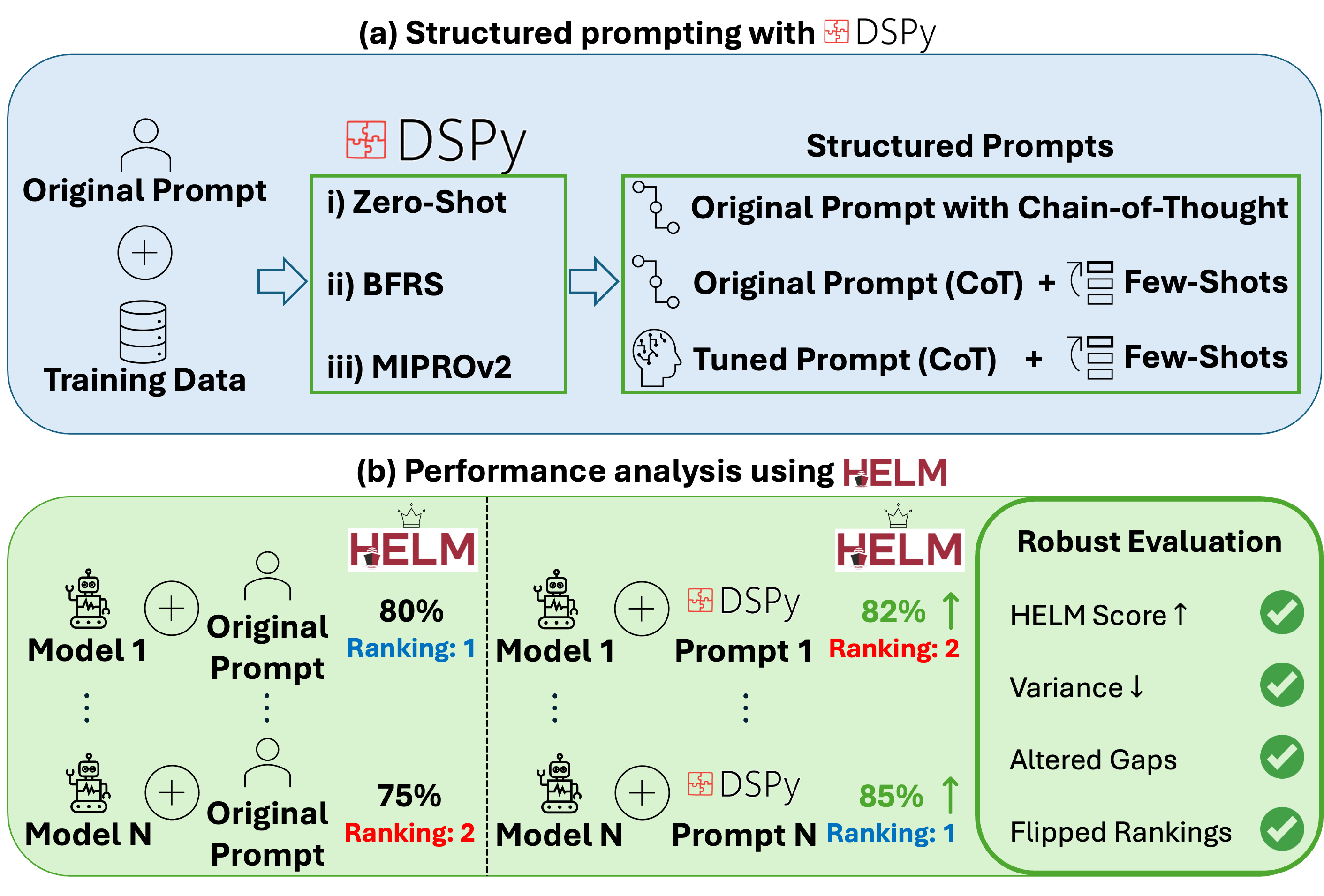

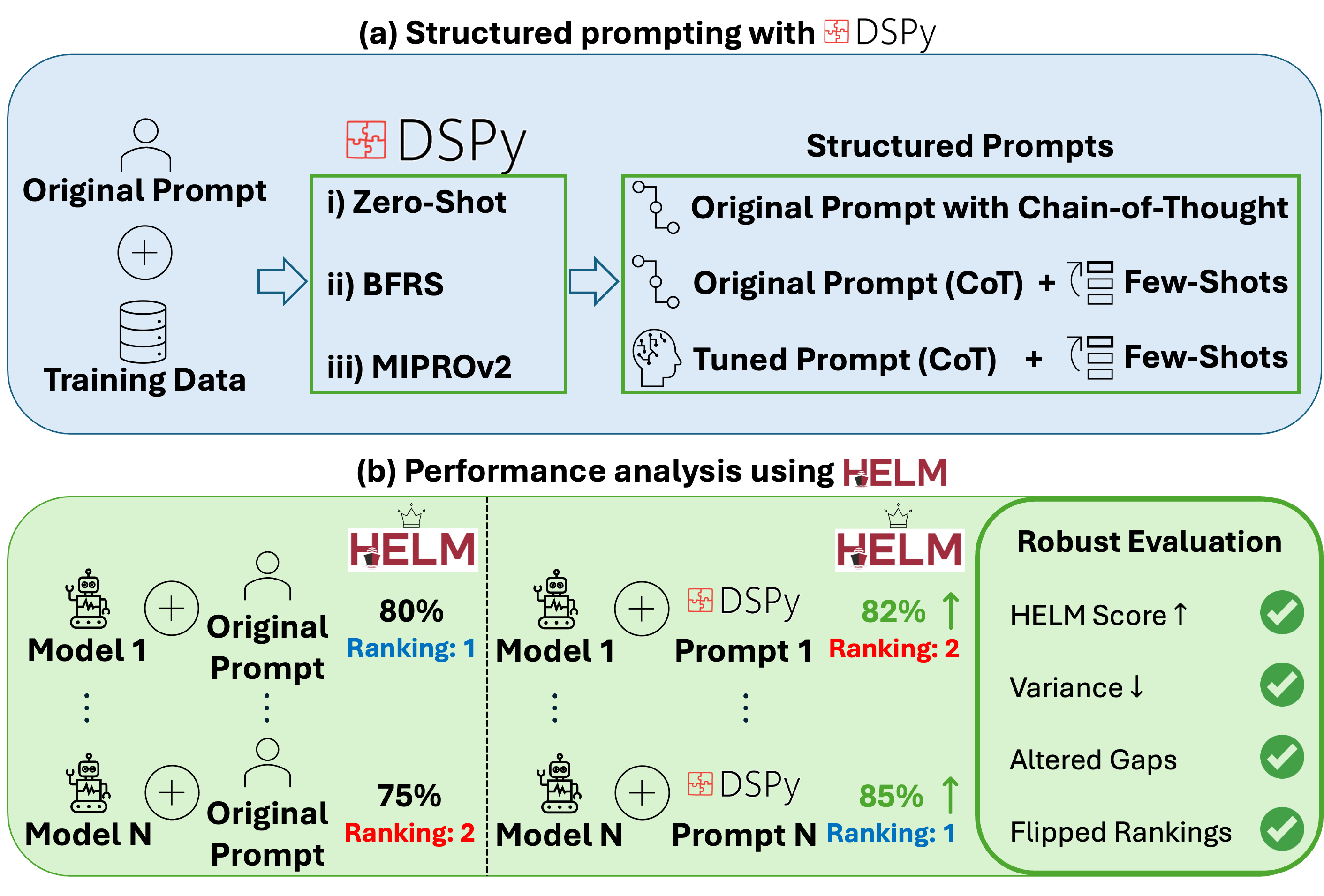

The experimental pipeline leverages DSPy to systematically produce structured prompt variants from HELM's baseline configuration and evaluates model responses under each variant (Figure 1). The paper benchmarks four frontier LMs (Claude 3.7 Sonnet, Gemini 2.0 Flash, GPT 4o, o3 Mini) across seven diverse tasks spanning both general and medical domains. The prompting strategies encompass HELM Baseline, DSPy's Zero-Shot Predict, Zero-Shot Chain-of-Thought (CoT), Bootstrap Few-Shot with Random Search (BFRS), and the MIPROv2 joint optimizer. The latter two utilize explicit prompt optimization to select instructions and in-context demonstrations.

Figure 1: DSPy produces prompt variants from the HELM baseline, enabling multi-method evaluation that exposes shifts in accuracy and in ranking gaps.

Benchmarks include MMLU-Pro, GPQA, and GSM8K (general reasoning and QA), alongside MedCalc-Bench, Medec, HeadQA, and MedBullets (medical reasoning, error detection, and board-style QA), exposing the models to a spectrum of reasoning, calculation, and classification demands.

Structured Prompting: Effects on Evaluation Metrics

Across all benchmarks and models, the introduction of structured prompting via CoT and prompt optimizers yields an average absolute accuracy improvement of +4% over the HELM baseline. Non-reasoning models benefit the most (+5%), while o3 Mini gains less (+2%), which is attributed to its native reasoning capability. Structured prompting also reduces the standard deviation of scores across benchmarks by approximately 2%, indicating diminished prompt sensitivity and improved evaluation stability.
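The two summary statistics in this paragraph (mean accuracy change and change in spread across benchmarks) can be computed as in the minimal sketch below. The scores are hypothetical placeholders, not values from the paper, and the use of the population standard deviation is an assumption, since the summary does not say which estimator the authors use.

```python
from statistics import mean, pstdev

# Hypothetical per-benchmark accuracies (%) for one model; NOT values from the paper.
baseline = {"MMLU-Pro": 70.0, "GPQA": 50.0, "GSM8K": 90.0}
structured = {"MMLU-Pro": 76.0, "GPQA": 58.0, "GSM8K": 92.0}


def summarize(scores: dict[str, float]) -> tuple[float, float]:
    """Return (mean, population standard deviation) across benchmarks."""
    values = list(scores.values())
    return mean(values), pstdev(values)


base_mean, base_std = summarize(baseline)
struct_mean, struct_std = summarize(structured)

mean_gain = struct_mean - base_mean   # average absolute accuracy change
std_change = struct_std - base_std    # negative means scores are less spread out

print(f"mean gain:  {mean_gain:+.2f} points")
print(f"std change: {std_change:+.2f} points")
```

With these placeholder numbers the mean rises and the spread shrinks, which is the pattern the paragraph describes; real inputs would come from the per-benchmark results for each model and prompting method.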

Benchmark leaderboard rankings are not invariant to prompt changes: rankings on three of the seven benchmarks flip once each model's highest achievable score under optimized prompting is taken into account. For example, on MMLU-Pro, the relative performance of o3 Mini and Claude 3.7 Sonnet reverses under ceiling estimation, revealing benchmarking artifacts in fixed-prompt leaderboards. The paper further demonstrates that model-to-model performance gaps may either narrow (as in aggregate macro-averaged scores) or widen (as in GPQA) when structured prompting is adopted, underscoring prompt-induced confounding in comparative evaluations.
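Ceiling estimation and the resulting rank comparison can be sketched as follows. This is an illustration under stated assumptions: the model names, method labels, and scores are hypothetical, and taking the ceiling as the maximum score over prompting methods is an interpretation of "highest achievable scores" rather than the authors' exact procedure.

```python
# Hypothetical accuracies (%) on one benchmark; NOT values from the paper.
scores = {
    "model_a": {"baseline": 71.0, "zero_shot_cot": 74.0, "mipro_v2": 75.0},
    "model_b": {"baseline": 69.0, "zero_shot_cot": 77.0, "mipro_v2": 76.5},
}


def rank(model_scores: dict[str, float]) -> list[str]:
    """Order model names from highest to lowest score."""
    return sorted(model_scores, key=model_scores.get, reverse=True)


# Fixed-prompt view: only the baseline score counts.
baseline_scores = {m: s["baseline"] for m, s in scores.items()}

# Ceiling view (assumption): best score over all prompting methods.
ceiling_scores = {m: max(s.values()) for m, s in scores.items()}

baseline_rank = rank(baseline_scores)
ceiling_rank = rank(ceiling_scores)
flipped = baseline_rank != ceiling_rank

print("baseline ranking:", baseline_rank)
print("ceiling ranking: ", ceiling_rank)
print("ranking flipped: ", flipped)
```

Here the model that trails under the fixed prompt leads once each model is scored at its ceiling, which is the kind of reversal reported for three of the seven benchmarks.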

Benchmark Sensitivity Profile

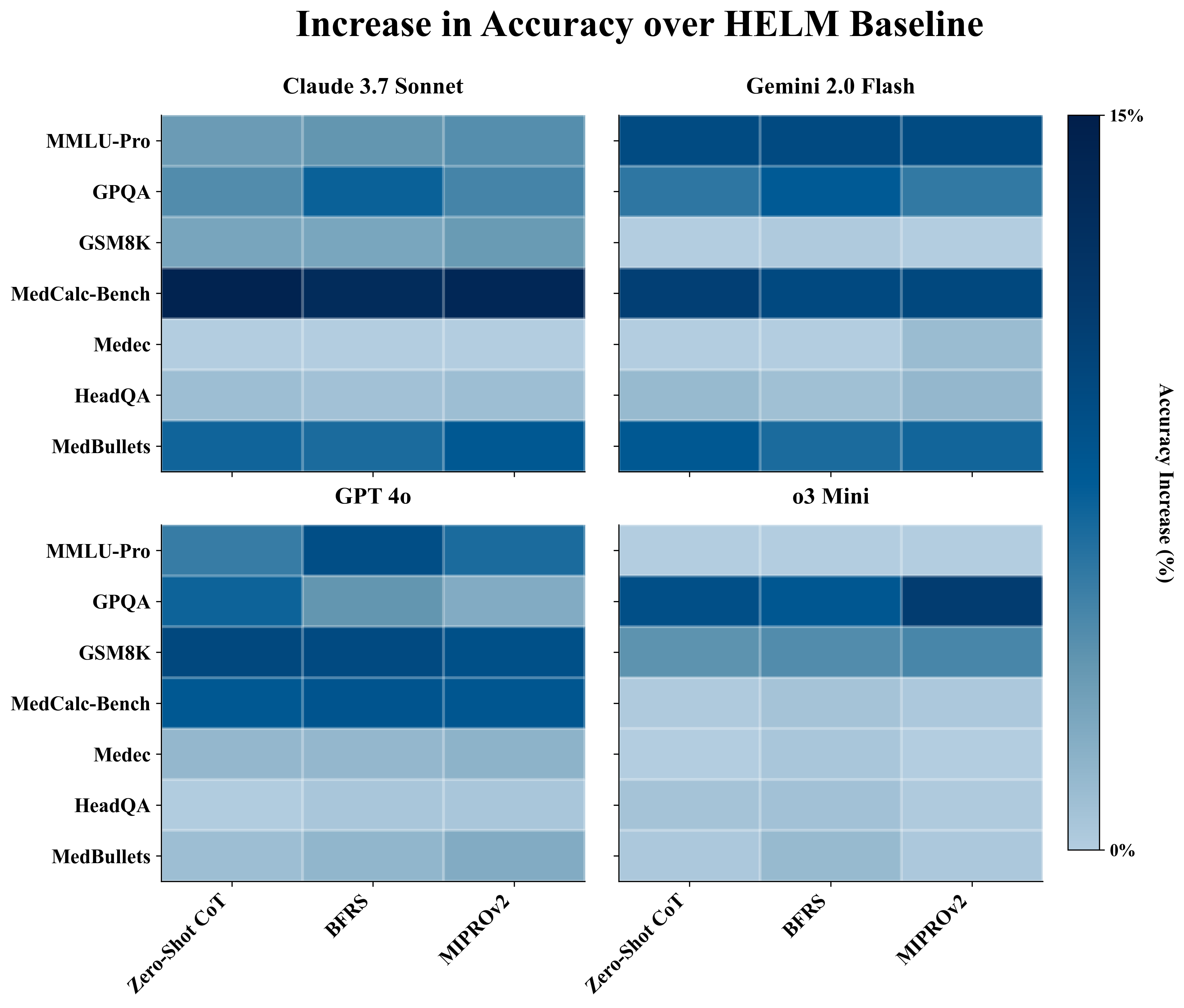

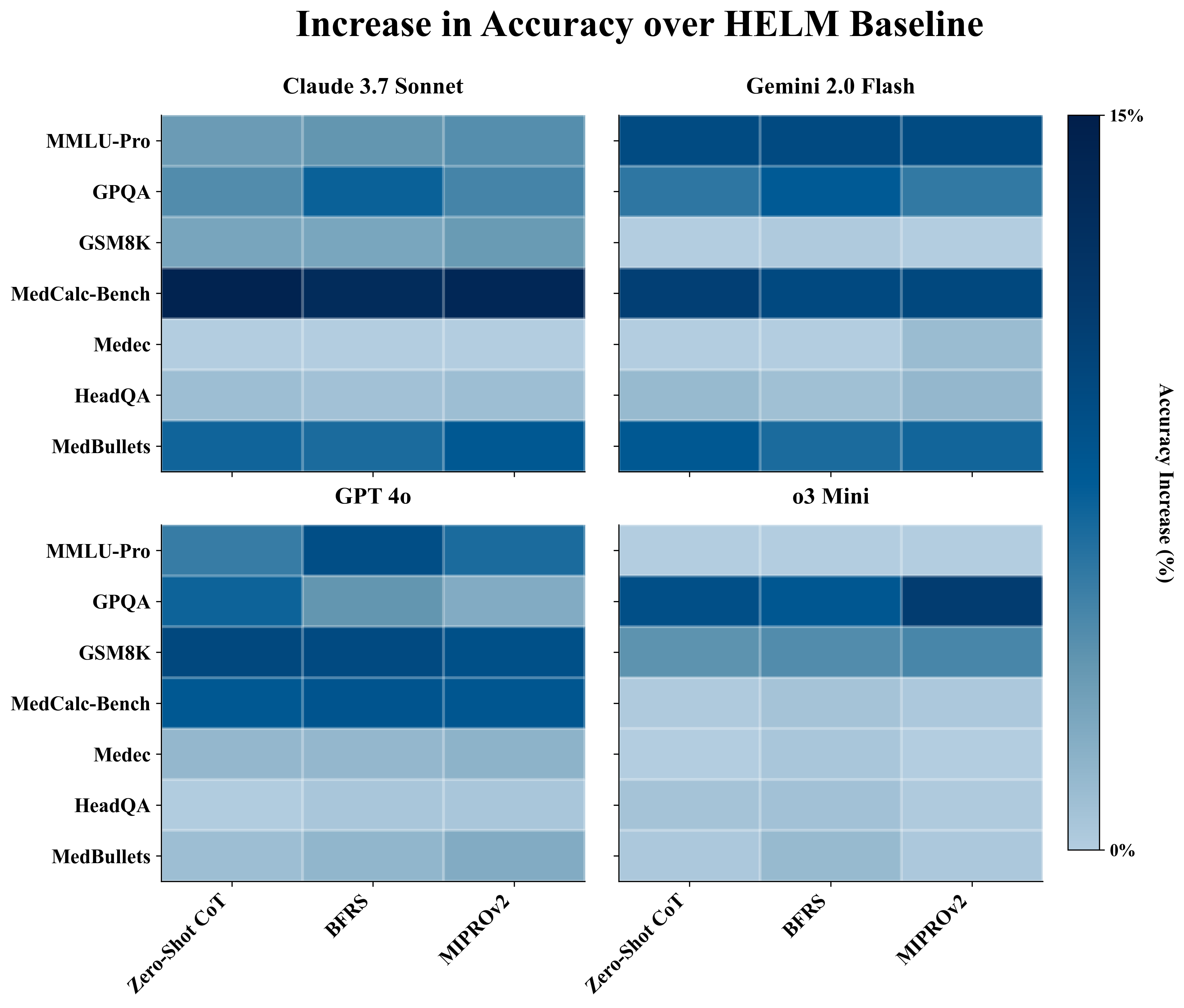

Structured prompting delivers its largest gains on tasks with pronounced reasoning components (e.g., MMLU-Pro, GPQA, GSM8K, MedCalc-Bench, MedBullets), with mean improvements on these tasks exceeding +5.5% accuracy. In contrast, benchmarks such as HeadQA and Medec, which have high baseline performance or a knowledge-bounded task structure, show minimal benefit. This indicates that structured reasoning prompts help most where model responses hinge on resolving ambiguity and performing inference.

CoT-Induced Prompt Insensitivity

Substantial performance gains are observed upon transitioning from baseline to Zero-Shot CoT prompting, with minimal further improvement when adding sophisticated prompt optimization (BFRS/MIPROv2). CoT restructuring fundamentally alters model inference, increasing decision margins and rendering the output distribution invariant to moderate changes in prompt design. Total variation and KL-divergence bounds formalize this effect, where maximization over reasoning paths (self-consistency) mediates robust prediction and immunity to prompt reweighting, except for near-tied or ambiguous items.
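One standard way to make the margin argument concrete is sketched below. It is an illustration, not the paper's exact statement: the symbols $p$, $q$, $a(r)$, and $m$ are introduced here as assumptions. Let $p$ and $q$ be the distributions over reasoning paths $r$ induced by two prompts, let $a(r)$ be the answer a path produces, and let $P_p(a) = \sum_{r:\,a(r)=a} p(r)$ be the resulting answer probability.

$$
\bigl|P_p(a) - P_q(a)\bigr| \;\le\; \mathrm{TV}(p, q) \;\le\; \sqrt{\tfrac{1}{2}\,\mathrm{KL}(p \,\|\, q)} \qquad \text{for every answer } a,
$$

where the second inequality is Pinsker's inequality. If the top answer $a^\star$ under $p$ has margin $m = P_p(a^\star) - \max_{a \neq a^\star} P_p(a)$, then for any $a \neq a^\star$,

$$
P_q(a^\star) - P_q(a) \;\ge\; \bigl(P_p(a^\star) - \mathrm{TV}(p,q)\bigr) - \bigl(P_p(a) + \mathrm{TV}(p,q)\bigr) \;\ge\; m - 2\,\mathrm{TV}(p, q).
$$

So the predicted answer is unchanged whenever $m > 2\,\mathrm{TV}(p, q)$. Only items whose margin is small relative to the prompt-induced shift, that is, near-tied or ambiguous items, can change their prediction.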

Cost-Effectiveness Analysis

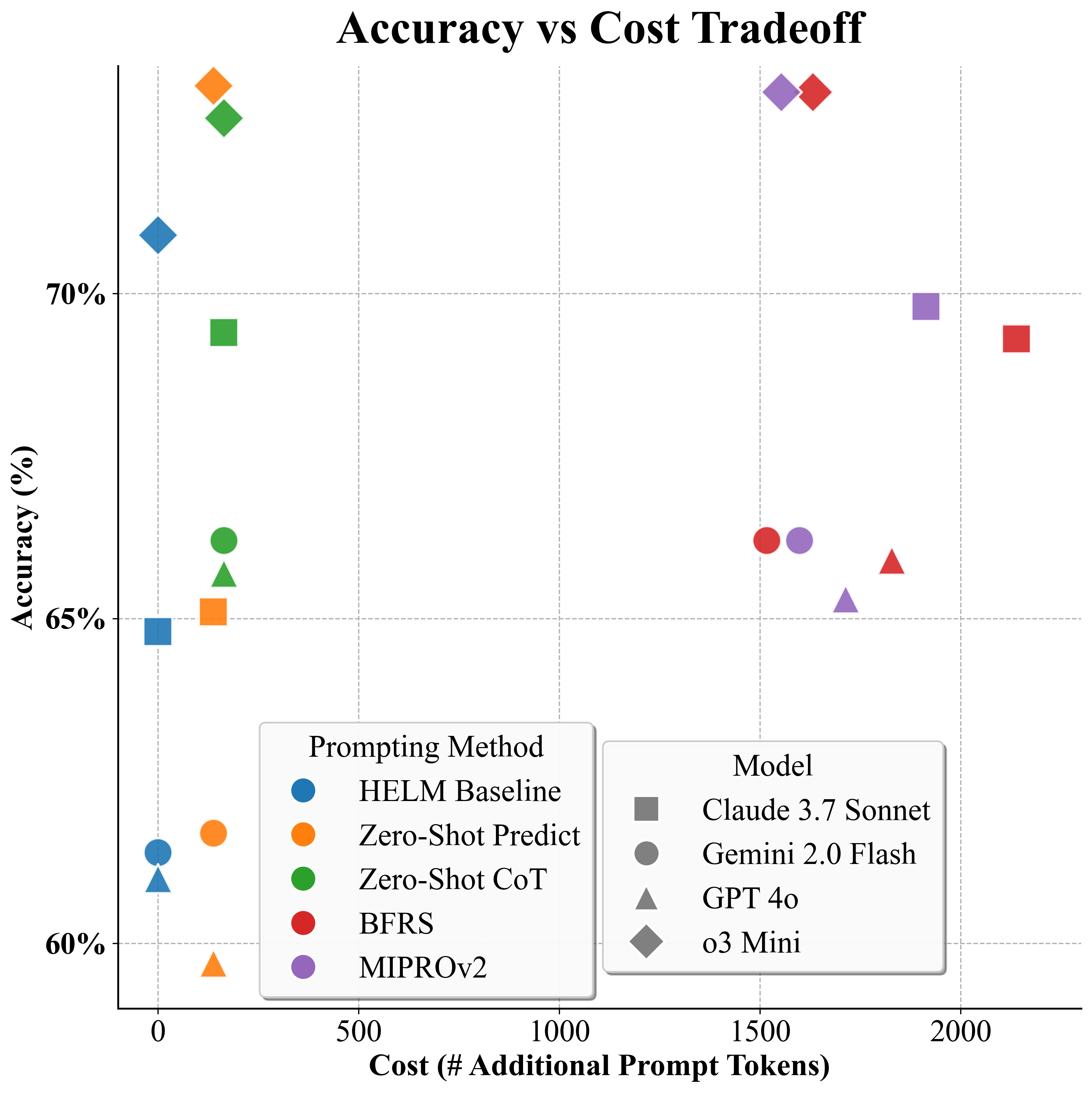

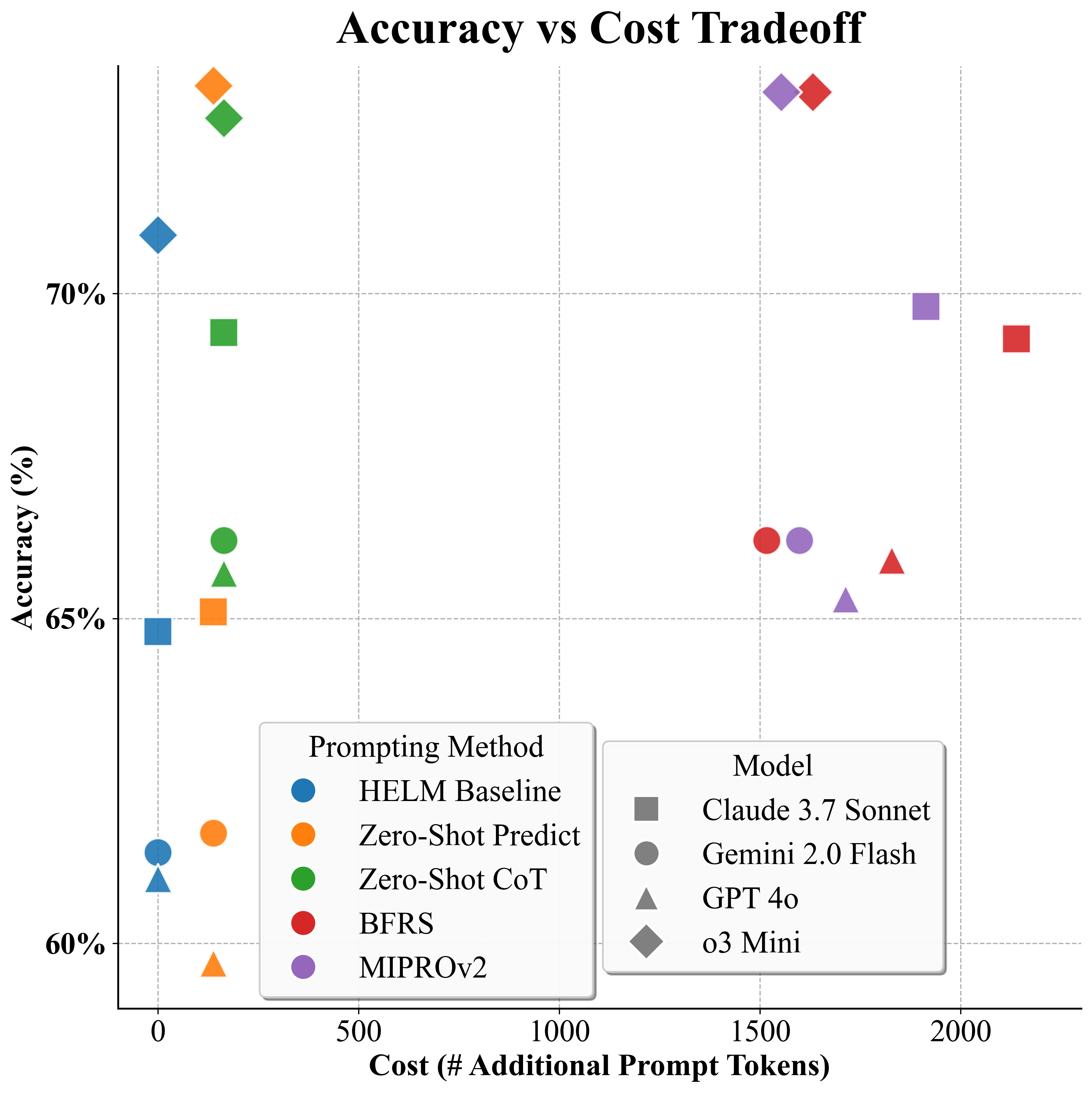

Inference-time computational cost measured in token budget correlates nonlinearly with accuracy gains (Figure 2). Zero-Shot CoT achieves most of the aggregate performance improvement with a negligible increase in prompt length (~164 tokens over baseline), while BFRS and MIPROv2 incur substantial overhead (>1700 tokens per query). The amortized cost of prompt optimization is minimal relative to downstream deployment volumes. The paper establishes Zero-Shot CoT as the dominant cost-effective strategy across all tested models.

Figure 2: Comparative analysis demonstrates Zero-Shot CoT is maximally cost-effective, minimizing prompt tokens while approaching peak accuracy.
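The cost-effectiveness comparison can be approximated with a simple ratio of accuracy gain to added prompt tokens, as in the sketch below. The token overheads (about 164 and about 1700) come from the text above; the accuracy gains are hypothetical placeholders, and "gain per 100 extra tokens" is an illustrative metric rather than the one the paper necessarily uses.

```python
def gain_per_100_tokens(gain_pts: float, extra_tokens: int) -> float:
    """Accuracy points gained per 100 additional prompt tokens."""
    if extra_tokens <= 0:
        raise ValueError("extra_tokens must be positive")
    return 100.0 * gain_pts / extra_tokens


strategies = {
    # extra_tokens from the summary text; gain_pts are hypothetical placeholders.
    "zero_shot_cot": {"extra_tokens": 164, "gain_pts": 4.0},
    "optimized_few_shot": {"extra_tokens": 1700, "gain_pts": 4.5},
}

efficiency = {
    name: gain_per_100_tokens(s["gain_pts"], s["extra_tokens"])
    for name, s in strategies.items()
}
best = max(efficiency, key=efficiency.get)

for name, value in efficiency.items():
    print(f"{name}: {value:.2f} points per 100 extra tokens")
print("most cost-effective:", best)
```

Even when the optimized prompt is given a slightly larger assumed gain, the roughly tenfold difference in token overhead dominates the ratio, which mirrors the paper's conclusion about Zero-Shot CoT.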

A heat map visualizes the per-task, per-model impact of each prompting strategy relative to HELM's fixed baseline. Structured prompting provides consistent, nontrivial improvements for all non-reasoning models, while o3 Mini, with native reasoning, is essentially invariant across prompt configurations, consistent with its hypothesized prompt insensitivity (Figure 3).

Figure 3: Structured prompting yields uniformly positive Δ-accuracy over HELM baseline across models and tasks, with o3 Mini as an outlier in prompt insensitivity.

Implications and Theoretical Considerations

The findings argue against relying on fixed-prompt leaderboard evaluations for robust performance estimation of LMs. Failure to estimate each LM's ceiling via structured or reasoning-enabling prompts risks both under-reporting model capabilities and inducing arbitrary leaderboard ranking reversals. The demonstrated advantage of chain-of-thought prompting supports modeling predictions as distributions over reasoning trajectories, with KL-divergence inequalities bounding how much a prediction can change under prompt changes.

The practical implication is that decision-useful deployment of LMs requires ceiling estimation via cost-effective structured prompting, especially for applications in risk-sensitive domains such as medicine. Theoretically, prompt optimization becomes near-redundant for reasoning-competent LMs once CoT is introduced, signaling a plateau in the marginal utility of further prompt refinement. Looking ahead, integrating automated reasoning traces with prompt search could enable adaptive, context-aware benchmarking frameworks, reducing dependence on hand-engineered exemplars and further improving scalability, reproducibility, and comparability.

Conclusion

The paper provides direct evidence that structured, reasoning-inducing prompting strategies yield more accurate, lower-variance, and decision-consistent benchmarks for model evaluation within HELM. Chain-of-thought prompting is the main driver of robustness and cost-effectiveness, reducing the confounding role of prompt configuration for advanced LMs. These insights argue for moving model evaluation away from fixed prompts and motivate further research on scalable, automatic benchmarking pipelines for next-generation LLMs.