Vidi2: Large Multimodal Models for Video Understanding and Creation (2511.19529v1)

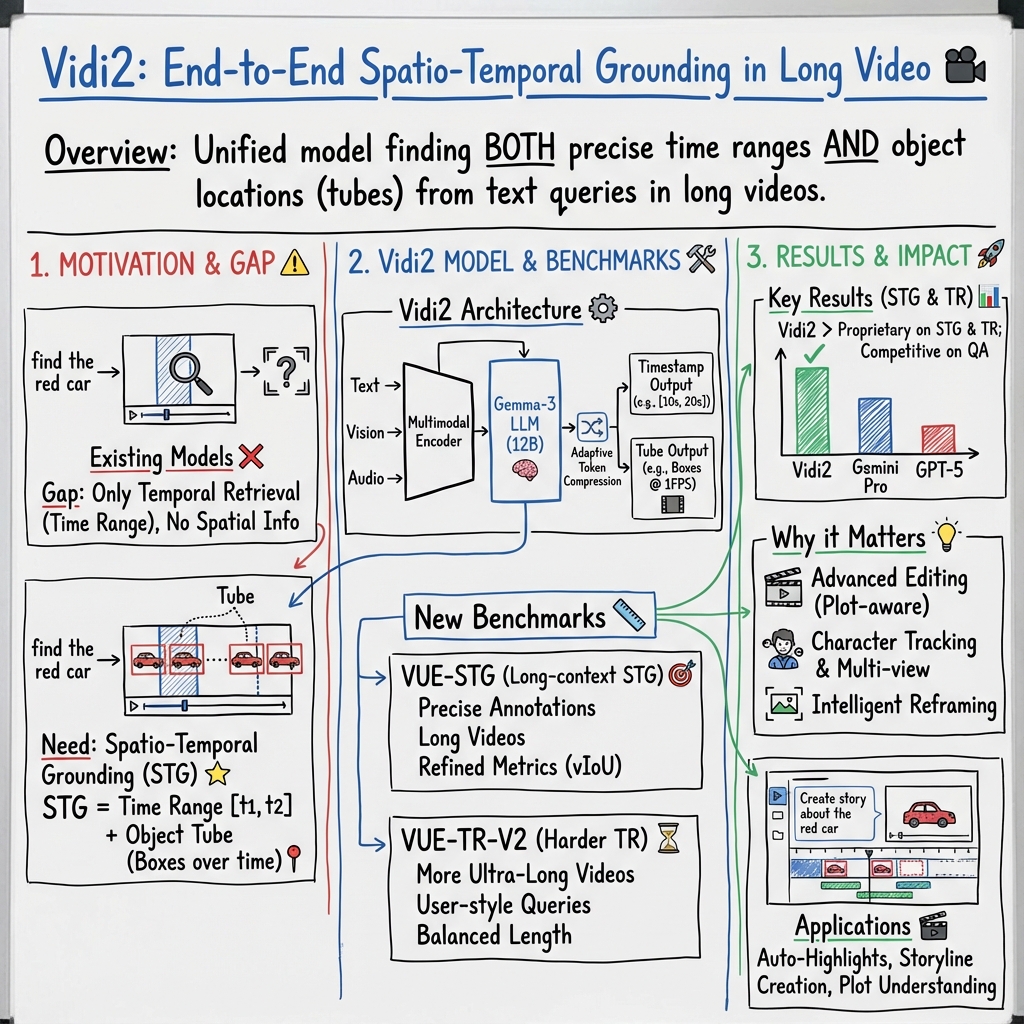

Abstract: Video has emerged as the primary medium for communication and creativity on the Internet, driving strong demand for scalable, high-quality video production. Vidi models continue to evolve toward next-generation video creation and have achieved state-of-the-art performance in multimodal temporal retrieval (TR). In its second release, Vidi2 advances video understanding with fine-grained spatio-temporal grounding (STG) and extends its capability to video question answering (Video QA), enabling comprehensive multimodal reasoning. Given a text query, Vidi2 can identify not only the corresponding timestamps but also the bounding boxes of target objects within the output time ranges. This end-to-end spatio-temporal grounding capability enables potential applications in complex editing scenarios, such as plot or character understanding, automatic multi-view switching, and intelligent, composition-aware reframing and cropping. To enable comprehensive evaluation of STG in practical settings, we introduce a new benchmark, VUE-STG, which offers four key improvements over existing STG datasets: 1) Video duration: spans from roughly 10s to 30 mins, enabling long-context reasoning; 2) Query format: queries are mostly converted into noun phrases while preserving sentence-level expressiveness; 3) Annotation quality: all ground-truth time ranges and bounding boxes are manually annotated with high accuracy; 4) Evaluation metric: a refined vIoU/tIoU/vIoU-Intersection scheme. In addition, we upgrade the previous VUE-TR benchmark to VUE-TR-V2, achieving a more balanced video-length distribution and more user-style queries. Remarkably, the Vidi2 model substantially outperforms leading proprietary systems, such as Gemini 3 Pro (Preview) and GPT-5, on both VUE-TR-V2 and VUE-STG, while achieving competitive results with popular open-source models with similar scale on video QA benchmarks.

Explain it Like I'm 14

What is this paper about?

This paper introduces Vidi2, an AI system that can understand and help create videos. It doesn’t just find where something happens in a video; it can also point to exactly where the object is on the screen at the right time. The authors also built two new “tests” (benchmarks) that make it easier to judge how good different video AIs are, especially on long, real-world videos.

What questions are the researchers asking?

They focus on three simple questions:

- Can an AI find the right moments in a video for a given text query? (Example: “When does the cat jump on the table?”)

- Can it also point to the exact object on the screen during those moments? (Example: not just the time, but also a box around the cat while it jumps.)

- Can it answer questions about videos in general, like a quiz? (Example: “What color is the car that drives past at the end?”)

How did they study it?

The Vidi2 model (the AI they built)

- Vidi2 looks at three types of information together: text, visuals (the frames), and audio (the sound).

- It uses a large language model (LLM) as its “brain” and a special video encoder so it can handle both short clips and very long videos.

- To keep long videos manageable, it compresses information in a smart way, so it doesn’t “forget” important moments.

- It was trained on a mix of synthetic (made-up) and real video data, which helps it work well in real situations.

New tests for fair, real-world evaluation

- VUE-STG: A new benchmark for spatio-temporal grounding (STG). “Spatio” means space (where on the screen), and “temporal” means time (when it happens).

  - Videos range from about 10 seconds to 30 minutes, so the AI must handle long stories, not just short clips.

  - Queries are written clearly (often as noun phrases) to reduce confusion. Example: “the ambulance the player is loaded into” rather than a vague sentence.

  - Human experts carefully labeled the correct time ranges and drew accurate boxes around targets.

  - They use refined accuracy scores that check both time matching and box overlap.

- VUE-TR-V2: An updated benchmark for temporal retrieval (finding the right time ranges).

  - Has many more long and extra-long videos (over an hour), closer to what you’d see on the internet.

  - Uses more natural, human-style queries.

How they measure accuracy (in everyday terms)

- Think of a video like a long comic strip:

  - Temporal retrieval is like marking the panels where a certain action happens.

  - Spatio-temporal grounding is like marking the panels and also circling the right character in each panel.

Key ideas:

- Bounding box: a rectangle that shows where the object is in a frame.

- Tube: a chain of bounding boxes over time (like linking the boxes for each second to track the object).

- Overlap score (IoU): how much your predicted box overlaps the correct box. Higher is better.

- tIoU (temporal IoU) checks if your time ranges are right.

- vIoU (video IoU) checks both time and box accuracy.

- vIoU-Intersection focuses on how well you overlap where both your prediction and the ground truth exist at the same time.
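The two basic overlap scores above can be sketched in a few lines of Python. This is only an illustration of the general idea, not the paper's evaluation code: the function names (`box_iou`, `ranges_to_seconds`, `temporal_iou`), the `(x0, y0, x1, y1)` box layout, and the half-open `[start, end)` time ranges in whole seconds are all assumptions made for the example.

```python
from typing import Iterable, Set, Tuple

Box = Tuple[float, float, float, float]  # assumed layout: (x0, y0, x1, y1)
TimeRange = Tuple[int, int]              # assumed layout: [start, end) in whole seconds


def box_iou(a: Box, b: Box) -> float:
    """Overlap area divided by the combined area of two boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def ranges_to_seconds(ranges: Iterable[TimeRange]) -> Set[int]:
    """Expand time ranges into the set of one-second steps they cover."""
    return {t for start, end in ranges for t in range(start, end)}


def temporal_iou(pred: Iterable[TimeRange], gt: Iterable[TimeRange]) -> float:
    """Shared seconds divided by all seconds covered by either side."""
    p, g = ranges_to_seconds(pred), ranges_to_seconds(gt)
    union = p | g
    return len(p & g) / len(union) if union else 0.0


if __name__ == "__main__":
    print(box_iou((0.0, 0.0, 0.5, 0.5), (0.25, 0.25, 0.75, 0.75)))
    print(temporal_iou([(0, 10)], [(5, 15)]))
```

In this toy example the two boxes share a quarter of each box's area, giving an IoU of 1/7, and the two time ranges share 5 of the 15 seconds they jointly cover, giving a tIoU of 1/3.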

What did they find?

- On their new benchmarks, Vidi2 scored higher than other strong systems they tested (like Gemini 3 Pro Preview and GPT-5) at:

  - Finding the correct time ranges in videos (Temporal Retrieval).

  - Finding both the time and the exact on-screen location of the object (Spatio-Temporal Grounding).

- Vidi2 was especially strong on long videos and tricky cases, like small objects, dark scenes, or multiple people.

- On public video question-answering tests, Vidi2 performed competitively with popular open-source models of similar size, though top proprietary models still did better overall.

Why this matters:

- If an AI can find “when” and “where” something happens, it becomes very useful for editing and searching through long videos—things people do every day on the internet.

What does this research mean for the future?

This work could make video editing and creation much easier and faster. Here are a few practical uses:

- Highlight extraction: The AI can automatically pull out the best moments from a long video and even suggest titles.

- Plot and character understanding: It can track who appears where and when, and help cut scenes by character or event.

- Smart reframing and cropping: It can keep the important subject in view when reframing videos for different screen sizes.

- Multi-camera switching: It could switch between camera angles automatically to follow the action.

- Storyline-based creation: Given several clips, it can propose a narrative structure, transitions, music, and timing suggestions like a junior video editor.

In short, Vidi2 shows that AI can understand both the “when” and the “where” in videos with fine detail, even over long durations. That’s a big step toward tools that help anyone make professional-looking videos, speed up editing, and search video content more intelligently.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a single, consolidated list of what remains missing, uncertain, or unexplored in the paper, phrased to be concrete and directly actionable for follow-on research.

- Reproducibility of Vidi2: Precise architectural details (module-level design, tokenization, fusion strategy), training hyperparameters, and inference settings (frame rate, input resolution, number of tokens per modality) are not disclosed, hindering replication and controlled ablations.

- Adaptive token compression: The redesigned compression strategy is not specified or evaluated. There is no analysis of compression ratios versus accuracy/latency trade-offs or how compression scales with video length and content complexity.

- Training data transparency: The composition of synthetic vs. real video data (sources, volumes, domains, licenses) is not reported. There is no contamination check against evaluation sets or deduplication strategy to mitigate train–test leakage.

- STG data synthesis: The method for synthesizing spatio-temporal pairs from image-level grounding datasets is not described (motion models, temporal consistency constraints, sampling policies), leaving unclear what biases or artifacts this introduces.

- Audio integration details: The paper claims multimodal (audio–visual–text) support but lacks specifics on the audio encoder, alignment/fusion mechanisms, and how audio signals are sampled and represented (e.g., spectrograms vs. learned audio tokens).

- Audio contribution ablations: No ablation quantifies how much audio improves temporal retrieval or STG, especially for queries with explicit audio cues versus vision-only conditions.

- Output head for numeric tubes: The mechanism to produce structured numeric outputs (timestamps and boxes) from an LLM backbone is unspecified (e.g., constrained decoding, function calling, auxiliary regression heads), as is the rate of JSON formatting/parsing failures.

- Stability of tube predictions: No evaluation of temporal smoothness, jitter, or identity consistency of predicted tubes (e.g., ID switches across fragments/cuts), which are critical for editing applications.

- Fragmented tubes handling: While non-contiguous tubes are included, there is no metric for measuring re-identification correctness across disjoint segments or cuts beyond vIoU/vIoU-Int. Extend metrics to explicitly capture re-entry consistency and ID persistence.

- Single-target bias in STG: VUE-STG adjusts ambiguous queries to a single target object, leaving multi-object and relational grounding (e.g., “person A hands a cup to person B”) largely unevaluated. A dataset split and metrics for multi-entity relations remain open.

- Frame sampling at 1 FPS for STG: Ground-truth tubes are annotated at 1 fps; fast actions or small objects may be missed or under-penalized. Evaluate and annotate at higher frame rates (e.g., 5–10 fps) to quantify sensitivity to fast motion and small/brief events.

- STG scope limited to ≤30 minutes: VUE-STG does not test spatio-temporal grounding on ultra-long videos (>60 minutes). Investigate scaling behavior and memory/latency on hour-long videos for practical editing workflows.

- Metric sensitivity to sampling: vIoU/tIoU are computed on discretized, per-second timestamps; the effect of temporal discretization on ranking and error analysis is unstudied. Calibrate metrics across different sampling rates and propose continuous-time alternatives.

- Lack of confidence/calibration: The model outputs structured tubes without uncertainty estimates. Add confidence scoring and calibrated thresholds for controllable precision–recall trade-offs in production editing.

- Baseline fairness: Comparisons with proprietary LMMs are confounded by API constraints (e.g., GPT-5’s 120-frame cap, lack of audio input). Reassess baselines under matched input budgets and with audio-enabled variants to isolate modeling differences.

- Specialist STVG baselines: The model is not compared to specialized spatio-temporal grounding methods on standard datasets (e.g., VidSTG, HC-STVG). Benchmarking against specialist systems and reporting cross-dataset generalization is needed.

- Cross-dataset generalization for STG: Vidi2 is only evaluated on VUE-STG for STG. Assess transfer to existing benchmarks and unseen domains (e.g., surveillance, sports, egocentric, cinematic content).

- Open-ended vs. MC QA: Video QA evaluation uses only multiple-choice datasets. Test open-ended QA with automatic and human evaluation to measure generation quality, fidelity, and hallucination rates.

- Audio QA benchmarks: Despite claims of auditory question answering, no results on audio-focused video QA/ASR/alignment datasets are presented. Evaluate on benchmarks explicitly requiring audio cues.

- Error analysis: No qualitative or quantitative breakdown of failure modes (e.g., small-object misses, occlusions, lighting, fast motion, crowded scenes). Provide per-category error analyses to guide targeted model improvements.

- Efficiency and deployment: There is no report of throughput, latency, memory footprint, or compute requirements on long videos, which is essential for mobile or real-time editing. Include benchmarks on commodity hardware and edge devices.

- Scaling and cost: Training compute budgets, training time, and carbon footprint are not reported. Provide scaling laws and cost–performance curves to inform future model design.

- Token budget vs. length: While performance by video length is reported, there is no study of how token budgets are allocated across modalities and time for long-context videos, or how this impacts accuracy.

- Query naturalness: VUE-STG uses refined noun-phrase queries to reduce ambiguity; real user queries are often longer, messier, multi-intent, and multilingual. Evaluate robustness to natural, noisy, and multilingual queries.

- Multilingual support: There is no assessment of cross-lingual performance for retrieval, STG, or QA. Add multilingual benchmarks and training data, and quantify performance degradation across languages.

- Human-in-the-loop editing: Applications (highlights, plot understanding, storyline creation) are illustrated but not evaluated. Conduct user studies measuring edit quality, time saved, and subjective satisfaction.

- End-to-end editing outcomes: There is no metric linking retrieval/grounding accuracy to downstream editing quality (e.g., cut accuracy, composition quality, audience retention). Define task-specific KPIs and correlate them with STG/TR metrics.

- Data annotation quality: While high-precision manual annotations are claimed, inter-annotator agreement, quality control protocols, and annotation guidelines are not documented. Report IAA and error bars.

- Privacy and ethics: Beyond face blurring in examples, privacy risks, IP/licensing of public videos, and content moderation in outputs are not discussed. Formalize data governance and ethical safeguards.

- Release artifacts: It is unclear whether model weights, training code, prompts, and evaluation scripts will be released. Provide full artifacts for reproducibility and community benchmarking.

- Robustness to distribution shift: No tests on adversarial prompts, domain shifts (e.g., animation, medical, industrial), or degraded inputs (noise, compression, low light). Develop robustness suites and stress tests.

- Temporal segmentation strategy: For TR, the approach to merging/splitting predicted ranges, handling overlaps, and choosing thresholds is unspecified. Provide a deterministic post-processing pipeline and show its effect on AUC.

- JSON adherence and failure rate: Baseline models’ structured output success is discussed; the paper lacks statistics on Vidi2’s own formatting error rate and recovery strategies. Report parse failure rates and fallback mechanisms.

- Multi-view and identity-aware editing: The paper mentions potential for multi-view switching and character-aware editing but provides no identity recognition evaluation (e.g., face/body re-ID) or multi-camera synchronization tests.

- Compositional generalization: There is no study on generalizing to novel attribute/action/object compositions (e.g., “red umbrella held by the shortest person while walking upstairs”). Construct compositional splits and measure performance.

- Fine-grained spatial precision: With per-second bounding boxes and normalized coordinates, sub-frame and sub-pixel localization accuracy is unknown. Evaluate against higher-frequency annotations and measure boundary tightness (e.g., AP at multiple IoU thresholds).

- Long-term memory limits: The maximum effective context window (in tokens/seconds) and degradation patterns beyond it are unspecified. Characterize memory limits and the efficacy of summarization or memory mechanisms.

- Continual and few-shot adaptation: The model’s ability to adapt to new domains or user-specific concepts (e.g., personalized objects/people) via few-shot examples is untested. Explore lightweight adapters or in-context learning strategies for STG/TR.

- Safety and failure containment: For autonomous editing, mechanisms to prevent harmful or misleading outputs (e.g., wrong identity attribution) are not discussed. Incorporate verification/consistency checks and human override protocols.
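As an illustration of the "temporal segmentation strategy" item in the list above, the sketch below shows one conventional, deterministic way to post-process predicted time ranges: sort them, then merge any that overlap or sit within a small gap of each other. The paper does not describe its own post-processing, so this is not the authors' method; `merge_ranges` and its `max_gap` parameter are hypothetical names chosen for the example.

```python
from typing import List, Tuple

TimeRange = Tuple[float, float]  # assumed layout: (start_sec, end_sec)


def merge_ranges(ranges: List[TimeRange], max_gap: float = 0.0) -> List[TimeRange]:
    """Merge ranges that overlap or are separated by at most max_gap seconds."""
    merged: List[TimeRange] = []
    for start, end in sorted(ranges):
        if merged and start - merged[-1][1] <= max_gap:
            # Extend the previous range instead of starting a new one.
            prev_start, prev_end = merged[-1]
            merged[-1] = (prev_start, max(prev_end, end))
        else:
            merged.append((start, end))
    return merged


if __name__ == "__main__":
    raw = [(10, 20), (0, 5), (18, 25), (5, 7)]
    print(merge_ranges(raw))             # touching and overlapping ranges are merged
    print(merge_ranges(raw, max_gap=3))  # a 3-second gap is also bridged
```

Because the output depends only on the input ranges and `max_gap`, a step like this could be reported alongside results and varied to show how sensitive the AUC scores are to the choice of gap threshold.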

Glossary

- Adaptive token compression strategy: A method to reduce and balance tokenized representations for videos of varying lengths to improve efficiency. "we re-design the adaptive token compression strategy, improving the balance between short and long video representation efficiency."

- Area Under Curve (AUC): A metric that summarizes performance across thresholds, often for precision, recall, or IoU. "We follow the previous VUE-TR [14] benchmark that uses the AUC (Area Under Curve) score of temporal-dimension precision, recall, and IoU (Intersection over Union) in the evaluation, denoted as P, R, IoU in Sec. 4."

- Attribute-based slicing: Partitioning evaluation data by attributes (e.g., duration, object size) to enable fine-grained analysis. "It supports attribute-based slicing for fine-grained performance analysis as follows."

- Bounding-box IoU (bIoU): The Intersection over Union between two 2D bounding boxes, measuring spatial overlap. "Bounding-box IoU (bIoU). We define $\mathrm{bIoU}(B_1, B_2) = \frac{\mathrm{Area}(B_1 \cap B_2)}{\mathrm{Area}(B_1 \cup B_2)}$ (Eq. 2) as the spatial overlap metric between two bounding boxes $B_1$ and $B_2$."

- Frame-level IoU: IoU computed per frame at time t between predicted and ground-truth boxes. "Frame-level IoU. For any $t \in T_{\mathrm{pred}} \cup T_{\mathrm{gt}}$, we define the frame-level IoU as"

- LLM backbone: The core LLM component integrated with multimodal encoders. "introducing key enhancements in both the encoder and LLM backbone (e.g., Gemma-3 [12])."

- Multimodal alignment: A training strategy that aligns signals across text, vision, and audio (often with temporal awareness). "We also observe that scaling up multimodal alignment data consistently improves overall video QA performance."

- Noun phrase (query format): A query phrased as a noun phrase rather than a full sentence to reduce ambiguity. "queries are mostly converted into noun phrases while preserving sentence-level expressiveness;"

- Precision (tP): Temporal precision; the fraction of the predicted time span that overlaps with ground truth. "Temporal precision and recall (tP and tR) measures how much of the predicted time span overlaps with the ground truth, while temporal recall measures how much of the ground truth duration is covered by the prediction:"

- Recall (tR): Temporal recall; the fraction of the ground-truth duration covered by the prediction. "Temporal precision and recall (tP and tR) measures how much of the predicted time span overlaps with the ground truth, while temporal recall measures how much of the ground truth duration is covered by the prediction:"

- ROC curve: A performance curve across thresholds; here used to compare IoU, precision, and recall. "We also present the ROC curve for different metrics in Figure 1, and we can observe that Vidi2 consistently outperforms all competitors in IOU, precision, and recall across the entire range of thresholds."

- Sampling rate (1 FPS): The frequency of frame sampling used to discretize time for evaluation/input. "we use the sampling rate 1 frame per second throughout this work."

- Spatial normalization: Mapping bounding boxes to a standard coordinate system (e.g., [0, 1]) for consistent evaluation. "For spatial normalization, we map all bounding boxes to a shared coordinate system in [0, 1]."

- Spatio-Temporal Grounding (STG): Identifying both the time ranges and spatial locations (boxes) of target objects given a text query. "The second release, Vidi2, introduces for the first time an end-to-end spatio-temporal grounding (STG) capability, identifying not only the temporal segments corresponding to a text query but also the spatial locations of relevant objects within those frames."

- Spatio-temporal tube: A sequence of bounding boxes over time representing an object’s location across frames. "A spatio-temporal tube is modeled as a time-dependent bounding box, i.e.,"

- Supervised fine-tuning (SFT): Post-pretraining supervised training on curated datasets to improve task performance. "The supervised fine-tuning (SFT) dataset for temporal retrieval has been expanded and refined in both quality and quantity."

- Synthetic data: Artificially generated data used to enhance coverage and stability during training. "While synthetic data remains essential for coverage and stability, increasing the proportion of real video data significantly enhances performance across all video-related tasks."

- Temporal discretization: Converting continuous time into discrete steps (e.g., per second) for modeling and evaluation. "In practice, the temporal axis is discretized by uniform sampling, i.e., we use the sampling rate 1 frame per second throughout this work."

- Temporal intersection ($T_\cap$): The overlapping time interval between predicted and ground-truth spans. "where $T_\cap = T_{\mathrm{pred}} \cap T_{\mathrm{gt}}$ is the temporal intersection."

- Temporal IoU (tIoU): Intersection over Union computed on time intervals between prediction and ground truth. "Meanwhile, temporal IoU (tIoU) measures the alignment between the predicted and ground-truth time intervals using an IoU-style ratio:"

- Temporal retrieval (TR): Retrieving relevant time ranges in a video given a multimodal query. "Vidi models continue to evolve toward next-generation video creation and have achieved state-of-the-art performance in multimodal temporal retrieval (TR)."

- Temporal union ($T_\cup$): The combined time span where either the predicted or ground-truth tube exists. "we average the frame-level IoU over the temporal union $T_\cup = T_{\mathrm{pred}} \cup T_{\mathrm{gt}}$:"

- Video Question Answering (Video QA): Answering questions about video content using multimodal reasoning. "Vidi2 advances video understanding with fine-grained spatio-temporal grounding (STG) and extends its capability to video question answering (Video QA),"

- vIoU: Spatial IoU averaged over the temporal union; measures spatio-temporal alignment across the full span. "Spatial IoU over the temporal union (vIoU). To evaluate spatio-temporal accuracy over the entire time span where either tube exists, we average the frame-level IoU over the temporal union $T_\cup = T_{\mathrm{pred}} \cup T_{\mathrm{gt}}$:"

- vIoU-Int.: Spatial IoU averaged over the temporal intersection; measures alignment only where both tubes exist. "Spatial IoU over the temporal intersection (vIoU-Int.). For completeness, we also compute the average IoU over the time interval where both tubes are defined as"

- vP (spatio-temporal precision): Average frame-level IoU over the predicted temporal span. "Spatio-temporal precision and recall (vP and vR). We measure the average frame-level IoU over the predicted and ground-truth temporal spans:"

- vR (spatio-temporal recall): Average frame-level IoU over the ground-truth temporal span. "Spatio-temporal precision and recall (vP and vR). We measure the average frame-level IoU over the predicted and ground-truth temporal spans:"
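The tube-level metrics in this glossary differ mainly in which set of timestamps the frame-level IoU is averaged over. The sketch below makes that concrete under stated assumptions: a tube is represented as a dictionary from whole-second timestamps (the 1 FPS discretization) to normalized `(x0, y0, x1, y1)` boxes, and the frame-level IoU is taken to be zero at timestamps where only one of the two tubes exists. The names `Tube`, `biou`, and `stg_metrics` are hypothetical, and this is not the benchmark's official scoring script.

```python
from typing import Dict, Tuple

Box = Tuple[float, float, float, float]  # assumed layout: (x0, y0, x1, y1) in [0, 1]
Tube = Dict[int, Box]                    # assumed layout: second -> box at that second


def biou(a: Box, b: Box) -> float:
    """Area of intersection divided by area of union of two boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def stg_metrics(pred: Tube, gt: Tube) -> Dict[str, float]:
    """Compute temporal and spatio-temporal scores for one predicted tube."""
    t_pred, t_gt = set(pred), set(gt)
    t_int, t_uni = t_pred & t_gt, t_pred | t_gt
    # Frame-level IoU is non-zero only where both tubes have a box (assumption).
    iou_sum = sum(biou(pred[t], gt[t]) for t in t_int)

    def ratio(num: float, den: int) -> float:
        return num / den if den else 0.0

    return {
        "tIoU": ratio(len(t_int), len(t_uni)),
        "tP": ratio(len(t_int), len(t_pred)),
        "tR": ratio(len(t_int), len(t_gt)),
        "vIoU": ratio(iou_sum, len(t_uni)),      # averaged over the temporal union
        "vIoU-Int": ratio(iou_sum, len(t_int)),  # averaged over the temporal intersection
        "vP": ratio(iou_sum, len(t_pred)),       # averaged over the predicted span
        "vR": ratio(iou_sum, len(t_gt)),         # averaged over the ground-truth span
    }


if __name__ == "__main__":
    box = (0.0, 0.0, 0.5, 0.5)
    pred = {0: box, 1: box, 2: box}
    gt = {1: box, 2: box, 3: box}
    print(stg_metrics(pred, gt))
```

In the toy example the boxes match perfectly wherever both tubes exist, so vIoU-Int. is 1.0, while vIoU drops to 0.5 because only two of the four seconds in the union are shared. This shows why the two scores are reported separately: one isolates box quality, the other also penalizes timing errors.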

Practical Applications

Immediate Applications

Below are actionable use cases that can be deployed with the paper’s current capabilities (spatio-temporal grounding, temporal retrieval, and video QA), including sector links, plausible tools/workflows, and key assumptions.

- Text-to-timeline search and trim (Media & Software)

  - Tools/Workflows: “Query-to-cut” pipeline using Vidi2’s TR/STG to generate EDL/JSON cut lists; plugins for NLEs (e.g., Premiere, CapCut) that output timestamp ranges and per-second bounding boxes.

  - Assumptions/Dependencies: API/model access; GPU inference for long videos; reliable parsing of the unified JSON tube output; rights to process content.

- Composition-aware auto reframing and smart cropping (Social platforms, Marketing/Ads)

  - Tools/Workflows: Auto vertical/portrait crop for Shorts/Reels/TikTok using STG tubes to keep subjects centered, avoid cuts across occlusions, and respect composition.

  - Assumptions/Dependencies: Accurate box tracking at 1 FPS for small/fast objects; robust handling of fragmented tubes/camera cuts; content style preferences.

- Automatic highlight extraction with titles (Sports, Gaming, Creator tools)

  - Tools/Workflows: Highlight generator that outputs timestamped segments plus concise titles using TR + video QA; publish-ready short-form clips for social.

  - Assumptions/Dependencies: Audio often present (improves temporal localization); sufficient QA accuracy for meaningful titles; editor-in-the-loop for final curation.

- Auto multi-view switching for recorded events/podcasts (Live streaming, Events)

  - Tools/Workflows: “Auto director” that uses audio-visual cues to detect active speakers/points of interest and generate switch cues or multi-camera edit lists.

  - Assumptions/Dependencies: Stream synchronization; acceptable latency for near-real-time workflows; stable performance in low-light or noisy audio.

- Content moderation and compliance scanning (Platforms, Policy)

  - Tools/Workflows: STG/TR to locate segments with policy-sensitive content (violence, accidents, PII exposure), provide timestamped evidence for review.

  - Assumptions/Dependencies: Domain-specific taxonomies; thresholding for recall vs. precision; human verification to reduce false positives.

- Media asset management indexing and search (Broadcast, Studios, MAM systems)

  - Tools/Workflows: Batch indexing that stores tubes/time ranges per asset; “search-in-video” UI for finding objects/actions; integration with DAM/MAM.

  - Assumptions/Dependencies: Storage footprint for tube metadata; scalable GPU batch processing; content licensing.

- Lecture and meeting chapterization (Education, Enterprise software)

  - Tools/Workflows: Topic “jump-to” markers generated via TR and QA; precise time ranges for whiteboard segments, demos, or Q&A.

  - Assumptions/Dependencies: Privacy controls; audio/transcript availability; accuracy under long-duration meetings.

- Surveillance/dashcam forensic retrieval (Security, Automotive)

  - Tools/Workflows: Post-hoc search for “person wearing a red backpack” or “car changing lanes” across hours; export timestamped evidence.

  - Assumptions/Dependencies: Generalization to low-light/weather; face blurring/privacy compliance; legal admissibility standards.

- Accessibility enhancements (Accessibility, Compliance)

  - Tools/Workflows: Auto chapter titles and audio descriptions for key segments; composition-aware reframing to keep sign-language interpreters visible.

  - Assumptions/Dependencies: Domain adaptation for accessibility contexts; editorial review; platform support for accessibility metadata.

- Academic benchmarking and evaluation (Academia)

  - Tools/Workflows: Adoption of VUE-STG and VUE-TR-V2 and refined vIoU/tIoU metrics; long-context, audio-assisted queries for realistic evaluation.

  - Assumptions/Dependencies: Benchmark licensing and availability; reproducible protocols; community buy-in for metric standardization.
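To make the reframing use case in the list above more concrete, here is a minimal sketch of how one grounded box could drive a vertical crop. It assumes a normalized `(x0, y0, x1, y1)` box for the subject at a given second and picks the largest crop window of the target aspect ratio, centered on the subject and clamped to the frame. The function name `crop_window` and its parameters are hypothetical; a production tool would also need temporal smoothing across seconds and handling of fragmented tubes, which this example leaves out.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # assumed layout: (x0, y0, x1, y1) in [0, 1]


def crop_window(
    box: Box,
    frame_w: int,
    frame_h: int,
    aspect_w: int = 9,
    aspect_h: int = 16,
) -> Tuple[float, float, float, float]:
    """Return (left, top, width, height) in pixels for a subject-centered crop."""
    # Largest window of the target aspect ratio that fits inside the frame.
    crop_h = float(frame_h)
    crop_w = crop_h * aspect_w / aspect_h
    if crop_w > frame_w:
        crop_w = float(frame_w)
        crop_h = crop_w * aspect_h / aspect_w

    # Subject center in pixel coordinates.
    cx = (box[0] + box[2]) / 2 * frame_w
    cy = (box[1] + box[3]) / 2 * frame_h

    # Center the window on the subject, then clamp it to the frame bounds.
    left = min(max(cx - crop_w / 2, 0.0), frame_w - crop_w)
    top = min(max(cy - crop_h / 2, 0.0), frame_h - crop_h)
    return (left, top, crop_w, crop_h)


if __name__ == "__main__":
    # Subject in the middle of a 1920x1080 frame.
    print(crop_window((0.4, 0.2, 0.6, 0.8), 1920, 1080))
    # Subject at the right edge: the window is clamped so it stays inside the frame.
    print(crop_window((0.9, 0.2, 1.0, 0.8), 1920, 1080))
```

Clamping, rather than padding, keeps every output frame fully covered by source pixels, at the cost of the subject drifting off-center near the frame edges.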

Long-Term Applications

The following use cases require further research, scaling, integration, or real-time capabilities before broad deployment.

- Real-time multi-camera auto-directing (Broadcast, Sports)

  - Tools/Workflows: Live “Auto Director” agent that fuses STG with audio to control switchers, choose the best angle, and frame shots dynamically.

  - Assumptions/Dependencies: Low-latency inference; multi-stream temporal alignment; robust tracking of small/fast subjects; fail-safe manual override.

- Character-centric plot editing and story-aware cut lists (Film/TV, Streaming)

  - Tools/Workflows: Identity tracking + causal reasoning to produce character-specific edits and coherent storylines across multi-episode content.

  - Assumptions/Dependencies: Reliable character recognition under occlusions/costumes; stronger commonsense and world knowledge; rights to perform character-based edits.

- Agentic end-to-end video creation (Creator economy, Advertising)

  - Tools/Workflows: Multi-asset ingestion, script generation, voiceover/music, transitions, and export: an “editing agent” that outputs a publishable narrative.

  - Assumptions/Dependencies: Integration with generative audio/video tools; brand safety and approvals; IP licensing for assets and music.

- Personalized interactive playback (Consumer streaming)

  - Tools/Workflows: In-player querying (“show me all dunks by Player X”), dynamic crop/zoom with STG, and context-aware replays.

  - Assumptions/Dependencies: Per-user inference costs; content rights; edge/cloud compute strategies; UI/UX for interactive controls.

- Sports analytics and coaching (Sports tech)

  - Tools/Workflows: Play finder extracting formations and key events with player/object tubes; heatmaps over time; multi-view synchronization.

  - Assumptions/Dependencies: Calibrated multi-camera geometry; improved small-object tracking; team adoption and workflow integration.

- Surgical and medical video indexing (Healthcare)

  - Tools/Workflows: Procedure timelines (e.g., instrument usage sequences), automatic annotation for training/resident education, QA for critical moments.

  - Assumptions/Dependencies: Regulatory clearance; clinical-grade datasets; high precision and traceability; privacy and PHI handling.

- Robotics/AV incident mining and dataset curation (Robotics, Autonomous vehicles)

  - Tools/Workflows: Mining long video logs for specific events (near-miss, object crossing), automatic clip export for training and compliance auditing.

  - Assumptions/Dependencies: Domain transfer from internet/video benchmarks to machine perception feeds; on-device or edge compute; safety-critical reliability.

- Industrial inspection and maintenance (Energy, Manufacturing)

  - Tools/Workflows: Long-form inspection video analysis (pipelines, turbines) to localize anomalies, assemble timelines of issues and recommended actions.

  - Assumptions/Dependencies: Specialized sensor/video modalities (thermal, infrared); high stakes accuracy; integration with CMMS workflows.

- Advertising slot detection and programmatic editing (Media, Ads)

  - Tools/Workflows: Identify natural narrative breaks for ad insertion; automate bumper transitions and safe cropping.

  - Assumptions/Dependencies: Publisher standards; audience impact testing; policy compliance for insertion points.
