Advancing Spatial-Temporal Modeling and Audio Understanding in Video-LLMs: An Overview of VideoLLaMA 2

Video Large Language Models (Video-LLMs) have attracted growing attention because video data is both complex and information-rich. The paper "VideoLLaMA 2: Advancing Spatial-Temporal Modeling and Audio Understanding in Video-LLMs" advances this area with a framework designed to improve both video and audio understanding. This essay gives a detailed overview of the research's technical contributions and their implications.

Key Contributions and Architecture

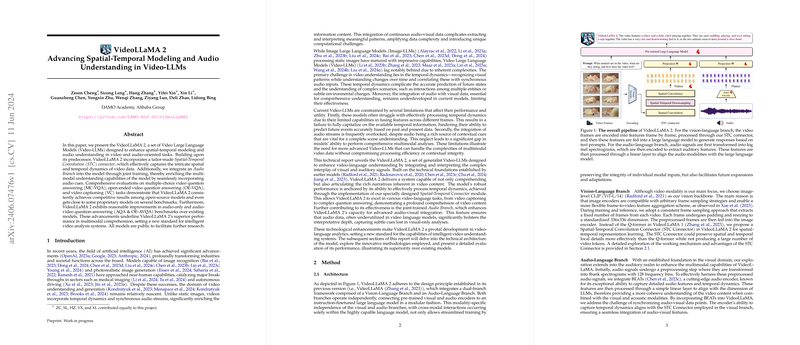

VideoLLaMA 2 builds on its predecessor with two key components: the Spatial-Temporal Convolution (STC) connector and a jointly trained Audio Branch. Together they target the specific challenges of video and audio analysis and improve performance across several benchmarks.

VideoLLaMA 2 has two branches, one for vision-language and one for audio-language processing. Each branch encodes its own modality largely independently, and both feed into the LLM, which produces coherent responses to multimodal inputs. A CLIP-based visual encoder processes individual video frames, while the BEATs audio encoder extracts detailed auditory features. The STC connector then aligns the visual features with the LLM, and additional audio projection layers do the same for the audio features.
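To make the idea of two branches converging on one LLM concrete, the sketch below projects each branch's features to a shared LLM width and then concatenates them along the token axis. It is a minimal pure-Python illustration under simplifying assumptions: a single linear map per branch stands in for the STC connector and the audio projection layers, the toy feature sizes are arbitrary, and the helper names `linear` and `fuse` are hypothetical.

```python
from typing import List

Matrix = List[List[float]]

def linear(x: Matrix, w: Matrix) -> Matrix:
    """Multiply a (tokens x d_in) matrix by a (d_in x d_out) weight matrix."""
    return [[sum(a * w[k][j] for k, a in enumerate(row)) for j in range(len(w[0]))]
            for row in x]

def fuse(video_feats: Matrix, w_video: Matrix,
         audio_feats: Matrix, w_audio: Matrix) -> Matrix:
    """Project each branch to the LLM width, then concatenate along the token axis.

    Simplification: one linear map per branch stands in for the real
    STC connector (video) and audio projection layers.
    """
    video_emb = linear(video_feats, w_video)  # (n_video, d_llm)
    audio_emb = linear(audio_feats, w_audio)  # (n_audio, d_llm)
    if len(video_emb[0]) != len(audio_emb[0]):
        raise ValueError("both branches must end at the same LLM width")
    return video_emb + audio_emb              # (n_video + n_audio, d_llm)

if __name__ == "__main__":
    video = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 1]]  # 3 toy video tokens, dim 4
    w_v = [[1, 0], [0, 1], [1, 1], [0, 2]]              # 4 -> 2
    audio = [[1, 1, 0], [0, 0, 1]]                      # 2 toy audio tokens, dim 3
    w_a = [[1, 0], [0, 1], [2, 2]]                      # 3 -> 2
    print(fuse(video, w_v, audio, w_a))  # → [[1, 0], [0, 1], [1, 3], [1, 1], [2, 2]]
```

Concatenating along the token axis lets the LLM attend over video and audio tokens jointly. In this sketch, the only hard requirement is that both branches end at the same embedding width.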

Spatial-Temporal Convolution Connector (STC)

One of the notable innovations in VideoLLaMA 2 is the STC connector, which replaces the Q-Former used in the original VideoLLaMA. The STC connector combines 3D convolutions with RegStage blocks to preserve temporal dynamics and local spatial detail while reducing the number of video tokens without significant information loss. In the paper's comparisons, this design outperforms other vision-language connectors, particularly on video tasks that require fine-grained temporal modeling.
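The token savings from a 3D convolution follow from the standard convolution output-size formula applied along time, height, and width. The sketch below assumes, for illustration only, 8 sampled frames, a 24-by-24 patch grid per frame (what a CLIP ViT-L/14 encoder produces at 336-pixel resolution), and a kernel and stride of 2 on every axis with no padding. These settings and the helper names are assumptions, not values taken from the paper, and the RegStage blocks are treated as shape-preserving.

```python
from typing import Tuple

def conv_out(size: int, kernel: int, stride: int, padding: int = 0) -> int:
    """Output length of a convolution along one axis (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1

def stc_token_count(frames: int, grid: int,
                    kernel: Tuple[int, int, int] = (2, 2, 2),
                    stride: Tuple[int, int, int] = (2, 2, 2)) -> int:
    """Visual tokens left after a 3D convolution over a (frames, grid, grid) volume.

    Hypothetical defaults: kernel and stride of 2 on every axis, no padding.
    RegStage blocks are assumed not to change the spatial-temporal size.
    """
    t = conv_out(frames, kernel[0], stride[0])
    h = conv_out(grid, kernel[1], stride[1])
    w = conv_out(grid, kernel[2], stride[2])
    return t * h * w

if __name__ == "__main__":
    frames, grid = 8, 24                   # assumed: 8 frames, 24x24 CLIP patches
    before = frames * grid * grid          # one token per patch per frame
    after = stc_token_count(frames, grid)
    print(before, after, before // after)  # 4608 576 8
```

With stride 2 on all three axes, each output token summarizes a 2x2x2 block of neighboring patches across adjacent frames. This is how a convolutional connector can cut the token count eightfold while still mixing temporal context into every token.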

Audio-Language Processing

The jointly trained Audio Branch is another significant contribution. Enriching the model with audio cues improves its ability to understand and interpret multimodal data. This branch is trained in three stages: audio-language pre-training, multi-task fine-tuning, and audio-video joint training. The staged regimen is designed to yield robust performance in both audio-only and audio-visual settings.
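The three-stage audio curriculum can be pictured as a schedule that changes which modules are updated at each step. In the sketch below, the stage names come from the text. The module names and the trainable set for each stage are hypothetical, chosen only to illustrate progressive training, and the paper's actual freezing choices may differ.

```python
from typing import Dict, FrozenSet, List

# Stage names follow the text; everything else here is HYPOTHETICAL.
STAGES: List[str] = [
    "audio_language_pretraining",
    "multitask_finetuning",
    "audio_video_joint_training",
]

ALL_MODULES: FrozenSet[str] = frozenset(
    {"vision_encoder", "audio_encoder", "video_connector", "audio_projector", "llm"}
)

# Hypothetical trainable sets illustrating progressive unfreezing.
TRAINABLE: Dict[str, FrozenSet[str]] = {
    "audio_language_pretraining": frozenset({"audio_projector"}),
    "multitask_finetuning": frozenset({"audio_projector", "llm"}),
    "audio_video_joint_training": frozenset({"audio_projector", "video_connector", "llm"}),
}

def frozen_modules(stage: str) -> FrozenSet[str]:
    """Modules whose weights stay fixed during the given stage."""
    return ALL_MODULES - TRAINABLE[stage]

def run_curriculum() -> List[str]:
    """Walk the stages in order and log what each trains (no real training)."""
    log = []
    for stage in STAGES:
        trained = ", ".join(sorted(TRAINABLE[stage]))
        log.append(f"{stage}: train [{trained}]")
    return log

if __name__ == "__main__":
    for line in run_curriculum():
        print(line)
```

Keeping the schedule as data rather than hard-coding it in the training loop makes it easy to check, for each stage, which parameters are frozen.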

Evaluation and Results

VideoLLaMA 2 was evaluated on a broad set of video and audio understanding benchmarks. Key results include:

- Multiple-Choice Video Question Answering (MC-VQA): On the EgoSchema benchmark, VideoLLaMA 2-7B achieved 51.7% accuracy, significantly outperforming other open-source models. It also achieved competitive results on Perception Test (51.4%) and MVBench (53.9%), reflecting a stronger grasp of temporal dynamics in video.

- Open-Ended Video Question Answering (OE-VQA): On MSVD-QA, VideoLLaMA 2-7B scored 71.7%, indicating a strong ability to generate coherent and contextually accurate answers.

- Video Captioning (VC): On the MSVC benchmark, VideoLLaMA 2 scored 2.57 for correctness and 2.61 for detailedness, drawing on both visual and auditory inputs to produce richer descriptions.

- Audio Understanding: VideoLLaMA 2-7B achieved strong results on audio-only benchmarks. On Clotho-AQA, for example, it outperformed Qwen-Audio by 2.7% in accuracy despite using significantly fewer training hours.
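The multiple-choice accuracies above are percentages of correctly answered questions. The generic sketch below shows one way such a score can be computed from free-form model outputs. The regex-based letter extraction and the function names are assumptions for illustration, not the official evaluation scripts of EgoSchema, Perception Test, or MVBench.

```python
import re
from typing import List, Optional

def extract_choice(response: str, options: str = "ABCDE") -> Optional[str]:
    """Return the first standalone option letter in a free-form answer, or None.

    Crude heuristic: an answer beginning with the article "A" is read as option A.
    """
    match = re.search(rf"\b([{options}])\b", response)
    return match.group(1) if match else None

def mc_accuracy(responses: List[str], answers: List[str]) -> float:
    """Percentage of responses whose extracted letter equals the gold letter."""
    if len(responses) != len(answers) or not answers:
        raise ValueError("need equally long, non-empty response and answer lists")
    correct = sum(extract_choice(r) == a for r, a in zip(responses, answers))
    return 100.0 * correct / len(answers)

if __name__ == "__main__":
    preds = ["(B) cutting vegetables", "Answer: C", "I think D."]
    gold = ["B", "C", "A"]
    print(round(mc_accuracy(preds, gold), 1))  # 66.7
```

Matching the first standalone capital letter is deliberately crude. Evaluation harnesses often constrain the answer format or parse more strictly for exactly this reason.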

Implications and Future Directions

The advancements presented in VideoLLaMA 2 have significant practical and theoretical implications:

- Enhanced Multimodal Comprehension: The integration of spatial-temporal modeling and auditory processing sets a new standard for Video-LLMs, making models like VideoLLaMA 2 more adept at handling real-world applications that require sophisticated video and audio understanding.

- Scalability and Efficiency: The modular approach of the STC connector and the audio branch allows for streamlined training and potential expansions, indicating a path forward for even larger and more capable systems.

- Potential Applications: These improvements pave the way for advancements in several application areas, including autonomous driving, medical imaging, and robotic manipulation, where accurate multimodal analysis is crucial.

In conclusion, VideoLLaMA 2 represents a significant step forward in the domain of Video-LLMs, addressing the complexities of video and audio integration with innovative architectural designs and thorough training methodologies. The superior performance across diverse benchmarks underscores the potential of VideoLLaMA 2 to influence future developments in AI, particularly in contexts requiring detailed spatial-temporal and multimodal understanding.