Enhancing Reasoning through Process Supervision with Monte Carlo Tree Search

The paper "Enhancing Reasoning through Process Supervision with Monte Carlo Tree Search" addresses a critical shortcoming of LLMs: their limited reasoning capabilities. While LLMs have shown proficiency across various language tasks, reasoning remains a significant challenge. This paper explores the use of Monte Carlo Tree Search (MCTS) to enhance reasoning by generating process supervision data which allows LLMs to improve their ability in a training iteration framework.

Methodology

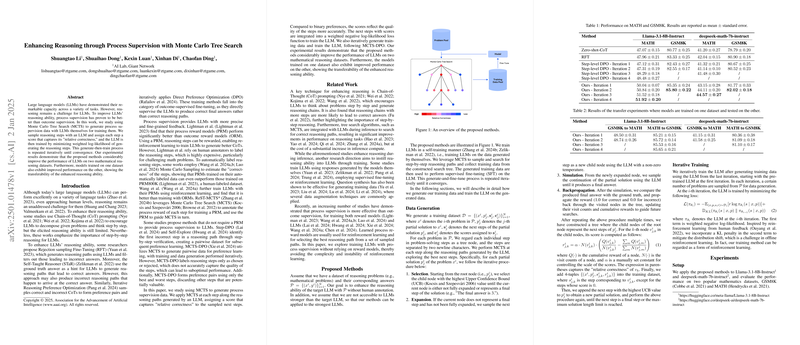

The authors propose a technique to augment reasoning capability in LLMs by employing MCTS in generating training data for process supervision. The approach leverages the sampling of reasoning paths with LLMs, assigning each reasoning step a measure of "relative correctness" via MCTS, which is then used for supervising the model. Key aspects of the methodology include:

- Monte Carlo Tree Search (MCTS): MCTS is applied to model the search tree where each node represents a reasoning step. The process iterates over selection, expansion, simulation, and backpropagation steps, typical of MCTS, to build a search tree that explores possible reasoning paths.

- Relative Correctness Scoring: Each sampled step in the reasoning process is assigned a score reflecting its correctness relative to other steps. This scores are used to train LLMs, focusing on generating correct reasoning paths rather than just correct outcomes.

- Iterative Training Framework: The model is trained iteratively, fine-tuning LLMs on data generated by previous iterations until convergence is achieved. This iterative refinement promotes continuous enhancement of reasoning abilities.

Results

Experimental evaluations were conducted using two mathematical reasoning datasets: GSM8K and MATH. These experiments revealed substantial improvement in reasoning performance:

- The proposed method outperformed baseline techniques such as Zero-shot-CoT and Rejection Sampling Fine-Tuning (RFT).

- Notably, the methods showed transferability; models trained on one dataset demonstrated improved performance on another, indicating effective generalization of the learned reasoning abilities.

Tables presenting the numerical results underscore the efficacy of the proposed MCTS-based process supervision in enhancing reasoning tasks within these datasets.

Implications and Future Directions

The implications of this work are significant both practically and theoretically. Practically, enhancing LLM reasoning through efficient process supervision addresses the bottleneck in deploying LLMs for complex problem-solving tasks, particularly in fields like mathematics and logic where step-by-step reasoning is critical.

Theoretically, this paper contributes to our understanding of how LLMs internalize and apply reasoning strategies. The iterative training framework, coupled with MCTS, presents a robust approach to fine-tuning neural models beyond conventional outcome-based supervision.

While promising, the paper indicates that performance gains plateau after several training iterations. Future research might explore the underlying causes of this saturation and seek methods to extend the iterative improvement cycle. Alternative exploration-exploitation strategies within MCTS, or hybrid approaches integrating different machine learning paradigms, could potentially extend the convergence horizon.

Moreover, while LoRA was employed to train models in these experiments, further research might explore the impact of full fine-tuning to assess whether improved outcomes could be realized.

In conclusion, this paper contributes a sophisticated method for enhancing reasoning in LLMs using MCTS and sets a foundation for future exploration into automated reasoning supervision methods. This research opens avenues for more nuanced approaches to LLM training that potentially extend the applicability and effectiveness of these models in reasoning-intense domains.